Brain-Inspired AI Breakthrough: Lp-Convolution Enhances Machine Vision

2 Sources


[1]

Brain-inspired AI breakthrough: Making computers see more like humans

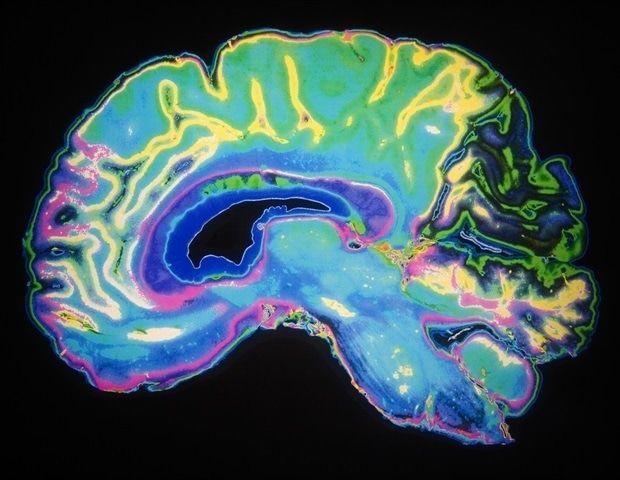

A team of researchers from the Institute for Basic Science (IBS), Yonsei University, and the Max Planck Institute has developed a new artificial intelligence (AI) technique that brings machine vision closer to how the human brain processes images. Called Lp-Convolution, the method improves the accuracy and efficiency of image recognition systems while reducing the computational burden of existing AI models.

Bridging the Gap Between CNNs and the Human Brain

The human brain is remarkably efficient at identifying key details in complex scenes, an ability that traditional AI systems have struggled to replicate. Convolutional Neural Networks (CNNs), the most widely used AI models for image recognition, process images using small, square-shaped filters. While effective, this rigid approach limits their ability to capture broader patterns in fragmented data. More recently, Vision Transformers (ViTs) have shown superior performance by analyzing entire images at once, but they require massive computational power and large datasets, making them impractical for many real-world applications.

Inspired by how the brain's visual cortex processes information selectively through circular, sparse connections, the research team sought a middle ground: could a brain-like approach make CNNs both efficient and powerful?

Introducing Lp-Convolution: A Smarter Way to See

To answer this, the team developed Lp-Convolution, a novel method that uses a multivariate p-generalized normal distribution (MPND) to reshape CNN filters dynamically. Unlike traditional CNNs, which use fixed square filters, Lp-Convolution allows AI models to adapt their filter shapes, stretching them horizontally or vertically based on the task, much like how the human brain selectively focuses on relevant details. This breakthrough solves a long-standing challenge in AI research known as the large kernel problem.
Simply increasing filter sizes in CNNs (e.g., using 7×7 or larger kernels) usually does not improve performance, despite adding more parameters. Lp-Convolution overcomes this limitation by introducing flexible, biologically inspired connectivity patterns.

Real-World Performance: Stronger, Smarter, and More Robust AI

In tests on standard image classification datasets (CIFAR-100, TinyImageNet), Lp-Convolution significantly improved accuracy on both classic models like AlexNet and modern architectures like RepLKNet. The method also proved highly robust against corrupted data, a major challenge in real-world AI applications. Moreover, the researchers found that when the Lp-masks used in their method resembled a Gaussian distribution, the AI's internal processing patterns closely matched biological neural activity, as confirmed through comparisons with mouse brain data.

"We humans quickly spot what matters in a crowded scene," said Dr. C. Justin Lee, Director of the Center for Cognition and Sociality within the Institute for Basic Science. "Our Lp-Convolution mimics this ability, allowing AI to flexibly focus on the most relevant parts of an image, just like the brain does."

Impact and Future Applications

Unlike previous efforts that either relied on small, rigid filters or required resource-heavy transformers, Lp-Convolution offers a practical, efficient alternative. This innovation could revolutionize fields such as:

- Autonomous driving, where AI must quickly detect obstacles in real time
- Medical imaging, improving AI-based diagnoses by highlighting subtle details
- Robotics, enabling smarter and more adaptable machine vision under changing conditions

"This work is a powerful contribution to both AI and neuroscience," said Director C. Justin Lee. "By aligning AI more closely with the brain, we've unlocked new potential for CNNs, making them smarter, more adaptable, and more biologically realistic."

Looking ahead, the team plans to refine this technology further, exploring its applications in complex reasoning tasks such as puzzle-solving (e.g., Sudoku) and real-time image processing. The study will be presented at the International Conference on Learning Representations (ICLR) 2025, and the research team has made their code and models publicly available:

[2]

Brain-inspired AI technique mimics human visual processing to enhance machine vision

A team of researchers from the Institute for Basic Science, Yonsei University, and the Max Planck Institute has developed a new artificial intelligence (AI) technique that brings machine vision closer to how the human brain processes images. Called Lp-Convolution, this method improves the accuracy and efficiency of image recognition systems while reducing the computational burden of existing AI models.

The human brain is remarkably efficient at identifying key details in complex scenes, an ability that traditional AI systems have struggled to replicate. Convolutional Neural Networks (CNNs), the most widely used AI models for image recognition, process images using small, square-shaped filters. While effective, this rigid approach limits their ability to capture broader patterns in fragmented data. More recently, vision transformers have shown superior performance by analyzing entire images at once, but they require massive computational power and large datasets, making them impractical for many real-world applications.

Inspired by how the brain's visual cortex processes information selectively through circular, sparse connections, the research team sought a middle ground: could a brain-like approach make CNNs both efficient and powerful?

Introducing Lp-Convolution: A smarter way to see

To answer this, the team developed Lp-Convolution, a novel method that uses a multivariate p-generalized normal distribution (MPND) to reshape CNN filters dynamically. Unlike traditional CNNs, which use fixed square filters, Lp-Convolution allows AI models to adapt their filter shapes, stretching them horizontally or vertically based on the task, much like how the human brain selectively focuses on relevant details. This breakthrough solves a long-standing challenge in AI research known as the large kernel problem. Simply increasing filter sizes in CNNs (e.g., using 7×7 or larger kernels) usually does not improve performance, despite adding more parameters. Lp-Convolution overcomes this limitation by introducing flexible, biologically inspired connectivity patterns.

Real-world performance: Stronger, smarter, and more robust AI

In tests on standard image classification datasets (CIFAR-100, TinyImageNet), Lp-Convolution significantly improved accuracy on both classic models like AlexNet and modern architectures like RepLKNet. The method also proved highly robust against corrupted data, a major challenge in real-world AI applications. Moreover, the researchers found that when the Lp-masks used in their method resembled a Gaussian distribution, the AI's internal processing patterns closely matched biological neural activity, as confirmed through comparisons with mouse brain data.

"We humans quickly spot what matters in a crowded scene," said Dr. C. Justin Lee, Director of the Center for Cognition and Sociality within the Institute for Basic Science. "Our Lp-Convolution mimics this ability, allowing AI to flexibly focus on the most relevant parts of an image, just like the brain does."

Impact and future applications

Unlike previous efforts that either relied on small, rigid filters or required resource-heavy transformers, Lp-Convolution offers a practical, efficient alternative. This innovation could revolutionize fields such as autonomous driving, medical imaging, and robotics.

"This work is a powerful contribution to both AI and neuroscience," said Director Lee. "By aligning AI more closely with the brain, we've unlocked new potential for CNNs, making them smarter, more adaptable, and more biologically realistic."

Looking ahead, the team plans to refine this technology further, exploring its applications in complex reasoning tasks such as puzzle-solving (e.g., Sudoku) and real-time image processing. The study will be presented at the International Conference on Learning Representations (ICLR 2025), and the research team has made their code and models publicly available on GitHub and OpenReview.net.
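Neither article gives the mask formula, so the following is only a minimal sketch of the filter-reshaping idea described above, under stated assumptions. It assumes a simplified, axis-aligned Lp-mask m(x, y) = exp(-(|x/σx|^p + |y/σy|^p)) that is multiplied element-wise into an ordinary large kernel before a standard 2D cross-correlation. The names `lp_mask` and `masked_conv2d` are hypothetical, and the published Lp-Convolution code may parameterize the MPND differently (for example, with rotations or per-channel shapes).

```python
import math


def lp_mask(size, p, sigma_x, sigma_y):
    """Build a size x size Lp-mask with values exp(-(|x/sigma_x|^p + |y/sigma_y|^p)).

    Simplified, axis-aligned stand-in for an MPND-shaped mask (an assumption,
    not the published parameterization). p sets the shape: p = 2 is Gaussian,
    p = 1 gives diamond-shaped contours, and large p approaches a square.
    sigma_x > sigma_y stretches the mask horizontally, and vice versa.
    """
    c = (size - 1) / 2  # centre offset; an odd size keeps the centre on a pixel
    return [
        [
            math.exp(-(abs((col - c) / sigma_x) ** p + abs((row - c) / sigma_y) ** p))
            for col in range(size)
        ]
        for row in range(size)
    ]


def masked_conv2d(image, kernel, mask):
    """Valid-mode 2D cross-correlation (what CNN layers compute) with the
    kernel weights multiplied element-wise by the mask first."""
    k = len(kernel)
    eff = [[kernel[i][j] * mask[i][j] for j in range(k)] for i in range(k)]
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(eff[i][j] * image[r + i][s + j] for i in range(k) for j in range(k))
            for s in range(out_w)
        ]
        for r in range(out_h)
    ]


if __name__ == "__main__":
    # A 7x7, Gaussian-like (p = 2) mask stretched horizontally.
    m = lp_mask(7, p=2.0, sigma_x=3.0, sigma_y=1.0)
    for row in m:
        print(" ".join(f"{v:.2f}" for v in row))

    # An all-ones 7x7 kernel on a 9x9 all-ones image: each output equals the
    # sum of the mask, because the mask down-weights the kernel's outer taps.
    image = [[1.0] * 9 for _ in range(9)]
    kernel = [[1.0] * 7 for _ in range(7)]
    print(masked_conv2d(image, kernel, m))
```

Here the kernel stays 7×7 while the mask decides which of its taps actually matter, which is one way to picture "flexible connectivity" on top of a large kernel. In the method described by the articles the filter shape adapts to the task; in this sketch p, σx and σy are fixed by hand.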


Researchers develop Lp-Convolution, a new AI technique that mimics human visual processing, improving image recognition accuracy and efficiency while reducing computational burden.

Bridging the Gap Between AI and Human Vision

Researchers from the Institute for Basic Science, Yonsei University, and the Max Planck Institute have developed a groundbreaking AI technique called Lp-Convolution, which brings machine vision closer to human visual processing [1][2]. This innovative approach addresses longstanding challenges in AI image recognition, offering improved accuracy and efficiency while reducing computational demands.

The Challenge of Replicating Human Vision

Traditional Convolutional Neural Networks (CNNs) have been the cornerstone of AI image recognition, but their rigid, square-shaped filters limit their ability to capture broader patterns in complex scenes. While Vision Transformers (ViTs) have shown superior performance, their massive computational requirements make them impractical for many real-world applications [1].

Lp-Convolution: A Brain-Inspired Solution

Inspired by the human brain's visual cortex, which processes information through circular, sparse connections, the research team developed Lp-Convolution. This method uses a multivariate p-generalized normal distribution (MPND) to dynamically reshape CNN filters [1][2]. Unlike traditional CNNs, which use fixed square filters, Lp-Convolution allows AI models to adapt their filter shapes to the task at hand, stretching them horizontally or vertically and mimicking the brain's ability to selectively focus on relevant details.

Overcoming the Large Kernel Problem

Lp-Convolution solves the "large kernel problem" in AI research: simply increasing filter sizes in CNNs (e.g., to 7×7 or larger kernels) usually does not improve performance, despite adding more parameters. Lp-Convolution introduces flexible, biologically inspired connectivity patterns that overcome this limitation [1].

Impressive Performance and Biological Realism

In tests on standard image classification datasets (CIFAR-100, TinyImageNet), Lp-Convolution significantly improved accuracy for both classic models like AlexNet and modern architectures like RepLKNet. The method also demonstrated high robustness against corrupted data, a critical factor in real-world AI applications [1][2].

Notably, when the Lp-masks resembled a Gaussian distribution, the AI's internal processing patterns closely matched biological neural activity, as confirmed through comparisons with mouse brain data [1].
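The Gaussian finding above can be made concrete under a simplifying assumption: take an axis-aligned mask $m_p(x, y)$ with shape parameter $p$ and widths $\sigma_x, \sigma_y$. This form is hypothetical, chosen for illustration, and is not necessarily the exact MPND parameterization used in the paper. Setting $p = 2$ recovers an unnormalized Gaussian, while letting $p$ grow yields a box, i.e. a conventional square filter region:

```latex
\begin{aligned}
m_p(x, y) &= \exp\!\left(-\left|\frac{x}{\sigma_x}\right|^{p} - \left|\frac{y}{\sigma_y}\right|^{p}\right) \\
m_2(x, y) &= \exp\!\left(-\frac{x^2}{\sigma_x^2}\right)\exp\!\left(-\frac{y^2}{\sigma_y^2}\right) \\
\lim_{p \to \infty} m_p(x, y) &=
\begin{cases}
1, & |x| < \sigma_x \text{ and } |y| < \sigma_y \\
0, & |x| > \sigma_x \text{ or } |y| > \sigma_y
\end{cases}
\end{aligned}
```

With $p = 2$, the mask is a Gaussian with standard deviation $\sigma_x/\sqrt{2}$ along $x$ and $\sigma_y/\sqrt{2}$ along $y$, so under this assumption a "Gaussian-like" Lp-mask corresponds to $p$ near 2.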

Potential Real-World Applications

Dr. C. Justin Lee, Director of the Center for Cognition and Sociality at the Institute for Basic Science, described the work as "a powerful contribution to both AI and neuroscience" [1][2]. According to the articles, the technique could benefit fields such as [1]:

- Autonomous driving: Enhancing real-time obstacle detection
- Medical imaging: Improving AI-based diagnoses by highlighting subtle details
- Robotics: Enabling more adaptable machine vision under changing conditions

Future Directions and Availability

The research team plans to refine the technology further, exploring its applications in complex reasoning tasks such as puzzle-solving (e.g., Sudoku) and real-time image processing [1][2]. The study will be presented at the International Conference on Learning Representations (ICLR) 2025, and the team has made its code and models publicly available on GitHub and OpenReview.net [2].

This breakthrough represents a significant step toward aligning AI vision models more closely with the brain, potentially unlocking new capabilities in machine vision.


Summarized by Navi
