Breakthrough Brain-Computer Interface Restores Real-Time Speech for ALS Patient

9 Sources

[1]

World first: brain implant lets man speak with expression -- and sing

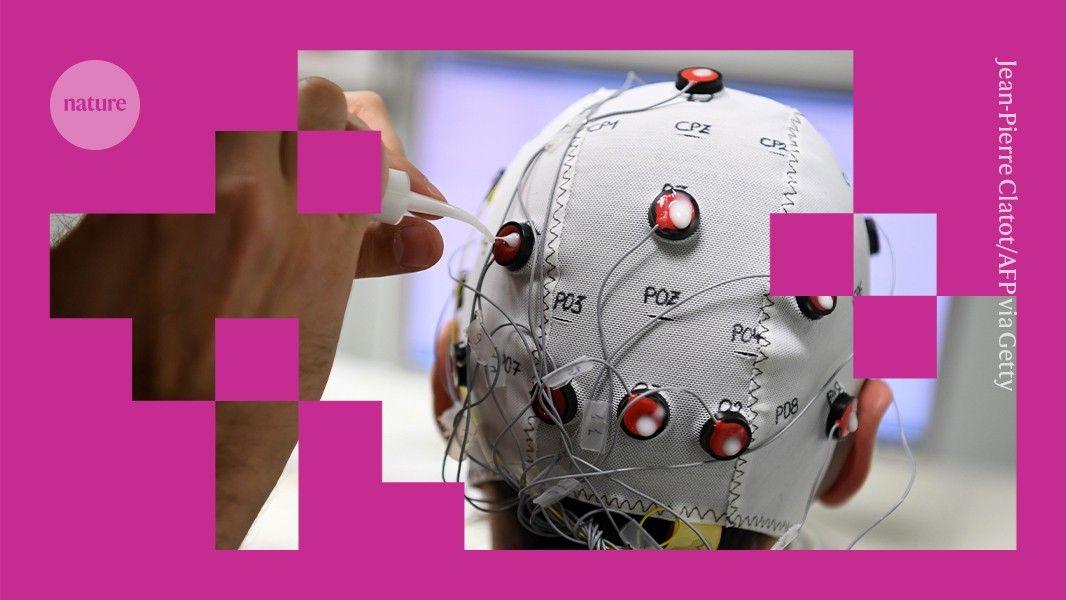

A man with a severe speech disability is able to speak expressively and sing using a brain implant that translates his neural activity into words almost instantly. The device conveys changes of tone when he asks questions, emphasizes the words of his choice and allows him to hum a string of notes in three pitches. The system -- known as a brain-computer interface (BCI) -- used artificial intelligence (AI) to decode the participant's electrical brain activity as he attempted to speak. The device is the first to reproduce not only a person's intended words but also features of natural speech such as tone, pitch and emphasis, which help to express meaning and emotion. In a study, a synthetic voice that mimicked the participant's own spoke his words within 10 milliseconds of the neural activity that signalled his intention to speak. The system, described today in Nature, marks a significant improvement over earlier BCI models, which streamed speech within three seconds or produced it only after users finished miming an entire sentence. "This is the holy grail in speech BCIs," says Christian Herff, a computational neuroscientist at Maastricht University, the Netherlands, who was not involved in the study. "This is now real, spontaneous, continuous speech." The study participant, a 45-year-old man, lost his ability to speak clearly after developing amyotrophic lateral sclerosis, a form of motor neuron disease, which damages the nerves that control muscle movements, including those needed for speech. Although he could still make sounds and mouth words, his speech was slow and unclear. Five years after his symptoms began, the participant underwent surgery to insert 256 silicon electrodes, each 1.5-mm long, in a brain region that controls movement. Study co-author Maitreyee Wairagkar, a neuroscientist at the University of California, Davis, and her colleagues trained deep-learning algorithms to capture the signals in his brain every 10 milliseconds. 
Their system decodes, in real time, the sounds the man attempts to produce rather than his intended words or the constituent phonemes -- the subunits of speech that form spoken words. "We don't always use words to communicate what we want. We have interjections. We have other expressive vocalizations that are not in the vocabulary," explains Wairagkar. "In order to do that, we have adopted this approach, which is completely unrestricted." The team also personalized the synthetic voice to sound like the man's own, by training AI algorithms on recordings of interviews he had done before the onset of his disease. The team asked the participant to attempt to make interjections such as 'aah', 'ooh' and 'hmm' and say made-up words. The BCI successfully produced these sounds, showing that it could generate speech without needing a fixed vocabulary. Using the device, the participant spelt out words, responded to open-ended questions and said whatever he wanted, using some words that were not part of the decoder's training data. He told the researchers that listening to the synthetic voice produce his speech made him "feel happy" and that it felt like his "real voice". In other experiments, the BCI identified whether the participant was attempting to say a sentence as a question or as a statement. The system could also determine when he stressed different words in the same sentence and adjust the tone of his synthetic voice accordingly. "We are bringing in all these different elements of human speech which are really important," says Wairagkar. Previous BCIs could produce only flat, monotone speech. "This is a bit of a paradigm shift in the sense that it can really lead to a real-life tool," says Silvia Marchesotti, a neuroengineer at the University of Geneva in Switzerland. The system's features "would be crucial for adoption for daily use for the patients in the future".

[2]

Mind-reading AI turns paralysed man's brainwaves into instant speech

A brain-computer interface has enabled a man with paralysis to have real-time conversations, without the usual delay in speech

A man who lost the ability to speak can now hold real-time conversations and even sing through a brain-controlled synthetic voice. The brain-computer interface reads the man's neural activity via electrodes implanted in his brain and then instantaneously generates speech sounds that reflect his intended pitch, intonation and emphasis. "This is kind of the first of its kind for instantaneous voice synthesis - within 25 milliseconds," says Sergey Stavisky at the University of California, Davis. The technology needs to be improved to make the speech easier to understand, says Maitreyee Wairagkar, also at UC Davis. But the man, who lost the ability to talk due to amyotrophic lateral sclerosis, still says it makes him "happy" and that it feels like his real voice, according to Wairagkar. Speech neuroprostheses that use brain-computer interfaces already exist, but these generally take several seconds to convert brain activity into sounds. That makes natural conversation hard, as people can't interrupt, clarify or respond in real time, says Stavisky. "It's like having a phone conversation with a bad connection." To synthesise speech more realistically, Wairagkar, Stavisky and their colleagues implanted 256 electrodes into the parts of the man's brain that help control the facial muscles used for speaking. Then, across multiple sessions, the researchers showed him thousands of sentences on a screen and asked him to try saying them aloud, sometimes with specific intonations, while recording his brain activity. "The idea is that, for example, you could say, 'How are you doing today?' or 'How are you doing today?', and that changes the semantics of the sentence," says Stavisky. "That makes for a much richer, more natural exchange - and a big step forward compared to previous systems."
Next, the team fed that data into an artificial intelligence model that was trained to associate specific patterns of neural activity with the words and inflections the man was trying to express. The machine then generated speech based on the brain signals, producing a voice that reflected both what he intended to say and how he wanted to say it. The researchers even trained the AI on voice recordings from before the man's condition progressed, using voice-cloning technology to make the synthetic voice sound like his own. In another part of the experiment, the researchers had him try to sing simple melodies using different pitches. Their model decoded his intended pitch in real time and then adjusted the singing voice it produced. He also used the system to speak without being prompted and to produce sounds like "hmm", "eww" or made-up words, says Wairagkar. "He's a very articulate and intelligent man," says team member David Brandman, also at UC Davis. "He's gone from being paralysed and unable to speak to continuing to work full-time and have meaningful conversations."

[3]

Brain Implant Lets Man with ALS Speak and Sing with His 'Real Voice'

A man with a severe speech disability is able to speak expressively and sing using a brain implant that translates his neural activity into words almost instantly. The device conveys changes of tone when he asks questions, emphasizes the words of his choice and allows him to hum a string of notes in three pitches. The system -- known as a brain-computer interface (BCI) -- used artificial intelligence (AI) to decode the participant's electrical brain activity as he attempted to speak. The device is the first to reproduce not only a person's intended words but also features of natural speech such as tone, pitch and emphasis, which help to express meaning and emotion. In a study, a synthetic voice that mimicked the participant's own spoke his words within 10 milliseconds of the neural activity that signalled his intention to speak. The system, described today in Nature, marks a significant improvement over earlier BCI models, which streamed speech within three seconds or produced it only after users finished miming an entire sentence. "This is the holy grail in speech BCIs," says Christian Herff, a computational neuroscientist at Maastricht University, the Netherlands, who was not involved in the study. "This is now real, spontaneous, continuous speech." The study participant, a 45-year-old man, lost his ability to speak clearly after developing amyotrophic lateral sclerosis, a form of motor neuron disease, which damages the nerves that control muscle movements, including those needed for speech. Although he could still make sounds and mouth words, his speech was slow and unclear.
Five years after his symptoms began, the participant underwent surgery to insert 256 silicon electrodes, each 1.5-mm long, in a brain region that controls movement. Study co-author Maitreyee Wairagkar, a neuroscientist at the University of California, Davis, and her colleagues trained deep-learning algorithms to capture the signals in his brain every 10 milliseconds. Their system decodes, in real time, the sounds the man attempts to produce rather than his intended words or the constituent phonemes -- the subunits of speech that form spoken words. "We don't always use words to communicate what we want. We have interjections. We have other expressive vocalizations that are not in the vocabulary," explains Wairagkar. "In order to do that, we have adopted this approach, which is completely unrestricted." The team also personalized the synthetic voice to sound like the man's own, by training AI algorithms on recordings of interviews he had done before the onset of his disease. The team asked the participant to attempt to make interjections such as 'aah', 'ooh' and 'hmm' and say made-up words. The BCI successfully produced these sounds, showing that it could generate speech without needing a fixed vocabulary. Using the device, the participant spelt out words, responded to open-ended questions and said whatever he wanted, using some words that were not part of the decoder's training data. He told the researchers that listening to the synthetic voice produce his speech made him "feel happy" and that it felt like his "real voice". In other experiments, the BCI identified whether the participant was attempting to say a sentence as a question or as a statement. The system could also determine when he stressed different words in the same sentence and adjust the tone of his synthetic voice accordingly. "We are bringing in all these different elements of human speech which are really important," says Wairagkar. Previous BCIs could produce only flat, monotone speech. 
"This is a bit of a paradigm shift in the sense that it can really lead to a real-life tool," says Silvia Marchesotti, a neuroengineer at the University of Geneva in Switzerland. The system's features "would be crucial for adoption for daily use for the patients in the future."

[4]

Brain-computer interface restores real-time speech in ALS patient

In a new study published in the scientific journal Nature, the researchers demonstrate how this new technology can instantaneously translate brain activity into voice as a person tries to speak -- effectively creating a digital vocal tract. The system allowed the study participant, who has amyotrophic lateral sclerosis (ALS), to "speak" through a computer with his family in real time, change his intonation and "sing" simple melodies. "Translating neural activity into text, which is how our previous speech brain-computer interface works, is akin to text messaging. It's a big improvement compared to standard assistive technologies, but it still leads to delayed conversation. By comparison, this new real-time voice synthesis is more like a voice call," said Sergey Stavisky, senior author of the paper and an assistant professor in the UC Davis Department of Neurological Surgery. Stavisky co-directs the UC Davis Neuroprosthetics Lab. "With instantaneous voice synthesis, neuroprosthesis users will be able to be more included in a conversation. For example, they can interrupt, and people are less likely to interrupt them accidentally," Stavisky said.

Decoding brain signals at heart of new technology

The man is enrolled in the BrainGate2 clinical trial at UC Davis Health. His ability to communicate through a computer has been made possible with an investigational brain-computer interface (BCI). It consists of four microelectrode arrays surgically implanted into the region of the brain responsible for producing speech. These devices record the activity of neurons in the brain and send it to computers that interpret the signals to reconstruct voice. "The main barrier to synthesizing voice in real-time was not knowing exactly when and how the person with speech loss is trying to speak," said Maitreyee Wairagkar, first author of the study and project scientist in the Neuroprosthetics Lab at UC Davis. "Our algorithms map neural activity to intended sounds at each moment of time. This makes it possible to synthesize nuances in speech and give the participant control over the cadence of his BCI-voice."

Instantaneous, expressive speech with BCI shows promise

The brain-computer interface was able to translate the study participant's neural signals into audible speech played through a speaker very quickly -- one-fortieth of a second. This short delay is similar to the delay a person experiences when they speak and hear the sound of their own voice. The technology also allowed the participant to say new words (words not already known to the system) and to make interjections. He was able to modulate the intonation of his generated computer voice to ask a question or emphasize specific words in a sentence. The participant also took steps toward varying pitch by singing simple, short melodies. His BCI-synthesized voice was often intelligible: Listeners could understand almost 60% of the synthesized words correctly (as opposed to 4% when he was not using the BCI).

Real-time speech helped by algorithms

The process of instantaneously translating brain activity into synthesized speech is helped by advanced artificial intelligence algorithms. The algorithms for the new system were trained with data collected while the participant was asked to try to speak sentences shown to him on a computer screen. This gave the researchers information about what he was trying to say. The neural activity showed the firing patterns of hundreds of neurons. The researchers aligned those patterns with the speech sounds the participant was trying to produce at that moment in time. This helped the algorithm learn to accurately reconstruct the participant's voice from just his neural signals.

Clinical trial offers hope

"Our voice is part of what makes us who we are. Losing the ability to speak is devastating for people living with neurological conditions," said David Brandman, co-director of the UC Davis Neuroprosthetics Lab and the neurosurgeon who performed the participant's implant. "The results of this research provide hope for people who want to talk but can't. We showed how a paralyzed man was empowered to speak with a synthesized version of his voice. This kind of technology could be transformative for people living with paralysis." Brandman is an assistant professor in the Department of Neurological Surgery and is the site-responsible principal investigator of the BrainGate2 clinical trial.

Limitations

The researchers note that although the findings are promising, brain-to-voice neuroprostheses remain in an early phase. A key limitation is that the research was performed with a single participant with ALS. It will be crucial to replicate these results with more participants, including those who have speech loss from other causes, such as stroke. The BrainGate2 trial is enrolling participants. To learn more about the study, visit braingate.org or contact [email protected]. Caution: Investigational device, limited by federal law to investigational use.

[5]

Brain interface restores real-time speech for man with ALS

University of California - Davis Health, Jun 11 2025

Researchers at the University of California, Davis, have developed an investigational brain-computer interface that holds promise for restoring the voices of people who have lost the ability to speak due to neurological conditions. In a new study published in the scientific journal Nature, the researchers demonstrate how this new technology can instantaneously translate brain activity into voice as a person tries to speak - effectively creating a digital vocal tract. The system allowed the study participant, who has amyotrophic lateral sclerosis (ALS), to "speak" through a computer with his family in real time, change his intonation and "sing" simple melodies. "Translating neural activity into text, which is how our previous speech brain-computer interface works, is akin to text messaging. It's a big improvement compared to standard assistive technologies, but it still leads to delayed conversation. By comparison, this new real-time voice synthesis is more like a voice call," said Sergey Stavisky, senior author of the paper and assistant professor in the UC Davis Department of Neurological Surgery. Stavisky co-directs the UC Davis Neuroprosthetics Lab. "With instantaneous voice synthesis, neuroprosthesis users will be able to be more included in a conversation. For example, they can interrupt, and people are less likely to interrupt them accidentally," Stavisky said.

Decoding brain signals at heart of new technology

The man is enrolled in the BrainGate2 clinical trial at UC Davis Health. His ability to communicate through a computer has been made possible with an investigational brain-computer interface (BCI). It consists of four microelectrode arrays surgically implanted into the region of the brain responsible for producing speech. These devices record the activity of neurons in the brain and send it to computers that interpret the signals to reconstruct voice. "The main barrier to synthesizing voice in real-time was not knowing exactly when and how the person with speech loss is trying to speak," said Maitreyee Wairagkar, first author of the study and project scientist in the Neuroprosthetics Lab at UC Davis. "Our algorithms map neural activity to intended sounds at each moment of time. This makes it possible to synthesize nuances in speech and give the participant control over the cadence of his BCI-voice."

Instantaneous, expressive speech with BCI shows promise

The brain-computer interface was able to translate the study participant's neural signals into audible speech played through a speaker very quickly - one-fortieth of a second. This short delay is similar to the delay a person experiences when they speak and hear the sound of their own voice. The technology also allowed the participant to say new words (words not already known to the system) and to make interjections. He was able to modulate the intonation of his generated computer voice to ask a question or emphasize specific words in a sentence. The participant also took steps toward varying pitch by singing simple, short melodies. His BCI-synthesized voice was often intelligible: Listeners could understand almost 60% of the synthesized words correctly (as opposed to 4% when he was not using the BCI).

Real-time speech helped by algorithms

The process of instantaneously translating brain activity into synthesized speech is helped by advanced artificial intelligence algorithms. The algorithms for the new system were trained with data collected while the participant was asked to try to speak sentences shown to him on a computer screen. This gave the researchers information about what he was trying to say. The neural activity showed the firing patterns of hundreds of neurons. The researchers aligned those patterns with the speech sounds the participant was trying to produce at that moment in time. This helped the algorithm learn to accurately reconstruct the participant's voice from just his neural signals.

Clinical trial offers hope

"Our voice is part of what makes us who we are. Losing the ability to speak is devastating for people living with neurological conditions," said David Brandman, co-director of the UC Davis Neuroprosthetics Lab and the neurosurgeon who performed the participant's implant. "The results of this research provide hope for people who want to talk but can't. We showed how a paralyzed man was empowered to speak with a synthesized version of his voice. This kind of technology could be transformative for people living with paralysis." Brandman is an assistant professor in the Department of Neurological Surgery and is the site-responsible principal investigator of the BrainGate2 clinical trial.

Limitations

The researchers note that although the findings are promising, brain-to-voice neuroprostheses remain in an early phase. A key limitation is that the research was performed with a single participant with ALS. It will be crucial to replicate these results with more participants, including those who have speech loss from other causes, such as stroke. The BrainGate2 trial is enrolling participants. To learn more about the study, visit braingate.org or contact [email protected].

[6]

First-of-its-kind technology helps man with ALS speak in real time

Researchers at the University of California, Davis, have developed an investigational brain-computer interface that holds promise for restoring the voices of people who have lost the ability to speak due to neurological conditions. In a new study published in the journal Nature, the researchers demonstrate how this new technology can instantaneously translate brain activity into voice as a person tries to speak -- effectively creating a digital vocal tract. The system allowed the study participant, who has amyotrophic lateral sclerosis (ALS), to speak through a computer with his family in real time, change his intonation and sing simple melodies. "Translating neural activity into text, which is how our previous speech brain-computer interface works, is akin to text messaging. It's a big improvement compared to standard assistive technologies, but it still leads to delayed conversation. By comparison, this new real-time voice synthesis is more like a voice call," said Sergey Stavisky, senior author of the paper and an assistant professor in the UC Davis Department of Neurological Surgery. Stavisky co-directs the UC Davis Neuroprosthetics Lab. "With instantaneous voice synthesis, neuroprosthesis users will be able to be more included in a conversation. For example, they can interrupt, and people are less likely to interrupt them accidentally," Stavisky said.

Decoding brain signals at heart of new technology

The man is enrolled in the BrainGate2 clinical trial at UC Davis Health. His ability to communicate through a computer has been made possible with an investigational brain-computer interface (BCI). It consists of four microelectrode arrays surgically implanted into the region of the brain responsible for producing speech. These devices record the activity of neurons in the brain and send it to computers that interpret the signals to reconstruct voice. "The main barrier to synthesizing voice in real-time was not knowing exactly when and how the person with speech loss is trying to speak," said Maitreyee Wairagkar, first author of the study and project scientist in the Neuroprosthetics Lab at UC Davis. "Our algorithms map neural activity to intended sounds at each moment of time. This makes it possible to synthesize nuances in speech and give the participant control over the cadence of his BCI-voice."

Instantaneous, expressive speech with BCI shows promise

The brain-computer interface was able to translate the study participant's neural signals into audible speech played through a speaker very quickly -- one-fortieth of a second. This short delay is similar to the delay a person experiences when they speak and hear the sound of their own voice. The technology also allowed the participant to say new words (words not already known to the system) and to make interjections. He was able to modulate the intonation of his generated computer voice to ask a question or emphasize specific words in a sentence. The participant also took steps toward varying pitch by singing simple, short melodies. His BCI-synthesized voice was often intelligible: Listeners could understand almost 60% of the synthesized words correctly (as opposed to 4% when he was not using the BCI).

Real-time speech helped by algorithms

The process of instantaneously translating brain activity into synthesized speech is helped by advanced artificial intelligence algorithms. The algorithms for the new system were trained with data collected while the participant was asked to try to speak sentences shown to him on a computer screen. This gave the researchers information about what he was trying to say. The neural activity showed the firing patterns of hundreds of neurons. The researchers aligned those patterns with the speech sounds the participant was trying to produce at that moment in time. This helped the algorithm learn to accurately reconstruct the participant's voice from just his neural signals.

Clinical trial offers hope

"Our voice is part of what makes us who we are. Losing the ability to speak is devastating for people living with neurological conditions," said David Brandman, co-director of the UC Davis Neuroprosthetics Lab and the neurosurgeon who performed the participant's implant. "The results of this research provide hope for people who want to talk but can't. We showed how a paralyzed man was empowered to speak with a synthesized version of his voice. This kind of technology could be transformative for people living with paralysis." Brandman is an assistant professor in the Department of Neurological Surgery and is the site-responsible principal investigator of the BrainGate2 clinical trial. The researchers note that although the findings are promising, brain-to-voice neuroprostheses remain in an early phase. A key limitation is that the research was performed with a single participant with ALS. It will be crucial to replicate these results with more participants, including those who have speech loss from other causes, such as stroke.

[7]

Brain implant breakthrough helps ALS man talk - and sing - again

The study participant's neural signals from reading on-screen text helped train the AI decoder model

In another advancement in the field of brain-computer interfaces (BCI), a new implant-based system has enabled a paralyzed person to not only talk, but also 'sing' simple melodies through a computer - with practically no delay. The tech developed by researchers at University of California, Davis (UC Davis) was trialed with a study participant who suffers from amyotrophic lateral sclerosis (ALS). It essentially captured raw neural signals through four microelectrode arrays surgically implanted into the region of the brain responsible for physically producing speech. In combination with low-latency processing and an AI-driven decoding model, the participant's speech was synthesized in real time through a speaker. To be clear, this means the system isn't trying to read the participant's thoughts, but rather translating the brain signals produced when he tries to use his muscles to speak. The system also sounds like the participant, thanks to a voice cloning algorithm trained on audio samples captured before he developed ALS. The entire process, from acquiring the raw neural signals to generating speech samples, occurs within 10 milliseconds, enabling near-instantaneous speech. The BCI also recognized when the participant was attempting to sing, identified one of three intended pitches, and modulated his voice to synthesize vocal melodies. This bit, demonstrated in a video supplied by the researchers, appears rudimentary, but it feels wrong to use that word to describe such a remarkable development in enabling nuanced communication among paralyzed people who may have felt they'd never express themselves naturally again. Sergey Stavisky, senior author of the paper on this tech that's set to appear in Nature, explained that this is a major step in that direction.
"With instantaneous voice synthesis, neuroprosthesis users will be able to be more included in a conversation," he said. "For example, they can interrupt, and people are less likely to interrupt them accidentally." If this work sounds familiar, it's because it's similar to tech we saw in April from University of California Berkeley and University of California San Francisco. Both systems gather neural signals using brain implants from the motor cortex, and leverage AI-powered systems trained on data captured from the participant attempting to speak words displayed on a screen. What's also cool about the UC Davis tech is that it reproduced the participant's attempts to interject with 'aah', 'ooh,' and 'hmm.' It was even able to identify whether he was saying a sentence as a question or a statement, and when he was stressing certain words. The team said it also successfully reproduced made-up words outside of the AI decoder's training data. All of this makes for far more expressive synthesized speech than previous systems. Technologies like these could transform the lives of paralyzed people, and it's incredible to see these incremental advancements up close. The UC Davis researchers note that their study only involved a single participant, and their subsequent work will see them attempt to replicate these results with more subjects exhibiting speech loss from other conditions. "This is the holy grail in speech BCIs," Christian Herff, a computational neuroscientist at Maastricht University in the Netherlands, who was not involved in the study, commented in Nature. "This is now real, spontaneous, continuous speech."

[8]

UC Davis breakthrough lets ALS patient speak using only his thoughts

Allowing people with disabilities to talk by just thinking about a word, that's what UC Davis researchers hope to accomplish with new cutting-edge technology. It can be a breakthrough for people with ALS and other nonverbal conditions. One UC Davis Health patient has been diagnosed with ALS, a neurological disease that makes it impossible to speak out loud. Scientists have now directly wired his brain into a computer, allowing him to speak through it using only his thoughts. "It has been very exciting to see the system work," said Maitreyee Wairagkar, a UC Davis neuroprosthetics lab project scientist. The technology involves surgically implanting small electrodes. Artificial intelligence can then translate the neural activity into words. UC Davis researchers say it took the patient, who's not being publicly named, very little time to learn the technology. "Within 30 minutes, he was able to use this system to speak with a restricted vocabulary," Wairagkar said. It takes just milliseconds for brain waves to be interpreted by the computer, making it possible to hold a real-time conversation. "[The patient] has said that the voice that is synthesized with the system sounds like his own voice and that makes him happy," Wairagkar said. And it's not just words. The technology can even be used to sing. "These are just very simple melodies that we designed to see whether the system can capture his intention to change the pitch," Wairagkar said. Previously, ALS patients would use muscle or eye movements to type on a computer and generate a synthesized voice. That's how physicist Stephen Hawking, who also had ALS, was able to slowly speak. This new technology is faster but has only been used on one patient so far. Now, there's hope that these microchip implants could one day help other people with spinal cord and brain stem injuries. "There are millions of people around the world who live with speech disabilities," Wairagkar said. 
The UC Davis scientific study was just published in the journal "Nature," and researchers are looking for other volunteers to participate in the program.

[9]

First-of-Its-Kind Technology Helps Man with ALS 'Speak' in Real Time | Newswise

New brain-computer interface system enables faster, more natural conversation

Newswise -- (Sacramento, Calif.) -- Researchers at the University of California, Davis, have developed an investigational brain-computer interface that holds promise for restoring the ability to hold real-time conversations to people who have lost the ability to speak due to neurological conditions. In a new study published in the scientific journal Nature, the researchers demonstrate how this new technology can instantaneously translate brain activity into voice as a person tries to speak -- effectively creating a digital vocal tract with no detectable delay. The system allowed the study participant, who has amyotrophic lateral sclerosis (ALS), to "speak" through a computer with his family in real time, change his intonation and "sing" simple melodies.

"Translating neural activity into text, which is how our previous speech brain-computer interface works, is akin to text messaging. It's a big improvement compared to standard assistive technologies, but it still leads to delayed conversation. By comparison, this new real-time voice synthesis is more like a voice call," said Sergey Stavisky, senior author of the paper and an assistant professor in the UC Davis Department of Neurological Surgery. Stavisky co-directs the UC Davis Neuroprosthetics Lab.

"With instantaneous voice synthesis, neuroprosthesis users will be able to be more included in a conversation. For example, they can interrupt, and people are less likely to interrupt them accidentally," Stavisky said.

Decoding brain signals at heart of new technology

The man is enrolled in the BrainGate2 clinical trial at UC Davis Health. His ability to communicate through a computer has been made possible with an investigational brain-computer interface (BCI). It consists of four microelectrode arrays surgically implanted into the region of the brain responsible for producing speech. These devices record the activity of neurons in the brain and send it to computers that interpret the signals to reconstruct voice.

"The main barrier to synthesizing voice in real-time was not knowing exactly when and how the person with speech loss is trying to speak," said Maitreyee Wairagkar, first author of the study and project scientist in the Neuroprosthetics Lab at UC Davis. "Our algorithms map neural activity to intended sounds at each moment of time. This makes it possible to synthesize nuances in speech and give the participant control over the cadence of his BCI-voice."

Instantaneous, expressive speech with BCI shows promise

The brain-computer interface was able to translate the study participant's neural signals into audible speech played through a speaker very quickly -- one-fortieth of a second. This short delay is similar to the delay a person experiences when they speak and hear the sound of their own voice. The technology also allowed the participant to say new words (words not already known to the system) and to make interjections. He was able to modulate the intonation of his generated computer voice to ask a question or emphasize specific words in a sentence. The participant also took steps toward varying pitch by singing simple, short melodies. His BCI-synthesized voice was often intelligible: Listeners could understand almost 60% of the synthesized words correctly (as opposed to 4% when he was not using the BCI).

Real-time speech helped by algorithms

The process of instantaneously translating brain activity into synthesized speech is helped by advanced artificial intelligence algorithms. The algorithms for the new system were trained with data collected while the participant was asked to try to speak sentences shown to him on a computer screen. This gave the researchers information about what he was trying to say. The neural activity showed the firing patterns of hundreds of neurons. The researchers aligned those patterns with the speech sounds the participant was trying to produce at that moment in time. This helped the algorithm learn to accurately reconstruct the participant's voice from just his neural signals.

Clinical trial offers hope

"Our voice is part of what makes us who we are. Losing the ability to speak is devastating for people living with neurological conditions," said David Brandman, co-director of the UC Davis Neuroprosthetics Lab and the neurosurgeon who performed the participant's implant. "The results of this research provide hope for people who want to talk but can't. We showed how a paralyzed man was empowered to speak with a synthesized version of his voice. This kind of technology could be transformative for people living with paralysis."

Brandman is an assistant professor in the Department of Neurological Surgery and is the site-responsible principal investigator of the BrainGate2 clinical trial.

Limitations

The researchers note that although the findings are promising, brain-to-voice neuroprostheses remain in an early phase. A key limitation is that the research was performed with a single participant with ALS. It will be crucial to replicate these results with more participants, including those who have speech loss from other causes, such as stroke. The BrainGate2 trial is enrolling participants. To learn more about the study, visit braingate.org or contact [email protected].

Caution: Investigational device, limited by federal law to investigational use.
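
For readers who want to see why frame-by-frame decoding feels like "a voice call" rather than "text messaging," the following Python sketch compares the wait for the first sound under the two approaches. It is an illustration only, not the study's code: the function names are hypothetical, the 10-millisecond frame length is borrowed from other coverage of the same study, compute time is ignored, and the "decoder" is a placeholder that averages numbers.

```python
from fractions import Fraction

# Assumption: a 10 ms decoding step, as reported in other coverage of the study.
FRAME_MS = 10

# "One-fortieth of a second," expressed in milliseconds.
REPORTED_DELAY_MS = Fraction(1, 40) * 1000


def first_sound_delay_ms(n_frames, streaming, frame_ms=FRAME_MS):
    """Wait before the first audio is heard, ignoring compute time.

    A streaming decoder can emit sound after a single frame; a
    sentence-level decoder has to wait for every frame of the sentence.
    """
    if n_frames < 1:
        raise ValueError("need at least one frame")
    frames_needed = 1 if streaming else n_frames
    return frames_needed * frame_ms


def toy_streaming_decoder(frames):
    """Yield (time_ms, value) once per frame.

    The mean of each frame stands in for a synthesized audio chunk;
    it is a placeholder, not the researchers' model.
    """
    for index, frame in enumerate(frames):
        yield (index + 1) * FRAME_MS, sum(frame) / len(frame)


if __name__ == "__main__":
    three_second_sentence = 3000 // FRAME_MS  # number of 10 ms frames
    print(first_sound_delay_ms(three_second_sentence, streaming=False))
    print(first_sound_delay_ms(three_second_sentence, streaming=True))
    print(float(REPORTED_DELAY_MS))
```

Under these simplifying assumptions, a listener would wait for the whole sentence with sentence-level decoding but only for a single frame with streaming, which is the contrast Stavisky draws in the article.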


Researchers at UC Davis have developed a brain-computer interface that allows a man with ALS to speak in real time using a synthetic voice that mimics his own, with control over intonation and the ability to sing simple melodies.

Groundbreaking Brain-Computer Interface Restores Speech

In a significant breakthrough for neurotechnology and assistive communication, researchers at the University of California, Davis have developed a brain-computer interface (BCI) that allows a man with amyotrophic lateral sclerosis (ALS) to speak in real time using a synthetic voice. This innovative system, described in a study published in Nature, marks a substantial improvement over previous BCIs by reproducing not only the intended words but also natural speech features such as tone, pitch, and emphasis [1][2].

The Technology Behind the Voice


The BCI consists of 256 silicon electrodes implanted in the brain region controlling movement. The electrodes record neural signals, which deep-learning algorithms decode every 10 milliseconds. Unlike previous systems that focused on decoding intended words or phonemes, this approach decodes the sounds the participant attempts to produce in real time [1][3].

Dr. Maitreyee Wairagkar, a neuroscientist at UC Davis and co-author of the study, explains: "We don't always use words to communicate what we want. We have interjections. We have other expressive vocalizations that are not in the vocabulary. In order to do that, we have adopted this approach, which is completely unrestricted" [1].

Personalized and Expressive Communication

The system goes beyond mere word production. It allows the participant to:

- Speak with intonation, asking questions and emphasizing specific words

- Make interjections and produce sounds like "hmm" and "eww"

- Sing simple melodies in different pitches

Importantly, the synthetic voice was personalized to sound like the participant's own voice, using AI algorithms trained on recordings from before the onset of his disease [2][4].

Real-Time Performance and User Experience


The synthetic voice speaks words within 10 milliseconds of the neural activity signaling the intention to speak. This near-instantaneous response allows for natural conversation flow, including the ability to interrupt and respond in real time [2][5].

The 45-year-old participant, who lost his ability to speak clearly due to ALS, reported feeling "happy" when listening to the synthetic voice produce his speech, saying it felt like his "real voice" [1][3].

Implications and Future Directions

This technology represents a significant step forward in restoring communication for people with severe speech disabilities. Dr. Christian Herff, a computational neuroscientist not involved in the study, called it "the holy grail in speech BCIs" [1].

However, the researchers caution that brain-to-voice neuroprostheses are still in an early phase. The study was conducted with a single participant, and replication with more individuals, including those with speech loss from other causes like stroke, will be crucial [4][5].


Dr. David Brandman, co-director of the UC Davis Neuroprosthetics Lab, emphasized the potential impact: "The results of this research provide hope for people who want to talk but can't. We showed how a paralyzed man was empowered to speak with a synthesized version of his voice. This kind of technology could be transformative for people living with paralysis" [4].

As research continues, this breakthrough offers a glimpse into a future where those who have lost their voice to neurological conditions may once again be able to express themselves freely and naturally.

Summarized by Navi
