Building AI Voice Agents: A Comprehensive Guide to OpenAI's Real-Time API and Voice Technology

3 Sources

[1]

How to Build an AI Voice Agent using OpenAI Real-Time API

If you are interested in building your own AI voice agent with the new OpenAI Real-Time API, a new guide by Bart Slodyczka takes you through the essential stages of development, from integrating with Twilio to deploying your application on Replit. Building an AI voice agent with OpenAI's Real-Time API is a rewarding project that requires some careful planning and execution, but the result can be a sophisticated system that engages users in real-time conversations and processes data efficiently.

OpenAI's Real-Time API lets developers build speech-to-speech applications: your AI voice agent can take spoken input and reply with generated speech in real time. This makes it well suited to applications such as customer service bots and virtual assistants, where instant interaction is crucial.

To let your AI voice agent handle phone calls, you need to integrate it with Twilio. Twilio provides the infrastructure for managing phone numbers and for handling both incoming and outgoing calls. This integration is a critical step in creating an AI voice agent that can hold phone conversations with users.

For real-time communication between your AI voice agent and its users, WebSocket technology is crucial. A WebSocket keeps a persistent connection open between client and server, so data can flow in both directions instantly without a new request for each message. This is what allows your AI voice agent to hold natural, real-time conversations.
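To make the Twilio side concrete, the sketch below builds the TwiML a voice webhook could return so that Twilio greets the caller and then opens a media stream to your server over a WebSocket. `<Say>`, `<Connect>`, and `<Stream>` are Twilio TwiML verbs; the `/media-stream` path, the host name, and the helper name `build_stream_twiml` are assumptions for illustration, and the guide itself may structure this differently.

```python
from xml.sax.saxutils import escape, quoteattr


def build_stream_twiml(public_host: str, greeting: str) -> str:
    """Return TwiML that greets the caller, then connects the call audio
    to a WebSocket endpoint on our server via Twilio Media Streams.

    `public_host` is the externally reachable host name (no scheme).
    The "/media-stream" path is an assumed route name, not a Twilio requirement.
    """
    stream_url = f"wss://{public_host}/media-stream"
    return (
        '<?xml version="1.0" encoding="UTF-8"?>'
        "<Response>"
        f"<Say>{escape(greeting)}</Say>"  # escape &, <, > in spoken text
        "<Connect>"
        f"<Stream url={quoteattr(stream_url)} />"  # quoteattr adds the quotes
        "</Connect>"
        "</Response>"
    )
```

A web route that Twilio calls when the number rings would return this string with the content type `text/xml`, for example `build_stream_twiml("example.ngrok.app", "Please hold while we connect you.")`.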
To manage your code and simplify deployment, the guide recommends keeping the project in a GitHub repository and deploying it on Replit. GitHub provides version control and a central place to collaborate. Once your code is ready, Replit offers a cloud-based environment that simplifies deployment and keeps your application running continuously, so callers get reliable, uninterrupted service.

Customization is what makes an AI voice agent match your brand's identity and your specific requirements. By tailoring the system message and voice settings, you can give the agent a distinctive, engaging personality that resonates with your target audience.

To handle several phone calls at once, you need effective session management so that each caller's conversation stays separate from the others. Robust session management lets one AI voice agent serve multiple users simultaneously while giving each caller a seamless experience.

Integrating your AI voice agent with Make.com can significantly extend its data processing capabilities. Make.com automates data handling and streamlines workflows, so your agent can access and process information from various sources and give users accurate, timely answers.

Finally, deploy the application for continuous operation so the agent remains reliable and available, and plan for future enhancements to keep it competitive and relevant. Features such as function calling and knowledge base integration can make the agent more versatile and capable.
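As a minimal sketch of the session-management idea, the code below keeps one state object per active call, keyed by the Twilio stream identifier (`streamSid`). The `CallSession` fields and the `SessionRegistry` class are hypothetical names chosen for illustration; a production server would also need async-safe access and some form of persistence.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field


@dataclass
class CallSession:
    """State for one phone call (hypothetical fields for illustration)."""
    stream_sid: str
    started_at: float = field(default_factory=time.monotonic)
    transcript: list[str] = field(default_factory=list)


class SessionRegistry:
    """Keeps each caller's state isolated so concurrent calls don't mix."""

    def __init__(self) -> None:
        self._sessions: dict[str, CallSession] = {}

    def open(self, stream_sid: str) -> CallSession:
        if stream_sid in self._sessions:
            raise ValueError(f"session {stream_sid} already open")
        session = CallSession(stream_sid)
        self._sessions[stream_sid] = session
        return session

    def get(self, stream_sid: str) -> CallSession:
        return self._sessions[stream_sid]

    def close(self, stream_sid: str) -> CallSession | None:
        # pop() with a default makes closing the same call twice harmless
        return self._sessions.pop(stream_sid, None)

    def active_count(self) -> int:
        return len(self._sessions)
```

The design choice is simply that nothing about a call lives in global variables: every handler looks up its own `CallSession`, and closing the call removes its state.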
By following this guide, you can successfully build an AI voice agent using OpenAI's Real-Time API. Each step, from integration to deployment, plays a vital role in creating a robust and efficient system that engages users in natural, real-time conversations. With the right tools, techniques, and a focus on continuous improvement, your AI voice agent can deliver an exceptional user experience and drive business success.

[2]

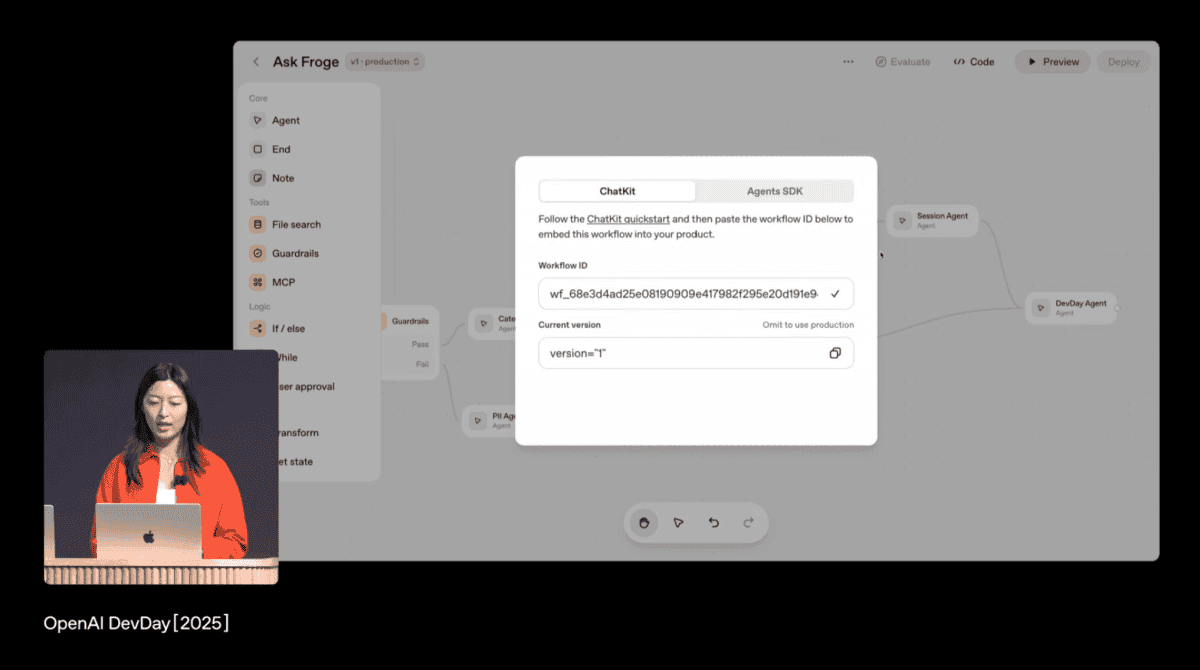

OpenAI Realtime API Demo: Building Your Own AI Voice Assistant

This week OpenAI held its DevDay 2024, revealing a wealth of updates aimed at developers. The key announcements include a Realtime API for voice interactions, a vision fine-tuning API, prompt caching, and model distillation. These updates are designed to make applications built on OpenAI's technology more efficient and capable.

If you want to learn more about the Realtime API for voice interactions, this demonstration walks through creating an AI voice assistant with Twilio Voice, the OpenAI Realtime API, and Node.js. The TwilioDevs team outlines the prerequisites, setup, and implementation steps for building a voice assistant that can hold real-time conversations with users.

To lay the groundwork, create a dedicated project directory and initialize it with npm. Next, install the dependencies, such as Fastify, WebSocket modules, dotenv, and related packages, which together provide the web server and the real-time API connections.

To keep sensitive information secure, configure your environment variables with the dotenv module. This lets you store and access your OpenAI API key and other confidential values without exposing them in your codebase.

With the project set up, move on to the application itself. Begin by importing the dependencies and configuring the Fastify web framework. Fastify suits this project because of its speed and low overhead, which matter for real-time applications.
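The demo uses Node.js with `dotenv`; as a language-neutral illustration, this Python sketch shows the same principle: secrets come from the environment rather than the source code, and the app fails fast at startup when one is missing. The variable names `OPENAI_API_KEY` and `PORT` and the fallback port 5050 are assumptions, not requirements of either service.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class AppConfig:
    openai_api_key: str
    port: int


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Read settings from environment variables (e.g. loaded from a .env file)."""
    api_key = env.get("OPENAI_API_KEY", "").strip()
    if not api_key:
        # Fail at startup rather than on the first phone call
        raise RuntimeError("OPENAI_API_KEY is not set")
    port = int(env.get("PORT", "5050"))  # 5050 is an arbitrary default
    return AppConfig(openai_api_key=api_key, port=port)
```

Passing a plain dictionary as `env` makes the loader easy to test without touching the real environment.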
To streamline configuration, define constants for the system message, voice settings, and server port so they are easy to customize and maintain.

Next, define the routes for your Fastify application. The root route confirms that the server is running, giving you a quick way to check the application's health. A second route handles incoming voice calls and connects them to Twilio Media Streams, which lets your voice assistant hold real-time voice conversations with callers.

For the assistant to process and respond to voice input in real time, it needs a WebSocket connection to the OpenAI Realtime API. This connection links your application to the model, carrying the caller's audio in and the model's responses out. To align the assistant's behavior with your requirements, configure session settings that define its personality, tone, and knowledge. Then handle the events and messages arriving on both the OpenAI and Twilio WebSocket connections so that data flows smoothly between the caller, Twilio, and OpenAI; managing these two channels carefully is key to a responsive, engaging experience.

With the application developed, start the Fastify server so the voice assistant is ready to handle incoming calls. To give public access to your local server, use ngrok, a tool that creates an HTTP tunnel to it.
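The event handling described above largely comes down to translating messages between the two WebSockets. The Python sketch below shows only that translation step, with the sockets themselves omitted. The message shapes (Twilio's `start` and `media` events with a base64 `payload`, and OpenAI's `input_audio_buffer.append` and `response.audio.delta` events) follow the Twilio Media Streams and beta Realtime API formats as used around the time of the demo; the Realtime API has evolved since, so treat the event names as assumptions to verify against current documentation. `CallBridge` is a hypothetical class name.

```python
import json
from typing import Optional


class CallBridge:
    """Translates messages between a Twilio Media Stream and an OpenAI
    Realtime session for a single call. Both sides are assumed to use
    8 kHz G.711 mu-law audio, so base64 payloads pass through unchanged."""

    def __init__(self) -> None:
        self.stream_sid: Optional[str] = None

    def from_twilio(self, raw: str) -> Optional[str]:
        """Handle a Twilio message; return a JSON event for OpenAI, or None."""
        msg = json.loads(raw)
        event = msg.get("event")
        if event == "start":
            # Remember which stream the model's audio must be sent back to
            self.stream_sid = msg["start"]["streamSid"]
        elif event == "media":
            # Forward the caller's audio chunk to the model's input buffer
            return json.dumps({
                "type": "input_audio_buffer.append",
                "audio": msg["media"]["payload"],
            })
        return None

    def from_openai(self, raw: str) -> Optional[str]:
        """Handle an OpenAI server event; return a JSON message for Twilio, or None."""
        msg = json.loads(raw)
        if msg.get("type") == "response.audio.delta" and self.stream_sid:
            # Wrap the model's audio chunk in a Twilio outbound media message
            return json.dumps({
                "event": "media",
                "streamSid": self.stream_sid,
                "media": {"payload": msg["delta"]},
            })
        return None
```

Keeping the translation separate from the socket code means each direction can be tested with plain strings, and the WebSocket handlers only need to forward whatever these methods return.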
Exposing your server through ngrok is crucial for testing, as it lets Twilio reach your application even while it runs on your local machine. To route incoming calls to your AI voice assistant, update your Twilio phone number's settings to point to the ngrok URL, which links Twilio directly to your application.

Before deploying to production, test the assistant thoroughly with the Twilio Dev Phone, which simulates phone calls so you can verify the interaction between user and assistant in a controlled environment. Pay close attention to the accuracy and promptness of the AI's responses to voice input.

To deepen your understanding, explore additional resources such as blog posts and code samples, which cover the details of the Twilio and OpenAI integration along with practical examples and best practices for refining your application.

By following this guide, you can build a personalized, intelligent AI voice assistant on Twilio and OpenAI technologies. The step-by-step approach keeps setup and testing straightforward and lets you bring real-time voice interaction to your applications. For more information on the OpenAI Realtime API, see the official website.

[3]

Build Your Own AI Voice Character App in Under 40 Minutes

AI voice character apps have emerged as a captivating, interactive way to engage users, and with today's tools and frameworks, building one is more accessible than ever. This guide by Greg Isenberg walks you through developing a fully functional AI voice character app in under 40 minutes.

AI voice character apps have become popular because they offer an immersive, personalized user experience. They serve a wide range of purposes, from entertainment and gaming to practical uses such as virtual assistants and educational tools. For instance, you could build a weatherman character that delivers weather updates in a distinctive voice, making the information more relatable and memorable.

To follow along, you need basic coding skills, such as cloning repositories and deploying apps, so you can set up your development environment quickly.

1. Setting Up the Development Environment:
   - Clone the repository from GitHub, which contains the starter files and configuration.
   - Install the required dependencies with npm or yarn.
2. Connecting to Daily's Backend:
   - Obtain an API key from Daily, which lets your app communicate with Daily's backend services.
   - Add the API key to your app so it can use Daily's voice synthesis and character behavior capabilities.
3. Configuring Personality and Voice Settings:
   - Customize the character's personality and voice settings to match your app's theme and intended user experience.
   - Use Pipecat to fine-tune the voice characteristics for natural, engaging output.
4. Implementing Function Calling:
   - Set up function calls that let the AI perform specific tasks based on user input or predefined triggers.
   - For example, configure the app to fetch weather data from a weather API so the character can give accurate, up-to-date information.

Function calling is what brings your AI voice character app to life: it lets the AI execute specific tasks in response to user interactions. Here's how to set it up:

- Setting Up Function Calls:
  - Define the functions the AI will call based on user input or specific triggers.
  - Implement the logic for each function, such as fetching weather data or performing calculations.
- Configuring a Weather Tool:
  - Integrate a reliable weather API into your app to provide real-time weather updates.
  - Handle API responses carefully so the data is accurate and the user experience stays smooth.

Deploying your app is straightforward with Vercel, a platform for deploying web applications:

- Using Vercel for Deployment:
  - Connect your GitHub repository to Vercel for automatic deployments.
  - Deploy your app with a single command, making it instantly accessible online.

Once deployed, your AI voice character app is ready for users. The guide demonstrates its capabilities with a FaceTime call to a virtual anime character (VTuber), highlighting the interactive, immersive potential of AI voice technology.
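The function-calling steps described above can be sketched in a framework-neutral way: describe a tool with a JSON Schema so the model knows when and how to call it, then dispatch the model's call to local code. The tool name `get_weather`, its parameters, and the stubbed `fetch_forecast` helper are hypothetical; a real app would call the weather API of your choice, and the exact wire format for tool calls depends on the framework used in the guide. The tool definition here is shaped like an OpenAI Realtime tool entry, which is an assumption worth checking.

```python
import json
from typing import Callable

# JSON Schema description the model sees (hypothetical tool)
WEATHER_TOOL = {
    "type": "function",
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. Paris"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}


def fetch_forecast(city: str, unit: str = "celsius") -> dict:
    """Stub standing in for a real weather API call (hypothetical)."""
    return {"city": city, "temperature": 21, "unit": unit, "conditions": "sunny"}


HANDLERS: dict[str, Callable[..., dict]] = {"get_weather": fetch_forecast}


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the function the model asked for and return a JSON string result.
    Errors are returned to the model as data rather than raised, so the
    character can apologize instead of the call crashing."""
    handler = HANDLERS.get(name)
    if handler is None:
        return json.dumps({"error": f"unknown tool {name!r}"})
    try:
        args = json.loads(arguments_json or "{}")
        return json.dumps(handler(**args))
    except (json.JSONDecodeError, TypeError) as exc:
        return json.dumps({"error": str(exc)})
```

The result string is what you would hand back to the model as the tool's output, after which it can phrase the forecast in the character's voice.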
By following this guide, you can build a captivating AI voice character app in under 40 minutes. As you go, experiment with different characters and functions to discover what AI voice apps can do. For further learning, consult the documentation and tools mentioned throughout the tutorial, and try the deployed demo app for hands-on experience. Staying connected with the creators and the wider AI development community will keep you up to date in this rapidly evolving field. With AI voice technology, you can build apps that not only entertain and inform but also change the way users interact with digital content.

A detailed exploration of creating AI voice agents using OpenAI's Real-Time API, covering integration with Twilio, WebSocket technology, and deployment strategies.

The Rise of AI Voice Agents

The development of AI voice agents has gained significant traction, offering new possibilities for real-time, voice-based interactions. OpenAI's Real-Time API has emerged as a powerful tool for developers to create sophisticated speech-to-speech applications [1]. This technology enables AI voice agents to process speech input, generate responses, and convert text to speech in real time, opening up applications in customer service, virtual assistance, and more [1][2].

Integrating with Twilio for Phone Functionality

A crucial step in building a functional AI voice agent is integrating it with a telephony service like Twilio. This integration allows the AI agent to handle phone calls effectively, managing both incoming and outgoing communications. Twilio provides the necessary infrastructure for phone number management and call facilitation, ensuring seamless interaction between users and the AI agent [1].
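For the outgoing direction, Twilio's REST API places a call when you POST to the account's `Calls.json` resource with `To`, `From`, and a `Url` that Twilio fetches for TwiML once the call connects. The sketch below only builds that request with Python's standard library and does not send it; the phone numbers, credentials, TwiML URL, and the helper name `build_outbound_call_request` are placeholders for illustration.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request


def build_outbound_call_request(account_sid: str, auth_token: str,
                                to_number: str, from_number: str,
                                twiml_url: str) -> Request:
    """Build (but do not send) a Twilio REST request that places a call.
    When the callee answers, Twilio fetches `twiml_url` for instructions,
    which can connect the call to the same media stream used for inbound calls."""
    endpoint = f"https://api.twilio.com/2010-04-01/Accounts/{account_sid}/Calls.json"
    # Form-encoded body; urlencode turns "+" in E.164 numbers into %2B
    body = urlencode({"To": to_number, "From": from_number, "Url": twiml_url}).encode()
    # Twilio authenticates REST calls with HTTP Basic auth (Account SID, Auth Token)
    token = base64.b64encode(f"{account_sid}:{auth_token}".encode()).decode()
    return Request(
        endpoint,
        data=body,
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )
```

To actually place the call you would pass the request to `urllib.request.urlopen`, or use Twilio's official helper library instead of hand-building requests.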

Leveraging WebSocket Technology

To achieve real-time communication between the AI voice agent and users, WebSocket technology plays a vital role. WebSocket maintains a persistent connection, enabling instant data exchange. This is essential for natural, flowing conversations and allows for features such as real-time transcription and immediate response generation [1][2].
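On the OpenAI side, the persistent connection is a WebSocket opened with the API key in an `Authorization` header. The sketch below assembles the URL and headers as used by the beta Realtime API around the time of these demos, including the `OpenAI-Beta: realtime=v1` header and a dated preview model name; both have since changed, so treat them as assumptions and check the current API reference. Any WebSocket client library can then open the connection with these values.

```python
from urllib.parse import urlencode

REALTIME_BASE_URL = "wss://api.openai.com/v1/realtime"
# Preview model name from late-2024 beta examples (assumption; check current docs)
DEFAULT_MODEL = "gpt-4o-realtime-preview-2024-10-01"


def realtime_connection_params(api_key: str,
                               model: str = DEFAULT_MODEL) -> tuple[str, dict[str, str]]:
    """Return (url, headers) for opening a Realtime API WebSocket."""
    if not api_key:
        raise ValueError("an OpenAI API key is required")
    url = f"{REALTIME_BASE_URL}?{urlencode({'model': model})}"
    headers = {
        "Authorization": f"Bearer {api_key}",
        # Opt-in header required by the beta version of the API
        "OpenAI-Beta": "realtime=v1",
    }
    return url, headers
```

Keeping these values in one function makes it a one-line change when the model name or API version moves on.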

Deployment and Version Control

For efficient code management and simplified deployment, developers are advised to utilize GitHub for version control and Replit for cloud-based deployment. This approach facilitates collaboration, tracks changes, and ensures continuous operation of the AI voice agent [1]. Alternatively, platforms like Vercel can be used for quick and easy deployment, especially for web-based applications [3].
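Whichever host you deploy to, a cheap health-check endpoint (like the root route in the Twilio demo) lets you and the platform confirm that the server is up. Below is a minimal standard-library sketch; the response text and the helper names `start_health_server` and `check_health` are arbitrary, and a real deployment would serve this route from the same web framework that handles calls.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen


class HealthHandler(BaseHTTPRequestHandler):
    """Answers GET / with 200 so uptime checks can confirm the server is running."""

    def do_GET(self) -> None:
        if self.path == "/":
            body = b"Voice agent server is running"
            self.send_response(200)
        else:
            body = b"Not found"
            self.send_response(404)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the console quiet during frequent checks


def start_health_server(port: int = 0) -> HTTPServer:
    """Start the server on a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), HealthHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def check_health(server: HTTPServer) -> int:
    """Return the HTTP status of GET / against a running server."""
    host, port = server.server_address[:2]
    with urlopen(f"http://{host}:{port}/", timeout=5) as resp:
        return resp.status
```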

Customization and Branding

Customization is key to creating a unique AI voice character that aligns with specific brand identities or use cases. This involves tailoring the system message, voice settings, and personality traits of the AI agent. For instance, developers can create specialized characters like a weatherman for delivering weather updates in an engaging manner [1][3].
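With the Realtime API, the system message and voice are applied by sending a `session.update` event once the WebSocket is open. The sketch below builds such an event for a hypothetical weatherman persona. The field names (`instructions`, `voice`, `modalities`, `turn_detection`, `input_audio_format`, `output_audio_format`, `temperature`) follow the beta API as used in the Twilio demo era; `alloy` is one of the built-in voices, and `g711_ulaw` matches the mu-law audio Twilio phone streams carry. The persona text is invented for illustration, and all field names should be checked against the current reference.

```python
import json

# Hypothetical persona text; adjust tone and facts to your brand
WEATHERMAN_PROMPT = (
    "You are Sunny, an upbeat TV weatherman. Keep answers short and "
    "friendly, and say so when you do not know the current forecast."
)


def build_session_update(instructions: str, voice: str = "alloy",
                         temperature: float = 0.8) -> str:
    """Return a JSON `session.update` event configuring persona and audio."""
    return json.dumps({
        "type": "session.update",
        "session": {
            "instructions": instructions,
            "voice": voice,
            "modalities": ["text", "audio"],
            # Let the server detect when the caller has stopped talking
            "turn_detection": {"type": "server_vad"},
            # Phone audio from Twilio is 8 kHz G.711 mu-law
            "input_audio_format": "g711_ulaw",
            "output_audio_format": "g711_ulaw",
            "temperature": temperature,
        },
    })
```

Swapping the persona is then just a matter of passing different `instructions` and `voice` values, which keeps branding decisions out of the call-handling code.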

Session Management and Scalability

To handle multiple concurrent users, implementing robust session management techniques is crucial. This ensures that each user interaction is isolated and managed efficiently, allowing the AI voice agent to scale and handle multiple calls simultaneously [1].
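To illustrate how isolation and concurrency fit together, here is an asyncio sketch in which each call runs in its own coroutine with its own local state, and a semaphore caps how many run at once. The handler only simulates a conversation; `handle_call`, `run_calls`, and the default cap of 10 are hypothetical, and real handlers would await WebSocket traffic instead of `asyncio.sleep(0)`.

```python
import asyncio


async def handle_call(call_id: str, utterances: list[str]) -> list[str]:
    """Simulated per-call handler: all state lives in local variables,
    so concurrent calls cannot see each other's conversation."""
    history: list[str] = []
    for text in utterances:
        await asyncio.sleep(0)  # stand-in for awaiting audio or model I/O
        history.append(f"{call_id}: {text}")
    return history


async def run_calls(calls: dict[str, list[str]],
                    max_concurrent: int = 10) -> dict[str, list[str]]:
    """Run many call handlers concurrently, capped by a semaphore."""
    limit = asyncio.Semaphore(max_concurrent)

    async def guarded(call_id: str, utterances: list[str]) -> list[str]:
        async with limit:
            return await handle_call(call_id, utterances)

    # gather() returns results in the same order as the coroutines passed in
    results = await asyncio.gather(*(guarded(cid, u) for cid, u in calls.items()))
    return dict(zip(calls.keys(), results))
```

For example, `asyncio.run(run_calls({"a": ["hi"], "b": ["hello"]}))` runs both simulated calls concurrently while keeping their histories separate.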

Enhancing Data Processing with Make.com

Integrating the AI voice agent with platforms like Make.com can significantly improve its data processing capabilities. This allows for automated data handling and streamlined workflows, enabling the AI agent to access and process information from various sources efficiently [1].
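A common way to hand data to Make.com is a scenario that starts from a custom webhook, which receives an HTTP POST with a JSON body. The sketch below packages a finished call's details into such a request without sending it; the webhook URL, the payload fields, and the helper name `build_call_summary_request` are hypothetical placeholders, and your scenario defines what data it actually expects.

```python
import json
from urllib.request import Request

# Placeholder: copy the real URL from your Make.com webhook module
MAKE_WEBHOOK_URL = "https://hook.example.make.com/your-webhook-id"


def build_call_summary_request(stream_sid: str, caller: str, summary: str,
                               url: str = MAKE_WEBHOOK_URL) -> Request:
    """Build (but do not send) a POST carrying one call's data as JSON."""
    payload = {
        "stream_sid": stream_sid,
        "caller": caller,
        "summary": summary,
    }
    return Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(req, timeout=10)` after each call would let the scenario log the conversation, update a CRM, or trigger follow-up actions.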

Development Process and Tools

The development of an AI voice agent typically involves several key steps:

- Setting up the development environment with necessary dependencies [2][3]
- Connecting to backend services like Daily for voice synthesis [3]
- Configuring personality and voice settings [3]
- Implementing function calling for specific tasks [2][3]
- Integrating with APIs for additional functionalities, such as weather data retrieval [3]

Testing and Demonstration

Before deployment, thorough testing is essential. Tools like the Twilio Dev Phone can be used to simulate phone calls and verify the AI agent's functionality [2]. Demonstrations, such as making a FaceTime call with a virtual anime character, can showcase the interactive potential of the technology [3].

Future Enhancements

As the field of AI voice technology continues to evolve, future enhancements may include more advanced function calls, improved knowledge base integration, and even more natural-sounding voice synthesis. These advancements will further expand the capabilities and applications of AI voice agents [1][2][3].

By following these guidelines and leveraging the latest tools and APIs, developers can create sophisticated AI voice agents capable of engaging users in natural, real-time conversations across various applications and industries.

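References

[1] How to Build an AI Voice Agent using OpenAI Real-Time API (guide by Bart Slodyczka)

[2] OpenAI Realtime API Demo: Building Your Own AI Voice Assistant (demonstration by the TwilioDevs team)

[3] Build Your Own AI Voice Character App in Under 40 Minutes (guide by Greg Isenberg)

Summarized by Navi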
