ChatGPT's 'Live Camera' Feature: Advanced Voice Mode Set to Gain Visual Capabilities

4 Sources

[1]

Live Camera is coming soon to ChatGPT -- here's what we know

It's hard to believe it's been six months since the initial demo of OpenAI's visual AI that we were told could identify just about anything and even solve math equations, but we may finally be getting closer to some sort of rollout. As spotted in the code of the latest ChatGPT beta, OpenAI's app now has references to 'Live Camera' video features that would essentially add 'eyes' to its very impressive (and conversational) Advanced Voice Mode. First seen by Android Authority, code in version 1.2024.317 reveals "Live camera functionality", "Real-time processing", "Voice mode integration" and "Visual recognition capabilities". This would allow you to open the camera while talking to Advanced Voice so it can give live feedback on what it can see in front of you. It is similar to Google's anticipated Project Astra with real-time visual analysis. The strings found in the beta version of the ChatGPT Android app suggest the Live Camera feature could arrive as part of a ChatGPT beta in the near future. In the demos from May during OpenAI's Spring Update, the video features could recognize a dog, its actions with a ball, and more, while remembering key pieces of information like the dog's name. Another, later demo showed someone using ChatGPT Live Camera while touring London to have it point out details of different locations and landmarks. While Advanced Voice has rolled out to everyone, including on the web, things have been quiet on Live Camera in the months since the announcement. Android Authority also says it "spotted warnings for users that advise them not to use the Live camera feature for live navigation or other decisions impacting their health or safety." Here's hoping for news soon, as it was definitely one of the most impressive reveals we've seen from OpenAI so far.

[2]

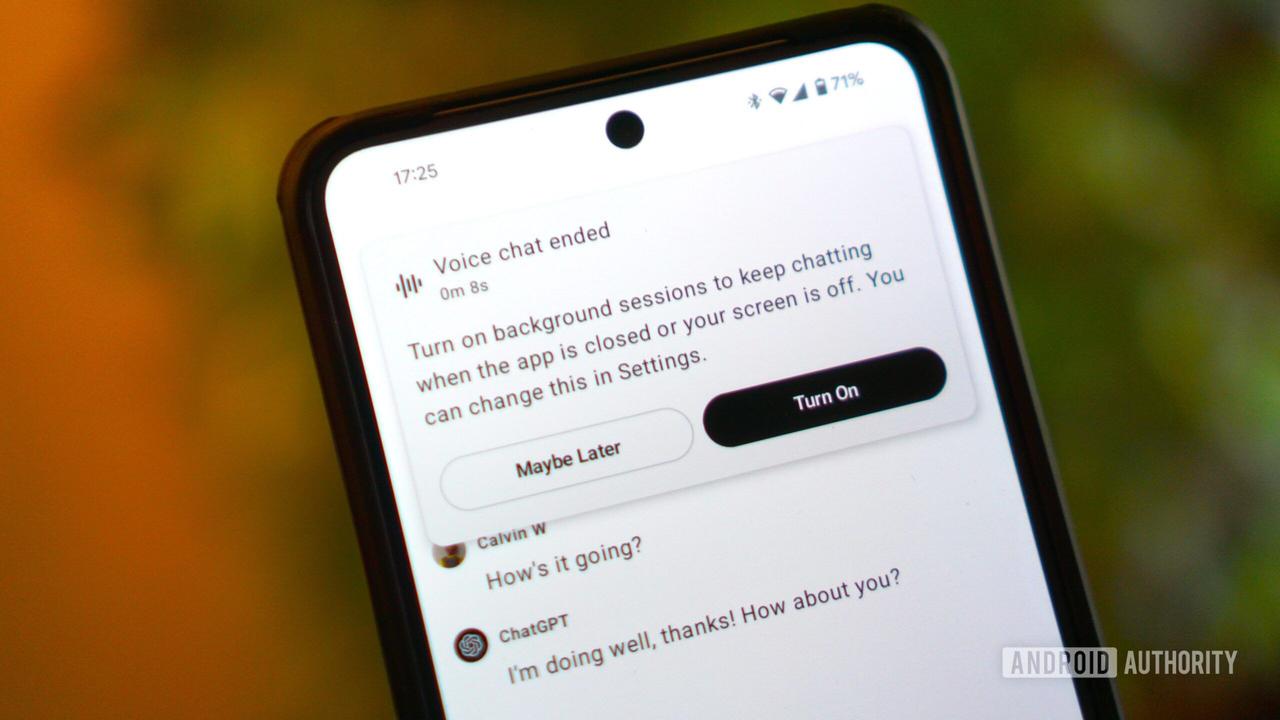

ChatGPT's Advanced Voice Mode could get a new 'Live Camera' feature

ChatGPT might get live vision soon. Credit: Koshiro K / Shutterstock

ChatGPT's highly anticipated vision capabilities might be coming soon, according to some eagle-eyed sleuths. Android Authority spotted some lines of code in the Advanced Voice Mode part of the latest ChatGPT v1.2024.317 beta build, which point to something called "Live camera." The code appears to be a warning to users to not use Live camera "for live navigation or decisions that may impact your health or safety." Another line in the code seems to give instructions for vision capabilities saying, "Tap the camera icon to let ChatGPT view and chat about your surroundings." ChatGPT's ability to visually process information was a major feature debuted at the OpenAI event last May that launched GPT-4o. Demos from the event showed how GPT-4o could use a mobile or desktop camera to identify subjects and remember details about the visuals. One particular demo featured GPT-4o identifying a dog playing with a tennis ball and remembering that its name is "Bowser." Since the OpenAI event and subsequent early access to a few lucky alpha testers, not much has been said about GPT-4o with vision. Meanwhile, OpenAI shipped Advanced Voice Mode to ChatGPT Plus and Team users in September. If ChatGPT's vision mode is imminent as the code suggests, users will soon be able to test out both components of the new GPT-4o features teased last spring. OpenAI has been busy lately, despite reports of diminishing returns with future models. Last month, it launched ChatGPT Search, which connects the AI model to the web, providing real-time information. It is also rumored to be working on some kind of agent that's capable of multi-step tasks on the user's behalf, like writing code and browsing the web, possibly slated for a January release.

[3]

ChatGPT's Live Video Feature Spotted, Might Be Released Soon

ChatGPT might soon gain the ability to answer queries after looking through your smartphone's camera. As per a report, evidence for the Live Video feature, which is part of OpenAI's Advanced Voice Mode, was spotted in the latest ChatGPT for Android beta app. This capability was first demonstrated in May during the AI firm's Spring Updates event. It allows the chatbot to access the smartphone's camera and answer queries about the user's surroundings in real-time. While the emotive voice capability was released a couple of months ago, the company has so far not announced a possible release date for the Live Video feature. An Android Authority report detailed the evidence of the Live Video feature, which was found during an Android package kit (APK) teardown process of the app. Several strings of code relating to the capability were seen in the ChatGPT for Android beta version 1.2024.317. Notably, the Live Video feature is part of ChatGPT's Advanced Voice Mode, and it lets the AI chatbot process video data in real-time to answer queries and interact with the user in real-time. With this, ChatGPT can look into a user's fridge and scan ingredients and suggest a recipe. It can also analyse the user's expressions and try to gauge their mood. This was coupled with the emotive voice capability which lets the AI speak in a more natural and expressive manner. As per the report, multiple strings of code relating to the feature were seen. One such string states, "Tap the camera icon to let ChatGPT view and chat about your surroundings," which is the same description OpenAI gave for the feature during the demo. Other strings reportedly include phrases such as "Live camera" and "Beta", which highlight that the feature can work in real-time and that the under-development feature will likely be released to beta users first. 
Another string of code also includes an advisory for users to not use the Live Video feature for live navigation or decisions that can impact users' health or safety. While the existence of these strings does not guarantee the release of the feature, after a delay of eight months, this is the first conclusive evidence that the company is working on it. Earlier, OpenAI claimed that the feature was being delayed in order to protect users. Notably, Google DeepMind also demonstrated a similar AI vision feature at the Google I/O event in May. Part of Project Astra, the feature lets Gemini see the user's surroundings using the device's camera. In the demo, Google's AI tool could correctly identify objects, deduce current weather conditions, and even remember objects it saw earlier in the live video session. So far, the Mountain View-based tech giant has also not given a timeline on when this feature might be introduced.

[4]

ChatGPT's Advanced Voice Mode could finally get 'eyes' soon with sci-fi video calling feature

Beta code references 'Live camera' hinting an impending launch ChatGPT's long-anticipated 'eyes' could be coming to Advanced Voice Mode soon allowing you to have video calls with AI. OpenAI originally unveiled the feature in May showcasing how Advanced Voice Mode could see what you show it and speak back to you about the subject. In the demo, Advanced Voice Mode was shown a dog and was able to determine the subject and everything else related to it, including the animal's name. Since that demo and an Alpha release, OpenAI hasn't mentioned this feature and we've heard nothing about its development. Until now. Code in the latest ChatGPT v1.2024.317 beta build, originally spotted by Android Authority, hints at ChatGPT's eyes coming sooner rather than later. OpenAI hasn't officially confirmed the name for the sci-fi video call feature yet, but according to the code strings it's going to be called 'Live Camera'. For users who have been waiting for further information on ChatGPT's Visual Intelligence and Google Lens competitor, this is a good sign that Live camera could be entering into beta soon followed by a wider official release. A video call option sounds like the natural evolution of ChatGPT's Advanced Voice Mode, allowing you to effectively video call AI. While that might sound incredibly dystopian, it could end up being a fantastic addition to the way we interact with AI models. ChatGPT's Advanced Voice Mode and other AI voice assistants, like Gemini Live, have proven that there is far more to interacting with AI than a chatbot. Offering as many ways to interact with AI as possible allows users to decide how it best fits their needs and opens up new ways of implementing the software into daily life. I expect this 'Live camera' functionality to be a game-changer for accessibility needs, especially for those with visual impairment. Hopefully we hear more about 'Live camera' soon, but it's good to know, at least, that OpenAI hasn't forgotten the feature's existence.

OpenAI's ChatGPT appears close to introducing a 'Live Camera' feature that would add visual capabilities to its Advanced Voice Mode. The feature, spotted in beta code, would let the assistant process and discuss what a device's camera sees in real time.

ChatGPT's 'Live Camera' Feature Nears Launch

OpenAI's ChatGPT is poised to introduce a groundbreaking 'Live Camera' feature, potentially revolutionizing how users interact with AI. This development, first teased during OpenAI's Spring Update in May, is now closer to reality as evidence of its implementation has been discovered in recent beta code [1][2].

Beta Code Reveals Imminent Launch

Android Authority's analysis of ChatGPT's latest beta version (v1.2024.317) uncovered several code strings referencing "Live camera functionality," "Real-time processing," and "Visual recognition capabilities" [1]. These findings suggest that the feature could be released as part of a ChatGPT beta in the near future, integrating seamlessly with the existing Advanced Voice Mode [3].

Capabilities and Potential Applications

The 'Live Camera' feature is expected to enable ChatGPT to process and analyze visual information in real-time. According to demonstrations from OpenAI's Spring Update, the AI could [1][4]:

- Recognize objects and actions (e.g., a dog playing with a ball)

- Remember key details (such as the dog's name)

- Provide information about landmarks and locations during tours

- Analyze ingredients in a refrigerator and suggest recipes

- Potentially gauge user emotions through facial expression analysis

Integration with Advanced Voice Mode

This visual capability is set to complement ChatGPT's Advanced Voice Mode, which shipped to ChatGPT Plus and Team users in September and has since rolled out to everyone, including on the web. The combination of voice and visual processing could create a more immersive and interactive AI experience, akin to having a video call with an AI assistant [2][4].

Safety Considerations

The beta code also includes warnings advising users against using the Live Camera feature "for live navigation or decisions that may impact your health or safety" [1][3]. This precautionary measure highlights OpenAI's focus on responsible AI deployment.

Industry Context and Competition

OpenAI's move comes amidst growing competition in the AI space. Google DeepMind demonstrated a similar AI vision feature, part of Project Astra, at the Google I/O event in May. This feature would allow Gemini to interpret visual information from a device's camera [3].

Potential Impact and Future Developments

The introduction of 'Live Camera' functionality could be a game-changer for accessibility, particularly for individuals with visual impairments [4]. It also opens up new possibilities for AI integration into daily life, potentially transforming how users interact with their surroundings through AI assistance.

As OpenAI continues to innovate, there are rumors of additional developments, including an AI agent capable of performing multi-step tasks such as writing code and browsing the web [2]. These advancements underscore the rapid evolution of AI technology and its growing role in enhancing human-computer interaction.

Summarized by Navi
