Children Outpace AI in Language Learning: New Framework Reveals Key Differences

3 Sources

[1]

Brains over bots: Why toddlers still beat AI at learning language

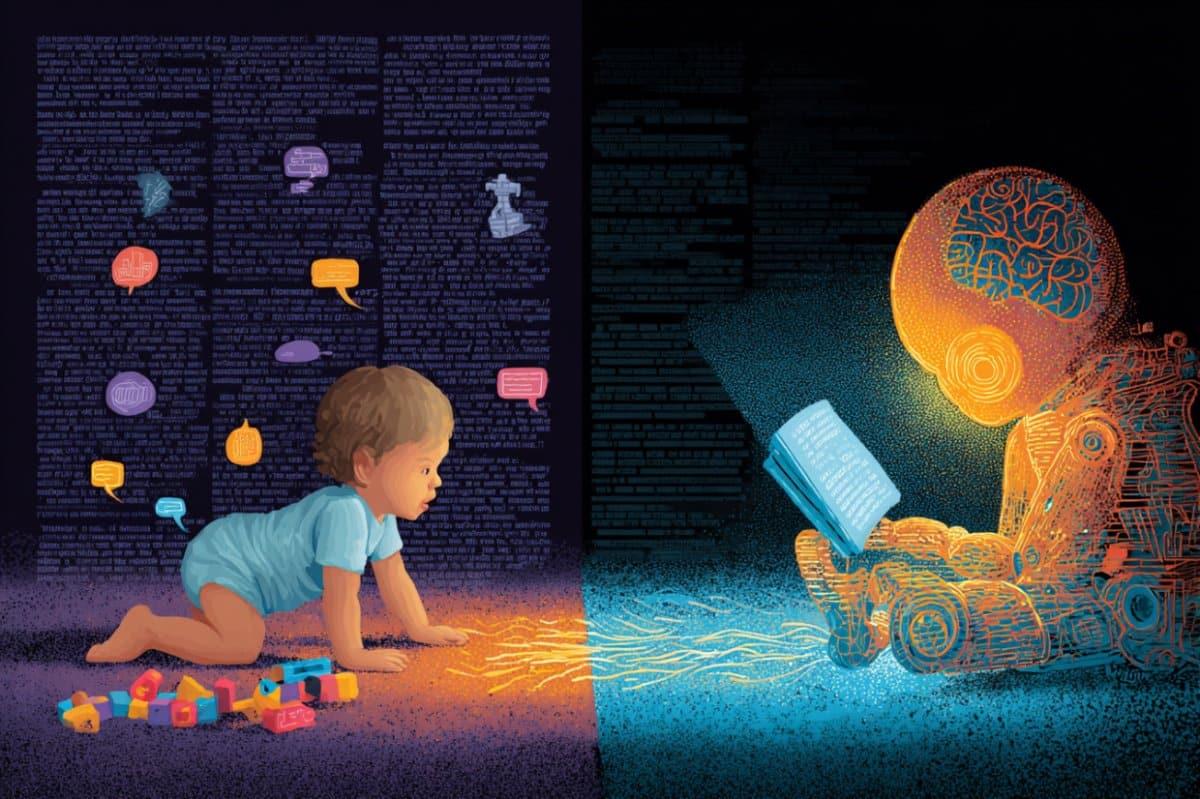

Even the smartest machines can't match young minds at language learning. Researchers share new findings on how children stay ahead of AI -- and why it matters.

If a human learned a language at the same rate as ChatGPT, it would take them 92,000 years. While machines can crunch massive datasets at lightning speed, when it comes to acquiring natural language, children leave artificial intelligence in the dust. A paper newly published in Trends in Cognitive Sciences by Professor Caroline Rowland of the Max Planck Institute for Psycholinguistics, in collaboration with colleagues at the ESRC LuCiD Center in the UK, presents a novel framework to explain how children achieve this remarkable feat.

An explosion of new technology

Scientists can now observe, in unprecedented detail, how children interact with their caregivers and surroundings, fueled by recent advances in research tools such as head-mounted eye-tracking and AI-powered speech recognition. But despite the rapid growth in data collection methods, theoretical models explaining how this information translates into fluent language have lagged behind. The new framework addresses this gap. Synthesizing wide-ranging evidence from computational science, linguistics, neuroscience and psychology, the research team proposes that the key to understanding how children learn language so much faster than AI lies not in how much information they receive -- but in how they learn from it.

Children vs. ChatGPT: What's the difference?

Unlike machines that learn primarily, and passively, from written text, children acquire language through an active, ever-changing developmental process driven by their growing social, cognitive, and motor skills. Children use all their senses -- seeing, hearing, smelling, listening and touching -- to make sense of the world and build their language skills. This world provides them with rich, coordinated signals from multiple senses, giving them diverse and synchronized cues to help them figure out how language works. And children do not just sit back and wait for language to come to them -- they actively explore their surroundings, continuously creating new opportunities to learn.

"AI systems process data ... but children really live it," Rowland notes. "Their learning is embodied, interactive, and deeply embedded in social and sensory contexts. They seek out experiences and dynamically adapt their learning in response -- exploring objects with their hands and mouths, crawling towards new and exciting toys, or pointing at objects they find interesting. That's what enables them to master language so quickly."

Implications beyond early childhood

These insights don't just reshape our understanding of child development -- they hold far-reaching implications for research in artificial intelligence, adult language processing, and even the evolution of human language itself. "AI researchers could learn a lot from babies," says Rowland. "If we want machines to learn language as well as humans, perhaps we need to rethink how we design them -- from the ground up."

[2]

Why Children Learn Language Faster Than AI - Neuroscience News

Summary: Despite AI's massive processing power, children still far outperform machines in learning language, and a new framework helps explain why. Unlike AI systems that passively absorb text, children learn through multisensory exploration, social interaction, and self-driven curiosity. Their language learning is active, embodied, and deeply tied to motor, cognitive, and emotional development. These insights not only reshape how we understand early childhood but may also guide the future design of more human-like AI systems.

Even the smartest machines can't match young minds at language learning. Researchers share new findings on how children stay ahead of AI - and why it matters.

If a human learned language at the same rate as ChatGPT, it would take them 92,000 years. While machines can crunch massive datasets at lightning speed, when it comes to acquiring natural language, children leave artificial intelligence in the dust. A paper newly published in Trends in Cognitive Sciences by Professor Caroline Rowland of the Max Planck Institute for Psycholinguistics, in collaboration with colleagues at the ESRC LuCiD Centre in the UK, presents a novel framework to explain how children achieve this remarkable feat.

An explosion of new technology

Scientists can now observe, in unprecedented detail, how children interact with their caregivers and surroundings, fueled by recent advances in research tools such as head-mounted eye-tracking and AI-powered speech recognition. But despite the rapid growth in data collection methods, theoretical models explaining how this information translates into fluent language have lagged behind. The new framework addresses this gap. Synthesizing wide-ranging evidence from computational science, linguistics, neuroscience and psychology, the research team proposes that the key to understanding how children learn language so much faster than AI lies not in how much information they receive - but in how they learn from it.

Children vs. ChatGPT: What's the difference?

Unlike machines that learn primarily, and passively, from written text, children acquire language through an active, ever-changing developmental process driven by their growing social, cognitive, and motor skills. Children use all their senses - seeing, hearing, smelling, listening and touching - to make sense of the world and build their language skills. This world provides them with rich, coordinated signals from multiple senses, giving them diverse and synchronized cues to help them figure out how language works. And children do not just sit back and wait for language to come to them - they actively explore their surroundings, continuously creating new opportunities to learn.

"AI systems process data ... but children really live it," Rowland notes. "Their learning is embodied, interactive, and deeply embedded in social and sensory contexts. They seek out experiences and dynamically adapt their learning in response - exploring objects with their hands and mouths, crawling towards new and exciting toys, or pointing at objects they find interesting. That's what enables them to master language so quickly."

Implications beyond early childhood

These insights don't just reshape our understanding of child development - they hold far-reaching implications for research in artificial intelligence, adult language processing, and even the evolution of human language itself. "AI researchers could learn a lot from babies," says Rowland. "If we want machines to learn language as well as humans, perhaps we need to rethink how we design them - from the ground up."

Brains over Bots: Why Toddlers Still Beat AI at Learning Language

Explaining how children build a language system is a central goal of research in language acquisition, with broad implications for language evolution, adult language processing, and artificial intelligence (AI). Here, we propose a constructivist framework for future theory-building in language acquisition. We describe four components of constructivism, drawing on wide-ranging evidence to argue that theories based on these components will be well suited to explaining developmental change. We show how adopting a constructivist framework both provides plausible answers to old questions (e.g., how children build linguistic representations from their input) and generates new questions (e.g., how children adapt to the affordances provided by different cultures and languages).

[3]

Children are much better at learning language than AI - Earth.com

In raw processing power, a large language model will outclass toddlers. Yet when a team of researchers did the math, they found a startling gap: if humans learned language at the same rate as ChatGPT, it would take them 92,000 years.

The calculation highlights a long-standing mystery. Children master speech and grammar after only a few years of everyday experience, whereas state-of-the-art AI, trained on billions of words, still trips over basic nuance.

A new study conducted at the Max Planck Institute for Psycholinguistics offers the most comprehensive explanation to date. Led by developmental psychologist Caroline Rowland and colleagues at the UK-based ESRC LuCiD Center, the research proposes that the decisive advantage is not the amount of data children receive, but the way they interact with it. Their active, embodied, and socially embedded learning engine may also hold lessons for the next generation of artificial intelligence.

The past decade has produced a multitude of tools - head-mounted eye trackers, wearable microphones, machine-vision scene analyzers - that let scientists capture moment-by-moment snapshots of childhood. A typical modern dataset might include everything a two-year-old sees, hears, grasps, and babbles during an ordinary afternoon. What has been missing, Rowland and her co-authors argue, is an integrated theory of how these multisensory streams translate into grammar and vocabulary.

The framework they propose rests on several mutually reinforcing principles.

First is multisensory integration. Unlike text-bound chatbots, babies process language against a rich backdrop of sights, sounds, tastes, smells, and textures. A spoonful of mashed banana, for instance, comes with a color, a smell, a shape, a temperature, and a spoken label from a caregiver. Over time, these correlated cues help infants crack linguistic codes that would baffle a purely textual learner.

Second is embodiment. Children's bodies are constantly in motion - rolling, crawling, pointing, mouthing objects. Each action changes the incoming data stream, generating fresh correlations between words and physical experiences, like associating the word cup with the feel of a plastic rim. This kind of "closed-loop" activity allows children to test hypotheses on the fly, using movement as part of the learning process.

Social immersion plays a third critical role. Caregivers instinctively tailor their speech in real time, repeating words, exaggerating intonation, or shifting topics based on the child's attention. Whereas AI systems read from static datasets, children receive a dynamic, personalized curriculum designed by human minds evolved for teaching.

A fourth principle is incremental plasticity. The young brain reorganizes rapidly, strengthening and pruning neural connections in response to experience. This adaptability allows children to shift their learning priorities as they grow - starting with mastering sounds, then words, then grammar - without needing to discard earlier knowledge.

Finally, there is motivation and curiosity. Perhaps most crucially, toddlers want to decode the world around them. They actively seek novelty, request clarification, and show visible delight when they succeed, sustaining hours of daily language practice without explicit instruction.

"AI systems process data... but children really live it," Rowland explained. "Their learning is embodied, interactive, and deeply embedded in social and sensory contexts."

"They seek out experiences and dynamically adapt their learning in response - exploring objects with their hands and mouths, crawling toward new and exciting toys, or pointing at objects they find interesting. That's what enables them to master language so quickly."

The authors believe these insights could reshape machine-learning strategy. Current large language models ingest terabytes of written text, a modality children barely encounter until school age. To narrow the performance gap, engineers might endow robots with multisensory inputs, motor exploration, and real-time social feedback loops - essentially giving silicon learners a simulated childhood.

"AI researchers could learn a lot from babies," Rowland said. "If we want machines to learn language as well as humans, perhaps we need to rethink how we design them - from the ground up."

Beyond technology, the framework could illuminate adult second-language acquisition, evolutionary linguistics, and educational practice. For example, it suggests that immersive, interactive classrooms may outperform rote drills, and that endangered languages could be revitalized by recreating full sensory contexts for young speakers.

Rowland's group is already testing the model with longitudinal recordings from multilingual families. Meanwhile, cognitive neuroscientists plan brain-imaging studies to chart how sensory-motor loops sculpt language circuits. On the AI front, several labs are experimenting with embodied agents that crawl through virtual nurseries, manipulating objects while linking words to experience.

Whether those synthetic toddlers can close a 92,000-year deficit remains to be seen. What is clear is that humanity's smallest linguists still wield secrets that even the largest neural networks have yet to crack.

A new study highlights how children's multisensory, active learning approach gives them a significant edge over AI in language acquisition, potentially reshaping AI development and our understanding of human cognition.

The Remarkable Language Learning Abilities of Children

A groundbreaking study published in Trends in Cognitive Sciences has shed light on why children are significantly more adept at learning language than even the most advanced artificial intelligence (AI) systems. Led by Professor Caroline Rowland of the Max Planck Institute for Psycholinguistics, in collaboration with colleagues at the ESRC LuCiD Center in the UK, the research presents a novel framework that explains children's remarkable language acquisition abilities [1].

The study reveals a startling statistic: if humans were to learn language at the same rate as ChatGPT, it would take them 92,000 years to achieve fluency. This vast difference highlights the unique capabilities of the human brain in language acquisition, especially during early childhood [2].

The Key to Children's Language Learning Success

The research team proposes that the critical factor in children's rapid language acquisition is not the quantity of information they receive, but rather how they process and learn from it. Unlike AI systems that primarily learn from written text, children engage in an active, multisensory learning process deeply embedded in their social and physical environments [3].

Professor Rowland explains, "AI systems process data ... but children really live it. Their learning is embodied, interactive, and deeply embedded in social and sensory contexts" [1].

The Multifaceted Approach of Child Language Learning

The framework identifies several key components that contribute to children's superior language learning abilities:

- Multisensory Integration: Children use all their senses to understand language, creating rich, coordinated signals that help them decipher linguistic patterns [2].
- Embodied Learning: Through physical exploration and interaction with their environment, children generate new opportunities for language learning [3].
- Social Immersion: Caregivers instinctively adapt their speech to the child's needs, providing a personalized, dynamic learning experience [3].
- Incremental Plasticity: The young brain's ability to rapidly reorganize allows for efficient adaptation to different stages of language learning [3].
- Motivation and Curiosity: Children's innate desire to understand their world drives sustained, self-motivated language practice [3].

Implications for AI Development and Beyond

This research has far-reaching implications not only for our understanding of child development but also for the future of AI. Rowland suggests that AI researchers could learn valuable lessons from studying how babies acquire language [1].

The insights gained from this study could potentially reshape machine learning strategies. Some researchers are already experimenting with embodied AI agents that explore virtual environments, attempting to bridge the gap between artificial and human language learning [3].

Beyond AI, the framework could inform approaches to adult second-language acquisition, evolutionary linguistics, and educational practices. It suggests that immersive, interactive learning environments may be more effective than traditional rote learning methods [3].

Summarized by Navi
