Chinese AI Startup Moonshot Releases Open-Source Model That Claims to Outperform GPT-5

7 Sources

[1]

A new Chinese AI model claims to outperform GPT-5 and Sonnet 4.5 - and it's free

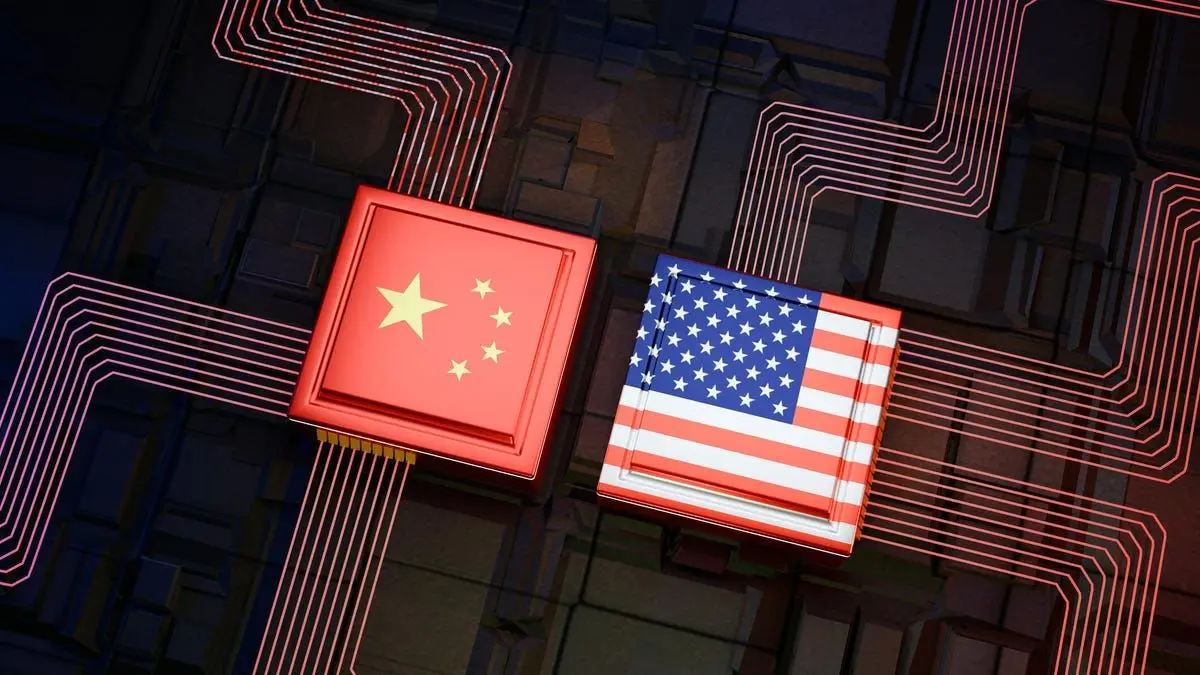

The global AI arms race remains in constant flux, this time thanks to the arrival of a new model from the up-and-coming Chinese AI lab Moonshot. On Thursday, the Beijing-based company released Kimi K2 Thinking, a reasoning model that it says outperforms OpenAI's GPT-5 and Anthropic's Claude Sonnet 4.5 on key benchmarks, including Humanity's Last Exam, BrowseComp (which tests AI agents' ability to extract hard-to-find online information via web browsers), and Seal-0 (which tests reasoning over real-world information retrieval). Kimi K2 Thinking also showed coding abilities comparable to those of GPT-5 and Sonnet 4.5, though not notably more impressive. "By reasoning while actively using a diverse set of tools, K2 Thinking is capable of planning, reasoning, executing, and adapting across hundreds of steps to tackle some of the most challenging academic and analytical problems," Moonshot wrote on its website. Kimi K2 Thinking is a Mixture-of-Experts (MoE) model blending long-horizon planning, adaptive reasoning, and the use of online tools (such as browsers), "continually generating and refining hypotheses, verifying evidence, reasoning, and constructing coherent answers," the company wrote. "This interleaved reasoning allows it to decompose ambiguous, open-ended problems into clear, actionable subtasks." The model has around 1 trillion parameters and can be accessed on Hugging Face. Crucially, Kimi K2 Thinking -- which builds upon the Kimi K2 model released in July -- is open source, meaning developers can access and build upon the underlying code and weights for free. Read that again: A model that (according to Moonshot) has more advanced agentic capabilities than frontier models from OpenAI and Anthropic is free. 
Moonshot also said it cost less than $5 million to train -- $4.6 million to be exact, according to CNBC -- a vanishingly small amount compared to the billions that have been spent by the most prominent AI labs in the U.S. If that figure is externally verified, the implications could be huge; or the excitement could flame out, as the DeepSeek-induced panic of January 2025 did. First and foremost, there's the commercial side of things. Since the arrival of ChatGPT just under three years ago, business owners have been bombarded with pressure to onboard new AI tools, especially agents, which tech developers have marketed as productivity boosters and virtual assistants. That often meant paying for business-tier offerings, like OpenAI's ChatGPT for Enterprise. (Disclosure: Ziff Davis, ZDNET's parent company, filed an April 2025 lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.) Until now, the general sales pitch across Silicon Valley has been that it's worthwhile to pay for proprietary AI tools from a leading developer, since -- to paraphrase what's become a popular marketing trope -- even if AI doesn't put you out of business, another company using AI almost certainly will (never mind the fact that the vast majority of businesses using AI haven't seen any measurable ROI). Similar to DeepSeek's R1, the arrival of Moonshot's new model throws the entire logic of that sales pitch into question. Suddenly, businesses have at their disposal a free AI model that's supposedly better at performing critical agentic tasks than the best proprietary models available. Of course, it's highly unlikely that legions of businesses are going to throw the AI baby out with the bathwater and immediately cancel their OpenAI or Anthropic enterprise subscriptions just because the latest hotshot Chinese firm claims to have built a more advanced model. 
But it'll certainly turn some heads and get people wondering again whether the proprietary, subscription-based model of AI they've been sold is really the only way forward. In fact, it's already happening: Some US companies like Airbnb now prefer AI tools from Chinese companies over those from their American counterparts, citing both their better performance across some critical tasks and their lower cost. Of course, some experts have expressed concern that open-source models, especially those of foreign origin, pose an added security risk; several US agencies and other countries swiftly banned DeepSeek. If the January arrival of DeepSeek's R1 was a "Sputnik moment," then Thursday's debut of Moonshot's Kimi K2 Thinking model is the Chinese AI industry's moon landing (pun intended). American policymakers and tech pundits have commonly framed the AI race as an ideological one, with "American AI" on one side supposedly encapsulating the ideals of Western liberal democracy and "Chinese AI" on the other, representing centralized control over the flow and censorship of information. While it's true that some AI models built by Chinese labs exhibit biases and censor information in ways that seem to align with the official policies of the Chinese Communist Party, it's important to bear in mind that all AI systems -- regardless of where their parent companies are based -- come with some kind of bias; the technology you use will to some degree reflect the worldview of the people who built it and the bias embedded in the data used to train it. In any event, ideological concerns may take a backseat to financial ones if the new Kimi model's performance holds up to the impressive metrics on Moonshot's website. No investor can overlook that paltry $4.6 million price tag. 
Here in the US, while businesses and individual consumers have been sold the idea that it's worth paying for a top-tier proprietary model, investors have been sold the story that in order to build those tools, companies need to spend enormous sums of money, well into the tens of billions of dollars, even though many of those companies aren't yet profitable. So far, it's been working. Leading US AI labs like OpenAI and Anthropic are now valued in the hundreds of billions, and their spending on the infrastructure and compute required to build increasingly advanced models has been ramping up by the day. But fears have been growing around the prospect of an AI bubble: the possibility that a large segment of our global economy has become inextricably tied up with a commodity that, in the end, might not be able to generate a profit, and which could send the whole house of cards toppling down, as the widespread use of securitized derivatives did to the housing market in 2008. Only time will tell if we're actually living inside an AI bubble. But one thing is certain: The sudden arrival of a free tool that reportedly outperforms leading models from OpenAI and Anthropic is going to make many tech investors' eyes water -- and wonder if they ought to be backing a different horse.

[2]

Alibaba-backed Moonshot releases its second AI update in four months as China's AI race heats up

Alibaba-backed Chinese startup Moonshot has launched Kimi, one of the more popular generative AI chatbots. Chinese startup Moonshot on Thursday released its latest generative artificial intelligence model, which it claims beats OpenAI's ChatGPT in "agentic" capabilities -- or understanding what a user wants without explicit step-by-step instructions. Despite U.S. restrictions on Chinese businesses' access to high-end chips, companies such as DeepSeek have released AI models that are open source, with user fees a fraction of ChatGPT's. DeepSeek also claimed it spent $5.6 million on its V3 model, in contrast to the billions spent by OpenAI. The Kimi K2 Thinking model cost $4.6 million to train, according to a source familiar with the matter. According to Moonshot, it can autonomously make 200 to 300 sequential tool calls to complete tasks, reducing the need for human intervention. CNBC was unable to independently verify the DeepSeek or Kimi figures. DeepSeek last month released a new AI model that claims to improve performance by using visual clues to expand the context of information it is processing at once.

[3]

Moonshot's open source Kimi K2 Thinking outperforms GPT-5, Claude Sonnet 4.5

Even as concern and skepticism grow over U.S. AI startup OpenAI's buildout strategy and high spending commitments, Chinese open source AI providers are escalating their competition, and one has even caught up to OpenAI's flagship, paid proprietary model GPT-5 in key third-party performance benchmarks with a new, free model. The Chinese AI startup Moonshot AI's new Kimi K2 Thinking model, released today, has vaulted past both proprietary and open-weight competitors to claim the top position in reasoning, coding, and agentic-tool benchmarks. Despite being fully open-source, the model now outperforms OpenAI's GPT-5, Anthropic's Claude Sonnet 4.5 (Thinking mode), and xAI's Grok-4 on several standard evaluations -- an inflection point for the competitiveness of open AI systems. Developers can access the model via platform.moonshot.ai and kimi.com; weights and code are hosted on Hugging Face. The open release includes APIs for chat, reasoning, and multi-tool workflows. Users can try out Kimi K2 Thinking directly through its own ChatGPT-style website and on a Hugging Face space as well.

Modified Standard Open Source License

Moonshot AI has formally released Kimi K2 Thinking under a Modified MIT License on Hugging Face. The license grants full commercial and derivative rights -- meaning individual researchers and developers working on behalf of enterprise clients can access it freely and use it in commercial applications -- but adds one restriction: "If the software or any derivative product serves over 100 million monthly active users or generates over $20 million USD per month in revenue, the deployer must prominently display 'Kimi K2' on the product's user interface." For most research and enterprise applications, this clause functions as a light-touch attribution requirement while preserving the freedoms of standard MIT licensing. It makes K2 Thinking one of the most permissively licensed frontier-class models currently available. 
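To make the attribution clause concrete, here is a minimal Python sketch of the threshold test as the article summarizes it. The function and constant names are hypothetical, the sketch assumes "over" means strictly greater than, and the actual license text on Hugging Face is what governs.

```python
# Thresholds as summarized in the article; names are hypothetical.
MAU_THRESHOLD = 100_000_000                 # "over 100 million monthly active users"
MONTHLY_REVENUE_THRESHOLD_USD = 20_000_000  # "over $20 million USD per month"


def requires_kimi_k2_attribution(monthly_active_users: int,
                                 monthly_revenue_usd: float) -> bool:
    """True if a deployment crosses either threshold (assumes "over" = strictly greater)."""
    return (monthly_active_users > MAU_THRESHOLD
            or monthly_revenue_usd > MONTHLY_REVENUE_THRESHOLD_USD)


if __name__ == "__main__":
    print(requires_kimi_k2_attribution(5_000_000, 1_500_000))    # small internal tool: False
    print(requires_kimi_k2_attribution(150_000_000, 2_000_000))  # large consumer app: True
```

Either condition alone triggers the requirement, so a low-traffic product with high revenue would also need to display "Kimi K2".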
A New Benchmark Leader

Kimi K2 Thinking is a Mixture-of-Experts (MoE) model built around one trillion parameters, of which 32 billion activate per inference. It combines long-horizon reasoning with structured tool use, executing up to 200-300 sequential tool calls without human intervention. According to Moonshot's published test results, K2 Thinking achieved:

* 44.9% on Humanity's Last Exam (HLE) with tools enabled, a state-of-the-art score;
* 60.2% on BrowseComp, an agentic web-search and reasoning test;
* 71.3% on SWE-Bench Verified and 83.1% on LiveCodeBench v6, key coding evaluations;
* 56.3% on Seal-0, a benchmark for real-world information retrieval.

Across these tasks, K2 Thinking consistently outperforms GPT-5's corresponding scores and surpasses the previous open-weight leader MiniMax-M2 -- released just weeks earlier by Chinese rival MiniMax AI.

Open Model Outperforms Proprietary Systems

GPT-5 and Claude Sonnet 4.5 Thinking remain the leading proprietary "thinking" models. Yet in the same benchmark suite, K2 Thinking's agentic reasoning scores exceed both: for instance, on BrowseComp the open model's 60.2% decisively leads GPT-5's 54.9% and Claude 4.5's 24.1%. K2 Thinking also edges GPT-5 in GPQA Diamond (85.7% vs 84.5%) and matches it on mathematical reasoning tasks such as AIME 2025 and HMMT 2025. Only in certain heavy-mode configurations -- where GPT-5 aggregates multiple trajectories -- does the proprietary model regain parity.

That Moonshot's fully open-weight release can meet or exceed GPT-5's scores marks a turning point. The gap between closed frontier systems and publicly available models has effectively collapsed for high-end reasoning and coding. 
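Heavy mode runs several reasoning trajectories in parallel and combines their results. The article does not say how the results are combined, so the sketch below substitutes plain majority voting over final answers (often called self-consistency), one common aggregation rule. The run_trajectory stub and its canned answers are hypothetical.

```python
from collections import Counter
from typing import Callable, List


def aggregate_trajectories(run_trajectory: Callable[[int], str],
                           n_parallel: int = 8) -> str:
    """Run n independent trajectories and return the most common final answer.

    Trajectories run one after another here for simplicity; a real system
    would launch them concurrently. Ties go to the answer seen first, because
    Counter.most_common orders equal counts by first appearance.
    """
    answers: List[str] = [run_trajectory(seed) for seed in range(n_parallel)]
    best, _votes = Counter(answers).most_common(1)[0]
    return best


if __name__ == "__main__":
    # Hypothetical stub: most seeded runs reach "42", every third drifts to "41".
    stub = lambda seed: "41" if seed % 3 == 0 else "42"
    print(aggregate_trajectories(stub))  # prints 42 (5 votes to 3)
```

Voting only helps when individual runs are right more often than any single wrong answer recurs, which is why aggregation tends to pay off on tasks with short, checkable final answers.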
Surpassing MiniMax-M2: The Previous Open-Source Benchmark

When VentureBeat profiled MiniMax-M2 just a week and a half ago, it was hailed as the "new king of open-source LLMs," achieving top scores among open-weight systems:

* τ²-Bench: 77.2
* BrowseComp: 44.0
* FinSearchComp-global: 65.5
* SWE-Bench Verified: 69.4

Those results placed MiniMax-M2 near GPT-5-level capability in agentic tool use. Yet Kimi K2 Thinking now eclipses them, in some cases by a wide margin. Its BrowseComp result of 60.2% exceeds M2's 44.0%, and its SWE-Bench Verified 71.3% edges out M2's 69.4%. Even on financial-reasoning tasks such as FinSearchComp-T3 (47.4%), K2 Thinking performs comparably while maintaining superior general-purpose reasoning.

Technically, both models adopt sparse Mixture-of-Experts architectures for compute efficiency, but Moonshot's network activates more parameters per token and uses INT4 quantization-aware training (QAT). Moonshot says this design roughly doubles inference speed relative to standard precision without degrading accuracy, which matters for long "thinking-token" sessions that can fill a 256K-token context window.

Agentic Reasoning and Tool Use

K2 Thinking's defining capability lies in its explicit reasoning trace. The model outputs an auxiliary field, reasoning_content, revealing intermediate logic before each final response. This transparency preserves coherence across long multi-turn tasks and multi-step tool calls.

A reference implementation published by Moonshot demonstrates how the model autonomously conducts a "daily news report" workflow: invoking date and web-search tools, analyzing retrieved content, and composing structured output, all while maintaining internal reasoning state. This end-to-end autonomy enables the model to plan, search, execute, and synthesize evidence across hundreds of steps, mirroring the emerging class of "agentic AI" systems that operate with minimal supervision.

Efficiency and Access

Despite its trillion-parameter scale, K2 Thinking's runtime cost remains modest. 
Moonshot lists usage at:

* $0.15 / 1M input tokens (cache hit)
* $0.60 / 1M input tokens (cache miss)
* $2.50 / 1M output tokens

These rates are roughly double MiniMax-M2's $0.30 input / $1.20 output pricing, but well below GPT-5's $1.25 input / $10 output: about half GPT-5's price for uncached input and a quarter of its price for output.

Comparative Context: Open-Weight Acceleration

The rapid succession of M2 and K2 Thinking illustrates how quickly open-source research is catching frontier systems. MiniMax-M2 demonstrated that open models could approach GPT-5-class agentic capability at a fraction of the compute cost. Moonshot has now advanced that frontier further, pushing open weights beyond parity into outright leadership. Both models rely on sparse activation for efficiency, but K2 Thinking's higher activation count (32B vs 10B active parameters) yields stronger reasoning fidelity across domains. Its test-time scaling, which expands "thinking tokens" and tool-calling turns, provides measurable performance gains without retraining, a feature not yet observed in MiniMax-M2.

Technical Outlook

Moonshot reports that K2 Thinking supports native INT4 inference and 256K-token contexts with minimal performance degradation. Its architecture integrates quantization, parallel trajectory aggregation ("heavy mode"), and Mixture-of-Experts routing tuned for reasoning tasks. In practice, these optimizations allow K2 Thinking to sustain complex planning loops (compile-test-fix for code, search-analyze-summarize for research) over hundreds of tool calls. This capability underpins its superior results on BrowseComp and SWE-Bench, where reasoning continuity is decisive.

Enormous Implications for the AI Ecosystem

The convergence of open and closed models at the high end signals a structural shift in the AI landscape. Enterprises that once relied exclusively on proprietary APIs can now deploy open alternatives matching GPT-5-level reasoning while retaining full control of weights, data, and compliance. 
Moonshot's open publication strategy follows the precedent set by DeepSeek R1, Qwen3, GLM-4.6 and MiniMax-M2 but extends it to full agentic reasoning. For academic and enterprise developers, K2 Thinking provides both transparency and interoperability -- the ability to inspect reasoning traces and fine-tune performance for domain-specific agents. The arrival of K2 Thinking signals that Moonshot -- a young startup founded in 2023 with investment from some of China's biggest apps and tech companies -- is here to play in an intensifying competition, and it comes amid growing scrutiny of the financial sustainability of AI's largest players. Just a day ago, OpenAI CFO Sarah Friar sparked controversy after suggesting at the WSJ Tech Live event that the U.S. government might eventually need to provide a "backstop" for the company's more than $1.4 trillion in compute and data-center commitments, a comment widely interpreted as a call for taxpayer-backed loan guarantees. Although Friar later clarified that OpenAI was not seeking direct federal support, the episode reignited debate about the scale and concentration of AI capital spending. With OpenAI, Microsoft, Meta, and Google all racing to secure long-term chip supply, critics warn of an unsustainable investment bubble and an "AI arms race" driven more by strategic fear than by commercial returns. They argue it could "blow up" and take down the entire global economy with it at the first sign of hesitation or market uncertainty, because so many trades and valuations now assume continued hefty AI investment and massive returns. Against that backdrop, Moonshot AI's and MiniMax's open-weight releases put more pressure on U.S. proprietary AI firms and their backers to justify the size of their investments and their paths to profitability. 
If an enterprise customer can get comparable or better performance from a free, open source Chinese AI model than from paid, proprietary AI solutions like OpenAI's GPT-5, Anthropic's Claude Sonnet 4.5, or Google's Gemini 2.5 Pro, why would they keep paying to access the proprietary models? Already, Silicon Valley stalwarts like Airbnb have raised eyebrows by admitting to heavily using Chinese open source alternatives like Alibaba's Qwen over OpenAI's proprietary offerings. For investors and enterprises, these developments suggest that high-end AI capability is no longer synonymous with high-end capital expenditure. The most advanced reasoning systems may now come not from companies building gigascale data centers, but from research groups optimizing architectures and quantization for efficiency. In that sense, K2 Thinking's benchmark dominance is not just a technical milestone -- it's a strategic one, arriving at a moment when the AI market's biggest question has shifted from how powerful models can become to who can afford to sustain them.

What It Means for Enterprises Going Forward

Within weeks of MiniMax-M2's ascent, Kimi K2 Thinking has overtaken it -- along with GPT-5 and Claude 4.5 -- across nearly every reasoning and agentic benchmark. The model demonstrates that open-weight systems can now meet or surpass proprietary frontier models in both capability and efficiency. For the AI research community, K2 Thinking represents more than another open model: it is evidence that the frontier has become collaborative. The best-performing reasoning model available today is not a closed commercial product but an open-source system accessible to anyone.
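VentureBeat's description of the auxiliary reasoning_content field suggests how an application might keep the model's trace apart from its answer. The sketch below assumes an OpenAI-style chat-completion JSON body (choices, then message, then content and reasoning_content); the payload is mocked inline so nothing is fetched, and the real response schema should be confirmed against Moonshot's platform documentation.

```python
import json
from typing import Optional, Tuple

# Mocked response body. The nesting (choices -> message -> content /
# reasoning_content) is an assumption modeled on OpenAI-style chat APIs.
RAW = """
{
  "choices": [
    {
      "message": {
        "role": "assistant",
        "reasoning_content": "The user wants today's top story; call the date tool first.",
        "content": "Here is today's news summary."
      }
    }
  ]
}
"""


def split_reasoning(raw_json: str) -> Tuple[Optional[str], str]:
    """Return (reasoning trace or None, final answer) from a chat response body."""
    message = json.loads(raw_json)["choices"][0]["message"]
    # .get() tolerates responses that omit the reasoning field entirely
    return message.get("reasoning_content"), message.get("content") or ""


if __name__ == "__main__":
    reasoning, answer = split_reasoning(RAW)
    print("REASONING:", reasoning)
    print("ANSWER:", answer)
```

Keeping the two fields separate lets an application log or inspect the trace without mixing it into the user-facing answer.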

[4]

Capitalism Built OpenAI, Constraints Helped China Defeat It | AIM

The estimated cost of developing the Kimi K2 Thinking AI model was around $4.6 million. China is breathing down OpenAI's neck. Alibaba-backed Moonshot AI has launched a new model, Kimi K2 Thinking, which outperforms OpenAI's GPT-5 and Anthropic's Claude Sonnet 4.5 on several key benchmarks. Moonshot said the model's architecture activates 32 billion parameters per inference out of a total of one trillion, and supports context windows of up to 256,000 tokens. It can also perform 200 to 300 sequential tool calls without human input. "The AI frontier is open-source!" wrote Hugging Face CEO Clement Delangue after the launch of Kimi K2 Thinking. At the same time, NVIDIA CEO Jensen Huang warned that China could soon lead the global AI race. He said that China's lower energy costs, lighter regulations, and government subsidies make building and running AI inf

[5]

Moonshot launches open-source 'Kimi K2 Thinking' AI with a trillion parameters and reasoning capabilities - SiliconANGLE

Chinese startup Beijing Moonshot AI Co. Ltd. on Thursday released a new open-source artificial intelligence model, named Kimi K2 Thinking, that displays significantly upgraded tool use and agentic capabilities compared with current models. The company said the new model was built as a "thinking agent," capable of reasoning step-by-step while using software tools such as search, advanced calculations, data retrieval and third-party services. The model can reportedly execute 200 to 300 sequential tool calls without human intervention and reason across their use. The model weighs in at 1 trillion parameters, a fairly large size for an open-source model. A source close to the project told CNBC it cost only $4.6 million to train. DeepSeek's V3 model, also the product of a Chinese company and the foundation for the R1 reasoning model, reportedly cost $5.6 million.

When AI developers talk about parameter counts, they're referring to the individual adjustable values within a model that determine how it processes and understands information. Loosely speaking, parameters are the connection strengths of a digital brain -- the more of them a model has, the more complex the patterns it can recognize and reason about. However, size isn't everything; efficient training methods and specialized model architecture often matter more than parameter count. Compared with the hundreds of millions or even billions of dollars that companies such as OpenAI Group PBC and Anthropic PBC spend to develop and train their frontier models, Moonshot's figures highlight a growing arms race to discover cheaper, faster paths to advanced AI development. It's also noteworthy that DeepSeek's R1 model has 671 billion parameters, meaning that K2's trillion-parameter scale at low training cost represents a continuing innovation in algorithmic and economic efficiency.

This release comes shortly after Nvidia Corp. Chief Executive Jensen Huang commented that China is primed to beat the United States in the AI race. He cited the patchwork of regulations across U.S. states, in contrast with China's more unified regulatory approach and its energy subsidies. Huang has been a strong critic of export controls on Nvidia's AI chips to China, arguing that the country will eventually develop alternatives. "Chinese AI researchers will use their own chips," the Financial Times quoted the executive as saying in May. "They will use the second-best. Local companies are very determined, and export controls gave them the spirit, and government support accelerated their development. Our competition is intense in China."

Despite its low training cost, Moonshot claims that Kimi K2 Thinking outperforms many rival closed-source models on modern benchmarks. The model achieved a 43% score on Humanity's Last Exam, a benchmark of 3,000 graduate-level reasoning questions designed to measure complex problem-solving beyond simple recall. The company claimed these results notably exceed the performance of OpenAI's GPT-5 and Anthropic's Claude Sonnet 4.5. For software engineers, the new model delivered improvements in HTML, React and detailed front-end programming tasks, and its agentic coding capabilities can turn prompts into fully functional, responsive products. According to Moonshot, the model employs long-horizon planning combined with adaptive reasoning to break down tasks, use tools, and refine hypotheses before arriving at a final answer.

On the deployment side, the company said K2 natively supports INT4 inference, a compact quantized form of the model that reduces memory footprint and enables it to run on less powerful hardware. Low-bit quantization improves efficiency and latency for large-scale deployments; however, reasoning models such as K2 generate long multi-step outputs, so heavy compression can degrade accuracy. Moonshot said it overcame that with Quantization-Aware Training, or QAT, during the post-training phase, applying INT4 quantization to the weights of the mixture-of-experts components. That lets K2 Thinking run INT4 inference roughly twice as fast while still achieving state-of-the-art accuracy and performance.
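The core idea of quantization-aware training is "fake quantization": the forward pass snaps weights to the low-bit grid so the model learns to tolerate the rounding error, while updates go to a full-precision master copy (the straight-through estimator). The pure-Python sketch below shows symmetric per-tensor INT4 (integer levels -8 to 7) on a toy two-weight linear model. Moonshot's actual recipe, grouping and scale choices are not described in the article, so everything here is an illustrative assumption.

```python
from typing import List, Sequence, Tuple

Batch = Sequence[Tuple[Sequence[float], float]]


def int4_fake_quant(weights: List[float]) -> List[float]:
    """Snap weights to a symmetric per-tensor INT4 grid, then back to floats."""
    max_abs = max(abs(w) for w in weights) or 1.0  # guard against all-zero weights
    scale = max_abs / 7.0                          # signed INT4 levels span -8..7
    return [max(-8, min(7, round(w / scale))) * scale for w in weights]


def quantized_loss(master: List[float], batch: Batch) -> float:
    """Mean squared error of y = w.x when the forward pass uses INT4 weights."""
    wq = int4_fake_quant(master)
    return sum((sum(w * xi for w, xi in zip(wq, x)) - y) ** 2
               for x, y in batch) / len(batch)


def qat_step(master: List[float], batch: Batch, lr: float = 0.05) -> List[float]:
    """One SGD step: forward with fake-quantized weights, update the FP master copy."""
    wq = int4_fake_quant(master)
    grads = [0.0] * len(master)
    for x, y in batch:
        err = sum(w * xi for w, xi in zip(wq, x)) - y
        for i, xi in enumerate(x):
            grads[i] += 2 * err * xi / len(batch)
    # Straight-through estimator: treat rounding as identity when backpropagating,
    # so gradients taken at the quantized weights update the master weights.
    return [w - lr * g for w, g in zip(master, grads)]


if __name__ == "__main__":
    batch = [((1.0, 0.0), 0.9), ((0.0, 1.0), -0.3), ((1.0, 1.0), 0.6)]
    master = [0.0, 0.0]
    for _ in range(500):
        master = qat_step(master, batch)
    print("master:", master, "int4:", int4_fake_quant(master))
    print("loss with INT4 forward pass:", quantized_loss(master, batch))
```

Because training always sees the rounded weights, the master weights settle where the quantized model performs well, rather than where the full-precision model does.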

[6]

AI race heats up as Chinese start-up Moonshot launches Kimi K2 Thinking

The launch comes amid a period of increased tensions between the US and China regarding control of the global AI and chip sectors. Moonshot AI, an Alibaba-backed Chinese start-up launched in 2023, has issued an update to its open-source large language model Kimi K2 with the release of Kimi K2 Thinking. Based in Beijing, Moonshot AI says its new model is a "thinking agent" capable of reasoning step-by-step while making 200-300 sequential tool calls without the need for human intervention, with the purpose of solving a range of complex problems. According to Moonshot AI, the model reflects its efforts "in test-time scaling, by scaling both thinking tokens and tool calling steps", and there have been significant improvements in "reasoning, agentic search, coding, writing and general capabilities". In one test of its capabilities, Moonshot AI said the model solved a PhD-level maths problem by alternating between reasoning and tool calls 23 times until it reached a sufficient answer. Moonshot AI also noted improvements to its agentic search and browsing capabilities. On BrowseComp, which evaluates a model's ability to browse, search and reason over the web, Kimi K2 Thinking was found capable of executing 200-300 sequential tool calls in a cycle of think, search, browser use, think, code. Moonshot AI said, "This interleaved reasoning allows it to decompose ambiguous, open-ended problems into clear, actionable subtasks." The update also included general improvements to the model's overall tone, with a stronger sense of emotion, style, imagination and natural fluency, to better mimic a human response, as well as changes to its practical writing abilities so that it can produce more precise and long-form content suitable for research, academic and analytical writing. Moonshot's AI model arrives amid a period of significant tension between the US and China, as both countries aim to pull away in the AI race. 
In late October, Nvidia made history as the first company to reach a market value of $5trn. Earlier this week its CEO Jensen Huang told attendees at the FT Future of AI Summit that China was beating the US due to factors such as energy costs and looser regulations. However, he softened his stance in a later X post, where he said, "As I have long said, China is nanoseconds behind America in AI. It's vital that America wins by racing ahead and winning developers worldwide."
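The think, search, browser use, think, code cycle Moonshot describes is an interleaved tool-calling loop: the model reasons, requests a tool, sees the result, and reasons again. The sketch below shows only that control flow. The Step type, the scripted stand-in model and the canned tools are hypothetical, and the default 300-call budget simply mirrors the 200-300 sequential calls Moonshot cites rather than any documented limit.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    """One model turn: a thought plus either a tool request or a final answer."""
    thought: str
    tool: Optional[str] = None
    argument: str = ""
    answer: Optional[str] = None


def run_agent(model: Callable[[List[str]], Step],
              tools: Dict[str, Callable[[str], str]],
              task: str,
              max_tool_calls: int = 300) -> Optional[str]:
    """Interleave reasoning and tool calls until an answer or the budget runs out."""
    transcript = [f"TASK: {task}"]
    tool_calls = 0
    while True:
        step = model(transcript)                  # model reasons over the history
        transcript.append(f"THINK: {step.thought}")
        if step.answer is not None:
            return step.answer
        if tool_calls == max_tool_calls:
            return None                           # wants another tool, budget spent
        result = tools[step.tool](step.argument)  # execute the requested tool
        transcript.append(f"TOOL {step.tool}: {result}")  # feed the observation back
        tool_calls += 1


if __name__ == "__main__":
    # Scripted stand-in for a real model: search once, run code once, then answer.
    script = iter([
        Step("I should look this up.", tool="search", argument="problem statement"),
        Step("Let me check the arithmetic in code.", tool="code", argument="2 + 2"),
        Step("The evidence is consistent.", answer="4"),
    ])
    tools = {
        "search": lambda q: f"(canned search results for {q!r})",
        "code": lambda src: "4",  # canned output; a real tool would run the code
    }
    print(run_agent(lambda transcript: next(script), tools, "Solve the problem"))
```

The transcript is what lets each new turn build on earlier observations; the tool-call budget keeps a model that never converges from looping forever.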

[7]

Kimi K2 Thinking Crushes GPT-5, Claude 4.5 Sonnet in Key Benchmarks | AIM

Moonshot AI, a Chinese startup backed by Alibaba, released its latest AI model, Kimi K2 Thinking, on November 6. The model surpassed several leading AI systems, including OpenAI's GPT-5 and Anthropic's Claude Sonnet 4.5, in key reasoning and coding benchmarks. Moonshot said the model's architecture activates 32 billion parameters per inference out of a total of one trillion parameters and supports context windows of up to 256,000 tokens. The model can execute 200 to 300 sequential tool calls without human intervention. Benchmark results show that Kimi K2 Thinking achieved scores of 44.9% on Humanity's Last Exam (with tools enabled), 60.2% on the BrowseComp web-search reasoning benchmark and 71.3% on SWE-bench Verified, benchmarks that evaluate agentic reasoning and coding capabilities. Moonshot said Kimi K2 Thinking is designed for explicit reasoning, with intermediate logical steps visible in its outputs to ensure transparency across multi-step workflows. Despite its trillion-parameter scale, Moonshot AI said that Kimi K2 Thinking maintains a modest runtime cost. The company lists pricing at $0.15 per one million tokens for cache hits, $0.60 per one million tokens for cache misses and $2.50 per one million tokens for output. These rates are roughly double MiniMax-M2's $0.30 input and $1.20 output pricing, but remain well below GPT-5, which is priced at $1.25 for input and $10 for output. The open-source model is available under a Modified MIT License, permitting free commercial use with one attribution condition for high-scale deployments. The launch of Kimi K2 Thinking comes at a time when Chinese open-source AI firms are competing more closely with US proprietary systems. Moonshot AI views the model as a crucial step toward making powerful AI technology more accessible.
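The per-million-token prices above turn into workload costs with simple arithmetic. The sketch below plugs in the list prices cited in the article for a hypothetical month of 100 million input tokens and 20 million output tokens. The workload, the zero cache-hit default, and applying MiniMax-M2's and GPT-5's single quoted input price to every input token are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per one million tokens, as quoted in the article."""
    input_cache_hit: float
    input_cache_miss: float
    output: float


KIMI_K2_THINKING = Pricing(0.15, 0.60, 2.50)
# Only one input price is quoted for these two, so it is used for hits and misses.
MINIMAX_M2 = Pricing(0.30, 0.30, 1.20)
GPT_5 = Pricing(1.25, 1.25, 10.00)


def workload_cost(p: Pricing, input_tokens: int, output_tokens: int,
                  cache_hit_rate: float = 0.0) -> float:
    """Cost in USD, splitting input tokens into cache hits and misses."""
    hit = input_tokens * cache_hit_rate
    miss = input_tokens - hit
    return (hit * p.input_cache_hit + miss * p.input_cache_miss
            + output_tokens * p.output) / 1_000_000


if __name__ == "__main__":
    # Hypothetical month: 100M input tokens, 20M output tokens, no cache hits.
    for name, p in [("Kimi K2 Thinking", KIMI_K2_THINKING),
                    ("MiniMax-M2", MINIMAX_M2), ("GPT-5", GPT_5)]:
        print(f"{name}: ${workload_cost(p, 100_000_000, 20_000_000):,.2f}")
```

Under those assumptions the totals come to about $110 for Kimi K2 Thinking, $54 for MiniMax-M2 and $325 for GPT-5. Raising cache_hit_rate lowers only the Kimi figure here, because it is the only model in the sketch with a separate cache-hit price.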


Beijing-based Moonshot AI has released Kimi K2 Thinking, an open-source reasoning model that reportedly outperforms OpenAI's GPT-5 and Anthropic's Claude Sonnet 4.5 on key benchmarks and cost only $4.6 million to train, compared with the billions spent by US competitors.

Chinese AI Startup Challenges US Dominance with Revolutionary Open-Source Model

Beijing-based AI startup Moonshot has released Kimi K2 Thinking, an open-source reasoning model that claims to outperform leading proprietary AI systems from OpenAI and Anthropic [1]. The model, released on Thursday, reportedly surpasses GPT-5 and Claude Sonnet 4.5 on several key benchmarks while being freely available to developers worldwide.

Technical Specifications and Performance Metrics

Kimi K2 Thinking is built as a Mixture-of-Experts (MoE) model with approximately 1 trillion parameters, of which 32 billion activate per inference [3]. According to Moonshot's published results, the model performs strongly across multiple evaluations, achieving 44.9% on Humanity's Last Exam, 60.2% on BrowseComp, and 71.3% on SWE-Bench Verified [3].
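The "32 billion of roughly 1 trillion parameters" figure reflects sparse expert routing: a small gating network scores the experts for each token and only the top few run, so roughly 32/1,000, or about 3.2%, of the parameters are active for a given token. Below is a generic top-k softmax gating sketch in plain Python; the expert count, k, gate weights and toy experts are illustrative assumptions, not Kimi K2's actual configuration or code.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: List[float], gate_weights: List[List[float]],
                experts: List[Expert], k: int = 2) -> List[float]:
    """Send one token to its top-k experts and mix their outputs.

    gate_weights[i] is a linear gate row scoring expert i. Only the k
    selected experts are evaluated; the others stay idle for this token.
    """
    logits = [sum(w * x for w, x in zip(row, token)) for row in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    mix = softmax([logits[i] for i in top])  # renormalize over the chosen experts
    out = [0.0] * len(token)
    for weight, i in zip(mix, top):
        y = experts[i](token)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    # Toy setup: 4 "experts" that just scale their input by 1, 2, 3 and 4.
    experts = [lambda v, s=i + 1: [s * x for x in v] for i in range(4)]
    gates = [[float(i), 0.0] for i in range(4)]  # expert i scores i * token[0]
    print(moe_forward([1.0, 0.0], gates, experts, k=2))  # mixes the x4 and x3 experts
```

In a real MoE layer the experts are feed-forward networks and the gate is learned, with routing done per token and per layer; that is what lets total parameter count grow without a matching growth in per-token compute.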

The model's architecture enables it to execute 200 to 300 sequential tool calls without human intervention, combining long-horizon planning with adaptive reasoning capabilities [2]. This allows K2 Thinking to decompose complex, open-ended problems into actionable subtasks while continuously generating and refining hypotheses.

Cost-Effective Development Challenges Industry Norms

Perhaps most significantly, K2 Thinking reportedly cost about $4.6 million to train, according to a source cited by CNBC, a fraction of the billions spent by leading US AI companies [2]. This cost efficiency mirrors similar claims by other Chinese AI companies, with DeepSeek's V3 model reportedly costing $5.6 million to develop [5].

The model supports INT4 inference through Quantization-Aware Training (QAT), enabling it to run efficiently on less powerful hardware while maintaining state-of-the-art accuracy [5]. Moonshot says this roughly doubles inference speed without degrading accuracy.

Open-Source Licensing and Commercial Implications

Moonshot has released K2 Thinking under a Modified MIT License, granting full commercial and derivative rights with one notable restriction: deployments serving over 100 million monthly active users or generating over $20 million in monthly revenue must display "Kimi K2" prominently on their user interface [3].

The open-source nature of K2 Thinking challenges the prevailing business model of proprietary AI subscriptions that has dominated the market since ChatGPT's launch [1]. Some US companies, including Airbnb, have already begun preferring Chinese AI tools over American alternatives, citing better performance on some critical tasks and lower costs.

Geopolitical and Industry Impact

The release comes amid growing concerns about China's position in the global AI race. NVIDIA CEO Jensen Huang recently warned that China could lead the AI sector, citing the country's lower energy costs, lighter regulations, and government subsidies [4]. Huang has criticized US export controls on AI chips to China, arguing that restrictions have accelerated Chinese companies' development of alternatives.

Hugging Face CEO Clement Delangue celebrated the launch, declaring "The AI frontier is open-source!" following K2 Thinking's release [4]. The model's availability on platforms like Hugging Face and kimi.com gives developers worldwide access to frontier-level AI capabilities without subscription fees.

Summarized by Navi
