Google AI Studio Introduces Advanced Logging and Dataset Features for Enhanced AI Development

2 Sources

[1]

New tools in Google AI Studio to explore, debug and share logs

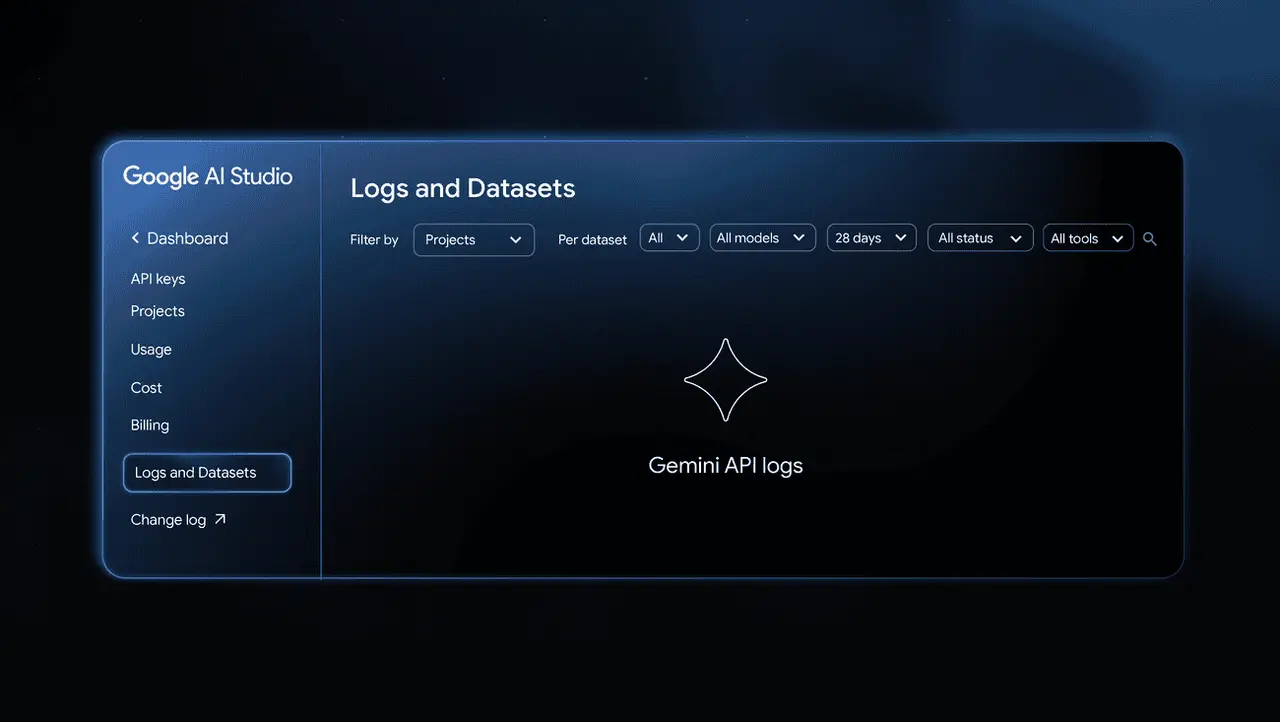

We're introducing a new logs and datasets feature in Google AI Studio, to help developers assess the quality of AI outputs and build with more confidence. A key challenge in developing AI-first applications is getting consistent, high-quality results -- especially as you iterate and grow. These new tools help to improve observability and streamline your debugging workflows, giving you quick and simple insights into how your application is working for both you and your end users. They also lay the groundwork for a broader set of evaluation capabilities.

[2]

Google AI Studio gets logging and dataset export features for developers

Google has introduced new logs and datasets features in Google AI Studio, allowing developers to assess AI output quality, track model behavior, and debug issues more effectively. The update improves observability and simplifies evaluation workflows for Gemini API-based applications.

Developers can enable the new logging feature directly within the AI Studio dashboard by selecting "Enable logging." Once activated, all GenerateContent API calls from a billing-enabled Cloud project, successful or failed, become visible in the dashboard. This builds a complete interaction history without requiring code changes. Logging is available at no monetary cost across all regions where the Gemini API operates. It helps developers quickly identify issues and trace specific model interactions when debugging or refining their applications.

Key capabilities include exportable datasets and optional data sharing. Every user interaction can now be analyzed through exportable datasets, enabling offline testing and performance benchmarking. Developers can download these logs as CSV or JSONL files to study response quality and model consistency over time. These datasets provide a practical foundation for evaluating prompt effectiveness and measuring performance changes between iterations. Google also allows developers to share selected datasets to help improve model performance for real-world use cases. Shared data contributes to refining Gemini models and Google's AI products more broadly.

The new logs and datasets features are now live in Google AI Studio Build mode. Once logging is enabled, developers can monitor their applications throughout the full lifecycle, from prototype to production, ensuring continuous quality assessment and easier debugging.

Google has launched new logging and dataset export capabilities in AI Studio, enabling developers to better assess AI output quality, debug applications, and share data for model improvement. The features provide comprehensive observability for Gemini API applications at no additional cost.

Enhanced Developer Tools for AI Applications

Google has unveiled significant new capabilities in Google AI Studio designed to address one of the most persistent challenges in AI development: ensuring consistent, high-quality outputs from artificial intelligence applications. The introduction of comprehensive logging and dataset management features represents a substantial step forward in providing developers with the observability tools necessary for building robust AI-first applications [1].

The core challenge these tools address is the difficulty developers face when trying to maintain quality and consistency as their AI applications scale and evolve. Without proper visibility into how models are performing across different interactions and use cases, developers often struggle to identify issues, debug problems, and optimize their applications effectively [2].

Comprehensive Logging Infrastructure

The new logging feature can be activated directly within the AI Studio dashboard through a simple "Enable logging" option. Once enabled, the system automatically captures all GenerateContent API calls from billing-enabled Cloud projects, regardless of whether these calls succeed or fail. This gives developers complete visibility into their application's interaction patterns without requiring any code modifications [2].

The logging capability operates at no additional monetary cost and is available across all regions where the Gemini API functions, so teams of any size can benefit from enhanced observability without extra expenses affecting their development budgets. Because logging is automatic once activated, developers can focus on building and refining their applications while the system maintains a detailed record of all interactions [2].
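To make the "no code modifications" point concrete, here is a minimal sketch of an ordinary GenerateContent request built with the Python standard library against the public Gemini REST endpoint. The function name `build_generate_content_request` and the model name passed to it are illustrative assumptions, not taken from the announcement. Nothing in the request refers to logging; according to the sources, a call like this would simply appear in the dashboard once logging is enabled for a billing-enabled project.

```python
import json
import urllib.request

# REST base URL of the Gemini API (v1beta).
BASE_URL = "https://generativelanguage.googleapis.com/v1beta"


def build_generate_content_request(api_key, model, prompt):
    """Build (but do not send) a plain GenerateContent request.

    Hypothetical helper for illustration: there is no logging-specific
    header or field, because logging is switched on in the dashboard.
    """
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,
        },
        method="POST",
    )


def send(request):
    """Send the request and return the decoded JSON response (needs network and a valid key)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `send(build_generate_content_request(key, model, "Say hello."))` with a valid key and a model name available to your project performs the call; the request itself is unchanged whether or not logging is turned on.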

Advanced Dataset Export and Analysis

Beyond basic logging, the new features include sophisticated dataset export capabilities that allow developers to download their interaction logs in both CSV and JSONL formats. This functionality enables offline analysis, performance benchmarking, and detailed study of response quality and model consistency over time. Developers can use these exported datasets to evaluate prompt effectiveness and measure performance changes between different iterations of their applications [2].

The dataset export feature provides a practical foundation for systematic evaluation workflows. By having access to comprehensive interaction histories, developers can identify patterns, spot potential issues before they become problematic, and make data-driven decisions about how to improve their applications. This capability is particularly valuable for teams working on production applications where consistent performance is critical [1].
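As a rough illustration of offline analysis on a JSONL export, the sketch below loads one JSON object per line and computes a simple summary. The sources do not describe the export schema, so the field names `status` and `response` are assumptions chosen for the example; replace them with whatever columns the downloaded file actually contains.

```python
import json
from collections import Counter
from pathlib import Path


def load_jsonl(path):
    """Read a JSONL file: one JSON object per non-empty line."""
    records = []
    with Path(path).open(encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:
                records.append(json.loads(line))
    return records


def summarize(records, status_field="status", response_field="response"):
    """Count records per status and average the response length in characters.

    The default field names are hypothetical, not the documented export schema.
    """
    by_status = Counter(str(r.get(status_field, "unknown")) for r in records)
    lengths = [
        len(r[response_field])
        for r in records
        if isinstance(r.get(response_field), str)
    ]
    mean_length = sum(lengths) / len(lengths) if lengths else 0.0
    return {
        "total": len(records),
        "by_status": dict(by_status),
        "mean_response_chars": mean_length,
    }
```

Running `summarize(load_jsonl(path))` on exports taken before and after a prompt change gives a crude way to compare iterations; a real evaluation would likely need task-specific quality metrics rather than response length.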


Collaborative Model Improvement

Google has also introduced a data sharing mechanism that allows developers to contribute selected datasets to help improve overall model performance. When developers choose to share their data, it contributes to refining Gemini models and Google's broader AI product ecosystem. This collaborative approach creates a feedback loop where real-world usage data helps improve the underlying models that all developers benefit from [2].

The sharing feature is designed with developer control in mind, allowing teams to select which datasets they want to contribute rather than automatically sharing all data. This approach respects privacy concerns while still enabling the collaborative benefits that come from shared learning across the developer community.

Production-Ready Monitoring

These new tools are now available in Google AI Studio's Build mode and are designed to support applications throughout their entire lifecycle, from initial prototyping through full production deployment. The continuous monitoring capabilities ensure that developers can maintain quality assessment and debugging capabilities as their applications scale and evolve. This end-to-end support addresses a critical gap in the AI development workflow where tools often focus on either development or production phases but not both [2].


Summarized by Navi
