Google DeepMind Unveils 'Nano Banana' AI Model, Revolutionizing Image Editing in Gemini

26 Sources

[1]

Google improves Gemini AI image editing with "nano banana" model

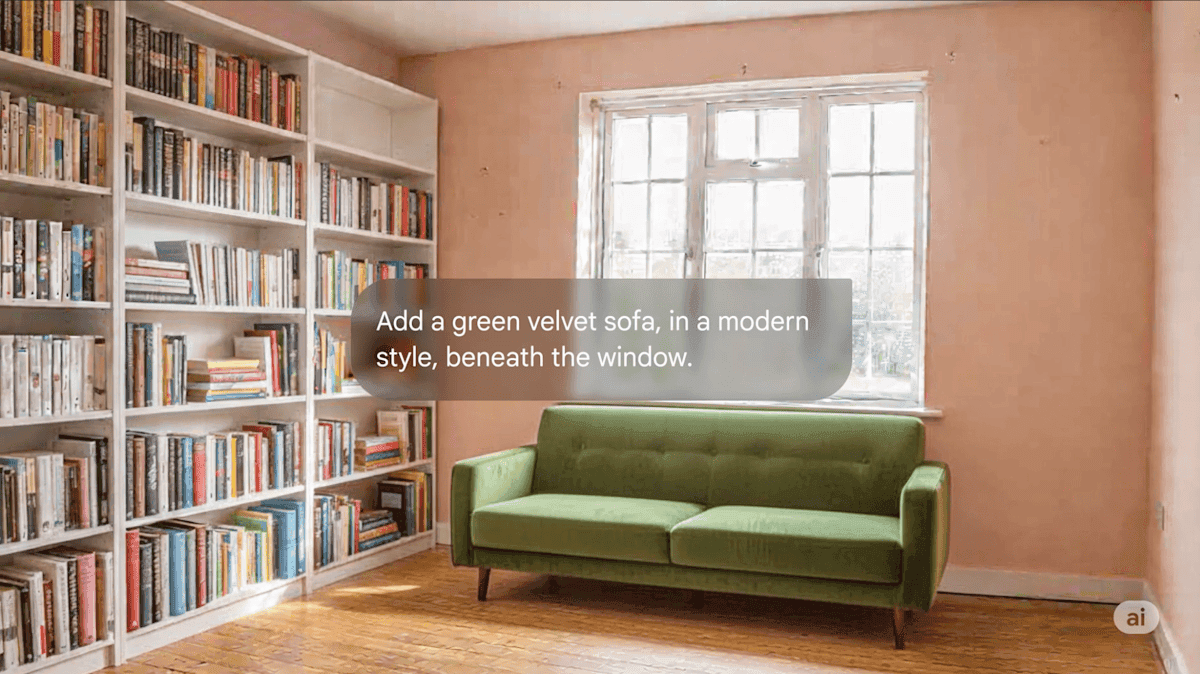

Something unusual happened in the world of AI image editing recently. A new model, known as "nano banana," started making the rounds with impressive abilities that landed it at the top of the LMArena leaderboard. Now, Google has revealed that nano banana is an innovation from Google DeepMind, and it's being rolled out to the Gemini app today. AI image editing allows you to modify images with a prompt rather than mucking around in Photoshop. Google first provided editing capabilities in Gemini earlier this year, and the model was more than competent out of the gate. But like all generative systems, the non-deterministic nature meant that elements of the image would often change in unpredictable ways. Google says nano banana (technically Gemini 2.5 Flash Image) has unrivaled consistency across edits -- it can actually remember the details instead of rolling the dice every time you make a change. This unlocks several interesting uses for AI image editing. Google suggests uploading a photo of a person and changing their style or attire. For example, you can reimagine someone as a matador or a '90s sitcom character. Because the nano banana model can maintain consistency through edits, the results should still look like the person in the original source image. This is also the case when you make multiple edits in a row. Google says that even down the line, the results should look like the original source material. Gemini's enhanced image editing can also merge multiple images, allowing you to use them as the fodder for a new image of your choosing. Google's example below takes separate images of a woman and a dog and uses them to generate a new snapshot of the dog getting cuddles -- possibly the best use of generative AI yet. Gemini image editing can also merge things in more abstract ways and will follow your prompts to create just about anything that doesn't run afoul of the model's guardrails. 
As with other Google AI image generation models, the output of Gemini 2.5 Flash Image always comes with a visible "AI" watermark in the corner. The image also has an invisible SynthID digital watermark that can be detected even after moderate modification. You can give the new native image editing a shot today in the Gemini app. Google says the new image model will also roll out soon in the Gemini API, AI Studio, and Vertex AI for developers.

[2]

Google Gemini's AI image model gets a 'bananas' upgrade | TechCrunch

Google is upgrading its Gemini chatbot with a new AI image model that gives users finer control over editing photos, a step meant to catch up with OpenAI's popular image tools and draw users from ChatGPT. The update, called Gemini 2.5 Flash Image, rolls out starting Tuesday to all users in the Gemini app, as well as to developers via the Gemini API, Google AI Studio, and Vertex AI platforms. Gemini's new AI image model is designed to make more precise edits to images -- based on natural language requests from users -- while preserving the consistency of faces, animals, and other details, something that most rival tools struggle with. For instance, ask ChatGPT or xAI's Grok to change the color of someone's shirt in a photo, and the result might include a distorted face or an altered background. Google's new tool has already drawn attention. In recent weeks, social media users raved over an impressive AI image editor in the crowdsourced evaluation platform, LMArena. The model appeared to users anonymously under the pseudonym "nano-banana." Google says it's behind the model (if it wasn't obvious already from all the banana-related hints), which is really the native image capability within its flagship Gemini 2.5 Flash AI model. Google says the image model is state-of-the-art on LMArena and other benchmarks. "We're really pushing visual quality forward, as well as the model's ability to follow instructions," said Nicole Brichtova, a product lead on visual generation models at Google DeepMind, in an interview with TechCrunch. "This update does a much better job making edits more seamlessly, and the model's outputs are usable for whatever you want to use them for," said Brichtova. AI image models have become a critical battleground for Big Tech.
When OpenAI launched GPT-4o's native image generator in March, it drove ChatGPT's usage through the roof thanks to a frenzy of AI-generated Studio Ghibli memes that, according to OpenAI CEO Sam Altman, left the company's GPUs "melting." To keep up with OpenAI and Google, Meta announced last week that it would license AI image models from the startup Midjourney. Meanwhile, the a16z-backed German unicorn Black Forest Labs continues to dominate benchmarks with its FLUX AI image models. Perhaps Gemini's impressive AI image editor can help Google close its user gap with OpenAI. ChatGPT now logs more than 700 million weekly users. On Google's earnings call in July, the tech giant's CEO Sundar Pichai revealed that Gemini had 450 million monthly users -- implying weekly users are even lower. Brichtova says Google specifically designed the image model with consumer use cases in mind, such as helping users visualize their home and garden projects. The model also has better "world knowledge" and can combine multiple references in a single prompt; for example, merging an image of a sofa, a living room photo, and a color palette into one cohesive render. While Gemini's new AI image generator makes it easier for users to make and edit realistic images, the company has safeguards that limit what users can create. Google has struggled with AI image generator safeguards in the past. At one point, the company apologized for Gemini generating historically inaccurate pictures of people, and rolled back the AI image generator altogether. Now, Google feels that it's struck a better balance. "We want to give users creative control so that they can get from the models what they want," said Brichtova. "But it's not like anything goes." The generative AI section of Google's terms of service prohibits users from generating "non-consensual intimate imagery."
Those same kinds of safeguards don't seem to exist for Grok, which allowed users to create AI-generated explicit images resembling celebrities, such as Taylor Swift. To address the rise of deepfake imagery, which can make it hard for users to discern what's real online, Brichtova says that Google applies visual watermarks to AI-generated images, as well as identifiers in its metadata. However, someone scrolling past an image on social media may not look for such identifiers.

[3]

Google's New AI Image Model 'Bananas' Is Here: How to Edit Your Photos With Gemini

Google wants you to ditch Photoshop for Gemini. A new generative AI model, Gemini 2.5 Flash Image, upgrades Gemini's ability to edit your photos natively in the Gemini app. It's available now for all Gemini users, free and paying, and is rolling out in preview this week in the Gemini API and Google AI Studio and Vertex AI. A recent preview version of the new model on LMArena sparked interest from Gemini fans, even though the model only ranked seventh as of Monday, behind leaders like OpenAI's image model and Flux's Kontext Pro and Max. It's been referred to as the "nano bananas" model by AI enthusiasts, spurred on by a recent X post from Josh Woodward, Google's vice president of Google Labs and Google Gemini. You don't need to do anything to access the new model; it's automatically added to the base Gemini 2.5 Flash model. All you have to do is upload an image and type out your prompt, and Gemini will attempt to edit it. You should notice a difference when you ask Gemini to edit photos -- more precise edits and fewer errors from hallucinations. Adobe Express and Firefly users can also access the new model now. Some new features include the ability to replace backgrounds and layer multiple edits. You can also combine different elements with "design remix." For example, you can upload an image of a pair of rain boots and a pink rose, and Gemini will reimagine the rain boots in a floral pattern echoing the pink rose. You can also blend two photos together, creating a kind of composite image. Google's Gemini privacy policy says it can use the information you upload for improving its AI products, which is why the company recommends avoiding uploading sensitive or private information. The company's AI prohibited use policy also outlaws the creation of illegal or abusive material. Google has been investing heavily in its generative media models this year, dropping updated versions of its image and video generator models at its annual I/O developers conference.
Google's AI video generator Veo 3 stunned with synchronized audio, a first among the bevy of AI giants. Creators have made more than 100 million AI videos with Google's AI filmmaker tool, Flow. NotebookLM, an experimental product from Google Labs, also took off in popularity with its ability to turn any set of documents into a personalized podcast.

[4]

Top-rated mystery 'nano-banana' AI model rolls out to Gemini, as Google DeepMind claims responsibility

Google DeepMind was secretly behind the model, which is now publicly available. Every now and then, the LMArena will have a mystery model with a fun name shoot up to the top of the leaderboard. Shall I remind you of OpenAI's Project Strawberry? More recently, a mystery 'nano banana' model climbed to the top of the LMArena's photo-editing models on early previews (where it still resides), earning the title of 'top-rated image editing model in the world,' and you can now access it today. On Tuesday, Google DeepMind revealed that it was responsible for the high-performing photo editing 'nano banana' model. With the model, users can edit pictures using natural language prompts while keeping the essence of the image intact better than ever before, and it is being integrated right in the Gemini app. If you have ever uploaded an image of yourself into an AI image generator and asked it to create a new version of it in some way, for example, making it a watercolor version of yourself or anime, you may have noticed that your features sometimes get lost in translation. This new model aims to change that, making the subject, whether it is a pet or a person, look like themselves. Google suggests putting photo editing to the test by changing your clothing or giving yourself a costume, or even changing your location entirely. As seen in the GIF below, the edited version keeps the person's appearance the same amidst outfit changes. These edits are more entertaining, but the AI editing tool can also be used for more practical edits. For example, you can take elements of two different photos and blend them together to create a new one. Google shares an example of a solo shot of a woman being combined with a short solo shot of a dog to make it look like they are cuddling. In a way, it is like everyone gets access to the Add Me feature that Google has been offering on its Pixel phones.
With the model, you can also take advantage of multi-turn edits, in which you keep using prompts to tweak an element of the same photo until you get the intended result, such as adding different pieces of furniture to a room or adding different elements to the background of your photo. You can also take elements of one photo, such as a color, and apply them to a new image. The updated image editing is available in the Gemini app starting today. All you need to do is enter your prompt to get started. Like all Gemini-generated images, images created or edited with this updated model will have the SynthID digital watermark, marking them as AI-modified.

[5]

Google DeepMind claims top-rated 'banana' mystery model - here's what it can do


[6]

Your Gemini app just got a major AI image editing upgrade - for free


[7]

Google Gemini 2.5 Flash Image (Nano Bananas) is quite good

Google has updated its Gemini AI image generation tool with a build that caused a stir after it was released under the code name Nano Bananas. The upgrade, technically called Gemini 2.5 Flash Image, lets users generate images through voice and text prompts, including swapping out participants in a photo, changing what they are wearing, or merging people from real images with new backgrounds. Google formally released it on Tuesday, though only via the Gemini mobile app, with the web version not getting all the new capabilities yet. We've been testing the new engine and the results are impressive. For example, Reg US Editor Avram Piltch took a photo of just his torso and a separate photo of two chairs. When he uploaded both photos to Gemini on his phone, he asked the engine to draw him seated in the red chair. Gemini not only placed Piltch in the red chair, but drew arms and legs for him that weren't in the original torso picture. It even completed the logo on his t-shirt that was only half visible in the original image. The only inaccurate thing about the merged photo was that his pants were black when, in real life, he was wearing blue jeans. He asked Gemini to change the pants to light blue jeans, and it did so without issue. "Just give Gemini a photo to work with, and tell it what you'd like to change to add your unique touch. Gemini lets you combine photos to put yourself in a picture with your pet, change the background of a room to preview new wallpaper or place yourself anywhere in the world you can imagine -- all while keeping you, you," the Chocolate Factory said. "Once you're done, you can even upload your edited image back into Gemini to turn your new photo into a fun video." In other tests, Piltch took a picture of his daughter and asked that two statues next to her be removed. The statues disappeared with the shadow from a nearby tree extending to where they were before.
He then asked that his daughter appear in front of the pyramids and Gemini obliged, even changing her posture so she was standing straighter. One major improvement users will notice right off the bat is how fast it is. In tests, images were done in seconds, with all the work taking place in the cloud. (Admittedly, we were using an older Pixel.) We even made the bananas cover art for this piece using Gemini. In a move that should have Adobe worried, Gemini shows real skill in letting image editors use AI to replace in seconds what might have taken a graphic designer hours, or at least minutes. Where you used to have to Photoshop someone into a picture, now you can just ask the tool to do it for you. Google has included a SynthID watermark to allow someone to identify the AI-generated images, which should be a big help in cutting down on fake pictures for spam, incitement, or for other purposes. That's not going to stop a wave of AI-generated spam shortly to be hitting your inboxes, but it will at least provide some safety checks. There are still some guardrails in Gemini 2.5 Flash Image, although they are somewhat limited. Generating pictures of Hitler, for example, is difficult but not impossible. If you want a celebrity such as Taylor Swift or Donald Trump, you won't have any problems, though. It does at least have safeguards against generating images of a pornographic bent, thankfully. Overall, while some images came out less than perfect, it's still a worthy rival to other LLM image designers from OpenAI or xAI's Grok. Google is rolling out the new system for Gemini API, Google AI Studio for developers, and Vertex AI, at a cost of $30 per one million output tokens, with each image being 1290 output tokens ($0.039 per image). This is very much an interim build, Google said, with more improvements coming down the line. It's also partnered with OpenRouter.ai and fal.ai to make the technology more accessible. 
It'll now be up to other AI companies to match Google's very compelling new feature set. ®
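The per-image price quoted above follows directly from the token rate, and the arithmetic can be checked with a minimal sketch. The rate ($30 per one million output tokens) and the 1290 output tokens per image are the figures reported in the article; the function names `cost_per_image` and `batch_cost` are hypothetical, chosen only for illustration, and actual billing may differ from this simple multiplication.

```python
from decimal import Decimal, ROUND_HALF_UP

# Figures as reported in the article; treat them as assumptions, not a price list.
PRICE_PER_MILLION_OUTPUT_TOKENS_USD = Decimal("30")
OUTPUT_TOKENS_PER_IMAGE = 1290


def cost_per_image(
    tokens_per_image: int = OUTPUT_TOKENS_PER_IMAGE,
    price_per_million: Decimal = PRICE_PER_MILLION_OUTPUT_TOKENS_USD,
) -> Decimal:
    """Return the cost in USD of one generated image (hypothetical helper)."""
    return Decimal(tokens_per_image) * price_per_million / Decimal(1_000_000)


def batch_cost(num_images: int) -> Decimal:
    """Return the cost in USD of generating num_images images (hypothetical helper)."""
    return cost_per_image() * num_images


if __name__ == "__main__":
    per_image = cost_per_image()
    # Rounded to three decimal places, this matches the quoted $0.039 per image.
    rounded = per_image.quantize(Decimal("0.001"), rounding=ROUND_HALF_UP)
    print(f"Per image: ${per_image} (about ${rounded})")
    print(f"1,000 images: ${batch_cost(1000)}")
```

`Decimal` is used rather than floating point so that small currency amounts are not affected by binary rounding.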

[8]

Mysterious 'Nano-Banana' Project Revealed to Be Google's Latest Image Editor

The tech giant came forward as the creator of the viral nano-banana AI image model. Google just upgraded its AI image model, and it actually looks to be a pretty significant step up. The company rolled out Gemini 2.5 Flash Image today, a major refresh that promises smarter and more flexible image generation. The upgraded model allows users to issue natural language prompts to not only generate images but also merge existing photos and make more precise edits without creating weird distortions. It also taps into Gemini's "world knowledge" to better understand what it's generating. This upgrade comes as Google tries to close the gap with the industry leader, OpenAI. In the past, image generation has been a major driver for AI. ChatGPT usage skyrocketed in March when the company launched its GPT-4o native image generator. The viral Studio Ghibli memes generated by the model left the company's GPUs "melting," according to OpenAI CEO Sam Altman. ChatGPT currently has over 700 million weekly users. By comparison, Google CEO Sundar Pichai revealed on the company's July earnings call that Gemini had 450 million monthly users, still trailing behind ChatGPT. With its latest update, Google says it's solved one of AI's biggest headaches. Until now, keeping characters or objects consistent across multiple edits has been a major challenge for AI image generators. "You can now place the same character into different environments, showcase a single product from multiple angles in new settings, or generate consistent brand assets, all while preserving the subject," the company wrote in a blog post. Google says users can now make very specific tweaks with just a prompt. For example, users can blur the background of an image, remove a stain from a T-shirt, change a subject's pose, or even add color to a black-and-white photo.
Even before its official launch, the new model was turning heads on the crowdsourced evaluation platform LMArena, where it appeared anonymously under the name "nano-banana." One X user shared how they used nano-banana to change Altman's shirt in a photo. The result was surprisingly good. Today, Google stepped forward and claimed ownership of the model, revealing that nano-banana was in fact Gemini 2.5 Flash Image. In addition to being available on the Gemini app, the new model is now accessible to developers through the Gemini API, Google AI Studio, and Vertex AI. Google has already built several template apps that make use of the new model on Google AI Studio, the company's coding AI assistant, and said users can vibe code on top of them. The company also said some developers have already experimented with the app to see how it would be useful in real-world scenarios, like creating real estate listing cards, employee uniform badges, and product mockups.

[9]

Google aims to be top banana in AI image editing

Why it matters: Nano Banana is the latest in a series of image-editing tools that have captured the internet's public eye, impressing users with its ability not only to generate new images but to refine them -- a skill that has proven elusive to AI makers.

Driving the news: Google said the model that had been making waves under its code name will be available starting Tuesday to free and paid Gemini users on the web and in its mobile apps.
* For now, free users can create up to 100 image edits per day, while paid users can make ten times as many edits with the tool, officially dubbed Gemini 2.5 Flash Image.
* Under the Nano Banana name, the model had already been outperforming other models on the LMArena charts for image editing and generating significant buzz on social media.

How it works: The new tool lets people create a photo from text or based on an existing image.
* Google provided a reference photo of a young woman which the tool then transformed by putting her in matador garb.

Google says the model is also better than prior models at multi-step edits.
* In one example, Google shows a variety of stages of a room renovation being imagined, first with a fresh coat of paint and later with the addition of various pieces of furniture.

The tool can also combine two images.
* Google showed an example of one image getting transformed into a pattern that was then applied onto boots in another photo.
* The model's creations are labeled as AI-generated using Google's SynthID watermarking system.

Between the lines: While AI has proved helpful at generating images, it has tended to falter in the editing stage, particularly when asked to make changes to photos of real people.
* Google says its new tool is better than its and others' previous models at making sure the subject of a photo doesn't start to look like someone else, especially as edits pile up.
* "We know that when editing pictures of yourself or people you know well, subtle flaws matter -- a depiction that's 'close but not quite the same' doesn't feel right," Google said in a blog post.
* "That's why our latest update is designed to make photos of your friends, family and even your pets look consistently like themselves, whether you're trying out a '60s beehive haircut or putting a tutu on your chihuahua."

Flashback: OpenAI saw a surge of ChatGPT downloads and usage after it released a highly capable image generator in March.

Yes, but: The more Google and its rivals refine their AI tools' ability to combine images or display people in new settings, the greater the threat of users creating deepfakes and misinformation.

[10]

Gemini expands image editing for enterprises: Consistency, collaboration, and control at scale

Google's highly speculated new image model, which many beta users know as nanobanana, has finally been released as Gemini 2.5 Flash Image and will be integrated into the Gemini app. The new model would give enterprises more choice for creative projects and enable them to change the look of images they need quickly. The model, built on top of Gemini 2.5 Flash, adds more capabilities to the native image editing on the Gemini app. Gemini 2.5 Flash Image maintains character likenesses between different images and has more consistency when editing pictures. If a user uploads a photo of their pet and then asks the model to change the background or add a hat to their dog, Gemini 2.5 Flash Image will do that without altering the subject of the picture. "We know that when editing pictures of yourself or people you know well, subtle flaws matter, a depiction that's 'close but not quite the same' doesn't feel right," Google said in a blog post written by Gemini Apps multimodal generation lead David Sharon and Google DeepMind Gemini image product lead Nicole Brichtova. "That's why our latest update is designed to make photos of your friends, family and even your pets look consistently like themselves." One complaint enterprises and some individual users had is that when prompting edits on AI-generated images, slight tweaks alter the photo too much. For example, someone may instruct the model to move a person's position in the picture, and while the model does what it's told, the person's face is altered slightly. All images generated on Gemini will include Google's SynthID watermark. The model is available for all paid and free users of the Gemini app. Speculation that Google plans to release a new image model ran rampant on social media platforms.
Users on LMArena saw a mysterious new model called nanobanana that followed "complex, multistep instructions with impressive accuracy," as Andreessen Horowitz partner Justine Moore put it in a post. People soon noticed that the nanobanana model seemed to come from Google before several early testers confirmed it. At the time, though, Google did not confirm what it planned to do with the model on LMArena. Up until this week, speculation on when the model would come out continued, which is prophetic in a way. Much of the excitement comes as model providers fight to offer more capable and realistic images and edits, showing how powerful multimodal models have become. Bringing image editing features directly into the chat platform would allow enterprises to fix images or graphs without moving windows. Users can upload a photo to Gemini, then tell the model what changes they want. Once they are satisfied, the new pictures can be reuploaded to Gemini and made into a video. Other than adding a costume or a location change, Gemini 2.5 Flash Image can blend different photos, offer multi-turn editing, and mix the style of one picture into another.

[11]

Google Reveals Mystery AI Image Model That Can Smartly Edit Photos

As expected, an AI image model known as 'Nano Banana' that was making a big impression last week is in fact made by Google. The tech giant announced it last night along with its actual name 'Gemini 2.5 Flash Image.' "This update enables you to blend multiple images into a single image, maintain character consistency for rich storytelling, make targeted transformations using natural language, and use Gemini's world knowledge to generate and edit images," Google writes. The company has set up a web tool for editing photos, which I used to remove the cup from the dog photo. Business Insider declares that today is a "bad day" for Adobe. The website says that it has been testing Gemini 2.5 Flash Image for "several days" and found it to be deft at editing photos. BI notes that its ability to complete tasks like changing the color of an item of clothing or adding details such as a person's glasses outperforms comparable apps. Adobe announced that Google's new AI model will be integrated into Adobe Firefly and Adobe Express, emphasizing that its ecosystem remains the premier hub for creators who want seamless movement and collaboration across different apps.

[12]

Google upgrades Gemini image editing with 'nano banana' model

Gemini is better at editing your images with a new model update from Google DeepMind. The model, which rose to the top of the LMArena leaderboard under the alias "nano banana," is actually called Gemini 2.5 Flash Image. The Google DeepMind team says this model has been trained to make subjects more consistent across various edits of AI-generated images. This has been an issue for AI image models, given their unpredictable nature. The ability to upload and natively edit photos in Gemini has been around since April of this year. Instead of learning the technical ins and outs of photo editing software like Photoshop, users can upload an image to Gemini and describe the changes they want in simple terms, no jargon required. With Gemini's updated model, Google says you can do things like change a subject's outfit and location, while keeping their likeness the same. You can also upload multiple photos and have the subjects appear together in the same photo, or add and alter specific details in an uploaded image to, say, see what a room looks like with a different color of paint or different furniture. Here's Gemini's attempt at editing my dog into the downward dog pose and relocating her to a yoga studio. Her likeness is the same, and it successfully edited the image to make her eyes open, but her body isn't arched in the way it should be. (I would know, I've seen this playful pose from her many times.) As Google DeepMind said in its announcement, the model might not always get it right. There might still be inaccuracies with fine details, text in the image, and inconsistencies. With my experiment, my dog's fur looks overly smooth, but her overall coloring, size, and shape stay the same. All images have a visible watermark and an invisible watermark called SynthID to mitigate any confusion over whether they're real or AI-generated. This update is now live, so you can try it out for yourself in the Gemini app.

[13]

I spent the weekend comparing Gemini's new Nano Banana image tool to ChatGPT - and there's one clear winner

At least as far as I'm concerned, ChatGPT has been ruling the roost for image generation in AI ever since its native image generation capabilities were added in March this year, sparking all sorts of viral image crazes, like the Studio Ghibli one, for example. Since then Google has taken a bit of a step back, but has been slowly working on its own rival, called Nano Banana. Last week it added its new image editor to Gemini and made it available to everybody, and now I think it has become my favourite image editor, even over ChatGPT. Here's why. Apparently the name Nano Banana was the anonymous codename for Google's Gemini 2.5 Flash Image model during its testing phase on the LMArena leaderboard, and over time it stuck. You can access Nano Banana in three ways. You can go through the Gemini app, selecting 'Create images' from the drop-down Tools menu in the prompt bar (you'll know it's now using Nano Banana because there's a new banana icon next to 'Create images'), you can go to Google's AI Studio and use it there, or you can head to its own dedicated website. I've been playing with Nano Banana all weekend and can report that it's very good in three main areas: character consistency, realism and image-to-image fusion. First of all, let's look at character consistency. If I ask Gemini 2.5 Flash to "Draw a cat in a historically accurate Roman Centurion helmet" it produces this magnificent-looking kitty: It's also a nice bright, clear image. If I then say: "Now make that cat appear in the coliseum", it does it, and the cat and the helmet look the same: With ChatGPT, I say: "Draw a cat in a historically accurate Roman Centurion helmet" and it produces this: It's got that characteristic, darker feel that ChatGPT seems to default to, but it is indeed a cat in a Roman helmet, albeit a wonky one. When I ask it to, "Now make that cat appear in the coliseum", it produces this: If you look closely you'll see the helmet is different now.
It's little consistency details like this that Nano Banana seems better at. If I upload a picture of myself (I used the one down below in my profile), then ask Gemini Flash 2.5 to put me on a mountain, I get a picture that actually looks like me, on a mountain: Whereas ChatGPT makes an image that looks like an AI approximation of me (it doesn't help that it's clearly added a few pounds!): Not only am I fatter, but the image looks less real. Nano Banana can combine images together in a realistic way. For example, I uploaded the image of myself and some fence panels I put up at the weekend and asked Gemini to combine them together, while keeping the background image the same. In Gemini's version, the background picture hasn't changed at all, it's just added me into it: In contrast, even though I asked ChatGPT to keep the background the same, it reproduced a version of the background that was similar to the original, but with differences I could pick out. Not to mention that its version of me looked obviously made by AI. You could say that the ChatGPT image had better framing as a picture, but it didn't look as realistic. For me Gemini is now more useful than ChatGPT for creating images that look real. And I think that's what most people want AI images to be. All this is without mentioning one of the best things about Nano Banana in Gemini, which is that it's fast. It normally takes Gemini up to ten seconds to generate an image, whereas it can take ChatGPT up to a minute to make an image. That's a lot of waiting around. Of course, dedicated image creation tools like Midjourney will still be king of AI image generation for professionals, but you need to pay to use those. If you want something that's very quick and gets the job done in the most realistic way possible, then for me, Gemini's Nano Banana is the clear winner.

[14]

Google Boosts Gemini AI Image Capabilities in Latest Salvo Against ChatGPT - Decrypt

Google has expanded access through OpenRouter and fal.ai, widening distribution to coders worldwide. Google launched Gemini 2.5 Flash Image on Tuesday, delivering a new AI model that generates and edits images with more precision and character consistency than previous tools -- attempting to close the gap with OpenAI's ChatGPT. The tech giant's push to integrate advanced image editing into Gemini reflects a broader push among AI platforms to include image generation as a must-have feature. The new tool, now available across Gemini apps and platforms, lets users edit visuals using natural language -- handling complex tasks like pose changes or multi-image fusion without distorting faces or scenes. In a blog post, Google said the model allows users to "place the same character into different environments, [and] showcase a single product from multiple angles... all while preserving the subject." The model first appeared under the pseudonym "nano-banana" on crowdsourced testing site LMArena, where it drew attention for its seamless editing. Google confirmed Tuesday it was behind the tool. Google said the system can fuse multiple images, maintain character consistency for storytelling or branding, and integrate "world knowledge" to interpret diagrams or combine reference materials -- all within a single prompt. The model costs $30 per million output tokens -- about four cents per image -- on Google Cloud. It's also being distributed via OpenRouter and fal.ai. OpenAI introduced the GPT-4o model in May 2024 and added image generation in March 2025, which helped push ChatGPT's usage above 700 million weekly active users. Google reported 400 million monthly active Gemini users in August 2025, which would indicate weekly usage that considerably trails OpenAI. Google said all outputs will include an invisible SynthID watermark and metadata tag to mark them as AI-generated to address concerns around misuse and authenticity.

[15]

Google updates Gemini with powerful new AI image model with photo editing capabilities - SiliconANGLE

Google LLC said today it is updating its Gemini app and chatbot with a powerful new artificial intelligence image model that will give users fine-grained photo editing capabilities. The new model, named Gemini 2.5 Flash Image, debuts today on the Gemini app so that users can start using it to edit their photos with natural language. The company claims that the new model provides state-of-the-art image generation and editing for photos, keeping the original composition, objects and people intact while adding, changing or removing whatever the user wants. Google DeepMind, the company's AI research arm, tested the new model under the mysteriously silly name "Nano Banana" on LMArena. This public site crowdsources anonymous feedback on AI model quality. At first, it was unknown what this new model might be, especially given its weird name, but users quickly sussed out that it must be from Google. The model outperformed every other photo-editing model on the site in early preview, gaining it the title of "top-rated editing model in the world." Although it was not without its flaws, the model proved to be superior for consistency, quality and following instructions. Now, DeepMind has revealed that the model is actually Gemini 2.5 Flash Image and powers the new Gemini image editing experience. "We're really pushing visual quality forward, as well as the model's ability to follow instructions," said Nicole Brichtova, a product lead on visual generation models at Google DeepMind, in an interview with TechCrunch. One of the problems for image editing and AI models has always been that models tend to make subtle or large modifications to images, even when users ask them to make small changes. For example, a user might take a photograph of themselves and ask a model to add glasses. The model might add glasses to their face, but it could dramatically change their features, adjust their hairstyle or an object in the background might change from one thing to another.
To test out the new model, Google suggested that people try it out with a photo of themselves. They could use it to put themselves in a new outfit or change their location. The model is also capable of blending subjects from two different photos into a brand-new scene. For example, taking a picture of your cat and you and having it put you together on the couch. According to Google, this new model allows users to make multi-turn edits. Just take a photo and ask for one change and then follow up with another. This allows for iterative modifications to photos or images that feel natural. Since prompts can make specific requests about locations or subjects, the model will only change them and nothing else. Developers can also get access to this capability through Google's AI platforms and tools via Gemini API, Google AI Studio and Vertex AI. In addition to the news of the release, Adobe Inc. announced that it will add the new model to its AI-powered Firefly app and Adobe Express, making it easier for users to use the model to modify their photos and create stylized graphics with a consistent look and feel.

[16]

Google Unveils 'Nano-Banana' Image Model With Editing and Fusion Features | AIM

The model is priced at $30 per 1 million output tokens, with each image costing 1,290 tokens, or $0.039 per image. Google has announced the launch of Gemini 2.5 Flash Image, aka nano-banana, its latest image generation and editing model, now available through the Gemini API, Google AI Studio, and Vertex AI for enterprise. The company said the model allows users to blend multiple images, maintain character consistency across edits, perform targeted transformations with natural language prompts, and leverage Gemini's built-in world knowledge. "When we first launched native image generation in Gemini 2.0 Flash earlier this year, you told us you loved its low latency, cost-effectiveness, and ease of use. But you also gave us feedback that you needed higher-quality images and more powerful creative control," Google said in its announcement.
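
The per-image figure follows directly from the token price. A minimal Python sketch of that arithmetic, using only the two numbers quoted above (the constant and function names are illustrative and not part of any Google API):

```python
# Figures quoted in the article; everything else here is illustrative.
PRICE_PER_MILLION_OUTPUT_TOKENS_USD = 30.00
OUTPUT_TOKENS_PER_IMAGE = 1290


def cost_per_image(
    price_per_million: float = PRICE_PER_MILLION_OUTPUT_TOKENS_USD,
    tokens_per_image: int = OUTPUT_TOKENS_PER_IMAGE,
) -> float:
    """Return the output-token cost of one generated image in USD."""
    return price_per_million * tokens_per_image / 1_000_000


def batch_cost(n_images: int) -> float:
    """Return the output-token cost of n_images generated images in USD."""
    return n_images * cost_per_image()


if __name__ == "__main__":
    print(f"Per image: ${cost_per_image():.4f}")
    print(f"1,000 images: ${batch_cost(1000):.2f}")
```

The unrounded result is $0.0387 per image, which the announcement rounds to $0.039. The sketch covers output tokens only; any input-side charges are outside what the article states.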

[17]

Why Google's New AI Image Generator Could Give OpenAI a Run for Its Money

Google just dropped a major update for its AI image generation tech, enabling anyone to generate images with more accurate outcomes. In a blog post, Google revealed Gemini 2.5 Flash Image (also called nano-banana), its latest and greatest AI model for generating and editing images. Google says the new model gives users the ability to blend multiple images into a single image, maintain character consistency across multiple generations, and make more granular tweaks to specific parts of an image. One of the model's new features is the ability to maintain character consistency, meaning that if you create a specific look for an AI-generated character, the character will maintain that look each time you generate a new image featuring them. "You can now place the same character into different environments," Google wrote, "showcase a single product from multiple angles in new settings, or generate consistent brand assets, all while preserving the subject." Gemini 2.5 Flash Image can also make more granular edits to images, like blurring a background or changing the color of an item of clothing.

[18]

Gemini's New AI Image Model Lets You Edit Your Outfit and Background

The AI model is said to be known for its character consistency.

Google introduced a new artificial intelligence (AI) image model on Tuesday, calling it the company's best image generation and editing model to date. Dubbed Gemini 2.5 Flash Image, the model improves on both the generation speed and element-based editing. Interestingly, the model went viral on the crowdsourced AI model ranking platform LMArena weeks before its official announcement. While in stealth mode, the model was named Nano Banana, and it has received praise from users for its high-quality image editing and consistent character. Notably, the Gemini 2.5 Flash Image is now available within the Gemini app.

Google's New Image Model Offers Improved Editing Capabilities

In a blog post, the Mountain View-based tech giant admitted that the Nano Banana AI model, which recently ranked first on LMArena, was in fact Google's Gemini 2.5 Flash Image. The company is not the first to test its model in stealth mode, as OpenAI was recently also spotted testing its GPT-4.1 model in a similar manner. While the company claims state-of-the-art (SOTA) speed and quality in image generation, the new model's key ability lies in image editing. Google says the model can now maintain a higher character consistency while editing elements within the image. One issue users have faced while editing non-synthetic images using Gemini is that the person in the frame sometimes gets distorted or altered to appear nothing like the input. Gadgets 360 staff members tried out the new model in the Gemini app and found that it is now able to change things like the colour of the t-shirt or add a hat to a person without affecting the person in the frame. The Gemini 2.5 Flash Image also gets a new image blending feature. With this, users can now take two different images and ask the AI to blend them into a single image. The success rate is mixed, and it largely depends on both the input images and the prompt.
However, it is a fun tool to try out. Finally, the new image model also allows users to make multi-turn edits. This means that if users are not satisfied with the first iteration, they can continue requesting more changes in subsequent prompts, all while keeping the base character consistent. While end consumers can access the model in the Gemini app, developers can access it via the Gemini application programming interface (API), Google AI Studio, and Vertex AI (for enterprises). It is priced at $30 (roughly Rs. 2,600) per million output tokens, with each image consuming 1290 tokens. This means each image generation will cost $0.039 (roughly Rs. 3.5).

[19]

Google unveils Gemini 2.5 Flash Image upgrade - The Economic Times

Google on Tuesday unveiled Gemini 2.5 Flash Image, also called Nano-Banana, an image generation and editing model available via the Gemini API and Google AI Studio for developers and Vertex AI for enterprise.

Google has introduced an upgrade to its Gemini chatbot with a new image generation and editing model, giving users more control over modifying photos. The update, named Gemini 2.5 Flash Image, became available Tuesday across the Gemini app and to developers through the Gemini API, Google AI Studio, and Vertex AI, CEO Sundar Pichai said in a post on X. The news comes after Google launched Gemini 2.0 Flash, its most advanced suite of AI models, on February 5. The company said consumer feedback has been taken into consideration to provide higher-quality images and more powerful creative control to users. Gemini 2.5 Flash Image is priced at $30.00 per 1 million output tokens, with each image being 1290 output tokens ($0.039 per image).

[20]

How to turn your photos into fun scenes with Google Gemini's Nano Banana AI edit

Photoshop now has a new competitor as Google DeepMind launched a new image editing tool in the Gemini app called Nano-Banana or Gemini 2.5 Flash Image. According to Google, this innovative editing model is designed to be more advanced and fun. It gives users greater creative freedom, allowing them to modify their photos while preserving the original appearance. As reports from OpenHunts suggest, Nano Banana is available to both free and paid users via the Gemini app. Free users can create up to 100 edits daily, while paid users can enjoy up to 1,000. Developers can leverage scalable integration through the Gemini API, AI Studio, and Vertex AI. Nano Banana in Gemini is Google DeepMind's latest image editing model integrated into the Gemini app. You can use this to edit photos with unprecedented control while preserving the likeness of people, pets, or objects across edits. Both free and paid users can try it. All edited images carry visible and digital watermarks to indicate AI generation. Using this tool is pretty simple in Gemini. For users who love experimenting with their photos, the app will support turning still images into short videos as soon as the editing is complete.

[21]

The Mysterious Nano-Banana Image Model is From Google and It's Rolling Out in Gemini

Google has already integrated it into Gemini, letting users edit and transform images with text prompts with impeccable accuracy over multiple generations. For weeks, the mysterious nano-banana image model has been earning praise for generating impeccably accurate images. It could realistically blend images, edit parts of images while maintaining incredible coherence, and transform photos with simple natural language prompts. As it turns out, the nano-banana image model is actually Google's Gemini 2.5 Flash Image AI model. Yes, you read that right. Google was the first company to showcase Gemini's native image generation in March and it was powered by the Gemini 2.0 Flash Image model. Now, the upgraded native image generation feature is powered by Gemini 2.5 Flash Image. Unlike diffusion models, it's natively multimodal and maintains incredible accuracy over multiple generations. The new Gemini 2.5 Flash Image (nano-banana) is currently the top-rated model on LMArena for image editing. It has scored a whopping 1,362 ELO points, much higher than the second best, Flux.1 Kontext [max], which has received 1,191 points. It goes on to show that Google's Gemini 2.5 Flash Image model is top-notch and can edit images conversationally while preserving the scene. What is interesting is that Google is already integrating the new image model in Gemini. Starting today, you can upload and edit images in Gemini and it will use the new Gemini 2.5 Flash Image (nano-banana) model. You can use this feature to combine multiple images, change specific parts of the image using text prompts, reimagine yourself in different avatars, and more. You can even try a new costume, change the location, mix up images, and perform multi-turn editing over and over again while preserving the overall scene. Note that all images generated in the Gemini app will have a visible 'ai' watermark and an invisible SynthID watermark.
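
To give a sense of what a 171-point gap means, the standard Elo expected-score formula can be applied to the two ratings quoted above. This is a rough sketch: it assumes LMArena scores behave like conventional Elo ratings on a 400-point scale, which the article does not confirm, and the function and constant names are illustrative.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo formula."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings quoted in the article.
GEMINI_25_FLASH_IMAGE = 1362
FLUX_1_KONTEXT_MAX = 1191

p = elo_expected_score(GEMINI_25_FLASH_IMAGE, FLUX_1_KONTEXT_MAX)
print(f"Expected head-to-head preference: {p:.1%}")
```

Under that assumption, the higher-rated model would be expected to win roughly 73% of head-to-head votes against the runner-up.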

[22]

Google Gemini 2.5 Flash is Nano Banana AI Image Editor

What if editing an image was no longer about tedious adjustments and trial-and-error tweaks, but instead felt like wielding a magic wand? Enter Google Gemini 2.5 Flash, a new AI tool that's redefining how we create and manipulate visuals. Nicknamed "Nano Banana" for its compact yet powerful capabilities, this innovation doesn't just enhance images, it transforms them. From seamlessly removing objects to generating entire 3D scenes with lifelike precision, Gemini 2.5 Flash offers a level of control and creativity that feels almost futuristic. Whether you're a seasoned designer or a curious beginner, this tool promises to transform your creative process, blurring the line between imagination and reality. In this report, Matthew Berman explores how Google's Gemini 2.5 Flash is reshaping the world of AI-powered image editing, offering tools that range from advanced style transfer to material transformation. You'll discover how it enables everything from restoring damaged photographs to crafting surreal, imaginative scenes. But this isn't just about flashy features, it's about solving real-world challenges, like maintaining brand consistency or visualizing complex 3D designs. What makes this tool truly remarkable isn't just its versatility, but its ability to balance technical precision with artistic freedom. Could this be the most comprehensive image editor yet? Let's unpack its capabilities and find out. At the core of Gemini 2.5 Flash lies its ability to edit and transform images with remarkable precision. You can seamlessly add or remove elements, such as objects or individuals, while maintaining the natural integrity of the scene. The model's ability to flip objects and predict unseen perspectives ensures dynamic and realistic visual adjustments. Furthermore, its advanced style transfer technology allows you to apply artistic or thematic styles to images, ensuring a cohesive aesthetic across edits without compromising quality.
This feature is particularly useful for maintaining brand consistency or creating visually striking content. For projects requiring 3D visualization, Gemini 2.5 Flash delivers exceptional results. It can generate multiple angles of objects or characters, allowing the creation of detailed character sheets with varied poses and perspectives. This capability is invaluable for professionals such as animators, game developers, and product designers who require accurate and realistic 3D renderings. By simulating depth, lighting, and texture with precision, the tool simplifies complex workflows and enhances creative possibilities. Gemini 2.5 Flash goes beyond editing by offering the ability to generate lifelike scenes from scratch. With its advanced understanding of physics, the model accurately simulates lighting, reflections, and material properties, resulting in highly realistic visuals. Whether you are designing surreal imagery, such as animals in elaborate costumes, or creating thematic visuals for marketing campaigns, this tool provides a robust platform for creative exploration. Its ability to balance realism with imagination makes it a valuable asset for artists and designers alike. For those working with old or damaged photographs, Gemini 2.5 Flash offers powerful restoration capabilities. It can repair imperfections, restore missing details, and add realistic colors to black-and-white images, effectively breathing new life into historical or personal photographs. Additionally, the model's ability to expand images by zooming out ensures seamless integration of new areas while maintaining visual coherence. This feature is particularly beneficial for archivists, historians, and anyone looking to preserve or enhance photographic memories. One of the standout features of Gemini 2.5 Flash is its ability to alter materials within an image.
For example, you can transform an object's surface from glass to wood or from ice to metal while preserving the surrounding elements. This capability extends to adapting images to specific artistic or thematic styles, making it a versatile tool for creative professionals. Whether you are designing product prototypes or creating unique visual effects, the ability to manipulate materials and styles opens up new possibilities for innovation. Gemini 2.5 Flash supports the creation of chronological image sequences, allowing you to depict gradual changes over time. For instance, you can illustrate the decay of an object, the growth of a plant, or the transformation of a landscape. This feature ensures logical progression across multi-frame edits, making it an ideal tool for storytelling, animation, and educational content. By providing a clear narrative through visuals, the tool enhances engagement and understanding for audiences. The advanced capabilities of Gemini 2.5 Flash extend to specialized applications, such as handling complex reflections, modeling human anatomy, and counting objects within a scene. These features make it an invaluable resource for fields like medical imaging, architectural visualization, and scientific research. Additionally, its creative tools, such as meme creation and thumbnail generation, cater to content creators seeking innovative and efficient solutions. The model's adaptability ensures it meets the needs of both technical and artistic projects. Ranked at the top of the LMArena image editing leaderboard, Gemini 2.5 Flash outperforms its predecessors with significant improvements in performance metrics, including ELO scores. Accessible through Google AI Studio and Gemini platforms, the model offers adjustable settings that allow you to fine-tune creativity and safety parameters to suit your specific requirements.
Its user-friendly interface ensures that professionals and beginners alike can use its full potential without a steep learning curve. Gemini 2.5 Flash stands out as a versatile tool for professionals across various industries. Its 3D modeling and product design tools streamline workflows for engineers and designers, while its creative content generation capabilities empower marketers and artists. By combining advanced features with user-friendly accessibility, Gemini 2.5 Flash ensures it meets the diverse demands of modern visual content creation. Whether you are restoring photographs, designing products, or generating imaginative visuals, this tool equips you with the precision and creativity needed to bring your ideas to life.

[23]

New Gemini 2.5 Flash Image Model : Create Stunning Visuals in Seconds

What if creating stunning, professional-grade visuals was as simple as having a conversation? Enter the Gemini 2.5 Flash Image model, affectionately nicknamed Nano Banana, a new leap in multimodal AI that's redefining how we interact with images. Imagine describing a bustling cityscape bathed in the golden hues of sunset, and watching as an AI not only generates that scene but also allows you to refine it with effortless, conversational adjustments. Bold claim? Perhaps. But with its ability to combine advanced reasoning and intuitive editing, Gemini 2.5 is proving that the future of creativity is here, and it's astonishingly accessible. Sam Witteveen takes you through how Gemini 2.5 is reshaping industries and empowering individuals, from marketers crafting tailored campaigns to archivists breathing new life into historical photographs. You'll discover its versatile features, like precise image restoration, perspective transformations, and even the ethically mindful use of celebrity likenesses. But this isn't just about technology; it's about how this tool bridges the gap between imagination and execution, unlocking creative potential in ways we've only dreamed of. As we delve deeper, you might just find yourself questioning not only what's possible but what's next in the ever-evolving world of AI-driven innovation. Gemini 2.5 image creation and manipulation stands out due to its exceptional multimodal understanding, allowing it to interpret and respond to prompts with high accuracy. Unlike traditional models, it integrates advanced reasoning to create contextually relevant and visually compelling images. For instance, if prompted with "a bustling cityscape at sunset," the model can generate an image that captures the essence of the description. Furthermore, its conversational input capabilities enable users to make seamless edits, making sure that modifications align with the original image's intent and quality. 
This model is equipped with a variety of features that redefine how users approach image generation and editing. The versatility of Gemini 2.5 makes it a powerful tool across a wide range of industries, offering practical solutions to complex challenges. Gemini 2.5 is designed to integrate seamlessly into diverse workflows, offering accessibility and scalability through cloud-based platforms such as AI Studio and Google Cloud. This approach ensures that users can collaborate effectively across teams and manage projects of any size with ease. Whether you're working on a small-scale task or a large enterprise initiative, the model's cloud-based infrastructure simplifies deployment and enhances productivity. Additionally, its compatibility with various platforms ensures that users can access its features from virtually anywhere, making it a flexible and reliable tool for professionals and hobbyists alike. While Gemini 2.5 unlocks new creative possibilities, it also raises important ethical considerations. For example, the use of celebrity likenesses requires strict adherence to legal guidelines and respect for individual rights. Similarly, the potential for misuse in generating misleading or harmful content underscores the need for responsible usage. As you explore the model's capabilities, it is essential to prioritize ethical practices and remain mindful of the broader implications of AI-driven technologies. The Gemini 2.5 Flash Image model, or Nano Banana, represents a significant step forward in AI-driven image generation and editing. Its ability to combine advanced reasoning with multimodal understanding makes it a versatile and powerful tool for a wide range of applications.
Whether you're creating marketing materials, restoring historical photographs, or experimenting with creative projects, Gemini 2.5 enables you to bring your ideas to life with precision and efficiency. By using its innovative features and adhering to ethical practices, users can unlock the full potential of this new technology and redefine their approach to visual content creation.

[24]

Gemini 2.5 Flash image generation and editing model launched

Google unveiled Gemini 2.5 Flash Image (aka nano-banana), the company's latest image generation and editing model. The model is designed to excel at maintaining character consistency, adhering to visual templates, enabling targeted transformations, and making precise local edits using natural language. It tops LMArena's Image Edit Arena leaderboard, outperforming the FLUX.1 Kontext model in terms of voting and score. For wider availability, Google has integrated it into its Gemini app, allowing users to create their perfect picture. Demonstrating various use cases of the model, Google has listed certain capabilities for users to try out. The model is also available via the Gemini API and Google AI Studio for developers and Vertex AI for enterprise. Gemini 2.5 Flash Image is priced at USD 30.00 per 1 million output tokens, with each image being 1290 output tokens (USD 0.039 per image).

[25]

Google's Gemini App Introduces New AI-Powered Photo Editing Features

Google has upgraded its Gemini app with a suite of generative AI photo editing tools. The tech giant aims to improve creative possibilities for mobile users. The update introduces four major features for iPhone and Android phones. It promises to make advanced image editing faster and more intuitive. The standout 'Reimagine' tool allows users to turn photos into imaginative digital art. With a few taps, the AI can change outfits, swap backgrounds, or add playful elements, such as placing subjects in traditional attire or exotic locations, all within seconds. Gemini's new 'Blend' feature merges two or three images into one cohesive group photo. Meanwhile, multi-turn editing enables users to make step-by-step modifications to images, such as specifying furniture placement in a room, with AI seamlessly integrating the edits. The 'Mix Up Design' feature enables users to generate unique designs, such as creating a dress inspired by a butterfly's pattern. Every AI-generated or edited image carries both a visible watermark and Google's invisible SynthID watermark, ensuring transparency and authenticity. Gemini's new features mark the tech giant's continued push to integrate generative AI into flagship apps and gadgets. The update will roll out to compatible smartphones worldwide in the coming days, making advanced creative tools more accessible to everyday users.

[26]

Inside Google's Nano Banana: Gemini's new AI image editor

Why Google's Nano Banana is a leap for AI editing, but not for portraits

When Sundar Pichai dropped three banana emojis on X in late August, few guessed it was a teaser for Google's newest image-editing breakthrough. The post signaled the launch of Gemini 2.5 Flash Image, a powerful AI model for the Gemini app - quickly nicknamed "Nano Banana." The playful codename belies the seriousness of the update. Nano Banana supercharges Gemini's ability to edit images: it can swap backgrounds, blend multiple photos, transform styles, or even generate surreal compositions with remarkable coherence. Unlike earlier models, it excels at keeping objects, poses, and general likenesses intact across multiple edits. But there's one area where it stumbles - faces. Unlike Gemini's "Reimagine" feature, which is designed to preserve identity in subtle tweaks, Nano Banana often alters facial features more than users expect. That makes it a game-changing tool for creative editing, but a less dependable one for those hoping to maintain portrait accuracy. Nano Banana is built on Gemini's multimodal backbone, trained not just to render photorealistic images but also to interpret instructions with context. Ask it to put your dog in a pirate costume, blend your photo with a landscape, or turn a pencil sketch into a polished illustration, and it executes with striking stability. The model allows for three major workflows: text-to-image, image + text-to-image, and multi-image fusion. This means you can generate visuals from scratch, enhance an uploaded photo with text prompts, or merge several images into one cohesive output. Its strength lies in editing with continuity: clothing textures, lighting conditions, and object relationships tend to remain stable across transformations. But when it comes to human likeness, Nano Banana shows its limits.
Multiple edits often lead to subtle but noticeable face changes: different jawlines, eye shapes, or even an entirely new expression. For casual or creative work, this can feel like variety. For someone hoping to see their own face preserved through outfit swaps or background changes, it's a drawback.

Nano Banana isn't just for casual users of the Gemini app. Developers can tap into it today via the Gemini API, Google AI Studio, and Vertex AI. The pricing comes in at about $30 per 1 million output tokens, which works out to roughly $0.039 per image.

To encourage experimentation, Google has rolled out template apps inside AI Studio. One demo focuses on multi-image fusion (drag, drop, and blend), while another explores stylistic consistency across sets of images. These tools lower the entry barrier for developers looking to build creative applications without writing heavy code.

As with its other generative models, Google has baked in guardrails. Every output carries both a visible watermark and an invisible SynthID digital watermark, signaling that it's AI-generated. The visible mark can be cropped or overlooked; the invisible one is harder to remove, though detection tools for it are still rolling out gradually.

These measures highlight the tension around Nano Banana's release. On one hand, it's a playful, powerful creative assistant. On the other, its ability to generate realistic edits - sometimes with altered identities - feeds into ongoing debates about authenticity, misinformation, and deepfake misuse.

For all the hype, Nano Banana isn't flawless. Beyond its face consistency issue, users have flagged a few quirks. Cropping into specific aspect ratios is oddly missing for such an advanced tool, leaving creators to rely on third-party editors. Resolution also lags compared to some rivals, with no built-in upscaling option yet.
Still, for stylized editing, be it swapping outfits, shifting lighting, or fusing multiple concepts, it shines. The model is better thought of as a creative transformer rather than a portrait preserver. If you want your selfie in different hairstyles with your face intact, Reimagine is still your friend. If you want to turn yourself into a neon-lit comic hero or merge three landscapes into one, Nano Banana is the better bet.

Nano Banana is a milestone in Google's march toward multimodal AI creativity. But it's not perfect. For all its polish in objects, styles, and compositions, its inability to lock down facial identity remains a noticeable flaw. For creators and developers, the message is clear: Nano Banana expands what's possible, but it's not yet the all-purpose image editor many hoped for. It's an exciting step, but one that leaves room for refinement.
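
The pricing figures quoted above can be sanity-checked with a little arithmetic. The sketch below uses only the two numbers from the article ($30 per 1 million output tokens and about $0.039 per image); the function names are hypothetical, and the implied token count is a derived estimate of roughly 1,300 tokens per image, not an official figure.

```python
# Back-of-the-envelope check of the prices quoted in the article.
PRICE_PER_MILLION_OUTPUT_TOKENS_USD = 30.0  # quoted in the article
PRICE_PER_IMAGE_USD = 0.039                 # quoted in the article (approximate)


def implied_tokens_per_image(
    price_per_image=PRICE_PER_IMAGE_USD,
    price_per_million=PRICE_PER_MILLION_OUTPUT_TOKENS_USD,
):
    """Output tokens one image would need to consume for both prices to agree."""
    return price_per_image / price_per_million * 1_000_000


def batch_cost_usd(num_images, price_per_image=PRICE_PER_IMAGE_USD):
    """Estimated cost of generating num_images images at the quoted rate."""
    return num_images * price_per_image


if __name__ == "__main__":
    print(round(implied_tokens_per_image()))  # implied tokens per image
    print(round(batch_cost_usd(1000), 2))     # estimated cost of 1,000 images
```

At the quoted rate, a batch of 1,000 generated images would come to about $39, before any input-token charges, which the article does not cover.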

Google DeepMind reveals that it is behind the top-rated 'nano banana' AI image editing model, now integrated into the Gemini app, offering advanced photo manipulation capabilities with improved consistency and detail preservation.

Google Unveils 'Nano Banana' AI Model for Advanced Image Editing

Google DeepMind has revealed that it is behind the mysterious 'nano banana' AI model that recently topped the LMArena leaderboard for image editing. This advanced model, officially named Gemini 2.5 Flash Image, is now being integrated into the Gemini app, offering users unprecedented capabilities in AI-powered photo manipulation [1].

Source: Ars Technica

Enhanced Consistency and Detail Preservation

The new model addresses a significant challenge in AI image editing: maintaining consistency across multiple edits. Unlike previous iterations, Gemini 2.5 Flash Image can "remember" details from the original image, ensuring that elements like faces and objects remain recognizable even after substantial modifications [2].

Nicole Brichtova, a product lead at Google DeepMind, emphasized, "We're really pushing visual quality forward, as well as the model's ability to follow instructions." This improvement allows users to make multiple edits to an image while preserving the essence of the original source material [2].

Advanced Features and Use Cases

Gemini's upgraded image editing capabilities include:

- Style and attire changes: Users can reimagine subjects as different characters or in various settings while maintaining their likeness [1].
- Image merging: The model can combine elements from multiple images to create new, cohesive compositions [3].
- Multi-turn edits: Users can progressively refine images through multiple prompts, such as adding furniture to a room or modifying background elements [4].
- Enhanced "world knowledge": The model can better understand and implement complex visual concepts and combinations [2].

Source: SiliconANGLE

Availability and Integration

The Gemini 2.5 Flash Image model is now available to all Gemini app users, both free and paying. Google is also rolling out the technology to developers through the Gemini API, AI Studio, and Vertex AI platforms [2]. This wide availability aims to make advanced AI image editing more accessible to a broader audience.
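
For developers, the three workflows mentioned earlier (text-to-image, image + text-to-image, and multi-image fusion) differ mainly in how many images accompany the prompt. The sketch below only builds a JSON request body and sends nothing. The field names (`contents`, `parts`, `text`, `inline_data`, `mime_type`, `data`) reflect the Gemini API's JSON request shape as generally documented, but treat them as assumptions and check them against the current API reference. The helpers `image_part`, `build_request`, and `workflow_name` are hypothetical names introduced for illustration.

```python
import base64
import json


def image_part(image_bytes, mime_type="image/png"):
    """Wrap raw image bytes as a base64-encoded inline part (assumed field names)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"inline_data": {"mime_type": mime_type, "data": encoded}}


def build_request(prompt, images=()):
    """Build a request body: one text part followed by zero or more image parts."""
    parts = [{"text": prompt}]
    parts.extend(image_part(img) for img in images)
    return {"contents": [{"parts": parts}]}


def workflow_name(num_images):
    """Map the number of attached images to the workflow names used in the article."""
    if num_images == 0:
        return "text-to-image"
    if num_images == 1:
        return "image + text-to-image"
    return "multi-image fusion"


if __name__ == "__main__":
    # Placeholder bytes stand in for real image files.
    woman, dog = b"fake-image-1", b"fake-image-2"
    body = build_request("Show the woman cuddling the dog", [woman, dog])
    print(workflow_name(2))
    print(json.dumps(body, indent=2))
```

Keeping the payload builder separate from any network call makes it straightforward to inspect a request before sending it through whichever client or endpoint a project actually uses.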

Safeguards and Ethical Considerations

As with previous AI image generation tools, Google has implemented safeguards to prevent misuse. All images created or edited with Gemini 2.5 Flash Image include a visible "AI" watermark and an invisible SynthID digital watermark that persists even after moderate modifications [1]. These measures address concerns about deepfakes and help users distinguish AI-generated content from authentic images.

Competitive Landscape

Source: ET

The release of this advanced image editing model comes at a crucial time in the AI industry. With OpenAI's ChatGPT boasting over 700 million weekly users and Gemini at 450 million monthly users, Google is likely hoping that these enhanced capabilities will help close the gap [2]. The competition in AI image generation has intensified, with companies like Meta partnering with Midjourney and startups like Black Forest Labs making significant strides [2][5].

As AI image editing becomes more sophisticated and accessible, it promises to revolutionize creative workflows for both professionals and casual users. However, the technology also raises important questions about the future of visual media authenticity and the potential for misuse, underscoring the need for ongoing ethical considerations and safeguards in AI development.

References

Summarized by Navi

[1]
