Google Developing 'Live for AI Mode': Integrating Real-Time Voice Conversations with Visual Search

3 Sources

[1]

Here's your first look at Live for Google AI Mode (APK teardown)

When ready, AI Mode Live should support camera and screen input.

When Google comes up with something it likes, you had better believe that you're going to start seeing it everywhere. That's true not just for Gemini itself, but also for many of the ways Google has enhanced its AI systems through new functionality and new means of accessing them. Right now, Google is making a big push for Search AI Mode, expanding access to its tests and even starting to introduce it to some non-testers. Now we're getting an early look at what could be Google's next big upgrade for AI Mode.

[2]

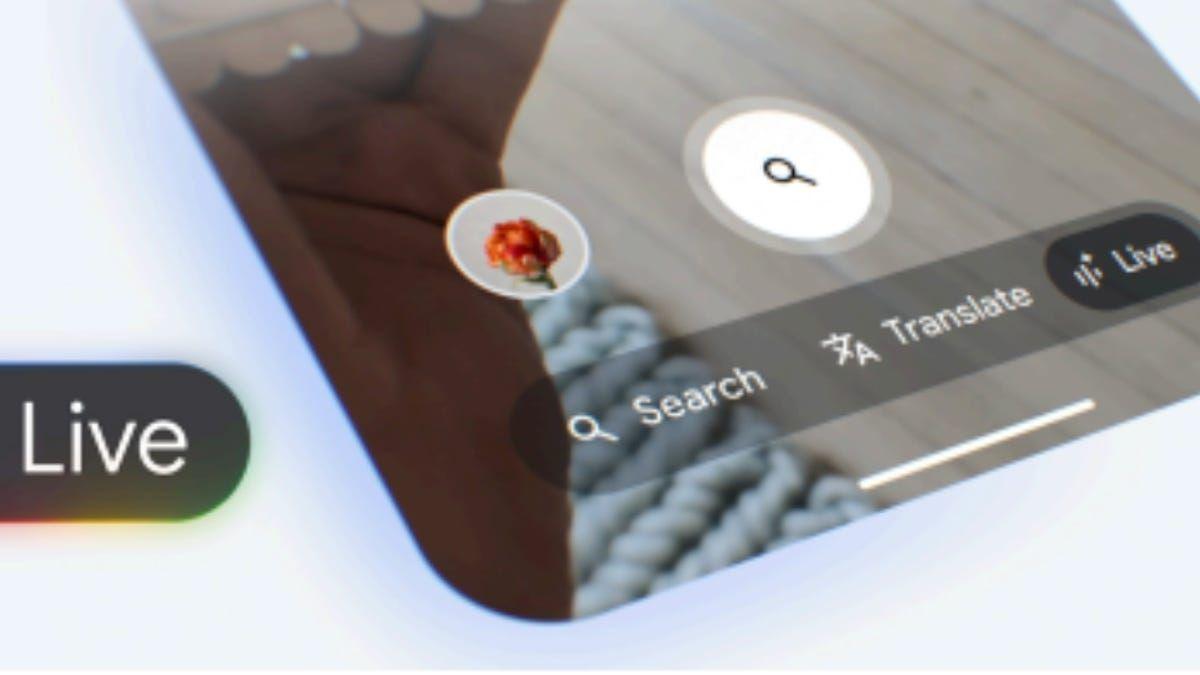

Gemini Live's camera search could be coming to Google's new AI Mode

Summary

- Google is developing a new "Live for AI Mode" feature, discovered in the latest Google app beta, which aims to bring real-time voice conversation capabilities, similar to Gemini Live's Project Astra, to AI Mode for enhanced search.

- This potential integration builds on AI Mode's existing visual identification features (powered by Google Lens) and the recent move to make both AI Mode and Gemini Live's screen/video sharing free for all users.

- Code strings suggest that "Live for AI Mode" will be accessible within Google Lens, offering voice search initiation, volume control, response interruption, screen/video sharing, and a transcript with web links, pointing toward a more interactive, conversational search experience.

Google's Project Astra, which is essentially the formal name given to Gemini Live's ability to gather on-screen and camera-view context, was previously locked behind the Gemini Advanced paywall. The feature became completely free to use last month and has since become widely available on all compatible Android devices.

In a similar vein, Google's new AI Mode was also initially paywalled before it lost its Google One AI Premium exclusivity, and subsequently its waitlist too (US only). Now, Google might be working on integrating the two newly freed-up features by bringing Live's Project Astra capabilities to AI Mode.

For reference, this isn't the first visual identification feature to make its way to AI Mode. In early April, AI Mode gained Google Lens' secret sauce, allowing users to gain a deeper understanding of their visual searches.
Now, with a 'Live for AI Mode' feature reportedly in development, as highlighted by the folks at 9to5Google, users can look forward to a more conversational visual search experience in AI Mode. Hints of the feature were found in the latest Google app beta (version 16.17), suggesting that Live functionality, at least in AI Mode, will reside within Google Lens.

Live in AI Mode will offer transcripts too

<string name="lens_live_onboarding_title">Start speaking to search</string>
<string name="lens_live_volume_error_subtitle">To start searching and hear results, raise the volume on your device</string>
<string name="lens_live_wave_tap_view_description">Tap to interrupt response playback.</string>
<string name="lens_live_web_corroborations_termination_message">"It's not possible to ask a follow up question. Your next question will start a new search."</string>

Users will have the option to run Live search with or without their microphone enabled. In addition to camera view, users will also be able to share their screen with AI Mode, as indicated by "Screencast" and "Video" references in the code strings. Further, similar to a regular Gemini Live session, AI Mode will return a transcript, complete with relevant links, allowing you to revisit your conversation with the AI tool. Other strings also point to a persistent notification signaling an active Live session, dedicated mute/unmute buttons, and buttons to enable/disable video.

It's not entirely clear when the feature might land, but considering that both AI Mode and Gemini Live screen/video sharing are now free for all, integrating the two services seems like the logical next step for Google.
<string name="lens_live_coachmark_ecosystem">Tap links to explore the web while you chat</string>
<string name="lens_live_coachmark_transcript">Tap \"transcript\" to read your conversations</string>

[3]

Google AI Mode and Lens are working on its own conversational 'Live' camera mode

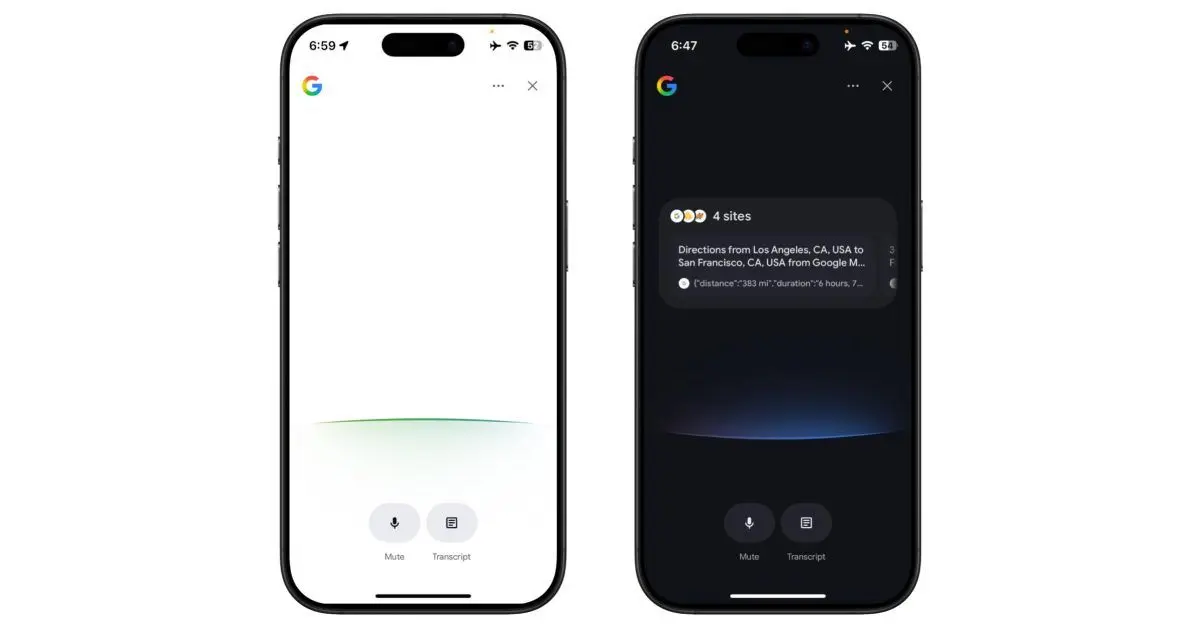

Last month, AI Mode gained Google Lens integration (as depicted above) to power multimodal search. Google is now working on "Live for AI Mode."

About APK Insight: In this "APK Insight" post, we've decompiled the latest version of an application that Google uploaded to the Play Store. When we decompile these files (called APKs, in the case of Android apps), we're able to see various lines of code within that hint at possible future features. Keep in mind that Google may or may not ever ship these features, and our interpretation of what they are may be imperfect. We'll try to enable those that are closer to being finished, however, to show you how they'll look in case they do ship. With that in mind, read on.

In essence, it appears that Google Search is getting its own equivalent of the Gemini Live/Project Astra capability. The difference, however, appears to be a focus on searching rather than on providing personal assistance. Strings in the latest Google app beta (version 16.17) explain how: "With Live, you can have a real-time voice conversation with AI Mode to find exactly what you're looking for. Tap the mute button to mute the microphone, tap close to exit."

From the usual disclaimer, we get the official name: "Live for AI Mode is experimental and can make mistakes."

<string name="lens_live_error_labs_unenrolled_title">Live requires AI Mode</string>

Notably, Google is building this Live capability into Google Lens. Like Gemini Live, there's a reminder to increase your volume and the ability to interrupt responses. There will also be a control notification while Live is active, and you will be able to share your screen in addition to the camera. Of note is the ability to enable a "Transcript" to read your conversations, with Google showing "links to explore the web while you chat."


Google is working on a new feature called 'Live for AI Mode' that aims to bring real-time voice conversation capabilities to its AI-powered search, integrating visual search and screen sharing functionalities.

Google's New 'Live for AI Mode' Feature

Google is developing a new feature called 'Live for AI Mode,' which aims to integrate real-time voice conversation capabilities with its AI-powered search functionality. This development, discovered in the latest Google app beta (version 16.17), suggests a significant enhancement to the company's AI Mode and Google Lens offerings [1][2][3].

Integration of Visual Search and Voice Conversations

The new feature is expected to combine elements of Google's existing AI Mode, which recently gained Google Lens integration for multimodal search, with capabilities similar to Gemini Live's Project Astra. This integration would allow users to engage in voice conversations while utilizing visual search functionalities [2][3].

Key features of 'Live for AI Mode' include:

- Real-time voice conversations with AI Mode

- Camera and screen input support

- Ability to share screens and videos

- Transcript feature with web links for reference

- Options to mute/unmute and interrupt responses

Accessibility and Placement

'Live for AI Mode' is expected to be accessible within Google Lens, indicating a strategic placement that leverages existing visual search capabilities. This integration follows Google's recent moves to make both AI Mode and Gemini Live's screen/video sharing functionalities free for all users [2].

Enhanced User Experience

The feature aims to provide a more interactive and conversational search experience. Users will be able to:

- Initiate voice searches

- Control volume and interrupt responses

- Access transcripts of conversations with relevant web links

- Toggle between camera view and screen sharing


Potential Impact on Search Functionality

While similar to Gemini Live's Project Astra capability, 'Live for AI Mode' appears to focus more on enhancing search than on providing personal assistance. This distinction suggests Google's intention to improve its core search functionality with advanced AI-driven features [3].

Development Status and Availability

The feature is still in development, with no clear timeline for public release. However, with both AI Mode and Gemini Live screen/video sharing now free for all users, integrating the two in 'Live for AI Mode' could be the next logical step in Google's AI strategy [2].

As Google continues to expand its AI offerings, 'Live for AI Mode' could represent a significant step toward more intuitive, multimodal search experiences that combine visual, auditory, and conversational elements.

References

Summarized by Navi

[1]

[2]
