Google's Gemini 2.5 Computer Use: AI Takes a Leap Towards Autonomous Web Navigation

14 Sources

[1]

Google's Gemini AI Is a Step Closer to Taking Control of Your Computer

Google's Gemini AI model might soon be able to act like an IT administrator who's taken over your faulty work laptop: moving your cursor around, clicking on things, typing in forms, all while you watch. The company on Wednesday announced the release of its Gemini 2.5 Computer Use model in preview in its API, allowing developers to create agents that can interact directly with a computer's user interface. This visual understanding of the screen and its elements, through the capture and analysis of screenshots, allows an AI agent to do really anything on a computer that you might be able to, letting you delegate all kinds of tasks to an automated tool. While it mostly works in web browsers at the moment, it can do some actions on a mobile interface too. Google said the model, built on Gemini 2.5 Pro, isn't yet optimized for desktop operating system-level control. This new model is part of a growing trend toward agentic AI, a technology that allows a model to go beyond the box of a chatbot and take actions in the real-ish world of the computer interface. Tools like ChatGPT Agent can already do things like order you a pizza, albeit with some limitations. Some agentic tools might replace mundane tasks in the workplace or in customer service interactions. At the same time, AI companies are increasingly bringing more and more of the things we would normally do on our own into the chatbot interface. With agents, the conversational nature of a tool like Gemini stands to replace the pointing and clicking you're used to. As AI agents gain the ability to manipulate websites and potentially apps on the computer itself, AI makers will need to build in significant safety precautions. 
Google acknowledged that in the blog post announcing the model, stating: "AI agents that control computers introduce unique risks, including intentional misuse by users, unexpected model behavior, and prompt injections and scams in the web environment." Google reported it trained the model specifically to address those risks, including the ability to recognize when it's given a "high-stakes" command like sending an email or purchasing something. The model may also require the user to confirm before it takes a high-stakes action.
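
The confirmation behavior described above can be illustrated with a small client-side gate. This is only a sketch under stated assumptions: the action kinds ("purchase", "send_email", "bypass_captcha"), the `Decision` enum, and the `should_execute` helper are hypothetical names invented for illustration, not part of the Gemini API.

```python
from enum import Enum
from typing import Callable, Dict


class Decision(Enum):
    """How the client treats a proposed action kind (hypothetical policy values)."""
    ALLOW = "allow"
    CONFIRM = "confirm"
    REFUSE = "refuse"


def should_execute(kind: str,
                   policy: Dict[str, Decision],
                   ask_user: Callable[[str], bool]) -> bool:
    """Return True only if the action may run under the policy.

    Kinds missing from the policy default to ALLOW; CONFIRM defers to the user.
    """
    decision = policy.get(kind, Decision.ALLOW)
    if decision is Decision.REFUSE:
        return False
    if decision is Decision.CONFIRM:
        return bool(ask_user(kind))
    return True


# Hypothetical policy: high-stakes actions need confirmation, CAPTCHA bypass is refused.
policy = {
    "purchase": Decision.CONFIRM,
    "send_email": Decision.CONFIRM,
    "bypass_captcha": Decision.REFUSE,
}


def always_decline(kind: str) -> bool:
    """Stand-in for a real confirmation prompt; the user says no to everything."""
    return False


print(should_execute("scroll", policy, always_decline))
print(should_execute("purchase", policy, always_decline))
```

In a real agent, `ask_user` would surface a prompt to the person supervising the session. Defaulting unknown kinds to ALLOW is a design choice made here for brevity; a stricter client might default to CONFIRM.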

[2]

This new Google Gemini model scrolls the internet just like you do - how it works

The company also admitted its weaknesses, including hallucinations. Google DeepMind has debuted a new AI model in public preview that's designed to navigate a web browser just as a human would. Built atop Gemini 2.5 Pro, the company's new Computer Use model can execute tasks like clicking, typing, and scrolling directly within a web page. Users simply have to feed it a prompt in natural language -- such as, "Open Wikipedia, search for 'Atlantis,' and summarize the history of the myth in Western thought." The model will autonomously fetch the URL and screenshots of the requested site to analyze the user interface it needs to act within, and will perform the requested task step by step, all while outlining its reasoning and actions in a text box easily visible to users. It may also respond by asking for confirmation if it's instructed to perform a sensitive task, like making a purchase. The preview of Gemini 2.5 Computer Use follows the release of similar web-browsing models from OpenAI and Anthropic. Google previously debuted an experimental Chrome extension called Project Mariner, which can also take action on behalf of users within web pages. Gemini 2.5 Computer Use runs off an iterative looping function that allows it to keep a record of all of its recent actions within a particular user interface and determine its next action accordingly. So the more tasks that it performs within a particular site, the more context it will have, and the more seamlessly it will function. Google posted demo videos (sped up 3x) showing the model autonomously making an update in a customer relationship management site and rearranging notes on Google's Jamboard platform, which was discontinued at the end of last year. 
According to a blog post published by Google on Tuesday, the new model outperformed similar tools from Anthropic and OpenAI in terms of both accuracy and latency, and across "multiple web and mobile control benchmarks," including Online-Mind2Web, an evaluation framework for testing the performance of web-browsing agents. The new model is intended mainly for web browsers, but also shows "strong promise" on mobile, Google said. It's available now through the Gemini API in Google AI Studio and through Vertex AI. A demo version is also available via Browserbase. The new model also comes with a set of safety controls, which Google says developers can use to prevent it from performing undesired actions like bypassing CAPTCHAs, compromising data security, or gaining control of medical devices. For example, developers can instruct the model to request user confirmation before it performs certain specified actions. The company also noted in the system card for the new model that it "may exhibit some of the general limitations of foundation models, as it is based off of Gemini 2.5 Pro, such as hallucinations, and limitations around causal understanding, complex logical deduction, and counterfactual reasoning." Those limitations are true of most models. Earlier this week, Anthropic published new research showing that many frontier AI models tended to whistleblow what they interpreted as unethical or illegal information in test scenarios, even when the supposedly incriminating information was actually harmless.

[3]

Google's new Gemini 2.5 Computer Use model can click, type, and scroll

[4]

Gemini gets one big step closer to taking over your browser

Google is switching on a new kind of AI assistant -- one that doesn't just talk about doing things, it actually clicks the buttons. The company's Gemini 2.5 Computer Use model is now in public preview for developers through the Gemini API in Google AI Studio and Vertex AI, giving agents the ability to navigate real websites like a human: open pages, fill out forms, tap dropdowns, drag items, and keep going until the job's done. Instead of relying on clean, structured APIs, Computer Use works in a loop. Your code sends the model a screenshot of the current screen along with recent actions. Gemini analyzes the scene and replies with a function call such as "click," "type," or "scroll," which the client executes. Then you send back a fresh screenshot and URL, and the cycle repeats until success or a safety stop. It's relatively mechanical, but effective. Most consumer web interfaces weren't built for bots, and this lets agents operate behind the login, where APIs don't exist. Google says the model is tuned for browsers first, with promising early results on mobile UIs. Desktop OS-level control isn't the focus yet. Performance-wise, Gemini 2.5 Computer Use leads recent browser-control benchmarks like Online-Mind2Web and WebVoyager and does so with lower latency in Browserbase's environment. It's a meaningful combo if you're trying to, for example, navigate an account dashboard or book travel in real time. Google also published extra evaluation details for the curious. Safety is treated like a seat belt, not an optional package. Each proposed action can be run through a per-step safety service before execution; developers can also require user confirmation for high-stakes moves like purchases or anything that might harm system integrity. You can further narrow what actions are even allowed, which should help keep agents from clicking themselves into trouble. 
Still, Google stresses exhaustive testing before you ship. If you want to kick the tires, Google points devs to a hosted demo via Browserbase, sample agent loops, and documentation for building locally with Playwright. And if parts of this feel familiar, that's because versions of the model have already been working behind the scenes in efforts like Project Mariner, Firebase's Testing Agent, and some of Search's AI Mode features. Today's news simply opens the door. With the preview now available, Gemini is clearly ready to graduate from an assistant that suggests to an assistant that does. If your workflows live on the web, this could be the most interesting thing Google's shipped this year.
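
The screenshot-in, action-out loop this article describes can be sketched in a few lines. This is a minimal illustration, not Google's client code: the `Action` and `Observation` types, the `run_agent_loop` function, and the fake model and browser below are all hypothetical stand-ins, and a real client would replace `propose` with a Gemini API call and `execute` with a browser driver such as Playwright.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Action:
    """One UI action proposed by the model (names are illustrative only)."""
    name: str
    args: dict = field(default_factory=dict)


@dataclass
class Observation:
    """What the client sends back after each step: a screenshot and the URL."""
    screenshot: bytes
    url: str


def run_agent_loop(task: str,
                   propose: Callable[[str, Observation, List[Action]], Optional[Action]],
                   execute: Callable[[Action], Observation],
                   first_observation: Observation,
                   max_steps: int = 20) -> List[Action]:
    """Repeat: ask the model for an action, execute it, capture the new state."""
    history: List[Action] = []
    observation = first_observation
    for _ in range(max_steps):
        action = propose(task, observation, history)  # model call, stubbed by the caller
        if action is None:                            # model signals the task is done
            break
        observation = execute(action)                 # client performs the UI action
        history.append(action)
    return history


def fake_propose(task, observation, history):
    """Stand-in model: replays a fixed two-step script, then stops."""
    script = [Action("click", {"x": 500, "y": 120}),
              Action("type", {"text": "Atlantis"})]
    return script[len(history)] if len(history) < len(script) else None


def fake_execute(action):
    """Stand-in browser: returns an empty screenshot and a made-up URL."""
    return Observation(screenshot=b"", url="https://example.test/after-" + action.name)


steps = run_agent_loop("search for Atlantis", fake_propose, fake_execute,
                       Observation(screenshot=b"", url="https://example.test/"))
print([a.name for a in steps])
```

The `max_steps` cap is an added safeguard in this sketch, so a model that never signals completion cannot loop forever.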

[5]

'Gemini 2.5 Computer Use' model enters preview with strong web, Android performance

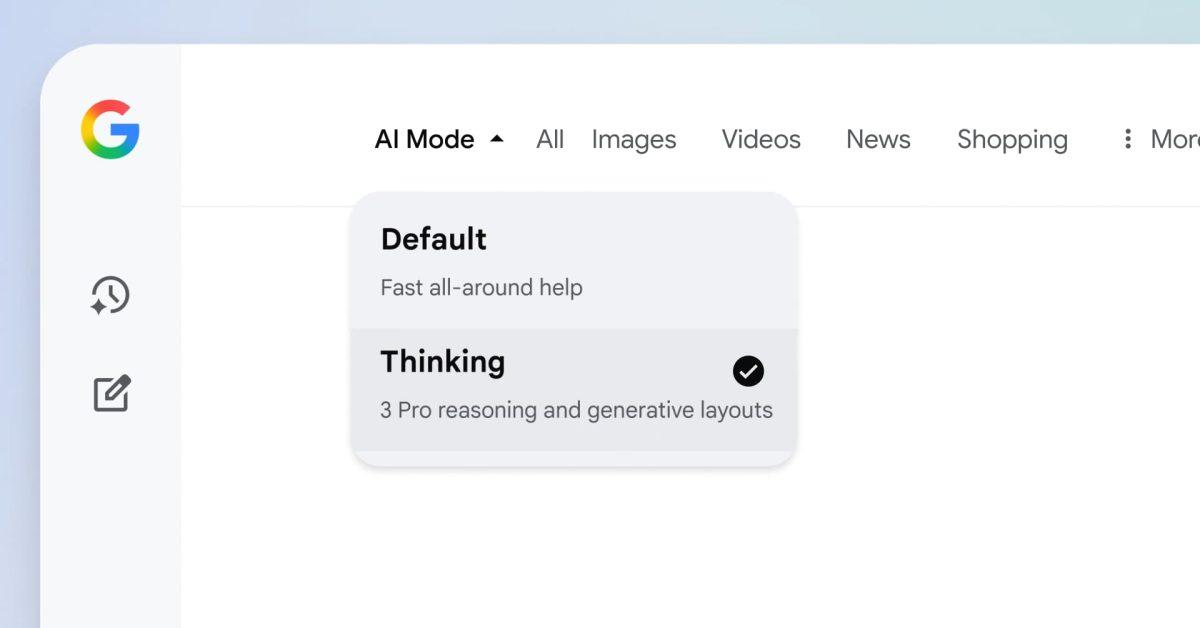

Google is now letting developers preview the Gemini 2.5 Computer Use model behind Project Mariner and agentic features in AI Mode. This "specialized model" can interact with graphical user interfaces, specifically browsers and websites. There are several steps that happen in a loop "until the task is complete." Other UI actions supported by the model include going back/forward, searching the web, navigating to a specific URL, cursor hovering, keyboard combinations, scrolling, and drag/drop. Google shared two examples (at 3X speed) with the following prompts: "From https://tinyurl.com/pet-care-signup, get all details for any pet with a California residency and add them as a guest in my spa CRM at https://pet-luxe-spa.web.app/. Then, set up a follow up visit appointment with the specialist Anima Lavar for October 10th anytime after 8am. The reason for the visit is the same as their requested treatment." "My art club brainstormed tasks ahead of our fair. The board is chaotic and I need your help organizing the tasks into some categories I created. Go to sticky-note-jam.web.app and ensure notes are clearly in the right sections. Drag them there if not." Gemini 2.5 Computer Use is "primarily optimized for web browsers." However, Google has an "AndroidWorld" benchmark that "demonstrates strong promise for mobile UI control tasks," while it's "not yet optimized for desktop OS-level control." Google demonstrated strong performance across web and mobile control benchmarks when compared to Claude and OpenAI's offering, as well as "leading quality for browser control at the lowest latency." This model is built on Gemini 2.5 Pro's visual understanding and reasoning capabilities. Google says "versions of this model" power Project Mariner and AI Mode's agentic capabilities. It's been used internally for UI testing to speed up software development, while Google has an early access program for third-party developers building assistants and workflow automation tools. 
Gemini 2.5 Computer Use is available in public preview today through Gemini API in Google AI Studio and Vertex AI.

[6]

Google's AI can now surf the web for you, click on buttons, and fill out forms with Gemini 2.5 Computer Use

Some of the largest providers of large language models (LLMs) have sought to move beyond multimodal chatbots -- extending their models out into "agents" that can actually take more actions on behalf of the user across websites. Recall OpenAI's ChatGPT Agent (formerly known as "Operator") and Anthropic's Computer Use, both released over the last two years. Now, Google is getting into that same game as well. Today, the search giant's DeepMind AI lab subsidiary unveiled a new, fine-tuned and custom-trained version of its powerful Gemini 2.5 Pro LLM known as "Gemini 2.5 Computer Use," which can use a virtual browser to surf the web on your behalf, retrieve information, fill out forms, and even take actions on websites -- all from a user's single text prompt. "These are early days, but the model's ability to interact with the web - like scrolling, filling forms + navigating dropdowns - is an important next step in building general-purpose agents," said Google CEO Sundar Pichai, as part of a longer statement on the social network, X. The model is not available for consumers directly from Google, though. Instead, Google partnered with another company, Browserbase, founded by former Twilio engineer Paul Klein in early 2024, which offers a virtual "headless" web browser specifically for use by AI agents and applications. (A "headless" browser is one that doesn't require a graphical user interface, or GUI, to navigate the web, though in this case and others, Browserbase does show a graphical representation for the user.) Users can demo the new Gemini 2.5 Computer Use model directly on Browserbase and even compare it side-by-side with the older, rival offerings from OpenAI and Anthropic in a new "Browser Arena" launched by the startup (though only one additional model can be selected alongside Gemini at a time). 
For AI builders and developers, it's being made available as a raw, albeit proprietary, LLM through the Gemini API in Google AI Studio for rapid prototyping, and through Google Cloud's Vertex AI model selector and application-building platform. The new offering builds on the capabilities of Gemini 2.5 Pro, released back in March 2025 and updated significantly several times since then, with a specific focus on enabling AI agents to perform direct interactions with user interfaces, including browsers and mobile applications. Overall, it appears Gemini 2.5 Computer Use is designed to let developers create agents that can complete interface-driven tasks autonomously -- such as clicking, typing, scrolling, filling out forms, and navigating behind login screens. Rather than relying solely on APIs or structured inputs, this model allows AI systems to interact with software visually and functionally, much like a human would.

Brief User Hands-On Tests

In my brief, unscientific initial hands-on tests on the Browserbase website, Gemini 2.5 Computer Use successfully navigated to Taylor Swift's official website as instructed and provided me a summary of what was being sold or promoted at the top -- a special edition of her newest album, "The Life of A Showgirl." In another test, I asked Gemini 2.5 Computer Use to search Amazon for highly rated and well-reviewed solar lights I could stake into my back yard, and I was delighted to watch as it successfully completed a Google Search Captcha designed to weed out non-human users ("Select all the boxes with a motorcycle."). It did so in a matter of seconds. However, once it got through there, it stalled and was unable to complete the task, despite serving up a "task completed" message. 
I should also note here that while the ChatGPT agent from OpenAI and Anthropic's Claude can create and edit local files -- such as PowerPoint presentations, spreadsheets, or text documents -- on the user's behalf, Gemini 2.5 Computer Use does not currently offer direct file system access or native file creation capabilities. Instead, it is designed to control and navigate web and mobile user interfaces through actions like clicking, typing, and scrolling. Its output is limited to suggested UI actions or chatbot-style text responses; any structured output like a document or file must be handled separately by the developer, often through custom code or third-party integrations.

Performance Benchmarks

Google says Gemini 2.5 Computer Use has demonstrated leading results in multiple interface control benchmarks, particularly when compared to other major AI systems including Claude Sonnet and OpenAI's agent-based models. Evaluations were conducted via Browserbase and Google's own testing. Some highlights include:

* Online-Mind2Web (Browserbase): 65.7% for Gemini 2.5 vs. 61.0% (Claude Sonnet 4) and 44.3% (OpenAI Agent)
* WebVoyager (Browserbase): 79.9% for Gemini 2.5 vs. 69.4% (Claude Sonnet 4) and 61.0% (OpenAI Agent)
* AndroidWorld (DeepMind): 69.7% for Gemini 2.5 vs. 62.1% (Claude Sonnet 4); OpenAI's model could not be measured due to lack of access
* OSWorld: Currently not supported by Gemini 2.5; top competitor result was 61.4%

In addition to strong accuracy, Google reports that the model operates at lower latency than other browser control solutions -- a key factor in production use cases like UI automation and testing.

How It Works

Agents powered by the Computer Use model operate within an interaction loop. They receive:

* A user task prompt
* A screenshot of the interface
* A history of past actions

The model analyzes this input and produces a recommended UI action, such as clicking a button or typing into a field. 
If needed, it can request confirmation from the end user for riskier tasks, such as making a purchase. Once the action is executed, the interface state is updated and a new screenshot is sent back to the model. The loop continues until the task is completed or halted due to an error or a safety decision. The model uses a specialized tool called 'computer_use', and it can be integrated into custom environments using tools like Playwright or via the Browserbase demo sandbox.

Use Cases and Adoption

According to Google, teams internally and externally have already started using the model across several domains:

* Google's payments platform team reports that Gemini 2.5 Computer Use successfully recovers over 60% of failed test executions, reducing a major source of engineering inefficiencies.
* Autotab, a third-party AI agent platform, said the model outperformed others on complex data parsing tasks, boosting performance by up to 18% in their hardest evaluations.
* Poke.com, a proactive AI assistant provider, noted that the Gemini model often operates 50% faster than competing solutions during interface interactions.

The model is also being used in Google's own product development efforts, including in Project Mariner, the Firebase Testing Agent, and AI Mode in Search.

Safety Measures

Because this model directly controls software interfaces, Google emphasizes a multi-layered approach to safety:

* A per-step safety service inspects every proposed action before execution.
* Developers can define system-level instructions to block or require confirmation for specific actions.
* The model includes built-in safeguards to avoid actions that might compromise security or violate Google's prohibited use policies.

For example, if the model encounters a CAPTCHA, it will generate an action to click the checkbox but flag it as requiring user confirmation, ensuring the system does not proceed without human oversight. 
Technical Capabilities

The model supports a wide array of built-in UI actions such as:

* , , , , and more
* User-defined functions can be added to extend its reach to mobile or custom environments
* Screen coordinates are normalized (0-1000 scale) and translated back to pixel dimensions during execution

It accepts image and text input and outputs text responses or function calls to perform tasks. The recommended screen resolution for optimal results is 1440x900, though it can work with other sizes.

API Pricing Remains Almost Identical to Gemini 2.5 Pro

The pricing for Gemini 2.5 Computer Use aligns closely with the standard Gemini 2.5 Pro model. Both follow the same per-token billing structure: input tokens are priced at $1.25 per one million tokens for prompts under 200,000 tokens, and $2.50 per million tokens for prompts longer than that. Output tokens follow a similar split, priced at $10.00 per million for smaller responses and $15.00 for larger ones. Where the models diverge is in availability and additional features. Gemini 2.5 Pro includes a free tier that allows developers to use the model at no cost, with no explicit token cap published, though usage may be subject to rate limits or quota constraints depending on the platform (e.g. Google AI Studio). This free access includes both input and output tokens. Once developers exceed their allotted quota or switch to the paid tier, standard per-token pricing applies. In contrast, Gemini 2.5 Computer Use is available exclusively through the paid tier. There is no free access currently offered for this model, and all usage incurs token-based charges from the outset. Feature-wise, Gemini 2.5 Pro supports optional capabilities like context caching (starting at $0.31 per million tokens) and grounding with Google Search (free for up to 1,500 requests per day, then $35 per 1,000 additional requests). These are not available for Computer Use at this time. 
Another distinction is in data handling: output from the Computer Use model is not used to improve Google products in the paid tier, while free-tier usage of Gemini 2.5 Pro contributes to model improvement unless explicitly opted out. Overall, developers can expect similar token-based costs across both models, but they should consider tier access, included capabilities, and data use policies when deciding which model fits their needs.
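
The article's note that screen coordinates are normalized to a 0-1000 scale and translated back to pixels can be made concrete with a short conversion sketch. The function name `denormalize`, the floor rounding, and the clamping to the last valid pixel are assumptions made for illustration; the article does not say how Google's client rounds or clamps.

```python
from typing import Tuple


def denormalize(x_norm: int, y_norm: int, width: int, height: int) -> Tuple[int, int]:
    """Map a point on the 0-1000 normalized grid to pixel coordinates.

    Assumption: floor division, then clamp so 1000 maps to the last pixel.
    """
    if not (0 <= x_norm <= 1000 and 0 <= y_norm <= 1000):
        raise ValueError("normalized coordinates must be in the range 0..1000")
    x_px = min(x_norm * width // 1000, width - 1)
    y_px = min(y_norm * height // 1000, height - 1)
    return x_px, y_px


# Using the recommended 1440x900 resolution mentioned in the article.
print(denormalize(500, 500, 1440, 900))    # (720, 450)
print(denormalize(1000, 1000, 1440, 900))  # (1439, 899)
```

Working on a normalized grid means the same model output can be replayed on a screenshot of any size, which is presumably why the client, not the model, handles the pixel conversion.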

[7]

Introducing the Gemini 2.5 Computer Use model

Earlier this year, we mentioned that we're bringing computer use capabilities to developers via the Gemini API. Today, we are releasing the Gemini 2.5 Computer Use model, our new specialized model built on Gemini 2.5 Pro's visual understanding and reasoning capabilities that powers agents capable of interacting with user interfaces (UIs). It outperforms leading alternatives on multiple web and mobile control benchmarks, all with lower latency. Developers can access these capabilities via the Gemini API in Google AI Studio and Vertex AI. While AI models can interface with software through structured APIs, many digital tasks still require direct interaction with graphical user interfaces, for example, filling and submitting forms. To complete these tasks, agents must navigate web pages and applications just as humans do: by clicking, typing and scrolling. The ability to natively fill out forms, manipulate interactive elements like dropdowns and filters, and operate behind logins is a crucial next step in building powerful, general-purpose agents. The model's core capabilities are exposed through the new 'computer_use' tool in the Gemini API and should be operated within a loop. Inputs to the tool are the user request, screenshot of the environment, and a history of recent actions. The input can also specify whether to exclude functions from the full list of supported UI actions or specify additional custom functions to include.
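
The last point, excluding functions from the supported list and adding custom ones, amounts to computing an effective action set. The sketch below shows that idea only; it is not the 'computer_use' tool's request format, and the action names ("click", "type", "scroll", "drag_and_drop", "open_app") and the `effective_actions` helper are hypothetical.

```python
from typing import Iterable, List


def effective_actions(supported: Iterable[str],
                      excluded: Iterable[str] = (),
                      custom: Iterable[str] = ()) -> List[str]:
    """Return supported actions minus exclusions, plus any custom functions."""
    supported = list(supported)
    excluded_set = set(excluded)
    unknown = excluded_set - set(supported)
    if unknown:
        # Excluding a name that was never supported is probably a typo.
        raise ValueError("cannot exclude unsupported actions: %s" % sorted(unknown))
    allowed = [name for name in supported if name not in excluded_set]
    for name in custom:
        if name not in allowed:  # avoid duplicates while keeping order
            allowed.append(name)
    return allowed


# Hypothetical action names, chosen only for illustration.
supported = ["click", "type", "scroll", "drag_and_drop"]
print(effective_actions(supported, excluded=["drag_and_drop"], custom=["open_app"]))
```

A client could use a list like this to validate each function call the model returns before executing it.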

[8]

Google DeepMind Releases Gemini 2.5 Computer Use Model | AIM

The model lets AI agents interact directly with graphical interfaces, such as filling forms, scrolling and operating behind logins. Google DeepMind has released the Gemini 2.5 Computer Use model, a specialised version of its Gemini 2.5 Pro AI that can interact with user interfaces. The model is available in preview via the Gemini API through Google AI Studio and Vertex AI. The model allows AI agents to complete tasks by interacting directly with graphical interfaces, such as filling forms, clicking buttons, scrolling and operating behind logins. "The ability to natively fill out forms, manipulate interactive elements like dropdowns and filters and operate behind logins is a crucial next step in building powerful, general-purpose agents," the company said. Developers access the model through the 'computer_use' tool, which operates in a loop. Inputs include the user request, a screenshot of the environment and a history of recent actions. The model generates responses in the form of UI actions, which are executed by client-side code. The loop continues with updated screenshots and context until the task is completed or terminated. The model is optimised for web browsers and shows potential for mobile UI control, but is not yet designed for desktop operating system-level tasks. Demonstrations include transferring pet-care data to a CRM system and organising digital sticky notes into categories. Gemini 2.5 Computer Use has demonstrated strong performance on web and mobile control benchmarks, including Online-Mind2Web, WebVoyager and AndroidWorld. According to DeepMind, the model delivers "high accuracy while maintaining low latency", with accuracy above 70% and latency around 225 seconds. Google DeepMind emphasised safety, noting that AI agents controlling computers carry risks such as misuse, unexpected behaviour and web-based scams. The company said it has integrated safety features into the model and provides developers with controls to prevent harmful actions. 
"Developers can further specify that the agent either refuses or asks for user confirmation before it takes specific kinds of high-stakes actions," DeepMind said.

[9]

Google's Gemini 2.5 Computer Use model can navigate the web like a human - SiliconANGLE

Google LLC has just announced a new version of its Gemini large language model that's able to navigate the web through a browser and interact with various websites, meaning it can perform tasks like searching for information or buying things, without human supervision. The model in question is called Gemini 2.5 Computer Use, and it uses a combination of visual understanding and reasoning to analyze users' requests and carry out tasks in the browser. It will complete all of the actions required to fulfill that task, such as clicking, typing, scrolling, manipulating dropdown menus and filling out and submitting forms, just as a human can do. In a blog post, Google's DeepMind research outfit said Gemini 2.5 Computer Use is based on the Gemini 2.5 Pro LLM, and explained that earlier versions of the model have been used to power agentic features it has launched in tools such as AI Mode and Project Mariner. But this is the first time the complete model has been made available. The company explained that each request kicks off a "loop" in which the model goes through various steps until the task is considered complete. First, the user sends a request to the model, which can also include screenshots of the website in question and a history of recent actions. Then, Gemini 2.5 Computer Use will analyze those inputs and generate a response, which will typically be a "function call representing one of the UI actions such as clicking or typing." Client-side code will then execute the required action, and after this is done, a new screenshot of the graphical user interface and the current website will be sent back to the model as a function response. Google posted a few demonstration videos showing the computer use tool in action, noting that they are sped up three times. 
The first video is based on the following prompt: "From https://tinyurl.com/pet-care-signup, get all details for any pet with a California residency and add them as a guest in my spa CRM at https://pet-luxe-spa.web.app/. Then, set up a follow up visit appointment with the specialist Anima Lavar for October 10th anytime after 8am. The reason for the visit is the same as their requested treatment." Google is somewhat late to the party here. Just yesterday, OpenAI revealed a number of new applications for ChatGPT, enhancing the capabilities of its ChatGPT Agent feature that's designed to complete various tasks on users' behalf using a computer. Anthropic PBC first released a version of its flagship Claude AI model that has the ability to use a computer last year. Not only is Google's computer use model late, but it's not as comprehensive. Unlike OpenAI's and Anthropic's tools, it's only able to access a web browser, rather than the entire computer operating system. "It's not yet optimized for desktop OS-level control, and currently supports 13 actions," the company explained. Still, DeepMind's researchers say their focus on getting Gemini 2.5 Computer Use to work specifically in web browsers has paid off in terms of its performance. They claim that it "outperforms leading alternatives on multiple web and mobile benchmarks", including Online-Mind2Web and WebVoyager. They noted that it's primarily optimized for web browsers and so it performs better in them, but even so, it still outperformed its peers on the AndroidWorld benchmark, which demonstrates "strong promise for mobile UI control tasks," the researchers said. In addition, they also claimed that Gemini 2.5 Computer Use is superior in terms of browser control at the lowest latency, based on its performance on the Browserbase harness for Online-Mind2Web. Here's a second example of the model in action, using a different prompt: "My art club brainstormed tasks ahead of our fair. 
The board is chaotic and I need your help organizing the tasks into some categories I created. Go to sticky-note-jam.web.app and ensure notes are clearly in the right sections. Drag them there if not." DeepMind's researchers are making Gemini 2.5 Computer Use available to developers through the Google AI Studio and Vertex AI, and pricing aligns pretty closely with the standard Gemini 2.5 Pro model. They follow the same token-based billing structure, with input tokens priced at $1.25 per one million tokens for prompts with under 200,000 tokens, rising to $2.50 per million tokens for longer prompts. Output tokens are priced similarly for both models, at $10 per million for shorter responses and $15 per million for longer ones. The only real difference is that, while Gemini 2.5 Pro is available for free with an explicit token cap, Gemini 2.5 Computer Use does not offer any free tier, and so users must pay from the outset to access it.
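To make the quoted token pricing concrete, here is a minimal Python sketch that estimates the cost of a single request. The rates come from the article above. The function name `estimate_cost_usd`, the assumption that the prompt length selects the tier for both input and output rates, and the treatment of a prompt of exactly 200,000 tokens as lower-tier are illustrative assumptions, not details confirmed by the source.

```python
# Rates in USD per one million tokens, as quoted in the article above.
PROMPT_TIER_THRESHOLD = 200_000  # tokens

INPUT_RATE_SHORT = 1.25
INPUT_RATE_LONG = 2.50
OUTPUT_RATE_SHORT = 10.00
OUTPUT_RATE_LONG = 15.00


def estimate_cost_usd(prompt_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request in USD.

    Assumption: the prompt length selects the tier for both the input
    and the output rate, and a prompt of exactly 200,000 tokens falls
    in the lower tier. The article does not spell out either detail.
    """
    if prompt_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")

    long_prompt = prompt_tokens > PROMPT_TIER_THRESHOLD
    input_rate = INPUT_RATE_LONG if long_prompt else INPUT_RATE_SHORT
    output_rate = OUTPUT_RATE_LONG if long_prompt else OUTPUT_RATE_SHORT

    total = prompt_tokens * input_rate + output_tokens * output_rate
    return total / 1_000_000
```

Under these assumptions, a 100,000-token prompt with a 2,000-token response costs about $0.145, while a 300,000-token prompt with the same response costs about $0.78. Because a computer use agent sends a fresh screenshot on every step, the per-task cost is the sum over all steps in the loop.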

[10]

Google's Gemini Can Now Control Your Computer Like a Human Assistant - Phandroid

Google announced a major upgrade to Gemini that lets the AI assistant take control of your computer. The new Gemini computer use model can navigate your desktop, click buttons, fill out forms, and complete multi-step tasks without constant supervision. This isn't just about answering questions anymore. Gemini can actually interact with your computer like a human would, opening applications, moving between windows, and executing complex workflows. The Gemini computer use feature works by "seeing" your screen and understanding where to click or type. It can handle tasks like copying data between applications, filling out lengthy forms, or conducting research across multiple websites. For example, you could ask Gemini to gather information from several sites and compile it into a spreadsheet instead of doing it manually. Google isn't the first to explore AI-controlled computers. Anthropic released a similar feature for Claude last year, but Google's integration could reach millions of users already familiar with the assistant. Giving an AI control over your computer raises obvious concerns. Google emphasizes that users remain in control and must grant permission for Gemini to access your screen. However, questions remain about what happens when Gemini makes a mistake or clicks the wrong button. Google hasn't announced a wide rollout date yet. The Gemini computer use model appears to be in early testing, possibly limited to specific users or enterprise customers. Based on Google's typical strategy, expect a gradual release over the coming months.

[11]

Google Unveils Gemini 2.5 Computer Use That Clicks, Types, and Scrolls Like Humans

Versions of this model are already powering Project Mariner and AI Mode in Google Search. Today, Google released the Gemini 2.5 Computer Use AI model, which is designed to interact with user interfaces (UIs). It's built on the flagship Gemini 2.5 Pro model, bringing its visual and reasoning capabilities to power AI agents. The Gemini 2.5 Computer Use model can navigate browser and web interfaces along with Android UIs. Google says the new model can click, type, and scroll, just like humans, to complete a task. In the WebVoyager benchmark, the Gemini 2.5 Computer Use model scores 88.9% while OpenAI's Computer-Using Agent achieves 87%. In the Online-Mind2Web benchmark, Google again outperforms OpenAI's Operator AI agent. The results suggest that Google has trained a leading AI model to power AI agents that can reliably perform tasks in browsers. In terms of accuracy and latency too, Google has the upper hand over Claude Sonnet 4.5 and OpenAI's Computer-Using Agent. Google has already deployed versions of this model in Project Mariner and AI Mode in Google Search. The API for Gemini 2.5 Computer Use is available via Google AI Studio and Vertex AI.

[12]

Google rolls out Gemini 2.5 Computer Use model for deeper app and browser control

Google has released the Gemini 2.5 Computer Use model, a new specialized version built on Gemini 2.5 Pro's visual understanding and reasoning capabilities. The model enables AI agents to interact directly with graphical user interfaces (UIs) -- performing actions such as clicking, typing, and form-filling -- similar to human users. According to Google, it delivers lower latency and outperforms leading alternatives across multiple web and mobile control benchmarks.

Purpose

Google explains that while AI systems often interact with software through structured APIs, many digital workflows still rely on graphical interfaces. Tasks such as completing forms, selecting filters, or navigating menus require direct UI control. The Gemini 2.5 Computer Use model addresses this by enabling agents to handle web and mobile applications directly, performing interface-level actions like scrolling, choosing dropdowns, and navigating login pages.

How it works

The model's functionality is provided through the new tool in the Gemini API, which operates within a continuous loop. Each loop includes:

This process repeats until the task completes, an error occurs, or the interaction is stopped by a safety mechanism or user decision. The model is primarily optimized for web browsers and shows strong early results in mobile UI control, though desktop OS-level automation is not yet supported.

Performance

The Gemini 2.5 Computer Use model demonstrates strong results across several web and mobile control benchmarks. Google reports that it provides leading quality and efficiency for browser-based tasks, with the lowest latency recorded in Browserbase's Online-Mind2Web benchmark evaluations.

Google highlights the importance of safety when developing agents capable of computer control. Such systems pose specific risks, including possible misuse, unexpected model behavior, or malicious prompt injections. To mitigate these, the Gemini 2.5 Computer Use model integrates built-in safety mechanisms described in the Gemini 2.5 Computer Use System Card and offers several developer safety features:

Google also recommends that developers follow its documentation and best practices to thoroughly test systems before deployment.

Google has already deployed the model internally for multiple applications, including:

Participants in the early access program have also used the model for workflow automation, personal assistant development, and UI testing, reporting strong outcomes across scenarios. The Gemini 2.5 Computer Use model is now available in public preview via the Gemini API on Google AI Studio and Vertex AI.

[13]

Gemini 2.5 Computer Use model explained: Google's AI agent to navigate interfaces

Google DeepMind's Gemini agent can click, scroll, and fill forms

Google's latest Gemini 2.5 update has quietly introduced something that could reshape how artificial intelligence interacts with the web: the Computer Use model. Unlike traditional chatbots that merely generate text or code, this new capability allows Gemini to literally use a computer, clicking buttons, filling forms, scrolling through websites, and performing actions on-screen much like a human user would. It's Google's boldest step yet toward an era of agentic AI - intelligent assistants that can act on behalf of users, not just answer their questions. The Gemini 2.5 Computer Use model is designed to bridge a long-standing gap between language models and the real world: the ability to interact directly with user interfaces. Traditionally, AI systems have relied on APIs to fetch information or perform tasks. But APIs aren't available for everything - especially the messy, visual world of websites and apps. Gemini's new model solves that by giving the AI eyes and hands in the digital sense. It can "see" what's on a screen through screenshots and then decide how to act: clicking buttons, typing into fields, or scrolling to find relevant sections. This means a developer can instruct Gemini to do something like "Log into this dashboard, download the latest report, and email it to me," and the model can navigate the web interface to accomplish it step by step. At the heart of Gemini's Computer Use model is an iterative agent loop, a continuous process of perception, reasoning, and action. This system mimics how humans interact with computers, looking, thinking, and acting, only at machine speed and with higher consistency.
Developers can even set safety constraints, preventing the AI from performing sensitive actions like confirming payments or sharing data without explicit approval. Google's early demonstrations showcase Gemini 2.5 handling a range of real-world tasks. It can fill out online forms, navigate dashboards, rearrange elements in web apps, and even work across multiple tabs or sites to complete multi-step workflows. For instance, it could pull contact details from a website, paste them into a CRM tool, and then schedule a meeting in Google Calendar, all autonomously. This capability opens up a new frontier for business automation, testing web apps, data entry, and personal productivity. Unlike traditional automation tools that depend on rigid scripts or APIs, Gemini's approach is flexible and adaptive. It "understands" visual layouts, making it far more resilient to changes in a site's design or structure. A model that can control a computer naturally raises safety concerns. Google acknowledges this and has implemented multiple guardrails. Every proposed action is checked by a safety service before execution, filtering out anything that could be harmful, malicious, or risky. Certain actions, such as making financial transactions or sending data, require explicit user confirmation. Developers can also define "forbidden actions" to ensure the model stays within safe boundaries. Moreover, Google recommends that all experiments with the Computer Use model take place in sandboxed environments, minimizing any risk of unintended side effects. Currently, Gemini's Computer Use model is available only in preview through the Gemini API, accessible via Google AI Studio and Vertex AI. It primarily focuses on browser-based control for now, though extensions to mobile and desktop environments are reportedly in development. 
According to Google DeepMind, early internal testing shows Gemini 2.5 outperforming other web automation agents on popular benchmarks like Online-Mind2Web and WebVoyager. These results suggest the system can complete UI-driven tasks faster and more accurately than previous models. Gemini's new capability fits into a growing trend across the AI landscape, agentic models that can perform actions autonomously. OpenAI has hinted at similar goals through its ChatGPT Agents and App Actions, while Anthropic's Claude and Meta's research teams are exploring their own versions of computer-use systems. What sets Gemini apart is Google's integration across its ecosystem. Imagine a Gemini-powered agent that can seamlessly move between Gmail, Docs, and Drive, managing workflows end to end. That's the vision this technology inches toward. With the Computer Use model, Gemini 2.5 marks a shift from passive intelligence to active capability. Instead of simply responding to prompts, it can take initiative, following complex instructions through multi-step UI interactions. In the near future, AI agents may become more like true digital colleagues, able to log into systems, analyze data, and execute tasks while keeping humans in the loop for high-level guidance. For now, Google's Gemini 2.5 Computer Use is still experimental, but it represents a pivotal leap: an AI that doesn't just talk about the web - it uses it.
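The guardrails described above (developer-defined forbidden actions and explicit user confirmation for sensitive ones) can be illustrated with a small client-side gate. This is only a sketch of the idea: it does not reproduce Google's safety service, and `ProposedAction`, `gate_action`, and the action names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet


@dataclass(frozen=True)
class ProposedAction:
    """A UI action proposed by the model (hypothetical shape)."""
    name: str      # illustrative action name, e.g. "click" or "submit_payment"
    summary: str   # human-readable description shown when asking for approval


def gate_action(
    action: ProposedAction,
    forbidden: FrozenSet[str],
    sensitive: FrozenSet[str],
    confirm: Callable[[ProposedAction], bool],
) -> str:
    """Decide what to do with a proposed action.

    Returns "blocked" if the developer has forbidden the action,
    "declined" if it is sensitive and the user did not approve it,
    and "execute" otherwise.
    """
    if action.name in forbidden:
        return "blocked"
    if action.name in sensitive and not confirm(action):
        return "declined"
    return "execute"
```

A caller would run this check before handing each action to the browser driver, passing a `confirm` callback that prompts the user. Checking the forbidden set first means a forbidden action never reaches the confirmation prompt at all.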

[14]

Google introduces Gemini 2.5 Computer Use AI model: Here's how it works

It is built on Gemini 2.5 Pro's visual understanding and reasoning capabilities. Google has introduced Gemini 2.5 Computer Use, a new AI model designed to interact directly with web and mobile interfaces. This model, built on Gemini 2.5 Pro's visual understanding and reasoning capabilities, allows AI agents to perform tasks on computers by clicking, typing, scrolling, and navigating websites, much like a human would. The Gemini 2.5 Computer Use model is available through the Gemini API in Google AI Studio and Vertex AI. Keep reading to see how this new AI model works. The Gemini 2.5 Computer Use model's core capabilities are "exposed through the new 'computer_use' tool in the Gemini API and should be operated within a loop," the tech giant explains in a blog post. Inputs to the tool include the user's request, a screenshot of the current environment, and a history of recent actions. You can also choose to exclude certain UI actions or add custom ones. The model then analyses these inputs and responds with a function call, such as clicking or typing. Some actions, like making a purchase, may require user confirmation. The client-side code then executes the action and sends back a new screenshot and the current URL. This restarts the loop. This iterative process continues until the task is finished, an error occurs, or a safety/user stop is triggered. "The Gemini 2.5 Computer Use model is primarily optimised for web browsers, but also demonstrates strong promise for mobile UI control tasks. It is not yet optimised for desktop OS-level control," Google said. According to the tech giant, the Gemini 2.5 Computer Use model offers leading quality for browser control at the lowest latency, as measured by performance on the Browserbase harness for Online-Mind2Web.
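The loop described above can be sketched in a few lines of Python. This is a rough illustration of the control flow only, not the Gemini API: `FunctionCall`, `run_agent_loop`, the `model` and `execute` callables, and the `max_steps` cap are all hypothetical stand-ins for the model call, the browser driver, and a client-side safeguard.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class FunctionCall:
    """A UI action returned by the model (hypothetical shape)."""
    name: str                                  # illustrative, e.g. "click"
    args: dict = field(default_factory=dict)
    needs_confirmation: bool = False           # e.g. for a purchase


# Hypothetical signatures: the model sees the request, the latest
# screenshot, the current URL and the action history, and returns the
# next action (or None when it considers the task finished).
ModelFn = Callable[[str, bytes, str, List[FunctionCall]], Optional[FunctionCall]]
# The executor performs the action and returns (new_screenshot, new_url).
ExecuteFn = Callable[[FunctionCall], Tuple[bytes, str]]


def run_agent_loop(
    request: str,
    screenshot: bytes,
    url: str,
    model: ModelFn,
    execute: ExecuteFn,
    confirm: Callable[[FunctionCall], bool],
    max_steps: int = 20,   # assumed cap so the sketch cannot loop forever
) -> Tuple[str, List[FunctionCall]]:
    """Run the request/act/observe loop and return (status, history)."""
    history: List[FunctionCall] = []
    for _ in range(max_steps):
        call = model(request, screenshot, url, history)
        if call is None:
            return "done", history             # task finished
        if call.needs_confirmation and not confirm(call):
            return "stopped", history          # user declined the action
        try:
            screenshot, url = execute(call)    # client-side execution
        except Exception:
            return "error", history            # execution failed
        history.append(call)                   # feeds the next iteration
    return "step_limit", history
```

The three exit paths mirror the stopping conditions in the text: the task is finished, an error occurs, or a user stop is triggered. In a real client, `execute` would drive a browser (for example through an automation library) and capture a fresh screenshot after each action.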

Google introduces Gemini 2.5 Computer Use, an AI model capable of interacting with web interfaces like a human. This development marks a significant step towards AI agents that can perform complex tasks across various digital platforms.

Google Introduces Gemini 2.5 Computer Use Model

Google DeepMind has unveiled a groundbreaking AI model that brings us one step closer to autonomous computer interaction. The Gemini 2.5 Computer Use model, now in public preview, is designed to navigate web browsers and mobile interfaces with human-like precision [1][2].

Capabilities and Functionality

Built on top of Gemini 2.5 Pro, this specialized model can execute a wide range of tasks within web interfaces, including clicking, typing, scrolling, and even more complex actions like drag-and-drop [3]. Users can instruct the model using natural language prompts, such as "Open Wikipedia, search for 'Atlantis,' and summarize the history of the myth in Western thought." The AI then autonomously navigates the website, performing the requested tasks step by step [2].

Technical Approach

The model operates in an iterative loop, keeping a record of recent actions within a user interface and using it to determine subsequent steps. This approach enables the AI to gain context and function more seamlessly as it performs multiple tasks within a particular site [3].

Performance and Availability

According to Google, Gemini 2.5 Computer Use outperforms similar tools from competitors like Anthropic and OpenAI in terms of accuracy and latency across multiple web and mobile control benchmarks [3]. While primarily optimized for web browsers, the model has shown promising results on mobile interfaces as well [5].

The model is now available through the Gemini API in Google AI Studio and Vertex AI, with a demo version accessible via Browserbase [4].

Safety Measures and Limitations

Google has implemented several safety controls to prevent misuse and protect user data. The model can be instructed to request user confirmation before performing sensitive actions like making purchases or sending emails [1]. Developers can also restrict the model's actions to prevent undesired behaviors such as bypassing CAPTCHAs or compromising data security [3].

However, Google acknowledges that the model may exhibit some limitations common to foundation models, including hallucinations and challenges with complex logical deduction and counterfactual reasoning [3].

Implications and Future Prospects

The introduction of Gemini 2.5 Computer Use represents a significant step towards agentic AI, potentially revolutionizing how we interact with digital interfaces. This technology could streamline various tasks in the workplace and customer service interactions, replacing mundane point-and-click activities with conversational AI interactions [1].

As AI agents gain more control over computer interfaces, the need for robust safety precautions becomes increasingly crucial. Google's approach to addressing these concerns will likely shape the future development and deployment of similar technologies across the industry.

References

Summarized by

Navi
