Google's Gemini AI assistant preps Agentic Mode and Live Experimental Features for Android

2 Sources

[1]

Gemini gets ready for new Live Experimental Features, Thinking Mode, and more

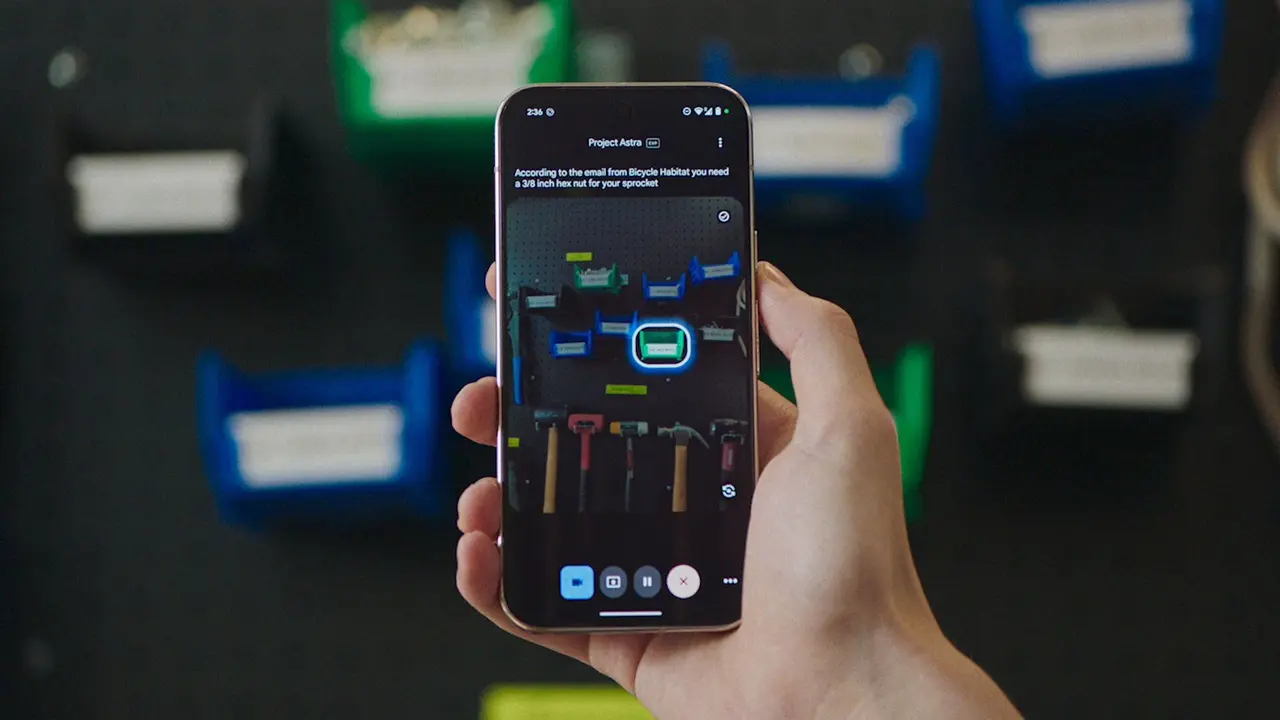

Among the experiments in development, we could get some big Gemini Live upgrades and new agentic options. Keeping up with AI developments can be like watching a race where all the participants are at very different places. Far off in the distance, we can see new ideas taking shape, but it's often anyone's guess how long it will be before they're ready for prime time. Last year, one of the big steps Google shared was Project Astra, a "universal AI assistant" able to work with apps across your phone. After getting our first taste last summer, we're taking a look at where some of those Astra ambitions may be headed next.

[2]

Gemini AI Assistant Could Soon Get New Live Features, Agentic Mode

Google is reportedly working on new capabilities for its Gemini artificial intelligence (AI) assistant for Android devices. As per the report, the Mountain View-based tech giant could soon release multiple new features across Gemini Live, Deep Research, Thinking mode, and new agentic AI capabilities. The company has been aggressively integrating AI capabilities across its products, and one of its most ambitious projects has been to replace Google Assistant on all devices with a more capable Gemini assistant.

Gemini Assistant Could Soon Get New Capabilities

According to an Android Authority report, the tech giant is currently working on several new features for Android's default AI assistant. The publication found hints of these capabilities within the strings of code in the latest version of the Google app for Android. These features are said to be part of a new Gemini Labs section. The name suggests that the section is similar to Google Labs, which is focused on creating new and experimental AI features across the company's products. The strings mention "assistant robin," which has previously been noted to be the company's internal name for the Gemini AI assistant.

One of the header strings reportedly mentions "Live Experimental Features," and names several capabilities, such as "multimodal memory, better noise handling, responding when it sees something, and personalised results based on your Google apps." These appear to be new upgrades for Gemini Live, which offers more conversational, human-like, two-way voice interactions in real time. Multimodal memory will likely allow the mode to remember things it sees on-device or via the camera feed, even when they are no longer visible. Better noise handling appears to offer ambient noise reduction, whereas "responding when it sees something" could be a proactive capability. The last one is self-explanatory. However, these suggested functionalities are purely speculative, and we will have to wait for the official release to know what Google is planning.

Beyond this, there is a mention of "Live Thinking Mode," which is described as "a version of Gemini Live that takes time to think and provide more detailed responses." Thinking Mode is a mainstay in the Gemini app, but the company might be bringing it to the assistant's Live mode too. Additionally, there is a mention of "Deep Research," where a string says, "Delegate complex research tasks." It is not clear what the new capability in this mode will be.

Apart from this, within the UI Control string, "Agent controls phone to complete tasks" is mentioned. This is an entirely new capability, and it appears that the company might allow the Gemini assistant to complete certain on-device tasks on behalf of the user. It is not yet known which task automations will be added to the voice assistant.

Do note that the above-mentioned capabilities were only referenced in the code, which does not mean that the company will definitely release them. Sometimes, developers also add these strings as placeholders or ideation spaces, which do not always materialise. We recommend taking this information with a pinch of salt and waiting until Google officially announces these features.

Google is developing major upgrades to Gemini Live including multimodal memory, proactive responses, and new agentic capabilities that let the assistant control phone tasks. Code strings reveal Live Thinking Mode and Deep Research enhancements, signaling Google's push to replace Google Assistant with a more capable universal AI assistant across Android devices.

Google Advances Gemini AI Assistant With New Capabilities

Google is developing substantial upgrades to its Gemini AI assistant for Android, with code discoveries revealing plans for Live Experimental Features, Agentic Mode, and enhanced voice interaction capabilities [2]. These AI developments signal the tech giant's continued effort to transform its assistant into what it calls a universal AI assistant, building on ambitions first outlined in Project Astra last year [1].

Source: Gadgets 360

Analysis of code strings in the latest Google app for Android points to a new Gemini Labs section, mirroring the experimental approach of Google Labs. The discoveries reference "assistant robin," Google's internal designation for the Gemini AI assistant, alongside multiple feature enhancements currently under development [2].

Upgrades to Gemini Live Expand Voice Interaction

The most significant upgrades to Gemini Live include multimodal memory, improved noise handling, the ability to respond when it sees something, and personalized results based on the user's Google apps [2]. Multimodal memory could enable the voice assistant to retain information from on-device interactions or camera feeds even after the visual input disappears, creating a more contextually aware experience.

Source: Android Authority

Better noise handling appears designed to reduce ambient interference during conversations, while "responding when it sees something" suggests the assistant might initiate interactions based on visual cues without explicit user prompts. These capabilities would mark a substantial evolution from today's reactive assistants to more anticipatory systems that understand context and user needs in real time.

Live Thinking Mode Brings Deliberate Reasoning to Conversations

Google is developing Live Thinking Mode, described as "a version of Gemini Live that takes time to think and provide more detailed responses" [2]. While Thinking Mode already exists in the Gemini app, integrating it into the Live experience would allow users to request more thorough, reasoned answers during real-time voice conversations. This hybrid approach could bridge the gap between quick conversational responses and deep analytical thinking.

Additionally, enhancements to Deep Research are referenced with code mentioning the ability to "delegate complex research tasks" [2]. Though specific functionalities remain unclear, this suggests users might assign multi-step research projects to the assistant, which could autonomously gather and synthesize information.

Agentic Options Enable Direct Control of Phone Tasks

Perhaps most transformative are the agentic functions referenced in UI Control strings stating "Agent controls phone to complete tasks" [2]. These agentic capabilities would allow the Gemini AI assistant to execute task automations directly on Android devices on behalf of users, moving beyond simple voice commands to autonomous action-taking.

While the specific tasks remain unspecified, this aligns with broader industry trends toward agentic AI systems that can navigate applications, manage workflows, and complete multi-step processes independently. Such functionality would represent a significant step toward the vision outlined in Project Astra: a universal AI assistant capable of working seamlessly across phone applications [1].

What This Means for Android Users

These developments matter because they signal Google's determination to replace Google Assistant with a fundamentally more capable system. For Android users, this could mean transitioning from an assistant that responds to commands to one that anticipates needs, remembers context across interactions, and takes autonomous actions to complete complex tasks.

However, it's important to note that code strings don't guarantee feature releases. Developers sometimes use these as placeholders or exploration spaces that never materialize into actual products [2]. The timeline for any official announcements remains uncertain, keeping observers watching for Google's next move in the competitive AI assistant landscape.
