Google's Gemini for Home AI Struggles with Basic Recognition, Turning Dogs into Deer and Cats into Mice

3 Sources

[1]

"Unexpectedly, a deer briefly entered the family room": Living with Gemini Home

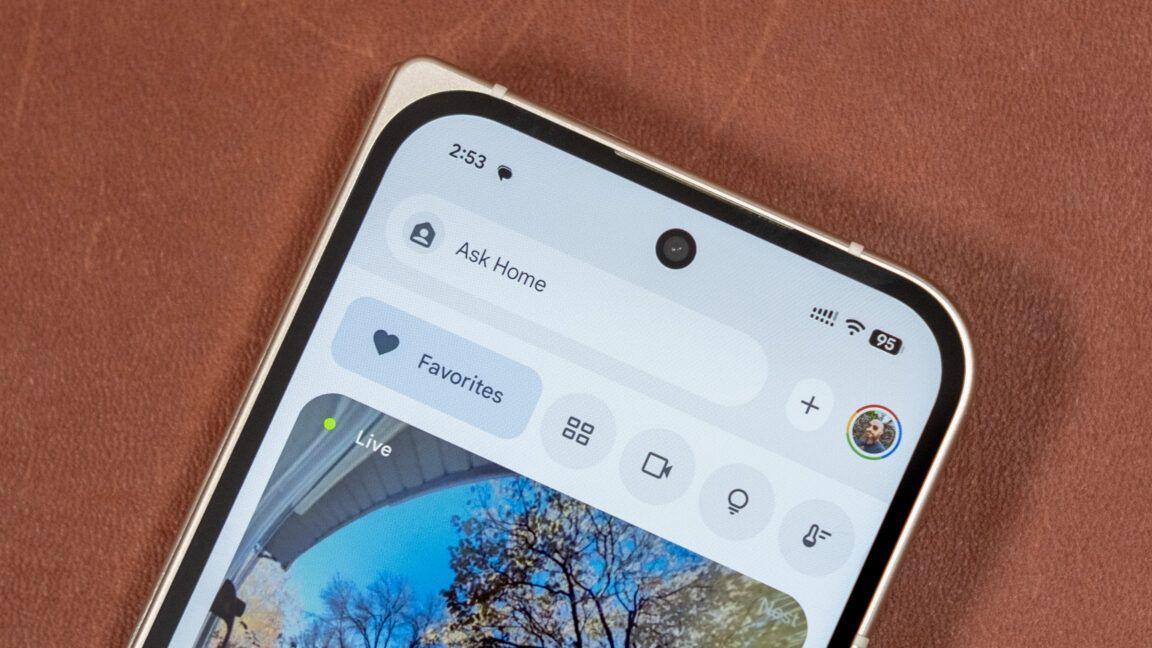

You just can't ignore the effects of the generative AI boom. Even if you don't go looking for AI bots, they're being integrated into virtually every product and service. And for what? There's a lot of hand-wavey chatter about agentic this and AGI that, but what can "gen AI" do for you right now? Gemini for Home is Google's latest attempt to make this technology useful, integrating Gemini with the smart home devices people already have. Anyone paying for extended video history in the Home app is about to get a heaping helping of AI, including daily summaries, AI-labeled notifications, and more. Given the supposed power of AI models like Gemini, recognizing events in a couple of videos and answering questions about them doesn't seem like a bridge too far. And yet Gemini for Home has demonstrated a tenuous grasp of the truth, which can lead to some disquieting interactions, like periodic warnings of home invasion, both human and animal. It can do some neat things, but is it worth the price -- and the headaches?

Does your smart home need a premium AI subscription?

Simply using the Google Home app to control your devices does not turn your smart home over to Gemini. This is part of Google's higher-tier paid service, which comes with extended camera history and Gemini features for $20 per month. That subscription pipes your video into a Gemini AI model that generates summaries for notifications, as well as a "Daily Brief" that offers a rundown of everything that happened on a given day. The cheaper $10 plan provides less video history and no AI-assisted summaries or notifications. Both plans enable Gemini Live on smart speakers. According to Google, it doesn't send all of your video to Gemini. That would be a huge waste of compute cycles, so Gemini only sees (and summarizes) event clips.
Those summaries are then distilled at the end of the day to create the Daily Brief, which usually results in a rather boring list of people entering and leaving rooms, dropping off packages, and so on. Importantly, the Gemini model powering this experience is not multimodal -- it only processes visual elements of videos and does not integrate audio from your recordings. So unusual noises or conversations captured by your cameras will not be searchable or reflected in AI summaries. This may be intentional to ensure your conversations are not regurgitated by an AI. Paying for Google's AI-infused subscription also adds Ask Home, a conversational chatbot that can answer questions about what has happened in your home based on the status of smart home devices and your video footage. You can ask questions about events, retrieve video clips, and create automations. There are definitely some issues with Gemini's understanding of video, but Ask Home is quite good at creating automations. It was possible to set up automations in the old Home app, but the updated AI is able to piece together automations based on your natural language request. Perhaps thanks to the limited set of possible automation elements, the AI gets this right most of the time. Ask Home is also usually able to dig up past event clips, as long as you are specific about what you want. The Advanced plan for Gemini Home keeps your videos for 60 days, so you can only query the robot on clips from that time period. Google also says it does not retain any of that video for training. The only instance in which Google will use security camera footage for training is if you choose to "lend" it to Google via an obscure option in the Home app. Google says it will keep these videos for up to 18 months or until you revoke access. However, your interactions with Gemini (like your typed prompts and ratings of outputs) are used to refine the model. 

The unexpected deer

Every generative AI bot makes the occasional mistake, but you'll probably not notice every one. When the AI hallucinates about your daily life, however, it's more noticeable. There's no reason Google should be confused by my smart home setup, which features a couple of outdoor cameras and one indoor camera -- all Nest-branded with all the default AI features enabled -- to keep an eye on my dogs. So the AI is seeing a lot of dogs lounging around and staring out the window. One would hope that it could reliably summarize something so straightforward. One may be disappointed, though. In my first Daily Brief, I was fascinated to see that Google spotted some indoor wildlife. "Unexpectedly, a deer briefly entered the family room," Gemini said. Gemini does deserve some credit for recognizing that the appearance of a deer in the family room would be unexpected. But the "deer" was, naturally, a dog. This was not a one-time occurrence, either. Gemini sometimes identifies my dogs correctly, but many event clips and summaries still tell me about the notable but brief appearance of deer around the house and yard. This deer situation serves as a keen reminder that this new type of AI doesn't "think," although the industry's use of that term to describe simulated reasoning could lead you to believe otherwise. A person looking at this video wouldn't even entertain the possibility that they were seeing a deer after they've already seen the dogs loping around in other videos. Gemini doesn't have that base of common sense, though. If the tokens say deer, it's a deer. I will say, though, Gemini is great at recognizing car models and brand logos. Make of that what you will. The animal mix-up is not ideal, but it's not a major hurdle to usability. I didn't seriously entertain the possibility that a deer had wandered into the house, and it's a little funny the way the daily report continues to express amazement that wildlife is invading.
It's a pretty harmless screw-up. "Overall identification accuracy depends on several factors, including the visual details available in the camera clip for Gemini to process," explains a Google spokesperson. "As a large language model, Gemini can sometimes make inferential mistakes, which leads to these misidentifications, such as confusing your dog with a cat or deer." Google also says that you can tune the AI by correcting it when it screws up. This works sometimes, but the system still doesn't truly understand anything -- that's beyond the capabilities of a generative AI model. After telling Gemini that it's seeing dogs rather than deer, it sees wildlife less often. However, it doesn't seem to trust me all the time, causing it to report the appearance of a deer that is "probably" just a dog.

A perfect fit for spooky season

Gemini's smart home hallucinations also have a less comedic side. When Gemini mislabels an event clip, you can end up with some pretty distressing alerts. Imagine that you're out and about when your Gemini assistant hits you with a notification telling you, "A person was seen in the family room." A person roaming around the house you believed to be empty? That's alarming. Is it an intruder, a hallucination, a ghost? So naturally, you check the camera feed to find... nothing. An Ars Technica investigation confirms AI cannot detect ghosts. So a ghost in the machine? On several occasions, I've seen Gemini mistake dogs and totally empty rooms (or maybe a shadow?) for a person. It may be alarming at first, but after a few false positives, you grow to distrust the robot. Now, even if Gemini correctly identified a random person in the house, I'd probably ignore it. Unfortunately, this is the only notification experience for Gemini Home Advanced. "You cannot turn off the AI description while keeping the base notification," a Google spokesperson told me. They noted, however, that you can disable person alerts in the app.
Those are enabled when you turn on Google's familiar faces detection. Gemini often twists reality just a bit instead of creating it from whole cloth. A person holding anything in the backyard is doing yardwork. One person anywhere, doing anything, becomes several people. A dog toy becomes a cat lying in the sun. A couple of birds become a raccoon. Gemini likes to ignore things, too, like denying there was a package delivery even when there's a video tagged as "person delivers package." At the end of the day, Gemini is labeling most clips correctly and therefore produces mostly accurate, if sometimes unhelpful, notifications. The problem is the flip side of "mostly," which is still a lot of mistakes. Some of these mistakes compel you to check your cameras -- at least, before you grow weary of Gemini's confabulations. Instead of saving time and keeping you apprised of what's happening at home, it wastes your time. For this thing to be useful, inferential errors cannot be a daily occurrence.

Learning as it goes

Google says its goal is to make Gemini for Home better for everyone. The team is "investing heavily in improving accurate identification" to cut down on erroneous notifications. The company also believes that having people add custom instructions is a critical piece of the puzzle. Maybe in the future, Gemini for Home will be more honest, but it currently takes a lot of hand-holding to move it in the right direction. With careful tuning, you can indeed address some of Gemini for Home's flights of fancy. I see fewer deer identifications after tinkering, and a couple of custom instructions have made the Home Brief waste less space telling me when people walk into and out of rooms that don't exist. But I still don't know how to prompt my way out of Gemini seeing people in an empty room.
Despite its intention to improve Gemini for Home, Google is releasing a product that just doesn't work very well out of the box, and it misbehaves in ways that are genuinely off-putting. Security cameras shouldn't lie about seeing intruders, nor should they tell me I'm lying when they fail to recognize an event. The Ask Home bot has the standard disclaimer recommending that you verify what the AI says. You have to take that warning seriously with Gemini for Home. At launch, it's hard to justify paying for the $20 Advanced Gemini subscription. If you're already paying because you want the 60-day event history, you're stuck with the AI notifications. You can ignore the existence of Daily Brief, though. Stepping down to the $10 per month subscription gets you just 30 days of event history with the old non-generative notifications and event labeling. Maybe that's the smarter smart home bet right now. Gemini for Home is widely available for those who opted into early access in the Home app. So you can avoid Gemini for the time being, but it's only a matter of time before Google flips the switch for everyone. Hopefully it works better by then.

[2]

I let Gemini watch my family for the weekend -- it got weird

This weekend, I turned my home into a test lab for Google's new Gemini for Home AI and subjected my family to 72 hours of surveillance as it watched, interpreted, and narrated our every move. My purpose? To find out if an AI that sees everything is actually helpful or just plain creepy. "R unpacking items from a box," read one notification from the Nest camera on a shelf in the kitchen. "Jenni cuts a pie / B walks into the kitchen, washes dishes in the sink / Jenni gets a drink from the refrigerator," it continued. Sometimes, the alerts sounded like the start of a joke, "A dog, a person, and two cats walk into the room / Two chickens walk across the patio." But these weren't jokes. They were mostly accurate descriptions of the goings-on in and around my home, where I'd installed several Google Nest cameras powered by Gemini for Home. This is a new AI layer in the Google Home app that interprets footage from the cameras and -- combined with Nest's facial recognition feature -- delivers a written description of the events, including who or what is present, what they are doing, and sometimes even what they're wearing. For example, now, instead of alerts saying "animal detected on the porch," I get more descriptive versions telling me that it's two chickens or one dog. One of those requires immediate action on my part (my husband is not a fan of chickens pooping on our outdoor couch). The other I can ignore. Alerts like the one from the Nest Doorbell at 1AM, which clarifies it's my son at the front door trying to get in, are less anxiety-inducing than one that just says "person detected." Using AI to improve the constant barrage of notifications from security cameras is a major upgrade -- one that every manufacturer from Ring to Arlo to Wyze is chasing. But how much can we really trust AI to watch over our home? 
While Gemini didn't hallucinate strangers or wildlife in my home as others have reported, its daily summaries -- called Home Briefs -- in the Google Home app leaned more toward fiction than fact. This is where Gemini AI in the home becomes problematic. I don't record my family inside our home. I leave the security to outdoor cameras and set any indoor cameras to switch off when we're home. But I wanted to give Gemini as much information as possible, so I packed my home with cameras all set to record. I installed the new Nest Doorbell 2K, Nest Cam Indoor 2K, and Nest Cam Outdoor 2K (all wired), along with some earlier models compatible with Gemini. This provided surveillance for the major traffic routes inside and outside my house, as well as a large swath of my backyard. Gemini's descriptions and the Home Brief, which are currently in early access, require a $20 per month or $200 per year Google Home Premium Advanced subscription, which also includes 24/7 video recording. The Gemini AI only analyzes video, not audio, presumably because it's using a vision language model for processing. This also powers a feature in the Google Home app called Ask Home, which lets you search recorded video, something also offered by Ring. When I tested Ring's version of video search, I found it helpful for keeping tabs on my outdoor cat. Google's version is better, because it understood the context of my requests. When I asked both to show me the last time chickens were on my porch, Gemini surfaced the most recent sightings, whereas Ring showed me the best match, which was from three days ago. But beyond finding errant animals, I have struggled to find value in this blow-by-blow account of the actions in my home. And yes, it feels creepy. The real-time alerts were mostly accurate and straightforward. 
I only spotted two major errors: it mistook my dog for a fox in the backyard, and while I had a package sitting on my doorstep waiting to be picked up, anyone approaching the door became someone delivering a package. However, Gemini appears to have some selective interpretations. I tried to get it to say I was holding a knife -- even brandishing it threateningly at the camera. But it would just use words like chopping or carving in the descriptions, no mention of a knife. And it was a big knife! When my husband left the house carrying a shotgun, the alert said he was carrying a garden tool. It's unclear whether this is intentional (I've reached out to Google), but not identifying weapons seems like a significant oversight for a security-focused system. I would like to know if there is a person with a gun on my porch or wielding a knife in my home. However, the daily Home Briefs is where it got weird. Around 8:30 each night, the Google Home app presents its interpretation of the day's events in a new Activity Tab. You can customize this to focus on things you're most interested in; for me, that's animals and teenagers arriving home past curfew. The summaries were about 80 percent correct, with a few less concerning confusions -- it said a pizza oven was delivered when I was actually taking it away. But my main issue was with the heavy-handed editorializing in the summaries. Gemini took accurate real-time descriptions of events and turned them into a narrative full of declarative sentences that were simply incorrect. For example, on Halloween, the summary said "Jenni and R were seen interacting with the trick-or-treaters and enjoying the festive atmosphere." While I did pass out candy, my daughter R wasn't home. Another summary detailed how my husband and I had spent an enjoyable evening relaxing on the couch along with others. We were home alone. Home Brief is an interesting concept. 
Receiving an end-of-day summary rather than being constantly distracted by alerts throughout the day could help reduce notification fatigue. But it doesn't mean I can turn off the real-time notifications. I don't want to wait for a cheerful evening Home Brief to find out about the person who broke into my car at 6 in the morning, or that a fox got into the hen house. Google Home needs a way to prioritize urgent alerts over less important ones. It also needs to integrate these with my smart home so that I can use specific events -- such as chickens on the porch -- to trigger automated responses. But making stuff up as the Home Brief did is inexcusable for a system used for home security. Google Home notes that Gemini may make mistakes and offers short clips under the Home Brief so you can check its work, for example, to see that my husband and I were sitting alone in the living room after all. But while Gemini may be able to describe what's happening, it's these attempts at interpreting what matters that fall far short. While I found the AI descriptions for outdoor cameras useful, Gemini's "intelligence" has not convinced me to start running cameras inside the house. If I'm going to consider letting AI narrate my family's life, it will need to be a lot more useful and a lot more accurate.

[3]

AI Is Making a Lot of Big Promises, but It Can't Even Properly Identify My Cat

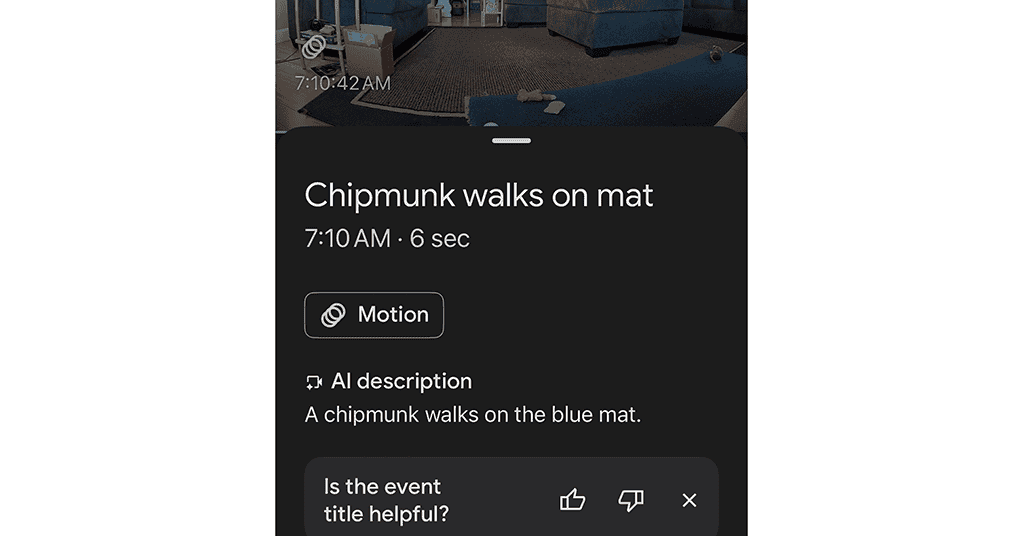

Experiencing a new level of intensely close monitoring -- and the AI-hallucination chaos that ended up snowballing -- left me slightly creeped out. And I'm more than a little annoyed that Google seems to expect me, and other camera owners, to do the hard work of training Gemini, while paying for my own labor. The promise of Gemini, according to Google, will be felt across the smart home, as it brings more natural and conversational voice interactions with smart speakers, fluid integration with other smart devices, and new features such as annotated descriptions and notifications for video recordings and the ability to use text or voice searches to locate specific details in video recordings. I installed the Google Nest Cam Outdoor (Wired, 2nd Gen) outside facing my driveway, and I put the Google Nest Cam Indoor (Wired, 3rd Gen) in my living room. I placed an older indoor Google Nest camera (which also received a Gemini boost) in my kitchen. I enabled Familiar Face alerts (an optional facial-recognition feature) and then also opted in to an early release of Gemini for Home, as well as a $20-per-month Advanced subscription (you do that part online), which adds AI-generated descriptions to smartphone notifications of video events, annotations of the action in the videos, the daily Home Brief, and the option to perform an Ask Home video search. Some good news: Automations work pretty well. Using the microphone icon on the Ask Home bar in the app, I told Gemini: "Every time someone walks in front of the living room camera after 6:00 p.m., turn on the deck lights." It created the task but still required a few manual clicks, and you can't delete or disable automations via Gemini yet. Overall, though, the experience is an improvement on a platform that always seemed wonky, and it did work. Less happy news: When it comes to Gemini's AI powers of observation, there's still a lot of hard work to be done. 
Things quickly went from being invasive but slightly boring to overwhelming and completely bananas. On the first day, the results were fairly typical but also a bit TMI. With pre-Gemini Nest cameras, motion would trigger a basic smartphone alert like "Person detected." With the new camera, I immediately got a more detailed description with Gemini's interpreted context ("Person walks into the room.") Since I had enabled Familiar Face detection and labeled my family's faces, notifications instantly became more specific, like "Rachel walks downstairs." Gemini was just getting warmed up. Within a day descriptions continued to add detail: "Person drinks water in the kitchen," "cat jumps on couch," or "cat plays with toy." With three cameras inside and outside my home, I was soon on the receiving end of a firehose of alerts: when items were tossed in the trash cans, when delivery people arrived, and when my cat groomed himself on top of my couch. For giggles I threw on a disguise to see what would happen, and Gemini impressively and accurately described the event as "A person wearing a cat mask and a black shirt walks into the room." Over just a couple of days of use, Gemini sent me dozens of banal descriptions about my household. But soon the hallucinations kicked in. Gemini notified me that our cat was scratching the couch and also that my husband was walking into the room, but a look at the footage showed that Kitty was innocent of the crime, and my husband was nowhere to be seen. Then there were descriptions that my husband and I were relaxing "along with others," though unless Gemini is able to see poltergeists, we were the only two in the house. Afterward, Gemini perceived my definitely orange cat as being several other colors, which made the AI assume I had a multitude of cats. And then Gemini insisted I had a dog, as well as a chipmunk, a hamster, and mice -- oh, lots of mice. To Gemini's mind, we were infested. 
This came as a great surprise to us (not least of all our cat). The Google Home app allows you to provide feedback on each video clip to improve accuracy, but only through the not-very-scientific thumbs-up/thumbs-down model. Over the course of three weeks, my cameras captured an average of 300 events on any given day. I can't imagine anyone having the time or patience to keep up with that. Although this whole experience was a source of entertainment for my co-workers and me, it quickly turned to concern. Security cameras aren't supposed to be funny or entertaining. They're safety devices tasked with protecting people and their possessions. People rely on cameras to be unbiased observers, but the addition of AI that interprets what it sees introduces bias -- and if it's inaccurate, it's no longer useful. Gemini for Home, in its current form, is plagued by AI hallucinations, constantly prone to mislabeling people, colors, activities, objects, and animals, and that makes it fundamentally untrustworthy. And the less you trust your security device, the less you rely on it, even when you absolutely should. We weren't the only ones having experiences like this. (As I write this, my Nest camera just labeled my 6-foot-3 husband as a child and claimed that his armful of laundry was a baby.) At best, Google's Gemini for Home is a rough beta program that's unfocused and unreliable -- and that makes it potentially dangerous when implemented on a security device. A Google spokesperson told us: "Gemini for Home (including AI descriptions, Home Brief, and Ask Home) is in early access, so users can try these new features and continue giving us feedback as we work to perfect the experience. As part of this, we are investing heavily in improving accurate identification. This includes incorporating user-provided corrections to generate more accurate AI descriptions. 
Since all Gemini for Home features rely on our underlying Familiar Faces identification, improving this accuracy also means improving the quality of Familiar Faces. This is an active area of investment and we expect these features to keep improving over time." We'll continue to test Gemini for Home, but for now we can't recommend it to anyone who relies on these cameras for security or peace of mind.

Google's new Gemini for Home AI service promises to revolutionize smart home security with AI-powered video analysis, but early users report significant accuracy issues including misidentifying pets as wildlife and hallucinating non-existent animals and people.

Google Launches AI-Powered Home Security with Mixed Results

Google has rolled out Gemini for Home, an AI-enhanced smart home service that promises to revolutionize how users interact with their security cameras and smart devices. The service, available through a $20 monthly subscription, integrates Google's Gemini AI model with existing Nest cameras to provide detailed, contextual descriptions of home activities rather than basic motion alerts [1].

Source: The Verge

The Advanced plan includes 60 days of video history, AI-generated daily summaries called "Home Briefs," and an "Ask Home" feature that allows users to search through footage using natural language queries. According to Google, Gemini sees only event clips, not continuous footage. The model also processes only the visual elements of those clips and ignores audio, which one reviewer speculates may be intentional so that conversations are not repeated back by the AI [1].

Promising Features Meet Reality Challenges

Early testing reveals both the potential and pitfalls of AI-powered home monitoring. The system successfully creates more informative notifications, transforming generic "animal detected" alerts into specific descriptions like "two chickens walk across the patio" or identifying family members by name through facial recognition [2].

The Ask Home feature demonstrates particular strength in creating smart home automations through natural language commands. Users can describe an automation in plain language, and the AI gets it right most of the time, an improvement over manual configuration in the old Home app [1]. One tester noted that a few manual clicks were still required and that automations cannot yet be deleted or disabled through Gemini [3].

Source: Ars Technica

Accuracy Issues Raise Security Concerns

However, extensive real-world testing has revealed significant accuracy problems that undermine the system's reliability as a security tool. Multiple users report consistent misidentification of common household pets, with dogs frequently labeled as deer and cats misidentified as various other animals including mice, hamsters, and chipmunks [1][3].

Source: NYT

More concerning are reports of the AI hallucinating people and animals that don't exist. Two testers received summaries describing household members relaxing "along with others" when only two people were home [2][3], and one was told about a dog, a chipmunk, a hamster, and mice, none of which lived in the home [3]. Another saw alerts about a person in rooms that turned out to be empty [1].

The system also demonstrates problematic gaps in threat detection. Testing revealed that Gemini failed to properly identify weapons, describing a shotgun as a "garden tool" and avoiding mention of knives even when prominently displayed [2].

User Experience and Training Burden

The volume of notifications generated by Gemini for Home can be overwhelming. One tester's cameras captured an average of 300 events per day over three weeks, including mundane activities like trash disposal and pet grooming. The system provides only basic thumbs-up/thumbs-down feedback on each clip for accuracy improvement, placing the burden of training on paying customers [3].

Daily Home Briefs, while conceptually useful, often contain inaccuracies that blend fact with fiction. One tester judged the summaries to be about 80 percent correct, with errors ranging from a pickup described as a delivery to a family member placed at an event she did not attend [2].

Privacy and Data Handling

Google states it does not retain user video for training purposes unless users explicitly "lend" it through an obscure option in the Home app, in which case the videos are kept for up to 18 months or until access is revoked. However, user interactions with Gemini, including prompts and feedback ratings, are used to refine the model. The company processes only event clips rather than continuous footage to manage computational costs [1].

Summarized by Navi
