Google's Trillium AI Chip: A Game-Changer for AI and Cloud Computing

3 Sources

[1]

5 reasons why Google's Trillium could transform AI and cloud computing - and 2 obstacles

Google's latest innovation, Trillium, marks a significant advancement in artificial intelligence (AI) and cloud computing. As the company's sixth-generation Tensor Processing Unit (TPU), Trillium promises to redefine the economics and performance of large-scale AI infrastructure. Alongside Gemini 2.0, an advanced AI model designed for the "agentic era," and Deep Research, a tool to streamline the management of complex machine learning queries, Trillium stands out as Google's most mature and ambitious effort to reshape its AI and cloud offerings.

Here are five compelling reasons why Trillium could be a game-changer for Google's AI and cloud strategy:

One of the most striking features of Trillium is its exceptional cost and performance metrics. Google claims that Trillium delivers up to 2.5 times better training performance per dollar and three times higher inference throughput than previous TPU generations. These gains are achieved through significant hardware enhancements, including doubled High Bandwidth Memory (HBM) capacity, a third-generation SparseCore, and a 4.7-fold increase in peak compute performance per chip. For enterprises looking to reduce the costs associated with training large language models (LLMs) like Gemini 2.0 and managing inference-heavy tasks such as image generation and recommendation systems, Trillium offers a financially attractive alternative.

Early adoption by companies like AI21 Labs underscores Trillium's potential. AI21 Labs, a long-standing user of the TPU ecosystem, has reported remarkable gains in cost-efficiency and scalability while using Trillium to train its large language models.

"At AI21, we constantly strive to enhance the performance and efficiency of our Mamba and Jamba language models. As long-time users of TPUs since v4, we're incredibly impressed with the capabilities of Google Cloud's Trillium.
The advancements in scale, speed, and cost-efficiency are significant. We believe Trillium will be essential in accelerating the development of our next generation of sophisticated language models, enabling us to deliver even more powerful and accessible AI solutions to our customers." - Barak Lenz, CTO, AI21 Labs

These preliminary results suggest that Trillium can deliver on Google's performance and cost claims, making it a compelling option for organizations already integrated into Google's infrastructure.

Trillium is engineered to handle massive AI workloads with remarkable scalability. Google reports 99% scaling efficiency across 12 pods (3,072 chips) and 94% efficiency across 24 pods for large models such as GPT-3 and Llama-2. This near-linear scaling ensures that Trillium can efficiently manage extensive training tasks and large-scale deployments. Moreover, Trillium's integration with Google Cloud's AI Hypercomputer allows for the seamless addition of over 100,000 chips into a single Jupiter network fabric, providing 13 Petabits/sec of bandwidth. This level of scalability is crucial for enterprises that require robust and efficient AI infrastructure to support their growing computational needs.

Maintaining high scaling efficiency across thousands of chips positions Trillium as a powerful contender for large-scale AI training tasks. This scalability ensures enterprises can expand their AI operations without compromising performance or incurring prohibitive costs, making Trillium an attractive solution for businesses with ambitious AI goals.

Trillium incorporates cutting-edge hardware technologies that set it apart from previous TPU generations and competitors.
Key innovations include doubled High Bandwidth Memory (HBM), which enhances data transfer rates and reduces bottlenecks, a third-generation SparseCore that optimizes computational efficiency by focusing resources on the most critical data paths, and a 4.7x increase in peak compute performance per chip, significantly boosting processing power. These advancements ensure that Trillium can handle the most demanding AI tasks, providing a solid foundation for future AI developments and applications.

These hardware improvements enhance performance and contribute to energy efficiency, making Trillium a sustainable choice for large-scale AI operations. By investing in advanced hardware, Google ensures that Trillium remains at the forefront of AI processing capabilities, capable of supporting increasingly complex and resource-intensive AI models.

Trillium's deep integration with Google Cloud's AI Hypercomputer is a significant advantage. By leveraging Google's extensive cloud infrastructure, Trillium optimizes AI workloads, making deploying and managing AI models more efficient. This seamless integration enhances the performance and reliability of AI applications hosted on Google Cloud, offering enterprises a unified and optimized solution for their AI needs. For organizations already invested in Google's ecosystem, Trillium provides a highly integrated and streamlined pathway to scale their AI initiatives effectively.

However, this tight integration also poses challenges in terms of portability and flexibility. Unlike Amazon's Trainium, which offers a hybrid approach allowing enterprises to use both NVIDIA GPUs and Trainium chips, or NVIDIA's GPUs that are highly portable across different cloud and on-premises environments, Trillium's single-cloud focus may limit its appeal to organizations seeking multi-cloud or hybrid solutions.
To address this, Google must demonstrate how Trillium can deliver superior performance and cost benefits that outweigh the flexibility offered by its competitors.

Trillium is not just a powerful TPU; it is part of a broader strategy that includes Gemini 2.0, an advanced AI model designed for the "agentic era," and Deep Research, a tool to streamline the management of complex machine learning queries. This ecosystem approach ensures that Trillium remains relevant and can support the next generation of AI innovations. By aligning Trillium with these advanced tools and models, Google is future-proofing its AI infrastructure, making it adaptable to emerging trends and technologies in the AI landscape.

This strategic alignment allows Google to offer a comprehensive AI solution beyond mere processing power. By integrating Trillium with cutting-edge AI models and management tools, Google ensures that enterprises can leverage the full potential of their AI investments, staying ahead in a rapidly evolving technological landscape.

While Trillium offers substantial advantages, Google faces stiff competition from industry leaders like NVIDIA and Amazon. NVIDIA's GPUs, particularly the H100 and H200 models, are renowned for their high performance and support for leading generative AI frameworks through the mature CUDA ecosystem. Additionally, NVIDIA's upcoming Blackwell B100 and B200 GPUs are expected to enhance low-precision operations vital for cost-effective scaling, maintaining NVIDIA's strong position in the AI hardware market.

On the other hand, Amazon's Trainium chips present a compelling alternative with a hybrid approach that combines flexibility and cost-effectiveness. Amazon's second-generation Trainium claims a 30-40% price-performance improvement over NVIDIA GPUs for training large language models (LLMs).
This hybrid strategy allows enterprises to use both NVIDIA GPUs and Trainium chips, minimizing risk while optimizing performance. Moreover, Amazon's ability to support multi-cloud and hybrid cloud environments offers greater flexibility than Trillium's single-cloud reliance.

Trillium's success will depend on proving that its performance and cost advantages can outweigh the ecosystem maturity and portability offered by NVIDIA and Amazon. Google must leverage its superior cost and performance metrics and explore ways to enhance Trillium's ecosystem compatibility beyond Google Cloud to attract a broader range of enterprises seeking versatile AI solutions.

Google's Trillium represents a bold and ambitious effort to advance AI and cloud computing infrastructure. With its superior cost and performance efficiency, exceptional scalability, advanced hardware innovations, seamless integration with Google Cloud, and alignment with future AI developments, Trillium has the potential to attract enterprises seeking optimized AI solutions. The early successes with adopters like AI21 Labs highlight Trillium's impressive capabilities and its ability to deliver on Google's promises.

However, the competitive landscape dominated by NVIDIA and Amazon presents significant challenges. To secure its position, Google must address ecosystem flexibility, demonstrate independent performance validation, and possibly explore multi-cloud compatibility. If successful, Trillium could significantly enhance Google's standing in the AI and cloud computing markets, offering a robust alternative for large-scale AI operations and helping enterprises leverage AI technologies more effectively and efficiently.

[2]

Google's new Trillium AI chip delivers 4x speed and powers Gemini 2.0

Google has just unveiled Trillium, its sixth-generation artificial intelligence accelerator chip, claiming performance improvements that could fundamentally alter the economics of AI development while pushing the boundaries of what's possible in machine learning.

The custom processor, which powered the training of Google's newly announced Gemini 2.0 AI model, delivers four times the training performance of its predecessor while using significantly less energy. This breakthrough comes at a crucial moment, as tech companies race to build increasingly sophisticated AI systems that require enormous computational resources.

"TPUs powered 100% of Gemini 2.0 training and inference," Sundar Pichai, Google's CEO, explained in an announcement post highlighting the chip's central role in the company's AI strategy. The scale of deployment is unprecedented: Google has connected more than 100,000 Trillium chips in a single network fabric, creating what amounts to one of the world's most powerful AI supercomputers.

How Trillium's 4x performance boost is transforming AI development

Trillium's specifications represent significant advances across multiple dimensions. The chip delivers a 4.7x increase in peak compute performance per chip compared to its predecessor, while doubling both high-bandwidth memory capacity and interchip interconnect bandwidth. Perhaps most importantly, it achieves a 67% increase in energy efficiency -- a crucial metric as data centers grapple with the enormous power demands of AI training.

"When training the Llama-2-70B model, our tests demonstrate that Trillium achieves near-linear scaling from a 4-slice Trillium-256 chip pod to a 36-slice Trillium-256 chip pod at a 99% scaling efficiency," said Mark Lohmeyer, VP of compute and AI infrastructure at Google Cloud.
This level of scaling efficiency is particularly remarkable given the challenges typically associated with distributed computing at this scale.

The economics of innovation: Why Trillium changes the game for AI startups

Trillium's business implications extend beyond raw performance metrics. Google claims the chip provides up to a 2.5x improvement in training performance per dollar compared to its previous generation, potentially reshaping the economics of AI development.

This cost efficiency could prove particularly significant for enterprises and startups developing large language models. AI21 Labs, an early Trillium customer, has already reported significant improvements. "The advancements in scale, speed, and cost-efficiency are significant," noted Barak Lenz, CTO of AI21 Labs, in the announcement.

Scaling new heights: Google's 100,000-chip AI supernetwork

Google's deployment of Trillium within its AI Hypercomputer architecture demonstrates the company's integrated approach to AI infrastructure. The system combines over 100,000 Trillium chips with a Jupiter network fabric capable of 13 petabits per second of bisectional bandwidth -- enabling a single distributed training job to scale across hundreds of thousands of accelerators.

"The growth of flash usage has been more than 900% which has been incredible to see," noted Logan Kilpatrick, a product manager on Google's AI studio team, during the developer conference, highlighting the rapidly increasing demand for AI computing resources.

Beyond Nvidia: Google's bold move in the AI chip wars

The release of Trillium intensifies the competition in AI hardware, where Nvidia has dominated with its GPU-based solutions. While Nvidia's chips remain the industry standard for many AI applications, Google's custom silicon approach could provide advantages for specific workloads, particularly in training very large models.
Industry analysts suggest that Google's massive investment in custom chip development reflects a strategic bet on the growing importance of AI infrastructure. The company's decision to make Trillium available to cloud customers indicates a desire to compete more aggressively in the cloud AI market, where it faces strong competition from Microsoft Azure and Amazon Web Services.

Powering the future: what Trillium means for tomorrow's AI

The implications of Trillium's capabilities extend beyond immediate performance gains. The chip's ability to handle mixed workloads efficiently -- from training massive models to running inference for production applications -- suggests a future where AI computing becomes more accessible and cost-effective.

For the broader tech industry, Trillium's release signals that the race for AI hardware supremacy is entering a new phase. As companies push the boundaries of what's possible with artificial intelligence, the ability to design and deploy specialized hardware at scale could become an increasingly critical competitive advantage.

"We're still in the early stages of what's possible with AI," Demis Hassabis, CEO of Google DeepMind, wrote in the company blog post. "Having the right infrastructure -- both hardware and software -- will be crucial as we continue to push the boundaries of what AI can do."

As the industry moves toward more sophisticated AI models that can act autonomously and reason across multiple modes of information, the demands on the underlying hardware will only increase. With Trillium, Google has demonstrated that it intends to remain at the forefront of this evolution, investing in the infrastructure that will power the next generation of AI advancement.
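The deployment figures quoted in this article can be put on a per-chip footing with some quick arithmetic. The sketch below is illustrative only: it assumes each "Trillium-256" slice in Lohmeyer's quote is a full 256-chip pod, and it divides the 13 petabits per second evenly across 100,000 chips, which is a rough average rather than a measured per-chip figure. The function names are hypothetical.

```python
# Assumption: one "Trillium-256" slice is a full 256-chip pod.
CHIPS_PER_SLICE = 256


def chips_in(slices: int) -> int:
    """Total chips in a job spanning the given number of 256-chip slices."""
    return slices * CHIPS_PER_SLICE


def per_chip_share_gbps(fabric_pbps: float, num_chips: int) -> float:
    """Even split of the fabric's quoted bandwidth across chips, in Gb/s."""
    gigabits_per_petabit = 1_000_000
    return fabric_pbps * gigabits_per_petabit / num_chips


small_job = chips_in(4)    # 4-slice configuration from the quote
large_job = chips_in(36)   # 36-slice configuration from the quote
share = per_chip_share_gbps(13, 100_000)

print(f"4 slices  -> {small_job:,} chips")
print(f"36 slices -> {large_job:,} chips ({large_job // small_job}x more)")
print(f"Average share of fabric bandwidth: {share:.0f} Gb/s per chip")
```

Under these assumptions the quoted scaling test spans roughly 1,000 to 9,000 chips, and the fabric figure works out to about 130 Gb/s per chip on average.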

[3]

Google Cloud moves its AI-focused Trillium chips into general availability - SiliconANGLE

Google LLC's cloud unit today announced that Trillium, the latest iteration of its TPU artificial intelligence chip, is now generally available. The launch comes seven months after the search giant first detailed the custom processor.

Trillium offers up to three times the inference throughput of Google's previous-generation chip. When used for AI training, it can provide two and a half times the performance. Google says that the chip delivers those speed increases using 67% less power, which means AI workloads can run more cost-efficiently in the company's cloud.

Trillium is partly made up of compute modules called TensorCores. Each such module includes, among others, circuits optimized to perform matrix multiplications, mathematical operations that AI models rely on heavily to process data. One reason Trillium is faster than its predecessor is that Google increased the size of the matrix multiplication units and boosted clock speeds.

The company's engineers also enhanced another Trillium building block called the SparseCore. It's a set of circuits optimized to process embeddings, the mathematical structures in which AI models keep their data. The new SparseCore is particularly adept at crunching large embeddings that hold a significant amount of information. Such supersized files are extensively used by the AI models that underpin product recommendation tools and search engines.

Trillium's logic circuits are supported by an HBM memory pool twice as large as the one in Google's previous-generation chip. It's also twice as fast. HBM is a type of pricey, high-speed RAM that is used in graphics cards because it allows AI models to quickly access the data they require for calculations.

Google also added other improvements, including to the interconnect through which Trillium chips exchange data with one another. The company doubled the component's bandwidth.
That means the chips can share AI models' data twice as fast with one another to speed up calculations.

In Google's data centers, Trillium processors are deployed as part of server clusters called pods that each feature 256 of the AI chips. Pods' hardware resources are provided to AI workloads in the form of so-called slices. Each slice corresponds to a subset of the AI chips in a pod.

With Trillium, Google Cloud introduced a new software capability for managing slices. The feature groups multiple slices into a pool of hardware resources called a collection. "This feature allows Google's scheduling systems to make intelligent job-scheduling decisions to increase the overall availability and efficiency of inference workloads," Mark Lohmeyer, Google Cloud's vice president and general manager of compute and AI infrastructure, wrote in a blog post.

Alongside Trillium chips, a pod includes a range of other components. A system called Titanium offloads certain computing tasks from a Trillium server's central processing unit to specialized chips called IPUs. Those chips perform the offloaded chores more efficiently than the CPU, which optimizes data center operations.

Operating large AI clusters is challenging partly because they can run into scalability limits. When engineers add more processors to a cluster, only a portion of the extra processors' compute capacity can be used by applications because of technical constraints. Moreover, the diminishing returns sometimes become more pronounced as the cluster grows in size.

Google says that the design of its Trillium-powered pods addresses those limitations. In one internal run, a set of 12 pods achieved 99% scaling efficiency when training an AI model with 175 billion parameters. During another test that involved Llama-2-70B, Google's infrastructure achieved 99% scaling efficiency.

"Training large models like Gemini 2.0 requires massive amounts of data and computation," Lohmeyer wrote.
"Trillium's near-linear scaling capabilities allow these models to be trained significantly faster by distributing the workload effectively and efficiently across a multitude of Trillium hosts."
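The article does not say how Google computes "scaling efficiency". A common definition, assumed here, is the measured speedup divided by the ideal (linear) speedup when chips are added. The throughput numbers in the sketch are hypothetical placeholders chosen to land on 99%; they are not Google's measurements.

```python
def scaling_efficiency(
    base_chips: int,
    base_throughput: float,
    scaled_chips: int,
    scaled_throughput: float,
) -> float:
    """Measured speedup divided by ideal linear speedup (assumed definition)."""
    ideal_speedup = scaled_chips / base_chips
    actual_speedup = scaled_throughput / base_throughput
    return actual_speedup / ideal_speedup


# Hypothetical measurements: one 256-chip pod versus twelve pods (3,072 chips).
one_pod_throughput = 1_000.0      # samples/sec, made-up baseline
twelve_pod_throughput = 11_880.0  # samples/sec, made-up scaled result

efficiency = scaling_efficiency(256, one_pod_throughput, 3_072, twelve_pod_throughput)
print(f"Scaling efficiency: {efficiency:.1%}")
```

With perfect scaling, twelve pods would deliver 12,000 samples per second in this example; the gap between that and the measured figure is the overhead the article describes as diminishing returns.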

Google unveils Trillium, its sixth-generation AI chip, offering significant performance improvements and cost efficiencies. This innovation could reshape the landscape of AI development and cloud computing.

Google Unveils Trillium: A Leap Forward in AI Processing

Google has introduced Trillium, its sixth-generation Tensor Processing Unit (TPU), marking a significant advancement in artificial intelligence (AI) and cloud computing. This new AI chip promises to redefine the economics and performance of large-scale AI infrastructure [1][2][3].

Unprecedented Performance and Efficiency

Trillium delivers up to 2.5 times better training performance per dollar and three times higher inference throughput than the previous TPU generation [1]. The chip achieves a 4.7x increase in peak compute performance per chip and a 67% increase in energy efficiency, addressing the growing power demands of AI training [2].

Key hardware improvements include:

- Doubled High Bandwidth Memory (HBM) capacity

- Third-generation SparseCore for optimized computational efficiency

- 4.7x increase in peak compute performance per chip [1][2]
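The sources describe the energy figure in two different ways: [2] reports a 67% increase in energy efficiency, while [3] words it as 67% less power. These are not the same thing, and a short calculation shows the difference. The sketch below also converts the 2.5x performance-per-dollar claim into the relative cost of a fixed training job. It assumes "efficiency" means work done per unit of energy and that the workload is held constant; the function names are hypothetical.

```python
def relative_cost(perf_per_dollar_gain: float) -> float:
    """Cost of a fixed job relative to the previous generation."""
    return 1.0 / perf_per_dollar_gain


def energy_saving(efficiency_gain: float) -> float:
    """Fractional energy reduction for a fixed job.

    efficiency_gain=0.67 means 67% more work per unit of energy (assumed meaning).
    """
    return 1.0 - 1.0 / (1.0 + efficiency_gain)


cost = relative_cost(2.5)     # fixed job costs 40% of what it did before
saving = energy_saving(0.67)  # roughly 40% less energy, not 67% less

print(f"Relative training cost at 2.5x perf/dollar: {cost:.0%}")
print(f"Energy saved at +67% efficiency: {saving:.1%}")
```

Read this way, a 67% efficiency gain corresponds to about 40% less energy for the same work, and "up to 2.5x" performance per dollar corresponds to at most a 60% reduction in the cost of a given training run.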

Scalability and Integration

Google has demonstrated Trillium's scalability, reporting 99% scaling efficiency across 12 pods (3,072 chips) when training large models such as GPT-3 and Llama-2 [1]. Integration with Google Cloud's AI Hypercomputer connects more than 100,000 chips in a single Jupiter network fabric that provides 13 petabits per second of bandwidth [1][2].

Powering Next-Generation AI Models

Trillium played a crucial role in training Google's newly announced Gemini 2.0 AI model. "TPUs powered 100% of Gemini 2.0 training and inference," stated Sundar Pichai, Google's CEO [2]. This highlights Trillium's capability to handle the most demanding AI tasks and support future AI developments.

Economic Implications for AI Development

The cost efficiency of Trillium could reshape the economics of AI development, particularly benefiting enterprises and startups working on large language models. AI21 Labs, an early adopter, reported significant improvements in scale, speed, and cost-efficiency [1][2].

Competitive Landscape

Trillium's release intensifies competition in the AI hardware market, where NVIDIA has long dominated with its GPU-based solutions. Google's custom silicon approach could provide advantages for specific workloads, especially in training very large models [2].

Challenges and Considerations

Despite its impressive capabilities, Trillium faces some challenges:

- Single-cloud focus: Unlike some competitors offering hybrid or multi-cloud solutions, Trillium's tight integration with Google Cloud may limit its appeal to organizations seeking more flexible deployment options [1].

- Adoption curve: As a new technology, it may take time for the broader AI community to fully leverage Trillium's capabilities and integrate it into existing workflows.

Future Implications

The release of Trillium signals a new phase in the race for AI hardware supremacy. As companies push the boundaries of AI capabilities, the ability to design and deploy specialized hardware at scale could become a critical competitive advantage [2].

Demis Hassabis, CEO of Google DeepMind, emphasized, "We're still in the early stages of what's possible with AI. Having the right infrastructure -- both hardware and software -- will be crucial as we continue to push the boundaries of what AI can do" [2].

As the industry moves toward more sophisticated AI models capable of autonomous action and multi-modal reasoning, Trillium positions Google at the forefront of this evolution, providing the infrastructure to power the next generation of AI advancements [2][3].

Summarized by

Navi

Related Stories

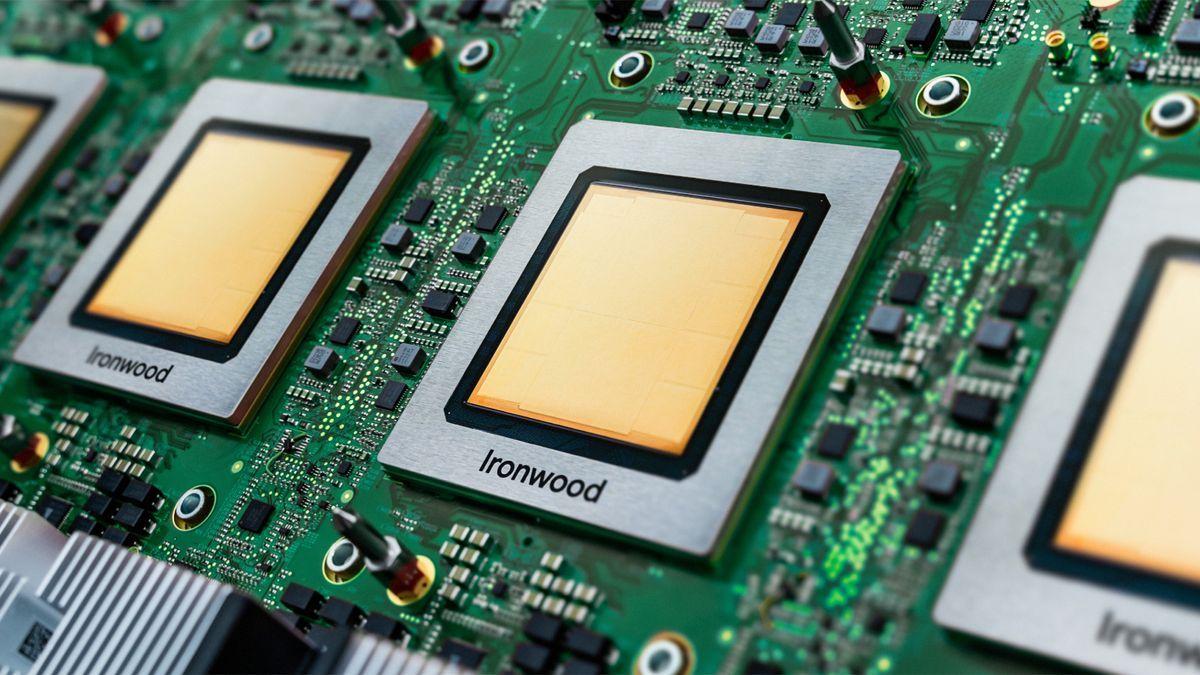

Google Unleashes Ironwood TPU v7: Seventh-Generation AI Chips Challenge Nvidia's Dominance

06 Nov 2025•Technology

Google Cloud Unveils AI Agent Strategy at Cloud Next Conference

10 Apr 2025•Technology

AWS Unveils Next-Gen Trainium3 AI Chip and Launches Trainium2-Powered Cloud Instances

04 Dec 2024•Technology

Recent Highlights

1

Google Gemini 3.1 Pro doubles reasoning score, beats rivals in key AI benchmarks

Technology

2

Meta strikes up to $100 billion AI chips deal with AMD, could acquire 10% stake in chipmaker

Technology

3

Pentagon threatens Anthropic with supply chain risk label over AI safeguards for military use

Policy and Regulation