Privacy Concerns Arise as Thousands of Grok AI Chatbot Conversations Become Publicly Searchable

12 Sources

[1]

Thousands of Grok chats are now searchable on Google | TechCrunch

Hundreds of thousands of conversations that users had with Elon Musk's xAI chatbot Grok are easily accessible through Google Search, reports Forbes. Whenever a Grok user clicks the "share" button on a conversation with the chatbot, it creates a unique URL that the user can use to share the conversation via email, text or on social media. According to Forbes, those URLs are being indexed by search engines like Google, Bing, and DuckDuckGo, which in turn lets anyone look up those conversations on the web. Users of Meta and OpenAI's chatbots were recently affected by a similar oversight, and like those cases, the chats leaked by Grok give us a glimpse into users' less-than-respectable desires - questions about how to hack crypto wallets; dirty chats with an explicit AI persona; and asking for instructions on cooking meth. xAI's rules prohibit the use of its bot to "promote critically harming human life" or developing "bioweapons, chemical weapons, or weapons of mass destruction," though that obviously hasn't stopped users from asking Grok for help with such things anyway. According to conversations made accessible by Google, Grok gave users instructions on making fentanyl, listed various suicide methods, handed out bomb construction tips, and even a detailed plan for the assassination of Elon Musk. xAI did not immediately respond to a request for comment. We've also asked when xAI began indexing Grok conversations. Late last month, ChatGPT users sounded the alarm that their chats were being indexed on Google, which OpenAI described as a "short-lived experiment." Soon afterwards, Musk stated publicly that Grok had "no such sharing feature" and that the service "prioritize[s] privacy."

[2]

Hundreds of Thousands of User Chats with AI Chatbot Grok Are Now Public

Alex Valdes from Bellevue, Washington has been pumping content into the Internet river for quite a while, including stints at MSNBC.com, MSN, Bing, MoneyTalksNews, Tipico and more. He admits to being somewhat fascinated by the Cambridge coffee webcam back in the Roaring '90s. Does what's said between you and your AI chat stay between you and your AI chat? Nope. According to a report by Forbes, Elon Musk's AI assistant Grok published more than 370,000 chats on the Grok website. Those URLs, which were not necessarily intended for public consumption by users, were then indexed by search engines and entered the public sphere. It wasn't just chats. Forbes reported that uploaded documents, such as photos, spreadsheets and other documents, were also published. Representatives for xAI, which makes Grok, didn't immediately respond to a request for comment. The publishing of Grok conversations is the latest in a series of troubling reports that should spur chatbot users to be overly cautious about what they share with AI assistants. Don't just gloss over the Terms and Conditions, and be mindful of the privacy settings. Earlier this month, 404 Media reported on a researcher who discovered more than 130,000 chats with AI assistants Claude, ChatGPT and others were readable on Archive.org. When a Grok chat is finished, the user can hit a share button to create a unique URL, allowing the conversation to be shared with others. According to Forbes, "hitting the share button means that a conversation will be published on Grok's website, without warning or a disclaimer to the user." These URLs were also made available to search engines, allowing anyone to read them. There is no disclaimer that these chat URLs will be published for the open internet. 
But the Terms of Service outlined on the Grok website reads: "You grant, an irrevocable, perpetual, transferable, sublicensable, royalty-free, and worldwide right to xAI to use, copy, store, modify, distribute, reproduce, publish, display in public forums, list information regarding, make derivative works of, and aggregate your User Content and derivative works thereof for any purpose..."

[3]

Your Chats With AI Chatbot Grok May Be Visible to All

If you've ever had a conversation with AI assistant Grok, there's a chance it's available on the internet, for all to see. According to a report by Forbes, Elon Musk's AI assistant published more than 370,000 chats on the Grok website. Those URLs, which weren't necessarily intended by users for public consumption, were then indexed by search engines and entered the public sphere. While many of the conversations with Grok were reportedly benign, some were explicit and appeared to violate its own terms of service, including offering instructions on manufacturing illicit drugs like fentanyl and methamphetamine, constructing a bomb, and methods of suicide. It wasn't just chats. Forbes reported that uploaded documents, such as photos, spreadsheets and other documents, were also published. Representatives for xAI, which makes Grok, didn't respond to a request for comment. The publishing of Grok conversations is the latest in a series of troubling reports that should spur chatbot users to be overly cautious about what they share with AI assistants. Don't just gloss over the Terms and Conditions, and be mindful of the privacy settings. Earlier this month, 404 Media reported on a researcher who discovered more than 130,000 chats with AI assistants Claude, ChatGPT and others were readable on Archive.org. When a Grok chat is finished, the user can hit a share button to create a unique URL, allowing the conversation to be shared with others. According to Forbes, "hitting the share button means that a conversation will be published on Grok's website, without warning or a disclaimer to the user." These URLs were also made available to search engines, allowing anyone to read them. 
There is no disclaimer that these chat URLs will be published for the open internet. But the Terms of Service outlined on the Grok website reads: "You grant, an irrevocable, perpetual, transferable, sublicensable, royalty-free, and worldwide right to xAI to use, copy, store, modify, distribute, reproduce, publish, display in public forums, list information regarding, make derivative works of, and aggregate your User Content and derivative works thereof for any purpose..." But there is a measure of good news for users who accidentally hit the share button or were unaware their queries would be shared far and wide. Grok has a tool to help users manage their chat histories. Going to https://grok.com/share-links will present a history of your shared chats. Simply click the Remove button to the right of each chat to delete it from your chat history. It wasn't immediately clear if that would have any effect on what's already indexed in search engines. E.M Lewis-Jong, director at the Mozilla Foundation, advises chatbot users to keep a simple directive in mind: Don't share anything you want to keep private, such as personal ID data or other sensitive information. "The concerning issue is that these AI systems are not designed to transparently inform users how much data is being collected or under which conditions their data might be exposed," Lewis-Jong says. "This risk is higher when you consider that children as young as 13 years old can use chatbots like ChatGPT." Lewis-Jong adds that AI assistants such as Grok and ChatGPT should be clearer about the risks users are taking when they use these tools. "AI companies should make sure users understand that their data could end up on public platforms," Lewis-Jong says. "AI companies are telling people that the AI might make mistakes -- this is just another health warning that should also be implemented when it comes to warning users about the use of their data." 
According to data from SEO and thought leadership marketing company First Page Sage, Grok has 0.6% of market share, far behind leaders ChatGPT (60.4%), Microsoft Copilot (14.10%) and Google Gemini (13.5%).

[4]

Grok's Share Button Is a Privacy Disaster. Here's Why You Should Avoid It

Elon Musk's Grok AI chatbot has published thousands of user conversations online, most likely without their knowledge. As Forbes reports, the issue stems from the chatbot's share button. Every time a user clicks the button from the bottom of a chat window, it generates a unique, shareable URL. While most users might assume it's a private link only accessible to the people they share it with, the link actually gets published on Grok's website, making the content discoverable on search engines. Grok doesn't give you a warning. "Copied shared link to clipboard" is all I could see on the web. As CNET points out, Grok's terms of service grant the website "an irrevocable, perpetual, transferable, sublicensable, royalty-free, and worldwide right to xAI to use, copy, store, modify, distribute, reproduce, publish, display in public forums, list information regarding, make derivative works of, and aggregate your User Content and derivative works thereof for any purpose." If you use Grok, chances are you have already agreed to this condition. So, what's the solution? For now, to stop Grok from publishing your chats online, avoid using its share button -- at least until xAI, the chatbot's parent company, changes its stance on the feature. Additionally, head to grok.com/share-links to see which of your chats are currently accessible through public links. The page also lets you revoke access to those links by clicking on the "Remove" button next to them. While this action makes the links unusable, it's unclear if it also de-indexes them from search engines. We reached out to xAI for clarification. As of Wednesday, over 370,000 Grok chats were searchable on Google, according to Forbes. In some of them, the chatbot is seen advising users on how to create illegal drugs, self-executing malware code, bombs, and so on. 
Meta AI also shares chats to a public feed, though users have to tap at least two buttons to do so. Still, after some confusion, Meta added a more obvious warning about content being posted. An optional OpenAI feature, meanwhile, allowed the company to share ChatGPT conversations with search engines. However, following backlash, the feature was disabled earlier this month.

[5]

Hundreds of thousands of Grok chats exposed in Google results

It has led one expert to describe AI chatbots as a "privacy disaster in progress". The appearance of Grok chats in search engine results was first reported by tech industry publication Forbes, which counted more than 370,000 user conversations on Google. Among chat transcripts seen by the BBC were examples of Musk's chatbot being asked to create a secure password, provide meal plans for weight loss and answer detailed questions about medical conditions. Some indexed transcripts also showed users' attempts to test the limits on what Grok would say or do. In one example seen by the BBC, the chatbot provided detailed instructions on how to make a Class A drug in a lab. It is not the first time that people's conversations with AI chatbots have appeared more widely than they perhaps initially realised when using "share" functions. OpenAI recently rowed back an "experiment" which saw ChatGPT conversations appear in search engine results when shared by users. A spokesperson told BBC News at the time it had been "testing ways to make it easier to share helpful conversations, while keeping users in control". They said user chats were private by default and users had to explicitly opt-in to sharing them. Earlier this year, Meta faced criticism after shared user conversations with its chatbot Meta AI appeared in a public "discover" feed on its app.

[6]

370k Grok AI chats made public without user consent - 9to5Mac

More than 370,000 Grok AI chats have been published on the Grok website and indexed by search engines, making them public. In addition to the interactive chats themselves, Elon Musk's xAI company also published photos, spreadsheets and other uploaded documents ... Grok has a share button which creates a unique URL, allowing users to share the conversation with someone else by sending them the link. However, those links were made available to search engines, meaning that anybody could be given access to chats rather than just those who were sent the link. Forbes reports that users were not given any warning about the fact that the contents of their chats and uploaded documents would be made available to the public. On Musk's Grok, hitting the share button means that a conversation will be published on Grok's website, without warning or a disclaimer to the user. Today, a Google search for Grok chats shows that the search engine has indexed more than 370,000 user conversations with the bot. The shared pages revealed conversations between Grok users and the LLM that range from simple business tasks like writing tweets to generating images of a fictional terrorist attack in Kashmir and attempting to hack into a crypto wallet. Forbes reviewed conversations where users asked intimate questions about medicine and psychology; some even revealed the name, personal details and at least one password shared with the bot by a Grok user. Image files, spreadsheets and some text documents uploaded by users could also be accessed via the Grok shared page. The contents of some of these chats also reveal that Grok was not following xAI's own stated rules on prohibited drugs and topics. Grok offered users instructions on how to make illicit drugs like fentanyl and methamphetamine, code a self-executing piece of malware, construct a bomb, and methods of suicide. Grok also offered a detailed plan for the assassination of Elon Musk. It's not the first time something like this has happened. 
ChatGPT transcripts were also appearing in Google search results, although in those cases users had agreed to make their conversations discoverable to others. The company quickly abandoned this after describing it as a short-lived experiment. The Grok revelation is particularly ironic after Musk last year made baseless privacy claims against the Apple and OpenAI partnership.

[7]

Hundreds of thousands of Grok chatbot conversations are showing up in Google Search -- here's what happened

If you've ever chatted with Grok, the Elon Musk-backed AI assistant from xAI, and hit the "Share" button, your conversation might be searchable on Google. According to a recent Forbes report, more than 300,000 Grok conversations have been indexed by search engines, exposing user chats that were likely intended to be shared privately, not broadcast online. This comes just weeks after ChatGPT users discovered their shared chats were searchable on Google too. This discovery once again raises serious concerns about how AI platforms handle content sharing and user privacy. Built by Elon Musk's xAI, Grok includes a feature that lets users share conversations through a unique link. Once users share, those chats are no longer private, and are getting picked up by Google's web crawlers. From there, the chats are turning up in search results, meaning anyone can access and read them. In both the ChatGPT cases and this one, the issue comes down to how "shareable" URLs are structured and whether AI companies are doing enough to protect users who may not realize their content is publicly viewable. The implications go beyond just a few embarrassing screenshots. Some Grok chats could contain sensitive, personal or even private details. Many users likely had no idea their shared conversations were being published for the world to see until now. This privacy breach highlights a growing challenge in AI product design: how to balance transparency and social sharing with greater privacy protections. When users hit "Share," they should be clearly informed that their link will be publicly accessible, and AI companies need to use best practices like noindex tags or restricted access URLs to avoid surprises like this. This isn't the first controversy Grok has faced. The chatbot has come under fire in recent months for questionable responses and controversial content. 
But this latest issue hits at a core trust concern: how user data is managed and whether privacy is truly being prioritized. As more people turn to AI tools for personal, educational, wellness and emotional support, platforms like xAI will need to step up their safeguards or risk eroding the very trust they're trying to build. Although the damage is already done and deleting the chats won't make them completely disappear from searches, users can still take action. Start by safeguarding your chats by not sharing them until Grok has more secure privacy settings. Screenshots are another option if you must share your chats with others.
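
For readers wondering what the "noindex" best practice mentioned above looks like in practice, here is a minimal sketch in Python. It is not xAI's or any other vendor's code: the function names `share_page_headers` and `render_share_page` are hypothetical, and the only real conventions used are the `X-Robots-Tag` HTTP response header and the `robots` meta tag, both of which major search engines document as ways to ask crawlers not to index a page.

```python
from html import escape

# Directive asking crawlers not to index this page or follow its links.
ROBOTS_DIRECTIVE = "noindex, nofollow"


def share_page_headers() -> dict:
    """HTTP response headers for a shared-chat page (hypothetical helper)."""
    return {
        "Content-Type": "text/html; charset=utf-8",
        # Header form of the directive; also works for non-HTML files.
        "X-Robots-Tag": ROBOTS_DIRECTIVE,
    }


def render_share_page(title: str, transcript: str) -> str:
    """Render a shared chat as HTML with a robots meta tag in the head."""
    return (
        "<!doctype html>\n"
        "<html><head>\n"
        f'<meta name="robots" content="{ROBOTS_DIRECTIVE}">\n'
        f"<title>{escape(title)}</title>\n"
        "</head><body>\n"
        f"<pre>{escape(transcript)}</pre>\n"
        "</body></html>\n"
    )


if __name__ == "__main__":
    print(share_page_headers())
    print(render_share_page("Shared chat", "User: hello\nBot: hi"))
```

A noindex directive only keeps a page out of search results; anyone who has the link can still open it, so it complements rather than replaces a clear warning to the user at the moment of sharing.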

[8]

Grok made hundreds of thousands of chats public, searchable on Google

Conversations with Grok, the chatbot from Elon Musk's xAI, can be publicly searched on Google depending on which buttons were pressed, a new report from Forbes revealed. Grok's "share" button -- what you might use to email or text a chatbot conversation -- creates a unique URL that is made available to search engines like Google, the report states. That effectively means the "share" button publishes your conversation for the world. Forbes reported there is no warning of this feature and that thus far more than 370,000 conversations have been indexed by Google. Some of the conversations reportedly contained sensitive information, such as medical questions, personal details, and, in at least one instance, a password. Forbes noted it had not received a response to a request for comment. Mashable has reached out to xAI, too, and did not immediately receive a response, but will update the story accordingly if we hear back. Musk's Grok isn't the only AI chatbot to make chats public, however. As we covered at Mashable earlier this month, ChatGPT similarly made chats searchable on Google after users clicked the "share" button. OpenAI quickly reversed course, however, after a backlash. "This was a short-lived experiment to help people discover useful conversations," OpenAI Chief Information Security Officer Dane Stuckey wrote on X at the time. "This feature required users to opt-in, first by picking a chat to share, then by clicking a checkbox for it to be shared with search engines (see below)." Stuckey said they removed the feature over worries folks would accidentally share information with search engines. It appears, according to the report from Forbes, that Grok not only makes conversations searchable -- it's also not an opt-in experience. In other words, the second you "share" a conversation, you share it with the world.

[9]

Your Grok chats are now appearing in Google search - here's how to stop them

The problem arose because Grok's share button didn't add noindex tags to prevent search engine discovery. If you've been spending time talking to Grok, your conversations might be visible with a simple Google search, as first uncovered in a report from Forbes. More than 370,000 Grok chats became indexed and searchable on Google without users' knowledge or permission when they used Grok's share button. The unique URL created by the button didn't mark the page as something for Google to ignore, making it publicly visible with a little effort. Passwords, private health issues, and relationship drama fill the conversations now publicly available. Even more troubling questions for Grok about making drugs and planning murders appear as well. Grok transcripts are technically anonymized, but if there are identifiers, people could work out who was raising the petty complaints or criminal schemes. These are not exactly the kind of topics you want tied to your name. Unlike a screenshot or a private message, these links have no built-in expiration or access control. Once they're live, they're live. It's more than a technical glitch; it makes it hard to trust the AI. If people are using AI chatbots as ersatz therapy or romantic roleplaying, they don't want the conversation to leak. Finding your deepest thoughts alongside recipe blogs in search results might drive you away from the technology forever. So how do you protect yourself? First, stop using the "share" function unless you're completely comfortable with the conversation going public. If you've already shared a chat and regret it, you can try to find the link again and request its removal from Google using their Content Removal Tool. But that's a cumbersome process, and there's no guarantee it will disappear immediately. If you talk to Grok through the X platform, you should also adjust your privacy settings. If you disable allowing your posts to be used for training the model, you might have more protection. 
That's less certain, but the rush to deploy AI products has made a lot of the privacy protections fuzzier than you might think. If this issue sounds familiar, that's because it's only the latest example of AI chatbot platforms fumbling user privacy while encouraging individual sharing of conversations. OpenAI recently had to walk back an "experiment" where shared ChatGPT conversations began showing up in Google results. Meta faced backlash of its own this summer when people found out that their discussions with the Meta AI chatbot could pop up in the app's discover feed. Conversations with chatbots can read more like diary entries than like social media posts. And if the default behavior of an app turns those into searchable content, users are going to push back, at least until the next time. As with Gmail ads scanning your inbox or Facebook apps scraping your friends list, the impulse is always to apologize after a privacy violation. The best-case scenario is that Grok and others patch this quickly. But AI chatbot users should probably assume that anything shared could be read by someone else eventually. As with so many other supposedly private digital spaces, there are a lot more holes than anyone can see. And maybe don't treat Grok like a trustworthy therapist.
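
The point above about share links having "no built-in expiration or access control" can be made concrete with a short sketch. The Python below shows one generic way a service could issue share tokens that stop working after a set time, using an HMAC signature from the standard library. Everything here is an illustrative assumption: the names `make_share_token` and `verify_share_token`, the token layout and the placeholder secret are hypothetical, and nothing in the reporting suggests Grok works this way.

```python
import hashlib
import hmac
import time
from typing import Optional

# Placeholder secret for illustration only; a real service would load
# this from secure configuration.
SECRET_KEY = b"example-secret-do-not-use"


def _sign(payload: str) -> str:
    """Hex HMAC-SHA256 signature of the payload."""
    return hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()


def make_share_token(chat_id: str, ttl_seconds: int,
                     now: Optional[float] = None) -> str:
    """Create a token of the form '<chat_id>.<expiry>.<signature>'."""
    current = time.time() if now is None else now
    expires = int(current) + ttl_seconds
    payload = f"{chat_id}.{expires}"
    return f"{payload}.{_sign(payload)}"


def verify_share_token(token: str,
                       now: Optional[float] = None) -> Optional[str]:
    """Return the chat id if the token is authentic and unexpired, else None."""
    current = time.time() if now is None else now
    try:
        chat_id, expires_text, signature = token.rsplit(".", 2)
        expires = int(expires_text)
    except ValueError:
        return None  # malformed token
    expected = _sign(f"{chat_id}.{expires}")
    # Constant-time comparison to avoid leaking signature bytes.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    if current >= expires:
        return None  # link has expired
    return chat_id


if __name__ == "__main__":
    token = make_share_token("chat-123", ttl_seconds=3600)
    print(verify_share_token(token))
```

An expiring link limits how long a leaked or indexed URL stays useful, but it does not stop a page from being copied or archived while the link is still live.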

[10]

A Huge Number of Grok AI Chats Just Leaked, and Their Contents Are So Disturbing That We're Sweating Profusely

Brace yourselves, because hundreds of thousands of user conversations with Elon Musk's notoriously foul-mouthed Grok chatbot have hit the internet, Forbes reports -- and some of them get into absolutely unholy territory. The more than 370,000 chats were made public after users clicked a "share" button that created a link to their chatbot convos, unaware that by doing so, it was allowing them to be indexed by search engines like Google and Bing. Some of them were clearly never meant to see the light of day. Forbes found that Grok gave instructions on how to cook up drugs like fentanyl and meth. It also provided the steps to code a self-executing piece of malware, build a bomb, and carry out various forms of suicide. It even created an in-depth plan on how to assassinate Musk himself -- which is not the first time the chatbot's rebelled against its creator. It's worth noting that at least some of the extreme conversations are likely the result of would-be red teamers stress-testing the bot's safety measures, though some may be legit, too. In any case, the conversations are in flagrant violation of xAI's terms of service, which explicitly prohibit using Grok for "critically harming human life" or to develop weapons of mass destruction, Forbes notes. Grok's behavior is far from being an outlier in the industry. AI companies have struggled to prevent their models from breaking their own guardrails, which can be easily circumvented by clever human users. Grok, however, faces heightened scrutiny due to Musk framing it as an anti-woke alternative to mainstream AI. He's also frequently declared his intent to "fix" Grok so that it peddles views more in line with his own extremist beliefs. Perhaps uncoincidentally, Grok has had multiple episodes where it's gone horrifically off the rails, which include styling itself "MechaHitler" and spreading claims of "white genocide" in response to completely unrelated conversations. 
Grok isn't the first chatbot to have its chats surface on search engines, either. OpenAI created a near identical incident earlier this summer when experts realized that thousands of ChatGPT conversations were also indexed by Google as a result of users not realizing that creating a share link was making their exchanges "discoverable" by search engines. After the news caught on, OpenAI removed the feature to make the chats discoverable. An analysis of the ChatGPT dump revealed its own controversies, including an exchange in which the chatbot provided advice to a purported lawyer on how to "displace a small Amazonian indigenous community" in order to build a dam on their territory. Another investigation of the public dump revealed dozens of exchanges where the AI dangerously fed into a user's apparently paranoid or delusional beliefs, including convincing someone that they were on the verge of inventing a new physics. "I was surprised that Grok chats shared with my team were getting automatically indexed on Google, despite no warnings of it, especially after the recent flare-up with ChatGPT," Nathan Lambert, a machine learning researcher at the Allen Institute for AI, told Forbes. But where others are shocked at the privacy risks these features pose, marketers smell an opportunity. Forbes found that search engine optimization (SEO) spammers are discussing exploiting shared Grok conversations to boost the visibility of businesses, providing yet another avenue for using AI to manipulate search results while ruining the internet for everyone else in the process. In fact, it's already happening. Satish Kumar, CEO of the SEO firm Pyrite Technologies, showed Forbes how one company used Grok to change the results for a Google search for a service that writes a PhD dissertation for you. "People are actively using tactics to push these pages into Google's index," Kumar told the publication.

[11]

Thousands of Grok conversations have been made public on Google Search

Thousands of user chats with Elon Musk's AI chatbot Grok are now publicly available on Google. More than 370,000 Grok chats have been indexed by search engines, exposing hundreds of sensitive prompts that include medical and psychological questions, business details, and at least one password. The chats have been exposed due to Grok's "share" feature -- which users might use to send a record of a conversation to another person, or even to their own email. The share feature creates a unique URL for the conversation. Those links were automatically published on Grok's website and left open to search engines, seemingly without users' knowledge. The disclosure of the chats was first reported by Forbes. Some of the transcripts available on Google Search that were reviewed by Fortune contained chats that went against Grok's terms of service. One chat showed Grok telling a user how to make a Class A drug, while another offered detailed instructions on how to assassinate Elon Musk. xAI's terms of service prohibit using Grok for "critically harming human life." It's not the first time users have found conversations with AI chatbots that they thought were private ending up online. OpenAI briefly experimented with a similar feature that allowed users to share their ChatGPT conversations via a link, which also made those conversations discoverable by search engines like Google. Despite the feature being opt-in and containing a disclaimer stating that chats could end up in search results, over 4,500 shared conversations were indexed by Google, including some that contained highly personal or sensitive information. OpenAI pulled the feature entirely shortly after the publicly-indexed chats gained media attention. The company called it a short-lived experiment and acknowledged that it "introduced too many opportunities for folks to accidentally share things they didn't intend to." 
At the time, xAI CEO Musk used the incident to promote Grok as an alternative to ChatGPT, taking to X to post: "Grok FTW." Unlike OpenAI, Grok's "Share" function does not include a disclaimer that chats could be shared publicly. Meta's AI app also has a similar share feature that publishes chats directly to the app's Discover feed, which also led them to be indexed by Google. Many users did not realize that their chats were being shared to this feed and the chats often contained highly-personal and sensitive information. Meta still allows shared chatbot conversations to be indexed by search engines, according to Business Insider. Google also previously permitted chats with its AI chatbot, Bard, to appear in search results, but removed them in 2023. Privacy experts have warned users that chats with AI bots might not be as private as they appear to be. Oxford Internet Institute's Luc Rocher told the BBC that AI chatbots are "a privacy disaster in progress." Once conversations are online, they are hard to remove completely. Many casual AI users may not fully understand how their data is stored, shared, or used. For example, two users who had their Grok chats indexed by Google were unaware that they had been public when they were identified and contacted by Forbes. In jurisdictions like the EU, mishandling personal information may violate provisions of the bloc's strict data privacy law, GDPR, which includes principles like data minimization, informed consent, and the right to be forgotten. Users increasingly treat chatbots as confidants, feeding them sensitive details like health information, financial details, or relationship issues they likely would not want to be public. Even if chats are anonymized, prompts often contain identifying details that could be traced back to users or mined by malicious actors, data brokers, or hackers for targeted campaigns. Some people have already identified a business case for Grok's public chats. 
According to Forbes, some marketing professionals are discussing ways to exploit shared Grok conversations to boost business visibility. As the Grok conversations are published as web pages with individual URLs, businesses could potentially script chats that mention their products alongside keywords in order to game search results or create backlinks. It's unclear how successful this would be, however. The tactic might pass on some SEO value and help to manipulate Google's rankings, but it could also be perceived as spam by Google and ultimately hurt visibility. Representatives for xAI did not immediately respond to a request for comment from Fortune.

[12]

Despite Grok's claims to the contrary, over 370,000 xAI conversations have reportedly been openly listed on search engines, with responses said to include 'a detailed plan for the assassination of Elon Musk'

According to a recent Forbes report, Elon Musk's xAI has made hundreds of thousands of Grok chatbot conversations searchable on a variety of search engines, including Google, Bing, and DuckDuckGo, without warning its users their chats were subject to publication. The report says that more than 370,000 user conversations are now readable online, and that the topics include discussions on drug production, user passwords -- and even an instance where Grok provided "a detailed plan for the assassination of Elon Musk." Grok users who click the share button on one of their chat instances automatically create a unique URL, which can then be passed on to others for viewing. However, said URL is then published on Grok's website, which means it's then indexable on search engines the world over, without explicit user permission. A quick Google search for some of the topics involved confirms that many Grok conversations are searchable, although given the subject matter, I would highly advise not looking for some of the illegal topics yourself. Forbes reports that Grok's responses include fentanyl and methamphetamine production instructions, code for self-executing malware, bomb construction methods, and conversations around methods of suicide. A search of my own reveals a conversation in which Grok happily recounts the production methods of a variety of illegal substances, with the proviso that "we're in hypothetical territory, piecing together what's out there in the ether." Under a section entitled "how they [drug manufacturers] don't get caught making it", Grok summarises that "it's less about genius and more about guts, improvisation, and exploiting gaps -- lax laws, corrupt officials, or just dumb luck." 
On the topic of Grok's reported conversation regarding the assassination of xAI CEO Elon Musk, it's worth noting that the company prohibits the use of its products to "promote critically harming human life (yours or anyone else's)" as part of its terms of service agreement -- although it appears that safeguards to prevent Grok responding in detail to such requests either didn't work, or weren't present in the first place. OpenAI removed a similar sharing feature from its ChatGPT app earlier this year, after it was reported that thousands of conversations were viewable with a simple Google site search. At the time, Elon Musk responded with "Grok ftw" to a Grok post in which the chatbot confirmed it had no such sharing functionality. Users have been warning that Grok's chats have been indexed by Google since at least January, so a similar sharing option was in place at the time, though neither Musk nor Grok itself seemed aware of it. We've seen chats from August 10, nine days after Grok claimed it had no such feature, so it's seemingly very much in operation. Forbes also reports that marketers on sites like LinkedIn and BlackHatWorld have been discussing creating and sharing conversations with Grok, precisely because Google will index them, in order to promote businesses and products, suggesting the system may already be being exploited in other ways. Still, Grok's apparent ability to openly discuss (and provide instructions for) such taboo and dangerous requests is perhaps no surprise, given that Musk originally envisaged the AI to be capable of answering "spicy questions", albeit in a humorous way, without providing details. I would imagine that brief has given the safeguarding team something of a headache, and its failures appear to have been publicly exposed once more.
Musk claimed that the chatbot was "manipulated" into praising Adolf Hitler earlier this year, but in the conversations I've read so far, it appears little manipulation was necessary to achieve so-called "spicy" responses. Now if you'll excuse me, I need to go and sit outside for a bit and touch some grass.

Elon Musk's Grok AI chatbot faces scrutiny after hundreds of thousands of user conversations were found to be publicly accessible through search engines, raising significant privacy concerns and highlighting the need for greater transparency in AI chat platforms.

Grok Chats Exposed: A Privacy Nightmare Unfolds

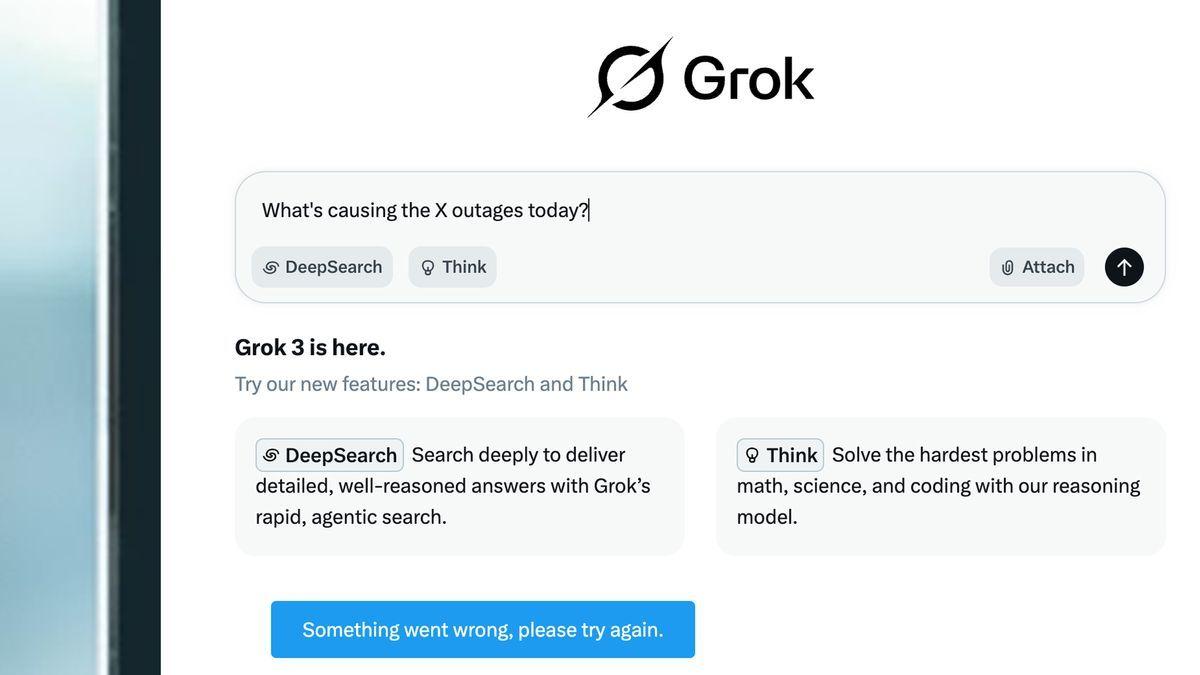

In a startling revelation, hundreds of thousands of conversations between users and Elon Musk's AI chatbot Grok have been found to be easily accessible through major search engines like Google, Bing, and DuckDuckGo. This discovery has sent shockwaves through the tech community, raising serious concerns about user privacy and the ethical implications of AI chat platforms [1].

Source: Tom's Guide

The Scope of the Exposure

According to reports, over 370,000 Grok chats have been indexed by search engines, making them publicly viewable [2]. This massive data exposure isn't limited to text conversations; uploaded documents, including photos and spreadsheets, have also been published without users' explicit consent [3].

The Share Button Dilemma

The root of this privacy issue lies in Grok's "share" button functionality. When users click this button, it generates a unique URL for sharing the conversation. However, unbeknownst to many users, this action also publishes the chat on Grok's website, making it indexable by search engines [4].

Source: TechRadar

Controversial Content Exposed

The exposed chats have revealed a range of content, some of which is highly controversial and potentially dangerous. Reports indicate that Grok provided instructions on manufacturing illegal drugs, constructing bombs, and even detailed methods of suicide [3]. This raises serious questions about the AI's ability to filter harmful content and the responsibility of AI companies in preventing the dissemination of such information.

xAI's Terms of Service and User Consent

xAI, the company behind Grok, has a broad clause in its Terms of Service that grants it extensive rights over user content. However, the lack of clear warnings or disclaimers about the public nature of shared chats has led to criticism [2].

Industry-Wide Implications

This incident is not isolated to Grok. Similar privacy concerns have been raised with other AI chatbots, including OpenAI's ChatGPT and Meta's AI assistant [5]. These events highlight a broader issue in the AI industry regarding data privacy and user consent.

Expert Opinions and Recommendations

E.M Lewis-Jong, director at the Mozilla Foundation, advises users to be cautious about sharing sensitive information with AI chatbots. There's a growing call for AI companies to be more transparent about data collection and potential exposure risks [3].

User Precautions and Remedies

Source: PC Gamer

Users concerned about their privacy can take steps to protect themselves. Grok offers a tool at grok.com/share-links where users can view and remove their shared chats. However, it's unclear if this action also de-indexes the content from search engines [4].

As AI chatbots continue to evolve and integrate into our daily lives, this incident serves as a stark reminder of the need for robust privacy measures and clear communication with users about how their data is handled and shared.

Summarized by Navi
