IBM CEO warns $8 trillion AI data center buildout can't turn a profit at current spending rates

5 Sources


[1]

IBM CEO warns that ongoing trillion-dollar AI data center buildout is unsustainable -- warns there is 'no way' that infrastructure costs can turn a profit

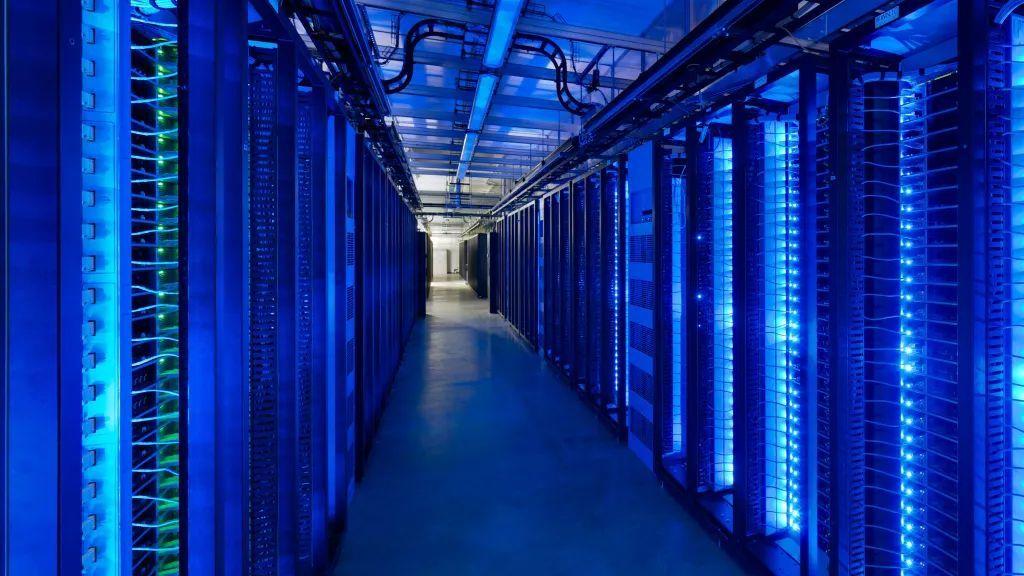

Krishna's cost model challenges the economics behind multi-gigawatt AI campuses.

IBM CEO Arvind Krishna used an appearance on The Verge's Decoder podcast to question whether the capital spending now underway in pursuit of AGI can ever pay for itself. Krishna said today's figures for constructing and populating large AI data centers place the industry on a trajectory where roughly $8 trillion of cumulative commitments would require around $800 billion of annual profit simply to service the cost of capital. The claim was tied directly to assumptions about current hardware, its depreciation, and energy, rather than any solid long-term forecasts, but it comes at a time when we've seen several companies one-upping one another with unprecedented, multi-year infrastructure projects.

Krishna estimated that filling a one-gigawatt AI facility with compute hardware requires around $80 billion. The issue is that deployments of this scale are moving from the drawing board and into practical planning stages, with leading AI companies proposing deployments with tens of gigawatts -- and in some cases, beyond 100 gigawatts -- each. Krishna said that, taken together, public and private announcements point to roughly one hundred gigawatts of currently planned capacity dedicated to AGI-class workloads. At $80 billion per gigawatt, the total reaches $8 trillion.

He tied those figures to the five-year refresh cycles common across accelerator fleets, arguing that the need to replace most of the hardware inside those data centers within that window creates a compounding effect on long-term capex requirements. He also placed the likelihood that current LLM-centric architectures reach AGI at between zero and 1% without new forms of knowledge integration. Krishna pointed to depreciation as the part of the calculation most underappreciated by investors.
AI accelerators are typically written down over five years, and he argued that the pace of architectural change means fleets must be replaced rather than extended. "You've got to use it all in five years because at that point, you've got to throw it away and refill it," he said. Recent financial-market criticism has centred on similar concerns. Investor Michael Burry, for example, has raised questions about whether hyperscalers can continue stretching useful-life assumptions if performance gains and model sizes force accelerated retirement of older GPUs. The IBM chief said that ultimately, he expects generative-AI tools in their current form to drive substantial enterprise productivity, but that his concern is the relationship between the physical scale of next-gen AI infrastructure and the economics required to support it. Companies committing to these huge, multi-gigawatt campuses and compressed refresh schedules must therefore demonstrate returns that match the unprecedented capital expenditure that Krishna outlined.
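Krishna's headline numbers reduce to two multiplications. The Python sketch below reproduces them as a minimal back-of-the-envelope model, assuming a flat $80 billion per gigawatt. The roughly 10% cost-of-capital rate is not a figure Krishna stated; it is only the ratio implied by $800 billion of annual profit on $8 trillion of spending, and the function names are illustrative.

```python
# Minimal sketch of the capex arithmetic quoted in the Decoder interview.
# Assumptions: a flat cost per gigawatt, and a cost-of-capital rate inferred
# from the $800B / $8T ratio (Krishna did not state a rate explicitly).

COST_PER_GW_USD = 80e9            # "about $80 billion to fill up a one-gigawatt data center"
PLANNED_CAPACITY_GW = 100         # roughly 100 GW of announced AGI-oriented capacity
QUOTED_ANNUAL_PROFIT_USD = 800e9  # profit said to be needed "just to pay for the interest"


def total_capex(capacity_gw: float, cost_per_gw: float = COST_PER_GW_USD) -> float:
    """Capital needed to build and fill `capacity_gw` of AI data center capacity."""
    return capacity_gw * cost_per_gw


def annual_capital_charge(capex: float, rate: float) -> float:
    """Yearly profit needed just to pay a return of `rate` on `capex`."""
    return capex * rate


capex = total_capex(PLANNED_CAPACITY_GW)
implied_rate = QUOTED_ANNUAL_PROFIT_USD / capex  # rate implied by the quoted figures

print(f"Total capex: ${capex / 1e12:.1f} trillion")
print(f"Implied cost of capital: {implied_rate:.0%}")
print(f"Annual charge: ${annual_capital_charge(capex, implied_rate) / 1e9:.0f} billion")
```

Swapping in a different rate, for example `annual_capital_charge(capex, 0.06)`, gives about $480 billion, which shows how sensitive the $800 billion figure is to the financing assumption.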

[2]

Multi-gigawatt AI campuses are draining billions annually

High-end GPU hardware must be replaced every five years without extension

IBM chief executive Arvind Krishna questions whether the current pace and scale of AI data center expansion can ever remain financially sustainable under existing assumptions. He estimates that populating a single 1GW site with compute hardware now approaches $80 billion. With public and private plans indicating close to 100GW of future capacity aimed at advanced model training, the implied financial exposure rises toward $8 trillion.

Krishna links this trajectory directly to the refresh cycle that governs today's accelerator fleets. Most of the high-end GPU hardware deployed in these centers depreciates over roughly five years. At the end of that window, operators do not extend the equipment but replace it in full. The result is not a one-time capital hit but a repeating obligation that compounds over time.

CPU resources also remain part of these deployments, but they no longer sit at the center of spending decisions. The balance has shifted toward specialized accelerators that execute massively parallel workloads at a pace unmatched by general-purpose processors. This shift has materially altered the definition of scale for modern AI facilities and pushed capital requirements beyond what traditional enterprise data centers once demanded.

Krishna argues that depreciation is the factor most often misunderstood by market participants. The pace of architectural change means performance jumps arrive faster than financial write-downs can comfortably absorb. Hardware that is still functional becomes economically obsolete long before its physical lifespan ends. Investors such as Michael Burry raise similar doubts about whether cloud giants can keep stretching asset life as model sizes and training demands grow. From a financial perspective, the burden no longer sits with energy consumption or land acquisition, but with the forced churn of increasingly expensive hardware stacks.
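The "repeating obligation" argument can be made concrete with a toy model. The Python sketch below assumes straight-line depreciation with no resale value and a full refill at the same price every five years; both are simplifying assumptions, and treating the entire $8 trillion as replaceable hardware overstates the recurring portion if any of that figure covers buildings or power infrastructure. Under those assumptions the write-down alone comes to $1.6 trillion a year, and a ten-year horizon requires two full fills.

```python
# Minimal sketch of a fixed five-year refresh cycle.
# Assumptions (not from the source): straight-line depreciation, zero salvage
# value, and every fleet refilled in full at the same cost at end of life.

def annual_depreciation(capex: float, useful_life_years: int = 5) -> float:
    """Straight-line write-down per year, with no residual value."""
    return capex / useful_life_years


def cumulative_hardware_spend(capex: float, horizon_years: int,
                              useful_life_years: int = 5) -> float:
    """Total spend over `horizon_years` if each fleet is replaced when it expires."""
    # One fill per started cycle: ceiling of horizon / useful life.
    fills = -(-horizon_years // useful_life_years)
    return fills * capex


capex = 8e12  # $8 trillion: 100 GW x $80B per GW
print(f"Yearly write-down: ${annual_depreciation(capex) / 1e12:.1f} trillion")
for horizon in (5, 10, 15):
    spend = cumulative_hardware_spend(capex, horizon)
    print(f"Spend over {horizon} years: ${spend / 1e12:.0f} trillion")
```

Lengthening `useful_life_years` is the lever Burry's critique targets: stretching the assumed life lowers the yearly write-down on paper, but does nothing for the refill cost if the hardware is in fact retired on schedule.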
In workstation-class environments, similar refresh dynamics already exist, but the scale is fundamentally different inside hyperscale sites. Krishna calculates that servicing the cost of capital for these multi-gigawatt campuses would require hundreds of billions of dollars in annual profit just to remain neutral. That requirement rests on present hardware economics rather than speculative long-term efficiency gains.

These projections arrive as leading technology firms announce ever larger AI campuses measured not in megawatts but in tens of gigawatts. Some of these proposals already rival the electricity demand of entire nations, raising parallel concerns around grid capacity and long-term energy pricing.

Krishna estimates near-zero odds that today's LLMs reach general intelligence on the next hardware generation without a fundamental change in knowledge integration. That assessment frames the investment wave as driven more by competitive pressure than by validated technological inevitability. The interpretation is difficult to avoid. The buildout assumes future revenues will scale to match unprecedented spending. This is happening even as depreciation cycles shorten and power limits tighten across multiple regions. The risk is that financial expectations may be racing ahead of the economic mechanisms required to sustain them over the full lifecycle of these assets.

Via Tom's Hardware

[3]

IBM CEO Says the Math Just Doesn't Add Up on Its Competitors' AI Spending

"It's my view that there's no way you're going to get a return on that."

AI companies are continuing to pour ungodly amounts of money into building out data centers, in an enormous bet that both analysts and tech leaders warn may not pay off for many years to come -- if it ever does. OpenAI most recently committed to spending well over a trillion dollars before the end of the decade as it continues to burn oodles of cash each quarter, enormous losses that are having investors asking some hard questions.

Put simply, as IBM CEO Arvind Krishna told The Verge's editor-in-chief Nilay Patel during a recent episode of the "Decoder" podcast, the math isn't adding up. When asked whether he thinks "there's an enterprise [return on investment] that would justify the spend" on trying to achieve artificial general intelligence (AGI) -- OpenAI's ill-defined priority number one -- Krishna laid out some back-of-the-envelope math. "It takes about $80 billion to fill up a one-gigawatt data center," he said. "That's today's number. If one company is going to commit 20-30 gigawatts, that's $1.5 trillion of [capital expenditure]." Considering that the "total commits" of "chasing AGI" amount to 100 gigawatts, he reasoned, that's "$8 trillion of [capital expenditure]."

"It's my view that there's no way you're going to get a return on that because $8 trillion of [capital expenditure] means you need roughly $800 billion of profit just to pay for the interest," he concluded.

It's a striking display of skepticism, highlighting a growing unease among executives that the enormous AI spending spree may not be sustainable, let alone rational, in the long run. AI companies' valuations have soared to unprecedented levels, despite what the Wall Street Journal recently described as the lack of a "clear financial model for profitable AI."
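Krishna's per-company example can be checked the same way. This Python sketch multiplies the quoted 20-30 GW range by $80 billion per gigawatt and is illustrative only; the helper name is hypothetical. The straight product is $1.6 to $2.4 trillion, and $1.5 trillion corresponds to roughly 19 GW at that unit cost, so the spoken figure appears to be a rounded estimate sitting slightly below the low end of the range.

```python
# Minimal sketch of the per-company arithmetic from the interview.
# Only the $80B/GW and 20-30 GW inputs come from the source; the helper
# name is hypothetical.

COST_PER_GW_USD = 80e9
QUOTED_COMPANY_CAPEX_USD = 1.5e12  # Krishna's figure for a 20-30 GW commitment


def capex_range(low_gw: float, high_gw: float,
                cost_per_gw: float = COST_PER_GW_USD) -> tuple[float, float]:
    """Capital range for a commitment of between `low_gw` and `high_gw`."""
    return low_gw * cost_per_gw, high_gw * cost_per_gw


low, high = capex_range(20, 30)
print(f"20-30 GW at $80B/GW: ${low / 1e12:.1f}-{high / 1e12:.1f} trillion")

# Capacity that the quoted $1.5T would cover at the same unit cost
covered_gw = QUOTED_COMPANY_CAPEX_USD / COST_PER_GW_USD
print(f"$1.5 trillion covers about {covered_gw:.1f} GW")
```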
According to a recent analysis by investment bank HSBC, OpenAI won't be making any profit for at least another four years, and will need to keep burning over $200 billion to keep up with its growth plans, money it would have to find through additional debt, equity -- or new avenues of generating revenue, which is much easier said than done.

Interestingly, IBM is using the topic of an AI bubble as a litmus test for new hires. As Fortune reported this week, IBM executives are asking candidates whether they believe we're in an AI bubble, a question that purportedly has no right or wrong answers. "I strongly believe we aren't, so let's see what everyone else has to say," IBM's managing partner for Europe, the Middle East and Africa, told Fortune.

Besides some murky math, experts have long questioned OpenAI CEO Sam Altman on his push to realize AGI, a term that remains nebulous at best, with OpenAI repeatedly being accused of shifting the goalposts to give the impression of progress. Krishna described Altman's drive to achieve AGI as chasing a "belief." "Nilay, I will be clear," Krishna told Patel. "I am not convinced, or rather I give it really low odds -- we're talking like 0 to 1 percent -- that the current set of known technologies gets us to AGI." Nonetheless, he believes that generative AI will be "incredibly useful for enterprise" and "unlock trillions of dollars of productivity." However, getting to AGI will necessitate technological breakthroughs that will take us beyond large language models, Krishna argued. "If we can figure out a way to fuse knowledge with LLMs," we stand a chance of reaching AGI, he said. "Even then, I'm a maybe."

[4]

IBM CEO warns there's 'no way' hyperscalers like Google and Amazon will be able to turn a profit at the rate of their data center spending | Fortune

While giant tech companies like Google and Amazon tout the billions they're pouring into AI infrastructure, IBM's CEO doubts their bets will pay off like they think.

Arvind Krishna, who has been at the helm of the legacy tech company since 2020, said even a simple calculation reveals there is "no way" tech companies' massive data center investments make sense. This is in part because data centers require huge amounts of energy and investment, Krishna said on the Decoder podcast. Goldman Sachs estimated earlier this year that the total power usage by the global data center market stood at around 55 gigawatts, of which only a fraction (14%) is dedicated to AI. As demand for AI grows, the power required by the data center market could jump to 84 gigawatts by 2027, according to Goldman Sachs.

Yet, building out a data center that uses merely one gigawatt costs a fortune -- an estimated $80 billion in today's dollars, according to Krishna. If a single company commits to building out 20 to 30 gigawatts then that would amount to $1.5 trillion in capital expenditures, Krishna said. That's an investment about equal to Tesla's current market cap. All the hyperscalers together could potentially add about 100 gigawatts, he estimated, but that still means $8 trillion of investment -- and the profit needed to balance out that investment is immense. "It's my view that there's no way you're going to get a return on that because $8 trillion of CapEx means you need roughly $800 billion of profit just to pay for the interest," he said.

Moreover, thanks to technology's rapid advance, the chips powering your data center could quickly become obsolete. "You've got to use it all in five years because at that point, you've got to throw it away and refill it," he said. Krishna added that part of the motivation behind this flurry of investment is large tech companies' race to be the first to crack AGI, or an AI that can match or surpass a human's intelligence.
Yet, Krishna says there's at most a 1% chance this feat can be accomplished with our current technology, despite the steady improvement of large language models. "I think it's incredibly useful for enterprise. I think it's going to unlock trillions of dollars of productivity in the enterprise, just to be absolutely clear," he said. "That said, I think AGI will require more technologies than the current LLM path."

Meanwhile, hyperscalers are plowing ahead with investments in AI infrastructure that are estimated to reach $380 billion this year alone. In its third-quarter earnings report, Google parent Alphabet raised its 2025 capital spending outlook to between $91 billion and $93 billion from a previous estimate of $85 billion. Its CFO also said on the company's quarterly earnings call to expect a "significant increase" in capex spending next year thanks in part to increased infrastructure investment. Amazon in the third quarter also raised its capital expenditure estimate to $125 billion from a prior estimate of $118 billion.
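The Goldman Sachs power figures cited by Fortune are easier to set beside Krishna's gigawatt-based math once they are expressed in gigawatts. The short Python sketch below does that conversion; the derived numbers (about 7.7 GW for AI today and roughly 53% growth in total usage by 2027) are simple arithmetic on the quoted inputs, not figures Goldman Sachs published.

```python
# Minimal sketch converting the Goldman Sachs figures quoted by Fortune.
# Inputs (55 GW total, 14% AI, 84 GW by 2027) come from the article; the
# derived values are plain arithmetic, not numbers published by Goldman Sachs.

TOTAL_DC_POWER_GW = 55.0    # current global data center power usage
AI_SHARE = 0.14             # fraction of that usage dedicated to AI
PROJECTED_2027_GW = 84.0    # projected total power usage by 2027

ai_power_gw = TOTAL_DC_POWER_GW * AI_SHARE
growth_to_2027 = PROJECTED_2027_GW / TOTAL_DC_POWER_GW - 1

print(f"AI workloads today: about {ai_power_gw:.1f} GW")
print(f"Growth in total usage by 2027: about {growth_to_2027:.0%}")
```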

[5]

The $8 Trillion AI Mirage: IBM Says The Math Just Doesn't Work - IBM (NYSE:IBM)

Everyone on Wall Street is busy celebrating the AI supercycle -- until you try the math. This week, IBM (NYSE:IBM) CEO Arvind Krishna dropped a number so large it could stop the AI party cold.

At today's costs, he told Decoder, it takes roughly $80 billion to build and fully equip a 1-gigawatt AI data center. And with nearly 100 gigawatts of hyperscale capacity already announced across the industry, that implies around $8 trillion in capital spending. His conclusion was blunt: "There is no way you're going to get a return on that." He argued that companies would need about $800 billion in profit just to service interest on that scale of investment.

AI Data Center Economics Look Broken

That warning lands right as Big Tech is flexing spending like price doesn't matter. Amazon.com Inc (NASDAQ:AMZN), Microsoft Corp (NASDAQ:MSFT), Alphabet Inc (NASDAQ:GOOG) and Meta Platforms Inc (NASDAQ:META) are pouring tens of billions into compute, GPUs, land, power and cooling in what increasingly looks like an existential race to prove dominance in AI -- not necessarily a profitable one. Nvidia Corp's (NASDAQ:NVDA) revenue projections assume that every hyperscaler keeps building non-stop; the market caps of chipmakers and equipment suppliers depend on that narrative holding. What Krishna is suggesting is a far darker possibility: the economics simply don't support the ambition.

Hyperscaler Capex Is A Financial Time Bomb

If capex continues to balloon while monetization remains vague, someone is going to hit the brakes. Enterprises haven't proven that generative AI can deliver ROI at scale, inference costs are exploding, and power shortages are already delaying deployments in multiple markets. You don't spend $8 trillion because you want to; you spend it because you're terrified of losing the race.

Who Blinks First In The AI Build-Out Arms Race?

Right now, investor psychology is driven by FOMO, not fundamentals. The first hyperscaler to slow spending could trigger a wider rethink about AI infrastructure profitability -- and expose how much of this build-out is narrative rather than economics. But at some point, CFOs will start asking simple questions with ugly answers: How fast can AI revenue scale? Who pays for inference? What if enterprise adoption is slower than promised? What if power constraints halt deployment?

If Krishna is right, the AI supercycle ends not with a crash in demand, but with a financial choke point -- where the first company to pause spending triggers a broader reassessment of what all this infrastructure is really worth. The AI revolution may be real. But IBM's math suggests the capital model may not be. And the market hasn't priced in the risk that the AI gold rush hits a wall long before returns arrive.


IBM CEO Arvind Krishna questions whether the trillion-dollar AI infrastructure boom makes financial sense. He estimates that 100 gigawatts of planned AI data center capacity would cost $8 trillion and require $800 billion in annual profit just to service debt. With five-year hardware refresh cycles and accelerating depreciation, Krishna warns hyperscalers face unsustainable economics chasing AGI.

IBM CEO Challenges Economics Behind Massive AI Infrastructure Push

Arvind Krishna, CEO of IBM, has issued a stark warning about the financial viability of the ongoing trillion-dollar AI data center buildout. Speaking on The Verge's Decoder podcast, Krishna argued that current capital expenditures in pursuit of artificial general intelligence may never generate sufficient returns to justify the investment [1]. His analysis centers on a simple but troubling calculation: filling a single one-gigawatt AI data center with compute hardware now costs approximately $80 billion [2]. With public and private announcements indicating roughly 100 gigawatts of planned capacity dedicated to AGI-class workloads, the total financial exposure approaches $8 trillion [3]. Krishna's assessment comes as hyperscalers like Google, Amazon, and Microsoft continue announcing unprecedented infrastructure investments, with combined capital spending projected to reach $380 billion in 2025 alone [4].

Source: Fortune

Why Five-Year Depreciation Cycles Make AI Spending Financially Unsustainable

The IBM chief pointed to depreciation as the factor most underappreciated by investors in evaluating AI infrastructure build-out economics. AI accelerators and GPU hardware typically depreciate over five years, but the rapid pace of architectural change means fleets must be replaced entirely rather than extended [1]. "You've got to use it all in five years because at that point, you've got to throw it away and refill it," Krishna explained [1]. This creates a compounding effect on long-term capital expenditures, transforming what appears to be a one-time investment into a repeating financial obligation. Hardware that remains physically functional becomes economically obsolete as performance jumps arrive faster than financial write-downs can absorb [2]. Investor Michael Burry has raised similar concerns about whether hyperscalers can continue stretching useful-life assumptions if model sizes and training demands force accelerated retirement of older equipment [1].

Multi-Gigawatt AI Campuses Demand Unrealistic Profits

Krishna calculated that servicing the cost of capital for $8 trillion in infrastructure investment would require approximately $800 billion in annual profit just to remain financially neutral [3]. "It's my view that there's no way you're going to get a return on that," he stated bluntly [4]. The burden no longer sits primarily with energy consumption or land acquisition, but with the forced churn of increasingly expensive hardware stacks [2]. If a single company commits to building 20-30 gigawatts of capacity, that alone represents $1.5 trillion in capital spending, roughly equivalent to Tesla's current market capitalization [4]. These projections arrive as leading technology firms announce ever-larger facilities measured not in megawatts but in tens of gigawatts, with some proposals rivaling the electricity demand of entire nations [2].

Source: Benzinga


AGI Pursuit Drives Competitive Pressure Over Validated Returns

Krishna estimates the likelihood that current LLM-centric architectures reach AGI at between zero and 1% without fundamental breakthroughs in knowledge integration [1]. He described the drive to achieve artificial general intelligence as chasing a "belief" rather than a validated technological path [3]. "If we can figure out a way to fuse knowledge with LLMs," Krishna suggested, companies might stand a chance of reaching AGI, though he remains skeptical even then [3]. The IBM executive believes generative AI will prove "incredibly useful for enterprise" and "unlock trillions of dollars of productivity," but argues the relationship between physical scale of next-gen infrastructure and the economics required to support it remains deeply problematic [1]. OpenAI alone has committed to spending well over a trillion dollars before the end of the decade while burning significant cash each quarter, prompting hard questions from investors about return on investment [3].

Market Implications and the Risk of Financial Reassessment

The warning lands as Big Tech companies continue flexing AI spending with little apparent concern for near-term profitability. In its third quarter, Alphabet raised its 2025 capital spending outlook to between $91 billion and $93 billion, while Amazon increased its capital expenditure estimate to $125 billion [4]. According to HSBC analysis, OpenAI won't generate profit for at least another four years and will need to burn through over $200 billion to sustain growth plans [3]. The Wall Street Journal recently pointed to the lack of a "clear financial model for profitable AI" despite soaring valuations [3]. Market observers suggest the first hyperscaler to slow spending could trigger a broader reassessment of AI infrastructure profitability, exposing how much of the buildout reflects competitive fear rather than sound economics [5]. Interestingly, IBM now uses the question of an AI bubble as a litmus test for new hires, asking candidates whether they believe the industry is in one [3]. Krishna's assessment suggests the AI revolution may be real, but the capital model supporting it could hit a wall long before anticipated returns materialize.

Summarized by

Navi


Related Stories

AMD CEO Lisa Su dismisses AI bubble fears as Goldman Sachs warns of datacenter investment risks

05 Dec 2025•Business and Economy

AI Industry Faces $800 Billion Revenue Shortfall by 2030, Bain Report Warns

23 Sept 2025•Business and Economy

Nvidia Reaches Historic $5 Trillion Valuation Amid Growing AI Investment Concerns

29 Oct 2025•Business and Economy
