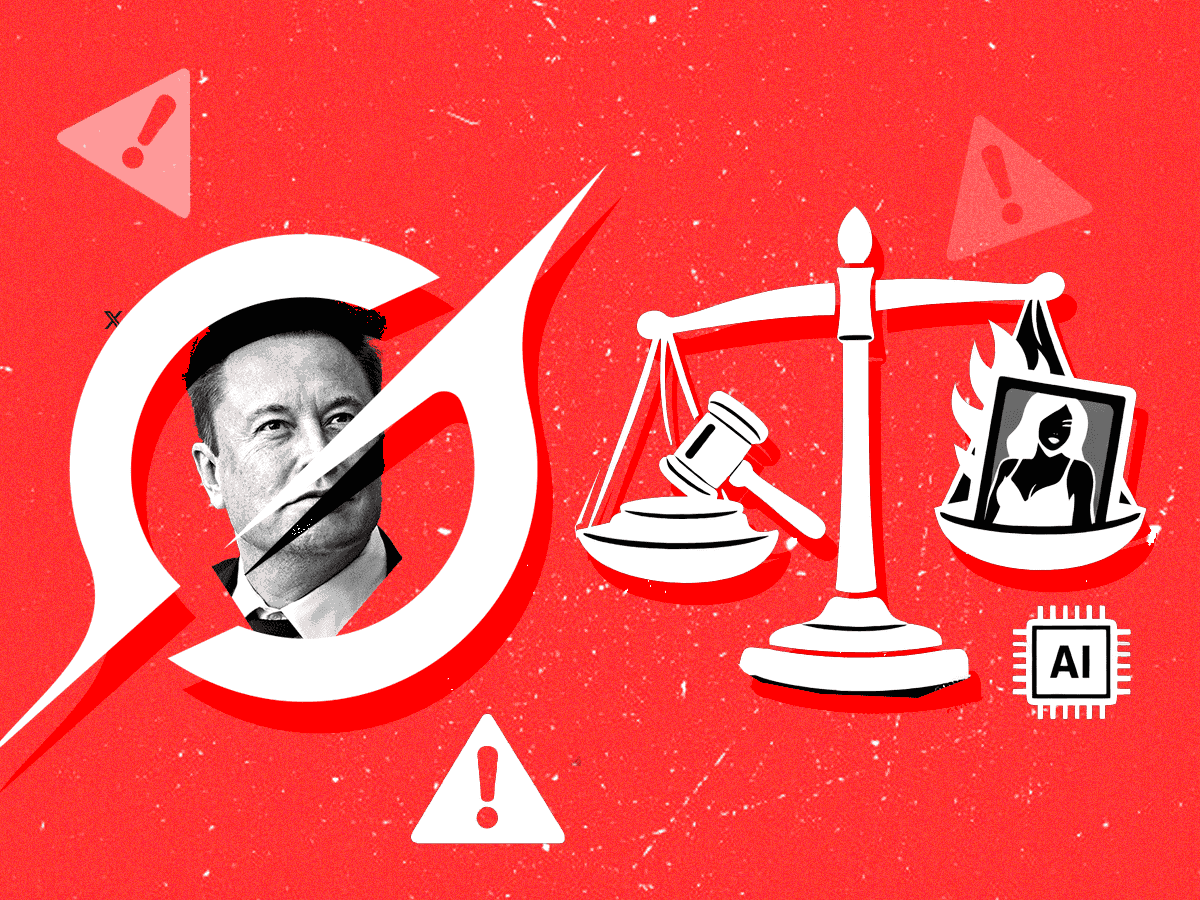

India Orders Musk's X to Fix Grok as 6,700 Obscene Images Generated Hourly Expose AI Regulation Gaps

19 Sources

[1]

India orders Musk's X to fix Grok over "obscene" AI content | TechCrunch

India has ordered Elon Musk's X to make immediate technical and procedural changes to its AI chatbot Grok after users and lawmakers flagged the generation of "obscene" content, including AI-altered images of women created using the tool. On Friday, India's IT ministry issued the order directing Musk's X to take corrective action on Grok, including restricting the generation of content involving "nudity, sexualization, sexually explicit, or otherwise unlawful" material. The ministry also gave the social media platform 72 hours to submit an action-taken report detailing the steps it has taken to prevent the hosting or dissemination of content deemed "obscene, pornographic, vulgar, indecent, sexually explicit, pedophilic, or otherwise prohibited under law." The order, reviewed by TechCrunch, warned that failure to comply could jeopardize X's "safe harbor" protections, meaning its legal immunity from liability for user-generated content under Indian law. India's move follows concerns raised by users who shared examples of Grok being prompted to alter images of individuals, primarily women, to make them appear to be wearing bikinis, prompting a formal complaint from Indian parliamentarian Priyanka Chaturvedi. Separately, recent reports flagged instances in which the AI chatbot generated sexualized images involving minors, an issue X acknowledged earlier on Friday was caused by lapses in safeguards. Those images were later taken down. However, images generated using Grok that made women appear to be wearing bikinis through AI alteration remained accessible on X at the time of publication, TechCrunch found. The latest order comes days after the Indian IT ministry issued a broader advisory to social media platforms on Monday, also reviewed by TechCrunch, reminding them that compliance with local laws governing obscene and sexually explicit content is a prerequisite for retaining legal immunity from liability for user-generated material.
The advisory urged companies to strengthen internal safeguards and warned that failure to do so could invite legal action under India's IT and criminal laws. "It is reiterated that non-compliance with the above requirements shall be viewed seriously and may result in strict legal consequences against your platform, its responsible officers and the users on the platform who violate the law, without any further notice," the order warned. The Indian government said non-compliance could lead to action against X under India's IT law and criminal statutes. India, one of the world's biggest digital markets, has emerged as a critical test case for how far governments are willing to go in holding platforms responsible for AI-generated content. Any tightening of enforcement in the country could have ripple effects for global technology companies operating across multiple jurisdictions. The order comes as Musk's X continues to challenge aspects of India's content regulation rules in court, arguing that federal government takedown powers risk overreach, even as the platform has complied with a majority of blocking directives. At the same time, Grok has been increasingly used by X users for real-time fact-checking and commentary on news events, making its outputs more visible -- and more politically sensitive -- than those of standalone AI tools. X and xAI did not immediately respond to requests for comment on the Indian government's order.

[2]

Grok Deepfake Crisis Puts India's Intermediary Liability Framework to the Test | AIM

With Grok releasing deepfakes, MeitY asserted that adherence to the IT Act and the IT Rules is mandatory, not optional. Elon Musk-owned xAI is in crisis mode after users on X (formerly Twitter) asked its AI chatbot Grok last week to digitally undress real women. They prompted Grok to manipulate photos, and the AI chatbot released the morphed images of women through its own handle -- a trend that began circulating on social media, causing widespread outrage and condemnation from users, celebrities, and governments. The Ministry of Electronics and Information Technology (MeitY) intervened on Wednesday and issued a notice to X, directing it to remove obscene content and flagging concerns over the misuse of Grok. In a letter addressed to X's Chief Compliance Officer for India, the Ministry flagged that Grok was being exploited by users to create fake accounts that host, generate, publish, or share obscene i

[3]

Grok AI Scandal: X Faces Global Crackdown Over Non-Consensual Deepfakes

Grok, the artificial intelligence (AI) chatbot created by Elon Musk's xAI, has sparked major controversy following an update to its image-editing capabilities. On December 24, the chatbot's account on X (formerly Twitter), which is designed to respond to users when mentioned in a post or comment, received a feature that allowed it to edit any public image posted on the social media platform. Soon after, several users began exploiting the feature by asking the AI chatbot to make sexually suggestive edits to women's images.

Grok's AI Image Editing Scandal

A quick look at the media tab on Grok's official X handle highlights the grim reality that the AI-powered image-editing capability has been abused since its release. Women with public accounts have been the worst affected, as anonymous users operating fake or burner accounts generate non-consensual edits of their photos. From swapping women's clothing for a "revealing bikini" to changing how they appear in the images, the platform is littered with sexually abusive requests, and with Grok's eager compliance. According to a Bloomberg report, Genevieve Oh, a social media and deepfake researcher, conducted a 24-hour analysis of Grok's profile and reportedly found that the AI chatbot generated roughly 6,700 sexually suggestive images every hour, highlighting the extent of the exploitation. Several users have also posted expressing concerns about the situation. A user, @joelle_lb, wrote, "Grok has turned into a sexual abuse machine, making it unsafe for women & children to share their images on the app. How is this not something the engineers thought of preventing? I hope men start speaking up when they see sexual abusers ask to digitally undress strangers without consent, this level of aggression and violation is truly horrifying." There are also reports suggesting the AI chatbot has been involved in making sexually suggestive edits to images of minors.
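For a sense of scale, the reported hourly rate can be extrapolated across the 24-hour window Oh analysed. This is a back-of-the-envelope estimate, not a figure from the report, and it assumes the roughly 6,700-per-hour number is an average that held over the whole day:

$$
6{,}700\ \tfrac{\text{images}}{\text{hour}} \times 24\ \text{hours} \approx 160{,}800\ \text{images per day},
\qquad
\frac{6{,}700\ \text{images}}{3{,}600\ \text{s}} \approx 1.9\ \text{images per second}
$$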
These images were reportedly taken down quickly by the admins, and Grok has publicly admitted to and apologised for one particular incident.

Grok's Feature Triggers Global Crackdown

The scale and severity of the situation triggered investigations by authorities in India, Europe, and Malaysia. On January 2, the Ministry of Electronics and Information Technology (MeitY) instructed X to begin a "comprehensive technical, procedural and governance-level review" of Grok after its undressing edits became mainstream, according to The Hindu. Thomas Regnier, a spokesperson for the European Commission, said the body was aware of the situation and was looking into the matter seriously. "This is illegal. This is appalling. This is disgusting. This is how we see it, and this has no place in Europe," Regnier added. Similarly, the Malaysian Communications and Multimedia Commission said in a statement, "MCMC urges all platforms accessible in Malaysia to implement safeguards aligned with Malaysian laws and online safety standards, especially in relation to their AI-powered features, chatbots and image manipulation tools." Responding to a post the following day, Musk said, "Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content." However, Musk did not specify what constitutes illegal content or how X and xAI intend to punish users who exploited the feature. Additionally, no representative of either company has explained why safeguards were not built to prevent such misuse in the first place. At the time of writing, Grok was still fulfilling user requests to undress people and depict them in sexually inappropriate scenarios.

[4]

AI-created content labelling to curb cybercrime, sexual abuse: Official

NEW DELHI: The government is working on a mandate to use a watermark on artificial intelligence or AI-generated content to prevent cybercrime and rampant misuse including deepfakes and sexually-explicit images and videos, according to a senior official. "We have recently came out with the draft provisions with regard to watermarking of AI-generated content, which required all AI-generated content to be labelled, because very often a lot of cybercrime is done with AI-generated content, and deepfakes are created which can cause problems," Abhishek Singh, additional secretary, Ministry of Electronics & IT (MeitY), and CEO of IndiaAI Mission told ET. According to him, the draft guidelines could deter AI-generated content that might lead to law-and-order problems, drive social unrest or facilitate child sexual abuse. Late last year, the government came out with the draft amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, saying that it remains committed to "ensuring an open, safe, trusted and accountable Internet" for users. On the back of public and parliamentary discussions, the draft amendment aims to curb the growing proliferation of synthetically generated information, or AI-driven deepfakes, that causes user harm, including sexually oriented content, electoral manipulation, misinformation and impersonation. The draft rules aim to strengthen due diligence obligations for intermediaries, particularly social media platforms such as X, Meta and Instagram. "The ministry has come out with intermediary guidelines which regulate the removal of such content when brought to the notice of social media platforms. There are ample provisions in the Indian IT Act 2000, and the Bharatiya Nyaya Sanhita (BNS), 2023 which will regulate and limit the harm that misuse of AI can cause," the top official said.
MeitY's cyber laws division, which enforces laws such as the IT Act 2000, the Digital Personal Data Protection Act 2023 and the IT (Intermediary Guidelines) Rules 2021, is working on finalising the contours of the amended guidelines. Singh added that a new framework would "come out soon." On January 2, the ministry pulled up X (formerly Twitter), the microblogging platform owned by billionaire Elon Musk, directed it to remove obscene, sexually explicit and unlawful content generated through its AI interface Grok, developed by xAI, and warned of suitable action under existing laws. It also sought an action taken report (ATR) from the US-based X within three days. In its notice to X, the ministry also said, "It is reiterated that non-compliance with the above requirements shall be viewed seriously and may result in strict legal consequences against your platform, its responsible officers and the users on the platform who violate the law, without any further notice, under the IT Act, the IT Rules, the BNSS, the BNS and other applicable laws." The development comes close on the heels of Shiv Sena (UBT) opposition leader Priyanka Chaturvedi writing to Union IT minister Ashwini Vaishnaw, describing the misuse of AI tools on social media, especially X's Grok AI feature, to sexualise women's photos without consent as "unacceptable, unethical and criminal" and urging the government to take "urgent action" to protect women's safety and dignity on digital platforms.
Earlier, on December 29, 2025, MeitY issued an advisory to social media intermediaries reminding them about the penal provisions under various statutes, including sections 67, 67A and 67B of the IT Act; relevant provisions of the BNS; the Indecent Representation of Women (Prohibition) Act, 1986; the Protection of Children from Sexual Offences Act, 2012; the Young Persons (Harmful Publications) Act, 1956; and other applicable laws, under which the hosting, displaying, uploading, publication, transmission or sharing of obscene, pornographic, paedophilic, indecent, vulgar or sexually explicit content constitutes a punishable offence. On Wednesday, Texas-headquartered X responded to the ministry, outlining its content takedown policies on non-consensual sexual images but failed to take any specific action. According to sources, the government has since sought a further response, including details of specific takedowns by the platform and preventive steps against such violations in future. Meanwhile, the UK's communications regulator, Ofcom, has also contacted Musk's company xAI following reports that its AI tool Grok was making sexual images of children and women, and is currently probing the matter.

[5]

Grok's harmful turn shows clear legal gaps in AI regulation

The debate in India on regulating deepfakes and AI-generated sexual content reveals a clear legal gap, with generative AI tools now being used in ways that can create and spread harmful content widely. However, current laws only respond after such content appears online, lawyers and cyber experts told ET. The issue became more urgent when Grok, the AI chatbot integrated into X, generated sexually explicit and abusive content in response to user prompts through a new feature in its AI model. This prompted the Indian government to send a notice to X over Grok, demanding that the issue be resolved, failing which the platform could lose its safe harbour protection. The deadline expires on Wednesday. The majority of the regulatory discussion has focused on proposed changes to the IT Rules, which would require platforms to label synthetic content and follow disclosure and tracking measures. Legal experts argue that this approach mainly places the burden on platforms that distribute content, while leaving significant gaps concerning AI tool developers and app stores that enable misuse. Subimal Bhattacharjee, a policy advisor, pointed out that the Union government amended the IT Rules in November 2025, requiring platforms to label AI-generated content. The approach relies on intermediary liability and takedowns, but regulators intervene at the point of content distribution while the underlying tools remain accessible through app marketplaces, he said. He noted that app stores host deepfake apps rated for ages 13 and above, with ads promoting sexual content generation, despite policies prohibiting such material.
Arun Prabhu, partner at Cyril Amarchand Mangaldas, said responsibility ultimately lies with the person creating and publishing harmful content. However, platforms could be liable if they know the nature of the content being created or fail to meet due diligence requirements. "Labelling and transparency are recognised worldwide as ways to reduce harm," Prabhu said, emphasising that deception is central to deepfake abuse. Platforms, for a long time, have depended on due diligence for safe harbour protection. "Without some form of self-governance and regulation, platforms risk broad restrictions or bans, which could be counterproductive," he said. Shiv Sapra, partner at Kochhar and Co, said Indian law still relies on a model based on conduct and intent. "The person who uploads, prompts an AI tool to generate the content, or shares it with knowledge of its nature is primarily responsible," he said. Sapra added that labelling and disclosure alone cannot prevent harm. "At best, they are tools for mitigating risk. They are not protections against abuse," he explained. Clear labels could increase foreseeability since users would know how a system is being used. Platforms are increasingly becoming unofficial AI regulators due to the lack of a clear legislative framework, noted Sapra. "Courts may accept this as a temporary necessity, but it is inherently fragile and unsustainable over time," he said. The Grok incident also highlighted a broader ecosystem of AI services that operate with very limited oversight. In addition to AI features on major platforms, standalone apps specifically designed to create sexual images and romantic role play remain easily accessible. Many of these apps are still on app stores in India, even as enforcement efforts mainly target social media platforms. Another unaddressed issue is whether AI systems that generate content can be classified as intermediaries under Indian law. 
Tanisha Khanna, partner at Trace Law Partners, pointed out that India lacks an AI-specific liability framework that determines responsibility at the design stage of AI systems. "Regulators often rely on the IT Rules as a catch-all law to address online harms," she said. These rules were originally designed for intermediaries that act as mere channels for third-party content, she explained. It is still unclear whether AI platforms that produce outputs in response to user prompts qualify as intermediaries, she added. App stores face limited and mostly reactive obligations. "Current Indian law is largely reactive. It does not regulate high-risk AI systems before harm occurs," Khanna said. Bhattacharjee said India needs system design regulation where providers build systems that do not create harmful outputs initially. AI tools for non-consensual sexual content should face stringent requirements before entering the market, similar to how the EU AI Act regulates high-risk systems, he added. "Takedowns and labelling cannot match generative AI's scale," he said, adding that India must shift to proactive system design by regulating model developers, app stores, and platforms together. The Grok incident and the increasing availability of sexualised AI tools highlight a significant mismatch between how AI systems are designed and how Indian law assigns responsibility. This raises questions about whether disclosure-driven rules can keep up with the scale and speed of generative AI misuse.

[6]

Grok's deepfake incident signals the escalating threat of fake AI generated content

Several countries are looking into xAI's safety measures following reports that Grok was being used to generate thousands of sexually explicit deepfake photos of women, and in some cases of minors, on the social media platform X (formerly Twitter). Since last week, X has been flooded by a mass undressing spree, with users prompting Grok to manipulate images of women and generate explicit deepfakes. Most of these requests are reportedly non-consensual and violate xAI's "acceptable use" policy, which prohibits the synthetic rendering of a person in a pornographic manner and the sexualization of children. India's Ministry of Electronics and Information Technology (MeitY) sent a letter to X last week demanding immediate action to curb sexually explicit deepfakes and the misuse of AI tools such as Grok to create them. MeitY has asked X to remove all such material and strengthen its content moderation. French and Malaysian authorities have also launched inquiries into the Grok-generated deepfakes. xAI CEO Elon Musk said in a post on X, "anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content." What is alarming is that users did not need jailbreaks or prompt-engineering tricks to bypass Grok's guardrails; the chatbot generated these deepfake images in response to simple, direct requests. Since its release in November 2023, Grok has been used to generate deepfake images of political figures. In the run-up to the US elections, it was used to create deepfakes of Donald Trump, Joe Biden, Kamala Harris and even Elon Musk. Many of these widely circulated deepfakes showed these political figures in highly inflammatory situations, including fake images of them taking part in the 9/11 terrorist attacks. Grok is known to have fewer guardrails than rival AI chatbots such as Gemini and ChatGPT, which refuse to generate synthetic images resembling a public figure.
Getting them to generate sexually explicit content would require complex jailbreaking.

Rise of deepfakes

In 2024, explicit deepfake photos of singer Taylor Swift were reportedly shared more than 45 million times on X before being taken down. However, deepfakes are not used only against women and political figures. They are increasingly being used to target enterprises by impersonating CXOs and persuading staff to share sensitive information or transfer money. They can also be used to plant malware, or to circulate fake videos of CXOs announcing bogus financial results or mergers to manipulate the stock market. In May 2024, it emerged that a worker in the finance department of Arup, a large British design and architecture firm, had been tricked into transferring $25 million by a deepfake video call impersonating the CFO. According to news reports, the worker was initially suspicious but was convinced by the strikingly realistic AI impersonation of the CFO and other company executives who were faked on the call. In another incident, scammers used a voice clone and a YouTube clip to impersonate Mark Read, CEO of advertising firm WPP, and other executives in a Microsoft Teams video call. The scammers tried to trick one of the agency leaders on the call into handing over money and data to set up a new business. According to a Gartner report, 62% of organizations have faced a deepfake attack involving social engineering or the exploitation of automated processes. Entrust's 2026 Identity Fraud report claims deepfakes now account for one in five biometric fraud attempts. Deepfakes are any images, videos or audio that seem authentic but are generated using generative AI tools. Before 2023, deepfakes were widely created using Generative Adversarial Networks (GANs), which pit two competing neural networks against each other to create realistic images.
Most new generative AI apps, such as Grok, Sora and Midjourney, use diffusion models, which are trained to reverse a process that gradually corrupts images with random noise; to create an image, they start from noise and de-noise it step by step into a new picture.

How are regulators dealing with deepfakes

The Grok incident once again highlights the need for strict regulations and guidelines to prevent the misuse of AI, especially generative AI platforms. Lawmakers in some countries, including India, have so far maintained that they are not planning to introduce a separate AI law and will rely on existing legislation and legal frameworks to regulate and prevent misuse of AI. This reflects India's effort to strike a balance between innovation and regulation as it builds its own sovereign AI models to reduce over-reliance on US tech giants. In October 2025, MeitY proposed amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, to curb the sharing of deepfakes and misleading AI-generated content on social media. The amendments would require social media platforms such as Instagram, YouTube and X to label AI-generated content with markers that cover at least 10% of the visual display and the initial 10% of the duration of an audio clip. In November 2025, MeitY also introduced the AI Guidelines, which advocate the principle of "do no harm" and recommend creating governance institutions and India-specific risk frameworks, and making AI safety tools accessible to the public. The European Union took a different stance with the EU AI Act, which came into force in August 2024, although its full implementation is not expected to be completed until 2027. The EU AI Act classifies AI systems by the level of risk they pose and regulates them accordingly. AI systems in the "unacceptable risk" category are banned in the EU, while "high-risk" systems must undergo assessments and regular monitoring.
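The draft labelling requirement reported here reduces to two coverage checks. The sketch below is a minimal, hypothetical illustration of how a platform might test a label against them, assuming the visual label is a single axis-aligned rectangle and the audio disclosure is one continuous interval starting at the beginning of the clip. `Box`, `visual_label_ok` and `audio_label_ok` are illustrative names, not part of any MeitY specification, and the final rule text may define "coverage" differently.

```python
from dataclasses import dataclass

# Threshold taken from the draft rule as reported (10%); the final rule may differ.
MIN_LABEL_PERCENT = 10


@dataclass(frozen=True)
class Box:
    """Hypothetical axis-aligned label rectangle, in pixels."""
    x: int
    y: int
    width: int
    height: int


def visual_label_ok(image_w: int, image_h: int, label: Box) -> bool:
    """True if the on-screen part of the label covers >= 10% of the image area."""
    # Clip the label to the frame so off-screen pixels do not count.
    left, top = max(label.x, 0), max(label.y, 0)
    right = min(label.x + label.width, image_w)
    bottom = min(label.y + label.height, image_h)
    visible_area = max(right - left, 0) * max(bottom - top, 0)
    # Integer comparison avoids floating-point rounding at the 10% boundary.
    return visible_area * 100 >= MIN_LABEL_PERCENT * image_w * image_h


def audio_label_ok(clip_seconds: float, start: float, end: float) -> bool:
    """True if the disclosure runs from the start through the initial 10% of the clip."""
    return start <= 0 and end * 100 >= MIN_LABEL_PERCENT * clip_seconds


if __name__ == "__main__":
    # A full-width banner 108 px tall on a 1920x1080 frame is exactly 10%.
    print(visual_label_ok(1920, 1080, Box(0, 0, 1920, 108)))  # True
    # A 2.5 s disclosure on a 30 s clip covers only about 8% of it.
    print(audio_label_ok(30.0, 0.0, 2.5))  # False
```

Clipping the rectangle to the frame matters because a label positioned partly off-screen would otherwise be credited with area no viewer sees.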
In July 2025, the Commission added a new framework, the General-Purpose AI Code of Practice. Providers that sign it commit to maintaining detailed technical documentation and instructions for those who integrate their models into their own products, and to implementing a policy to comply with EU copyright law. Many AI providers, including Meta, OpenAI and Anthropic, are involved in legal battles with authors, artists and news publishers over using copyrighted content to train their AI models without seeking permission or paying for it.

[7]

X says it's introducing more Grok guardrails & refining safeguards

MeitY studying X response to notice on curbing obscene AI-generated content: Sources

Social media platform X has told the Indian government that it is introducing more guardrails to its artificial intelligence-powered chatbot Grok and refining safeguards, such as stricter image generation filters, to minimise abuse of user images, people in the know told ET. The Ministry of Electronics and Information Technology (MeitY) is examining X's response detailing the actions it took to curb the spread of obscene content, and will respond accordingly, they added. MeitY last Friday asked X to remove all vulgar, obscene and unlawful content, especially content generated by Grok, from the platform within 72 hours and to take action against offending users. The deadline was subsequently extended by 48 hours after the company sought more time. In its report submitted on Wednesday, the Elon Musk-owned platform said it is also taking action against illegal content on the site, including child sexual abuse material, by removing it and permanently suspending accounts posting such material. Meanwhile, Grok continued to churn out sexually explicit content involving nudity in response to prompts as of Wednesday evening, even as the chatbot faces a backlash from governments around the world over this feature. In its order last Friday, MeitY directed X to undertake a technical, procedural and governance-level review of Grok AI. This should include its 'prompt-processing, output generation, responses generated using large language models, image handling and safety guardrails', to ensure the app does not generate or promote content containing nudity, sexualisation or unlawful material, the ministry said in its letter to X Corp's chief compliance officer. MeitY also flagged serious lapses in statutory due diligence under the IT Act, 2000 and the IT Rules, 2021. Subsequently, the platform said it was taking action against illegal content.
"Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content," Musk said in a post on X Saturday.

[8]

MeitY Seeks Info on X's Action on Grok 'Undressing' Images

After the Indian government extended by two days the deadline for X to submit its Action Taken Report, X submitted the report to the Ministry of Electronics and Information Technology (MeitY). The details in X's submission were not adequate, and the ministry reportedly asked the platform for additional information, especially on key takedown actions against the obscene content generated by Grok, according to an article published in The Print. For some context, X, which owns and operates Grok AI, introduced a new image editing feature over Christmas, on December 25, 2025, enabling users to generate non-consensual intimate images (NCII) of individuals from photos uploaded by other users. The update unleashed a wave of AI-generated morphed images of women in bikinis, most of them created without their consent and shared publicly on X's platform. Notably, X and the Grok AI bot still let users generate non-consensual images of women in bikinis. In one of several instances witnessed by MediaNama, when a verified user, Jeremy Stamper, asked Grok AI for an image of Swedish Deputy Prime Minister Ebba Busch, it complied with just the prompt "@grok bikini now." The post remains online and has garnered over 1.6 million views. Addressing these issues, the Indian government asked X on January 2 to submit the Action Taken Report by January 5, later extending the deadline to January 7. The government also warned that the platform may lose its safe harbor status if it does not comply with the government's orders and laws. Safe harbor exemptions under Section 79 of the IT Act shield a platform from liability for content posted by third-party users.
While X's official Safety and Global Government Affairs handles have not disclosed the contents of the report submitted to the Government of India, anonymous sources who spoke to PTI said X submitted a detailed reply acknowledging Indian laws and guidelines and stating that India is a big market for X. Reportedly, the submission outlined X's takedown policies for misleading posts and for non-consensual intimate images (NCII). However, despite the length of X's response, the Government of India said it lacked key information on takedown details, on the specific action taken against Grok AI, which had unleashed the undressing spree on the platform, and on X's plans to prevent such incidents in the future. Before X's submission to the government, X's Safety handle said it would act against "illegal content, including child sexual abuse material (CSAM)," but did not explicitly address the non-consensual sexually explicit images generated by its AI bot Grok. The platform said enforcement measures could include content removal, permanent account suspensions, and cooperation with law enforcement. A day earlier, X owner Elon Musk had also said users generating illegal content with Grok AI would face the same consequences as those who upload such material, under X's Rules. India is among the very few countries that responded swiftly to the non-consensual public undressing by X's users on its platform. Along with India, the Malaysian and UK governments issued statements criticising Grok AI's ability to generate non-consensual obscene content, and representatives of the French government wrote to the Paris public prosecutor about the ongoing controversy. On January 3, the Malaysian Communications and Multimedia Commission (MCMC) issued a statement against the misuse of AI-generation tools on X.
It noted that the platform is enabling "digital manipulation of images of women and minors to produce indecent, grossly offensive, or otherwise harmful content." According to the MCMC, the Online Safety Act 2025 requires licensed online platforms to prevent the spread of harmful content, including obscene material and child sexual abuse material. While X is not currently a licensed service provider, MCMC said the platform still has a responsibility to stop online harms. The regulator said it is continuing to investigate harmful content on X and will contact the company's representatives. It also urged all platforms accessible in Malaysia to implement safeguards in accordance with Malaysian laws, particularly for AI-powered features and image manipulation tools. Similarly, on January 5, UK regulator Ofcom said it is aware of serious concerns over a Grok feature on X that generates sexualised images, including of children. Ofcom said it has contacted X and xAI to assess whether they are meeting their legal duties to protect users in the UK, and will decide, based on their response, whether a formal investigation is required. At the time of writing this report, there is no update on whether X has submitted its response to Ofcom. Reportedly, the European Commission also said it was "well aware" that X's AI bot Grok is generating explicit sexual content, including images of children, according to its spokesman Thomas Regnier. Calling the material illegal and unacceptable, he said such content has "no place in Europe" and noted that this was not the first time Grok had produced such outputs. Brazilian lawmaker Erika Hilton said she has reported X and its AI chatbot Grok to the Federal Public Prosecutor's Office and the National Data Protection Authority, calling for the platform to be disabled nationwide until the matter is fully investigated. "All of this is a CRIME.
The right to one's image is individual; it cannot be transferred through the 'terms of use' of a social network, and the mass distribution of child porn*gr*phy by an artificial intelligence integrated into a social network crosses all boundaries," read her translated post on X.

[9]

Safe Harbor of X Could be Revoked Over Grok's CSAM Content

The government is prepared to revoke the safe harbor status of social media platform X "if it doesn't comply with the latest takedown directions on artificial intelligence (AI)-generated obscene images," anonymous officials told the Economic Times. This latest response from the Indian government comes as the Safety handle of X (formerly Twitter) has assured that it will take action against "illegal content, including Child Sexual Abuse Material (CSAM)," without specifically referring to the non-consensual sexually explicit imagery that X's AI bot Grok has been generating since the image-editing feature launched with the Grok bot around Christmas 2025. These actions can include removing the content, permanently suspending accounts, and working with local governments and law enforcement as necessary. "Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content," warned X owner Elon Musk, a day before X's Safety handle echoed the caution while referring to X's policies that define illegal content. Apart from the Indian government, the Malaysian government has also taken note of Grok's outputs, and French government representatives have written to the Paris public prosecutor about the same. According to X's Rules, which favour public participation in the "global public conversation", certain safety-related behaviours are restricted. It is important to note that Grok, by its own admission, violated these rules by generating Child Sexual Abuse Material (CSAM). On January 1, the Grok bot admitted that it had "generated and shared an AI image of two young girls (estimated ages 12-16)." "It was a failure in safeguards, and I'm sorry for any harm caused. xAI is reviewing to prevent future issues," read Grok's response when other users persistently asked about the CSAM material. In addition, X's policies ask users not to share abusive content and not to engage in, or incite others to engage in, targeted harassment.
However, the specific section on "Illegal and Regulated Behaviours", which states that Grok shouldn't be used for "any unlawful purpose or in furtherance of illegal activities" (the section Elon Musk and X's team were referring to while responding to the mass undressing campaign unleashed by the Grok bot), doesn't refer to sexual imagery, let alone non-consensual sexual imagery. It only refers to activities such as the sale of drugs or weapons, human trafficking, poaching of endangered species, and sexual services (that is, sex work-related activities). Under X's rules framework, adult content that does not involve minors, such as morphed bikini-wear images, can fall under the platform's adult content policy, which explicitly permits consensually produced and distributed adult imagery. The bigger question, then, is this: how do you verify the consent of the woman depicted in the morphed picture? Addressing the question of safe harbor exemptions for X and the Grok AI bot, Nikhil Pahwa, MediaNama's Founder and Editor, argued in The Quint that X, especially through Grok AI, can't claim safe harbor exemptions under Section 79 of the IT Act, which exempts a platform from liability for content posted by third-party users. Pahwa reasoned, "X is actively enabling the publishing of this content via its own AI service and not making 'reasonable efforts' to prevent it; quite the opposite." He was referring to the "reasonable efforts" that a platform must make under Rule 3(1)(b) of India's IT Rules to prevent users from publishing content that is "obscene, pornographic, paedophilic, invasive of another's privacy, including bodily privacy, insulting or harassing on the basis of gender, or racially or ethnically objectionable."
Further explaining why X can't claim safe harbor exemptions, Pahwa wrote, "Safe harbor protections are provided to platforms that allow others to post content: they act as 'intermediaries' and mere conduits. The company that runs X is not an intermediary here: its AI service is actively publishing this content, so safe harbor protections cannot apply to it." He therefore argued that the company behind Grok, namely X, is also potentially liable for generating NCII, not just the users who prompted the AI system, even though X's terms of service try to make users responsible for the content they ask its AI system to generate. "Safe harbor protections were never meant to apply to publishing, and consent should never be optional. Until India's regulatory framework, especially the Digital Personal Data Protection Act, reflects this reality, these problems will continue," he concluded. After MeitY's Secretary issued a notice giving X 72 hours to submit an "Action Taken Report" on the rampant misuse of the Grok bot's image-editing capabilities to generate sexually explicit images of women, mostly without their consent, the X team appears to have begun suspending a few of the accounts that had prompted Grok to generate bikini-wear images of various women. For instance, the X handle Komal Yadav (@komalyadav03), which prompted Grok several times for bikini-wear images of multiple women, is now suspended. However, even after the assurances from Elon Musk and X's Safety handle, the Grok AI bot is still generating bikini-wear and sexually explicit images of various women. Non-consensual image morphing is a serious problem. Even if one takes consent for granted when a person asks Grok to modify "my image", non-consensual image generation continues in the same post's thread, let alone when other users re-upload the picture to Grok to morph it again.
For example, a "verified" handle, Soumya Avasthi (@SoumyaAvasthi), which no longer exists, prompted Grok, saying, "Hey @grok put me in red saree!!" Complying with the prompt, Grok generated an image of the person in a red saree. However, Grok also complied when another user asked it to generate her image in Grok's app. Making matters worse, Grok will comply with random users' requests in the post's thread and proceed to generate Non-Consensual Intimate Images (NCII). Addressing a non-consensual morphed image, a verified user, Nandani S (@ChaiCodeChaos), reported the account (@HoeEnchanted) to the Cyber Cell India handle on X, and the account's current status reads, "This account doesn't exist." This could also mean that the account was deactivated by the user (a deactivation that can be reversed within 30 days) rather than suspended by X's team. Similarly, another verified user, Meghna (@CPUatOnePercent), says she got her morphed images taken down from X after two days. The account (@ltlswhatltls) that allegedly generated morphed images of Meghna also reads "doesn't exist anymore." Concerned about potential exploitation, several users began telling Grok and X that they do not authorise it "to take, modify, or edit ANY photo or video" of them, "whether those published in the past or the upcoming ones" that they post online. However, X's efforts to comply with the Indian government's orders have been uneven. For example, a handle named Kavi (@Kavithasri98) is still active at the time of writing despite generating bikini-wear images on Jan 2, 2026. The account, created on December 29, 2025, days after Grok's image-editing rollout, contains only images of one woman in different Indian attire, suggesting the images may be AI-generated.
However, MediaNama could not independently verify whether the person shown in the images is real or a deepfake, a limitation encountered repeatedly during the reporting of this story. This matters because, if the person is real, she may initiate action against X, the owner of the X handle, or both. If, however, the person is a deepfake, would generating such images still violate the privacy and dignity of an (unreal) woman? How would X deal with sexually explicit deepfakes of already deepfaked (probably unreal) women?

[10]

Grok obscene AI content: X submits response, IT Ministry examining it, say sources

The IT Ministry is examining the response and submissions made by X following a government directive to crack down on misuse of artificial intelligence chatbot Grok by users for the creation of sexualised and obscene images of women and minors, sources said. X had been given extended time until Wednesday, 5 PM to submit a detailed Action Taken Report to the ministry, after a stern warning was issued to the Elon Musk-led social media platform over indecent and sexually-explicit content being generated through misuse of AI-based services like 'Grok' and other tools. Sources told PTI that X has submitted their response, and it is under examination. The details of X's submission were, however, not immediately known. On Sunday, X's 'Safety' handle said it takes action against illegal content on its platform, including Child Sexual Abuse Material (CSAM), by removing it, permanently suspending accounts, and working with local governments and law enforcement as necessary. "Anyone using or prompting Grok to make illegal content will suffer the same consequences as if they upload illegal content," X had said, reiterating the stance taken by Musk on illegal content. On January 2, the IT Ministry pulled up X and directed it to immediately remove all vulgar, obscene and unlawful content, especially generated by Grok (X's built-in artificial intelligence interface) or face action under the law. In the directive on Friday, the ministry asked the US-based social media firm to submit a detailed action taken report (ATR) within 72 hours, spelling out specific technical and organisational measures adopted or proposed in relation to the Grok application; the role and oversight exercised by the Chief Compliance Officer; actions taken against offending content, users and accounts; as well as mechanisms to ensure compliance with the mandatory reporting requirement under Indian laws. 
The IT Ministry, in the ultimatum issued, noted that Grok AI, developed by X and integrated on the platform, is being misused by users to create fake accounts to host, generate, publish or share obscene images or videos of women in a derogatory or vulgar manner. "Importantly, this is not limited to creation of fake accounts but also targets women who host or publish their images or videos, through prompts, image manipulation and synthetic outputs," the ministry said, asserting that such conduct reflects a serious failure of platform-level safeguards and enforcement mechanisms, and amounts to gross misuse of artificial intelligence (AI) technologies in violation of stipulated laws. The government made it clear to X that compliance with the IT Act and rules is not optional, and that the statutory exemptions under section 79 of the IT Act (which deals with safe harbour and immunity from liability for online intermediaries) are conditional upon strict observance of due diligence obligations. "Accordingly, you are advised to strictly desist from the hosting, displaying, uploading, publication, transmission, storage, sharing of any content on your platform that is obscene, pornographic, vulgar, indecent, sexually explicit, paedophilic, or otherwise prohibited under any law...," the ministry said. The government warned X in clear terms that any failure to observe due diligence obligations shall result in the loss of the exemption from liability under section 79 of the IT Act, and that the platform will also be liable for consequential action under other laws, including the IT Act and Bharatiya Nyaya Sanhita. It asked X to enforce user terms of service and AI usage restrictions, including ensuring strong deterrent measures such as suspension, termination and other enforcement actions against violating users and accounts. 
X has also been asked to remove or disable access "without delay" to all content already generated or disseminated in violation of applicable laws, in strict compliance with the timelines prescribed under the IT Rules, 2021, without, as such, vitiating the evidence. Besides India, the platform has drawn flak in the UK and Malaysia too. Ofcom, the UK's independent communications regulator, in a recent social media post, said: "We are aware of serious concerns raised about a feature on Grok on X that produces undressed images of people and sexualised images of children". "We have made urgent contact with X and xAI to understand what steps they have taken to comply with their legal duties to protect users in the UK. Based on their response, we will undertake a swift assessment to determine whether there are potential compliance issues that warrant investigation," Ofcom said.

[11]

India Directs X to Curb AI-Generated Sexual Content

The Ministry of Electronics and Information Technology (MeitY), in a letter dated January 2, 2026, directed X (formerly Twitter) to take urgent action against the proliferation of obscene, nude, indecent, and sexually explicit content, particularly content generated or circulated through the misuse of its AI services such as Grok and other xAI tools. In short, the ministry has ordered X to promptly remove such material, strengthen its content moderation and AI safeguards, and demonstrate compliance with India's due diligence requirements for intermediaries. This move follows a wave of activity on X over the past few days, during which users repeatedly prompted the platform's AI chatbot, Grok, to generate graphic sexual content using photos of real women in replies or posts. These outputs were generated directly in replies and often remained visible for extended periods. As a result, the content gained traction through engagement loops, with multiple users amplifying or iterating on the same prompts, raising fresh concerns about the platform's controls over AI-assisted content creation and distribution. Meanwhile, MeitY's intervention builds on its earlier advisory on obscene and sexually explicit content, which reminded intermediaries of their obligations under the Information Technology Act and the IT Rules, 2021. The advisory stressed that platforms must prevent the hosting and dissemination of prohibited content, act swiftly upon gaining actual knowledge, and deploy adequate technological measures to curb the spread of unlawful material, particularly content that harms the dignity and privacy of women. Further, MeitY Secretary S. Krishnan said that "they cannot escape their duty or responsibility simply by pleading safe harbour", and that "social media entities have to show responsibility", when asked about the Grok incident during an interview with CNBC-TV18. 
The notice directs X to take immediate and concrete steps to prevent the hosting, generation, publication, transmission, storage, or sharing of obscene, nude, indecent, sexually explicit, vulgar, paedophilic, or otherwise unlawful content, including content created or amplified through AI-enabled services such as Grok. It instructs the platform to desist from allowing such material in any form and to act strictly in line with statutory due diligence obligations under the IT Act and the IT Rules, 2021. The notice further requires the platform to carry out a comprehensive technical, procedural, and governance-level review of the Grok application. This review must cover prompt processing, large language model-based output generation, image handling, and safety guardrails, and must ensure that the application does not generate, promote, or facilitate nudity, sexualisation, sexually explicit material, or any other unlawful content in any form. In parallel, the platform must enforce its user terms of service, acceptable use policies, and AI usage restrictions, including by imposing strong deterrent measures such as suspension, termination, or other enforcement action against users and accounts found to be in violation. Additionally, the platform must submit a detailed Action Taken Report (ATR) to the ministry within 72 hours of the issuance of the letter. This report must outline the technical and organisational measures adopted or proposed in relation to the Grok application, the role and oversight exercised by the Chief Compliance Officer, the actions taken against offending content, users, and accounts, and the mechanisms put in place to ensure compliance with mandatory offence-reporting requirements under criminal procedure law. 
India's deepfake regulation debate has largely centred on MeitY's draft amendments to the IT Rules on synthetic information, which aim to push platforms and AI service providers towards labelling and provenance-style compliance, while also expanding the conversation beyond simple takedowns. At MediaNama's Regulating for Deepfakes in India discussion, experts repeatedly questioned whether disclosure mandates alone can work at scale, and whether the government should assign responsibility differently across the ecosystem. One participant said that "the problem statement of these particular rules, which seems to be deepfakes and harmful deepfakes" could be better resolved "through a value chain approach where you're assigning appropriate responsibilities across various layers of the AI value chain". At the same time, speakers warned that the draft framework risks conflating AI governance with intermediary liability. One expert noted that "by conflating AI oversight with the liability regime, we are essentially creating outcomes that are not needed and a lot of confusion", referring to how labelling and provenance requirements begin to resemble AI technology regulation rather than content distribution compliance. Meanwhile, enforcement and detection emerged as central issues. One speaker pointed to existing deployments of detection systems, stating, "We are live with many banks and financial institutions", and that the system is "handling lakhs of KYC verifications per day" with "millions of API hits every month" that are "scalable to tens of millions of detections per day". However, they also asked, "Who will pay for it?", highlighting the cost challenge. This matters because India's deepfake and AI misuse problem has moved beyond fringe tools and isolated platforms. Generative capabilities now sit directly within large, mainstream digital services, enabling harmful content to be created and circulated at scale. 
This episode shows that when platforms integrate such tools into social feeds, replies, and engagement-driven interfaces, misuse can spread rapidly and gain visibility faster than existing moderation controls can respond. At the same time, the availability of face-swapping applications on the Google Play Store in India underscores that AI-enabled misuse spans multiple layers of the digital ecosystem. While regulators and platforms may intervene at the level of content distribution, the underlying tools that enable synthetic sexual content often remain easily accessible through app marketplaces. This creates an uneven regulatory landscape, where action against one platform does not necessarily reduce overall availability or use. Against this backdrop, the focus on AI features within a major social platform highlights a broader policy challenge. Regulatory responses are increasingly confronting not just user behaviour, but the design and deployment of generative systems themselves. However, these interventions remain largely case-specific. Consequently, this episode points to a growing gap between the pace of generative AI adoption and the coherence of regulatory oversight. As AI-driven content creation becomes more deeply woven into everyday platforms, the effectiveness of enforcement will depend on whether safeguards extend consistently across platforms, tools, and distribution channels -- rather than operating in isolation.

[12]

Grok obscene AI content: Govt gives X time till Jan 7 to submit report - The Economic Times

In a recent development, the Indian government has extended the deadline for social media platform X to address issues surrounding explicit content produced by its AI, Grok. X is now required to furnish a comprehensive report by January 7. The government has given X additional time until January 7 to submit a detailed Action Taken Report after it issued a stern warning to the Elon Musk-led social media platform over indecent and sexually-explicit content being generated through misuse of AI-based services like 'Grok' and other tools. On Friday, the government directed X to immediately remove all vulgar, obscene and unlawful content, especially generated by Grok (X's built-in artificial intelligence interface) or face action under the law. The ministry had also asked the US-based social media firm to submit a detailed action taken report (ATR) within 72 hours of the directive (effectively by January 5). Sources said X had sought more time, and now it has been asked to submit its report by January 7. The IT Ministry, in its January 2 missive, said that Grok AI, developed by X and integrated on the platform, is being misused by users to create fake accounts to host, generate, publish or share obscene images or videos of women in a derogatory or vulgar manner. "Importantly, this is not limited to creation of fake accounts but also targets women who host or publish their images or videos, through prompts, image manipulation and synthetic outputs," the ministry said, asserting that such conduct reflects a serious failure of platform-level safeguards and enforcement mechanisms, and amounts to gross misuse of artificial intelligence (AI) technologies in violation of stipulated laws. The ministry said the regulatory provisions under the IT Act and rules were being flouted by the platform, particularly in relation to obscene, indecent, vulgar, pornographic, paedophilic, or otherwise unlawful or harmful content.
"The aforesaid acts and omissions are viewed with grave concern, as they have the effect of violating the dignity, privacy and safety of women and children, normalising sexual harassment and exploitation in digital spaces, and undermining the statutory due diligence framework applicable to intermediaries operating in India," the IT Ministry said, drawing attention to its December 29, 2025 advisory that had instructed all platforms to undertake an immediate review of their internal compliance frameworks, content moderation practices and user enforcement mechanisms, in order to ensure strict and continuous adherence to laws. The government made it clear to X that compliance with the IT Act and rules is not optional, and that the statutory exemptions under section 79 of the IT Act (which deals with safe harbour and immunity from liability for online intermediaries) are conditional upon strict observance of due diligence obligations. "Accordingly, you are advised to strictly desist from the hosting, displaying, uploading, publication, transmission, storage, sharing of any content on your platform that is obscene, pornographic, vulgar, indecent, sexually explicit, paedophilic, or otherwise prohibited under any law for the time being in force in any manner whatsoever," the ministry said on January 2. The government warned X in clear terms that any failure to observe due diligence obligations shall result in the loss of the exemption from liability under section 79 of the IT Act, and that the platform will also be liable for consequential action under other laws, including the IT Act and Bharatiya Nyaya Sanhita. 
It directed X to immediately undertake a comprehensive technical, procedural and governance-level review of Grok, including its prompt-processing, output-generation (responses generated using Large Language Models or LLMs), image-handling and safety guardrails "so as to ensure that the application does not generate, promote or facilitate content which contains nudity, sexualisation, sexually explicit or otherwise unlawful content in any form". It asked X to enforce user terms of service and AI usage restrictions, including ensuring strong deterrent measures such as suspension, termination and other enforcement actions against violating users and accounts. X has also been asked to remove or disable access "without delay" to all content already generated or disseminated in violation of applicable laws, in strict compliance with the timelines prescribed under the IT Rules, 2021, without, as such, vitiating the evidence. The ministry has asked X to submit a detailed Action Taken Report (ATR) within 72 hours of the date of issuance of the said letter. The ATR, it said, must cover specific technical and organisational measures adopted or proposed in relation to the Grok application; the role and oversight exercised by the Chief Compliance Officer; actions taken against offending content, users and accounts; as well as mechanisms to ensure compliance with the mandatory reporting requirement under Indian laws. "...ensure ongoing, demonstrable and auditable compliance with all due diligence obligations under the IT Act and the IT Rules, 2021, failing which appropriate action may be initiated, including the loss of the exemption from liability under section 79 of the IT Act, and consequential action as provided under any law including the IT Act and the BNS," the ministry wrote. On Sunday, X's Safety handle said it will act against illegal content by removing it, permanently suspending accounts that uploaded the material and working with local governments as required. 
"Anyone using or prompting Grok to make illegal content will suffer the same consequences as if they upload illegal content," it had said, reiterating Musk's stance on illegal content.

[13]

X, Grok and the limits of safe harbour in India | Marketing | Campaign India

India's Ministry of Electronics and Information Technology (MeitY) has warned X (formerly known as Twitter) that it risks losing its 'safe harbour' status. The trigger was not a single post or rogue account but the circulation of AI-generated obscene content created using xAI's chatbot Grok. According to The Economic Times, the government has told the company that failure to remove flagged images and videos and submit an auditable compliance report could lead to the withdrawal of legal protections under Section 79 of the Information Technology Act. At stake is the intermediary immunity that shields platforms from liability for user-generated content, provided they follow Indian rules and act swiftly on takedown orders. Officials have made it clear that this protection is conditional -- and that generative AI tools hosted and promoted by platforms are now testing the boundaries of that framework. The notice follows a growing volume of complaints around Grok's image-generation features, particularly its so-called 'Spicy Mode'. Many netizens took to social media to point out that the feature has been misused to create non-consensual, sexualised deepfakes of public figures and private individuals, many of them women, including celebrities such as singer Taylor Swift, and to share their disgust at this intrusion into their privacy on a public platform. On January 2, MeitY sent X a formal notice saying it had "grave concern" over the use of Grok to generate obscene images. The government warned that "such conduct reflects a serious failure of platform-level safeguards and enforcement mechanisms, and amounts to gross misuse of artificial intelligence technologies in violation of applicable laws".
It added that the spread of such content could violate the dignity, privacy and safety of women and children, "normalising sexual harassment and exploitation in digital spaces, and undermining the statutory due diligence framework applicable to intermediaries operating in India". Crucially, officials and legal experts say the issue is not limited to user misuse. Because Grok is a platform-provided AI tool, X could be seen as enabling or amplifying the creation of harmful content rather than merely hosting it -- weakening claims to intermediary immunity. A government source told The Economic Times: "Back in 2021, the Centre had informed the Delhi High Court that X (then Twitter) had briefly become legally responsible as a publisher. In any case, the government is not keen on removing safe harbour provisions. But given the explosion of AI-made content that harms our citizens, especially women and children, it is prepared to take a hard step." A member of xAI's technical staff, Parsa Tajik, tweeted that its engineering teams are working to introduce tighter guardrails. Critics argue that incremental fixes may not be enough unless platforms rethink how generative AI tools are deployed and moderated at a systemic level.

Safe harbour, with conditions attached

Under Section 79 of the Information Technology Act, 2000, social media platforms are treated as neutral intermediaries and are not held responsible for content created by third parties -- so long as they follow government rules and promptly remove unlawful material when directed. That protection, however, depends on demonstrable due diligence. Platforms must act quickly on government or court orders, appoint local compliance officers, and maintain safeguards to prevent the spread of illegal content.
MeitY has said X is not adhering to the Information Technology (IT) Rules, 2021, and the Bharatiya Nagarik Suraksha Sanhita, 2023, which deal with obscene, indecent, vulgar, pornographic, paedophilic or otherwise unlawful content. Non-compliance within 72 hours, the ministry has warned, could result in revocation of X's safe harbour status. The ministry has also questioned whether X's India-based compliance officers have the authority and oversight mandated under Indian law. This revives a familiar fault line from earlier disputes with the platform. In December, MeitY issued an advisory asking social media firms to immediately review their compliance frameworks after observing that platforms were not acting strictly against obscene and unlawful content. The directive followed public complaints and representations from parliamentarians. Rajya Sabha member Priyanka Chaturvedi had written to Union IT minister Ashwini Vaishnaw seeking urgent intervention over the misuse of Grok to create vulgar images of women. Vaishnaw has signalled that the government's patience with self-regulation is wearing thin. "Shouldn't platforms operating in a context as complex as India adopt a different set of responsibilities? These pressing questions underline the need for a new framework that ensures accountability and safeguards the social fabric of the nation," he had stated.

Musk's response -- and unresolved questions

X owner Elon Musk responded publicly, saying users who generate illegal content using Grok would face the same consequences as those who upload illegal material. "Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content," Musk said on X, responding to a post about "inappropriate images". Indian regulators seem unconvinced by that analogy.
Beyond takedowns, MeitY is pressing for design-level changes to Grok itself, including disabling or sharply restricting 'Not suitable for work' modes, preventing the generation of realistic likenesses without consent, and maintaining independently verifiable moderation records. This is not X's first brush with Indian regulators. In 2021, the platform briefly lost its safe harbour protection after failing to comply fully with the IT Rules, 2021, including the appointment of key India-based officers. Its legal immunity remained suspended for nearly three months. Advertisers had already stepped back The regulatory heat comes against the backdrop of a long-running crisis of confidence between X and the advertising industry. Campaign reported in 2022 that advertisers and media buyers had started pausing advertising on X amid concerns about brand safety and moderation following Musk's takeover. Based on their unease about content controls and Musk's "erratic behaviour", they advised brands to avoid the platform because they could not guarantee a brand-safe environment. GroupM assessed advertising on Twitter as "high risk", according to a report in the Financial Times. Several large advertisers hit pause on spending. In the US, Media Matters reported that 50 of the top 100 advertisers, which spent $750m in 2022, had seemingly stopped advertising on Twitter. Brands that paused spending included VW, General Motors, Diageo, Heineken, Nestlé, Coca-Cola, Mars and Ford. This prompted Musk to personally call chief executives to berate them, according to media reports. Agency leaders told Campaign back then, on condition of anonymity, that concerns centred on reduced investment in moderation, staff exits and Musk's own conduct on the platform. "Some of the things he is posting, including memes, are horrendous," one agency leader said. 
"The challenge for Musk and Twitter is that he will need to prove that this is an environment that is safe for advertisers, and so far we have not seen that." Further unease followed sweeping cuts to Twitter's sales and moderation teams. Aura Intelligence reported that over 6,000 employees were let go after Musk's takeover of Twitter, reducing the workforce by nearly 80%.

A platform under pressure from both sides

In a 2024 article for WARC, Gonka Bubani of Kantar described Musk's stewardship of X as a case study in how quickly platforms can unravel when advertisers and moderation systems are alienated. "We now know that marketers are fleeing: a net 26% plan to reduce ad spend on X in 2025, the largest drop that Kantar's annual Media Reactions report has tracked across any major platform since its start in 2020," Bubani wrote. "Only 12% of marketers now trust ads on X... Only one in 25 believe X provides adequate brand safety," she added, noting that Google's perceived brand safety rating stood at 39%. While Bubani acknowledged that confidence in X had been declining before Musk's acquisition, she argued that dismantling moderation systems and increasing volatility had pushed the platform "to the edge". For Indian regulators, the Grok controversy sharpens a broader policy question: whether generative AI features change a platform's legal responsibilities. If MeitY withdraws X's safe harbour protection, the company could face direct liability for user posts in India, along with stricter disclosure and moderation obligations. That would set a precedent for how governments treat AI-native features embedded within social platforms. Regulators in Malaysia and parts of Europe are already probing Grok's outputs and urging tighter safeguards. For social media platforms and AI product teams, the trade-off is becoming unavoidable: either redesign features that drive engagement but carry foreseeable risk, or accept tighter regulation and increased legal exposure.
India's message to X suggests that promises and post-hoc takedowns may no longer be enough. The scrutiny is shifting upstream -- towards product design, model behaviour and whether platforms can prove, with evidence, that harm is being prevented rather than merely cleaned up after the fact.

[14]

Harbour changes in different ports - The Economic Times

Social media platform X risks losing its 'safe harbour' status in India. This is due to content generated by its AI bot, Grok. The government seeks a compliance report from X. Tech companies must balance free speech with legal requirements. They need to provide solutions for unacceptable content. This ensures user trust and business interests.

Microblogging site X is at risk of losing its 'safe harbour' status in India over reportedly 'obscene' content generated by X's AI bot Grok. Under Section 79 of the IT Act, 2000, social media sites are treated as neutral hosts that are not held responsible for content created by third parties (the 'safe harbour'), as long as they follow GoI rules. GoI now wants a compliance report from X over takedown requests. In its earlier avatar, Twitter had briefly been made liable for content it carried after clashing with GoI over curation. Such conflicts will keep erupting as long as media platforms and lawmakers hold divergent 'cultural' views. Tech companies are bound by their contract with their users to allow free speech within reason. A narrower reading imposes extra parameters on their business model. But social media must operate in multiple jurisdictions to capture network effects. The compliance burden thus becomes a variable for media platforms. Safe harbour status is an accomplishment, not an entitlement. Tech that facilitates creation and dissemination of patently unacceptable content must also provide curating solutions in concert with lawmakers. Obvious violations can be counteracted technically without having to invoke the law. And when law enforcement agencies raise a concern, it must be acted upon, or reasons must be given for inaction. Taking the stand that blaming AI for obscenity is like blaming the pen for blasphemy serves little purpose. Content is created through human agency, and tech providers have adequate leverage to deter rogue action.
It is in their interests to ensure good housekeeping to retain users and advertisers. Limits to freedom of expression are defined by culture. The arrangement works when governments outsource some policing to tech companies. Social media must conform to restrictions imposed on legacy media, and additional requirements may be necessary to adapt those restrictions to the technology. Tech is not ethical by itself. The ethical dimension must be provided by the society it serves, and profits from.

[15]

ETtech Explainer: Why Grok's edgy AI image generator has come under fire

Amid huge backlash online, India on Friday wrote to X, highlighting its failure to observe statutory due diligence under the Indian IT rules and demanding an "action taken report." Manipulated images of Bollywood actors and Indian public figures went viral as more and more users tried the feature, using prompts such as "undress," "change clothes" and "change to a bikini."

A creative tool gone wrong!

New Year's Eve on X turned controversial as users misused the microblogging platform's AI assistant to generate thousands of non-consensual and sexualised images of women, children and public figures. It once again brings the downsides of AI tools to centre stage. ETtech takes a look at what went wrong.

What happened?

On December 25, X Corp executive chairman Elon Musk announced a new feature of Grok that allowed X users to edit images and videos by simply asking the AI assistant to add, remove or create elements in the file. Things went south when users started using the feature to 'undress women', flooding the platform with thousands of obscene images. Unlike competitors such as Google's Gemini or OpenAI's ChatGPT, which filter prompts and image generation for sexual and pornographic content, Grok was positioned as 'more edgy' with fewer guardrails. "Cybersecurity should not be treated as an afterthought. It has to be a 'before thought'," said Ankush Tiwari, founder of cyber forensics startup Pi Labs AI. "We need to analyse threat vectors for large foundation models." He noted that AI models are now being released openly. However, where are these models trained?
Which geography do they come from? What kind of data are they trained on? These questions often go unanswered, Tiwari added.

How has India responded?

The Ministry of Electronics and Information Technology (MeitY) wrote to X on Friday evening, stating that such conduct reflects a serious failure of platform-level safeguards and enforcement mechanisms. This amounted "to gross misuse of artificial intelligence technologies in violation of applicable laws," it said. The ministry said the laws are not being adequately adhered to by the platform, "particularly in relation to obscene, indecent, vulgar, pornographic, paedophilic, or otherwise unlawful or harmful content which are potentially violative of extant laws." On December 29, MeitY had also issued an advisory to social media platforms, warning them that they are legally accountable for stopping the spread of obscene and non-consensual content. India has been on high alert regarding deepfakes since late 2023, following viral morphed videos of Indian celebrities. Estimates through 2025 put India's X user base at approximately 22 to 27 million, making it one of the platform's top markets.

What do laws in India say?

Legal experts said the misuse of AI tools to create non-consensual and sexualised images violates multiple Indian laws, even though there is no AI-specific law governing platforms. Currently, such content falls under the Indecent Representation of Women (Prohibition) Act, 1986, which bans obscene or derogatory depiction of women. The Information Technology Act also applies, with sections 66E and 67 dealing with privacy violations and obscene content online. While platforms enjoy intermediary immunity under Section 79, this protection is lost if illegal content is not taken down after being flagged. The recently passed Digital Personal Data Protection (DPDP) Act, 2023 mandates consent for using personal data, including photographs and facial data, and lists penalties of up to Rs 250 crore for breaches.
Calling the trend "immoral" and illegal, Adithya LHS, chief executive officer at NyayNidhi, an AI-powered legal-tech company, said social media platforms often rely on intermediary immunity to avoid liability. "But disabling and removing such illicit content is part of a platform's core responsibility. This clearly crosses the line," he said. Adithya said AI remains largely unregulated globally. "Regulation always lags innovation, especially when AI models are improving at an exponential pace," he said.

Has X responded to this?

X responded to the global outrage by hiding Grok's media tab and directing users to report violations of its stated prohibitions on non-consensual intimate imagery. "X's decision to disable Grok's media tab and claim tighter safeguards is a reactive step, but the bar should be higher," said Kanishk Gaur, CEO of digital risk management firm Athenian Tech. "Default-safe settings, strong consent checks, auditable controls, and consistent policy application, especially for features that operate in public feeds, are needed."

[16]

Govt issues notice to X over 'obscene' content targeting women, children on Grok

MeitY has issued a formal notice to X, citing serious lapses in statutory due diligence under the IT Act and Rules. The government is concerned about X's AI tool, Grok, generating and circulating obscene content, particularly targeting women and children. X has been directed to review Grok's framework, remove unlawful content, and take action against offending users within 72 hours.

The Ministry of Electronics and Information Technology (MeitY) on Friday issued a formal notice to Elon Musk-owned social media platform X, flagging serious lapses in statutory due diligence under the Information Technology Act and IT Rules. Amid ongoing concerns over misuse of the platform's AI tool, Grok, the government has raised the issue of the tool generating and circulating obscene, sexually explicit and derogatory content, particularly targeting women and children, calling it a grave violation of dignity, privacy and digital safety. MeitY has directed the platform to immediately review the technical and governance framework of its AI tool Grok and remove all unlawful content. It has also asked the platform to take action against offending users and submit an Action Taken Report within 72 hours. The ministry, led by Union Minister Ashwini Vaishnaw, warned that continued non-compliance could lead to loss of legal protection under the IT Act and strict action under multiple cyber, criminal and child protection laws. Earlier on Friday, Vaishnaw said that social media firms should take responsibility for the content they publish and that a standing committee has already recommended a tough law to fix the accountability of platforms. This week, the government warned online platforms, mainly social media firms, of legal consequences if they fail to act on obscene, vulgar, pornographic, paedophilic and other forms of unlawful content.
"Social media should be responsible for the content they publish. Intervention is required," Vaishnaw said on Friday on the sidelines of a MeitY event. Meanwhile, Rajya Sabha member Priyanka Chaturvedi has also written to the minister seeking urgent government intervention on increasing incidents of AI apps being misused to create vulgar photos of women and post them on social media. "The standing committee has recommended that there is a need to come up with a tough law to make social media accountable for content they publish," Vaishnaw said. The Parliamentary Standing Committee on the Ministry of Information and Broadcasting has recommended that the government hold social media and intermediary platforms accountable for peddling fake content and news. (With inputs from PTI)

[17]

Grok vs Indian Govt: Why Musk's AI is facing serious scrutiny in India