India Proposes Stringent AI Regulations to Combat Deepfakes and Misinformation

12 Sources

[1]

India plans tightening AI rules to stem deepfake surge

India has proposed sweeping new regulations to govern artificial intelligence, aiming to curb a surge in misinformation and deepfake videos in the world's most populous nation. The Ministry of Electronics and Information Technology announced the proposed amendments, citing "the growing misuse of technologies used for the creation or generation of synthetic media."

"Recent incidents of deepfake audio, videos and synthetic media going viral on social platforms have demonstrated the potential of generative AI to create convincing falsehoods," a briefing note from the ministry issued late Wednesday read. "Such content can be weaponized to spread misinformation, damage reputations, manipulate or influence elections, or commit financial fraud," it added. "These proposed amendments provide a clear legal basis for labeling, traceability, and accountability," the note continued, saying the changes would "strengthen the due diligence obligations" of social media intermediaries.

India has more than 900 million internet users, according to the Internet and Mobile Association of India. China has more internet users, but India is more open to US tech companies. The government has already launched an online portal called Sahyog (meaning "cooperate" in Hindi) aimed at automating the process of sending government notices to content intermediaries such as X and Facebook.

Major AI firms looking to court users in the world's fifth-largest economy have made a flurry of announcements about expansion this year. This month, US startup Anthropic said it plans to open an office in India, with its chief executive Dario Amodei meeting Prime Minister Narendra Modi. OpenAI has said it will open an India office, with its chief Sam Altman noting that ChatGPT usage in the country had grown fourfold over the past year. AI firm Perplexity also announced a major partnership in July with Indian telecom giant Airtel.

[2]

India's Deepfake Crackdown Signals Tougher AI Rules on Fake Content | AIM

The draft IT Rules amendments define synthetic information, mandate clear labelling and verification, and allow only senior officers to issue removal notices.

In what marks one of the first formal steps towards regulating the use of artificial intelligence (AI) in India, the Union government has released draft amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, seeking to establish legal guardrails around synthetically generated information, including deepfakes. The electronics and information technology ministry (MeitY), which notified the draft, has invited feedback on the proposed changes by November 6.

The government said the amendments are aimed at ensuring an "open, safe, trusted and accountable internet", amid the rapid rise of generative AI tools and the growing risk of misuse through synthetic content that can mislead, impersonate or manipulate. The ministry noted that the proliferation of deepfake videos and AI-generated content has significantly increased the potential for harm, from spreading misinformation and manipulating elections to impersonating individuals or creating non-consensual intimate imagery. Recognising these risks and following public consultations and parliamentary discussions, MeitY has proposed strengthening the due diligence obligations of intermediaries, especially social media intermediaries, significant social media intermediaries and platforms that facilitate the creation or modification of synthetic media.

Synthetic Information Defined

For the first time, the draft amendments introduce a definition of "synthetically generated information", described as information "artificially or algorithmically created, generated, modified or altered using a computer resource, in a manner that reasonably appears to be authentic or true".
The rules clarify that references to "information" used for unlawful acts under the IT Rules will now explicitly include such synthetically generated content.

To ensure transparency and accountability, the amendments propose mandatory labelling and metadata embedding for all synthetic content. Intermediaries that enable the creation or modification of AI-generated media will be required to ensure that such content carries a permanent, unique metadata or identifier that cannot be removed or altered. This label must be prominently displayed or made audible, covering at least 10% of the visual display area or, in the case of audio content, the initial 10% of its duration, so users can immediately identify that the content is synthetic.

Significant social media intermediaries will also face enhanced responsibilities. They must require users to declare whether any uploaded content is synthetically generated and deploy reasonable and proportionate technical measures to verify these declarations. If the content is confirmed to be synthetic, the platform must clearly display an appropriate label or notice saying so. Failure to act against synthetically generated information in violation of these rules will be considered a lapse in due diligence. The amendments further specify that intermediaries acting in good faith to remove or disable access to synthetic information based on grievances will continue to enjoy protection under Section 79(2) of the IT Act, which grants them exemption from liability for third-party content.

The Rationale

MeitY said the rationale behind the proposed legal framework stems from recent incidents of deepfake media being weaponised to damage reputations, spread falsehoods, influence elections or commit fraud. Policymakers worldwide have raised concerns about such fabricated content, which is increasingly indistinguishable from authentic material and threatens public trust in digital information ecosystems.
These issues have also been debated in both Houses of Parliament, prompting the ministry to issue earlier advisories to intermediaries urging stronger controls on deepfakes. The government said the proposed rules will establish a clear legal basis for labelling, traceability and accountability of AI-generated content. They are designed to balance user protection and innovation by mandating transparency without stifling technological advancement. If adopted, the amendments would make India one of the first countries to codify rules specifically addressing synthetic and AI-generated information. The framework aims to empower users to distinguish authentic content from manipulated or fabricated material while ensuring that intermediaries and platforms hosting such information remain accountable.

MeitY has invited stakeholders and the public to submit comments or suggestions on the draft rules by email, in MS Word or PDF format, by November 6.

Akif Khan, a VP analyst at Gartner, said the law would be a step in the right direction, particularly in requiring social media platforms to label the content users post on their platforms. However, he noted that the stated requirement of "reasonable and appropriate technical measures" for doing so is open to interpretation. The traditional challenges of jurisdiction and applicability also remain, he said, questioning whether the law would extend to social media posts made by users in other countries that Indian citizens could potentially see. "Those challenges will need to be resolved for the law to have the intended positive impact in the Indian context," he added.

Who Keeps a Check?

MeitY's review also recommended stronger safeguards for senior-level accountability, precise identification of unlawful content and periodic review of government directions.
The amendments specify that only a senior officer not below the rank of joint secretary (or a director or equivalent where no joint secretary is appointed) can issue removal notices to intermediaries. For police authorities, only a specially authorised deputy inspector general of police (DIG) can issue such intimations. These notices must provide a clear legal basis, specify the unlawful act and identify the exact content to be removed, replacing earlier broad references with "reasoned intimation" under Section 79(3)(b) of the IT Act, according to an official statement. All notices under Rule 3(1)(d) will be reviewed monthly by an officer not below the rank of secretary of the appropriate government to ensure necessity and proportionality. The amendments aim to balance citizens' rights with state regulatory powers, ensuring transparent and precise enforcement.

In a LinkedIn post, Rakesh Maheshwari, former senior director and group coordinator of cyber laws, cyber security and data governance at MeitY, said the amendment brings much-needed clarity on who can issue takedown notices. Checks and balances have also been put in place to ensure proper accountability, and these may also be followed up on, he added. "I believe that the earlier notifications designating multiple officers by each agency/state governments and published by various government departments (including state governments) will now be reviewed," he said.

Refinement Needed

Cyber law advocate Prashant Mali welcomed the amendments for addressing deepfake risks. "The devil, as always, hides in the algorithm," he noted, highlighting the need for clear benchmarks and careful implementation to balance innovation with regulation. According to him, requiring a 10% visible label on AI-generated media, while aimed at transparency, could hinder aesthetic or artistic uses of generative AI.
He suggested adaptive watermarking, aligned with ISO/IEC 23053 and W3C provenance standards, as a more flexible alternative. The proposal to mandate user declarations for all synthetic content, Mali observed, may lead to compliance fatigue, and the rules should distinguish between AI-assisted edits and fully AI-generated fabrications. He also cautioned about cross-jurisdictional traceability, noting that deepfakes do not respect borders and that India should align with Budapest Convention principles and pursue mutual legal assistance protocols for synthetic media offences. If refined judiciously, he believes, the rules could position India as a model jurisdiction for responsible AI governance.

[3]

MeitY Proposes New Rules to Detect and Track AI-Generated Deepfakes

MeitY wants the AI labels to occupy 10 percent of the display surface

On Wednesday, the Ministry of Electronics and Information Technology (MeitY) proposed new rules for "synthetically generated information," such as deepfakes. These have been proposed as an amendment to the IT Rules, 2021, bringing artificial intelligence (AI)-generated content under the purview of the Indian Government and the legal system. The new document also clearly defines what constitutes synthetically generated information and increases the onus on social media platforms to clearly label and highlight AI-generated content whenever it is displayed to users.
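The 10% threshold is easy to state but worth working through at a concrete resolution. The Python sketch below uses hypothetical helpers, not anything MeitY has published. It assumes "visual display area" means the frame's pixel area, follows the "initial 10%" reading of the audio rule reported by AIM and The Economic Times (MediaNama describes it instead as presence during at least 10% of the duration), and rounds partial pixels and milliseconds up. The draft, as reported, does not specify units, rounding or label placement.

```python
from typing import Tuple

# Hypothetical helpers illustrating the 10% labelling thresholds in MeitY's
# draft amendment as reported. Assumptions (not specified by the draft):
# display area is measured in pixels, audio in milliseconds, and partial
# units are rounded up so the label never falls short of 10%.

LABEL_PERCENT = 10  # "at least 10%" of display area or audio duration


def ceil_percent(total: int, percent: int = LABEL_PERCENT) -> int:
    """Smallest integer >= percent% of total, using integer math only."""
    return (total * percent + 99) // 100


def min_label_area_px(frame_w: int, frame_h: int) -> int:
    """Minimum label area, in pixels, for a frame_w x frame_h display."""
    return ceil_percent(frame_w * frame_h)


def min_banner_height_px(frame_w: int, frame_h: int) -> int:
    """Height of a full-width banner label that meets the area threshold."""
    area = min_label_area_px(frame_w, frame_h)
    return -(-area // frame_w)  # ceiling division


def audio_label_window_ms(duration_ms: int) -> Tuple[int, int]:
    """(start, end) window in ms covering the initial 10% of a clip."""
    return (0, ceil_percent(duration_ms))


def label_meets_threshold(label_w: int, label_h: int,
                          frame_w: int, frame_h: int) -> bool:
    """True if a rectangular label covers at least 10% of the frame area."""
    return label_w * label_h * 100 >= frame_w * frame_h * LABEL_PERCENT


if __name__ == "__main__":
    print(min_label_area_px(1920, 1080))                  # 207360
    print(min_banner_height_px(1920, 1080))               # 108
    print(audio_label_window_ms(60_000))                  # (0, 6000)
    print(label_meets_threshold(1920, 100, 1920, 1080))   # False
```

Under these assumptions, a full-width banner on a 1920 x 1080 frame would need to be at least 108 pixels tall, and a 60-second audio clip would carry its disclosure over its first six seconds. Integer arithmetic is used so that floating-point rounding cannot push a label just below the threshold.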

[4]

Creative industry flags MeitY's 10% AI label rule as overreach - The Economic Times

The creative industry is pushing for exemptions from a proposed 10% screen or duration labelling rule for AI-generated content. They argue this blanket requirement could hinder legitimate AI use in film, animation, and VFX, urging a risk-based approach and alternative disclosures for non-deceptive applications.

The creative industry is seeking exemption from the blanket rule requiring AI-generated content on social media to display labels covering at least 10% of screen space or duration, which is aimed at preventing deepfakes and misinformation. The industry is arguing it could severely disrupt legitimate AI use in films, animations, VFX and communications, and is urging carve-outs for B2B and non-deceptive use, alternative disclosures for long-form content and a phased pilot to validate practicality before full roll out. The proposed changes to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules require companies and individuals to mark AI-generated material with labels covering at least 10% of the display area, or the first 10% of duration for audio.

"A blanket 10% disclosure could unintentionally make everyday AI workflows unfeasible," said Rajan Navani, co-chairman of CII National Committee on Media & Entertainment and CEO of JetSynthesys, suggesting dual compliance paths -- metadata-based tagging for back-end processes and visible labels only where manipulation could mislead audiences. He urged a risk-based framework that differentiates between deceptive and bona fide industrial use. In post-production, AI is often deployed for noise reduction, dubbing, or restoration. Navani warned that requiring large visible tags even in these instances would force costly re-rendering and degrade visual quality. "Visible disclosure makes sense where there's risk of deception. For back-end creative use, machine-readable traceability would be far more workable," he said.
The industry has sought consultative processes to distinguish between deceptive synthetic media and legitimate creative AI applications. Legal experts pointed to global regulators who take more calibrated approaches, with the EU and the US allowing flexibility rather than rigid display thresholds. Ranjana Adhikari, partner at Indus Law, said the current draft's definition of 'synthetically generated information' is overly broad. "AI-assisted post production, voice enhancement and digital restoration are standard practices now," she said. "Heavy-handed labelling could confuse audiences and undermine creative authenticity." Tanu Banerjee, partner at law firm Khaitan & Co, added that digital ads and short videos, which depend on creative freedom and precise design, would suffer under a fixed rule. "A tag covering 10% of the screen or audio could break the aesthetic flow. Retaining large labels only for high-risk, deceptive use and metadata for everyday creativity could be a balanced route," she said. Brand consultant Reva Malhotra observed that most creative teams use AI responsibly -- to enhance productivity, test styles, or refine ideas. "The grey area around partly AI-assisted content may confuse teams about whether labelling is needed," she said. "A large 'AI-generated' tag could unfairly suggest that the whole work lacks originality." The intent of transparency is valid, but the lack of nuance could discourage experimentation and innovation, she said. Experts also warned of perception and innovation risks. Rohit Pandharkar, technology consulting partner at EY India, said audiences might dismiss labelled works as mass-produced.
Sagar Vishnoi, director at Future Shift Labs, which provides expert guidance to organisations on AI strategy and implementation, said the 10% labelling rule, though aimed at transparency, could raise compliance costs by forcing creators and platforms to rework production workflows, build verification systems and continuously audit content. Ashima Obhan of law firm Obhan & Associates urged India to align with global frameworks. "The EU's AI Act and the US FTC's approach allow flexible formats rather than fixed thresholds," she said. A proportionate framework, she added, would preserve commercial viability, uphold creative freedom under Article 19(1)(a) and still tackle deepfake misuse. Industry leaders now hope the final rules will emerge from broad consultations to ensure transparency without throttling legitimate innovation in India's growing creative economy.

[5]

Global tech reviewing AI-labelling mandate as India curbs deepfakes

Major tech players like Microsoft, OpenAI, and Google are scrutinizing India's recent policy that requires clear labeling of AI-generated posts on social media platforms. This regulation aims to address the escalating concern over deepfakes by ensuring that synthetic content is distinguished from authentic material.

Global tech giants like Microsoft, OpenAI and Google are studying the government's latest mandate to label all artificial intelligence (AI) content on social media, sources said. All creators of AI-generated content will be covered if their computer resources produce such content shared in the public domain, officials told ET. "Any software, database or computer resource that is used to generate synthetic content would be covered under the mandate, to make the effort of labelling AI foolproof. The rules are not only for social media platforms," a ministry of electronics and information technology (MeitY) official said. All technology firms would have to embed the disclaimer in their content, whether they are a social media intermediary or provide software or services that leverage AI. This opens a long list of popular AI-based software, apps and services to scrutiny, including OpenAI's ChatGPT, Dall-E and Sora; Google's Gemini, NotebookLM and Google Cloud; Microsoft's Copilot, Office365 and Azure; and Meta AI. Firms said they are already part of international efforts to trace the origin of digital content and have implemented in-house methods for content provenance, sources added. To curb the rapid rise of AI-based deepfakes, MeitY last week published draft amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, which mandate a declaration from all social media users when posting AI-generated or modified content and direct platforms to adopt technology to verify these declarations.
While the initial focus has been on significant social media intermediaries (SSMIs), that is, those with 50 lakh (5 million) or more registered users in India, all technology intermediaries must be part of the efforts, officials said. "Many of the technology intermediaries today are both software providers as well as social media intermediaries, as we understand. This is because their software and services are often provided in partnership with SSMIs that allow content created through these to be posted on public platforms," the official stressed.

Global efforts ongoing

Google, OpenAI and Microsoft did not respond to ET's requests for comment. However, industry sources pointed out that these and other tech giants are already part of global efforts to validate digital data. Google, Microsoft, OpenAI, Meta, Amazon and Intel, among others, are steering committee members of the Coalition for Content Provenance and Authenticity (C2PA), which provides an open technical standard for establishing the origin and edits of digital content, known as Content Credentials. OpenAI has developed a text watermarking method, while also researching alternatives such as using metadata for text provenance. As of August 2024, the company's AI image generation platform Dall-E has a built-in system to show whether and how an image was edited. Meanwhile, Microsoft's Project Origin aims to create a system to certify the source and integrity of digital content through collaborations with content producers, distributors, cloud providers, and application developers. "We are studying the implications of the latest amendments. We will be engaging with the government on this, just as we have engaged with authorities in other jurisdictions such as the EU and US," an official at a global technology company said. While stakeholders have been invited to submit feedback on the latest AI mandate by November 6, there is no clear timeline for when it will kick in, officials said.
Experts said industry and government must now sit down and chalk out a way forward. "The proposed rules will serve as the foundation for responsible AI adoption; these measures will give businesses the confidence to innovate and scale AI responsibly. The next step should be to establish clear implementation standards and collaborative frameworks between government and industry, to ensure the rules are practical, scalable, and supportive of India's AI leadership ambitions," said Mahesh Makhija, partner and technology consulting leader, EY India.

[6]

India vs China: Regulating AI-Generated Media Content

According to the new draft amendment to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 (IT Rules, 2021), AI-generated content, including deepfake material, on social media and the internet will be regulated by the Ministry of Electronics and Information Technology (MeitY). The draft defines synthetic content as "information which is artificially or algorithmically created, generated, modified, or altered using a computer resource, in a manner that such information reasonably appears to be authentic or true." MeitY is seeking public comments on the draft amendment, which can be sent by email until November 6.

India would not be the first country to mandate labelling of AI-generated content, including deepfakes. China is among the first countries to have formulated legislation around the labelling of "Deep Synthesis Internet Information." While the original Chinese text can be accessed on the government's official website, an English translation is available on China Law Translate. The Cyberspace Administration of China, the Ministry of Industry and Information Technology and the Ministry of Public Security govern deepfake content through the act titled "Provisions on the Administration of Deep Synthesis Internet Information Services." It was created to "carry forward the Core Socialist Values, preserve national security and societal public interests, and protect the lawful rights and interests of citizens, legal persons, and other organisations."

China defines deep synthesis technology as the use of advanced tools, such as deep learning and virtual reality, that employ AI-based algorithms to create or alter text, images, audio, video, virtual scenes, or other digital information. According to the law, deep synthetic content includes:

The law defines deep synthesis service providers as organisations or individuals offering AI-based technologies or related services.
Deep synthesis service providers must clearly label or watermark AI-generated or modified content in a way that identifies it as synthetic without affecting how users view or use it. They should also label any AI-generated or AI-edited content that could mislead or confuse the public. Platforms must additionally allow users to self-label AI-generated content. To curb the spread of fake news, the law prohibits generating false information using deepfake technologies. It also covers services that mimic real people's text, voices, or images; create synthetic or altered media of individuals; generate realistic virtual scenes; or significantly alter information content. Providers are required to maintain detailed logs, including user activity and system operations data, and store them in compliance with China's existing laws.

India's draft amendment takes a different approach from China's law, which targets both AI-generated false information and deepfakes. India's proposal requires synthetic content to be labelled and identified through metadata. It also mandates visible or audible markers covering at least 10% of the visual area or appearing at the start of the audio to indicate when content is synthetic. The draft further directs major social media platforms to use technical tools to verify whether uploaded content is AI-generated and label it accordingly, creating a transparency-focused accountability system similar in intent to China's framework. It also requires platforms to verify users' self-declarations regarding AI usage. Key differences between China's legislation and India's draft rules include:

According to Article 3, the State Internet Information Department is responsible for overall planning and coordination of the nation's governance of deep synthesis services, along with related oversight and management. The Departments for Telecommunications and Public Security are responsible for specific oversight and management efforts.
The law mandates that deep synthesis services must not be used to endanger "national security and interests, harm the image of the nation, harm the societal public interest, disturb economic or social order, or harm the lawful rights and interests of others." Similarly, deepfake content must not be used to produce or distribute fake news. Unlike India's draft, China's law requires platforms to verify users' real identities and forbids providing services to unverified users. Verification systems may use mobile phone numbers, ID card numbers, uniform social credit codes, or the state's public online identity verification system.

Platforms must also review algorithms that produce synthetic content and implement strict security measures to protect users' personal data. Under this law, they must notify users if someone attempts to synthetically edit their biometric data, including facial or voice information. Additionally, platforms must conduct security assessments for tools that allow users to generate non-biometric information involving national security, the nation's image, or the public interest.

Deep synthesis service providers that can influence public opinion or mobilise large groups must register their platforms with authorities. This registration allows the government to track companies operating powerful AI tools. After registration, providers and their technical partners must display their official registration numbers and include a public link to the filing information on their websites, apps, or other platforms to ensure transparency. Providers and their partners must update or cancel their registration if they modify or shut down their services. Under the law, deep synthesis service providers are responsible for information security and must maintain systems for user verification, content and data oversight, fraud prevention, emergency response, and other safety controls.
They must publicly release management rules and platform policies, update service agreements, and carry out management duties in line with the law. They are also required to clearly post the security obligations of technical providers and users of deep synthesis services in a visible and accessible manner. Deep synthesis service providers must not only label content but also review user inputs and AI-generated outputs to support regulatory inspections, detect illegal or harmful content, and clearly mark all synthetic materials. Providers must monitor both user-uploaded material (including prompts) and AI-generated content produced on their platforms. They must also create portals for users to file appeals and complaints. In addition, app stores must enforce security measures through early app reviews, regular oversight, and emergency responses. Stores must verify the security assessments and registrations of deep synthesis service providers. Government regulators responsible for internet, telecommunications, and public security can determine whether a deep synthesis service provider poses serious information security risks, such as spreading harmful or misleading content or creating vulnerabilities. They can then order the provider to take corrective action. Corrective measures may include suspending content updates, pausing new user registrations, or temporarily halting certain services. If found guilty, companies and their technical partners must comply with government orders, implement the required corrective measures, and resolve the identified risks.

[7]

Click, label, share: How India wants to tame AI-generated content on social media - The Economic Times

Subimal Bhattacharjee, commentator on digital policy issues

On Oct 22, GoI proposed sweeping amendments to IT Rules 2021, focusing on synthetically generated information (SGI). The draft amendments mandate that AI-generated content on social media platforms be labelled or embedded with a permanent, unique identifier. Platforms will also be required to obtain a user declaration confirming whether the uploaded content qualifies as SGI.

Can mandatory labelling requirements keep pace with synthetic content? MeitY's answer, defining AI-generated information and mandating prominent labels covering 10% of visual content or audio duration, represents a clear choice: transparency over prohibition. The argument is sound. Yet transparency hinges on a key assumption: that users will consume labelled content rationally and make informed judgements. Anyone who has seen misinformation run rampant on WhatsApp knows how optimistic that assumption is. The real test is whether people will notice these labels or whether the labels will fade into digital wallpaper, present but ignored. From cigarette warnings to social media fact checks, labels tend to lose impact over time.

The draft's boldest provision, Rule 4(1A), obliges significant social media intermediaries to collect user declarations on SGI and verify them through 'reasonable and appropriate technical measures'. This is promising, but it can create a compliance nightmare. The technical hurdle is steep: platforms must detect AI-generated content across formats, languages and manipulation types, yet current detection tools are unreliable. The draft amendments limit verification to public content, sparing private messages. This means platforms must distinguish public from private content at upload, yet privately shared material can go public via screenshots or forwards. Mandating platforms to verify user declarations puts them in a bind.
Under-enforcement risks losing safe-harbour protections and incurring liability, while over-enforcement can censor legitimate content. The rule states platforms will be deemed to have failed due diligence if they 'knowingly permitted, promoted, or failed to act upon' unlabelled synthetic content. Yet the word 'knowingly', in algorithmic content moderation contexts, is murky at best. Rule 3(3) mandates that any intermediary offering resources enabling SGI creation must ensure permanent, non-removable labels. This applies not just to deepfake apps but potentially to any photo-editing tool, video production software or creative AI platform.

This has real implications for India's emerging AI industry. A startup building an AI video-editing tool would now need to design its product around mandatory labelling that persists through every export, share and modification. For consumer apps, this friction could make products globally uncompetitive. For B2B tools, it raises questions about commercial confidentiality: must every AI-enhanced corporate video carry a permanent SGI label? These rules fail to distinguish between malicious deepfakes and benign creative uses. Collapsing these distinctions treats all synthetic content as suspicious. Moreover, the rules reflect a broader regulatory philosophy: when in doubt, maximise transparency and let users decide. But users aren't equipped for this.

Perhaps the amendments' most glaring weakness is enforcement. How will MeitY assess whether platforms use 'reasonable and appropriate' technical measures? Who audits whether labels meet the 10% visibility threshold? And what happens when automated verification fails? The takedown amendments, mandating joint secretary (JS)-level approval and monthly secretary reviews, show that the GoI values senior oversight for rights-sensitive decisions. Yet SGI verification is left to platform self-regulation, with only the threat of losing safe-harbour protection. The asymmetry is striking.
International experience offers cautionary tales. The EU's Digital Services Act has sparked debates about over-compliance and automated censorship. China's watermarking mandates face implementation difficulties and selective enforcement concerns. India's rules risk similar pitfalls without clear guidance on acceptable technical standards and margins for error. Here's what needs to be done:

Effective regulation needs more than good intentions and legal definitions. It demands technological feasibility, industry cooperation, international coordination, and realistic expectations about what labels can achieve in an attention economy built for engagement, not accuracy. Success isn't measured by compliance or label volume. The true metric is whether public trust in digital information grows, a goal that regulation alone cannot achieve.

The writer is a commentator on digital policy issues

[8]

Will India's Synthetic Info Label Rule Undermine VR Experiences?

Earlier this month, the Ministry of Electronics and Information Technology (MeitY) released a draft amendment to the IT Rules 2021 requiring disclosures of synthetically generated information. The amendment defines synthetically generated information as "information that is artificially or algorithmically created, generated, modified, or altered using a computer resource, in a manner that such information reasonably appears to be authentic or true." Companies that allow people to generate synthetic information must make sure that the information has prominent labels or permanent, unique metadata or identifiers. The label/identifier must cover at least 10% of the visual display, or in the case of audio content, must be present during at least 10% of the duration. Companies must make sure that they do not allow users to remove the label/identifier. As MediaNama has previously pointed out, this broad definition leaves room for a wide range of information to require labels. One of the key areas of concern emerging from this labelling requirement is the augmented reality/virtual reality (AR/VR) domain, wherein effectively every element is 'algorithmically generated'. In such a situation, to what extent can the company that provides the software tools for creating AR/VR implement synthetically generated information labels without jeopardising the immersive quality of the virtual environment? Picture this: You are navigating a horror game in VR, fully immersed in the realism of the spooky atmosphere. Suddenly, instead of a ghost popping up in the corner of your eye and jumpscaring you, you are faced with this label, "this information is synthetically generated," covering 10% of your display. No matter which direction you turn, the label follows you around, keeping 10% of your vision occupied. This is what VR experiences may look like if the government implements the draft amendment in its current form.
"I think the intention of the rules appears to have been to curb what we typically call 'deepfakes' or other malicious end-user applications. But I think what happened is that I think the rules are not providing exceptions or laxity around creative uses for media, entertainment, industry, et cetera. For example, suppose I am a professional studio, or I am a VFX, or I am a film studio, even if I use AI tools. In that case, I will have that 10% surface area covered," lawyer and tech policy advisor Dhruv Garg, who is a Partner at the Indian Governance and Policy Project (IGAP), told MediaNama. A straightforward reading of the definition of synthetically generated information suggests that it only includes those pieces of AI/algorithmically generated content that "reasonably appear to be authentic or true." In practice, this may mean that a game like Stardust Odyssey may not need disclosures simply because it looks rendered/animated. Technology and gaming lawyer Jay Sayta told MediaNama that some games today allow people to recreate avatars of real people or real-life environments, and for such cases, disclosures may be necessary. However, "if you are using AI but the end result appears to be animated, it may not need a disclosure, but that would probably depend upon the facts of each situation," he explained. Similarly, Garg also pointed to this specific aspect of the synthetically generated information definition. He emphasised that in most games today, one can reasonably tell the gaming world is a rendered environment. "Most games are not realistic or hyperrealistic. You can figure out that it's animated, it's art, rendered art, et cetera. I don't know whether, then, in that case, the definition is applicable. Same goes for animation. For example, I would say that if there is an animated video, I don't know whether it is 'authentic and true' in that sense or appears to be," he explained.
However, he added that the definition does not use the term realistic, which leaves room for some amount of confusion. "I am reading the term realistic in the definition because some other jurisdictions are talking about realism. But if there's a clarity about that, oh, when we say 'true' and 'authentic', it means close to the real person, event or place. I think that will solve even that dilemma," Garg suggested. Garg explained that this lack of exceptions was a concern beyond AR/VR. "A lot of content today is generated in the health sector, in the engineering sector, for prototyping or looking at scenarios. Even in that, technically, if I am using these tools [tools to generate synthetic content], there will be a label covering 10% of the visual display," he pointed out. He added that all enterprise-level content generation use cases where there is no clear retail consumption may still need to carry labels under the rules. This would imply that service providers that allow for computer-aided design, such as AutoCAD, SolidWorks, and Autodesk Inventor, which enable people to design products/buildings, could also need to carry disclosures. Picture the 3D model of your dream house with the disclosure covering 10% of its surface if there is no exception for enterprise-level use cases. While the current definition may not include a carve-out for such enterprise-level use cases, Garg was positive that future iterations of the rules could include them. "I'm assuming that the government will definitely take a look at it, and obviously, there will be an exception for it," he added.

[9]

Govt not asking creators to restrict AI content, only to label it: IT secretary Krishnan - The Economic Times

The government is not seeking to control or restrict online content but rather to ensure transparency by requiring creators to label AI-generated content so that audiences can make informed choices, Electronics and IT Secretary S Krishnan said on Thursday. The government proposed changes to IT rules on Wednesday, mandating the clear labelling of AI-generated content and increasing the accountability of large platforms, such as Facebook and YouTube, for verifying and flagging synthetic information to curb user harm from deepfakes and misinformation. "All that we are asking for is to label the content...You must put in a label which indicates whether a particular piece of content has been generated synthetically or not. We are not saying don't put it up, or don't do this and that. Whatever you're creating, it's fine. You just say it is synthetically generated. So that once it says it's synthetically generated, then people can make up their minds as to whether it is good, bad, or whatever," Krishnan said. India's approach to artificial intelligence (AI) adoption prioritises innovation first, with regulation following only where necessary, he said, adding that the responsibility for implementing the new labelling requirement will rest jointly on users, AI service providers, and social media platforms. Providers of computer resources or software used to create synthetic content must enable the creation of labels that are fairly prominent and cannot be deleted, Krishnan noted. Enforcement action would apply only to unlawful content, "and that applies to any content, not just AI content". The proposed amendments to IT rules provide a clear legal basis for labelling, traceability, and accountability related to synthetically-generated information. 
Apart from clearly defining synthetically generated information, the draft amendment, on which comments from stakeholders have been sought by November 6, 2025, mandates labelling, visibility, and metadata embedding for synthetically generated or modified information to distinguish such content from authentic media. The stricter rules would increase the accountability of significant social media intermediaries (those with 50 lakh, i.e. 5 million, or more registered users) in verifying and flagging synthetic information through reasonable and appropriate technical measures.

[10]

Top Five Concerns with MeitY Draft Synthetic Information Rules

In a bid to obtain a clear legal basis for synthetic information regulation, the Ministry of Electronics and Information Technology (MeitY) released a draft amendment to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 (IT Rules, 2021). The draft amendment requires AI companies to ensure that they label AI-generated content that users generate through their service and also requires similar labelling/disclosures on social media. We have summarised the draft amendment in a separate post. In this post, we discuss the key concerns we have spotted with the rules so far. The draft amendment defines synthetically generated information as "information which is artificially or algorithmically created, generated, modified or altered using a computer resource, in a manner that such information reasonably appears to be authentic or true." It also sets out specific labelling requirements for intermediaries that allow people to generate such information. Given that the definition covers all artificially or algorithmically generated information, one has to wonder whether everything from the Snapchat puppy-dog-ears filter to AR/VR environments and video games would have to include these labels. Such labels could end up taking people out of immersive experiences. The requirements seem equally excessive when we apply them to beauty/colour correction filters. These filters all fit within the scope of artificially/algorithmically generated modifications, but does that immediately make them inauthentic or worth disclosure? Earlier this year, a report by the Consumer Unity and Trust Society (CUTS-International) raised similar concerns, stating that not all AI-generated/altered content is deceptive.
"Labelling content as synthetic may unfairly suggest deception in cases such as clear parodies or artistic works, while labelling something as 'authentic' might wrongly imply trustworthiness even when manipulative framing is used to distort its meaning," it argued. The definition of synthetically generated information under the draft amendment hinges on whether the information 'reasonably appears to be authentic or true' while being artificial/algorithmically created. This leads one to question how the government will operationalise this 'reasonableness' across various formats. Further, if 'synthetically generated information' can include material that merely appears real, are we risking a culture where perceived authenticity becomes more important than verified truth? The draft amendment requires platforms to remind users not to post synthetic information that impersonates another person, and the government can even issue directions to remove such information under Rule 3(1)(d). This leads one to wonder how intermediaries will distinguish harmless transformations (satire, parody, reenactments) from manipulative impersonation or other harmful synthetically generated content. If a user posts a video depicting the likeness of a politician for parody purposes, the platform does not have any guidance on how to differentiate between that and genuine impersonation. There are also no clear exemptions for satire and parody. The result is a system where legitimate synthetic content faces an uncertain fate. Platforms, operating under the threat of losing their safe harbor protections and facing government takedown orders, will likely adopt the most conservative interpretation possible. 
The rules state that intermediaries that allow people to generate synthetic information must ensure that the generated content visibly displays a metadata/identifier (or includes audio disclaimers in the case of synthetic audio content) in a prominent manner on or within the synthetically generated information. Furthermore, the metadata/identifier must cover at least 10% of the visual display, or in the case of audio content, must be present during at least 10% of the duration. The government has provided no clear explanation of how it arrived at the 10% figure. This raises several critical questions about the practical application and effectiveness of such a requirement. Did the government determine this percentage through user testing to assess what level of prominence ensures genuine awareness without destroying user experience? Is there empirical evidence suggesting that 10% coverage is the threshold at which viewers reliably notice and process synthetic content warnings? Or did the government simply choose an arbitrary number without rigorous justification? Besides, we also need to consider what these 10% visual display-occupying labels would look like in practice. Picture this: you removed the background of a photograph to make it the profile picture of your LinkedIn account. Under these rules, 10% of that very small profile picture would need to prominently display the label. The result would be a tiny profile image with a disproportionately large "AI/synthetically generated" watermark, detracting from the authenticity of your LinkedIn profile, even though all you did was edit the background. The uniform application of 10% across all contexts ignores the reality that different types of content require different approaches. A short meme could have its key text or punchline obscured by a 10% label, while a music track with 10% of its duration devoted to spoken disclaimers becomes nearly unlistenable.
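To make the 10% figure concrete, here is a minimal Python sketch of what the threshold implies for images and audio. It assumes that "visual display" means the pixel area of the image or video frame and that audio compliance is measured in milliseconds of disclaimer; the draft does not specify either reading, and all function names here are hypothetical.

```python
# Hypothetical helpers illustrating the draft's 10% coverage threshold.
# Assumptions (not from the rules): "visual display" = pixel area of the
# frame, and audio disclaimers are measured in milliseconds.

THRESHOLD_PERCENT = 10  # "at least 10%" in the draft amendment


def min_label_area_px(width_px: int, height_px: int, percent: int = THRESHOLD_PERCENT) -> int:
    """Smallest whole-pixel label area covering at least `percent` of the frame."""
    area = width_px * height_px
    # Integer ceiling division avoids floating-point rounding surprises.
    return (area * percent + 99) // 100


def full_width_banner_height(width_px: int, height_px: int, percent: int = THRESHOLD_PERCENT) -> int:
    """Height of a banner spanning the full frame width that meets the threshold."""
    needed = min_label_area_px(width_px, height_px, percent)
    return (needed + width_px - 1) // width_px


def covers_threshold(label_w: int, label_h: int, frame_w: int, frame_h: int,
                     percent: int = THRESHOLD_PERCENT) -> bool:
    """True if a rectangular label covers at least `percent` of the frame area."""
    return label_w * label_h * 100 >= percent * frame_w * frame_h


def min_disclaimer_ms(duration_ms: int, percent: int = THRESHOLD_PERCENT) -> int:
    """Minimum disclaimer length in ms for an audio clip of `duration_ms`."""
    return (duration_ms * percent + 99) // 100


if __name__ == "__main__":
    # A hypothetical 400 x 400 px profile photo with an edited background.
    print(full_width_banner_height(400, 400))    # 40
    # A full-HD (1920 x 1080) video frame.
    print(full_width_banner_height(1920, 1080))  # 108
    # A three-minute music track, in seconds.
    print(min_disclaimer_ms(180_000) / 1000)     # 18.0
```

Under these assumptions, a background-edited 400 x 400 pixel photo would need a label band 40 pixels tall across its full width, and a three-minute track would need 18 seconds of spoken disclaimer. This is the disproportion the post describes. A label placed differently (a corner badge, a translucent overlay) would need the same area but could be shaped differently.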
Perhaps the question that deserves the most attention is whether platforms that allow users to generate synthetic information are intermediaries to begin with. Under the IT Act, 2000, an intermediary is "any person who, on behalf of another person, receives, stores or transmits that record or provides any service with respect to that record". Further, under the definition of originator (the person who generates a message), the IT Act clearly says that an intermediary cannot be the originator. Intermediaries enjoy safe harbor protections precisely because of this middleman role: they host or carry content to and from people rather than being actively involved in creating it. Unlike the intermediaries clearly spelled out in the IT Act, such as telecom companies, web hosting services, and search engines, services like OpenAI's ChatGPT, Meta AI or Google's Gemini generate outputs in response to user prompts. They are creating content rather than merely hosting or transmitting user-generated content. If the definition of 'intermediary' does include AI models, then, just like other intermediaries, these players would also have safe harbor protections, meaning they would not be liable for what a user generates using their services, provided they comply with the amended rules. If AI models do not fall within the scope of intermediaries, then the applicability of the entire amendment comes into question.

[11]

Centre moves to regulate deepfakes, AI media; MeitY proposes amendments to IT rules - The Economic Times

MeitY has proposed amendments to the IT rules to regulate AI-generated content, including deepfakes. Platforms must label synthetic media clearly and verify user declarations. The draft aims to prevent misuse, protect privacy, and boost transparency. Feedback is invited by November 6 as part of ongoing efforts to tackle online misinformation.

The Ministry of Electronics and Information Technology (MeitY) on Wednesday published draft amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, which are aimed at regulating synthetically generated information, commonly known as deepfakes and AI-generated content. Stakeholders have been invited to submit feedback on the proposed amendments by November 6. The move is part of the government's efforts to address growing concerns about the misuse of technology. The draft amendments introduce a clear definition of "synthetically generated information" as content artificially or algorithmically created, modified, or altered using computer resources in a manner that appears authentic. This definition is incorporated into existing provisions dealing with unlawful information, making synthetic content liable under the same rules that apply to other harmful or unlawful materials. A key feature of the proposed rules is the requirement that intermediaries enabling the creation or modification of synthetic content must prominently label such material. The label or unique metadata should cover at least 10% of the visual display area or the initial 10% of an audio clip's duration and remain permanently embedded in the content to ensure instant identification. Intermediaries are prohibited from allowing the removal or alteration of these labels. For significant social media intermediaries (SSMIs) -- platforms with a large user base -- the rules mandate additional due diligence.
These platforms must obtain user declarations on whether the uploaded content is synthetic, deploy automated tools to verify these declarations, and ensure synthetic content is clearly marked with appropriate labels. Failure to exercise these due diligence obligations will be considered non-compliance, the draft amendments said. The amendments also clarify legal protections for intermediaries who remove or disable access to synthetic content that is harmful or unlawful, reinforcing their safe harbour under Section 79(2) of the Information Technology Act, 2000. The government said that deepfakes and synthetic media pose a risk in spreading misinformation, impersonating individuals, and threatening privacy or national integrity. The new rules aim to enhance transparency, accountability, and user safety on internet platforms, balancing innovation in AI-driven technologies with the need for oversight. The ministry has made the draft amendments and explanatory notes available on its website and encouraged public and stakeholder consultation to refine these rules further. This initiative builds on earlier IT Rules amendments from 2022 and 2023. Speaking to ET, Dhruv Garg, founding partner, Indian Governance and Policy Project (IGAP), a New Delhi-based policy think tank, said, "The proposed amendments to the IT Rules are defining synthetically generated information and are mandating labelling norms for AI-generated content. The government's intent seems [to be] to ensure transparency and trust in online information." These measures may be an answer to the recent issues around political misinformation, celebrity deepfakes and misleading advertisements using AI tools, Garg said. The proposed obligations for social media intermediaries to identify and label synthetic content are essential, but their success will depend on clear implementation guidance and technological feasibility, particularly for smaller platforms, he added.
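The draft lists the SSMI obligations (collect a declaration, verify it with automated tools, label synthetic content) but leaves the verification method open. The Python sketch below shows one possible decision flow. It assumes a hypothetical detector that returns a score between 0 and 1 and an arbitrary 0.8 cut-off; neither comes from the rules. Only the 50 lakh (5 million) registered-user threshold for SSMIs is taken from the draft as reported.

```python
from enum import Enum

# From the draft as reported: SSMIs have 50 lakh (5 million) or more registered users.
SSMI_USER_THRESHOLD = 5_000_000


class Decision(Enum):
    NO_LABEL = "no_label"
    LABEL = "label"
    REVIEW = "review"  # hypothetical outcome: declaration and detector disagree


def is_ssmi(registered_users: int) -> bool:
    """Whether a platform crosses the SSMI registered-user threshold."""
    return registered_users >= SSMI_USER_THRESHOLD


def label_decision(declared_synthetic: bool, detector_score: float,
                   threshold: float = 0.8) -> Decision:
    """Hypothetical flow: trust a 'synthetic' declaration, check 'authentic' ones.

    detector_score is an assumed 0-1 output of some automated classifier;
    the 0.8 threshold is arbitrary and not specified by the draft.
    """
    if not 0.0 <= detector_score <= 1.0:
        raise ValueError("detector_score must be between 0 and 1")
    if declared_synthetic:
        # The user's own declaration is enough to apply the label.
        return Decision.LABEL
    if detector_score >= threshold:
        # Declared authentic but flagged by the detector: route to review.
        return Decision.REVIEW
    return Decision.NO_LABEL


if __name__ == "__main__":
    print(is_ssmi(4_999_999))                 # False
    print(label_decision(True, 0.05).value)   # label
    print(label_decision(False, 0.93).value)  # review
    print(label_decision(False, 0.20).value)  # no_label
```

Sending mismatches to review rather than labelling them automatically is one way to limit false positives, given the over-enforcement concerns commentators have raised. A platform could equally choose to auto-label flagged content, and the draft does not settle the question.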

[12]

India proposes strict IT rules for labelling deepfakes amid AI misuse

BENGALURU (Reuters) - India's government on Wednesday proposed new legal obligations for artificial intelligence and social media firms to tackle a growing proliferation of deepfakes online, by mandating them to label such content as AI-generated. The potential for misuse of generative AI tools "to cause user harm, spread misinformation, manipulate elections, or impersonate individuals has grown significantly," India's IT ministry said in a press release, explaining the rationale behind the move. The proposed rules mandate social media companies to require their users to declare if they are uploading deepfake content. With nearly 1 billion internet users, the stakes are high in a sprawling country of many ethnic and religious communities where fake news risks stirring up deadly strife and AI deepfake videos have alarmed officials during elections. (Reporting by Munsif Vengattil and Aditya Kalra; Editing by Andrew Heavens and Ed Osmond)


India's Ministry of Electronics and Information Technology has proposed new rules to regulate AI-generated content, including deepfakes, aiming to curb misinformation and protect users. The draft amendments to IT Rules 2021 introduce labeling requirements and increased accountability for social media platforms.

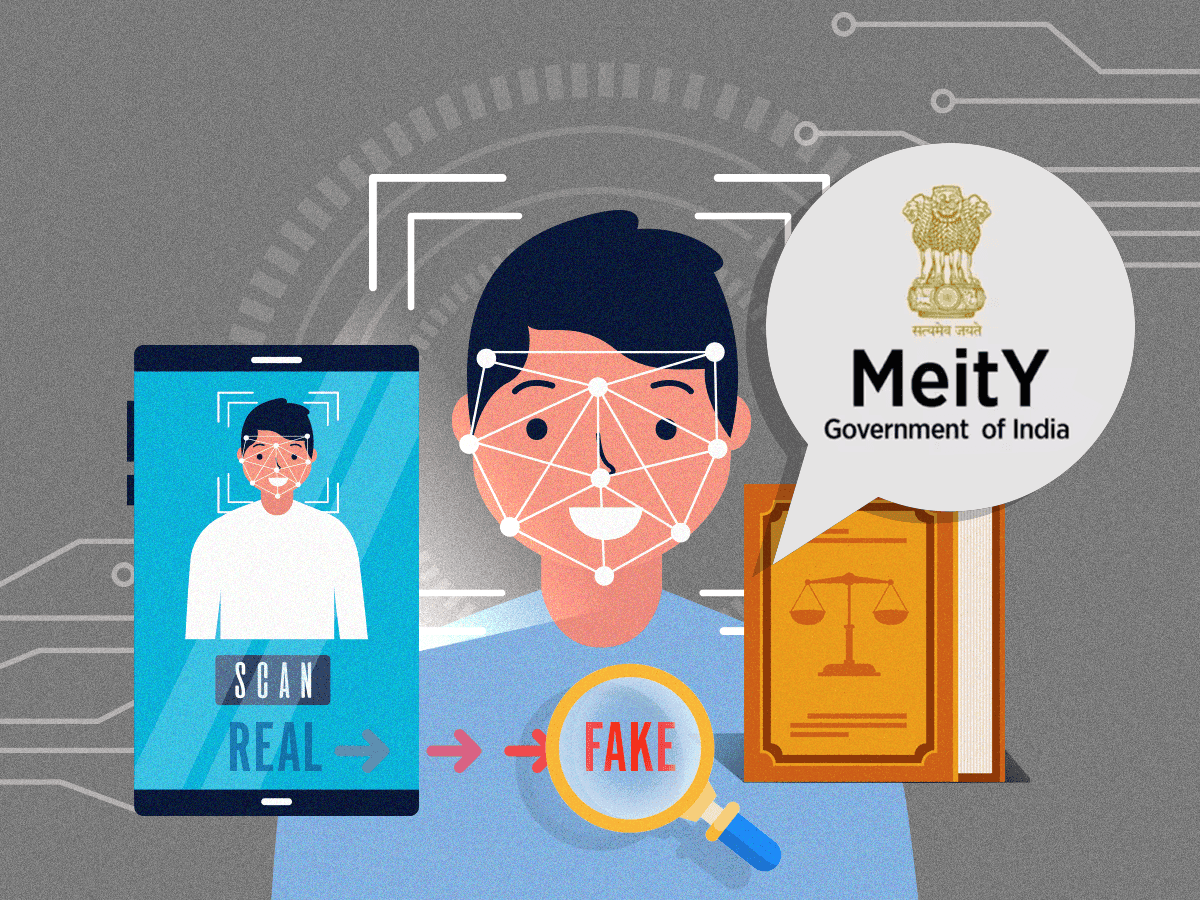

India's Proactive Stance on AI Regulation

India has taken a significant step towards regulating artificial intelligence (AI) by proposing sweeping new amendments to the Information Technology Rules of 2021. The Ministry of Electronics and Information Technology (MeitY) announced these changes in response to the growing concerns over the misuse of AI technologies, particularly in creating deepfakes and spreading misinformation [1][2].

Source: ET

Defining and Labeling Synthetic Content

The draft amendments introduce a formal definition of 'synthetically generated information', described as content that is artificially or algorithmically created or modified using computer resources in a manner that reasonably appears to be authentic or true [2]. To ensure transparency, the proposed rules require intermediaries that enable the creation of such content to label it clearly, with the label covering at least 10% of the visual display area or the initial 10% of an audio clip's duration [1][2].

Source: ET

Enhanced Responsibilities for Social Media Platforms

Under the new regulations, significant social media intermediaries will face increased accountability. They will be required to:

- Implement measures to verify user declarations about synthetic content

- Display appropriate labels for confirmed AI-generated information

- Deploy technical measures to detect and label synthetic content [2][3]

The government aims to establish a clear legal basis for labeling, traceability, and accountability of AI-generated content without stifling technological advancement [2].

Global Tech Giants and Industry Response

Major tech companies like Microsoft, OpenAI, and Google are closely examining the proposed regulations [5]. These firms, along with others, are already part of international efforts to trace the origin of digital content and have implemented in-house methods for content provenance [5].

However, the creative industry has raised concerns about the blanket 10% labeling rule. They argue that this requirement could disrupt legitimate AI use in films, animations, and visual effects. Industry leaders are pushing for exemptions and a more nuanced approach that distinguishes between deceptive synthetic media and legitimate creative AI applications [4].

Source: ET

Balancing Innovation and Regulation

The proposed rules aim to strike a balance between protecting users and fostering innovation. MeitY has invited stakeholders and the public to submit comments on the draft rules by November 6, signaling an openness to feedback and potential refinements [2].

As India positions itself as one of the first countries to codify rules specifically addressing synthetic and AI-generated information, the global tech community and policymakers will be watching closely. The final implementation of these regulations could set a precedent for AI governance in other jurisdictions [2][5].

Summarized by Navi
