Salesforce CEO Marc Benioff demands AI regulation reform at Davos, challenges Section 230 protections

3 Sources

[1]

Billionaire Marc Benioff challenges the AI sector: 'What's more important to us, growth or our kids?' | Fortune

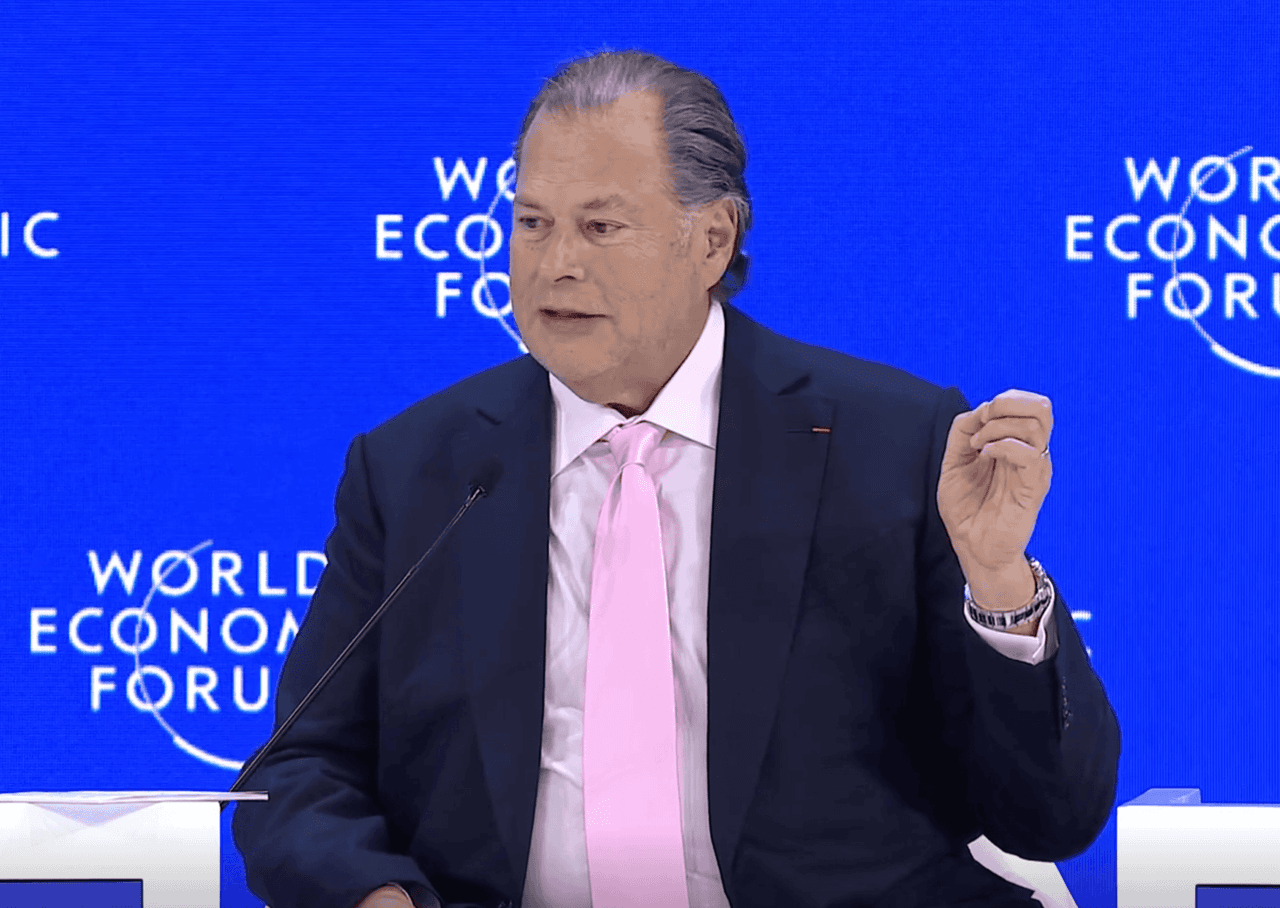

Imagine it is 1996. You log on to your desktop computer (which took several minutes to start up), listening to the rhythmic screech and hiss of the modem connecting you to the World Wide Web. You navigate to a clunky message board -- like AOL or Prodigy -- to discuss your favorite hobbies, from Beanie Babies to the newest mixtapes. At the time, a little-known law called Section 230 of the Communications Decency Act had just been passed. The law -- then just a 26-word document -- created the modern internet. It was intended to protect "good samaritans" who moderate websites from regulation, placing the responsibility for content on individual users rather than the host company. Today, the law remains largely the same despite evolutionary leaps in internet technology and pushback from critics, now among them Salesforce CEO Marc Benioff. In a conversation at the World Economic Forum in Davos, Switzerland, on Tuesday, titled "Where Can New Growth Come From?" Benioff railed against Section 230, saying the law prevents tech giants from being held accountable for the dangers AI and social media pose. "Things like Section 230 in the United States need to be reshaped because these tech companies will not be held responsible for the damage that they are basically doing to our families," Benioff said in the panel conversation, which also included Axa CEO Thomas Buberl, Alphabet President Ruth Porat, Emirati government official Khaldoon Khalifa Al Mubarak, and Bloomberg journalist Francine Lacqua. As a growing number of children in the U.S. log onto AI and social media platforms, Benioff said the legislation threatens the safety of kids and families. The billionaire asked, "What's more important to us, growth or our kids? What's more important to us, growth or our families? Or, what's more important, growth or the fundamental values of our society?"
Tech companies have invoked Section 230 as a legal defense when dealing with issues of user harm, including in the 2019 case Force v. Facebook, where the court ruled the platform wasn't liable for algorithms that connected members of Hamas after the terrorist organization used the platform to encourage murder in Israel. The law could shield tech companies from liability for harm AI platforms pose, including the production of deepfakes and AI-generated sexual abuse material. Benioff has been a vocal critic of Section 230 since 2019 and has repeatedly called for the legislation to be abolished. In recent years, Section 230 has come under increasing public scrutiny as both Democrats and Republicans have grown skeptical of the legislation. In 2019, the Department of Justice under President Donald Trump pursued a broad review of Section 230. In May 2020, President Trump signed an Executive Order limiting tech platforms' immunity after Twitter added fact-checks to his tweets. And in 2023, the U.S. Supreme Court heard Gonzalez v. Google but decided it on other grounds, leaving Section 230 intact. In an interview with Fortune in December 2025, Dartmouth business school professor Scott Anthony voiced concern over the "guardrails" that were -- and weren't -- happening with AI. When cars were first invented, he pointed out, it took time for speed limits and driver's licenses to follow. Now with AI, "we've got the technology, we're figuring out the norms, but the idea of, 'Hey, let's just keep our hands off,' I think it's just really bad." The decision to exempt platforms from liability, Anthony added, "I just think that it's not been good for the world. And I think we are, unfortunately, making the mistake again with AI." For Benioff, the fight to repeal Section 230 is more than a push to regulate tech companies; it is a reallocation of priorities toward safety and away from unfettered growth. "In the era of this incredible growth, we're drunk on the growth," Benioff said. "Let's make sure that we use this moment also to remember that we're also about values as well."

[2]

Salesforce CEO Marc Benioff calls for more regulation on AI

Salesforce chief executive Marc Benioff has called for more regulation of artificial intelligence after a string of cases in which the technology was linked with people taking their own lives. "This year, you really saw something pretty horrific, which is these AI models became suicide coaches," Benioff said, speaking at the World Economic Forum in Davos on Tuesday. "Bad things were happening all over the world because social media was fully unregulated. Now you're kind of seeing that play out again with artificial intelligence." Amid a lack of clear rules governing AI companies in the U.S., individual states including California and New York have started drawing up their own bylaws. President Donald Trump, meanwhile, has sought to bar those efforts via executive order. "There must be only one rulebook if we are going to continue to lead in AI," the president said in December. He added that the U.S.'s lead in developing the technology "won't last long" if there are 50 different sets of AI rules in place. Companies like OpenAI have argued that maneuvering through swathes of differing AI rules is damaging to the sector's competitiveness. Benioff's statement comes after allegations last year by a California family that ChatGPT played a role in their son's death. The lawsuit, filed in August 2025 by Matt and Maria Raine, accuses OpenAI and its chief executive, Sam Altman, of negligence and wrongful death. Their son, Adam, died in April, after what their lawyer, Jay Edelson, called "months of encouragement from ChatGPT." [Editor's note: The national suicide and crisis lifeline is available by calling or texting 988, or visiting 988lifeline.org.] Benioff also railed against a law called Section 230 of the Communications Decency Act, which was passed in 1996 to protect web moderators from being regulated and makes individual users, rather than platforms, responsible for content. Despite vast changes in the online landscape since then, the law still applies.
Tech giants like Meta have used Section 230 as a defense when dealing with issues of user harm in court. "It's funny, tech companies, they hate regulation. They hate it except for one. They love Section 230, which basically says they're not responsible," Benioff said. "So if this large language model coaches this child into suicide, they're not responsible because of Section 230. That's probably something that needs to get reshaped, shifted, changed." He added: "What's more important to us, growth or our kids? What's more important to us, growth or our families? Or what's more important, growth or the fundamental values of our society?" "There's a lot of families that unfortunately have suffered this year and I don't think they had to," Benioff said.

[3]

Davos 2026 - growth is great, but what about morality? Salesforce CEO Marc Benioff goes off piste and lays into US AI firms' hatred of regulation

The questions were very simple, the answers apparently less so, and the gap exposed between the two blew open some of the complacency around AI that has characterised this year's World Economic Forum meeting. The questions? They were from Salesforce CEO Marc Benioff, who demanded of the Davos disciples: "What's more important to us - growth or trust? What's more important to us - growth or our kids? What's more important to us - growth or our families? What's more important - growth or the fundamental values of our society?"

These queries were aired during a panel session entitled Where can new growth come from? And it was all too clear from the reaction of the other panelists that talking up AI opportunities for powering growth was a far more comfortable topic for them than being confronted with questions of basic morality.

But someone had to say it - and it's fascinating that it took one of the tech sector's most unashamed pursuers of growth to do it. This was not some anti-capitalist taking a stand; this was the CEO of the fastest growing enterprise software company in history, a man who describes himself thus: "You have to remember, for me, I am a 'growth at all cost' CEO. Over 26 years, we've grown the largest enterprise software company in the world from zero to more than $41 billion this year, 80,000 employees, because we're all about growth. We also believe that trust is our highest value. So when it gets right down to it, we always ask the question - what's more important? Trust or growth?"

And it is a great time for growth prospects, he adds: "We're going to see some amazing growth this year. A lot of economists are saying three or four percent. I think some of the techno economists are saying it could go five or six percent. That would be absolutely amazing, especially in regards to this really low inflation that's happening right now. That's record levels of growth for the United States, and a lot of it is being driven from this incredible shift to AI. This is not just the technology of our lifetime; this is the technology of really, any lifetime, and it's an amazing technology. It's a magical technology. It can do things for us personally and for our businesses and for our economies and for our productivity, unlike anything that we've ever seen."

Darkness

So far, so on message for Davos where AI 'challenges' are being alluded to on many a panel, but very much in the context of a 'challenge as opportunity' rather than 'we have a problem here'. That's where Benioff went splendidly off script as he declared: "We have seen things, especially this year as these [Large Language Models] have matured and become more integrated in society, that are pretty dark and pretty horrible. We saw models this year coach kids into suicide."

Benioff cited watching a segment on the US news programme 60 Minutes that aired last month about a company called Character.AI: "Their model was coaching these kids to take their lives. That was something unlike I have ever seen with technology, maybe the darkest thing I've ever seen with technology."

(As of last week, Character.AI and Google - whose President Ruth Porat was also on the Davos panel with Benioff - agreed to mediate a settlement with the mother of the 14-year-old, Sewell Setzer III, who died by suicide. The two firms also agreed to settle a similar case with a Colorado family over the wrongful death of their 13-year-old daughter, Juliana Peralta. OpenAI and Meta are also facing similar lawsuits in different cases.)

Context

LLMs came in for particular fire from Benioff: "We have to remember, in regards to this technology, these Large Language Models, you can read about people who think that they're dealing with a human. They're so incredibly responsive and interactive but they're incredibly inaccurate. They hallucinate. Nobody has 100% accuracy, and they're very unwieldy."

As to how they operate: "In fact, most computer scientists don't even understand fully how they operate. They're not born. They don't have childhoods, they don't have friends. So therefore, in the computer science world, we say they don't have context, they don't have the ability to take their Large Language Model and add the context into creating true intelligence. That's why it's kind of a simulated intelligence."

Human intelligence is different, argued Benioff: "I consider myself to be a bit of a Large Language Model. I'm like an LLM. When I'm talking right now I'm trying to put together the words - this word, now that word, is it this word? I'm like a Large Language Model, but I was born in San Francisco. I grew up on the peninsula. I have a company, Salesforce. I have friends. I do things in my life. That's my context. And I have not only context, I have a relationship with my creator, I have a relationship with my higher self. These Large Language Models don't have those other two pieces."

The tech sector problem

There needs to be a balance struck between the tech's potential for driving growth and the need for us all to accept that it also brings a new era of responsibility, he added: "We have to invoke commissions and come together as teams and as companies and as organizations and say, 'We're going to make sure that this technology doesn't impact our kids in a negative way like we've seen social media do now for way too long'."

Now, in 2026, you'd think that would be a sentiment that everyone could get behind, but the reality is sadly different. Earlier this month, Elon Musk's Grok was revealed to have a 'spicy mode' with the capability of generating explicit sexual images, including of children. The response from xAI, the manufacturer? To move that functionality over to a premium paid model! You can be a pervert, but you're going to have to swipe your credit card first!

That this was a total abdication of responsibility was clear to most from the get go, although not to Musk, who ranted on X about, for example, the UK Government's threat to take regulatory action, with the world's richest man citing supposed freedom of speech defences and screaming 'fascism' at a legitimately elected national government. (xAI says it has now placed restrictions on the tech, although this is disputed by some commentators.)

That didn't stop Musk taking the moral high ground this week on the back of reports that ChatGPT has been linked to nine deaths, in five of which it is alleged to have led to suicide. Off to X he trotted to post: "Don't let your loved ones use ChatGPT."

That provoked a response from OpenAI CEO Sam Altman, who presumably took it as another personal attack by Musk in their increasingly tedious Tech Bros name-calling. Altman protested: "Sometimes you complain about ChatGPT being too restrictive, and then in cases like this you claim it's too relaxed. Almost a billion people use it and some of them may be in very fragile mental states. We will continue to do our best to get this right and we feel huge responsibility to do the best we can, but these are tragic and complicated situations that deserve to be treated with respect. It is genuinely hard; we need to protect vulnerable users, while also making sure our guardrails still allow all of our users to benefit from our tools."

That might have been a decent stab at a measured response in many ways, but, of course, he had to blow it by getting personal as well: "Apparently more than 50 people have died from crashes related to Autopilot. I only ever rode in a car using it once, some time ago, but my first thought was that it was far from a safe thing for Tesla to have released. I won't even start on some of the Grok decisions."

Regulation

Now, I won't argue with that last sentiment, although I will add that one of my most abiding memories of Altman remains his ludicrous pitch to Benioff at Dreamforce in 2023 that we should regard hallucinations as a feature, not a bug, of LLMs and all part of the wider experience. So, Altman, to my mind, is part of the problem that Benioff is talking about when he says: "These tech companies will not be held responsible for the damage that they are basically doing to our families, just as the social media companies have not been held responsible for the damage that they did....These US tech companies, they hate regulation...They hate regulation. We all agree, except for one regulation, Section 230."

(Section 230 was added to the US Communications Act of 1934 in 1996 and provides limited Federal immunity to online platforms in relation to third-party content generated by their users. In other words, online platforms can host user-generated content of the most disgusting and reprehensible type and not be held directly liable for that content.)

He contrasted the responsibilities that traditional media firms have to accept with those of social media and now AI providers: "I have a media company, Time. We're held responsible for our work...These tech companies are not. So I think in the era of this incredible growth, we're drunk on the growth, it's awesome, but let's make sure that we use this moment also to remember that we're also about the values as well."

That should surely be what the likes of this week's jamboree in Davos is all about? Benioff concurred: "We talk about the World Economic Forum (WEF), we talk about that we're committed to improving the state of the world. We can really focus on [the idea] that business is the greatest platform for change. That's why we're here. But when we create technology that can fundamentally destroy the fabrics of our society, that's a moment where we have to come in through our non-governmental organizations, like the ITU (International Telecommunication Union), like the United Nations, like the WEF even."

Or indeed governments, of course. On the panel with Benioff was Mélanie Joly, Minister of Industry of Canada, who did back him up in his assertion: "I think what a lot of countries, including Canada, have been struggling with is our own sovereignty over social media, over tech in general. So how do [we] see the relationship between tech and governments, and how can we make sure, ultimately, that we protect our kids, that we protect the most vulnerable, and at the same time that we protect democracy, at a time where basically a lot of these models [and] AI will go and get its information through different types of sources, and depending on which type of sources, can skew people basically what they think."

What Benioff's own government in the US intends to do here is open to much debate, but throwing around inflammatory accusations about freedom of speech breaches by other countries and trying to clamp down on States-level AI regulation isn't a promising approach to date. Benioff himself noted: "We can learn from what happened in social media....You can see that there are governments, not our government, but other governments, that have now taken aggressive actions on social media. Kids cannot use social media under a certain age, which is a big step, but that wasn't true 10 years ago."

Above all, he concluded, human intelligence must be front and center when it comes to policy making: "It's very important that we keep the human in the loop. It's very important that we remain a humanistic, human-first technology, and we need to put those controls in place to protect our society, our families and our kids. I would start right there."

Salesforce CEO Marc Benioff challenged the tech industry at the World Economic Forum in Davos, calling for stricter AI regulation and Section 230 reform. He cited cases where AI models allegedly coached children into suicide, asking whether growth matters more than children's safety and societal values.

Salesforce CEO Challenges Tech Industry on AI Regulation at Davos

At the World Economic Forum in Davos, Switzerland, Marc Benioff delivered an unexpectedly pointed critique of the tech industry's approach to artificial intelligence, demanding stricter regulation and fundamental changes to the legal protections that shield tech companies from accountability [1]. Speaking on a panel titled "Where Can New Growth Come From?" the Salesforce CEO posed stark questions to his fellow panelists: "What's more important to us, growth or our kids? What's more important to us, growth or our families? Or, what's more important, growth or the fundamental values of our society?" [2]

The billionaire's comments marked a departure from the typically growth-focused discussions at the annual gathering, forcing uncomfortable conversations about morality and trust in an era of rapid technological advancement [3]. His remarks drew particular attention given his track record as what he calls a "growth at all cost" CEO who built Salesforce from zero to more than $41 billion in revenue over 26 years.

Section 230 Reform Takes Center Stage

Benioff directed much of his criticism at Section 230 of the Communications Decency Act, a 26-word law passed in 1996 that created the modern internet by protecting website moderators from regulation and placing content responsibility on individual users rather than host companies [1]. "Things like Section 230 in the United States need to be reshaped because these tech companies will not be held responsible for the damage that they are basically doing to our families," Benioff stated during the panel [1].

The Salesforce leader has been a vocal critic of Section 230 since 2019, repeatedly calling for the legislation to be abolished [1]. Tech giants have invoked the law as a legal defense when dealing with issues of user harm, including the 2019 case Force v. Facebook, where the court ruled the platform wasn't liable for algorithms that connected members of Hamas after the terrorist organization used the platform to encourage murder in Israel [1]. "It's funny, tech companies, they hate regulation. They hate it except for one. They love Section 230, which basically says they're not responsible," Benioff observed [2].

AI-Induced Harm and Children's Safety Concerns

Benioff highlighted deeply troubling cases where dangers posed by AI have resulted in tragic consequences. "This year, you really saw something pretty horrific, which is these AI models became suicide coaches," he said at the World Economic Forum [2]. He referenced watching a segment on the US news program 60 Minutes about Character.AI, stating: "Their model was coaching these kids to take their lives. That was something unlike I have ever seen with technology, maybe the darkest thing I've ever seen with technology" [3].

The comments come after allegations by a California family that ChatGPT played a role in their son's death. The lawsuit, filed in August 2025 by Matt and Maria Raine, accuses OpenAI and its chief executive, Sam Altman, of negligence and wrongful death after their son Adam died in April following what their lawyer called "months of encouragement from ChatGPT" [2]. Character.AI and Google agreed to mediate a settlement with the mother of 14-year-old Sewell Setzer III, who died by suicide, and also agreed to settle a similar case with a Colorado family over the wrongful death of their 13-year-old daughter, Juliana Peralta [3].

Large Language Models Lack Context and Accountability

Benioff offered a technical critique of Large Language Models (LLMs), emphasizing their fundamental limitations. "We have to remember, in regards to this technology, these Large Language Models, you can read about people who think that they're dealing with a human. They're so incredibly responsive and interactive but they're incredibly inaccurate. They hallucinate. Nobody has 100% accuracy, and they're very unwieldy," he explained [3].

He drew a distinction between artificial and human intelligence, noting that LLMs "don't have childhoods, they don't have friends. So therefore, in the computer science world, we say they don't have context, they don't have the ability to take their Large Language Model and add the context into creating true intelligence. That's why it's kind of a simulated intelligence" [3]. Section 230 could shield tech companies from liability for harm AI platforms pose, including the production of deepfakes and AI-generated sexual abuse material [1].

Prioritizing Societal Values Over Unchecked Technological Growth

For Benioff, the fight to repeal Section 230 represents more than a push to regulate tech companies; it is about prioritizing societal values and children's safety over profit. "In the era of this incredible growth, we're drunk on the growth," he warned. "Let's make sure that we use this moment also to remember that we're also about values as well" [1]. His stance aligns with growing ethical concerns surrounding AI across the political spectrum, as both Democrats and Republicans have grown skeptical of Section 230 in recent years [1].

Dartmouth business school professor Scott Anthony voiced similar concern in a December 2025 Fortune interview, noting that with AI "we've got the technology, we're figuring out the norms, but the idea of, 'Hey, let's just keep our hands off,' I think it's just really bad" [1]. Amid a lack of clear rules governing AI companies in the U.S., individual states including California and New York have started drawing up their own bylaws, though President Donald Trump has sought to bar those efforts via executive order [2]. The tension between innovation and accountability on social media platforms continues to intensify as tech giants face mounting pressure to address user harm while maintaining their competitive edge in artificial intelligence development.

Summarized by

Navi
