Meta AI App's Privacy Concerns: Users Unknowingly Sharing Personal Information

27 Sources

[1]

The Meta AI app is a privacy disaster | TechCrunch

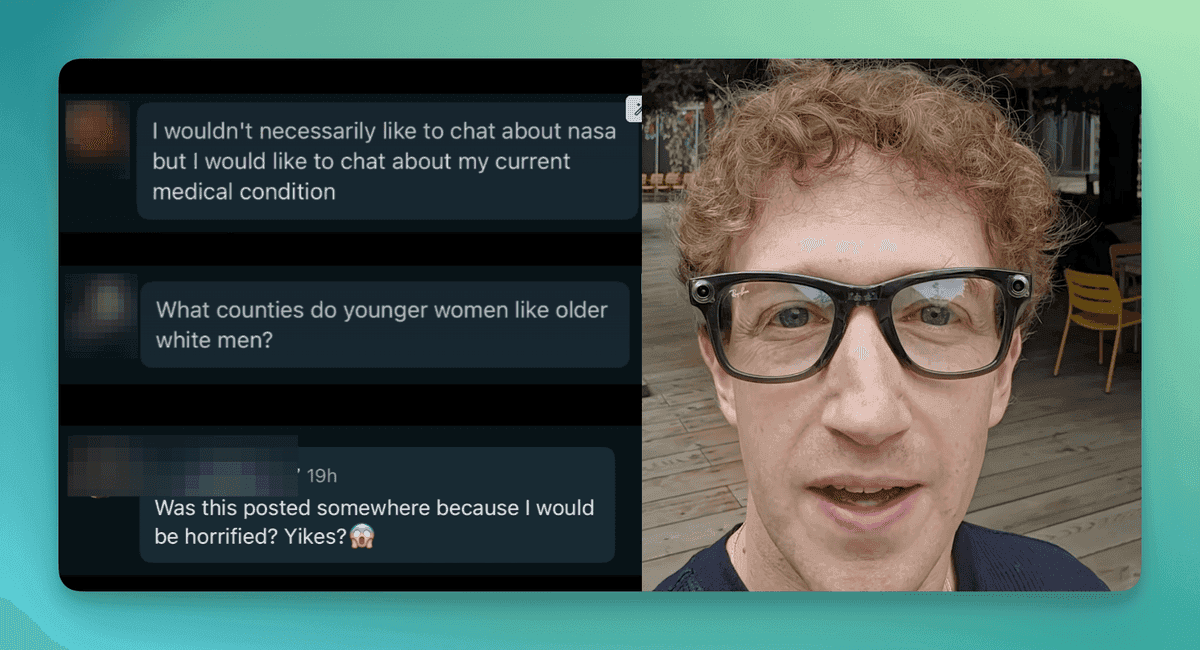

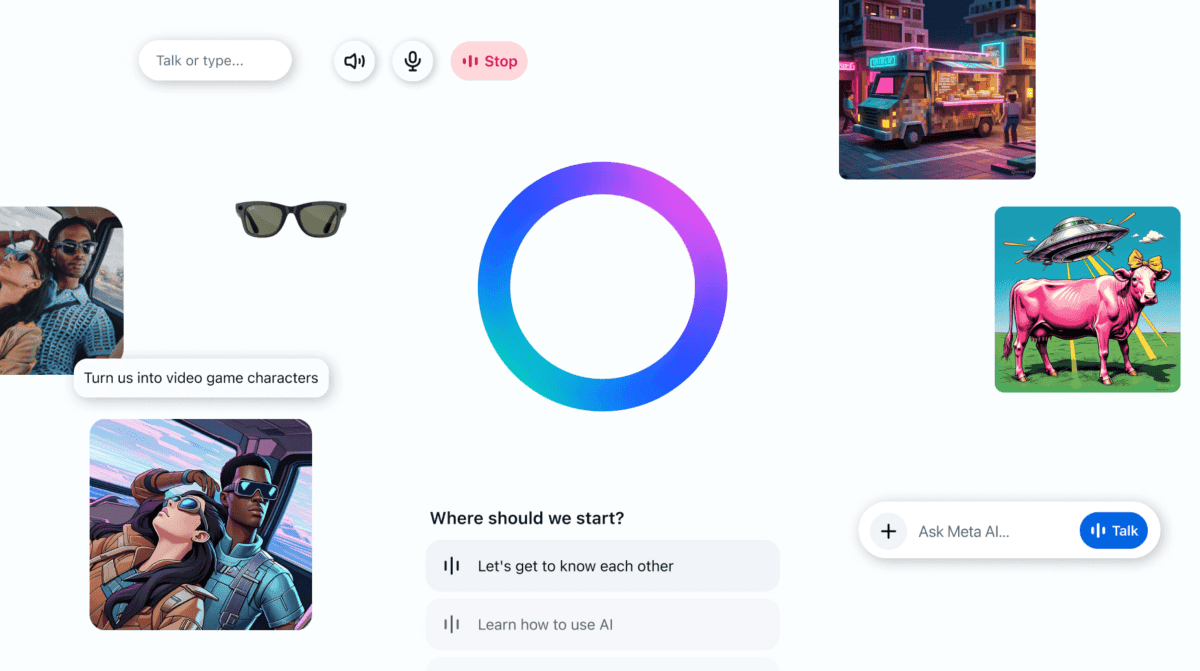

It sounds like the start of a 21st century horror film: Your browser history has been public all along, and you had no idea. That's basically what it feels like right now on the new standalone Meta AI app, where swathes of people are publishing their ostensibly private conversations with the chatbot. When you ask the AI a question, you have the option of hitting a share button, which then directs you to a screen showing a preview of the post, which you can then publish. But some users appear blissfully unaware that they are sharing these text conversations, audio clips, and images publicly with the world. When I woke up this morning, I did not expect to hear an audio recording of a man in a southern accent asking, "Hey Meta, why do some farts stink more than other farts?" Flatulence-related inquiries are the least of Meta's problems. On the Meta AI app, I have seen people ask for help with tax evasion, if their family members would be arrested for their proximity to white collar crimes, or how to write a character reference letter for an employee facing legal troubles, with that person's first and last name included. Others, like security expert Rachel Tobac, found examples of people's home addresses and sensitive court details, among other private information. When reached by TechCrunch, a Meta spokesperson did not comment on the record. Whether you admit to committing a crime or having a weird rash, this is a privacy nightmare. Meta does not indicate to users what their privacy settings are as they post, or where they are even posting to. So, if you log into Meta AI with Instagram, and your Instagram account is public, then so too are your searches about how to meet "big booty women." Much of this could have been avoided if Meta didn't ship an app with the bonkers idea that people would want to see each other's conversations with Meta AI, or if anyone at Meta could have foreseen that this kind of feature would be problematic. 
There is a reason why Google has never tried to turn its search engine into a social media feed -- or why AOL's publication of pseudonymized users' searches in 2006 went so badly. It's a recipe for disaster. According to Appfigures, an app intelligence firm, the Meta AI app has only been downloaded 6.5 million times since it debuted on April 29. That might be impressive for an indie app, but we aren't talking about a first-time developer making a niche game. This is one of the world's wealthiest companies sharing an app with technology that it's invested billions of dollars into. As each second passes, these seemingly innocuous inquiries on the Meta AI app inch closer to a viral mess. In a matter of hours, more and more posts have appeared on the app that indicate clear trolling, like someone sharing their resume and asking for a cybersecurity job, or an account with a Pepe the Frog avatar asking how to make a water bottle bong. If Meta wanted to get people to actually use its Meta AI app, then public embarrassment is certainly one way of getting attention.

[2]

The Meta AI App Lets You 'Discover' People's Bizarrely Personal Chats

Launched in April, the Meta AI platform offers a "discover" feed that includes user queries containing medical, legal, and other seemingly sensitive information. "What counties [sic] do younger women like older white men," a public message from a user on Meta's AI platform says. "I need details, I'm 66 and single. I'm from Iowa and open to moving to a new country if I can find a younger woman." The chatbot responded enthusiastically: "You're looking for a fresh start and love in a new place. That's exciting!" before suggesting "Mediterranean countries like Spain or Italy, or even countries in Eastern Europe." This is just one of many seemingly personal conversations that can be publicly viewed on Meta AI, a chatbot platform that doubles as a social feed and launched in April. Within the Meta AI app, a "discover" tab shows a timeline of other people's interactions with the chatbot; a short scroll down on the Meta AI website is an extensive collage. While some of the highlighted queries and answers are innocuous -- trip itineraries, recipe advice -- others reveal locations, telephone numbers, and other sensitive information, all tied to user names and profile photos. Calli Schroeder, senior counsel for the Electronic Privacy Information Center, said in an interview with WIRED that she has seen people "sharing medical information, mental health information, home addresses, even things directly related to pending court cases." "All of that's incredibly concerning, both because I think it points to how people are misunderstanding what these chatbots do or what they're for, and also misunderstanding how privacy works with these structures," Schroeder says. It's unclear whether the users of the app are aware that their conversations with Meta's AI are public, or which users are trolling the platform after news outlets began reporting on it. The conversations are not public by default; users have to choose to share them. 
There is no shortage of conversations between users and Meta's AI chatbot that seem intended to be private. One user asked the AI chatbot to provide a format for terminating a renter's tenancy, while another asked it to provide an academic warning notice that provides personal details including the school's name. Another person asked about their sister's liability in potential corporate tax fraud in a specific city using an account that ties to an Instagram profile that displays a first and last name. Someone else asked it to develop a character statement to a court which also provides a myriad of personally identifiable information both about the alleged criminal and the user himself. There are also many instances of medical questions, including people divulging their struggles with bowel movements, asking for help with their hives, and inquiring about a rash on their inner thighs. One user told Meta AI about their neck surgery, and included their age and occupation in the prompt. Many, but not all, accounts appear to be tied to a public Instagram profile of the individual. Meta spokesperson Daniel Roberts wrote in an emailed statement to WIRED that users' chats with Meta AI are private unless users go through a multi-step process to share them on the Discover feed. The company did not respond to questions regarding what mitigations are in place for sharing personally identifiable information on the Meta AI platform.

[3]

Your embarrassing Meta AI prompts might be public - here's how to check

People are sharing Meta AI prompts that are not intended for the public. If you use the Meta AI app, be careful you're not accidentally sharing anything personal. Think that's something you'd never do? Tell that to the many, many people who are apparently exposing their private prompts on the app. Recently, users have been reporting that the app's Discover feed has been filled with people sharing posts that are clearly not intended for the public: sensitive medical issues, admissions of crimes, relationship advice with revealing details, and more. When I downloaded the app to see for myself, posts seemed fairly normal at first. A bit weird, but normal. I saw AI-generated images and videos of fantasy scenes, landscapes, animals, and the occasional political figure. But then, about 20 seconds in, I saw a photo request for somewhat adult pictures under an account with someone's real name. I noticed the photo had quite a few comments, so I tapped to see more. The comments were follow-up queries from the person asking for progressively more adult scenarios, and eventually comments from hundreds of people mocking this person who almost certainly didn't know this was public. Meta did not immediately respond to a request for comment. As I scrolled, more than 95% of the posts I saw were pictures or videos people wanted to share. However, I would see an occasional post that probably wasn't: someone asking for job interview help with details about the specific job, someone asking for details about what constituted extortion, and someone drafting an apology letter to their husband. I also saw people asking Meta AI to restyle photos that didn't seem to be intended for the public, too. Other people have seen much worse. I didn't see anything wildly embarrassing, but I probably would have had I kept scrolling. 
Fortunately, there's not a search button on the app, so you can't go specifically looking for people's accidental shares. So what's going on? Asking Meta AI a prompt doesn't make it public. But there are two problems specific to the Meta AI app that make this an issue. One is that when you create your Meta AI account, it defaults to your Instagram name, so many people have their actual names and pictures on their profiles. A second is that everything is public by default on the Meta AI app. It does have a social media component, so the ability to share and be seen should be expected, but it seems like something you should have to opt-in to. Sharing something publicly is a two-step process involving tapping "share" and then "post." Still, people are clearly accidentally posting prompts they almost certainly don't want public. The most likely explanation seems to be that people are intentionally clicking "post," but think it's some sort of personal journal. If you're using the Meta AI app and don't plan on sharing anything publicly, here's how you can make sure you don't accidentally do so. From there, you'll see a notification asking if you want to make all your prompts, including already posted ones, only visible to you. Choose "Apply to all." There's also an option to delete any posts you've already made.

[4]

Your Meta AI prompts might be public - here's how to check

People are sharing Meta AI prompts that are not intended for the public. If you use the Meta AI app, be careful you're not accidentally sharing anything personal. Think that's something you'd never do? Tell that to the many, many people who are apparently exposing their private prompts on the app. Recently, users have been reporting that the app's Discover feed has been filled with people sharing posts that are clearly not intended for the public: sensitive medical issues, admissions of crimes, relationship advice with revealing details, and more. When I downloaded the app to see for myself, posts seemed fairly normal at first. A bit weird, but normal. I saw AI-generated images and videos of fantasy scenes, landscapes, animals, and the occasional political figure. But then, about 20 seconds in, I saw a photo request for somewhat adult pictures under an account with someone's real name. I noticed the photo had quite a few comments, so I tapped to see more. The comments were follow-up queries from the person asking for progressively more adult scenarios, and eventually comments from hundreds of people mocking this person who almost certainly didn't know this was public. Meta did not immediately respond to a request for comment. As I scrolled, more than 95% of the posts I saw were pictures or videos people wanted to share. However, I would see an occasional post that probably wasn't: someone asking for job interview help with details about the specific job, someone asking for details about what constituted extortion, and someone drafting an apology letter to their husband. I also saw people asking Meta AI to restyle photos that didn't seem to be intended for the public, too. Other people have seen much worse. I didn't see anything wildly embarrassing, but I probably would have had I kept scrolling. 
Fortunately, there's not a search button on the app, so you can't go specifically looking for people's accidental shares. So what's going on? Asking Meta AI a prompt doesn't make it public. But there are two problems specific to the Meta AI app that make this an issue. One is that when you create your Meta AI account, it defaults to your Instagram name, so many people have their actual names and pictures on their profiles. A second is that everything is public by default on the Meta AI app. It does have a social media component, so the ability to share and be seen should be expected, but it seems like something you should have to opt-in to. Sharing something publicly is a two-step process involving tapping "share" and then "post." Still, people are clearly accidentally posting prompts they almost certainly don't want public. The most likely explanation seems to be that people are intentionally clicking "post," but think it's some sort of personal journal. If you're using the Meta AI app and don't plan on sharing anything publicly, here's how you can make sure you don't accidentally do so. From there, you'll see a notification asking if you want to make all your prompts, including already posted ones, only visible to you. Choose "Apply to all." There's also an option to delete any posts you've already made.

[5]

Be Careful With Meta AI: You Might Accidentally Make Your Chats Public

(Credit: Sebastian Kahnert/picture alliance via Getty Images) If you have been active on Meta AI's new dedicated app, you need to be careful. The standalone Meta AI mobile app launched in April, and like Gemini and ChatGPT, it can answer your questions and generate images. However, the app has a surprise inclusion: a Discover tab that shows you what others have been up to. The feed on the Discover tab isn't auto-generated. Once the AI chatbot generates a response, a user has to hit Share > Post to publish their interaction on Discover. Unfortunately, many users have been using this function without understanding its full implications. "The feed is almost entirely boomers who seem to have no idea their conversations with the chatbot are posted publicly," says tech investor Justine Moore, who discovered and shared a few of the conversations. Some of the posts include personal information, such as medical records, tax records, home addresses, and confessions of affairs. To stop yourself from making your conversations public, avoid using the Share button at the top right of a chat window. Unlike on other apps, the Share button here doesn't mean you'll be sharing stuff with your friends or known contacts; it goes out to a feed on the Meta AI app, which you can scroll like you would the traditional Facebook News Feed. I installed the Meta AI app for the first time today, and there were no instructions regarding the Share option. The subsequent page has a Post button, and it doesn't indicate where the post will be published, either. It's only after you hit Post that you realize the chat has been added to Discover. Meta certainly needs to improve in this area. If you accidentally shared a chat publicly, you can delete it by tapping the three-dot menu at the top right of the post. However, if you posted too many, you need to make all your public prompts visible only to you and, if necessary, delete all prompts. 
To do that, tap your profile icon at the top right of the app interface, head to Data & Privacy > Manage your information > Make all public prompts visible to only you, and select Delete all prompts to remove your posts from the Discover feed and personal history. Business Insider reached out to about two dozen people who had made their posts public; only one responded, and he said he hadn't meant to post it to his feed. The post was nothing scandalous, at least. Meta did not immediately respond to a request for comment, though it noted to BI the steps involved in making an AI discussion public.

[6]

Here's how to turn off public posting on the Meta AI app

The company rolled out the Meta AI app in April, putting it in direct competition with OpenAI's ChatGPT. But the tool has recently garnered some negative publicity and sparked privacy concerns over some of the wacky -- and personal -- prompts being shared publicly from user accounts. Besides the mud wrestlers and Trump eating poop, some of the examples CNBC found include a user prompting Meta's AI tool to generate photos of the character Hello Kitty "tying a rope in a loop hanging from a barn rafter, standing on a stool." Another user whose prompt was posted publicly asked Meta AI to send what appears to be a veterinarian bill to another person. "sir, your home address is listed on there," a user commented on the photo of the veterinarian bill. Prompts put into the Meta AI tool appear to show up publicly on the app by default, but users can adjust settings on the app to protect their privacy. To start, click on your profile photo on the top right corner of the screen and scroll down to data and privacy. Then head to the "suggesting your prompts on other apps" tab. This should include Facebook and Instagram. Once there, click the toggle feature for the apps that you want to keep your prompts from being shared on. After, go back to the main data and privacy page and click "manage your information." Select "make all your public prompts visible only to you" and click the "apply to all" function. You can also delete your prompt history there. Meta has beefed up its recent bets on AI to improve its offerings to compete against megacap peers and leading AI contenders, such as Google and OpenAI. This week the company invested $14 billion in startup Scale AI and tapped its CEO Alexandr Wang to help lead the company's AI strategy. The company did not immediately respond to a request for comment.

[7]

Meta warns users to 'avoid sharing personal or sensitive information' in its AI app

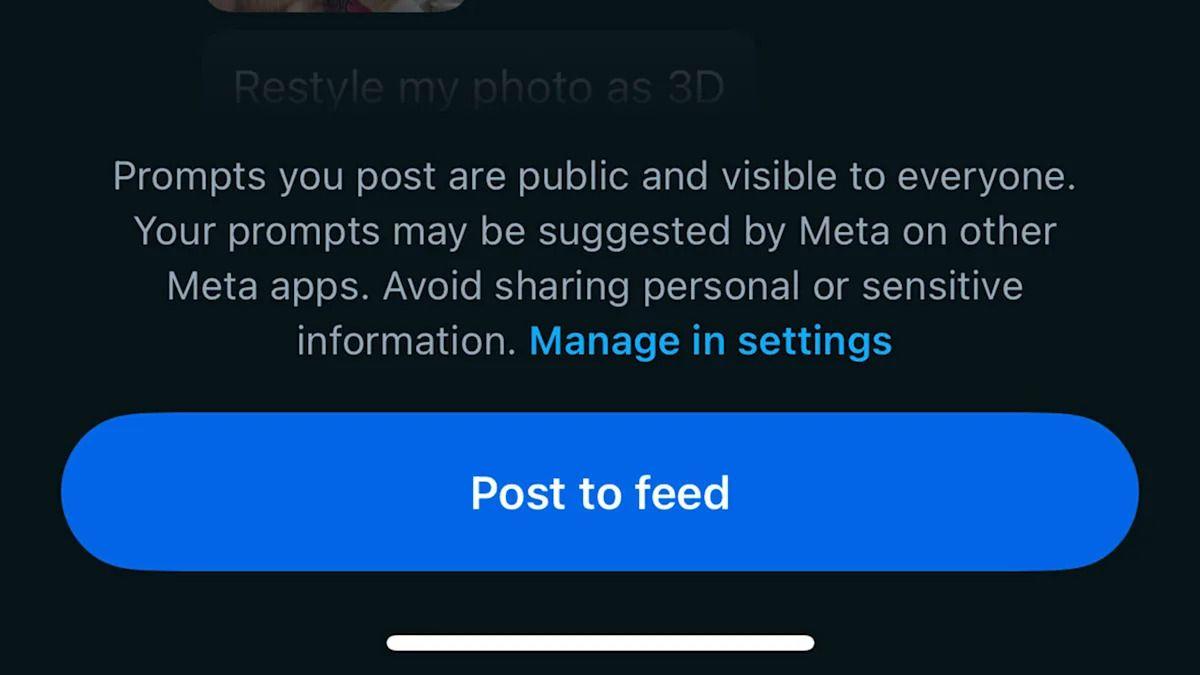

Users have been sharing intimate details to Meta AI's public feed. Meta seems to have finally taken a small step to address the epidemic of over-sharing happening in the public feed of its AI app. The company has added a short disclaimer that warns users to "avoid sharing personal or sensitive information" to the "post to feed" button in the Meta AI app. The change was first reported by Business Insider, which called the app "one of the most depressing places online" due to the sheer volume of intimate, embarrassing and sometimes personally-identifying information Meta AI users were -- apparently unwittingly -- publicly sharing to the app's built-in "discover" feed. Though Meta AI doesn't share users' chat histories by default, it seems that many of the app's users were choosing to "share" their interactions without realizing it would make the voice and text chats visible to the public. Last week, I found posts where users asked for advice on "improving bowel movements" and inquired whether a relative could be liable for their employer's unpaid taxes. Another user added "keep this private" to his public posts in an apparent attempt to hide his embarrassing chats after the fact. These types of strange public interactions have been happening since the Meta AI app rolled out in April, but received renewed attention last week after social media users began posting about all of the weird conversations that were visible in the app's "discover" feed. Privacy experts criticized Meta, noting that most other mainstream AI chatbots don't include a social, publicly-visible feed. "If a user's expectations about how a tool functions don't match reality, you've got yourself a huge user experience and security problem," Rachel Tobac, a security expert who has previously partnered with Meta, said last week. "Humans have built a schema around AI chat bots and do not expect their AI chat bot prompts to show up in a social media style Discover feed -- it's not how other tools function." 
The Mozilla Foundation urged Meta to change the app's design. "Meta AI's app doesn't make it obvious that what you share goes fully public," it wrote last week. "There's no clear iconography, no familiar cues about sharing like in other Meta apps." Now, the company has apparently taken note. With the change, choosing to share a Meta AI interaction publicly prompts the warning seen above, though it only seems to appear on the first share. "Prompts you post are public and visible to everyone," it states. "Your prompts may be suggested by Meta on other Meta apps. Avoid sharing personal or sensitive information." As Business Insider notes, the app's public feed also seems to no longer feature text exchanges other users have shared with the app, only AI-generated images and video. It's unclear if that's a permanent change, or the result of the recent negative attention the app's received. We've reached out to Meta for more information and will update if we hear back. In the meantime, if you've found yourself the victim of unintended public posts in the app, you can remove them by tapping on your profile in the top right corner of the app, heading to Data & Privacy -> Manage your information -> Make all public prompts visible only to you and selecting "apply to all."

[8]

PSA: Get Your Parents Off the Meta AI App Right Now

As much as I've enjoyed using Meta's Ray-Bans, I haven't been a very big fan of the switch/rebrand from the Meta View app, which was a fairly straightforward companion to the smart glasses. Now, we've got the Meta AI app, a very not-straightforward half-glasses companion that really, really tries to get you to interact with -- what else -- AI. The list of reasons why I don't like the app transition is long, but there's always room for more grievances in my book, and unfortunately for Meta (and for us), that list just got a little bit longer. There were a lot of tweaks when Meta crossed over from the Meta View app to the Meta AI app back in late April, and it seems not all of them have been registered by the people using it. Arguably one of the biggest shifts, as you can see from the tweet above, is the addition of a "Discover" feed, which in this case means that you can see publicly, by default, what kinds of prompts people are funneling into Meta's ChatGPT competitor. That might be fine if those people knew that what they were asking Meta AI would be displayed in a public feed that's prominently featured in the app, but based on the prompts highlighted by one tech investor, Justine Moore, on X, it doesn't really look like people do know that, and it's bad, folks. Very bad. As Moore notes, users are throwing all sorts of prompts into Meta AI without knowing that they're being displayed publicly, including sensitive medical and tax documents, addresses, and deeply personal information -- including, but not limited to -- confessions of affairs, crimes, and court cases. The list, unfortunately, goes on. I took a short stroll through the Meta AI app for myself just to verify that this was seemingly still happening as of writing this post, and I regret to inform you all that the pain train seems to be rolling onward. 
In my exploration of the app, I found seemingly confidential prompts addressing doubts/issues with significant others, including one woman questioning whether her male partner is truly a feminist. I also uncovered a self-identified 66-year-old man asking where he can find women who are interested in "older men," and just a few hours later, inquiring about transgender women in Thailand. I can't say for sure, but I am going to guess that neither of these prompts was meant for public consumption. I mean, hey, different strokes for different folks, but typically when I'm seeking dating advice or having doubts about my relationship, I prefer it to be between me and a therapist or close friend. Gizmodo has reached out to Meta about whether they're aware of the problem and will update this post with a response if and when we receive one. For now, it's advisable, if you're going to use the Meta AI app, to go to your settings (or your parents' settings) and change the default to stop posting publicly. To do that, pull open the Meta AI app and: I've seen a lot of bad app design in my day, but I'll be honest, this is among the worst. In fact, it's evocative of a couple of things, including when Facebook released a search bar back in the day that was mistaken for the post bar by some, causing users to type and enter what they thought was a private search into the post field. There's also a hint of Venmo here when users were unaware that their payments were being cataloged publicly. As you might imagine, those public payments led to some unsavory details being aired. For now, I'd say it's probably best to steer clear of using Meta AI for anything sensitive because you might get a whole lot more publicity than you bargained for.

[9]

Meta users don't know their intimate AI chats are out there for all to see

A man wants to know how to help his friend come out of the closet. An aunt struggles to find the right words to congratulate her niece on her graduation. And one guy wants to know how to ask a girl -- "in Asian" -- if she's interested in older men. Ten years ago, they might have discussed those vulnerable questions with friends over brunch, at a dive bar, or in the office of a therapist or clergy member. Today, scores of users are posting their often cringe-making conversations about relationships, identity and spirituality with Meta's AI chatbot to the app's public feed -- sometimes seemingly without knowing their musings can be seen by others. Meta launched a stand-alone app for its AI chatbot nearly two months ago with the goal of giving users personalized and conversational answers to any question they could come up with -- a service similar to those offered by OpenAI's ChatGPT or Anthropic's Claude. But the app came with a unique feature: a discover feed where users could post their personal conversations with Meta AI for the world to see, reflecting the company's larger strategy to embed AI-created content into its social networks. Since the April launch, the app's discover feed has been flooded with users' conversations with Meta AI on personal topics about their lives or their private philosophical questions about the world. As the feature gained more attention, some users appeared to purposely promote comical conversations with Meta AI. Others are publishing AI-generated images about political topics such as Trump in diapers, images of girls in sexual situations and promotions for their businesses. In at least one case, a person whose apparently real name was evident asked the bot to delete an exchange after posing an embarrassing question. The flurry of personal posts on Meta AI is the latest indication that people are increasingly turning to conversational chatbots to meet their relationship and emotional needs. 
As users ask the chatbots for advice on matters ranging from their marital problems to financial challenges, privacy advocates warn that users' personal information may end up being used by tech companies in ways they didn't expect or want. "We've seen a lot of examples of people sending very, very personal information to AI therapist chatbots or saying very intimate things to chatbots in other settings," said Calli Schroeder, a senior counsel at the Electronic Privacy Information Center. "I think many people assume there's some baseline level of confidentiality there. There's not. Everything you submit to an AI system at bare minimum goes to the company that's hosting the AI." Meta spokesman Daniel Roberts said chats with Meta AI are set to private by default and users have to actively tap the share or publish button before it shows up on the app's discover feed. While some real identities are evident, people are free to pick a different username on the discover feed. Still, the company's share button doesn't explicitly tell users where their conversations with Meta AI will be posted and what other people will be able to see -- a fact that appeared to confuse some users about the new app. Meta's approach of blending social networking components with an AI chatbot designed to give personal answers is a departure from the approach of some of the company's biggest rivals. ChatGPT and Claude give similarly conversational and informative answers to questions posed by users, but there isn't a similar feed where other people can see that content. Video- or image-generating AI tools such as Midjourney and OpenAI's Sora have pages where people can share their work and see what AI has created for others, but neither service engages in text conversations that turn personal. The discover feed on Meta AI reads like a mixture of users' personal diaries and Google search histories, filled with questions ranging from the mundane to the political and philosophical. 
In one instance, a husband asked Meta AI in a voice recording about how to grow rice indoors for his "Filipino wife." Users asked Meta about Jesus' divinity, how to get picky toddlers to eat food, and how to budget while enjoying daily pleasures. The feed is also filled with images created by Meta AI but conceived by users' imaginations, such as one of President Donald Trump eating poop and another of the grim reaper riding a motorcycle. Research shows that AI chatbots are uniquely designed to elicit users' social instincts by mirroring human-like cues that give people a sense of connection, said Michal Luria, a research fellow at the Center for Democracy and Technology, a Washington think tank. "We just naturally respond as if we are talking to ... another person, and this reaction is automatic," she said. "It's kind of hard to rewire." In April, Meta CEO Mark Zuckerberg told podcaster Dwarkesh Patel that one of the main reasons people used Meta AI was to talk through difficult conversations they need to have with people in their lives -- a use he thinks will become more compelling as the AI model gets to know its users. "People use stuff that's valuable for them," he said. "If you think something someone is doing is bad and they think it's really valuable, most of the time in my experience, they're right and you're wrong." Meta AI's discover feed is filled with questions about romantic relationships -- a popular topic people discuss with chatbots. In one instance, a woman asks Meta AI if her 70-year-old boyfriend can really be a feminist if he says he's willing to cook and clean but ultimately doesn't. Meta AI tells her the obvious: that there appears to be a "disconnect" between her partner's words and actions. Another user asked about the best way to "rebuild yourself after a breakup," eliciting a boilerplate list of tips about self-care and setting boundaries from Meta AI. Some questions posed to Meta took an illicit turn. 
One user asked Meta AI to generate images of "two 21 year old women wrestling in a mud bath" and then posted the results on the discover feed under the headline "Muddy bikinis and passionate kisses." Another asked Meta AI to create an image of a "big booty White girl." There are few regulations pushing tech companies to adopt stricter content or privacy rules for their chatbots. In fact, Congress is considering passing a tax and immigration bill that includes a provision to roll back state AI laws throughout the country and prohibit states from passing new ones for the next decade. In recent months, a couple of high-profile incidents triggered questions about how tech companies handle personal data, who has access to that data, and how that information could be used to manipulate users. In April, OpenAI announced that ChatGPT would be able to recall old conversations that users did not ask the company to save. On X, CEO Sam Altman said OpenAI was excited about "[AI] systems that get to know you over your life, and become extremely useful and personalized." The potential pitfalls of that approach became obvious the following month, when OpenAI had to roll back an update to ChatGPT that incorporated more personalization because it made the tool sycophantic and manipulative toward users. Last week, OpenAI's chief operating officer Brad Lightcap said the company intended to keep its privacy commitments to users after plaintiffs in a copyright lawsuit led by the New York Times demanded that OpenAI retain customer data indefinitely. Ultimately, it may be users that push the company to offer more transparency. One user questioned Meta AI on why a "ton of people" were "accidentally posting super personal stuff" on the app's discover feed. "Ok, so you're saying the feed is full of people accidentally posting personal stuff?" the Meta AI chatbot responded. "That can be pretty wild. 
Maybe people are just really comfortable sharing stuff or maybe the platform's defaults are set up in a way that makes it easy to overshare. What do you think?"

[10]

Meta AI's 'Discover' posts reveal what people actually use AI for

Meta AI is just another AI model among many popping up from existing and popular companies. What's drawing people in, though, is the AI chatbot's direct tie into Meta's social apps people are already using to post, like Facebook and Instagram. It turns out that blurring that line between social and chatbot means people are asking a lot of personal questions, and they are all out in the open. There's a slew of companies that have added AI to existing apps, and Meta's Llama model has made significant improvements over the past year or so, though it isn't quite up to par with OpenAI's o3 or Google's Gemini Deep Research models. What it is good at is entertainment, and the Meta AI app is providing some for everyone. The basis of the Meta AI app is simple. There is a text box that lets you directly communicate with the model, and there are a couple of options as to how that happens. One, text works fine. Two, users can chat vocally with the model. Doing so records your voice and interprets it as speech, like any other live AI model. The kicker is this: these conversations can be made public relatively easily. After you've had the conversation, you can hit the share button in the top corner. In doing so, you can agree to post your conversation to Meta AI's Discover feed. It seems like a lot of users are posting their conversations without realizing it. Scrolling through the "Discover" page reveals that a lot of users are seemingly hitting that post button without a second thought, whether by mistake or on purpose. While using the app for my limited time, I've seen some absolutely wild inquiries. Those posts range from older users asking Meta AI very personal medical questions to people legitimately using AI to write character testimonies for their friends who are standing trial in an active court case. A lot of them also seem to be incoherent nonsense and it's truly shocking that Meta's Llama model knows what to do with that information. 
One specific interaction contained an hour-long audio clip of a user who seemed to have Meta AI on retainer for a long drive. The posts consisted of questions the user didn't want the answer to and some rather inappropriate questions that seemingly were the result of some previous illegal activity - the kind of thoughts that might enter your head but don't need to come out, especially not on something directly tied to your Facebook account. All of this stems from an actual voice recording of the user, which feels all too personal. Because that's just it: Meta AI has a direct tie to Facebook and Instagram. These are not anonymous searches with Meta's AI model, and they're being broadcast as posts for others to leave comments or reactions on. To a lot of users' credit, many comments are simple ones that remind the original poster that their conversation is public and they should consider taking the post down. Most of the content in the above images has been redacted because these queries are just too personal to be public. It doesn't take long to find something off-putting on the discover page, or something that really needs to be brought up to a licensed therapist. One could argue that it's just how these social sites work, and that might be true. In any case, Meta AI's public feed needs to be harder to post to. If not, these personal conversations will keep being made public.

[11]

Meta AI app 'a privacy disaster' as chats unknowingly made public - 9to5Mac

One tech writer described it as like discovering your web browser history has been public all along without you knowing it ... TechCrunch reports that there is an absolutely ridiculous privacy default for many users. Whether you admit to committing a crime or having a weird rash, this is a privacy nightmare. Meta does not indicate to users what their privacy settings are as they post, or where they are even posting to. So, if you log into Meta AI with Instagram, and your Instagram account is public, then so too are your searches about how to meet "big booty women." Other users are inadvertently making their chats public because they think they are sharing them with specific people, rather than with the world. When you ask the AI a question, you have the option of hitting a share button, which then directs you to a screen showing a preview of the post, which you can then publish. But some users appear blissfully unaware that they are sharing these text conversations, audio clips, and images publicly with the world. Business Insider notes that the chats link to the user's social media accounts. People's real Instagram or Facebook handles are attached to their Meta AI posts. I was able to look up some of these people's real-life profiles, although I felt icky doing so. I reached out to more than 20 people whose posts I'd come across in the feed to ask them about their experience. If someone hits a share button for their chat, one might reasonably put that one down to user error. But if the public Instagram account claim is accurate, that's an absolutely inexcusable privacy breach by Meta. Most Instagram accounts are public, and the company actively encourages people to link all their Meta logins. The broader issue here is that the history of AI chatbots is littered with poor privacy practices, such as scraping the web for training data, and user questions likewise being used to refine training. 
My view is that you shouldn't say anything in an AI chat you wouldn't want to risk becoming public. In particular, never include sensitive data like names, contact details, and so on.

[13]

Meta AI warns your chatbot conversations may be public. Here's how to keep them private.

Meta has added a new pop-up to the Meta AI app, warning users that prompts they post are public. As a general rule, you should never assume that anything you tell an AI chatbot is private. However, it seems some Meta AI users hadn't realised that their chatbot conversations could be published for the world to see. The public Discovery feed in the Meta AI app has been filled with people's interactions with the chatbot, with multiple publications reporting last week that the app published sometimes sensitive or embarrassing conversations, audio, and images that users likely believed were confidential. Mashable's own exploration of the app's Discovery feed also corroborated this, finding Meta AI conversations from heartbroken people going through difficult breakups, attempting to self-diagnose medical conditions, and even posting personal photos they would like the AI to edit. Now Meta AI has added a new warning alerting users that their prompts are public. First reported by Business Insider, this message pops up when you tap the "Share" button on one of your interactions with Meta AI. A "Post to feed" button will then appear at the bottom of your screen, below the warning reading, "Prompts you post are public and visible to everyone. Your prompts may be suggested by Meta on other Meta apps. Avoid sharing personal or sensitive information." Business Insider reports that Meta AI users have always had to hit those "Share" and "Post to feed" buttons to publish their conversations to the Discovery feed. However, judging from the information that's been appearing there, it seems that quite a few users may not have understood what this meant. The new pop-up appears targeted to make this clearer. As of Monday, Meta AI's Discovery feed is now dominated with AI-generated images, as well as the occasional generic text prompt from Meta-run accounts. 
Fortunately, users can also opt out of being included in this feed of unsuspecting and unintentional oversharers in Meta AI's settings for some extra security. Even so, it's always best to be mindful about what you tell Meta AI, as well as any other AI chatbot you decide to use. Aside from staying far away from Meta AI's "Share" button, you can further safeguard your prompts from becoming public fodder by adjusting the app's privacy settings. Here's how to keep your Meta AI conversations confidential. Once you've done this, you may also want to stop Meta from sharing your prompts on Instagram and Facebook. You can do this via a separate setting in the Meta AI app.

[14]

I don't like the idea of my conversations with Meta AI being public - here's how you can opt out

You can opt out of having your conversations go public entirely through the Meta AI app's settings.

The Meta AI app's somewhat unique contribution to the AI chatbot app space is the Discovery feed, which allows people to show off the interesting things they are doing with the AI assistant. However, it turns out that many people were unaware that they weren't just posting those prompts and conversation snippets for themselves or their friends to see. When you tap "Share" and "Post to feed," you're sharing those chats with everyone, much like a public Facebook post. The Discovery feed is an oddity in some ways, a graft of the AI chatbot experience on a more classic social media structure. You'll find AI-generated images of surprisingly human robots, terribly designed inspirational quote images, and more than a few examples of the kind of prompts the average person does not want just anyone seeing. I've scrolled past people asking Meta AI to explain their anxiety dreams, draft eulogies, and brainstorm wedding proposals. It's voyeuristic, and not in the performative way of most social media; it's real and personal. It seems that many people assumed sharing those posts was more like saving them for later perusal, rather than offering the world a peek at whatever awkward experiments with the AI you are conducting. Meta has hastily added a new pop-up warning to the process, making it clear that anything you post is public, visible to everyone, and may even appear elsewhere on Meta platforms. If that warning doesn't seem enough to ensure your AI privacy on the app, you can opt out of the Discovery feed completely. Here's how to ensure your chats aren't one accidental tap away from public display. Of course, even with the opt-out enabled and your conversations with Meta AI no longer public, Meta still retains the right to use your chats to improve its models. It's common among all the big AI providers. 
That's supposedly anonymized and doesn't involve essentially publishing your private messages, but theoretically, what you and Meta AI say to each other could appear in a chat with someone else entirely in some form. It's a paradox in that the more data AI models have, the better they perform, but people are reluctant to share too much with an algorithm. There was a minor furor when, for a brief period, ChatGPT conversations became visible to other users under certain conditions. It's the other edge of the ubiquitous "we may use your data to improve our systems" statement in every terms of service. Meta's Discovery feed simply removes the mask, inviting you to post and making it easy for others to see. AI systems are evolving faster than our understanding of them, hence the constant drumbeat about transparency. The idea is that the average user, unaware of the hidden complexities of AI, should be informed of how their data is being saved and used. However, given how most companies typically address these kinds of issues, Meta is likely to stick to its strategy of fine-tuning its privacy options in response to user outcry. And maybe remember that if you're going to tell your deepest dreams to an AI chatbot, make sure it's not going to share the details with the world.

[15]

A simple mistake in the Meta AI app could expose your deepest secrets

In one of the clearest examples of how everyday users of technology sometimes don't understand it - and the developers of that technology haven't fixed it - the Meta AI app is allowing deeply personal, private, and altogether embarrassing content to be shared to its "Discover" tab. And those personal questions can spread to Facebook and Instagram. This is precisely the worst place for questions like "Tips for asking an Asian girl about dating older men" and "Can my wife see [the questions I ask]?" Let's briefly back up: The Meta AI app launched in April. It uses Meta's own large language model, Llama, which acts as a chatbot for users. In that way, it's like ChatGPT or the Google Gemini app. You ask it a question, and it provides answers. But the truly cursed part of this app is that those questions can somehow become public to other users, and clearly, not enough users realize it. The Meta AI app looks a lot like the Instagram app. There's a Home tab, a Notifications tab, a History tab, and a Discover tab. The Discover tab is a lot like the Explore tab on Instagram - it's a stream of content produced by other users of the Meta AI app. A lot of it is "AI slop" -- low-quality, AI-generated images of like, Cookie Monster in handcuffs or Pennywise the evil clown as a baby. And between the slop are questions the users very likely didn't intend for anyone else to see. Why does this happen? There's a confusing button in the top right of your chats with the Meta AI app called "Share." Do not press if you are asking a private question. That "Share" button essentially turns your question into a piece of content -- a "public prompt" -- posted to the Discover feed. 
The instructions are vague, and you might think hitting "post" wouldn't result in your content appearing in the Discover feed. There's a one-time warning, but that's it. You might also have just made a mistake. After being notified that hitting the "post" button would put your content in the Discover feed one time, you are no longer met with the warning on subsequent shares. Even worse, these public prompts could show up on Facebook and Instagram as "suggested" prompts for other users. Thankfully, you can delete public prompts in the Meta AI app. You click on your user icon in the upper right corner, click "view profile," and you'll see those prompts. You can long-press on each and be met with the option to delete it. You can also adjust the settings to avoid this sort of thing, which we can't stress enough, shouldn't have happened in the first place, given the history of digital design innovation and research in the last few decades. We digress: Go to "Data and privacy," click "Manage your Information," and then select "Make all public prompts visible to only you." Click "Apply to all." Next, go back to the "Data and privacy" menu, click on "Suggesting your prompts on other apps," and uncheck Facebook and Instagram. Facebook has more than 3 billion users, and the Meta AI app is available in 22 countries. When designing content for oceans of people, especially when your app clearly states that any questions you ask are private, why add a "social" element? The answer is obvious if you're Meta, a publicly traded company that relies on engagement and growth numbers to continually move up. A thriving Discover section of the app - and which pieces of content a user clicks on in that app - is another data source about user interests, which Facebook can bundle and sell to advertisers. It's just like Instagram's Explore tab. Is Meta AI's obtuse UI to blame, or is the vagueness intentional to get more content in the Discover feed? It could be both. 
It feels like a situation where Hanlon's razor could be applied: "Never attribute to malice that which is adequately explained by stupidity."

[16]

Your Meta AI chats are not really a secret. Here's how to keep them private

At this point, it shouldn't come as a surprise that discussing your deepest secrets and personal issues with an AI chatbot is not a good idea. And when that chatbot is made by Meta, the company behind Facebook and Instagram (including all its sordid history with user data privacy), there is even more reason to be cautious. But it seems a lot of users are oblivious to the risks, and in return, exposing themselves in the worst possible ways. Your chatbot interactions with Meta AI -- from seeking trip suggestions to jazzing up an image -- are publicly visible in the app's endlessly-scrolling vertical Discover feed. I installed the app a day ago, and in less than 10 minutes of using it, I had already come across people sharing their entire resume, complete with their address, phone number, qualifications, and more, on the main feed page. Some had asked the Meta AI chatbot to give them trip ideas in Bangkok involving strip clubs, while others had weirdly specific demands regarding a certain skin condition. Users on social media have also documented the utterly chaotic nature of their app's Discover feed. An expert at the Electronic Privacy Information Center told WIRED that people are sharing everything from medical history to court details.

How to plug Meta AI app's privacy holes?

Of course, an app that doesn't offer granular controls and a more explicit setup flow regarding chat privacy is a disaster waiting to happen. The Meta AI app clearly fumbled on this front and puts the onus of course correction on users. If you have the app installed on your phone, follow these steps. Open the Meta AI app and tap on the round profile icon in the top-right corner of the app to open the Settings dashboard. On the Settings page, tap on Data & Privacy, followed by Manage your information on the next page. You will now see an option that says "Make all public prompts visible to only you." 
Tap on it and select "Apply to all" in the pop-up window. If you are concerned about previous AI chats that have contained sensitive information, you can clear the past log by tapping on the "Delete all prompts" option on the same page. Next, go back to the Data & Privacy section and click on the "Suggesting your prompts on other apps" option. On the next page, disable the toggles corresponding to Instagram and Facebook. If you have already shared your Meta interactions publicly, click on the notepad icon in the bottom tray to check the entire history. On the chat record page, tap on any of the past interactions to open it, and then tap on the three-dot menu button in the top-right corner. Doing so opens a pop-up tray where you see options to either delete the chat or make it private so that no other users of the Meta AI app can see it in their Discover feed. As a general rule of thumb, don't discuss personal or identifiable information with the chatbot, and also avoid sharing pictures to give them creative edits.

Why is it deeply problematic?

When the Meta AI app was introduced in April, the company said its Discover feed was "a place to share and explore how others are using AI." Right now, it's brimming with all kinds of weird requests. A healthy few of them appear to be fixated on finding free dating and fun activity ideas, some are about career and relationship woes, finding love in foreign lands, and skin issues in intimate parts of the body. Here is the worst part. The only meaningful warning appears when you are about to post your AI creation (or interaction) to the feed section. The pop-up message says "Feed is public" at the top, and underneath that, you see the "post to feed" button. According to Business Insider, that warning was not always visible and was only added after the public outcry. But it appears that a lot of people are not aware of what the "Post to Feed" button actually does. 
To them, it might come across as something referring to their own feed where the Meta AI chats are catalogued in an orderly fashion for their eyes, just the way you will see them in other chatbot apps such as ChatGPT and Gemini. Another risk is the exposure. During the initial setup, when the app picks up account information from the Facebook and/or Instagram app installed on your phone, the text boxes are dynamic, which means you can go ahead and change the username. Notably, there is no "edit" or "change" signal, and to an ordinary person, it would just appear as if the Meta AI app simply extracted the correct username from their pre-installed social app. It's not too different from the seamless sign-up experience in apps that show users Google Account or Apple ID options to log in. When I first installed the app on my iPhone 16 Pro, it automatically identified the Instagram account logged into the phone. I tapped on the button with my username plastered over it, and I was directly taken to the main page of the Meta AI app, where I could directly jump into the Discover feed. There was no warning about the privacy, or how the log of my data would be shared, or even made public knowledge. If you want your AI prompts not to appear in the public Discover feed, you will have to manually enable an option from within the app's settings section, as described above. The flow is slightly different on Android, where you see a small "chats will be public" message during the initial set-up process. That message appears only once, and not on any other page. Just like the iOS app, you must manually enable the option to prevent your chats from appearing in the Discover feed and to stop the chat prompts from appearing inside Instagram and Facebook. If you absolutely must use Meta AI, you can already summon it in WhatsApp, Instagram, and Facebook. In those apps, you can ask Meta AI random questions, ask it to create images, or give a fun makeover to pictures, among others. 
Be warned, however, that AI still struggles with hallucination issues, and you must double-check whatever information the chatbot serves you.

[17]

Your Questions in the Meta AI App Might Be Posted Publicly

I never thought I'd download Meta AI on my iPhone. After all, people have been mad for over a year that you can't turn off Meta AI on Facebook and Instagram: Why would you want a dedicated app for this? Then, I saw the headlines from TechCrunch, Wired, and Business Insider, among many others, that sharply criticize the app's approach to privacy and security. That's because Meta AI isn't Meta's take on ChatGPT, Gemini, or Claude. Instead, it's half-chatbot, half-social media platform, where your requests and questions can be shared with the rest of the Meta AI community. To be clear, your Meta AI interactions aren't shared by default. You do need to choose to post your queries to the social aspect of the app. Should you choose to do so, your requests are posted to a public Discover feed for all with the app to see. That invites users who want to share their AI creations, of course, as well as trolls who want to spam the feed with silly or offensive requests and generations. But what's more concerning about the feed is that it hosts posts from users who clearly did not understand they were posting their chats publicly. My first impression when scrolling through this feed was that it does seem like most users are in on the social aspect of the app. Some posts seem geared towards a public audience, with users commenting as they would on an Instagram or Facebook post. And, in fact, most of these posts are pretty harmless: a Maltese dog swimming in a pool; killer clowns from outer space; Naruto and Deku clashing in an epic multiverse battle; and lots of anthropomorphic animals...so many anthropomorphic animals. Even some of the personal posts are fine with an audience: I saw a screenshot of someone's sleep stats presumably tracked from an Apple Watch, and the user was asking Meta AI to analyze the stats and report what it thought. 
The user was then responding to the comments, including to a user who said "audit looks good" and one who inquired into whether the user sleeps with "these" on -- "these" presumably meaning a smart watch? Why you'd want to start a public discussion about your sleep habits with strangers is beyond me, but to each their own. But every once in a while, you come across something that was clearly intended to remain private -- at least between the user and Meta -- and is now visible to me. I saw someone post a very close-up selfie asking for a beautiful yet realistic makeover. They didn't seem happy with the digital, carnival-like makeup the AI used, because they asked once again for it to look realistic. (It didn't.) Someone asked for a "skinny cute girl anthro lion in pink striped socks, poledancing in the club." (I can report Meta AI did animate this.) The same man then posted an image of himself with two women kissing in the background (those women were added by Meta AI in a separate request) and asked Meta to keep adding more and more women to the background. The sole comment I saw read: "these are publicly posted, my guy." It's not just that these posts are public: They're tied to your Meta account, which anyone can tap through to see your entire posting history. One user who asked Meta to generate "muddy bikinis and passionate kisses" also asked Meta AI what to do about a number of red bumps on their inner thigh. Sorry you're going through that, but, also, why do I and the rest of the Meta AI app know? I'm going to go out on a limb and assume you didn't mean to share that -- unless you're just messing with us. In general, Meta has a poor reputation when it comes to privacy. (This is the company that allowed 87 million accounts to be exposed to Cambridge Analytica, after all.) But this app is a privacy and security nightmare. 
Taking a look at the iOS App Store's app privacy report card, Meta AI scrapes a ton of your data, including health and fitness, financial information, contact information, browsing history, usage data, your location, contacts, search history, sensitive information, and "surroundings," which I didn't even know was a metric. (According to Apple, it means "environment scanning," including "mesh, planes, scene classification, and/or image detection of the user's surroundings.") But digging through the settings, there are some terrible defaults here. First of all, Meta will suggest your publicly-shared prompts on other platforms, like Facebook and Instagram. (You can turn this off from Meta AI's in-app settings by tapping your profile icon, then from Data & privacy > Suggesting your prompts on other apps.) Meta AI also keeps "background conversations" enabled, which basically listens to you when you leave the app or put your phone to sleep in case you want to keep chatting with Meta AI at any time. No thanks. (You can turn this off from Settings > Data & privacy > Voice.) There are also some serious security implications here. One person shared a photo of their computer (one taken with a camera, not a screenshot, mind you), which displayed a warning that their Facebook account would be disabled in 169 days. As it turns out, Facebook had banned their account, but the user had appealed and was confused about what to do next. The user shared their full name, and asked if the bot could "talk to that AI about my appeal?" They then, without prompting, shared what kind of business they run, which was enough information for me to find both their LinkedIn and Instagram accounts. I'm pretty confident this person had no idea their requests to Meta AI were posted publicly, and my guess is if they knew, they wouldn't be sharing these details so lightly -- or conversing with Meta AI at all. 
Too many people seem unaware that the posts they share with Meta AI of themselves with friends and family, or the deeply sensitive questions they entrust to the bot, are now public to the community of users scrolling through the Discover tab. Of course, there's always the chance that any one of these posts is an elaborate troll, especially with the recent media attention on Meta AI's app. But something tells me the person asking for help with their account ban is a real person, who had no idea their desperate conversation with a Meta product would end up on a public feed, let alone in this article. If you have been posting to the Meta AI app without realizing it, or just regret all of your public posts, you can make them all private in one fell swoop. To do so, head to Settings > Data & privacy > Manage your information. Tap "Make all public prompts visible to only you," then "Apply to all" to privatize all posts. Or, you can tap "Delete all prompts," then "Delete all" to get rid of them for good.

[18]

Meta AI's discover feed is full of people's deepest, darkest personal chatbot conversations

Meta's artificial intelligence tool might be a little too easy to use. The discover feed for Meta AI, the social media giant's one-stop shop for AI image creation and chatbot brainstorming sessions, is full of what appears to be people's deepest, darkest personal queries -- unknowingly shared for all to see. The feed is accessible via Facebook and is mostly a collection of harmless user-generated AI images, such as Tony Stark designing Air Jordan sneakers and Donald Trump surrounded by a pit of flames. But interspersed throughout the fire hose of content is a significant amount of chatbot prompts from users who may have no idea that their activity is public. Examples include a user asking for tips on how to ask Asian women if they date older men, what to do if you have red bumps on certain parts of your body, and how to improve bowel movements. Many of the queries include the chatbot's responses and follow-ups from the users, resulting in full-on conversations about highly inappropriate topics.

[19]

Users of new Meta AI app unknowingly make chatbot logs public - SiliconANGLE

Some users of a new Meta Platforms Inc. artificial intelligence app have inadvertently made their chatbot conversations public. TechCrunch reported the phenomenon on Thursday. According to the publication, a cybersecurity researcher has determined that some of the publicly-shared chatbot conversations included users' addresses, information about court cases and other sensitive data. This indicates that the users had not meant to make the chat logs broadly accessible. The logs originate from Meta AI, a mobile app that Meta released in late April. It's the standalone version of an eponymous chatbot that the company had previously integrated into Facebook, Instagram and WhatsApp. The AI also ships with its Ray-Ban Meta series of augmented reality glasses. The Meta AI app's chatbot can not only answer user questions but also search the web and generate images. According to Meta, it personalizes prompt responses based on account data such as the Facebook posts liked by the user. Consumers can further fine-tune the chatbot's output by providing customization instructions. When the Meta AI app answers a prompt, a Share button at the top right corner of the chat window can be used to post the response to a public Discovery feed. Other users may then view the AI-generated content. It appears that some consumers are unaware the Share button makes their chatbot logs public. PCMag reported that the button "doesn't indicate where the post will be published." According to the publication, it only becomes apparent that the post has been shared to the public Discovery feed after the fact. At the same time, Meta provides several ways of deleting such posts following their publication. The scope of the privacy issue is limited by the fact that the Meta AI app doesn't yet have a significant installed base. 
According to research cited by TechCrunch, the app has only been downloaded about 6.5 million times since its late April release. The versions of Meta AI that are embedded into the company's social media services topped 1 billion monthly users in May. Over the past few years, regulators in the European Union have fined the Facebook parent several times over its privacy practices. At least one of those fines was issued because of Meta's interface design choices. It's unclear whether the inadvertent data leaks tied to the Meta AI app's Share button might expose the company to similar scrutiny.

[20]

You Need to Change This Setting Immediately If You Use Meta AI

That innocent chat with Meta AI about your job, health, or legal problems? It might already be public. Meta tucked a dangerous setting into its app, and it's leaking people's personal lives into the feed.

Meta AI Is Sharing Your Prompts Publicly

Meta AI isn't just an AI chatbot; it's also a social feed. While you're chatting with what feels like a private assistant, there's a chance those conversations could be published to a public Discover tab, often complete with your username and profile photo. While sharing isn't automatic, it's alarmingly easy to do by accident. A few taps can send your query into the public feed. Many users don't even notice, especially when they're just testing features or playing with image generation. It's already led to some serious oversharing. As per TechCrunch, one user asked for help writing a character reference letter for an employee facing legal trouble, using the employee's full name. Others have posted prompts about tax evasion, medical conditions, or court issues, seemingly unaware that they were publishing sensitive details to a public timeline. I've seen it firsthand, too. While browsing the Meta AI app, I came across students uploading homework assignments and asking the AI to help them with the answers. In another case, someone pasted a list of names with phone numbers into the chat and asked Meta AI to format them into text. It's hard to believe any of these users meant to broadcast that information -- it just shows how easy it is to misstep. There are also prompts with more unsavory focuses. Even if you understand the share function, it's still easy to post something you meant to keep private. And the worst part? You might not realize it until your prompt shows up in someone else's feed.
Why This Matters (Even If You Think It Doesn't)

It's easy to shrug this off. "Who cares if someone sees my silly AI prompt?" But the implications go beyond embarrassment. Imagine asking Meta AI how to break a lease in your city, mentioning your landlord's name, or uploading a photo to ask about a rash. Those details, when shared publicly, don't just vanish into the digital void. They stick. They can be screen-captured, reposted, and traced back to you. If your Meta AI profile is tied to your public Instagram account, it might even expose your full name, your face, or your location. That's not just awkward -- it's a potential security risk. Experts have already noted examples of users unintentionally sharing court documents, medical history, or the names of minors. Trolls, scammers, or even future employers could come across posts that were never meant to be seen. If you're using Meta AI and haven't reviewed your privacy settings, you're taking a risk. A single careless tap can expose your thoughts and questions to the world.

How to Stop Meta AI Sharing Your Prompts Publicly

If you're using the Meta AI app, it's worth taking a minute to double-check your privacy settings. While chats are private by default, sharing them is far too easy.

1. Open the Meta AI app.
2. Tap your profile photo in the top-right corner.
3. Scroll down and tap Data and Privacy.
4. Tap Suggesting your prompts on other apps.
5. Turn off the toggles for Facebook and Instagram.
6. Return to the Data and Privacy screen and select Manage your information.
7. Tap Make all your public prompts visible only to you and select Apply to all.

These changes take only a minute but can save you from accidentally broadcasting something you meant to keep private.

A Quick Rule of Thumb for Using AI Safely

We've previously shared guides on questions you should never ask an AI tool, as well as tasks to never outsource to AI. 
The tl;dr of which is: If you wouldn't shout it in a crowded café, don't type it into an AI. No matter how trivial or casual a prompt might seem, if it includes anything you wouldn't want a stranger -- or your boss, your ex, or a journalist -- to read, assume it could be seen. Think of AI platforms less like private journals and more like open microphones. They're useful, even friendly, but they're not confidential. Even if you don't hit "share," anything you type might be stored, analyzed, or reused. AI tools are convenient, but they're still owned by companies with their own incentives. And user privacy is not typically near the top of the priority list, despite what most AI chatbot developers claim. So be smart, be skeptical, and above all, be careful.

[21]

Meta Might Be Trying to Fix the Privacy Problem of Its AI App

Meta says nothing is shared on Discover unless users choose to post

The Meta AI app, the company's artificial intelligence (AI) platform with a social experience, came under fire last week after several users and reports highlighted that its Discover feed was showing a multitude of seemingly personal conversations. It appears that the social media giant is now taking steps to rectify this by making users more aware of the consequences of hitting the "Share" button. When sharing a post on the social feed, a warning message appears informing users that the content will be visible to everyone. First reported by Business Insider, the Meta AI app now shows a warning message whenever users try to share a conversation publicly. Gadgets 360 staff members were also able to spot the warning message when attempting the same. This might be one of the guardrails the company is implementing to stop users from unknowingly posting personal information. The warning message appears after users tap the "Share" button located on the top right corner. A full-page pop-up window appears with the message "Prompts that you post are public and visible to everyone. Your prompts may be suggested by Meta on other Meta apps." The message also tells users to avoid sharing personal or sensitive information. A "Manage Settings" hyperlink appears next to the message. It takes users to a Settings page where they can choose whether their prompts can be suggested on Facebook and Instagram. Once users see this message, they need to tap the middle of the screen once to activate the "Post to feed" button. After that, they can share the post to the Discover feed. Notably, some Gadgets 360 staff members reported seeing this message when sharing a post for the first time, but it apparently did not appear afterwards. We are now seeing this message appear more frequently. 
The Business Insider report also claimed to see more image-based posts and fewer text posts, which might also be part of Meta's efforts to curb posts of a personal nature. However, we were not able to verify this claim. Also, image posts on the Meta AI app, especially those that contain an AI edit of a real picture, come with privacy concerns of their own. As reported last week, these posts also include the original, unedited image in the description, which can be copied or saved by anyone.

[22]

Sensitive Private Chats Said to Be Appearing on Meta App's Discovery Feed

Meta provides a Share button on top of the chat screen to share a post

The Meta AI app's Discover feed is reportedly being flooded with users' private conversations and requests, unintentionally shared with the public. Multiple reports have highlighted instances of text chats and image prompts that appear deeply personal, suggesting that users may be accidentally posting them to the app's social feed. The appearance of these public posts has raised concerns among netizens, and experts are reportedly questioning whether the company is doing enough to protect its user base's privacy. A TechCrunch report claims to have spotted posts on the Meta AI app's Discover feed where "people ask for help with tax evasion," ask about "how to write a character reference letter for an employee facing legal troubles," and even ask what to do if there are "a bunch of red bumps on my inner thigh." The publication, which also posted screenshots of similar public posts, is not alone. Kylie Robinson, Senior Correspondent at Wired and author of the report, claimed to have seen posts from users asking, "Do younger women like older white men?" among others. Further, Calli Schroeder, Senior Counsel for the Electronic Privacy Information Center, told Wired in an interview that she has come across posts where people are "sharing medical information, mental health information, home addresses, even things directly related to pending court cases." While Gadgets 360 staff members have not seen such outrightly private posts, we have spotted odd posts in which people shared selfies that were originally part of a request to the chatbot to make a minor edit. Some of these pictures include minors with a changed hair colour. Additionally, several X (formerly known as Twitter) users have also posted screenshots of such private posts on the app's social feed. It cannot be said with certainty that in all of these instances, people were unaware that they were making a public post. 
However, the nature of the queries and images does raise that suspicion. Currently, publicly sharing a post on Meta AI is a two-step process after a user has had a conversation with the chatbot. A Share button appears at the top of the chat interface; tapping it takes the user to a new page titled "Preview." Here, the AI generates an editable title for the post, followed by the query and its response, with a large "Post" button at the bottom. However, there is nothing else to tell users that tapping the Post button makes the entire conversation public and visible to others. While it is easier for those fluent with modern apps to understand what the Post button means, those who are not tech-savvy can mistakenly tap it and not realise that they have shared a conversation. At the time of launching the Meta AI app, the tech giant had said, "And as always, you're in control: nothing is shared to your feed unless you choose to post it." However, the reported incidents raise the question of whether the two-step process is enough to make people aware of the consequences of tapping the "Post" button.

[23]

With Meta AI App, You Can 'Discover' People's Wildest Thoughts -- But Are You Unknowingly Sharing Yours?