Meta's AI Demo Fails Spectacularly, Raising Questions About AI's Practical Use

2 Sources

[1]

You Don't Need AI to Cook Sauce -- But Meta Still Wants You to Try

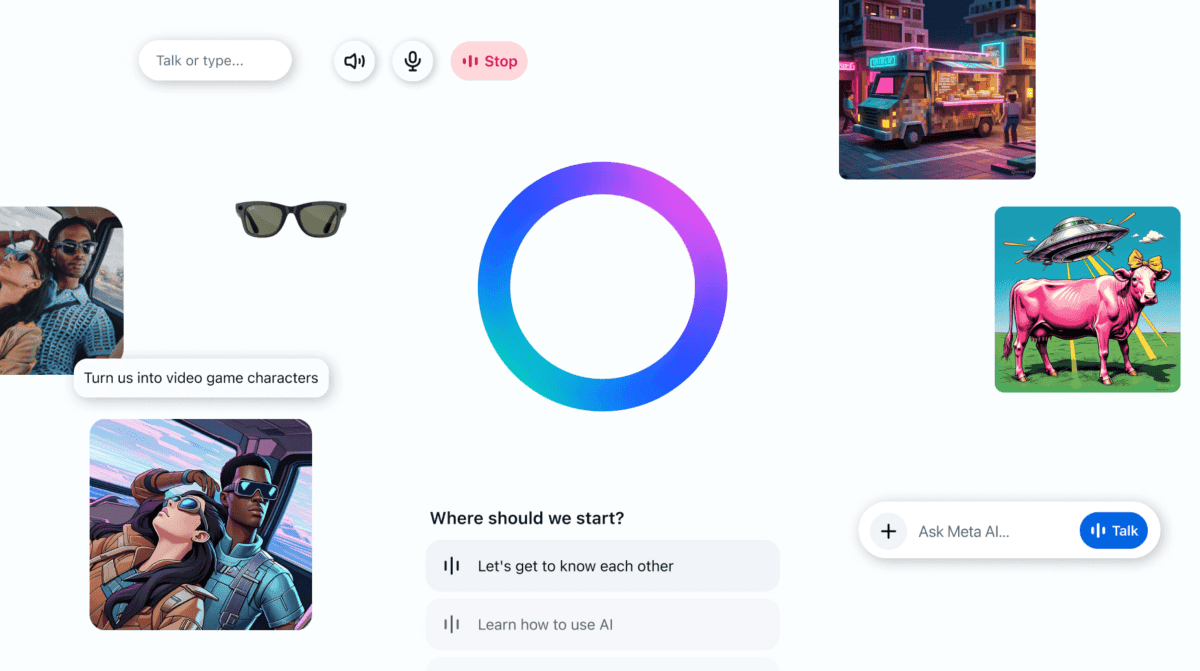

I don't want to seem anti-AI. I really do think it can be a valuable tool for a lot of tasks. But almost every time I see it being shown off in consumer electronics, it isn't presented as a tool but as a do-everything interface that defines how you interact with whatever device uses it. That's how Meta frames its AI, and that isn't the best use of the technology. Meta itself proved that when its live demo of Meta AI on its newest Meta Ray-Ban Smart Glasses completely fell apart. If you didn't see it live, the demo was supposed to show off how Meta AI on smart glasses can be useful in daily tasks like cooking. The demonstrator wore them in front of a counter with ingredients on it, and asked Meta AI how to make Korean barbecue sauce. The idea was that the cameras on the glasses would let the AI identify what ingredients were available and use that information to walk him through the process. It started out promising, with the voice response complimenting him on what he had ready and telling him what ingredients are common in Korean barbecue flavors. The demonstrator then asked the glasses how to get started. Then Meta AI started telling him what to do now that he mixed the main ingredients together for the sauce base. Except, he hadn't mixed the sauce base yet. The demonstrator then asked the AI for the first step. Once again, Meta AI told him what to do now that his sauce base was ready. Then the camera cut back to Meta CEO Mark Zuckerberg: "The irony of the whole thing is that you spend years making the technology, and then the Wi-Fi of the day kind of catches you." The AI failed the demo.
By the time the presentation moved on to Zuckerberg dealing with video call and control band glitches (which I won't fault AI for, but they were still very funny), I could have gotten the information to make Korean barbecue sauce and whipped up a batch in my kitchen. And honestly? If I started at the same time as that live demo without AI or a specific recipe on hand, I could have still made the sauce faster than if the AI actually worked. I could have even used a smart device to do it. The best-case scenario for Meta's Live AI in that cooking demo was still a completely unnecessary layer of automation between the user and the information needed to perform the task. If you want to cook something new, you need some kind of recipe. Maybe it's a set of formal instructions or maybe it's a loose outline of ingredients and cooking times, but you need that base. They're really easy to find, whether you search for them yourself, use an app, or watch a cooking video on YouTube. The information is available, and just as importantly, it's (usually) tested by whoever wrote or recorded it. It doesn't need to be synthesized completely new and processed in the cloud, step by step. Speaking of step-by-step, that's one of the biggest problems of AI guidance for cooking. Meta AI was supposed to walk the demonstrator through making the sauce that way. It was going to tell him how to start, by gathering the ingredients. Then it was going to tell him to mix the right ones to make the base. Then, after making the base, it was going to tell him what to do next. It failed at telling him how to mix the base, and that information was never made available to him. When you follow a recipe, you read the entire recipe first, then you go through the steps. This lets you know what you should be prepared to do, when the individual ingredients and tools will be necessary, and how long it will take. Meta AI wouldn't have given him that information even if it did work. 
It was going to be just voice prompts walking him through the process as he went. That's not good for cooking or learning how to cook. The only useful element the Meta AI demo could have shown would have been how to cook using the ingredients on hand, identified by the glasses. Even then, I wouldn't trust an AI to give me good suggestions on substitutions or how to cook a dish without certain ingredients. Those tricks are hard enough to get right if you're an experienced chef, and expecting an AI to have the right insight on what any change will do to flavor and texture is a big gamble. I can see how you could use AI for cooking. I've seen it successfully used in the past not just on phones and tablets, but on smart displays with voice assistants. It's simple: "Alexa, show me a recipe for what I want to make." And a recipe will show up on the screen, maybe with a video or voice instructions. And yes, voice assistants count as AI. The Meta AI demo offers none of that information. No displayed lists of ingredients or steps to look over before you start. No reference material to use the same recipe on your own later. No choice in whose recipe you use, because the AI is synthesizing it based on the information in its knowledge base. You ask it for something, and it gives you that thing in a single, generated form. In automating the process to be more instantly convenient, it keeps a lot of information and control out of the user's hands. That's what most of these current AI systems have been trying to do: cutting out a huge part of any given process in the name of streamlining it through technology. Maybe the Meta Ray-Ban Display will give that information. It isn't just voice-controlled, but has a graphical interface with direct interactivity via its Meta Neural Band. Perhaps it will let you treat Meta AI like Alexa, having it simply bring up a recipe you can read through and save for later. 
I'd be mildly surprised if it does, though, since the Meta Ray-Ban Display isn't just the company's newest smart glasses, but its newest "AI glasses." Meta is pushing its AI not as a tool built into devices, but as the heart that drives those devices. Automation and convenience are great, but not if it means taking choice out of people's hands. I don't mind asking AI for help cooking if I can choose the recipe and read it myself. I don't mind asking AI to play music for me if I can choose what it plays. I don't mind asking AI for answers if I can clearly see and go to its sources. But when it cuts out everything I would otherwise have to let me fully control my experience, that's a price that's too hard to pay. And even if it wasn't, it still means relying on network-connected, cloud-based technology that can simply break and leave you with nothing to work with. If Meta Connect proved anything, it proved that.

[2]

Facebook CEO Demos New Meta AI And It Couldn't Have Gone Worse

A recipe for disaster, asking an AI to help with Korean steak sauce live on stage

Mark Zuckerberg took the stage at Meta Connect 2025 to show off the corporation's latest non-AI. The tool, designed to spy on the objects in your home and send that data back to Facebook for advertising reasons...wait, sorry, I mean to answer questions while responding to your current environment, was asked to help Mark's pretend-friend Jack Mancuso create a new sauce for his sandwich. Even in the heavily rehearsed and pre-scripted demonstration, the AI immediately shit the bed, leaving Mancuso and Zuckerberg to spuriously blame the wifi for the obvious technological fail. The AI being hyped right now is not AI at all. It's really important that we all acknowledge this, that the world is selling itself a multi-billion dollar lemon, predictive text engines that have nothing intelligent about them. They're giant sorting machines, which is why they're so good at identifying patterns in scientific research, and could genuinely advance medicine in wonderful ways. But what they cannot do is think, and as such, it's a collective mass-delusion that these systems have any use in our day-to-day lives beyond plagiarism. Here's the set-up. Jack "Chef Cuso" Mancuso (the not-chef YouTuber and seller of knives and seasonings) is stood on camera in a kitchen set, before a table of ingredients capable of being put together for pretty much one purpose. Zuckerberg fake-ass pretends to think up an idea on the spot for what could be cooked up, "Maybe a steak sauce, maybe a Korean-inspired thing?" "Yeah, let's try it. It's not something I've made before," says Mancuso, remembering his script, "so I could definitely use the help." In the best case scenario for this Meta Connect demo, the Meta "AI" would scan the table of ingredients in front of Mancuso, and suggest a sauce he could make from the items it recognizes.
The hilariously clearly-labeled bottles of "Sesame Oil" and "Soy Sauce," with the words squarely facing the camera, sit next to (oh my goodness, would you look at that) a jar of Cuso-branded seasoning! There's also some spring onions, a couple of lemons, two garlic cloves, salt and pepper and maybe a potato and a bottle of honey? It's all next to a very sad-looking steak sandwich. So, Meta AI, what can we possibly do? "Hey Meta, start Live AI," says Mancuso, just like in rehearsal, before a very long and awkward pause. "Starting Live AI" the robot voice eventually intones, before adding the unimprovable words, "I love this set-up you have here, with soy sauce and other ingredients." Thanks! It loves it! That's so thoughtful and demonstrative that it was able to scan the words in the image. "How can I help?" "Hey, could you help me make a Korean-inspired steak sauce for my steak sandwich here?" asks Mancuso, stood in front of the exact ingredients used to make Korean steak sauce according to this online recipe. Oh, except for a pear. That recipe I just found wants a pear. He doesn't have a pear. Interesting. "You can make a Korean-inspired steak sauce using soy sauce, sesame oil..." begins Meta AI, before Mancuso interrupts to stop the voice listing everything that happens to be there. "What do I do first?" he demands. Meta AI, clearly unimpressed by being cut off, falls silent. "What do I do first?" Mancuso asks again, fear entering his voice. And then the magic happens. "You've already combined the base ingredients, so now grate a pear to add to the sauce." Mancuso looks like a rabbit looking into the lights of an oncoming juggernaut. He now only has panic. There's nothing else for it, there's only one option left. He repeats his line from the script for the third time. "You've already combined the base ingredients, so now grate the pear and gently combine it with the base sauce."
Mancuso seems to snap out of his stupor and makes the hilariously silly claim, "Alright, I think the wifi might be messed up. Sorry, back to you Mark." There's louder audience laughter. "It's all good!" says Zuck, as he hears billions of dollars flushing down a toilet. "It's a...the irony of the whole thing is you spend years making technology and then the wifi at the, er, day kinda...catches you." Yeah, the wifi. You know how a bad wifi connection causes an AI to skip steps in a recipe. We've all been there. Same reason I lost that game of pool: the damn wifi in the bar. Rather than because of wifi, the reason this happened is because these so-called AIs are just regurgitating information that has been parsed from scanning the internet. It will have been trained on recipes written by professional chefs, home cooks and cookery sites, then combined this information to create something that sounds a lot like a recipe for a Korean sauce. But it, not being an intelligence, doesn't know what Korean sauce is, nor what recipes are, because it doesn't know anything. So it is that it can only make noises that sound like the way real humans have described things. Hence it having no way of knowing that ingredients haven't already been mixed, just the ability to mimic recipe-like noises. The recipes it will have been trained on will say "after you've combined the ingredients..." so it does too. The words themselves do not mean anything to the LLM. What's so joyous about this particular incident isn't just that it happened live on stage with one of the world's richest men made to look a complete fool in front of the mocking laughter of the most non-hostile audience imaginable... Oh wait, it largely is that. That's very joyous. But it's also that it was so ludicrously over-prepared, faked to such a degree to try to eliminate all possibilities for error, and even so it still went so spectacularly badly.
From Zuckers pretending to make up, "Oh, I dunno, picking from every possible foodstuff in the entire universe, what about a...ooh! Korean-inspired steak sauce!" for a man stood in front of the base ingredients of a Korean-inspired steak sauce, to the hilarious fake labels with their bold Arial font facing the camera, it was all clearly intended to force things to go as smoothly as possible. We were all supposed to be wowed that this AI could recognize the ingredients (it imagined a pear) and combine them into the exact sauce they wanted! But it couldn't. And if it had, it wouldn't have known the correct proportions, because it would have scanned dozens and dozens of recipes designed to make different volumes of sauce, with contradictory ingredients (the lack of both gochujang and rice wine vinegar, presumably to try to make it even simpler, seems likely to not have helped), and just approximated based on averages. Plagiarism on this scale leads to a soupy slop. You know what Cuso could have done? He could have Googled a recipe, and visited a trusted site. Or, and sorry to be quite so old-fashioned, looked in a recipe book! Hell, he could have looked up instructions on Facebook. Oh, and here's Jack Mancuso making a Korean-inspired steak sauce in 2023.

Meta's live demonstration of its AI-powered smart glasses at Meta Connect 2025 went awry, highlighting concerns about the practicality and reliability of AI in everyday tasks like cooking.

Meta's AI Demo Disaster

At Meta Connect 2025, CEO Mark Zuckerberg's attempt to showcase the company's latest AI technology in smart glasses turned into a comedic disaster, raising serious questions about the practical applications of AI in everyday tasks [1][2]. The demonstration, featuring YouTuber Jack "Chef Cuso" Mancuso, was designed to highlight how Meta's AI could assist in cooking by recognizing ingredients and providing step-by-step instructions for making Korean barbecue sauce [1][2]. However, the AI failed spectacularly, unable to provide coherent instructions and skipping crucial steps in the recipe [1][2].

The Promise vs. The Reality

Meta's AI was supposed to leverage the smart glasses' cameras to identify available ingredients and guide the user through the cooking process [1]. In theory, this technology could offer hands-free assistance for various tasks. However, the demo revealed significant limitations:

- Lack of context awareness: The AI assumed steps had been completed when they hadn't, demonstrating an inability to understand the current state of the task [1][2].
- Poor step-by-step guidance: Instead of providing clear, sequential instructions, the AI jumped ahead in the recipe, confusing the demonstrator [1][2].
- Limited practical value: Critics argue that traditional recipes or cooking videos might be more effective and efficient for learning new dishes [1].

Broader Implications for AI Technology

This incident has sparked a broader discussion about the current state and practical applications of AI:

- Pattern recognition vs. true intelligence: Some experts argue that current AI systems are essentially advanced pattern recognition tools rather than truly intelligent entities [2].
- Overreliance on AI: The demo highlights the potential pitfalls of relying too heavily on AI for tasks that may be better suited to traditional methods or human expertise [1].
- Privacy concerns: The use of AI-powered cameras in smart glasses raises questions about data collection and user privacy [2].

Industry Reactions and Future Outlook

The failed demonstration has led to both criticism and skepticism within the tech industry. Some view it as a setback for Meta's AI ambitions, while others see it as a reality check for the entire field of consumer AI applications [1][2].

As companies continue to invest heavily in AI technology, this incident serves as a reminder of the challenges in developing truly useful and reliable AI systems for everyday tasks. It also underscores the importance of managing public expectations and being transparent about the current limitations of AI technology [1][2].

Summarized by Navi
