Meta Unveils SAM 2: A Revolutionary AI Model for Video Object Manipulation

3 Sources


[1]

Meta Unveils SAM 2 AI Model With Powerful Video Editing Capabilities - MySmartPrice

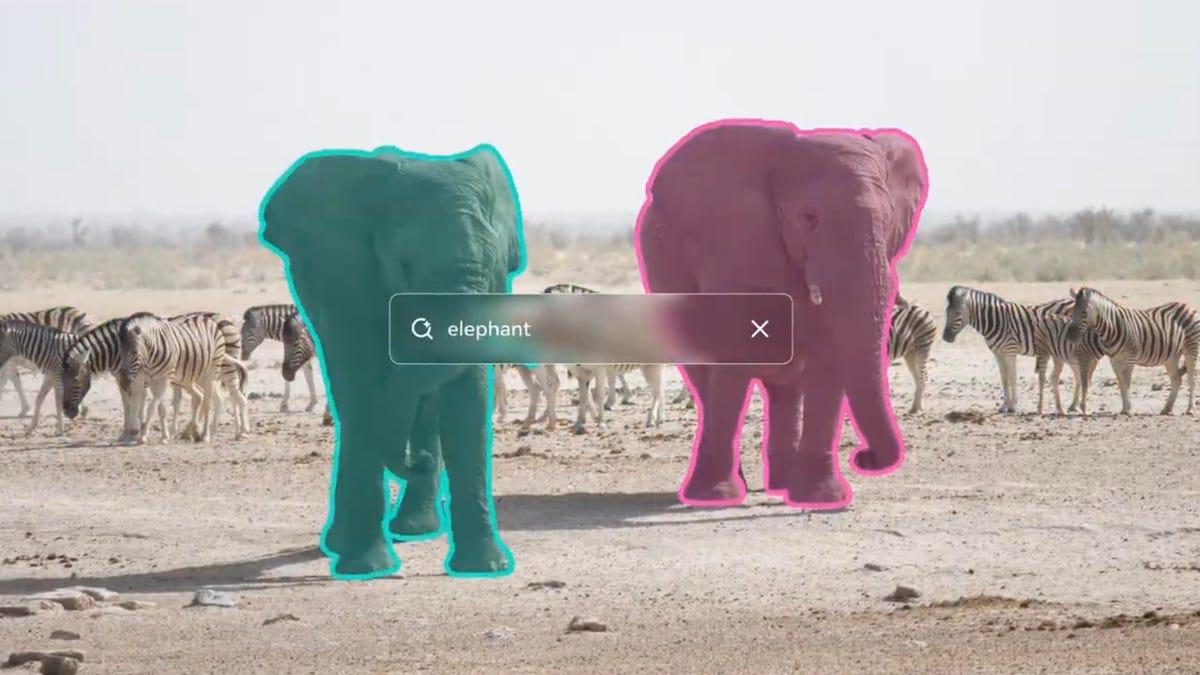

The model is expected to be made available in Instagram's video editing tools, which are currently powered by SAM 1. Meta has unveiled its new AI model, Segment Anything Model 2, or SAM 2. It can track a particular object or subject in a video in real time and lets users add visual effects to it. The company has made the model open source, allowing developers to build their own video editing tools. Here are the details of Meta's SAM 2 AI model.

SAM 2 uses object segmentation to identify the pixels that belong to the main subject of a video. It automatically places that subject on its own layer, so users can apply special visual effects to it while the background of the video stays unchanged. In traditional video editing, this technique is known as masking.

Here's an example of how SAM 2 works. A sample video shows a person riding a skateboard. SAM 2 identifies the person's exact outline and separates it from the background. Special effects can then be added to highlight only the person or to draw an object-tracking pattern around them. The whole process takes only seconds and runs almost in real time.

Reproducing the same effects with manual, legacy video editing takes far more effort, professional-grade skills, and advanced software capable of such masking. SAM 2 largely automates the process. This mirrors what happened with background removal, which was extremely difficult before AI but is now easy with readily available tools like remove.bg.

SAM 2 will be integrated across Meta's family of apps, such as Instagram. Instagram's Backdrop and Cutouts features previously used the first version of SAM and will soon be upgraded to SAM 2. Users may soon be able to edit videos with AI directly in the Instagram app, but Meta has not confirmed any details yet.
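The masking idea can be made concrete with a small sketch. It shows what happens once a segmentation model has produced a subject mask: an effect changes only the masked pixels, and the background passes through untouched. It assumes a grayscale frame held as plain Python lists with a same-shaped 0/1 mask. `highlight_subject` and `boost` are hypothetical names, and real editors apply far richer effects to colour video.

```python
# Minimal masking sketch (not Meta's code). Assumes a grayscale frame stored as
# a list of pixel rows (0-255) and a same-shaped binary mask, where 1 marks the
# subject pixels a segmentation model would return.

def highlight_subject(frame, mask, boost=60):
    """Brighten subject pixels (mask == 1); background pixels pass through unchanged."""
    if len(frame) != len(mask) or any(
        len(f_row) != len(m_row) for f_row, m_row in zip(frame, mask)
    ):
        raise ValueError("frame and mask must have the same shape")
    return [
        [
            min(255, pixel + boost) if inside else pixel  # clamp to the 8-bit range
            for pixel, inside in zip(f_row, m_row)
        ]
        for f_row, m_row in zip(frame, mask)
    ]


# A 3x3 frame whose middle column (rows 1-2) is the "subject".
frame = [
    [50, 50, 50],
    [50, 120, 50],
    [50, 220, 50],
]
mask = [
    [0, 0, 0],
    [0, 1, 0],
    [0, 1, 0],
]
print(highlight_subject(frame, mask))
# [[50, 50, 50], [50, 180, 50], [50, 255, 50]]
```

Keeping the effect and the mask separate is the point of the technique: the same mask can drive any per-pixel effect without touching the background.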
Meta CEO Mark Zuckerberg also said that SAM 2 has applications in medicine. It could be used to identify bacteria, viruses, and other biological elements to improve medical diagnosis. However, Meta has not disclosed any further details yet, possibly because of pending regulatory approvals.

Meta has made SAM 2 open source under the Apache 2.0 license. Software developers can use the model to build video editing tools with all of SAM 2's object tracking and segmentation functions. The release also includes provisional access to SA-V, the dataset on which SAM 2 was trained. SA-V contains 4.5 times more videos and 53 times more annotations than the largest existing video segmentation dataset. Its more than 600,000 masklets serve as training data, helping the model identify the subject of a video more efficiently.

Meta has not said whether SAM 2 will come to its Meta AI chatbot. We can expect it to appear first in Instagram's and Facebook's video editing tools.
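SA-V is measured in "masklets". Roughly, a masklet is one object's mask followed across the frames of a video. The sketch below shows one way such a record could be held in memory and queried, for example to find which frames the object is visible in, or the per-frame bounding box that an object-tracking overlay would follow. The class, its fields, and the list-of-rows mask layout are all hypothetical and do not describe SA-V's actual file format.

```python
# Hypothetical data structure for a masklet (one object's masks across the
# frames of a video). Names and layout are illustrative, not the SA-V format.
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Mask = List[List[int]]  # rows of 0/1 values; 1 = pixel belongs to the object


@dataclass
class Masklet:
    object_id: int
    masks: Dict[int, Mask] = field(default_factory=dict)  # frame index -> mask

    def add_frame(self, frame_index: int, mask: Mask) -> None:
        self.masks[frame_index] = mask

    def visible_frames(self) -> List[int]:
        """Frames where the object covers at least one pixel (e.g. not occluded)."""
        return sorted(i for i, m in self.masks.items() if any(any(row) for row in m))

    def bounding_box(self, frame_index: int) -> Optional[Tuple[int, int, int, int]]:
        """(top, left, bottom, right) of the object in a frame, or None if absent."""
        mask = self.masks.get(frame_index)
        if mask is None:
            return None
        cells = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
        if not cells:
            return None
        rows = [r for r, _ in cells]
        cols = [c for _, c in cells]
        return (min(rows), min(cols), max(rows), max(cols))


skater = Masklet(object_id=1)
skater.add_frame(0, [[0, 1], [0, 1]])
skater.add_frame(1, [[0, 0], [0, 0]])  # object hidden in this frame
skater.add_frame(2, [[1, 1], [0, 0]])
print(skater.visible_frames())   # [0, 2]
print(skater.bounding_box(2))    # (0, 0, 0, 1)
```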

[2]

Meta's AI video model Segment Anything Model 2 AI lets you add special effects to objects in a video

Meta has introduced a new AI model called Segment Anything Model 2, or SAM 2, which it says can tell which pixels belong to a certain object in videos. The Facebook parent's original Segment Anything Model, released last year, helped in the development of Instagram features such as 'Backdrop' and 'Cutouts.' SAM 2 is meant for video, with Meta claiming that SAM 2 could "segment any object in an image or video, and consistently follow it across all frames of a video in real-time." Beyond social media and mixed reality use cases, Meta explained that its older segmentation model had also been used in oceanic research, disaster relief, and cancer screening.

"SAM 2 could also be used to track a target object in a video to aid in faster annotation of visual data for training computer vision systems, including the ones used in autonomous vehicles. It could also enable creative ways of selecting and interacting with objects in real-time or in live videos," said Meta in a blog post.

The social media company invited users to try out the model, which is being released under a "permissive" Apache 2.0 license. Meta CEO Mark Zuckerberg discussed the new model with Nvidia CEO Jensen Huang, hailing its scientific applications, TechCrunch reported.

[3]

Meta SAM 2 computer vision AI model shows impressive results

Meta has launched SAM 2, an advanced computer vision model that significantly improves real-time video segmentation and object detection. It is faster and more accurate than its predecessor, which makes it a potential game-changer for developers and researchers working on video editing and analysis projects. Building on the original SAM, the model offers greater efficiency and versatility across a range of applications. Meta has released the code and weights under the Apache 2.0 license, aiming to foster accessibility and innovation in the computer vision field.

SAM 2 is the successor to Meta's original Segment Anything Model (SAM). It focuses on real-time video segmentation and object detection, delivers substantial improvements in speed and accuracy, and is designed to handle complex tasks more efficiently.

SAM 2 excels at real-time inference, reaching up to 44 frames per second. You can prompt the model for specific segmentation tasks and get precise results quickly. The model is six times faster than the original SAM, thanks to its improved architecture and optimized components.

These capabilities let SAM 2 tackle a wide range of computer vision challenges. Whether you need to track objects in videos, generate masks automatically, or apply creative effects, SAM 2 provides the tools and performance to do so. The model features a simplified architecture with unified components, which improves its overall performance.
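To put the "up to 44 frames per second" figure in perspective, some simple arithmetic helps. It assumes 44 fps is sustained throughput, although actual speed will vary with hardware and model size. Under that assumption the model gets about 22.7 ms per frame, keeps pace with 30 fps footage but not 60 fps, and needs roughly 6.8 seconds to segment a 10-second, 30 fps clip. The function names are illustrative only.

```python
# Back-of-the-envelope arithmetic, assuming the quoted "up to 44 frames per
# second" is sustained throughput; real speed depends on hardware and model size.

MODEL_FPS = 44  # peak figure quoted in the article


def per_frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at a given frame rate."""
    return 1000.0 / fps


def keeps_up(model_fps: float, video_fps: float) -> bool:
    """True if the model segments frames at least as fast as they arrive."""
    return model_fps >= video_fps


def seconds_to_process(clip_seconds: float, video_fps: float, model_fps: float) -> float:
    """Time to segment every frame of a clip at the model's frame rate."""
    return clip_seconds * video_fps / model_fps


print(round(per_frame_budget_ms(MODEL_FPS), 1))          # 22.7 ms per frame
print(keeps_up(MODEL_FPS, 30), keeps_up(MODEL_FPS, 60))  # True False
print(round(seconds_to_process(10, 30, MODEL_FPS), 1))   # 6.8 s for a 10 s, 30 fps clip
```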
A key technical enhancement is temporal memory, which allows the model to maintain context over time. This feature is crucial for tasks that require continuous tracking and segmentation in video streams. Together with SAM 2's other improvements, these technical advances explain its strong performance and make it a powerful tool for a wide range of computer vision applications.

Meta has made SAM 2 available as an open-source project under the Apache 2.0 license. The release includes the model weights and a comprehensive dataset of 51,000 videos and 600,000 masklets. By providing these resources, Meta aims to foster innovation and collaboration within the computer vision community. Because SAM 2 is open source, developers and researchers can build on its capabilities and adapt it to their specific needs, whether for a commercial project or academic research.

SAM 2's capabilities extend to creating datasets for training specialized models. You can use it to annotate data, track objects in videos, and apply creative effects, and its versatility makes it well suited to integration into video editing and analysis software.

Meta has provided practical demonstrations of SAM 2's capabilities. These include tracking objects in videos, such as a ball and a dog, and applying effects like pixelation and emoji overlays. You can also upload your own videos and watch the model track and segment objects with impressive accuracy.
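The article does not explain how temporal memory works internally. Meta's SAM 2 technical report describes a memory bank that keeps information from a limited number of recent frames and from the frames the user prompted. The sketch below imitates only that bookkeeping, with placeholder strings where the real model would store learned features. `FrameMemory`, `max_recent`, and `max_prompted` are hypothetical names, and the attention step that actually uses the memory is omitted.

```python
# Simplified sketch of a temporal memory for video segmentation (hypothetical
# names; not SAM 2's code). It keeps entries for the few most recent frames plus
# the frames the user prompted, and returns them as context for the next frame.
from collections import deque


class FrameMemory:
    def __init__(self, max_recent: int = 6, max_prompted: int = 2):
        # A deque with maxlen drops its oldest entry once it is full (FIFO).
        self.recent = deque(maxlen=max_recent)
        self.prompted = deque(maxlen=max_prompted)

    def add(self, frame_index: int, features, prompted: bool = False) -> None:
        target = self.prompted if prompted else self.recent
        target.append((frame_index, features))

    def context(self):
        """Stored (frame_index, features) pairs, ordered by frame index."""
        return sorted([*self.prompted, *self.recent], key=lambda entry: entry[0])


memory = FrameMemory(max_recent=2, max_prompted=1)
memory.add(0, "features-of-clicked-frame", prompted=True)
for i in range(1, 5):
    memory.add(i, f"features-of-frame-{i}")
print([i for i, _ in memory.context()])  # [0, 3, 4]
```

The user's prompted frame survives in memory even after many later frames, while ordinary frames rotate out. This is one way a tracker can stay anchored to what the user originally selected.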
These demonstrations show SAM 2's real-world applicability and give developers a clearer sense of how the model could solve specific computer vision challenges in their own projects. Meta has also equipped developers with example notebooks for automatic mask generation and video segmentation. The notebooks let you prompt the model with points or bounding boxes to obtain precise segmentations. They serve as a starting point for beginners and experienced practitioners alike to explore and experiment with the model's features.

The release of SAM 2 promotes accessibility and innovation in computer vision. Its open-source license supports both commercial and research applications, encouraging the development of new tools and technologies. As demand for intelligent video analysis grows across industries, SAM 2 positions itself as a key enabler of new solutions, with potential applications ranging from autonomous vehicles to smart surveillance systems. To learn more, visit the official Meta website.
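Point and box prompts constrain which pixels end up in the mask. The toy stand-in below illustrates this. It is deliberately not SAM 2, which uses a neural network and ships its own `sam2` package whose API is not reproduced here. Instead it swaps in classic region growing (a breadth-first flood fill): starting from a clicked pixel, it collects connected pixels of similar brightness, optionally confined to a box. `segment_from_prompt` and `tolerance` are hypothetical names.

```python
# Toy stand-in for a promptable segmenter (not SAM 2): grow a region from a
# foreground click over pixels of similar brightness, optionally confined to a
# bounding box. Function and parameter names are hypothetical.
from collections import deque


def segment_from_prompt(image, point, box=None, tolerance=10):
    """Return a 0/1 mask grown from `point`; `box` is (top, left, bottom, right)."""
    rows, cols = len(image), len(image[0])
    top, left, bottom, right = box if box is not None else (0, 0, rows - 1, cols - 1)
    # Clamp the box to the image so indexing never goes out of range.
    top, left = max(top, 0), max(left, 0)
    bottom, right = min(bottom, rows - 1), min(right, cols - 1)
    r0, c0 = point
    if not (top <= r0 <= bottom and left <= c0 <= right):
        raise ValueError("the point prompt must lie inside the box prompt")
    seed = image[r0][c0]
    mask = [[0] * cols for _ in range(rows)]
    mask[r0][c0] = 1
    queue = deque([point])
    while queue:  # breadth-first flood fill over 4-connected neighbours
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (
                top <= nr <= bottom
                and left <= nc <= right
                and not mask[nr][nc]
                and abs(image[nr][nc] - seed) <= tolerance
            ):
                mask[nr][nc] = 1
                queue.append((nr, nc))
    return mask


image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 10, 10],
]
print(segment_from_prompt(image, point=(0, 2)))
# [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 0, 0]]
print(segment_from_prompt(image, point=(0, 0), box=(0, 0, 1, 1)))
# [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0]]
```

Clicking the dark region without a box would also pick up the bottom row. The box prompt is what confines the selection to the top-left object.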


Meta has introduced SAM 2, an advanced AI model that can identify and manipulate objects in videos. This technology represents a significant leap in computer vision and video editing capabilities.

Meta's Groundbreaking SAM 2 AI Model

Meta, the parent company of Facebook, has unveiled its latest artificial intelligence breakthrough: the Segment Anything Model 2 (SAM 2). This advanced AI model represents a significant evolution in computer vision technology, particularly in the realm of video processing and manipulation [1].

Enhanced Video Object Manipulation

SAM 2 builds upon its predecessor by introducing remarkable capabilities for video editing. The model can now identify and track objects within videos, allowing users to apply effects to or manipulate specific elements throughout the footage. This functionality opens up new possibilities for content creators and video editors, streamlining complex tasks that previously required extensive manual work [2].

Technical Advancements and Efficiency

The new AI model boasts impressive technical specifications. SAM 2 is notably more efficient than its predecessor: it runs in real time at up to 44 frames per second and is six times faster than the original SAM. These improvements make the technology more accessible and practical for a wider range of applications [3].

Versatile Applications

SAM 2's capabilities extend beyond simple object recognition. The model can perform intricate tasks such as identifying specific body parts or distinguishing between different types of objects within a video. This level of detail and accuracy makes SAM 2 potentially valuable across various industries, from entertainment and advertising to security and surveillance [1].

Ethical Considerations and Future Implications

While the technology promises exciting possibilities, it also raises important questions about privacy and the potential for misuse. As AI models like SAM 2 become more sophisticated, there is a growing need for discussions around ethical guidelines and responsible implementation of such technologies [2].

Open-Source Approach

Meta has chosen to make SAM 2 open source, following the approach taken with the original SAM model. This decision allows researchers and developers worldwide to access, study, and build upon the technology. By fostering collaboration and transparency, Meta aims to accelerate advancements in AI and computer vision [3].

Impact on Content Creation

The introduction of SAM 2 is expected to have a significant impact on the content creation industry. Video editors and visual effects artists may find their workflows dramatically simplified, potentially leading to more creative and innovative video content. Additionally, the technology could democratize advanced video editing techniques, making them accessible to a broader range of creators [1].

References

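Summarized by Navi

[1] Meta Unveils SAM 2 AI Model With Powerful Video Editing Capabilities - MySmartPrice

[2] Meta's AI video model Segment Anything Model 2 AI lets you add special effects to objects in a video

[3] Meta SAM 2 computer vision AI model shows impressive results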
