Microsoft's OneDrive Introduces AI-Powered Facial Recognition with Controversial Limitations

4 Sources


[1]

Microsoft OneDrive Limits How Often You Can Adjust Facial-Recognition Settings

Microsoft has been experimenting with facial-recognition tech in OneDrive to help you group and search your photos by person, but it's limiting the number of times you can turn it on and off.

As Slashdot reports, the feature is enabled by default for those with early access. If you get it, you'll see an update in the privacy section of the app that says "OneDrive uses AI to recognize faces in your photos." (Its support page still says it's "coming soon.")

"Microsoft collects, uses, and stores facial scans and biometric information from your photos through the OneDrive app for facial grouping technologies," the support page says. "This helps you quickly and easily organize photos of friends and family."

Face groupings are not public, even if you share a photo or album. "Microsoft does not use any of your facial scans and biometric information to train or improve the AI model overall," it says. When asked why this feature is opt-out, a spokesperson told Slashdot that "Microsoft OneDrive inherits privacy features and settings from Microsoft 365 and SharePoint, where applicable."

One puzzling restriction: Microsoft will only let you toggle the feature on and off three times per year. When Slashdot asked about that, the company declined to comment. However, the restriction does not appear to be brand new. A screenshot shared on Jan. 1 on the Microsoft forums includes the toggle that says, "You can only turn off this setting 3 times a year." So it seems to be generating attention now that more people are getting access.

On its support page, Microsoft notes that "When you turn off this feature in your OneDrive settings, all facial grouping data will be permanently removed within 30 days." So it likely doesn't want to devote server power to deleting facial grouping data over and over.
Google Photos has had a similar feature, known as Face Grouping, since 2015; it works for both people and animals, and it does not limit how many times you can turn it on or off. Apple Photos can also identify people and pets.

To see if you're part of the OneDrive preview, head to Settings > Privacy and permissions. Under Features, you'll find an option called People section. Toggle it off, and OneDrive will no longer use its AI to monitor your photos. Microsoft Photos previously had a face-grouping feature, but it was removed earlier this year.

[2]

Microsoft previews People grouping in OneDrive photos

Microsoft's OneDrive is increasing the creepiness quotient by using AI to spot faces in photos and group images accordingly. Don't worry, it can be turned off, three times a year.

This writer has been enrolled in a OneDrive feature on mobile that groups photos by people. We're not alone: others have also reported it turning up on their devices. According to Microsoft, the feature is coming soon but has yet to be released, and it's likely to send a shiver down the spines of privacy campaigners.

It relies on users telling OneDrive whose face appears in a given image, and will then create a collection of photos based on the identified person. Obviously, user interaction is required, and asking a user to identify faces in an image is hardly innovative. However, OneDrive's grouping of images based on an identified face is different.

According to Microsoft's documentation, a user can only change the setting to enable or disable the new People section three times a year. The Register asked Microsoft why only three times, but the company has yet to provide an explanation.

Unsurprisingly, Microsoft noted: "Some regions require your consent before we can begin processing your photos." We can imagine a number of regulators wanting to discuss this. It took until July 2025 before Microsoft was able to make Recall available in the European Economic Area (EEA), partly due to how data was processed.

However, it is the seemingly arbitrary three-times-a-year limit applied to the People section that is most concerning. Why not four? Why not as many times as a user wants? Turning it off will result in all facial grouping data being permanently removed within 30 days. There is also no indication of what Microsoft means by "three times a year." Does the year run from when the setting is first changed, from when face ID began, or from another date?

This feature is currently in preview and has yet to reach all users. While Microsoft is clear that it won't use facial scans or biometric data to train its AI models, and that grouping data can't be shared (for example, if a user shares a photo or album with another user), the idea of images being used in this way might make some customers uncomfortable.

[3]

Preview users have noticed OneDrive's AI-driven face recognition setting is opt-out, and can only be turned off 'three times a year'

Microsoft told Slashdot "privacy is built into all Microsoft OneDrive experiences," but those protective of their biometrics may disagree.

Is AI the future, or just an ecologically ruinous, dangerously huge financial bubble? That's hard to say, but it's certainly a part of Microsoft's future. On top of pushing Copilot as a non-negotiable part of the Microsoft 365 suite and reportedly mandating that its employees put AI to work, the tech giant is reportedly rolling out a new biometric collection setting that can only be turned off three times a year.

A Slashdot story yesterday relayed an editor's experience: after uploading a photo from his phone's local storage to Microsoft's OneDrive cloud storage, he noticed that the privacy and permissions page listed an opt-out "people section" feature wherein "OneDrive uses AI to recognize faces in your photos to help you find photos of friends and family." When he went to turn it off, a disclaimer stated: "You can only turn off this setting three times a year."

It doesn't sound great, but users are speculating about what might motivate the restriction. Slashdot commenter AmiMoJo replied, "There might be a more benign reason for it. In GDPR countries, if you turn it off they will probably need to delete all the biometric data ... if people toggle it too often, it's going to consume a large amount of CPU time."

Regardless, the choice to make it opt-out rather than opt-in is sure to raise eyebrows among privacy advocates. Speaking with Slashdot, Microsoft chose not to explain the reasoning behind the rule, but said "OneDrive inherits privacy features and settings from Microsoft 365 and SharePoint, where applicable."
The same article quoted Thorin Klosowski, a security activist at the Electronic Frontier Foundation, who said: "Any feature related to privacy really should be opt-in and companies should provide clear documentation so its users can understand the risks and benefits to make that choice for themselves."

I can't definitively say why the setting was rolled out this way, but it's hard not to feel like this is yet another surreptitious means for AI to corrode privacy standards and encroach where it's not always welcome. In a vacuum, it's thorny, but paired with the nascent prominence of Copilot, LLM chatbots, Microsoft's internal insistence on AI, and so on, it feels like another data point in a trend worthy of scrutiny.

[4]

Microsoft adds AI facial recognition to OneDrive, can only be disabled three times per year

Microsoft has rolled out an update for OneDrive that adds AI facial recognition to the digital storage service, specifically for photos. However, the feature reportedly can only be disabled three times per year.

The discovery comes from Slashdot, whose editor spotted Microsoft's warning after uploading an image from their smartphone's local storage to Microsoft's OneDrive file-hosting app. After the upload was complete, they went to the app's Privacy and Permissions section and found the "People Section" feature, along with the description "OneDrive uses AI to recognize faces in your photos." According to the description, OneDrive uses AI to recognize faces in photos to help users sift through their photo collections to find specific people, such as friends or family. Think of this feature as Google Photos' "People" search, but in OneDrive.

Probably the most concerning aspect of this feature is the warning, "You can only turn off this setting 3 times a year." Unfortunately, and unsurprisingly, Microsoft doesn't explain why users can only switch this feature on/off three times a year. If I were to guess, it probably has something to do with the processing power required, whether on-device or on Microsoft's end. If that is the case, I would argue Microsoft shouldn't roll out a feature that is so demanding on either end, especially one that potentially encroaches on users' privacy.

Microsoft provided only a non-statement to Slashdot about the feature. Despite the opportunity to shed some light on the reasoning behind the limited disable count, the company responded with: "OneDrive inherits privacy features and settings from Microsoft 365 and SharePoint, where applicable."


Microsoft has rolled out a new AI-driven facial recognition feature for OneDrive, raising privacy concerns due to its opt-out nature and a puzzling restriction on disabling the feature.

Microsoft Introduces AI-Powered Facial Recognition in OneDrive

Microsoft has introduced a new AI-powered facial recognition feature within its OneDrive cloud storage service. This functionality, currently in preview, allows the AI to identify and group faces in users' photos, aiming to simplify photo organization and search by individuals [1]. This feature mirrors capabilities already present in services like Google Photos and Apple Photos, which have offered similar face grouping for several years [1].

Source: TweakTown

Privacy Concerns and User Control Limitations

The rollout immediately sparked privacy concerns, primarily because the feature is enabled by default for early access users, making it an opt-out rather than an opt-in system [3]. Further escalating the controversy is an unusual restriction: users can toggle the facial recognition setting only three times a year, and Microsoft has not said how that year is measured [1][2][3][4]. Microsoft has not provided a justification for the limit, prompting speculation about its purpose, such as the cost of repeatedly deleting facial grouping data [1][3], and renewed debate over user control of personal data.

Source: PC Gamer


Microsoft's Assurances and Public Reaction

Despite these concerns, Microsoft has issued statements addressing data privacy. The company says face groupings are private to the user and are not made public, even if the user shares a photo or album. Microsoft also says it does not use facial scans or biometric data derived from this feature to train or improve its AI models [1]. If a user disables the feature, Microsoft says all associated facial grouping data will be permanently deleted within 30 days [1].

Source: The Register

However, privacy advocates, including the Electronic Frontier Foundation, emphasize the importance of opt-in consent for such features. The EFF's Thorin Klosowski said: "Any feature related to privacy really should be opt-in and companies should provide clear documentation so its users can understand the risks and benefits to make that choice for themselves" [3]. The debate underscores the broader challenge of balancing AI innovation with robust user privacy protections.

Summarized by

Navi

[2]

Related Stories

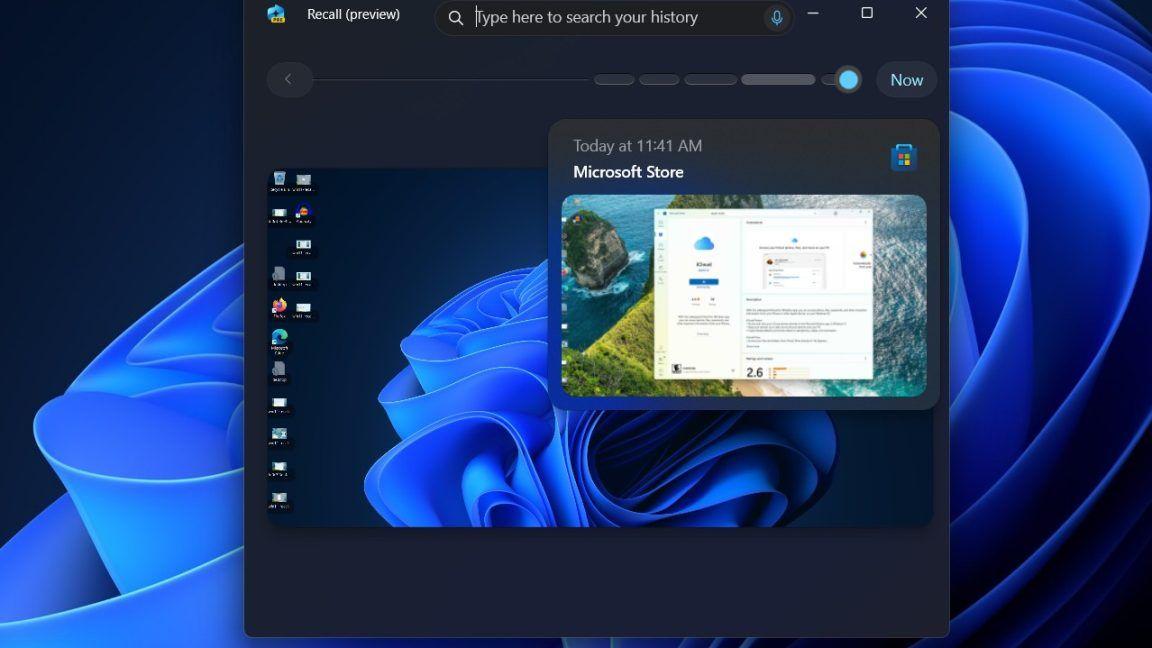

Microsoft's Controversial Recall Feature Returns to Windows 11, Sparking Privacy Concerns

11 Apr 2025•Technology

Microsoft Relaunches Controversial Recall Feature for Copilot+ PCs Amid Privacy Concerns

26 Apr 2025•Technology

Facebook's New AI Feature Seeks Access to Users' Unpublished Photos, Raising Privacy Concerns

28 Jun 2025•Technology
