Microsoft's AI Chip Strategy: Shifting Away from Nvidia and AMD

4 Sources


[1]

Microsoft aims to swap AMD, Nvidia GPUs for its own AI chips

Microsoft buys a lot of GPUs from both Nvidia and AMD. But moving forward, Redmond's leaders want to shift the majority of their AI workloads from GPUs to the company's own homegrown accelerators.

The software titan is rather late to the custom silicon party. While Amazon and Google have been building custom CPUs and AI accelerators for years, Microsoft only revealed its Maia AI accelerators in late 2023.

Driving the transition is a focus on performance per dollar, which for a hyperscale cloud provider is arguably the only metric that really matters. Speaking during a fireside chat moderated by CNBC on Wednesday, Microsoft CTO Kevin Scott said that, up to this point, Nvidia has offered the best price-performance, but that he's willing to entertain anything in order to meet demand.

Going forward, Scott suggested Microsoft hopes to use its homegrown chips for the majority of its datacenter workloads. When asked, "Is the longer term idea to have mainly Microsoft silicon in the data center?" Scott responded, "Yeah, absolutely." Later, he told CNBC, "It's about the entire system design. It's the networks and cooling, and you want to be able to have the freedom to make decisions that you need to make in order to really optimize your compute for the workload."

With its first in-house AI accelerator, the Maia 100, Microsoft was able to free up GPU capacity by shifting OpenAI's GPT-3.5 to its own silicon back in 2023. However, with just 800 teraFLOPS of BF16 performance, 64GB of HBM2e, and 1.8TB/s of memory bandwidth, the chip fell well short of competing GPUs from Nvidia and AMD.

Microsoft is reportedly in the process of bringing a second-generation Maia accelerator to market next year that will no doubt offer more competitive compute, memory, and interconnect performance. But while we may see a change in the mix of GPUs to AI ASICs in Microsoft data centers moving forward, Microsoft's own chips are unlikely to replace Nvidia and AMD's entirely.

Over the past few years, Google and Amazon have deployed tens of thousands of their TPUs and Trainium accelerators. While these chips have helped them secure some high-profile customer wins (Anthropic, for example), they are more often used to accelerate the companies' own in-house workloads. As such, we continue to see large-scale Nvidia and AMD GPU deployments on these cloud platforms, in part because customers still want them.

It should be noted that AI accelerators aren't the only custom chips Microsoft has been working on. Redmond also has its own CPU, called Cobalt, and a whole host of platform security silicon designed to accelerate cryptography and safeguard key exchanges across its vast datacenter domains.

[2]

Microsoft wants to mainly use its own AI data center chips in the future

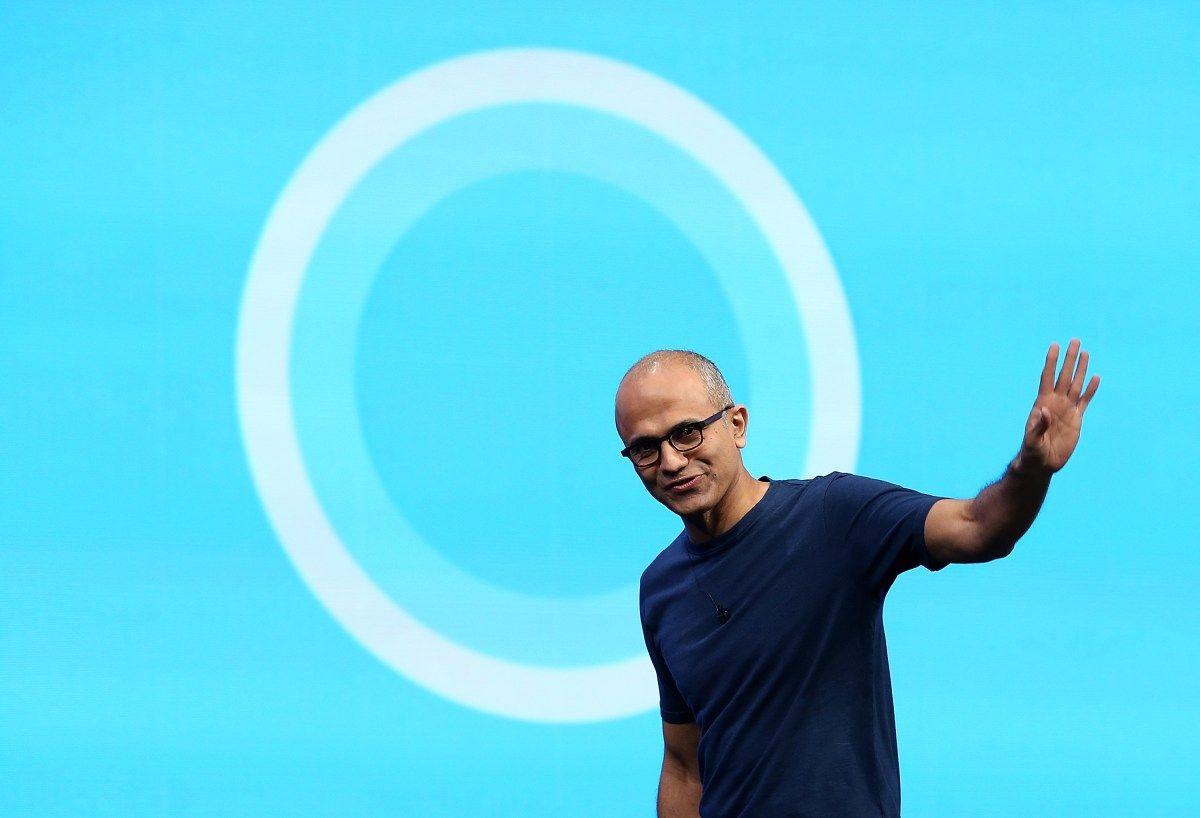

Microsoft Chief Technology Officer and Executive Vice President of Artificial Intelligence Kevin Scott speaks at the Microsoft Briefing event at the Seattle Convention Center Summit Building in Seattle, Washington, on May 21, 2024.

Microsoft would like to mainly use its own chips in its data centers in the future, the tech giant's chief technology officer said on Wednesday, in a move which could reduce its reliance on major players like Nvidia and AMD.

Semiconductors and the servers that sit inside data centers have underpinned the development of artificial intelligence models and applications. Tech giant Nvidia has dominated the space so far with its graphics processing units (GPUs), while rival AMD has a smaller slice of the pie. But major cloud computing players, including Microsoft, have also designed their own custom chips specifically for data centers.

Kevin Scott, chief technology officer at Microsoft, laid out the company's strategy around chips for AI during a fireside chat at Italian Tech Week that was moderated by CNBC.

Microsoft primarily uses chips from Nvidia and AMD in its own data centers. The focus has been on picking the right silicon (another shorthand term for semiconductor) that offers "the best price performance" per chip.

"We're not religious about what the chips are. And ... that has meant the best price performance solution has been Nvidia for years and years now," Scott said. "We will literally entertain anything in order to ensure that we've got enough capacity to meet this demand."

At the same time, Microsoft has been using some of its own chips. In 2023, Microsoft launched the Azure Maia AI Accelerator, which is designed for AI workloads, as well as the Cobalt CPU. In addition, the firm is reportedly working on its next generation of semiconductor products. Last week, the U.S. technology giant unveiled new cooling technology using "microfluids" to solve the issue of overheating chips.
When asked if the longer-term plan is to have mainly Microsoft chips in the firm's own data centers, Scott said: "Absolutely," adding that the company is using "lots of Microsoft" silicon right now.

The focus on chips is part of a strategy to eventually design an entire system that goes into the data center, Scott said. "It's about the entire system design. It's the networks and the cooling and you want to be able to have the freedom to make the decisions that you need to make in order to really optimize your compute to the workload," Scott said.

Microsoft and its rivals Google and Amazon are designing their own chips not only to reduce reliance on Nvidia and AMD, but also to make their products more efficient for their specific requirements.

[3]

Microsoft outlines plan to move beyond Nvidia in powering AI data centers

Looking ahead: AI's appetite for compute is reshaping the data center race. For now, Nvidia's powerful accelerators dominate the infrastructure that powers massive AI workloads. But that grip could loosen soon, as Microsoft and other Big Tech companies pour billions into developing their own chips and expanding capacity to keep pace with ever-growing demand.

Microsoft currently relies on Nvidia AI chips for its data centers but is actively working to implement its own hardware solutions in the future. According to Chief Technology Officer Kevin Scott, the company is building long-term plans that would rely mainly on its own chips rather than those from third-party providers.

Scott shared details about Microsoft's strategy during a recent discussion at Italian Tech Week, moderated by CNBC hosts. Redmond does not place "faith" in any specific AI accelerator, Scott said, instead opting for whichever solution offers the best balance of price and performance. Nvidia's GPU technology has met Microsoft's (and other Big Tech companies') needs for years, Scott acknowledged. However, he added that Microsoft "will literally entertain anything" to ensure it has enough capacity to meet demand.

Redmond currently relies on a diverse fleet of accelerators, including chips from Nvidia, AMD, and other partners. The company also develops its own solutions, such as the Arm-based Cobalt CPU and the Maia dedicated AI accelerator. Microsoft is now working on the next generation of its hardware, Scott confirmed, though he did not provide further details.

Microsoft already uses numerous in-house silicon designs in its data centers and is likely to continue expanding these projects. Scott revealed that the company's goal is to build complete, end-to-end systems, including network and cooling infrastructure, allowing total freedom to optimize performance.
In fact, Microsoft has been increasingly transparent about its data center ambitions. The company recently announced the development of the world's most powerful AI data center, which is expected to deliver a substantial performance boost over today's top supercomputers. A new microfluidic cooling solution is also in development. Despite growing warnings about a potential AI bubble that could disrupt the IT industry, Scott emphasized that computing capacity still falls short of demand. Microsoft anticipates a "massive" crunch in the near future, as corporations struggle to expand capacity quickly enough to keep up with the surge in AI workloads triggered by ChatGPT and similar applications.

[4]

Microsoft wants to rely more on its own chips for AI

On Wednesday, Microsoft confirmed its intention to eventually favor its own semiconductors to power its artificial intelligence data centers. Kevin Scott, chief technology officer and executive vice president in charge of AI, explained that the company wanted to optimize its technological autonomy and price-performance ratio in a context of explosive demand for computing power. Since 2023, Microsoft has introduced two of its own components: the Azure Maia AI Accelerator, designed for AI workloads, and the Cobalt processor, intended for other uses in its data centers. The company is reportedly already working on a new generation. Scott said that the goal was to control the entire architecture, from silicon to networks to cooling, in order to best adapt the infrastructure to specific needs. This strategy brings Microsoft closer to Amazon and Alphabet, which are also developing their own chips to reduce their dependence on Nvidia and AMD. However, the pressure on computing capacity remains intense. Together with Meta, Amazon, and Alphabet, Microsoft plans to invest heavily this year, with combined spending expected to exceed $300bn. According to Scott, even the most ambitious forecasts are not enough: "Since the launch of ChatGPT, it's almost impossible to build fast enough to keep up."


Microsoft plans to transition from relying on Nvidia and AMD GPUs to using its own custom AI chips in data centers. This move aims to optimize performance and meet growing AI computing demands.

Microsoft's Shift to Custom AI Chips

Microsoft, a major player in the cloud computing and AI space, is planning a significant shift in its data center strategy. The tech giant aims to reduce its reliance on Nvidia and AMD GPUs by developing and deploying its own custom AI chips [1][2]. This move is driven by the need to optimize price-performance and meet the growing demands of AI workloads.

The Drive for Custom Silicon

Kevin Scott, Microsoft's Chief Technology Officer, revealed the company's long-term vision during a fireside chat at Italian Tech Week. Scott emphasized that Microsoft's primary focus is on achieving the best price-performance ratio for its AI workloads [2]. While Nvidia has been the go-to solution for years, Microsoft is now willing to explore all options to meet the surging demand for AI computing power.


Microsoft's Custom Chip Journey

The company's foray into custom silicon began in late 2023 with the introduction of the Maia 100 AI accelerator and the Cobalt CPU [1][3]. Although the first-generation Maia chip fell short of competing GPUs in terms of performance, Microsoft is reportedly working on a second-generation accelerator that promises more competitive compute, memory, and interconnect capabilities [1].


Holistic System Design Approach

Scott highlighted that Microsoft's strategy goes beyond just chip development. The company aims to control the entire system design, including networks and cooling infrastructure [2]. This holistic approach allows Microsoft to make decisions that optimize compute for specific workloads, potentially giving it an edge in the highly competitive cloud AI market.

Industry-wide Trend

Microsoft's move aligns with a broader industry trend. Tech giants like Google and Amazon have been developing their own custom chips for years, deploying tens of thousands of TPUs and Trainium accelerators in their data centers [1][4]. This shift towards custom silicon is driven by the need for better performance, cost-efficiency, and reduced dependence on third-party suppliers.


Challenges and Future Outlook

Despite the push for custom chips, Microsoft and its competitors face significant challenges. The demand for AI computing power continues to outpace supply, with Scott warning of a "massive" capacity crunch in the near future [3]. To address this, major tech companies, including Microsoft, Meta, Amazon, and Alphabet, are planning to invest a combined total of over $300 billion in infrastructure this year alone [4].

As Microsoft continues to develop its custom chip capabilities, it is unlikely to abandon Nvidia and AMD GPUs entirely. The transition is likely to be gradual, with a mix of third-party and in-house solutions powering Microsoft's AI workloads for the foreseeable future [1].

Summarized by Navi
