Nvidia drops $20 billion on AI chip startup Groq in largest acquisition ever

28 Sources

[1]

Nvidia acquires AI chip challenger Groq for $20B, report says | TechCrunch

Nvidia is buying the AI chip startup Groq for $20 billion, according to a report from CNBC. The purchase is expected to be Nvidia's largest ever, and with Groq on its side, Nvidia is poised to become even more dominant in the AI chip market. As tech companies compete to grow their AI capabilities, they need computing power, and Nvidia's GPUs have emerged as the industry standard. But Groq has been working on a different type of chip called an LPU (language processing unit), which it has claimed can run LLMs 10 times faster while using one-tenth the energy. Groq's CEO Jonathan Ross is known for this sort of innovation: when he worked at Google, he helped invent the TPU (tensor processing unit), a custom AI accelerator chip. In September, Groq raised $750 million at a $6.9 billion valuation. Its growth has been quick and significant. The company said that it powers the AI apps of more than 2 million developers, up from about 356,000 last year.

[2]

Nvidia buys AI chip startup Groq's assets for $20 billion in the company's biggest deal ever -- Transaction includes acquihires of key Groq employees, including CEO

Nvidia, the largest GPU manufacturer in the world and the linchpin of the AI data center buildout, has entered into a non-exclusive licensing agreement with AI chip rival Groq to use the company's intellectual property. The deal is valued at $20 billion and includes acquihires of key employees who will now join Nvidia. Nvidia's previous record was the roughly $7 billion it spent on Israeli chip company Mellanox in 2019, so that record has now been toppled.

Groq is an American AI startup developing Language Processing Units (LPUs) that it positions as significantly more efficient and cost-effective than standard GPUs. Groq's LPUs are ASICs, a class of chip seeing growing interest from many firms because their custom designs are better suited to certain AI tasks, such as large-scale inference. Groq argues that it excels in inference, which it has previously described as a high-volume, low-margin market.

Nvidia is the largest beneficiary of the AI boom because it supplies most of the world's data centers and has deals with essentially every major AI player. Groq has in the past accused Nvidia of predatory tactics around exclusivity, claiming that potential customers feared losing their Nvidia inventory allocations if they were caught talking to competitors such as Groq. Those concerns seem to have been laid to rest by the deal.

"We plan to integrate Groq's low-latency processors into the NVIDIA AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads... While we are adding talented employees to our ranks and licensing Groq's IP, we are not acquiring Groq as a company," Nvidia CEO Jensen Huang said, according to CNBC.

Earlier this year, Groq built its first data center in Europe to counter Nvidia's AI dominance, in what was shaping up to be an underdog story of a startup challenging a behemoth on cost-to-scale. Now, Groq's own LPUs will be deployed in Nvidia's AI factories, as the license covers "inference technology," according to SiliconANGLE.
As part of this transaction, Groq founder and CEO Jonathan Ross and president Sunny Madra will be hired by Nvidia, along with other employees. Ross previously worked at Google, where he helped develop the Tensor Processing Unit (TPU). Simon Edwards, Groq's current finance chief, will step up as the new CEO under this refreshed structure. Hiring away a company's core talent like this is referred to as an acquihire. Among tech firms, it's a common way to gain access to a company's people and IP while avoiding antitrust scrutiny. Meta's AI hiring sprees also fall under this category, along with Nvidia's recent recruitment of Enfabrica's CEO. The announcement characterizes the deal as a non-exclusive agreement, meaning Groq will remain an independent entity, and GroqCloud, the company's platform for renting out access to its LPUs, will continue to operate as before. Before this deal, Groq was valued at $6.9 billion in September of this year and was on pace to report $500 million in fiscal revenue.

[3]

Why did Nvidia really drop $20B on Groq?

El Reg speculates about what GPUzilla really gets out of the deal

This summer, AI chip startup Groq raised $750 million at a valuation of $6.9 billion. Just three months later, Nvidia celebrated the holidays by dropping nearly three times that valuation to license its technology and squirrel away its talent. In the days since, the armchair AI gurus of the web have been speculating wildly about how Nvidia can justify spending $20 billion to get Groq's tech and people. Pundits believe Nvidia knows something we don't. Theories range from the deal signaling that Nvidia intends to ditch HBM for SRAM, to a play for additional foundry capacity from Samsung, to an attempt to quash a potential competitor. Some hold water better than others, and we certainly have a few of our own.

Nvidia paid $20 billion to non-exclusively license Groq's intellectual property, which includes its language processing units (LPUs) and accompanying software libraries. Groq's LPUs form the foundation of its high-performance inference-as-a-service offering, which Groq will keep and continue to operate without interruption after the deal closes. The arrangement is clearly engineered to avoid regulatory scrutiny. Nvidia isn't buying Groq, it's licensing its tech. Except... it's totally buying Groq. How else to describe a deal that sees Groq's CEO Jonathan Ross and president Sunny Madra move to Nvidia, along with most of its engineering talent? Sure, Groq is technically sticking around as an independent company with Simon Edwards at the helm as its new CEO, but with much of its talent gone, it's hard to see how the chip startup survives long-term. The argument that Nvidia just wiped a competitor off the board therefore holds up. Whether that move was worth $20 billion is another matter, given it could provoke an antitrust lawsuit.
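A quick back-of-the-envelope check of "nearly three times" puts the price in context. The sketch below uses only figures reported elsewhere in this roundup (the September valuation, the $23 billion quarterly operating cash flow cited by The Register, and the $60.6 billion cash pile cited by CNBC); treat all of them as approximate.

```python
# Reported figures (USD), treated as approximate.
DEAL_VALUE = 20e9                  # reported value of the Nvidia-Groq deal
SEPT_VALUATION = 6.9e9             # Groq's valuation after its September round
QUARTERLY_OP_CASH_FLOW = 23e9      # Nvidia operating cash flow last quarter (per The Register)
CASH_AND_ST_INVESTMENTS = 60.6e9   # Nvidia cash + short-term investments, end of October (per CNBC)


def ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator rounded to two decimal places."""
    return round(numerator / denominator, 2)


premium_multiple = ratio(DEAL_VALUE, SEPT_VALUATION)
share_of_quarterly_cash_flow = ratio(DEAL_VALUE, QUARTERLY_OP_CASH_FLOW)
share_of_cash_pile = ratio(DEAL_VALUE, CASH_AND_ST_INVESTMENTS)

if __name__ == "__main__":
    print(f"Deal vs. September valuation: {premium_multiple}x")
    print(f"Deal vs. one quarter's operating cash flow: {share_of_quarterly_cash_flow:.0%}")
    print(f"Deal vs. cash and short-term investments: {share_of_cash_pile:.0%}")
```

The result, about 2.9 times the September valuation, matches "nearly three times", while the deal amounts to roughly one quarter's operating cash flow for Nvidia.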
One prominent theory about Nvidia's motives is that Groq's LPUs use static random access memory (SRAM), which is an order of magnitude or more faster than the high-bandwidth memory (HBM) found in GPUs today. A single HBM3e stack can achieve about 1 TB/s of memory bandwidth, and a GPU with eight stacks about 8 TB/s. The SRAM in Groq's LPUs can be 10 to 80 times faster. Since large language model (LLM) inference is predominantly bound by memory bandwidth, Groq can achieve stupendously fast token generation rates. On Llama 3.3 70B, the benchmarkers at Artificial Analysis report that Groq's chips can churn out 350 tok/s. Performance is even better with mixture-of-experts models like gpt-oss 120B, where the chips managed 465 tok/s. We're also in the middle of a global memory shortage, and demand for HBM has never been higher. So, we understand why some might look at this deal and think Groq could help Nvidia cope with the looming memory crunch.

The simplest answer is often the right one; just not this time. Sorry to have to tell you this, but there's nothing special about SRAM. It's in basically every modern processor, including Nvidia's chips. SRAM also has a pretty glaring downside: it's not exactly what you'd call space efficient. We're talking, at most, a few hundred megabytes per chip, compared to 36 GB for a 12-high HBM3e stack, or 288 GB per GPU with eight stacks. Groq's LPUs have just 230 MB of SRAM each, which means you need hundreds or even thousands of them just to run a modest LLM. At 16-bit precision, Llama 70B needs 140 GB of memory to hold its weights, plus roughly 40 GB of key-value (KV) cache for every 128,000-token sequence. Groq needed 574 LPUs stitched together using a high-speed interconnect fabric to run Llama 70B. You can get around this by building a bigger chip: each of Cerebras' WSE-3 wafers features more than 40 GB of SRAM on board, but these chips are the size of a dinner plate and consume 23 kilowatts. Anyway, Groq hasn't gone this route.
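The capacity arithmetic above can be reproduced in a few lines. This is a minimal sketch under stated assumptions: the 16-bit precision and 230 MB per LPU come from the article, but the attention shape (80 layers, 8 grouped-query KV heads, head dimension 128) is assumed from the published Llama 3 70B configuration and is not stated in the article. The chip count is a naive lower bound for weights alone, so treat it as an order-of-magnitude check rather than a reproduction of the 574-LPU setup the article cites; the real count depends on precision and partitioning choices this sketch does not model.

```python
import math

BYTES_PER_PARAM = 2        # 16-bit weights, as in the article
N_PARAMS = 70e9            # "Llama 70B"

# Assumed Llama-3-70B attention shape (grouped-query attention); not from the article.
N_LAYERS = 80
N_KV_HEADS = 8
HEAD_DIM = 128
BYTES_PER_KV_ELEM = 2      # 16-bit KV cache

LPU_SRAM_BYTES = 230e6     # 230 MB of SRAM per Groq LPU, per the article


def weight_bytes(n_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the model weights."""
    return n_params * bytes_per_param


def kv_cache_bytes(seq_len: int) -> int:
    """KV cache for one sequence: a key and a value vector per layer, per KV head, per token."""
    per_token = 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM * BYTES_PER_KV_ELEM
    return per_token * seq_len


def chips_needed(total_bytes: float, bytes_per_chip: float) -> int:
    """Naive lower bound: ignores activations, KV cache, and partitioning overhead."""
    return math.ceil(total_bytes / bytes_per_chip)


weights = weight_bytes(N_PARAMS, BYTES_PER_PARAM)
kv_128k = kv_cache_bytes(128_000)
lpus_for_weights = chips_needed(weights, LPU_SRAM_BYTES)

if __name__ == "__main__":
    print(f"Weights: {weights / 1e9:.0f} GB")
    print(f"KV cache for one 128k-token sequence: {kv_128k / 1e9:.1f} GB")
    print(f"LPUs needed just to hold the weights: {lpus_for_weights}")
```

Under these assumptions the weights come to 140 GB and the KV cache to about 41.9 GB per 128,000-token sequence, consistent with the article's "roughly 40 GB", and holding the weights alone takes on the order of 600 LPUs.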
Suffice it to say, if Nvidia wanted to make a chip that uses SRAM instead of HBM, it didn't need to buy Groq to do it. So, what did Nvidia throw money at Groq for? Our best guess is that it was really for Groq's "assembly line architecture." This is essentially a programmable data flow design built with the express purpose of accelerating the linear algebra calculations computed during inference. Most processors today use a Von Neumann architecture. Instructions are fetched from memory, decoded, and executed, and the results are written to a register or stored in memory. Modern implementations introduce things like branch prediction, but the principles are largely the same. Data flow works on a different principle. Rather than performing a bunch of load-store operations, data flow architectures essentially process data as it's streamed through the chip. As Groq explains it, these data conveyor belts "move instructions and data between the chip's SIMD (single instruction/multiple data) function units." "At each step of the assembly process, the function unit receives instructions via the conveyor belt. The instructions inform the function unit where it should go to get the input data (which conveyor belt), which function it should perform with that data, and where it should place the output data." According to Groq, this architecture effectively eliminates the bottlenecks that bog down GPUs, as the LPU is never waiting for memory or compute to catch up. Groq can make this happen both within a single LPU and across multiple LPUs, which is good news, as Groq's LPUs aren't that potent on their own. On paper, their peak BF16 performance is roughly on par with an RTX 3090, and their INT8 performance with an L40S. But remember, that's peak FLOPS under ideal circumstances. In theory, data flow architectures should be able to achieve better real-world performance for the same amount of power. It's worth pointing out that data flow architectures aren't restricted to SRAM-centric designs.
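To make the conveyor-belt description quoted above more concrete, here is a toy software analogy, not a model of Groq's hardware. Each hypothetical `Instruction` names the belts it reads from, the operation its function unit performs, and the belt it writes to, mirroring Groq's "which conveyor belt / which function / where to place the output" wording. The schedule is fixed before anything runs, and elements stream through it one at a time. In this sketch the belts are ordinary Python lists; the point is the static routing, not the storage.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Instruction:
    """Hypothetical conveyor-belt instruction: which belts to read, what to compute, where to write."""
    inputs: Tuple[str, ...]
    op: Callable[..., float]
    output: str


def run_assembly_line(program: List[Instruction],
                      inputs: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Stream every element through a statically ordered chain of function units."""
    belts = {name: list(values) for name, values in inputs.items()}  # leave caller's data untouched
    for ins in program:
        belts.setdefault(ins.output, [])
    n_elements = len(next(iter(inputs.values())))
    for i in range(n_elements):        # one element enters the line per step
        for ins in program:            # fixed order, decided before execution
            operands = [belts[name][i] for name in ins.inputs]
            belts[ins.output].append(ins.op(*operands))
    return belts


# Example: y = relu(x * w + b), computed element by element as data flows down the line.
PROGRAM = [
    Instruction(("x", "w"), lambda a, b: a * b, "prod"),
    Instruction(("prod", "b"), lambda a, b: a + b, "sum"),
    Instruction(("sum",), lambda a: max(a, 0.0), "y"),
]
inputs = {"x": [1.0, -2.0, 3.0], "w": [2.0, 2.0, 2.0], "b": [0.5, 0.5, 0.5]}
result = run_assembly_line(PROGRAM, inputs)

if __name__ == "__main__":
    print(result["y"])
```

Because the routing is known in advance, nothing in the loop decides at run time where data comes from; real data flow hardware exploits that predictability to keep every unit busy, which this sketch only gestures at.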
For example, NextSilicon's data flow architecture uses HBM. Groq opted for an SRAM-only design because it kept things simple, but there's no reason Nvidia couldn't build a data flow accelerator based on Groq's IP using SRAM, HBM, or GDDR. So, if data flow is so much better, why isn't it more common? Because it's a royal pain to get right. But Groq has managed to make it work, at least for inference. And, as Ai2's Tim Dettmers recently put it, chipmakers like Nvidia are quickly running out of levers they can pull to juice chip performance. Data flow gives Nvidia new techniques to apply as it seeks extra speed, and the deal with Groq puts Jensen Huang's company in a better position to commercialize it.

Groq also provides Nvidia with an inference-optimized compute architecture, something it has sorely lacked. Where it fits, though, is a bit of a mystery. Most of Nvidia's "inference-optimized" chips, like the H200 or B300, aren't fundamentally different from their "mainstream" siblings. In fact, the only difference between the H100 and H200 was that the latter used faster, higher-capacity HBM3e, which happens to benefit inference-heavy workloads. That's changing with Nvidia's Rubin generation of chips in 2026. As a reminder, LLM inference can be broken into two stages: the compute-heavy prefill stage, during which the prompt is processed, and the memory-bandwidth-intensive decode stage, during which the model generates output tokens. Announced back in September, the Rubin CPX is designed specifically to accelerate the compute-intensive prefill phase of the inference pipeline, freeing up Nvidia's HBM-packed Vera Rubin superchips to handle decode. This disaggregated architecture minimizes resource contention and helps improve utilization and throughput. Groq's LPUs are optimized for inference by design, but they don't have enough SRAM to make for a very good decode accelerator. They could, however, be interesting as a speculative decoding part.
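Why prefill is compute-heavy and decode is bandwidth-bound comes down to arithmetic intensity: how many FLOPs a chip performs per byte of weights it reads. The sketch below uses a simplified roofline-style model under loudly stated assumptions: the 8 TB/s bandwidth echoes the HBM3e figure quoted earlier, but the 2 PFLOP/s peak is an assumed round number for a hypothetical accelerator, not any real product's spec, and activation traffic is ignored.

```python
# Hypothetical accelerator; PEAK_FLOPS is an assumed round number, not a real spec.
PEAK_FLOPS = 2e15        # assumed dense 16-bit FLOP/s
MEM_BW = 8e12            # bytes/s, echoing the ~8 TB/s HBM3e figure
BYTES_PER_PARAM = 2      # 16-bit weights


def arithmetic_intensity(tokens_per_weight_pass: int) -> float:
    """FLOPs per byte of weights read when one pass over the weights serves N tokens.

    Each weight does one multiply-add (2 FLOPs) per token and is read once per
    pass (BYTES_PER_PARAM bytes). Activation traffic is ignored.
    """
    return 2 * tokens_per_weight_pass / BYTES_PER_PARAM


def bound(intensity: float) -> str:
    """Classify a workload against the ridge point where compute and bandwidth limits meet."""
    ridge = PEAK_FLOPS / MEM_BW
    return "compute-bound" if intensity >= ridge else "memory-bandwidth-bound"


def decode_ceiling_tok_s(weight_bytes: float) -> float:
    """Upper bound on tokens/s for a single sequence: all weights are read once per token."""
    return MEM_BW / weight_bytes


prefill_ai = arithmetic_intensity(4096)  # a 4,096-token prompt processed in one pass
decode_ai = arithmetic_intensity(1)      # one new token per pass at batch size 1
ceiling = decode_ceiling_tok_s(70e9 * BYTES_PER_PARAM)

if __name__ == "__main__":
    print(f"prefill: {prefill_ai:.0f} FLOP/byte -> {bound(prefill_ai)}")
    print(f"decode:  {decode_ai:.0f} FLOP/byte -> {bound(decode_ai)}")
    print(f"single-sequence decode ceiling for 140 GB of weights: {ceiling:.1f} tok/s")
```

In this model, prefill sits far above the ridge point while batch-1 decode sits far below it, and a single 70B sequence on 8 TB/s tops out near 57 tok/s. Batching many users raises decode intensity, which is how GPUs recover utilization; much higher memory bandwidth, as with SRAM spread across many chips, is the other route to the faster per-user rates quoted earlier.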
If you're not familiar, speculative decoding is a technique that uses a small "draft" model to predict the output of a larger one. When those predictions are correct, system performance can double or triple, driving down cost per token. These speculative draft models are generally quite small, often a few billion parameters at most, which makes Groq's existing chips a plausible fit for the job. Do we need a dedicated accelerator for speculative decoding? Sure, why not. Is it worth $20 billion? Depends on how you measure it. Compared with publicly traded companies whose total valuation is around $20 billion, like HP Inc. or Figma, it may seem steep. But for Nvidia, $20 billion is a relatively affordable amount: it recorded $23 billion in cash flow from operations last quarter alone. In the end, it means more chips and accessories for Nvidia to sell. Perhaps the least likely take we've seen is the suggestion that Groq somehow opens up additional foundry capacity for Nvidia. Groq currently uses GlobalFoundries to make its chips, and plans to build its next-gen parts on Samsung's 4 nm process tech. Nvidia, by comparison, does nearly all of its fabrication at TSMC and is heavily reliant on the Taiwanese giant's advanced packaging tech. The problem with this theory is that it doesn't actually make any sense. It's not like Nvidia can't go to Samsung to fab its chips. In fact, Nvidia has fabbed chips at Samsung before: the Korean giant made most of Nvidia's Ampere-generation products. Nvidia needed TSMC's advanced packaging tech for some parts, like the A100, but it doesn't need the Taiwanese company to make Rubin CPX; Samsung or Intel can probably do the job. All of this takes time, though, and licensing Groq's IP and hiring its team doesn't change that. The reality is Nvidia may not do anything with Groq's current generation of LPUs. Jensen might just be playing the long game, as he's been known to do.
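The speculative decoding idea The Register describes can be sketched in its simplest, greedy form. This is a toy under stated assumptions: `target_model` and `draft_model` are hypothetical stand-in functions, not real LLMs, and the draft is deliberately wrong whenever the context length is a multiple of 6. Production systems typically verify drafts with a rejection-sampling rule that preserves the target's sampling distribution; this sketch uses exact-match greedy acceptance instead, so its output is guaranteed to equal plain greedy decoding with the target.

```python
from typing import Callable, List, Tuple

Model = Callable[[List[int]], int]  # maps a token context to the next token (greedy)


def greedy_decode(model: Model, prompt: List[int], n_new: int) -> List[int]:
    """Baseline: one target-model pass per generated token."""
    out = list(prompt)
    for _ in range(n_new):
        out.append(model(out))
    return out


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       n_new: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy speculative decoding. Returns (tokens, number of target verification passes)."""
    out = list(prompt)
    passes = 0
    while len(out) - len(prompt) < n_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. The target checks every proposed position. On real hardware this is
        #    one batched forward pass, which is where the savings come from.
        passes += 1
        accepted: List[int] = []
        for i in range(k):
            expected = target(out + proposal[:i])
            if expected != proposal[i]:
                accepted.append(expected)  # first mismatch: keep the target's token, stop
                break
            accepted.append(proposal[i])
        else:
            accepted.append(target(out + proposal))  # all k accepted: one bonus token
        out.extend(accepted)
    return out[: len(prompt) + n_new], passes


def target_model(ctx: List[int]) -> int:
    """Hypothetical stand-in for the large model."""
    return (ctx[-1] * 3 + len(ctx)) % 17


def draft_model(ctx: List[int]) -> int:
    """Hypothetical stand-in draft that agrees with the target except at every 6th position."""
    t = target_model(ctx)
    return t if len(ctx) % 6 else (t + 1) % 17


prompt = [1, 2, 3]
tokens, passes = speculative_decode(target_model, draft_model, prompt, n_new=20, k=4)

if __name__ == "__main__":
    print(tokens, passes)
```

With this toy draft, 20 new tokens take 6 target verification passes instead of 20, and the output matches plain greedy decoding exactly. How much real systems gain depends on how often the draft agrees with the target.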

[4]

Nvidia to buy AI chip startup Groq for $20 billion, CNBC reports

Dec 24 (Reuters) - Nvidia (NVDA.O) has agreed to buy Groq, a designer of high-performance artificial intelligence accelerator chips, for $20 billion in cash, CNBC reported on Wednesday. While the acquisition includes all of Groq's assets, its nascent Groq cloud business is not part of the transaction, the report said, citing Alex Davis, CEO of Disruptive, which led the startup's latest financing round. Groq is expected to alert its investors about the deal later in the day, CNBC reported. Nvidia and Groq did not immediately respond to Reuters' requests for comment. Reporting by Harshita Mary Varghese in Bengaluru; Editing by Shailesh Kuber

[5]

Exclusive: Nvidia buying AI chip startup Groq for about $20 billion in its largest acquisition on record

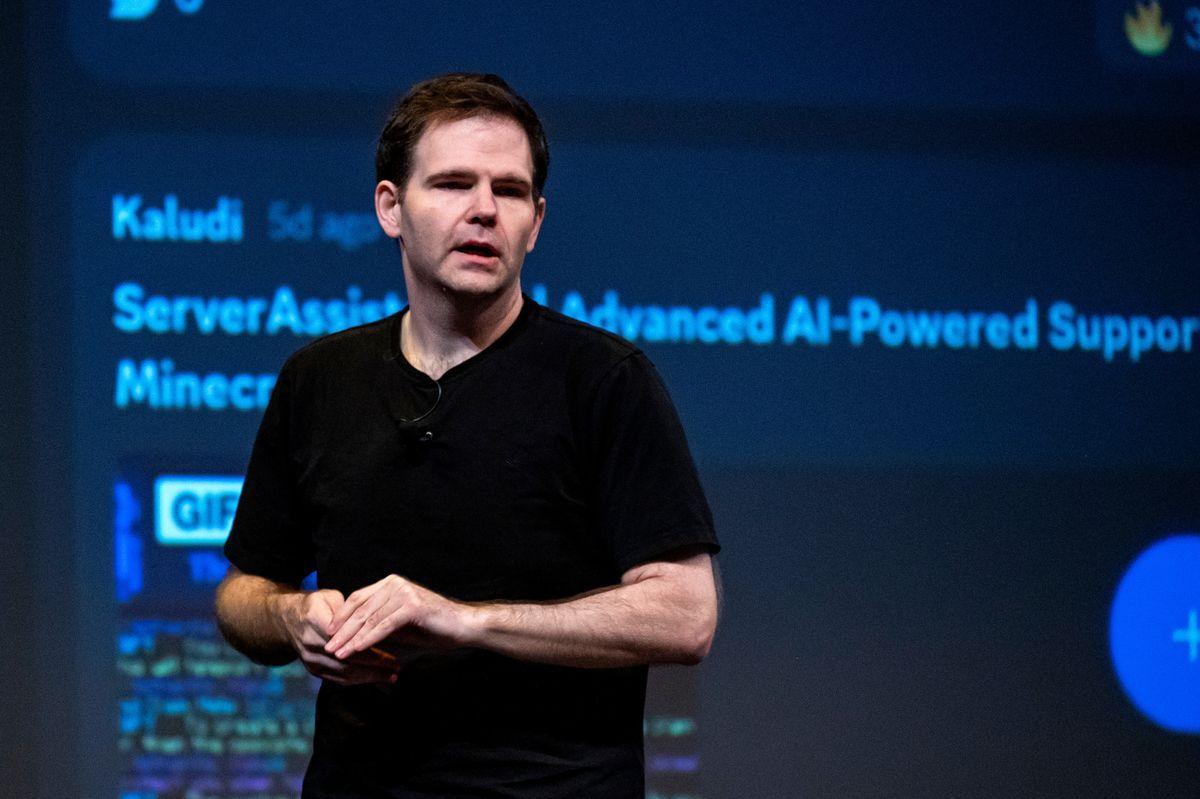

Jonathan Ross, chief executive officer of Groq Inc., during the GenAI Summit in San Francisco, California, US, on Thursday, May 30, 2024.

Nvidia has agreed to buy Groq, a designer of high-performance artificial intelligence accelerator chips, for $20 billion in cash, according to Alex Davis, CEO of Disruptive, which led the startup's latest financing round in September. Davis, whose firm has invested more than half a billion dollars in Groq since the company was founded in 2016, said the deal came together quickly. Groq raised $750 million at a valuation of about $6.9 billion three months ago. Investors in the round included BlackRock and Neuberger Berman, as well as Samsung, Cisco, Altimeter and 1789 Capital, where Donald Trump Jr. is a partner. Groq is expected to alert its investors about the deal later on Wednesday. While the acquisition includes all of Groq's assets, its nascent Groq cloud business is not part of the transaction, said Davis.

It would mark by far Nvidia's largest deal ever. The chipmaker's biggest acquisition to date came in 2019 with the purchase of Israeli chip designer Mellanox for close to $7 billion. At the end of October, Nvidia had $60.6 billion in cash and short-term investments, up from $13.3 billion in early 2023. Groq has been targeting revenue of $500 million this year amid booming demand for AI accelerator chips used to speed up inference for large language models. The company was not pursuing a sale when it was approached by Nvidia. Colette Kress, Nvidia's CFO, declined to comment on the transaction.

Groq was founded in 2016 by a group of former engineers, including Jonathan Ross, the company's CEO. Ross was one of the creators of Google's tensor processing unit, or TPU, the company's custom chip that's being used by some companies as an alternative to Nvidia's graphics processing units.
In its initial filing with the SEC, announcing a $10.3 million fundraising in late 2016, the company listed as principals Ross and Douglas Wightman, an entrepreneur and former engineer at the Google X "moonshot factory." Groq isn't the only chip startup that's gained traction during the AI boom. AI chipmaker Cerebras Systems had planned to go public this year but withdrew its IPO filing in October after announcing that it raised over $1 billion in a fundraising round. In a filing with the SEC, Cerebras said it does not intend to conduct a proposed offering "at this time," but didn't provide a reason. A spokesperson told CNBC at the time that the company still hopes to go public as soon as possible.

[6]

Nvidia's $20 billion Groq deal looks a lot like an acquisition in disguise

The big picture: Nvidia has just locked in a $20 billion licensing deal with AI startup Groq that gives it strategic access to both technical knowledge and key personnel who might otherwise power competing designs. The agreement doesn't rise to the level of a legal merger, but it sure looks like one and could still raise regulatory scrutiny. When Nvidia confirmed a $20 billion deal with Groq on December 24, industry headlines initially hailed it as the largest acquisition in the company's history. Within hours, Nvidia clarified that it had not bought the specialized AI hardware startup. Instead, the company described the arrangement as a "non-exclusive licensing agreement" for Groq's inference technology - language that, while technically accurate, raises familiar questions about where partnership ends and acquisition quietly begins. The $20 billion price tag makes this one of the most expensive licensing agreements in tech history. For Nvidia, which reported $32 billion in profit last quarter, the deal underscores both the strategic importance of securing AI inference technology and the growing pressure from emerging chip architectures outside its dominant GPU ecosystem. The Groq deal fits a pattern Silicon Valley observers call a hackquisition - a transaction that isn't legally an acquisition but operates similarly in practice. Such deals often combine large cash payments, intellectual property licensing, and selective hiring of key executives. Microsoft, Google, Amazon, and Meta have used this approach to secure technology or talent that might face regulatory scrutiny if acquired outright. In Nvidia's case, the targets appear to be Groq's CEO Jonathan Ross, president Sunny Madra, and several core engineers specializing in ultra-efficient AI inference chips. Groq built its reputation on a distinctive compute architecture designed for low-latency inference at scale.
The company's first batch of chips drew mixed reactions. Some analysts viewed the design as a breakthrough path beyond GPUs for inference tasks; others doubted its scalability and viability in data center environments dominated by Nvidia hardware. Still, Nvidia's decision to license Groq's technology gives it the ability to integrate or adapt key aspects of Groq's design for future products. Jonathan Ross brings a technical pedigree that adds weight to the move. Before founding Groq, he helped develop Google's tensor processing unit (TPU) - the chip line that enabled Google to train and run large-scale AI models without relying on Nvidia GPUs. That experience makes him one of the few engineers with firsthand expertise in designing specialized AI processors capable of rivaling GPU performance. Google's success with TPUs has forced Nvidia to reckon with the possibility that future AI workloads could shift from general-purpose GPUs to dedicated inference hardware. Energy efficiency and inference speed are now key competitive benchmarks, and TPUs are showing growing advantages on both fronts. Although Nvidia still commands roughly 90 percent of the AI chip market, it cannot ignore these signals. Bringing Ross and his team into Nvidia's orbit - even under the guise of a non-exclusive deal - effectively neutralizes Groq as an independent challenger while importing its expertise into Nvidia's engineering operation. Whether or not Nvidia ever releases a TPU-style product, executives have made clear they intend to lead both training and inference segments. The structure of the Groq agreement may be as consequential as the technology itself. An outright acquisition would likely have triggered antitrust scrutiny, given Nvidia's dominant position in AI hardware. By opting for a licensing deal instead, Nvidia gains access without changing formal ownership. This maneuver has drawn attention across Big Tech, as regulators debate whether such transactions skirt merger reviews. 
Critics describe such deals as functional acquisitions that consolidate power without formally transferring equity, often letting the buyer selectively hire talent and integrate technology while leaving the selling company hollowed out. A notable example is Meta's $15 billion agreement with Scale AI, which reduced the startup's independence as key leaders joined Meta's AI division. Whether the Groq agreement sparks regulatory pushback remains uncertain. Its holiday-week announcement may limit immediate public attention, but antitrust concerns are unlikely to disappear. Nvidia's deal, structured for speed and discretion, could face long-term scrutiny if regulators see it as undermining competition in the AI hardware market.

[7]

Nvidia deal shows why inference is AI's next battleground

Why it matters: Groq's language processing unit (LPU) chips power real-time chatbot queries, as opposed to model training, potentially giving Nvidia an edge in the AI race.

The big picture: Currently, Nvidia's chips power much of the AI training phase, but inference is a bottleneck that Nvidia doesn't fully control yet.

* Groq's chips are purpose-built for inference, the stage at which AI models use what they've learned in the training process to produce real-world results.
* Inference is where AI companies take their models from the lab to the bank. And with the soaring costs to train AI, those models better get to the bank soon. Cheap and efficient inference is essential for AI use at scale.
* Investors are pouring money into inference startups, hoping to find the missing link between AI experimentation and everyday use, Axios' Chris Metinko reported earlier this year.
* Better inference could push more companies to launch more and bigger enterprise AI initiatives. That, in turn, could drive more training and boost demand for Nvidia's training chips.

How it works: AI models operate in two distinct phases: training and inference.

* In the training phase, models ingest vast datasets of text, images and video and then use that data to build internal knowledge.
* In the inference phase, the model recognizes patterns in data it's never seen before and generates responses to prompts based on those patterns.
* Think of the phases like a student studying for a test and then taking the test.

Between the lines: Groq, founded in 2016 by Jonathan Ross, is unrelated to Grok, the chatbot from Elon Musk's xAI.

* Ross, along with Groq's president Sunny Madra and other employees, will join Nvidia, according to a statement on Groq's website.
* Groq will continue to operate independently.

The Nvidia/Groq deal is a "non-exclusive inference technology licensing agreement" that looks suspiciously like an acquisition, or at least an acquihire.
* The structure could "keep the fiction of competition alive," Bernstein Research analyst Stacy Rasgon wrote in a note to clients, according to CNBC.
* This kind of deal is a familiar one, used not only to try to skirt antitrust scrutiny but also to bring on sought-after AI talent.
* In similar deals, Microsoft lured Mustafa Suleyman, co-founder of DeepMind, and Google brought back Noam Shazeer, co-inventor of the Transformer (the T in GPT).
* Aside from founding Groq, new Nvidia employee Ross helped invent Google's Tensor Processing Unit (TPU).

What we're watching: AI's future hinges on whether companies can afford to deploy what they've already built.
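Axios' training-versus-inference split ("studying for a test and then taking the test") can be illustrated with a deliberately tiny toy, an analogy rather than anything resembling an LLM. The training phase fits a one-variable linear model to a handful of example points with plain gradient descent; the inference phase then applies the learned parameters to an input the model never saw. The data, learning rate, and epoch count are all illustrative choices.

```python
from typing import List, Tuple


def train(data: List[Tuple[float, float]], lr: float = 0.05,
          epochs: int = 2000) -> Tuple[float, float]:
    """Training phase: fit y = w*x + b to examples by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(params: Tuple[float, float], x: float) -> float:
    """Inference phase: apply the learned parameters to new input; no further learning."""
    w, b = params
    return w * x + b


training_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # points on y = 2x + 1
params = train(training_data)
prediction = infer(params, 10.0)  # an input outside the training examples

if __name__ == "__main__":
    print(f"learned w={params[0]:.3f}, b={params[1]:.3f}; prediction for x=10: {prediction:.3f}")
```

Training is the expensive, iterative loop over the whole dataset; inference is a single cheap evaluation repeated for every query, which is why its per-query cost dominates once a model is deployed at scale.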

[8]

Nvidia makes its largest-ever purchase with Groq agreement

Groq, an AI chip startup, entered into an agreement with Nvidia to help "advance and scale" Groq's tech. "As part of this agreement, Jonathan Ross, Groq's Founder, Sunny Madra, Groq's President, and other members of the Groq team will join Nvidia to help advance and scale the licensed technology," Groq announced in a blog post on Wednesday. "Groq will continue to operate as an independent company with Simon Edwards stepping into the role of Chief Executive Officer. GroqCloud will continue to operate without interruption." The deal was first reported as an exclusive by CNBC on Wednesday. Alex Davis, the CEO of Disruptive, the company that led Groq's latest financing round, said Nvidia has agreed to buy Groq's assets for $20 billion in cash, the news outlet reported. Davis's firm has invested more than half a billion dollars in Groq over the past nine years, CNBC reported. "We plan to integrate Groq's low-latency processors into the NVIDIA AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads," Nvidia CEO Jensen Huang wrote in an email obtained by CNBC. This is Nvidia's largest deal ever, according to CNBC. The company, which produces GPU chips that power many AI models, has reported surging revenues this year. Nvidia made $57 billion in revenue during the third quarter of 2025, a whopping $2 billion more than Wall Street analysts expected, Mashable's Chris Taylor reported in November. The fourth quarter of 2025 is predicted to be even better for the company. "Sales are off the charts," Huang said of the company's Blackwell chips at the time. "Cloud GPUs are sold out."

[9]

Nvidia's Groq bet shows that the economics of AI chip-building are still unsettled | Fortune

Nvidia built its AI empire on GPUs. But its $20 billion bet on Groq suggests the company isn't convinced GPUs alone will dominate the most important phase of AI yet: running models at scale, known as inference. The battle to win on AI inference, of course, is over its economics. Once a model is trained, every useful thing it does -- answering a query, generating code, recommending a product, summarizing a document, powering a chatbot, or analyzing an image -- happens during inference. That's the moment AI goes from a sunk cost into a revenue-generating service, with all the accompanying pressure to reduce costs, shrink latency (how long you have to wait for an AI to answer), and improve efficiency. That pressure is exactly why inference has become the industry's next battleground for potential profits -- and why Nvidia, in a deal announced just before the Christmas holiday, licensed technology from Groq, a startup building chips designed specifically for fast, low-latency AI inference, and hired most of its team, including CEO and founder Jonathan Ross. Nvidia CEO Jensen Huang has been explicit about the challenge of inference. While he says Nvidia is "excellent at every phase of AI," he told analysts at the company's Q3 earnings call in November that inference is "really, really hard." Far from a simple case of one prompt in and one answer out, modern inference must support ongoing reasoning, millions of concurrent users, guaranteed low latency, and relentless cost constraints. And AI agents, which have to handle multiple steps, will dramatically increase inference demand and complexity -- and raise the stakes of getting it wrong. "People think that inference is one shot, and therefore it's easy. Anybody could approach the market that way," Huang said. "But it turns out to be the hardest of all, because thinking, as it turns out, is quite hard." 
Nvidia's support of Groq underscores that belief, and signals that even the company that dominates AI training is hedging on how inference economics will ultimately shake out. Huang has also been blunt about how central inference will become to AI's growth. In a recent conversation on the BG2 Podcast, Huang said inference already accounts for more than 40% of AI-related revenue, and predicted that it is "about to go up by a billion times." "That's the part that most people haven't completely internalized," Huang said. "This is the industry we were talking about. This is the industrial revolution." The CEO's confidence helps explain why Nvidia is willing to hedge aggressively on how inference will be delivered, even as the underlying economics remain unsettled. Nvidia is hedging its bets to make sure it has its hands in all parts of the market, said Karl Freund, founder and principal analyst at Cambrian-AI Research. "It's a little bit like Meta acquiring Instagram," he explained. "It's not they thought Facebook was bad, they just knew that there was an alternative that they wanted to make sure wasn't competing with them." That's despite the strong claims Huang had made about the economics of the existing Nvidia platform for inference. "I suspect they found that it either wasn't resonating as well with clients as they'd hoped, or perhaps they saw something in the chip memory-based approach that Groq and another company called D-Matrix has," said Freund, referring to another fast, low-latency AI chip startup backed by Microsoft that recently raised $275 million at a $2 billion valuation. Freund said Nvidia's move into Groq could lift the entire category. "I'm sure D-Matrix is a pretty happy startup right now, because I suspect their next round will go at a much higher valuation thanks to the [Nvidia-Groq deal]," he said.
Other industry executives say the economics of AI inference are shifting as AI moves beyond chatbots into real-time systems like robots, drones, and security tools. Those systems can't afford the delays that come with sending data back and forth to the cloud, or the risk that computing power won't always be available. Instead, they favor specialized chips like Groq's over centralized clusters of GPUs. Behnam Bastani, CEO and founder of OpenInfer, which focuses on running AI inference close to where data is generated (such as on devices, sensors, or local servers rather than distant cloud data centers), said his startup is targeting these kinds of applications at the "edge." The inference market, he emphasized, is still nascent. And Nvidia is looking to corner that market with its Groq deal. With inference economics still unsettled, he said Nvidia is trying to position itself as the company that spans the entire inference hardware stack, rather than betting on a single architecture.

[10]

Scoop: Nvidia deal a big win for Groq employees and investors

Why it matters: Social media has been full of questions about what the unusual arrangement means for Groq employees, both those heading to Nvidia and those staying put.

Catch up quick: Groq and Nvidia on Wednesday announced a "non-exclusive licensing agreement," with media reports accurately pegging the deal value at around $20 billion.

* The companies said that Groq CEO Jonathan Ross and president Sunny Madra would join Nvidia, while Groq would continue to operate as a standalone company led by new CEO Simon Edwards (who had been CFO).
* It's an unusual structure, but the latest in a recent spate of Big AI deals that seem designed to avoid tripping antitrust wires.

Behind the scenes: Most Groq shareholders will receive per-share distributions tied to the $20 billion valuation, according to sources.

* Around 85% will be paid up front, another 10% midyear 2026, and the remainder at the end of 2026.
* Around 90% of Groq employees are said to be joining Nvidia, and they will be paid cash for all vested shares. Their unvested shares will be paid out at the $20 billion valuation, but via Nvidia stock that vests on a schedule.
* There are also around 50 members of that group whose entire stock packages are being accelerated and paid out in cash.
* Remaining Groq employees will also be paid for vested shares, plus will receive a package that includes economic participation in the ongoing company.
* Any Groq employee, staying or leaving, who has been with the company for less than one year will have their vesting cliff dropped, so they can get some up-front liquidity.

By the numbers: Groq had raised around $3.3 billion in venture capital funding since its 2016 founding, including a $750 million infusion this past fall at nearly a $7 billion post-money valuation.
* Backers include Social Capital, BlackRock, Neuberger Berman, Deutsche Telekom Capital Partners, Samsung, 1789 Capital, Cisco, D1, Cleo Capital, Altimeter, Firestreak Ventures, Conversion Capital and Modi Venture. * The company has never done a secondary tender for either employees or investors. The bottom line: Everyone gets paid. A lot.

[11]

A Deal With Groq Is Lifting Nvidia's Stock as 2026 Approaches

The partnership will have Groq's founder and CEO Jonathan Ross and others from the company joining Nvidia, which is reportedly paying $20 billion for some Groq assets. The trading year is almost over, but Nvidia still has some news to make. The chip giant earlier this week struck a deal with inference chipmaker Groq, a non-exclusive licensing pact that, according to the announcement, leaves the latter company independent but also has founder and CEO Jonathan Ross, President Sunny Madra and other members of the company joining Nvidia (NVDA) "to help advance and scale the licensed technology." The news helped lift Nvidia's shares on Friday, with the stock, up some 40% in 2025 so far, more than 1% higher in morning trading. Investors may be cheered in part by reports that Nvidia is also acquiring some of Groq's assets, with CNBC reporting a $20 billion price tag for them (considered Nvidia's biggest-ever acquisition), a sign of sustained opportunity for dealmaking in the AI sector to close out the year. Neither Groq nor Nvidia responded to Investopedia's requests for comment in time for publication. Groq CFO Simon Edwards is set to take over as CEO. The company in September said it raised $750 million at a valuation of $6.9 billion. "Inference is defining this era of AI, and we're building the American infrastructure that delivers it with high speed and low cost," Ross said at the time. Wall Street analysts continue to see room for Nvidia's shares to keep rising. The mean price target as tracked by Visible Alpha is $254, well above recent prices around $191. The company remains the world's most valuable, with a market capitalization above $4.6 trillion.

[12]

Nvidia Is Reportedly Buying AI Chip Designer Groq's Assets for $20B

Nvidia is reportedly purchasing the assets of artificial intelligence (AI) chip designer Groq. As per the report, the chipmaker is acquiring the AI inference technology assets for $20 billion (roughly Rs. 1.79 lakh crore). That is nearly three times Groq's recent valuation of $6.9 billion (roughly Rs. 61,986 crore), at which the company raised $750 million (roughly Rs. 6,740 crore). Groq announced the deal in vague terms, without mentioning the assets being purchased or the financial details.

Nvidia Reportedly Purchases Groq's Assets

According to CNBC, Nvidia and Groq have reached an agreement that allows the former to buy assets from the latter in an all-cash deal. The publication cited Alex Davis, CEO of Disruptive, for the information. In September, Davis led Groq's $750 million (roughly Rs. 6,740 crore) funding round. If true, this will mark Nvidia's most expensive purchase at $20 billion (roughly Rs. 1.79 lakh crore), with the previous record held by the company's acquisition of the Israeli chip designer Mellanox for nearly $7 billion (roughly Rs. 62,870 crore) in 2019. Both Mellanox and Groq are fabless companies, meaning they design chipsets but do not have the facilities to fabricate them. On Christmas Eve, Groq published a newsroom post announcing the agreement. However, instead of calling it a purchase, the company referred to it as "a non-exclusive licensing agreement" for its inference technology. As part of the deal, the company's founder, Jonathan Ross, President Sunny Madra, and several other team members will also join Nvidia. The company also highlighted that its cloud business, GroqCloud, is not part of the deal, and that Groq will continue to run as an independent company under Chief Financial Officer Simon Edwards, who will now serve as CEO.
Separately, citing an email to the staff obtained by the publication, CNBC claimed that Nvidia CEO Jensen Huang believes that the deal will expand the chipmaker's capabilities. Huang reportedly also revealed that Groq's low-latency processors will be integrated into Nvidia's AI factory architecture to expand the platform. With this integration, it is said that the platform will serve a broad range of AI inference and real-time workloads. "While we are adding talented employees to our ranks and licensing Groq's IP, we are not acquiring Groq as a company," Huang reportedly wrote in the email.

[13]

Nvidia agrees to buy chip startup Groq's assets for $20 billion: Reports - The Economic Times

Chipmaker Nvidia will acquire assets from chip startup Groq for approximately $20 billion in cash, according to media reports. This will be the company's largest transaction ever as it moves to dominate the AI hardware market. The deal will bring Groq's specialised inference chip technology and top engineering talent under Nvidia's wing. The startup will continue to operate independently.

Deal details

Under the arrangement, Groq founder and CEO Jonathan Ross, who previously worked on Google's Tensor Processing Unit, will join Nvidia along with president Sunny Madra and other key staff. Simon Edwards, Groq's finance chief, will take the helm of the company as it keeps running its cloud business, GroqCloud, independently. The deal price is nearly three times the $6.9 billion valuation Groq reached when it raised $750 million in September in a round led by investment firm Disruptive.

What does Groq do?

Groq is one of a number of upstarts that do not use external high-bandwidth memory chips, which frees them from the memory crunch affecting the global chip industry. The approach, which uses a form of on-chip memory called SRAM, helps speed up interactions with chatbots and other AI models, but also limits the size of the model that can be served. Groq's primary rival in the approach is Cerebras Systems, which Reuters earlier this month reported plans to go public as soon as next year. Both Groq and Cerebras have signed large deals in the Middle East.
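The SRAM trade-off described above (speed in exchange for a cap on model size) can be made concrete with rough arithmetic. This sketch assumes the 230 MB of on-die SRAM per chip that source [16] attributes to Groq's official documents, treats MB as decimal (10^6 bytes), and counts model weights only; it ignores the KV cache, activations, and any replication, so a real deployment would need more chips. The model sizes are hypothetical examples, not Groq configurations.

```python
import math

# Per-chip on-die SRAM figure cited in this roundup; decimal MB is an assumption.
SRAM_BYTES_PER_CHIP = 230 * 10**6


def chips_to_hold_weights(num_params: int, bytes_per_param: float) -> int:
    """Minimum chip count if a model's weights alone must fit in on-die SRAM.

    Illustrative only: ignores KV cache, activations, and replication.
    """
    weight_bytes = num_params * bytes_per_param
    return math.ceil(weight_bytes / SRAM_BYTES_PER_CHIP)


if __name__ == "__main__":
    # Hypothetical models: 8B params at 16-bit (2 bytes), 70B params at 8-bit (1 byte).
    for params, bpp in [(8 * 10**9, 2), (70 * 10**9, 1)]:
        chips = chips_to_hold_weights(params, bpp)
        print(f"{params / 1e9:.0f}B params @ {bpp} B/param -> at least {chips} chips")
```

Under these assumptions, an 8-billion-parameter model at 16-bit precision needs at least 70 chips just for its weights, and a 70-billion-parameter model at 8-bit needs at least 305, which illustrates why SRAM-first designs must spread large models across many chips.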
As per an internal memo cited by CNBC, Nvidia CEO Jensen Huang said the company plans to "integrate Groq's low-latency processors into the NVIDIA AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads". Huang clarified that while Nvidia is "adding talented employees to our ranks and licensing Groq's IP, we are not acquiring Groq as a company". Venture capitalist Chamath Palihapitiya, an early backer of Groq, reflected on the company's journey in a post on X. He recalled how Ross "convinced me we could take on the giants, build new silicon, and that AI was coming" back in 2016 before Groq had even been incorporated. The investor said Ross was "a technical genius of biblical proportions" and shared his original investment memo, calling the Nvidia deal the close of a "nearly decade-long chapter."

Consolidation concerns

This comes as the chipmaker faces competition from tech giants developing custom AI chips. Google, Amazon, and Microsoft are investing billions in proprietary processors to cut their dependence on Nvidia, while rivals like Advanced Micro Devices (AMD) and Cerebras Systems are looking at the same market.

[14]

Nvidia Stock Climbs As The King Of AI Defends Its Throne - NVIDIA (NASDAQ:NVDA)

NVIDIA Corp. (NASDAQ:NVDA) made a key move on Wednesday to secure its dominance in the AI inference market through a non-exclusive licensing agreement with Groq. Analysts at Rosenblatt Securities view the deal as a critical maneuver to counter competition from Google's tensor processing unit (TPU), developed by Alphabet Inc. (NASDAQ:GOOGL) (NASDAQ:GOOG), and to solidify Nvidia's market leadership as the AI industry shifts focus from training massive models to real-time deployment. Nvidia's stock climbed on Friday as investors and Wall Street signaled approval of the strategic deal.

Talent and Technology Infusion

At the heart of the agreement is a massive transfer of high-level talent. Jonathan Ross, Groq's founder and the original architect of Google's TPU program, will join NVIDIA alongside Groq's president, Sunny Madra, and other key team members to scale the licensed technology. Groq will continue to operate as an independent entity under new CEO Simon Edwards, and Nvidia gains access to Groq's specialized language processing unit (LPU) technology. The LPU technology is optimized for high-performance, low-cost inference. The upcoming LPU V2 is expected to move to Samsung's 4nm process, delivering an order-of-magnitude increase in performance and significant power-efficiency gains.

Defending the Competitive Moat

Rosenblatt points to the choice of a licensing structure rather than an outright acquisition as a calculated move to avoid the regulatory scrutiny facing other Big Tech mergers. The licensing strategy mirrors a similar move in September 2025, when Nvidia executed a $900 million deal with Enfabrica to bolster its networking infrastructure. By licensing the technology and hiring the architects behind it, Nvidia effectively neutralizes a rising competitor while expanding its technical capabilities.
Outlook and Valuation

Rosenblatt maintained a Buy rating and a $245 price target for NVDA shares, noting that the potential integration of Nvidia's CUDA development tools with Groq's LPU hardware could further expand the chipmaker's reach across multiple end markets. The Groq deal positions Nvidia to remain the undisputed leader of the next era of AI infrastructure. NVDA Price Action: Nvidia shares were up 1.77% at $191.95 on Friday, according to data from Benzinga Pro.

[15]

NVIDIA Buys Groq for $20B: Licensing Pact, Faster Inference Chips & CUDA Support Ahead

What does a $20 billion acquisition mean for the future of AI hardware? That's the question on everyone's mind as NVIDIA, a titan in the tech world, officially acquires Groq, a rising star in AI inference technology. Matthew Berman breaks down how this deal could reshape the competitive landscape, diving into the strategic reasons behind NVIDIA's bold move. Groq's innovative Language Processing Units (LPUs) are designed to deliver unparalleled speed and efficiency for real-time AI applications, and now they're part of NVIDIA's arsenal. This isn't just another acquisition; it's a statement about where the industry is headed and who plans to lead it. In this overview, we'll explore the implications of this monumental deal and what it means for developers, businesses, and the broader AI ecosystem. From Groq's specialized inference technology to NVIDIA's vision of a unified AI hardware platform, there's a lot to unpack. How will this acquisition impact NVIDIA's rivalry with companies like Google and Cerebras? And what does it signal about the growing importance of inference in AI's evolution? Whether you're a tech enthusiast or an industry insider, this breakdown offers a closer look at the forces shaping the future of AI. It's a moment worth reflecting on, one that could redefine how AI systems are built and deployed.

The Significance of Groq's Inference Technology

Groq, founded by Jonathan Ross, the visionary behind Google's Tensor Processing Unit (TPU), has established itself as a key player in the AI chip industry. The company's focus on inference technology has enabled it to develop LPUs that deliver exceptional efficiency and ultra-low latency. Unlike general-purpose GPUs, which are versatile but less optimized for specific tasks, Groq's LPUs are purpose-built to handle inference workloads with precision and speed. Inference technology is essential for deploying AI models in real-world applications.
It powers a wide range of systems, including autonomous vehicles, virtual assistants, and recommendation engines, by allowing them to process data and make decisions in real time. Groq's LPUs are designed to deliver faster results while consuming less energy, addressing the growing demand for cost-effective and high-performance solutions. These capabilities make Groq's technology particularly valuable as industries increasingly rely on AI to enhance operational efficiency and customer experiences.

NVIDIA's Strategic Vision and Competitive Edge

The acquisition of Groq reflects NVIDIA's strategic response to the rapidly evolving AI hardware landscape. While NVIDIA's GPUs have long dominated the market for training AI models, they are less efficient for inference tasks compared to specialized chips like Groq's LPUs. By incorporating Groq's technology, NVIDIA can offer a more comprehensive suite of hardware solutions tailored to meet the diverse needs of AI workloads. This move also positions NVIDIA to compete more effectively with industry rivals such as Google, which has heavily invested in TPUs, and Cerebras, known for its wafer-scale processors. Inference represents a lucrative and recurring revenue stream, as it supports the ongoing deployment of AI systems rather than the one-time costs associated with training models. By expanding its capabilities in this area, NVIDIA is poised to capture a larger share of the growing AI hardware market. Additionally, the acquisition aligns with NVIDIA's broader vision of creating a unified ecosystem for AI development. By integrating Groq's LPUs into its offerings, NVIDIA can provide developers with a seamless experience, allowing them to use both generalized and specialized hardware within a single platform. This approach not only simplifies development but also accelerates the adoption of NVIDIA's expanded hardware solutions.
Key Details and Operational Integration

Under the terms of the agreement, Groq will retain operational independence while transitioning to new leadership. Founder Jonathan Ross and other key members of Groq's team will join NVIDIA, ensuring a smooth integration of Groq's technology into NVIDIA's ecosystem. This collaborative approach is designed to preserve Groq's innovative culture while using NVIDIA's resources to drive further advancements in AI hardware. Groq's existing cloud services will remain uninterrupted, providing continuity for its current customers. This commitment underscores NVIDIA's dedication to maintaining customer trust and minimizing disruptions during the integration process. Furthermore, NVIDIA plans to extend its CUDA software platform to support Groq's LPUs. This integration will enable developers to work within a unified software environment, reducing complexity and fostering innovation.

Complementary Technologies and Industry Implications

The acquisition highlights the complementary strengths of NVIDIA's GPUs and Groq's LPUs. NVIDIA's GPUs are highly versatile, capable of handling a wide range of tasks, including both training and inference. However, their general-purpose design can limit efficiency in specialized applications. Groq's LPUs, on the other hand, are optimized for inference tasks such as image recognition, natural language processing, and recommendation systems. They deliver faster performance and lower operational costs for these specific workloads. By combining these technologies, NVIDIA can offer customers a choice between generalized and specialized solutions, depending on their unique requirements. This flexibility is particularly valuable as businesses increasingly adopt AI-driven solutions that demand both training and inference capabilities.
The collaboration between NVIDIA and Groq has the potential to set new performance benchmarks for AI hardware, driving innovation and expanding the possibilities for AI applications across industries. The acquisition also reflects a broader trend in the AI industry: the growing importance of inference as a key driver of growth and revenue. As AI models become more complex and widely deployed, businesses will require hardware that can efficiently handle inference to scale their operations effectively. NVIDIA's decision to structure the deal as a licensing agreement demonstrates a strategic approach to navigating potential regulatory challenges. By allowing Groq to remain independent, NVIDIA minimizes antitrust concerns while still benefiting from Groq's expertise and technology.

Future Prospects and Industry Impact

The acquisition positions NVIDIA as a leader in both generalized and specialized AI chip markets. By integrating Groq's inference technology, NVIDIA can deliver enhanced performance and cost efficiency across a wide range of AI workloads. This dual capability will be particularly valuable as industries increasingly adopt AI-driven solutions requiring both training and inference capabilities. Looking ahead, the partnership between NVIDIA and Groq is expected to drive significant advancements in AI hardware. Their combined expertise could lead to the development of new technologies that redefine performance standards for AI inference. For businesses and developers, this collaboration promises a future of more powerful, efficient, and accessible AI solutions, allowing them to unlock new opportunities and achieve greater success in an AI-driven world.

[16]

No, NVIDIA Isn't Acquiring Groq, But Jensen Just Executed a 'Surgical' Masterclass That No One Was Expecting

NVIDIA's CEO, Jensen Huang, might have given his chip team a 'Christmas' gift that no one would've expected, as it was reported that Team Green had entered into an agreement with Groq, a company that builds specialized AI hardware. And these aren't simple chips; they could be a gateway for NVIDIA to dominate inference-class workloads. To understand why this is a 'Masterclass,' we need to examine two distinct battlefronts: the regulatory loopholes Jensen has just leveraged, and the hardware dominance he has secured. CNBC was the first to report on this development, claiming that NVIDIA is "buying" Groq Inc. in a mega $20 billion deal, marking Jensen's biggest acquisition. The news spread like wildfire across the industry, with some suggesting that regulatory investigations would hinder the move, while others claimed it was the end of Groq. However, Groq later released an official statement on its website, saying that it had entered into a "non-exclusive licensing agreement" with NVIDIA, granting the AI giant access to its inference technology. NVIDIA CEO Jensen Huang wrote in an internal email: "We plan to integrate Groq's low-latency processors into the NVIDIA AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads. While we are adding talented employees to our ranks and licensing Groq's IP, we are not acquiring Groq as a company." Therefore, the perception of a merger, at least on paper, was nullified following Groq's statement. The sequence of events seems quite interesting to me, especially since the only thing separating this deal from a full-scale acquisition is that official disclosures avoid calling it one.
This is a classic "reverse acqui-hire" move from NVIDIA, and for those unfamiliar with the term, it comes from Microsoft's playbook: back in 2024, the tech giant announced a $653 million deal with Inflection that brought the likes of Mustafa Suleyman and Karén Simonyan to Microsoft, where they went on to spearhead the firm's AI strategy. A reverse acqui-hire means a company hires key talent from a startup and leaves behind a "bare-minimum" corporate structure, which ultimately keeps the move from counting as a merger. It appears that Jensen executed something similar to avoid an FTC investigation: by framing the Groq deal as a "non-exclusive licensing agreement," NVIDIA essentially falls outside the scope of the Hart-Scott-Rodino (HSR) Act. Interestingly, Groq mentions that GroqCloud will continue to operate, but only as a 'bare structure'. In effect, NVIDIA acquired Groq's talent and IP for a reported $20 billion while escaping regulatory investigations, which allowed it to execute the deal in a matter of days. The hardware NVIDIA now has access to is the more interesting part of the NVIDIA-Groq deal. This is the segment I am most excited to discuss, as Groq has a hardware ecosystem in place that could replicate NVIDIA's success in the training era, and I'll justify this ahead as well. The AI industry has evolved dramatically in the past few months in terms of compute demand. While companies like OpenAI, Meta, Google, and others are engaged in training frontier models, they are also looking to have a robust inference stack on board, since that's where most hyperscalers earn money. When Google announced Ironwood TPUs, the industry hyped them as an inference-focused option, and the ASICs were touted as a replacement for NVIDIA, mainly because of claims that Jensen had yet to offer a solution that dominated inference throughput.
We have the Rubin CPX, but I'll discuss that later. When it comes to inference, compute demand changes dramatically. Training prioritizes throughput over latency and needs high arithmetic intensity, which is why modern-day accelerators are beefed up with HBM and massive tensor cores. As hyperscalers pivot towards inference, they now need a fast, predictable, feed-forward execution engine, because response latency is the primary bottleneck. To deliver fast compute, NVIDIA has targeted massive-context inference (prefill and general inference) with Rubin CPX, while Google touts its TPUs as a more power-efficient choice. However, when it comes to decode, there are not many options available. Decode refers to the token-generation phase of inference in a transformer model, and it is becoming an increasingly important category of AI workload. Decode requires deterministic, low-latency behaviour, and given the constraints HBM brings to inference environments (latency and power), Groq has something unique: the use of SRAM (static RAM). Now that I have made it clear why inference compute needs a fresh approach, it's time to talk about LPUs. LPUs are the creation of Groq's former CEO, Jonathan Ross, who, by the way, is joining NVIDIA as part of the recent arrangement. Ross is known for his work on Google's TPUs, so Team Green is clearly gaining a major asset in-house. LPUs (Language Processing Units) are Groq's solution for inference-class workloads, and the company distinguishes itself by making two core bets: deterministic execution, and on-die SRAM as the primary weight storage. This is Groq's approach to achieving speed: ensuring predictability. Groq has previously showcased two leading solutions: its GroqChip and the partner-based GroqCard.
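Why decode behaves so differently from training and prefill can be sketched with a rough arithmetic-intensity estimate (FLOPs performed per byte of weights read). As simplifying assumptions, a dense transformer does about 2 FLOPs per parameter per token, and one pass over the weights serves every token processed in that pass; attention and KV-cache traffic are ignored. The ridge point (peak FLOPs divided by memory bandwidth) below is a hypothetical value for illustration, not a figure for any specific chip.

```python
def arithmetic_intensity(tokens_per_weight_pass: int, bytes_per_param: float) -> float:
    """Approximate FLOPs per byte of weight traffic for a dense transformer.

    Assumes ~2 FLOPs per parameter per token and one full read of the
    weights per forward pass; ignores attention and KV-cache traffic.
    """
    flops_per_param = 2 * tokens_per_weight_pass
    return flops_per_param / bytes_per_param


def is_memory_bound(intensity: float, ridge_point: float) -> bool:
    """Below the ridge point, memory bandwidth (not compute) limits speed."""
    return intensity < ridge_point


# Hypothetical accelerator ridge point in FLOPs per byte (illustrative only).
RIDGE_POINT = 300.0

if __name__ == "__main__":
    # Decode at batch size 1: one new token per pass over 16-bit weights.
    decode = arithmetic_intensity(tokens_per_weight_pass=1, bytes_per_param=2)
    # Prefill of a hypothetical 2,048-token prompt: all tokens share one weight pass.
    prefill = arithmetic_intensity(tokens_per_weight_pass=2048, bytes_per_param=2)
    print(f"decode:  {decode:.0f} FLOP/B, memory-bound={is_memory_bound(decode, RIDGE_POINT)}")
    print(f"prefill: {prefill:.0f} FLOP/B, memory-bound={is_memory_bound(prefill, RIDGE_POINT)}")
```

Under these assumptions, single-stream decode lands near 1 FLOP per byte, far below the hypothetical ridge point, so memory bandwidth and access latency rather than raw tensor throughput set the pace of token generation. Batching many users' decode steps into one weight pass raises the intensity, which is one reason GPU-based serving batches aggressively.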
Based on the information released in official documents, these chips feature 230 MB of on-die SRAM with up to 80 TB/s of on-die memory bandwidth. The use of SRAM is one of the key advantages of LPUs, as it allows orders-of-magnitude lower access latency. Once DRAM access and memory-controller queueing are factored into HBM's latency, SRAM wins by a considerable margin. On-die SRAM gives Groq tens of terabytes per second of internal bandwidth, allowing the firm to deliver leading throughput. SRAM also makes for a power-efficient platform, as accessing SRAM requires significantly less energy per bit and eliminates PHY overhead. In decode, this significantly improves energy per token, which matters because decode workloads are memory-intensive. This is the architectural side of LPUs, and while it is significant, it is only one part of how LPUs perform. The other element is deterministic execution, which relies on compile-time scheduling to eliminate timing variations across kernels. Compile-time scheduling ensures there are no 'delays' within decode pipelines, which matters because it allows for perfect pipeline utilization and much higher throughput relative to modern-day accelerators. To sum it up, LPUs are dedicated entirely to what hyperscalers need for inference, but there's one caveat the industry currently ignores: LPUs are real and effective inference hardware, but they're highly specialized and haven't yet become a mainstream default platform, and that's where NVIDIA comes in. While we still don't know how LPUs will be integrated into NVIDIA's offerings, one way would be to offer them as part of rack-scale inference systems (similar to Rubin CPX), paired with networking infrastructure.
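The idea behind compile-time scheduling can be illustrated with a toy model. This sketch is not Groq's compiler: it assumes every operation has a latency fixed and known in advance (the premise of deterministic execution described above), ignores contention for shared hardware units, and uses hypothetical op names and cycle counts for a fragment of a decode step. Under those assumptions, an as-soon-as-possible (ASAP) schedule assigns every op a start cycle before anything runs.

```python
from graphlib import TopologicalSorter


def static_schedule(latency: dict[str, int], deps: dict[str, set[str]]) -> dict[str, int]:
    """Assign each op a fixed start cycle (ASAP) before execution begins.

    Because every op's latency is assumed known at compile time, the
    resulting start cycles, and hence end-to-end latency, are the same on
    every run. Resource conflicts between ops are ignored in this toy model.
    """
    start: dict[str, int] = {}
    for op in TopologicalSorter(deps).static_order():
        preds = deps.get(op, set())
        # An op starts as soon as its slowest predecessor has finished.
        start[op] = max((start[p] + latency[p] for p in preds), default=0)
    return start


def makespan(latency: dict[str, int], start: dict[str, int]) -> int:
    """Total cycles until the last op finishes."""
    return max(start[op] + latency[op] for op in start)


# Hypothetical ops and cycle counts for part of one decode step.
LATENCY = {"embed": 2, "q_proj": 3, "k_proj": 3, "v_proj": 4, "attn": 5, "out_proj": 3}
DEPS = {
    "q_proj": {"embed"},
    "k_proj": {"embed"},
    "v_proj": {"embed"},
    "attn": {"q_proj", "k_proj", "v_proj"},
    "out_proj": {"attn"},
}

if __name__ == "__main__":
    schedule = static_schedule(LATENCY, DEPS)
    for op, cycle in sorted(schedule.items(), key=lambda kv: kv[1]):
        print(f"cycle {cycle:>2}: start {op}")
    print("total cycles:", makespan(LATENCY, schedule))
```

For this hypothetical graph, the schedule finishes in 14 cycles on every run. On hardware with caches, dynamic arbitration, or queueing memory controllers, op latencies vary at run time, so a fixed schedule like this one could not be guaranteed.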
This would allow GPUs to handle prefill and long-context work while LPUs focus on decode, essentially giving NVIDIA a complete answer for inference tasks. This could transform the image of LPUs from an experimental option into a standard inference method, ensuring their widespread adoption among hyperscalers. There's no doubt that this deal marks one of NVIDIA's biggest achievements in advancing its portfolio, since all indicators suggest inference will be NVIDIA's next major focus, and LPUs will be a core part of the company's strategy for this class of AI workloads.
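The speculative GPU-prefill, LPU-decode split described above resembles what is often called disaggregated prefill/decode serving. This sketch is purely illustrative and is not an announced NVIDIA or Groq design: the pool names "gpu" and "lpu", the `Request` and `Plan` types, and `plan_request` are all hypothetical. It shows only the routing decision and the key-value (KV) cache hand-off that any such split would need.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    prompt_tokens: int   # tokens processed in parallel during prefill
    max_new_tokens: int  # tokens generated one at a time during decode


@dataclass
class Plan:
    # Ordered (phase, pool, token_count) stages for one request.
    stages: list[tuple[str, str, int]] = field(default_factory=list)


def plan_request(req: Request, prefill_pool: str = "gpu", decode_pool: str = "lpu") -> Plan:
    """Route prefill and decode for one request to separate hardware pools.

    Pool names are hypothetical placeholders. The KV-cache hand-off stage
    stands for the attention state that must move from the prefill pool
    to the decode pool in any disaggregated setup.
    """
    plan = Plan()
    if req.prompt_tokens > 0:
        # Prefill: compute-heavy, processes the whole prompt at once.
        plan.stages.append(("prefill", prefill_pool, req.prompt_tokens))
    if req.max_new_tokens > 0:
        if req.prompt_tokens > 0:
            # The KV cache built during prefill must reach the decode pool.
            plan.stages.append(("kv_transfer", f"{prefill_pool}->{decode_pool}", req.prompt_tokens))
        # Decode: latency- and bandwidth-bound, one token per step.
        plan.stages.append(("decode", decode_pool, req.max_new_tokens))
    return plan


if __name__ == "__main__":
    for stage in plan_request(Request(prompt_tokens=4000, max_new_tokens=256)).stages:
        print(stage)
```

The design choice worth noting is the explicit hand-off stage: splitting phases across chip types only pays off if moving the KV cache between pools costs less than what each pool saves on its own phase.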

[17]

Nvidia, in the Last Days of 2025, Just Made a Game-Changing Move

Nvidia's $20 billion move may help the company stay ahead in the fast-paced world of AI. Nvidia (NVDA) has dominated the early stages of the artificial intelligence (AI) boom, thanks to the company's top-performing chips. These graphics processing units (GPUs) power the training of models, giving them what they need to go on and solve complex problems. Though rivals exist, Nvidia has remained in the lead, keeping its promise of innovating on an annual basis to ensure this position. Still, this isn't the only way Nvidia plans on staying ahead, and right now, in the last days of 2025, the tech giant made a game-changing move, one that could help secure its leadership in the next phase of AI growth. Let's find out more.

From gaming to AI

First, though, let's take a quick look at Nvidia's path so far. The AI giant actually started out about 30 years ago with a focus on serving the video game industry. Nvidia still does this, but a decade ago, the company recognized the AI opportunity and put its focus there. As a result, Nvidia developed the world's most sought-after AI chips as well as a full menu of related products, from enterprise software to networking tools. But the star of the show continues to be the GPU, and the biggest tech names, from Microsoft to Amazon, flock to Nvidia for each new release. This momentum has fueled explosive earnings growth for the chip designer, with revenue and net income climbing at double- and triple-digit rates to record levels. In the most recent fiscal year, Nvidia's revenue reached more than $130 billion. Now, let's move along to Nvidia's game-changing move. It's key because it not only addresses the risk of competition, but also strengthens Nvidia's position in what may next drive AI growth: AI inferencing. We'll start with competition.
Nvidia faces competition from market heavyweights like Advanced Micro Devices and even from some of its own customers, such as Amazon, that are developing their own AI chips. But Nvidia's biggest threat may not come from these giants. Instead, it may come from small start-ups specializing in technology that could either compete with or replace Nvidia chips, particularly if these start-ups specialize in AI inferencing.

The importance of inferencing

Before I go any further, I'll explain the importance of inferencing. It's the process of running a trained AI model, powering the "thinking" the model goes through to do its job. So even after models are trained, they need GPUs (or similar chips) to fuel that process. The AI inferencing market, worth about $103 billion today, may reach $255 billion by 2032, according to Fortune Business Insights. Nvidia has said repeatedly that inferencing is the next major area of growth in this AI boom and has specifically designed its latest architecture, Blackwell, for inferencing strength. But Nvidia isn't stopping there. Instead, it made this exciting move: the company is acquiring inferencing technology from start-up Groq for $20 billion in cash, according to CNBC. This represents Nvidia's largest deal ever. CNBC reported that in an email to employees, Nvidia chief Jensen Huang said: "We plan to integrate Groq's low-latency processors into the Nvidia AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads."

Nvidia's financial strength

Nvidia, with $60 billion in cash as of the end of the latest quarter, has the financial strength for this deal and potentially others. And, importantly, the deal shows that Nvidia is ready to invest in the developments of smaller rivals to reinforce its own platform; this, along with the company's own commitment to innovation, is a surefire way to stay ahead of the crowd.
The Groq deal is particularly attractive because it involves inferencing, an area that could supercharge Nvidia's growth in the years to come. And the addition of Groq executives to the Nvidia team should facilitate the integration of this new technology with Nvidia's. Groq's chief executive officer, the company president, and other executives will join Nvidia as part of the deal to "help advance and scale the licensed technology," according to a Groq blog post. All of this is great news for Nvidia investors as we head toward a new year -- and a new phase of AI growth driven by inferencing.

[18]

Nvidia's Groq deal sees positive reactions from Wall Street analysts

Wall Street analysts said that Nvidia's (NVDA) recent deal with Groq offers both "offense and defense" and is strategic. On Wednesday, Groq said it entered a non-exclusive licensing agreement with Nvidia for its inference technology. Under the agreement, Groq's founder Jonathan Ross, President Sunny Madra, and other members of the Groq team will join Nvidia. Analysts believe the acquisition enhances Nvidia's AI system stack and widens its competitive moat, reinforcing its leadership position. Nvidia can expand into more real-time AI inference markets, especially robotics and autonomy, and integrate energy-efficient, low-latency inference within its solutions. Groq's LPU hardware could diversify Nvidia's offerings, increase customer choices, and address threats from specialized ASIC chips, though it may complicate future product direction and pricing.

[19]

Nvidia Acquires Tech and Talent From Inference Chip Maker Groq | PYMNTS.com

Groq said in a Wednesday (Dec. 24) blog post that it entered into a non-exclusive licensing agreement with chip maker Nvidia for Groq's inference technology. Reached by PYMNTS, an Nvidia spokesperson said in an emailed statement: "We haven't acquired Groq. We've taken a non-exclusive license to Groq's IP and have hired engineering talent from Groq's team to join us in our mission to provide world-leading accelerate computing technology." Groq said in its blog post that with this agreement, the companies aim to expand access to high-performance, low-cost inference. As part of the agreement, Groq founder Jonathan Ross, Groq President Sunny Madra and other members of the Groq team will join Nvidia, according to the post. "Groq will continue to operate as an independent company with [Groq Chief Financial Officer] Simon Edwards stepping into the role of Chief Executive Officer," the company said in the post. "GroqCloud will continue to operate without interruption." Reuters reported Friday (Dec. 26) that with this deal, Nvidia acquires Groq technology and talent without acquiring the company. The report compared the deal to Meta's hiring of the CEO of Scale AI and Amazon's hiring of founders of Adept AI. Groq said in September that it was valued at $6.9 billion in a funding round in which it raised $750 million in new financing. In a press release announcing the new financing, the company said its compute powered more than 2 million developers and Fortune 500 companies and that it was growing its global presence and playing a central role in the global deployment of artificial intelligence (AI) technology that originated in America, a goal promoted by the White House. "Inference is defining this era of AI, and we're building the American infrastructure that delivers it with high speed and low cost," Ross said in the September press release. The valuation Groq achieved in September was more than double the one it gained about a year earlier.
In August 2024, Groq was valued at $2.8 billion in a funding round in which it secured $640 million. Ross said at the time: "We intend to make the resources available so that anyone can create cutting-edge AI products, not just the largest tech companies." PYMNTS reported Dec. 12 that investment and engineering resources were shifting toward inference infrastructure as companies that spent the past two years experimenting with large language models began moving those systems into live environments.
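As a rough check on the "more than double" comparison, the two reported valuations can be divided directly. This is a minimal Python sketch; the helper name `step_up` is hypothetical and introduced only for illustration, and the inputs are simply the figures reported above ($2.8 billion in August 2024 and $6.9 billion after the September round).

```python
def step_up(old_valuation: float, new_valuation: float) -> float:
    """Return how many times larger the new valuation is than the old one (hypothetical helper)."""
    return new_valuation / old_valuation


# Reported valuations, in billions of US dollars
aug_2024_valuation = 2.8
september_valuation = 6.9

ratio = step_up(aug_2024_valuation, september_valuation)
print(f"{ratio:.2f}x")  # 6.9 / 2.8 = 2.464..., printed as "2.46x"
```

A ratio above 2 is what "more than double" means here; the exact multiple depends only on the two reported numbers.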

[20]

Nvidia's "Acqui-Hire" of Groq Eliminates a Potential Competitor and Marks Its Entrance Into the Non-GPU, AI Inference Chip Space | The Motley Fool

On Friday, artificial intelligence (AI) chip start-up Groq announced via a very brief press release that it "has entered into a non-exclusive licensing agreement with Nvidia (NVDA) for Groq's inference technology." The deal also includes Jonathan Ross, Groq's founder and CEO, Sunny Madra, Groq's president, and other members of the Groq team joining Nvidia to "help advance and scale the licensed technology." This was a smart move by Nvidia, in my view. Nvidia has tons of cash, and it makes sense to use it to accomplish two goals at once: eliminate a potential competitor and obtain a new chip technology to offer its customers. Here's what investors should know. That Nvidia is not only entering into a non-exclusive license with Groq, but also hiring its founder-CEO, president, and reportedly key engineering talent, makes this deal an "acqui-hire" and as close to a full-fledged acquisition as possible. Granted, Groq will reportedly continue to operate as an independent company, with its CFO taking over as CEO, and will keep running GroqCloud. However, with the founder, who is the mastermind behind the company's tech, leaving, it appears that all advancements in Groq's tech will now be made under Nvidia. No doubt, Nvidia structured the deal to avoid potential regulatory scrutiny. The company already dominates the AI chip space, so any acquisition that could increase its current or future market share further would likely draw a very close look from regulators. The deal's size wasn't disclosed by the companies involved, but one major financial outlet has reported it at $20 billion, which would be Nvidia's largest deal to date, by far. Its prior largest deal was its $6.9 billion acquisition of high-performance networking specialist Mellanox Technologies in 2020. That acquisition proved to be extremely successful, as Nvidia's networking business is booming. If the $20 billion is accurate, it represents a huge premium over Groq's most recent valuation.
After a $750 million financing round in September, Groq's valuation was $6.9 billion. Nvidia tried to buy leading central processing unit (CPU) chip designer Arm Holdings in 2020, but that massive deal was called off due to significant antitrust concerns from regulators in the U.S. and elsewhere. Groq's chips are language processing units (LPUs) designed for AI inferencing. Inferencing is the second step in the two-step AI process, following training. Training involves using vast amounts of data to teach an AI model, while inference is the deployment of that model to generate outputs such as answers to users' questions, images, and more. Nvidia's graphics processing units (GPUs) have long dominated the AI training stage. They're also leaders in AI inference, but they face growing competition in this area. Competitors include Advanced Micro Devices' (AMD) data center GPUs, as well as custom application-specific integrated circuits (ASICs) that Broadcom and Marvell Technology are making for big tech company customers. Until now, the big tech companies have used their custom AI chips only internally and, where applicable, in their cloud computing services. However, it was recently revealed that social media giant Meta Platforms is considering buying Google's custom AI chip, called a tensor processing unit (TPU), for inferencing in its data centers. The big tech companies are exploring alternatives to Nvidia's GPUs for two reasons: to reduce costs and to diversify their supply chains. Relying on a single supplier for anything can be risky. Groq's goal was for its LPUs to be a big player in the AI inference market. The company claims its technology is faster than alternatives for specific inference applications. Its plans included selling its chips for less than Nvidia GPUs and perhaps other offerings.
It makes good sense that Nvidia views Groq's tech as potentially very valuable and evidently saw the company as a significant potential future rival. Groq founder and CEO Jonathan Ross is widely considered the creator of Google's TPU. Granted, he didn't create this chip alone, but he was the force behind the effort to develop it.
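The size of the "huge premium" can be worked out from the two figures quoted in this excerpt. This is a minimal sketch assuming the reported, unconfirmed $20 billion price and Groq's $6.9 billion September valuation; `implied_premium` is a hypothetical helper introduced for illustration, not a metric published by either company or by The Motley Fool.

```python
def implied_premium(price: float, last_valuation: float) -> float:
    """Premium of a deal price over a prior valuation, as a fraction (hypothetical helper)."""
    return (price - last_valuation) / last_valuation


# Both figures in billions of US dollars
reported_price = 20.0   # reported by media; not confirmed by Nvidia or Groq
last_valuation = 6.9    # Groq's valuation after the September round

premium = implied_premium(reported_price, last_valuation)
multiple = reported_price / last_valuation
print(f"Multiple: {multiple:.2f}x, premium: {premium:.0%}")  # "Multiple: 2.90x, premium: 190%"
```

Because neither company confirmed the $20 billion figure, the result is only as reliable as the media report behind it.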

[21]

Groq Founder Jonathan Ross' Net Worth Rises After NVIDIA's $20B AI Deal

NVIDIA and Groq Sign a Non-Exclusive Inference Technology License

Groq framed the agreement around expanding access to high-performance, low-cost inference. The license gives NVIDIA rights to use Groq's inference technology without exclusivity. Groq said Ross and Madra will help NVIDIA scale the licensed technology. AI demand has started shifting toward serving users in real time, and inference workloads require low latency and steady throughput. Groq has positioned its chips around that requirement, which helps explain why NVIDIA pursued a license rather than a full acquisition. Ross brings prior AI silicon experience to the transition: he helped launch Google's AI chip program before he started Groq, which was formed to build dedicated hardware for fast model responses and predictable latency. Public discussion has focused on the deal's implied value. A widely shared report suggested a cash agreement "for about" $20 billion tied to Groq assets, but Groq and NVIDIA did not publish a transaction price in their announcements.

[22]

Wall Street reacts to NVDA/Groq tie-up By Investing.com

Investing.com -- Nvidia last week disclosed a non-exclusive licensing agreement with AI chip startup Groq, a deal that also brings Groq's founder, president and key engineers into the AI chip giant while leaving the company operating independently. Under the agreement, Nvidia will license Groq's inference technology, which is designed for ultra-low latency AI workloads. According to media reports, Nvidia agreed to buy Groq for $20 billion in cash, but neither company has confirmed this. Citi analyst Atif Malik sees the deal as "a clear positive on two fronts." With the deal, Nvidia "is indirectly acknowledging that specialized inference architectures are important for real-time and cost-efficient AI deployments to address competition from TPUs and perhaps to a lesser extent upcoming startups." Moreover, a licensing approach allows Nvidia to avoid the regulatory scrutiny tied to a full takeover. Malik added that licensing Groq's IP lets Nvidia "swiftly add more inference-optimized compute stacks to its road map without building the technology from the ground up." Stifel analyst Ruben Roy highlighted that Groq's language processing units (LPUs) are optimized for fast decode inference and ultra-low latency. Roy said Groq's SRAM-based architecture could complement Nvidia's upcoming Vera Rubin platform, allowing systems to be tuned for different workloads. "We believe that the addition of an SRAM-based LPUs will add another option where token/$ and token/Watt metrics are optimized depending on workload," Roy said. Truist analyst William Stein described the tie-up as a competitive response to growing pressure from alternative inference architectures, particularly TPUs. The analyst said the move is designed "to fortify NVDA's competitive positioning, specifically vs. the TPU," adding that while the reported $20 billion price tag is large in absolute terms, it is modest relative to Nvidia's cash position and near-term free cash flow generation.
In a separate note, UBS analyst Timothy Arcuri said the deal "could bolster NVDA's ability to service high-speed inference applications," an area where traditional GPUs are less efficient due to reliance on off-chip high-bandwidth memory. He added that the move is consistent with Nvidia's push toward offering "ASIC-like architectures in addition to its mainstream GPU roadmap," alongside initiatives such as Rubin CPX.

[23]

Truist Securities reiterates Buy rating on Nvidia stock amid Groq deal By Investing.com

Investing.com - Truist Securities has reiterated its Buy rating and $275.00 price target on Nvidia (NASDAQ:NVDA) following the chipmaker's reported partnership with AI startup Groq. This target sits below the analyst high target of $352, according to InvestingPro data, which also shows a strong analyst consensus recommendation of 1.33 (Strong Buy). The semiconductor giant is reportedly paying $20 billion to license Groq's technology and bring its founder, president, and other employees into Nvidia, according to Truist analyst William Stein. While Nvidia confirmed the transaction to Truist, it did not verify the financial terms. For a company with a market capitalization of approximately $4.63 trillion, this investment represents less than 0.5% of Nvidia's total value. Truist views the deal as a strategic move by Nvidia to strengthen its competitive position in AI inference, specifically against Google's Tensor Processing Unit (TPU). This aligns with Nvidia's 65.22% revenue growth over the last twelve months, as reported in InvestingPro's financial metrics. The deal represents a significant investment in absolute terms, but Truist notes it remains relatively small compared to Nvidia's substantial cash position and ongoing cash flow generation. InvestingPro data shows Nvidia operates with a moderate debt level of $10.82 billion and maintains strong cash flows that can sufficiently cover its interest payments. Groq, a privately held company, has developed specialized AI processing technology that will now be integrated into Nvidia's expanding artificial intelligence portfolio. As a prominent player in the Semiconductors & Semiconductor Equipment industry, Nvidia continues to leverage its 75% cash return on invested capital to strengthen its market position.
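The "less than 0.5%" figure follows from dividing the reported deal value by Nvidia's approximate market capitalization. A minimal sketch, assuming the unconfirmed $20 billion price and the roughly $4.63 trillion market cap quoted above, with both expressed in billions of dollars:

```python
# Both figures in billions of US dollars.
reported_price_bn = 20.0   # reported, unconfirmed deal value
market_cap_bn = 4_630.0    # approximate Nvidia market cap cited above (~$4.63 trillion)

# Fraction of Nvidia's market value represented by the reported price
share = reported_price_bn / market_cap_bn
print(f"Share of market cap: {share:.2%}")  # prints "Share of market cap: 0.43%"
```

Since market capitalization moves daily, the percentage is a snapshot; the conclusion that the price is under half a percent holds for any market cap above $4 trillion.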
In other recent news, Nvidia has entered into a non-exclusive inference technology licensing agreement with AI chip startup Groq. This deal, announced Wednesday, includes a significant financial commitment, with Nvidia reportedly paying $20 billion in cash to access and develop Groq's AI inferencing technology. The agreement also involves key members of Groq's management team, including founder Jonathan Ross, joining Nvidia. While some analysts, such as those from D.A. Davidson, have expressed confusion over Nvidia's strategic motivations, others view the deal more positively. Rosenblatt analysts have described the partnership as a strategic win, and BofA Securities has reiterated its Buy rating on Nvidia, maintaining a $275.00 price target. Similarly, Baird has confirmed its Outperform rating on the company, reflecting confidence in the potential benefits of the Groq licensing deal. Despite differing opinions, the agreement has sparked significant interest and discussion among analysts and investors. This article was generated with the support of AI and reviewed by an editor.

[24]

Nvidia acquires start-up Groq, a rival in the AI chip market, for 20 billion dollars