Nvidia launches Alpamayo open AI models to bring human-like reasoning to autonomous vehicles

10 Sources

[1]

Nvidia launches Alpamayo, open AI models that allow autonomous vehicles to 'think like a human' | TechCrunch

At CES 2026, Nvidia launched Alpamayo, a new family of open-source AI models, simulation tools, and datasets for training physical robots and vehicles, designed to help autonomous vehicles reason through complex driving situations. "The ChatGPT moment for physical AI is here - when machines begin to understand, reason, and act in the real world," Nvidia CEO Jensen Huang said in a statement. "Alpamayo brings reasoning to autonomous vehicles, allowing them to think through rare scenarios, drive safely in complex environments, and explain their driving decisions." At the core of Nvidia's new family is Alpamayo 1, a 10-billion-parameter chain-of-thought, reason-based vision language action (VLA) model that allows an AV to think more like a human so it can solve complex edge cases - like how to navigate a traffic light outage at a busy intersection - without previous experience. "It does this by breaking down problems into steps, reasoning through every possibility, and then selecting the safest path," Ali Kani, Nvidia's vice president of automotive, said Monday during a press briefing. Alpamayo 1's underlying code is available on Hugging Face. Developers can fine-tune Alpamayo into smaller, faster versions for vehicle development, use it to train simpler driving systems, or build tools on top of it like auto-labeling systems that automatically tag video data or evaluators that check if a car made a smart decision. "They can also use Cosmos to generate synthetic data and then train and test their Alpamayo-based AV application on the combination of the real and synthetic dataset," Kani said. Cosmos is Nvidia's brand of generative world models, AI systems that create a representation of a physical environment so they can make predictions and take actions.
As part of the Alpamayo rollout, Nvidia is also releasing an open dataset with more than 1,700 hours of driving data collected across a range of geographies and conditions, covering rare and complex real-world scenarios. The company is additionally launching AlpaSim, an open-source simulation framework for validating autonomous driving systems. Available on GitHub, AlpaSim is designed to recreate real-world driving conditions, from sensors to traffic, so developers can safely test systems at scale.

[2]

Nvidia Debuts New AI Tools for Autonomous Vehicles, Robots

Nvidia Corp., aiming to extend the reach of its technology, announced a group of AI models and tools designed to speed the development of autonomous vehicles and power a new generation of robots. The company unveiled a vehicle platform called Alpamayo that allows cars to "reason" in the real world, Chief Executive Officer Jensen Huang said Monday during a presentation at the CES trade show in Las Vegas. Potential users can take the Alpamayo model and retrain it themselves, Nvidia said. The free offering is aimed at creating vehicles that can think their way out of unexpected situations, such as a traffic-light outage. An onboard computer in a car will analyze inputs - from cameras and other sensors - break them down into steps and come up with solutions. Nvidia is building on its work with Mercedes-Benz to develop vehicles capable of hands-free driving on the highway that can also find their way through cities. The first Nvidia-powered car will be on the road in the first quarter in the US, Huang said. Europe will follow in the second quarter, with an Asia introduction coming in the second half, he said. "We imagine that someday a billion cars on the road will all be autonomous," he said. The company also introduced AI models and other technology for robots. It's working with Siemens AG to bring AI to more of the physical world, Huang said during the event.

[3]

Nvidia's Alpamayo AI platform for autonomous cars will debut in the new Mercedes-Benz CLA

The big picture: Nvidia CEO Jensen Huang's keynote at CES 2026 focused primarily on the company's AI hardware business, including the upcoming Vera Rubin chip. However, he also delivered a surprise announcement: Nvidia's first AI-powered autonomous vehicle platform, dubbed Alpamayo, will debut later this year in the new Mercedes-Benz CLA. Nvidia describes Alpamayo as an open portfolio of reasoning vision-language-action (VLA) models, simulation tools, and datasets designed to power robots, industrial automation, and Level 4 autonomous vehicle architectures. The platform includes Alpamayo R1 - the first open reasoning VLA model for autonomous driving - and AlpaSim, an open simulation blueprint for high-fidelity AV testing. According to Huang, Alpamayo can not only control the steering wheel, brakes, and accelerator like any self-driving car, but also apply "human-like" reasoning to decide what actions to take based on sensor input. Built on Nvidia's Cosmos Reason AI model, Alpamayo R1 also allows humans to decode and understand why an autonomous vehicle made a specific decision in any scenario. The AI model behind Alpamayo uses 10 billion parameters and is subdivided into smaller, runtime-capable models deployed directly within the AlpaSim framework and the physical AI open datasets. This approach allows partners and AV software vendors to benchmark their experimental applications against real-world metrics. The first passenger car to feature Alpamayo will be the next-generation Mercedes-Benz CLA, scheduled to hit US roads in the first quarter of this year with Nvidia's so-called "Level 2++" driver-assistance features. The two-door coupe recently earned a 5-star Euro NCAP rating, scoring 94 percent for adult protection, 89 percent for child safety, and 93 percent for pedestrian protection.
Huang also highlighted Drive Hyperion - Nvidia's open, modular, Level-4-ready AI platform adopted by several automotive suppliers, including Aeva, AUMOVIO, Astemo, Arbe, Bosch, Hesai, Magna, OmniVision, Quanta, Sony, and ZF. Drive Hyperion is powered by two Blackwell-based Drive AGX Thor SoCs, delivering more than 2,000 FP4 teraflops of real-time compute to generate a 360-degree sensor view. Beyond its automotive AI platforms, Nvidia unveiled the Vera Rubin AI chip and DLSS 4.5 at CES 2026. Gamers, however, were left waiting, as no new GeForce card was announced - further signaling the company's shift away from its gaming roots. Still, Team Green is expected to make gaming-related announcements soon, including official Linux support for GeForce Now.

[4]

CES 2026: NVIDIA launches Alpamayo 1 for autonomous vehicles

The announcement spans agentic AI, physical AI, robotics, autonomous vehicles, and biomedical research. NVIDIA says the combined release gives developers one of the most comprehensive open AI toolkits currently available. For autonomous vehicles, NVIDIA introduced Alpamayo, a new family of open models, simulation tools, and datasets designed for reasoning-based driving systems. The release includes Alpamayo 1, an open vision language action model that enables vehicles to understand their surroundings and explain their actions. NVIDIA also launched AlpaSim, an open-source simulation framework that supports closed-loop training and evaluation across varied environments and edge cases. To support development, the company released Physical AI Open Datasets with more than 1,700 hours of driving data collected across a wide range of geographies and conditions. NVIDIA's expanded portfolio includes open models from several product families. These include Nemotron for agentic AI, Cosmos for physical AI, Alpamayo for autonomous vehicle development, Isaac GR00T for robotics, and Clara for healthcare and life sciences.

[5]

Nvidia unveils open-source AI models for next-gen self-driving vehicles

Nvidia has unveiled a family of open-source AI models targeting next-generation reasoning-based autonomous vehicles (AV). 'Alpamayo' is touted to be the industry's first chain-of-thought reasoning vision language action model designed for autonomous vehicle research. The new tool - built for Level 4 autonomy - allows AI systems to "act with human-like judgement" when presented with new scenarios, said Nvidia. Available for download via Hugging Face, Alpamayo integrates open models, simulation frameworks and data sets, which automotive developers can use to build upon. Rather than running directly in vehicles, the system will serve as "large-scale teacher models" that developers can use to fine-tune their autonomous vehicle stacks, the company said. CEO Jensen Huang unveiled Alpamayo at the annual CES technology showcase yesterday (5 January). "The ChatGPT moment for physical AI is here - when machines begin to understand, reason and act in the real world," he commented in reference to the system. "Robotaxis are among the first to benefit. Alpamayo brings reasoning to autonomous vehicles, allowing them to think through rare scenarios, drive safely in complex environments and explain their driving decisions - it's the foundation for safe, scalable autonomy." The company also announced that it is working with robotaxi operators with hopes that the software stack would be picked up for AV use as early as 2027. The company already has a full-stack platform for AV, spanning cloud, simulation and vehicle. Yesterday, it said that the new Mercedes-Benz CLA will be launched with its advanced driver assistance capabilities by the end of the year. The vehicle will be released in the US in the coming months before being rolled out in Europe and Asia.
Speaking at the company's annual shareholders' meeting last year, Huang said that alongside AI, robotics represents Nvidia's other biggest market for potential growth, placing self-driving cars first in line to benefit from the technology's commercial application. Toyota, Aurora and Continental announced partnerships with Nvidia at last year's CES to develop and build their consumer and commercial vehicle fleets using the chipmaker's AI tech. Goldman Sachs predicted last year that the robotaxi ride-share market in North America will grow at a compound annual rate of around 90pc from 2025 to 2030, which could push the gross profit for the US AV market to around $3.5bn. Nvidia's latest AV announcements come just after Alphabet's Waymo suffered a major setback when a massive power outage in San Francisco last month halted several of its vehicles on the road. Alongside announcing Alpamayo, Nvidia unveiled Rubin, its first extreme-codesigned, six-chip AI platform now in full production. The Rubin platform is the successor to the company's Blackwell architecture.

[6]

Nvidia at CES: Alpamayo signals the real arrival of physical AI - SiliconANGLE

At CES 2026, Nvidia Corp. announced Alpamayo, a new open family of AI models, simulation tools and datasets aimed at one of the hardest problems in technology: making autonomous vehicles safe in the real world, not just in demos. On the surface, Alpamayo looks like another AI platform announcement. In reality, it's a continuation -- and validation -- of a shift I've been tracking for years across my interviews on theCUBE: the industry is moving from perception AI to physical AI -- systems that can perceive, reason, act and explain decisions in environments where mistakes are unacceptable. This isn't about better lane-keeping or smoother highway driving. It's about the long tail: rare, unpredictable situations that don't show up often, but define whether autonomy is safe, scalable and trustworthy. Traditional autonomous driving systems have treated the problem like a pipeline: See the world, plan a route, execute commands. That approach works -- until something unexpected happens. Alpamayo represents a different philosophy. Nvidia is introducing models that can think through situations step-by-step, showing not just what a vehicle should do, but why. That ability to reason -- and to explain decisions -- is critical if autonomy is going to move beyond pilots and into widespread Level 4 deployment. Just as important: Alpamayo is not meant to run directly in cars. It's a teacher system -- a way to train, test and harden autonomous stacks before they ever touch the road. That distinction matters, and it aligns closely with what we've been hearing from operators actually deploying autonomy today. If you've been watching our coverage, Alpamayo feels less like a leap and more like a convergence. In my interviews with Gatik, CEO Gautam Narang described how they use Nvidia-partnered simulation to scale safely across markets.
Gatik doesn't rely on simulation instead of real-world driving -- they use it to multiply learning. Thousands of real miles become millions of synthetic miles, across every sensor modality, to validate safety before expanding into new regions. What stood out to me in that conversation wasn't the tooling -- it was the philosophy. Gautam was clear: There is no substitute for real-world data. Simulation and synthetic data only work when they're grounded in live telemetry. That blend -- real data feeding simulation, simulation feeding learning loops -- is exactly what Nvidia is formalizing with Alpamayo. That's physical AI in practice: the fusion of a physical system (a truck), digital twins and large-scale compute working together as one system. We heard a similar reality check in our discussions with Plus.ai. CEO David Liu made a point I've repeated often on theCUBE: Near-real-time is not real-time. When you're moving 80,000 pounds down a highway, decisions every 50 milliseconds aren't a luxury -- they're the baseline. Plus treats the vehicle as an edge supercomputer. The AI driver runs locally, making decisions 20 times per second, while learning happens in the cloud and is continuously distilled back into the vehicle. That architecture -- cloud-trained intelligence, on-device execution -- is exactly the pattern Alpamayo is designed to support. Nvidia's Thor and Cosmos platforms show up repeatedly in these conversations for a reason. Autonomy isn't just a software problem. It's a full-stack systems problem: sensors, compute, networking, redundancy and safety validation all working together. Another theme that keeps resurfacing in our coverage is confusion around autonomy levels. In my conversation with Tensor, the distinction was refreshingly clear: Level 2 and Level 3 assist the driver. Level 4 replaces the driver -- hands off, eyes off, in defined conditions. Reliable Level 4 isn't about flashy demos.
It's about consistency across environments, privacy-preserving on-device intelligence, and resilience when connectivity drops. Alpamayo's emphasis on reasoning, explanation and simulation-first validation speaks directly to those requirements. This is also why openness matters. Nvidia isn't locking Alpamayo behind closed doors. By releasing open models, open simulation, and large-scale open datasets, they're enabling the ecosystem -- automakers, startups and researchers -- to stress-test autonomy at scale. Zooming out, Alpamayo fits neatly into a broader arc we've been covering with Nvidia across events like GTC and Dell Tech World. As Kari Briski has explained on theCUBE, enterprises -- and now robotics and mobility companies -- are moving from CPU-era operations to GPU-driven AI factories. These factories don't just produce models. They produce decisions. Tokens become actions. Data becomes behavior. In physical systems like vehicles and robots, latency, throughput and reliability aren't abstract metrics -- they determine safety. Alpamayo is what happens when that AI factory mindset is applied to the physical world. Alpamayo isn't the "ChatGPT moment for cars" because it's flashy. It's because it acknowledges a hard truth: Autonomy only scales when systems can reason about the unexpected and explain their choices. From simulation-heavy operators such as Gatik, to real-time edge systems like Plus, to agentic vehicle visions such as Tensor, the industry has been signaling the same thing in our interviews for years. Nvidia is now putting structure, tooling and openness behind that signal. This is physical AI growing up -- and it's one of the clearest steps yet toward making Level 4 autonomy real, not theoretical.

[7]

Nvidia Unveils Alpamayo AI: 'Chat-GPT Moment' For Cars - NVIDIA (NASDAQ:NVDA)

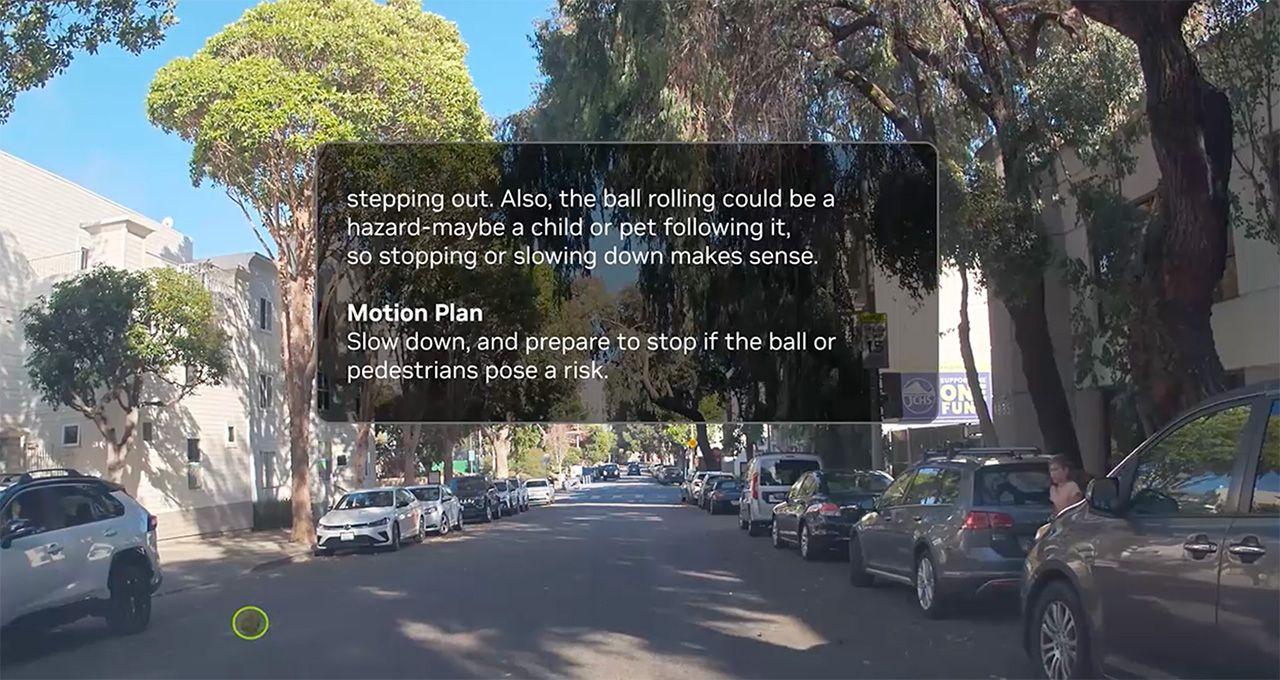

At CES 2026 on Monday, NVIDIA Corp. (NASDAQ:NVDA) unveiled a shift in autonomous vehicle (AV) development with the release of the open-source Alpamayo family. Previous self-driving systems relied on separate modules for "seeing" (perception) and "steering" (planning), while Alpamayo introduces vision language action (VLA) models that possess human-like reasoning capabilities.

The "Reasoning" Revolution

A core challenge in autonomy has been the "long tail": rare, unpredictable road scenarios that traditional algorithms fail to navigate. Nvidia says Alpamayo 1, a 10-billion-parameter model, addresses this by using chain-of-thought reasoning. "The ChatGPT moment for physical AI is here -- when machines begin to understand, reason and act in the real world," said Jensen Huang, CEO of Nvidia. "Robotaxis are among the first to benefit. Alpamayo brings reasoning to autonomous vehicles, allowing them to think through rare scenarios, drive safely in complex environments and explain their driving decisions -- it's the foundation for safe, scalable autonomy," Huang added. Much like a human driver might think, "There is a ball in the street, so a child might follow," Alpamayo 1 generates trajectories alongside logical traces. This transparency is crucial for helping developers and regulators understand why a vehicle made a specific decision.

A Three-Pillar Ecosystem

Nvidia is providing a full-stack open development environment spanning models, simulation and datasets. By moving toward end-to-end physical AI, NVIDIA is leveraging its dominant hardware position, specifically the DRIVE Thor platform, to run these massive neural networks. Nvidia said industry leaders such as Lucid Group, Inc. (NASDAQ:LCID) and Uber Technologies, Inc. (NYSE:UBER) are already showing interest in the Alpamayo framework to fast-track their Level 4 roadmaps.
"The shift toward physical AI highlights the growing need for AI systems that can reason about real-world behavior, not just process data," said Kai Stepper, vice president of ADAS and autonomous driving at Lucid Motors. "Advanced simulation environments, rich datasets and reasoning models are important elements of the evolution," Stepper added. As Huang noted, this could be the "ChatGPT moment" for physical AI, where machines finally begin to understand the nuances of the physical world rather than just reacting to it.

[8]

Nvidia Previews AI-Powered Cars With Ability to 'Reason' | PYMNTS.com

According to a report Monday (Jan. 5) from Bloomberg News, the tech giant has introduced a platform called Alpamayo that lets cars "reason" in the real world, as CEO Jensen Huang said during a presentation at the CES trade show in Las Vegas. The company says potential users can retrain the Alpamayo model themselves, with the goal of creating cars that can think their way out of scenarios like traffic-light outages. The car's onboard computer will study inputs from cameras and other sensors, divide them into steps and arrive at a solution, Bloomberg said. The report adds that Nvidia is expanding on its work with Mercedes-Benz to create vehicles capable of hands-free driving on the highway that can also navigate city streets. The first Nvidia-powered car will roll out in the U.S. during the first quarter, said Huang, with Europe following in the second quarter and a debut in Asia in the second half of the year. "We imagine that someday a billion cars on the road will all be autonomous," he said. According to Bloomberg, Nvidia's presentation also involved AI models and other technology for robots. Huang said the company is collaborating with Siemens to further the presence of AI in the physical world. The launch comes at a time when carmakers are upping their use of AI to meet increasing vehicle complexity, safety expectations and software integration. As covered here late last year, IBM has noted that manufacturers are turning to machine learning, computer vision and predictive modeling as traditional vehicle programs and factory processes can no longer keep up with modern demands. The Kaizen Institute adds that increasing pressure to improve efficiency and safety is driving companies to include AI in design, production, logistics and in-car systems. "That momentum is now driving strategic decisions," that report added. 
"A PYMNTS Intelligence analysis found that roughly 75% of automakers plan to integrate generative AI into vehicles this year, a signal that the industry is moving from experimental pilots toward full-scale deployment." Meanwhile, this week also saw a report that Uber and Lyft were resurrecting their driverless taxi programs after abandoning the technology. The ride-hailing companies are developing plans to include driverless cars provided by companies such as the Google-owned Waymo on their apps this year, The Wall Street Journal (WSJ) reported.

[9]

Nvidia reveals Alpamayo, world's first 'thinking' model for autonomous driving (NVDA:NASDAQ)

Nvidia (NVDA) CEO Jensen Huang unveiled Alpamayo, a family of open models built for autonomous driving, during his keynote address at CES 2026 in Las Vegas on Monday. "Today, we are introducing Alpamayo, the world's first thinking model for autonomous driving," Huang said. Alpamayo provides advanced reasoning AI for autonomous vehicles, promising improved safety and broader AV adoption by major automakers and service providers. Vera Rubin introduces a new multi-chip design and enhanced efficiency, meeting increasing AI computing demand for training and inference and enabling improved capabilities for partners. Automakers like Mercedes-Benz, JLR, Lucid, and Uber are adopting Alpamayo, while tech firms and cloud service providers such as AWS, Google, Meta, OpenAI, and Oracle expect to use Vera Rubin.

[10]

NVIDIA unveils open-source AI models to speed safe autonomous driving

NVIDIA unveiled a new family of open-source artificial intelligence models, simulation tools and datasets aimed at accelerating the development of safer, reasoning-based autonomous vehicles, as competition intensifies to deploy higher levels of self-driving technology. Announced at the CES technology show, the Alpamayo family is designed to address so-called "long-tail" driving scenarios, rare and complex situations that remain among the biggest obstacles to large-scale autonomous vehicle deployment. Autonomous systems have traditionally relied on separate perception and planning models, a structure that can struggle when vehicles encounter unfamiliar conditions. NVIDIA said Alpamayo introduces reasoning-based vision language action (VLA) models that allow systems to analyse cause and effect step by step, improving decision-making, safety and explainability. "The ChatGPT moment for physical AI is here, when machines begin to understand, reason and act in the real world," NVIDIA founder and CEO Jensen Huang said in a statement. He said the technology could help autonomous vehicles handle complex environments and explain their driving decisions, a key factor in building trust and scaling deployment. The Alpamayo family combines three elements: open AI models, simulation frameworks and large-scale datasets. Rather than operating directly inside vehicles, the models are designed to act as "teacher" systems, which developers can fine-tune or distil into smaller models suitable for real-world use. NVIDIA said it is releasing Alpamayo 1, a 10-billion-parameter chain-of-thought reasoning model for autonomous driving research, alongside AlpaSim, an open-source simulation platform for closed-loop testing. The company is also making available physical AI open datasets comprising more than 1,700 hours of driving data collected across diverse geographies and conditions.
The company said the tools would enable a self-reinforcing development loop, allowing developers to train, test and refine reasoning-based autonomous driving systems more efficiently. Automotive and mobility companies, including Jaguar Land Rover, Lucid and Uber, as well as research groups such as Berkeley DeepDrive, are exploring the Alpamayo platform, NVIDIA said, as they work toward level 4 autonomy, where vehicles can operate without human intervention in defined conditions. Against a backdrop of slower progress and rising scrutiny in the autonomous vehicle sector, the company said open development and improved reasoning capabilities could help the industry overcome technical barriers and advance safer deployment at scale.

Nvidia unveiled Alpamayo at CES 2026, a family of open-source AI models designed to help autonomous vehicles reason through complex driving situations. The platform includes a 10-billion-parameter model, simulation tools, and over 1,700 hours of driving data. Mercedes-Benz CLA will be the first vehicle to feature the technology, launching in Q1 2026.

Nvidia Introduces Alpamayo at CES 2026

Nvidia has launched Alpamayo, a family of open-source AI models aimed at transforming how autonomous vehicles navigate complex driving situations. Announced by CEO Jensen Huang at CES 2026, the platform marks what the company calls "the ChatGPT moment for physical AI" - when machines begin to understand, reason, and act in the real world [1]. The release brings together simulation tools, datasets, and AI models specifically designed for reasoning-based autonomous vehicles, which Nvidia says gives developers one of the most comprehensive open toolkits currently available [4].

Chain-of-Thought Reasoning Powers Next-Gen Self-Driving Vehicles

At the core of the platform sits Alpamayo 1, a 10-billion-parameter chain-of-thought vision language action model that enables autonomous vehicles to think more like humans when confronting unfamiliar scenarios [1]. Built for Level 4 autonomy, the system breaks problems down into steps, reasons through every possibility, and then selects the safest path [1]. According to Nvidia, this lets it handle complex edge cases it has not encountered before, such as a traffic light outage at a busy intersection, addressing one of the most persistent challenges in autonomous driving development [1].
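The sketch below is a toy Python illustration of the "break the problem into steps, weigh every option, pick the safest" idea described above. It is not how Alpamayo works. Alpamayo 1 is a learned model that emits reasoning traces alongside trajectories, whereas this sketch hand-scores a few options. The `Candidate` fields, the cost weights, and the maneuver names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A hypothetical candidate maneuver; risk fields range from 0.0 to 1.0."""
    name: str
    collision_risk: float   # assumed estimate of conflict with other road users
    rule_violation: float   # assumed degree to which the maneuver breaks traffic rules
    progress: float         # how much the maneuver advances the trip


def cost(c: Candidate) -> float:
    # Hypothetical weights: safety terms dominate, progress only breaks ties.
    return 10.0 * c.collision_risk + 5.0 * c.rule_violation - 1.0 * c.progress


def choose_safest(candidates: list[Candidate]) -> tuple[Candidate, list[str]]:
    """Score every option one by one, keep a readable trace, and return the lowest-cost option."""
    trace = [f"consider '{c.name}': cost={cost(c):.2f}" for c in candidates]
    best = min(candidates, key=cost)
    trace.append(f"select '{best.name}'")
    return best, trace


# Toy scenario: the traffic lights at a busy intersection are out.
options = [
    Candidate("proceed at speed", collision_risk=0.6, rule_violation=1.0, progress=1.0),
    Candidate("treat as all-way stop, then proceed", collision_risk=0.1, rule_violation=0.0, progress=0.7),
    Candidate("stop and wait indefinitely", collision_risk=0.05, rule_violation=0.0, progress=0.0),
]
best, trace = choose_safest(options)
for line in trace:
    print(line)
```

The trace list is a crude stand-in for the explainability the articles emphasize: each considered option and the final choice are recorded in readable form. Weighting safety far above progress is what makes the cautious all-way-stop option win here. A learned planner would typically acquire such trade-offs from data rather than from fixed constants.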

The vision language action model not only controls steering, brakes, and acceleration but also applies human-like reasoning to decide what actions to take based on sensor input [3]. Built on Nvidia's Cosmos Reason AI model, Alpamayo R1 allows humans to decode and understand why an autonomous vehicle made a specific decision in any scenario, bringing transparency to the decision-making process [3].

Open-Source Framework and Extensive Datasets

Alpamayo 1's underlying code is available on Hugging Face, enabling developers to fine-tune the model into smaller, faster versions for vehicle development or use it to train simpler driving systems [1]. Rather than running directly in vehicles, the models are meant to serve as large-scale teachers that developers can use to refine their autonomous vehicle stacks [5]. Ali Kani, Nvidia's vice president of automotive, explained that developers can also use Cosmos to generate synthetic data and then train and test their Alpamayo-based AV applications on a combination of real and synthetic datasets [1].

Nvidia is releasing Physical AI Open Datasets with more than 1,700 hours of driving data collected across a wide range of geographies and conditions, covering rare and complex real-world scenarios [1]. The company also launched AlpaSim, an open-source simulation framework available on GitHub that recreates real-world driving conditions, from sensors to traffic, allowing developers to safely test systems at scale [1]. The simulation framework supports closed-loop training and evaluation across varied environments and edge cases [4].
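Nvidia says developers can fine-tune or distill the Alpamayo teacher models into smaller in-vehicle models, but these reports do not describe how. As a hedged illustration of what distillation generally means, the sketch below implements the classic soft-target objective: the KL divergence between the teacher's and student's temperature-softened output distributions, scaled by T². Treating the output as a probability over a few discrete maneuvers is a simplifying assumption, and the maneuver labels and logits are hypothetical. Real driving models typically predict trajectories.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """Soft-target distillation loss: T^2 * KL(teacher || student) at temperature T."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    # Scaling by T^2 keeps gradient magnitudes comparable across temperatures.
    return temperature ** 2 * kl


# Hypothetical logits over three discrete maneuvers: [yield, proceed, stop].
teacher = [2.5, 0.3, -1.0]
close_student = [2.0, 0.3, -0.8]
far_student = [-1.0, 0.3, 2.5]

print(distillation_loss(teacher, teacher))        # identical outputs give zero loss
print(distillation_loss(teacher, close_student))  # small loss
print(distillation_loss(teacher, far_student))    # much larger loss
```

Minimizing this loss pushes the smaller student toward the teacher's full distribution over options, not just its top choice. This is the usual argument for distilling from a large model instead of training the small one from scratch.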

Mercedes-Benz CLA to Feature Alpamayo Technology

The first passenger car to feature Alpamayo will be the next-generation Mercedes-Benz CLA, scheduled to hit US roads in the first quarter of 2026 with Nvidia's Level 2++ driver-assistance features [3]. Europe will follow in the second quarter, with an Asia introduction coming in the second half of the year, Huang said. The two-door coupe recently earned a 5-star Euro NCAP rating, scoring 94 percent for adult protection, 89 percent for child safety, and 93 percent for pedestrian protection [3].

Nvidia is building on its work with Mercedes-Benz to develop vehicles capable of hands-free driving on highways that can also navigate through cities. The company said it is working with robotaxi operators in the hope that its software stack will be adopted for AV use as early as 2027 [5]. Huang also set out a long-term ambition: "We imagine that someday a billion cars on the road will all be autonomous."

Broader Physical AI Portfolio and Market Implications

Alpamayo is part of Nvidia's expanded portfolio of open models, which spans several product families: Nemotron for agentic AI, Cosmos for physical AI, Isaac GR00T for robotics, and Clara for healthcare and life sciences [4]. Huang also highlighted Drive Hyperion, Nvidia's open, modular, Level-4-ready AI platform, which has been adopted by automotive suppliers including Aeva, AUMOVIO, Astemo, Arbe, Bosch, Hesai, Magna, OmniVision, Quanta, Sony, and ZF [3].

Goldman Sachs predicted that the North American robotaxi ride-share market will grow at a compound annual rate of around 90 percent from 2025 to 2030, which could push gross profit for the US AV market to around $3.5 billion [5]. This market potential helps explain why Nvidia is positioning autonomous vehicles as a critical growth area alongside AI and robotics. The timing of the announcement is also notable: it came shortly after a massive power outage in San Francisco halted several of Alphabet's Waymo vehicles [5].
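A compound annual growth rate of around 90 percent compounds quickly. The back-of-the-envelope check below assumes the rate applies once per year over the five years from 2025 to 2030. On that assumption, the implied growth factor is (1 + 0.90)^5, or roughly 25 times. The sketch does not reproduce the $3.5 billion gross-profit estimate, which depends on Goldman Sachs inputs not given in these reports.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor from compounding `cagr` once per year for `years` years."""
    return (1.0 + cagr) ** years


# Around 90 percent a year, compounded over 2025 -> 2030 (five annual periods, an assumption).
multiple = growth_multiple(0.90, 2030 - 2025)
print(f"{multiple:.1f}x")  # prints 24.8x
```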

References

Summarized by

Navi

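[1] Nvidia launches Alpamayo, open AI models that allow autonomous vehicles to 'think like a human' | TechCrunch

[3] Nvidia's Alpamayo AI platform for autonomous cars will debut in the new Mercedes-Benz CLA | TechSpot

[4] CES 2026: NVIDIA launches Alpamayo 1 for autonomous vehicles

[5] Nvidia unveils open-source AI models for next-gen self-driving vehicles | Silicon Republic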
