NVIDIA Unveils 800V High-Voltage DC Power System for Next-Gen AI Data Centers

5 Sources

[1]

Nvidia to boost AI server racks to megawatt scale, increasing power delivery by five times or more

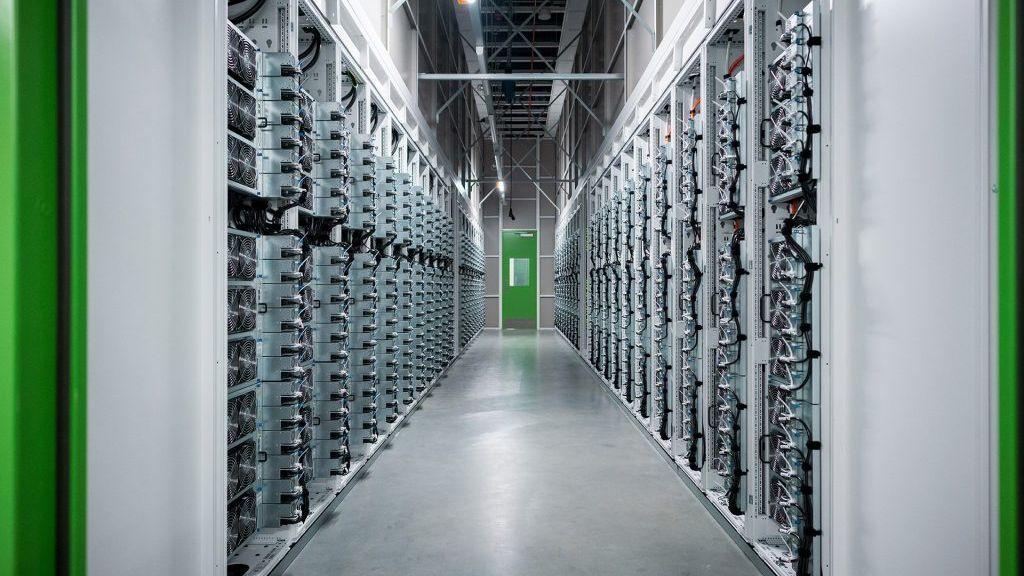

Nvidia is developing a new power infrastructure called the 800 V HVDC architecture to meet the power requirements of server racks of 1 MW and more, with plans to deploy it by 2027. According to Nvidia, the current 54V DC power distribution system is already reaching its limit as racks begin to exceed 200 kilowatts. As AI chips become more powerful and demand more electricity, these existing systems will no longer be able to keep up in practice, requiring data centers to build new solutions so that their electrical circuits are not overwhelmed.

For example, Nvidia says that its GB200 NVL72 or GB300 NVL72 needs around eight power shelves. If it used 54V DC power distribution, the power shelves would consume 64 U of rack space, which is more than what the average server rack can accommodate. Aside from this, it also said that delivering 1 MW using 54V DC requires a 200 kg copper busbar -- that means a gigawatt AI data center, which many companies are now racing to build, would need 500,000 tons of copper. This is nearly half of the U.S.'s total copper output in 2024, and that's just for one site.

So, instead of using the 54V DC system, which is installed directly at the server cabinet, Nvidia is proposing to use the 800 V HVDC, which will connect near the site's 13.8kV AC power source. Aside from freeing up space in the server racks, this will also streamline the approach and make power transmission within the data center more efficient. It will also remove the multiple AC to DC and DC to DC transformations used in the current system, which add complexity. The 800 V HVDC will also reduce the system current for the same power load, potentially increasing the total wattage delivered by up to 85% without the need to upgrade the conductor. "With lower current, thinner conductors can handle the same load, reducing copper requirements by 45%," said the company. "Additionally, DC systems eliminate AC-specific inefficiencies, such as skin effect and reactive power losses, further improving efficiency."

According to Digitimes [machine translated], the AI giant is working with Infineon, Texas Instruments, and Navitas to help develop this system. Furthermore, it's expected that they will deploy wide-bandgap semiconductors like gallium nitride (GaN) and silicon carbide (SiC) to achieve the high power densities needed by these powerful AI systems. The 800 V HVDC is a technical challenge that data centers must solve for power efficiency, especially as they start to breach 1 GW capacity and more. This solution should help them reduce wasted power, which, in turn, would reduce operating costs.

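The claim that a higher bus voltage reduces current for the same load follows from I = P / V. The sketch below is an illustration only, not Nvidia's sizing method: the function names are hypothetical, and it assumes an ideal DC bus whose conductor resistance stays the same at both voltages.

```python
def bus_current_amps(power_watts: float, bus_volts: float) -> float:
    """Current a DC bus must carry to deliver power_watts at bus_volts (I = P / V)."""
    if bus_volts <= 0:
        raise ValueError("bus_volts must be positive")
    return power_watts / bus_volts


def relative_conduction_loss(low_volts: float, high_volts: float) -> float:
    """How many times larger the I^2 * R loss is at low_volts than at high_volts,
    assuming the same delivered power and the same conductor resistance."""
    if low_volts <= 0 or high_volts <= 0:
        raise ValueError("voltages must be positive")
    return (high_volts / low_volts) ** 2


if __name__ == "__main__":
    rack_power_watts = 1_000_000  # the 1 MW rack discussed in the article
    for volts in (54, 800):
        amps = bus_current_amps(rack_power_watts, volts)
        print(f"{volts:>4} V bus: {amps:,.0f} A")
    print(f"Conduction loss ratio, 54 V vs 800 V: {relative_conduction_loss(54, 800):.0f}x")
```

Under these assumptions a 1 MW rack draws roughly 18,500 A at 54 V but 1,250 A at 800 V, which is why the conductors can be so much thinner at the higher voltage.
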
[2]

NVIDIA's new 800V high-voltage DC power distribution system will fuel next-gen data centers

NVIDIA's new 800V high-voltage DC power distribution system will move PSU modules completely out of server racks, leaving more room for compute and networking modules. NVIDIA recently announced that it would be adopting a new 800V high-voltage DC power distribution system, which will power the waves of next-generation data centers. In a new post on X, insider @Jukanlosreve reports that three semiconductor companies have been officially named as NVIDIA partners in developing this new 800V high-voltage system, referred to as the "three major power IC players": Infineon, Texas Instruments (TI), and Navitas.

NVIDIA's new 800V high-voltage DC power distribution system has an extremely complex design, with companies forced to offer robust and diverse power solutions to help NVIDIA achieve its lofty goals. NVIDIA is most likely preparing to bring in more power semiconductor suppliers in the future, but in the early stages of this new ultra-high-end battleground, these three companies will hold a very important competitive edge.

The new 800V high-voltage DC system will see the complete move of the power supply modules out of the server racks themselves, which will free up internal space for more compute and networking modules, maximizing compute density. In its first phase, PSUs will be placed beside the rack in a "sidecar" configuration. Over time, NVIDIA's goal is to gradually integrate these power modules into a centralized power delivery system for the entire AI data center. This means that the entire power supply solution must not just achieve far higher power density than existing technologies, but also offer end-to-end integration capabilities, extending from the power grid infrastructure all the way into the internal architecture of the AI data center.

South Korean media outlet The Bell reports that NVIDIA's new technological direction presents a "very high competitive barrier" that requires an expansive product lineup -- extending from the power grid infrastructure to PSUs, BBUs, and voltage converters on processor boards -- along with the necessary technical and manufacturing capabilities. NVIDIA will need many years to get its proposed 800V high-voltage DC system fully deployed, and the transition from sidecar configurations to fully centralized architectures could take even longer. The general industry consensus is that NVIDIA's new architecture represents a significant step up in complexity when compared to current AI data centers. The AI data center power semiconductor supply chain is already dominated by top-tier companies, so smaller PMIC companies will find it harder to enter this new market. Their best opportunities might come from current, traditional data centers, where there is still room to compete in the server power supply market.

[3]

Texas Instruments Collaborates with NVIDIA to Revolutionize AI Data Center Power Distribution

With the growth of AI, the power required per data center rack is predicted to increase from 100kW today to more than 1MW in the near future. To power a 1MW rack, today's 48V distribution system would require almost 450 lbs of copper, making it physically impossible for a 48V system to scale power delivery to support computing needs in the long term. The new 800V high-voltage DC power-distribution architecture will provide the power density and conversion efficiency that future AI processors require, while minimizing the growth of the power supply's size, weight and complexity. This 800V architecture will enable engineers to scale power-efficient racks as data-center demand evolves. "A paradigm shift is happening right in front of our eyes," said Jeffrey Morroni, director of power management research and development at Kilby Labs and a TI Fellow. "AI data centers are pushing the limits of power to previously unimaginable levels. A few years ago, we faced 48V infrastructures as the next big challenge. Today, TI's expertise in power conversion combined with NVIDIA's AI expertise are enabling 800V high-voltage DC architectures to support the unprecedented demand for AI computing."
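
As a rough cross-check, TI's figure of almost 450 lbs of copper for a 48V system can be converted to kilograms and set beside the 200 kg busbar that source [1] attributes to a 54V system. The helper below is a hypothetical illustration; the two figures refer to slightly different bus voltages, so only approximate agreement should be expected.

```python
# Exact definition of the international avoirdupois pound in kilograms.
KG_PER_POUND = 0.45359237


def pounds_to_kg(pounds: float) -> float:
    """Convert a mass in pounds to kilograms."""
    return pounds * KG_PER_POUND


if __name__ == "__main__":
    ti_copper_lbs = 450  # "almost 450lbs" per the TI summary above
    print(f"{ti_copper_lbs} lbs is about {pounds_to_kg(ti_copper_lbs):.0f} kg")
```

The result is about 204 kg, in line with the 200 kg quoted in source [1].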

[4]

Vertiv Boosts AI Infrastructure with NVIDIA 800 VDC Power Alignment

Vertiv aligns with the NVIDIA AI roadmap to stay one GPU generation ahead, enabling customers to deploy their power and cooling infrastructure in sync with NVIDIA's next-generation compute platforms. Vertiv provides end-to-end power, cooling, integrated infrastructure and services to support AI factories and other data center deployments. As rack power requirements in AI environments scale beyond 300 kilowatts, 800 VDC enables more efficient, centralized power delivery by reducing copper usage, current, and thermal losses. Vertiv's upcoming portfolio will feature centralized rectifiers, high-efficiency DC busways, rack-level DC-DC converters, and DC-compatible backup systems, expanding its broad, end-to-end power management portfolio that already includes a robust AC power train.

[5]

Vertiv Accelerates AI Infrastructure Evolution in Alignment with NVIDIA 800 VDC Power Architecture Announcement

Vertiv (NYSE: VRT), a global provider of critical digital infrastructure, today confirmed its strategic alignment with NVIDIA's announcement of an AI roadmap to deploy 800 VDC power architectures for the next generation of AI-centric data centers. Paving the way for future-ready designs, Vertiv's 800 VDC power portfolio is scheduled for release in the second half of 2026 -- ahead of NVIDIA Kyber and NVIDIA Rubin Ultra platform rollouts.

Vertiv aligns with the NVIDIA AI roadmap to stay one GPU generation ahead, enabling customers to deploy their power and cooling infrastructure in sync with NVIDIA's next-generation compute platforms. Vertiv provides end-to-end power, cooling, integrated infrastructure and services to support AI factories and other data center deployments. As rack power requirements in AI environments scale beyond 300 kilowatts, 800 VDC enables more efficient, centralized power delivery by reducing copper usage, current, and thermal losses. Vertiv's upcoming portfolio will feature centralized rectifiers, high-efficiency DC busways, rack-level DC-DC converters, and DC-compatible backup systems, expanding its broad, end-to-end power management portfolio that already includes a robust AC power train.

"As GPUs evolve to support increasingly complex AI applications at gigawatt scale, power and cooling providers need to be equally innovative to provide energy-efficient and high-density solutions for the AI factories. While the 800 VDC portfolio is new, DC power isn't a new direction for us, it's a continuation of what we've already done at scale," said Scott Armul, executive vice president of global portfolio and business units at Vertiv. "We've spent decades deploying higher-voltage DC architectures across global telecom, industrial, and data center applications. We're entering this transition from a position of strength and bringing real-world experience to meet the demands of the AI factory."

Vertiv's experience in DC power spans more than two decades of ±400 VDC deployments, broadened by strategic acquisitions during the early 2000s. These solutions support critical loads in global telecom networks, integrated microgrids, and mission-critical facilities. This foundation establishes Vertiv as a trusted leader in the safe design, deployment, and operation of higher-voltage DC architectures, with proven scale, portfolio, and long-term serviceability.

"It is important development and opportunity for Vertiv to march next level journey of High Density Accelerated Computing Infrastructure," said Subhasis Majumder, VP/GM at Vertiv India. "We have been in DC Power space for more than two decades serving and Industrial segment. With this collaboration, we are enhancing our portfolio to support Innovative AI Factory at large scale with minimising energy losses."

Designed for homogeneous AI zones in hyperscale environments, Vertiv's 800 VDC portfolio is a key pillar of its "unit of compute" strategy -- a systems-level design engineered to enable all infrastructure components to interoperate as one modular and scalable system, matching the infrastructure demands of next-generation GPUs. Vertiv's support for both AC and DC architectures is a strategic differentiator in the evolving AI data center landscape.

NVIDIA announces a new 800V high-voltage DC power distribution system to meet the increasing power demands of AI data centers, collaborating with major semiconductor companies to revolutionize data center infrastructure.

NVIDIA's Power Innovation for AI Data Centers

NVIDIA, the AI giant, is set to revolutionize data center power infrastructure with its newly announced 800V high-voltage DC (HVDC) power distribution system. This groundbreaking technology aims to address the escalating power demands of AI computing, which are pushing current systems to their limits [1].

The Need for Power Innovation

As AI chips become increasingly powerful, they require more electricity, causing existing power systems to struggle. NVIDIA reports that the current 54V DC power distribution system is reaching its capacity as racks begin to exceed 200 kilowatts [1]. Texas Instruments, one of NVIDIA's partners on the project, predicts that power requirements per data center rack will surge from 100kW today to more than 1MW in the near future [3].

The 800V HVDC Solution

NVIDIA's proposed 800V HVDC system offers several advantages over the current 54V DC system:

- Increased Power Delivery: The new system can increase power delivery by five times or more, supporting 1MW server racks and beyond [1].

- Space Efficiency: Because the 800V HVDC system connects near the site's 13.8kV AC power source and moves power supply modules out of the server racks, it frees up rack space for more compute and networking modules [1][2].

- Reduced Copper Usage: The new system can potentially reduce copper requirements by 45%, addressing the massive copper demand of current systems [1].

- Improved Efficiency: By eliminating multiple AC to DC and DC to DC transformations, the 800V HVDC system streamlines power transmission within data centers [1].
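
The efficiency argument can be sketched numerically: when conversion stages sit in series, the end-to-end efficiency is the product of the per-stage efficiencies, so removing stages raises the total. The per-stage values below are hypothetical and chosen only for illustration; none of the sources publish stage-level figures, and the function name is likewise an assumption.

```python
from math import prod
from typing import Iterable


def chain_efficiency(stage_efficiencies: Iterable[float]) -> float:
    """End-to-end efficiency of power conversion stages connected in series."""
    stages = list(stage_efficiencies)
    for eff in stages:
        if not 0.0 < eff <= 1.0:
            raise ValueError(f"stage efficiency must be in (0, 1], got {eff}")
    return prod(stages)


# Hypothetical per-stage efficiencies (illustrative, not from the sources).
many_stages = [0.98, 0.97, 0.98, 0.97]  # several AC-DC and DC-DC conversions
fewer_stages = [0.98, 0.97]             # a consolidated conversion path

if __name__ == "__main__":
    print(f"Four stages: {chain_efficiency(many_stages):.1%}")
    print(f"Two stages:  {chain_efficiency(fewer_stages):.1%}")
```

With these assumed values, each removed stage recovers a few percent of the input power, which at megawatt rack scale means tens of kilowatts less heat per rack.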

Collaborative Effort and Implementation

NVIDIA is not working alone on this ambitious project. The company has partnered with semiconductor giants Infineon, Texas Instruments, and Navitas to develop the 800V HVDC system [2]. These collaborations aim to create robust and diverse power solutions to meet NVIDIA's goals.

The implementation of the new power system will occur in phases:

- Initially, power supply units (PSUs) will be placed beside the rack in a "sidecar" configuration.

- Over time, NVIDIA plans to integrate these power modules into a centralized power delivery system for entire AI data centers [2].

Industry Impact and Future Outlook

The shift to 800V HVDC represents a significant leap in data center technology. It presents both challenges and opportunities for the industry:

- Competitive Barrier: The new system requires an expansive product lineup and advanced technical capabilities, potentially creating a high barrier to entry for smaller companies [2].

- Infrastructure Alignment: Companies like Vertiv are aligning their products with NVIDIA's AI roadmap, developing 800 VDC power portfolios to support next-generation compute platforms [4][5].

- Long-term Vision: While full deployment may take years, the industry consensus is that this new architecture represents a significant advancement in AI data center design [2].

As AI continues to drive technological innovation, NVIDIA's 800V HVDC system stands poised to reshape the landscape of data center infrastructure, enabling the next generation of high-performance AI computing.

Summarized by Navi
