Nvidia CEO Claims AI Chips Outpacing Moore's Law, Promising Faster AI Progress

2 Sources

[1]

Exclusive: Nvidia CEO says his AI chips are improving faster than Moore's Law

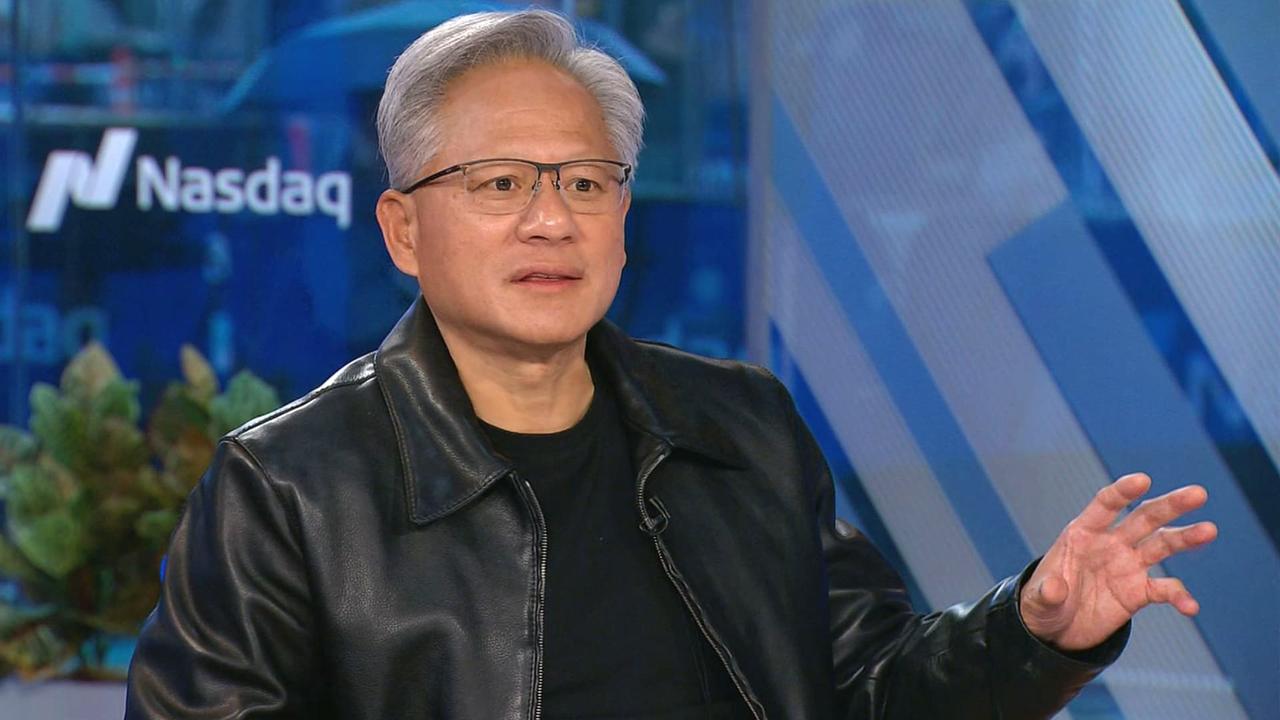

Nvidia CEO Jensen Huang says the performance of his company's AI chips is advancing faster than the historical rate set by Moore's Law, the rubric that drove computing progress for decades. "Our systems are progressing way faster than Moore's Law," Huang said in an interview with TechCrunch on Tuesday, the morning after he delivered a keynote to a 10,000-person crowd at CES in Las Vegas.

Coined by Intel co-founder Gordon Moore in 1965, Moore's Law predicted that the number of transistors on computer chips would roughly double every year (a pace Moore revised to every two years in 1975), essentially doubling the performance of those chips. The prediction mostly panned out, driving rapid advances in capability and plummeting costs for decades. In recent years, however, Moore's Law has slowed down.

Huang claims that Nvidia's AI chips are moving at an accelerated pace of their own; the company says its latest datacenter superchip is more than 30x faster at running AI inference workloads than its previous generation. "We can build the architecture, the chip, the system, the libraries, and the algorithms all at the same time," said Huang. "If you do that, then you can move faster than Moore's Law, because you can innovate across the entire stack."

The bold claim from Nvidia's CEO comes at a time when many are questioning whether AI's progress has stalled. Leading AI labs, such as Google, OpenAI, and Anthropic, use Nvidia's AI chips to train and run their AI models, so advancements in these chips would likely translate into further progress in AI model capabilities.

This isn't the first time Huang has suggested Nvidia is surpassing Moore's Law. On a podcast in November, Huang suggested the AI world is on pace for "hyper Moore's Law." Huang rejects the idea that AI progress is slowing.
Instead, he claims there are now three active AI scaling laws: pre-training, the initial training phase where AI models learn patterns from large amounts of data; post-training, which fine-tunes an AI model's answers using methods such as human feedback; and test-time compute, which occurs during the inference phase and gives an AI model more time to "think" after each question.

"Moore's Law was so important in the history of computing because it drove down computing costs," Huang told TechCrunch. "The same thing is going to happen with inference where we drive up the performance, and as a result, the cost of inference is going to be less." (Of course, Nvidia has grown to be the most valuable company on Earth by riding the AI boom, so it benefits Huang to say so.)

Nvidia's H100s were the chip of choice for tech companies looking to train AI models, but now that tech companies are focusing more on inference, some have questioned whether Nvidia's expensive chips will stay on top.

AI models that use test-time compute are expensive to run today. There's concern that OpenAI's o3 model, which uses a scaled-up version of test-time compute, would be too expensive for most people to use. For example, OpenAI spent nearly $20 per task using o3 to achieve human-level scores on a test of general intelligence, while a ChatGPT Plus subscription costs $20 for an entire month of usage.

Huang held up Nvidia's latest datacenter superchip, the GB200 NVL72, onstage like a shield during Monday's keynote. Nvidia says the chip is 30 to 40x faster at running AI inference workloads than its previous best-selling chip, the H100. Huang says this performance jump means that AI reasoning models like OpenAI's o3, which use a significant amount of compute during the inference phase, will become cheaper over time. Overall, Huang says he's focused on creating more performant chips, and that more performant chips lead to lower prices in the long run.
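Huang's argument that faster chips lower the cost of inference can be illustrated with simple arithmetic. The sketch below is a hypothetical cost model, not Nvidia's or OpenAI's pricing: it assumes the hourly cost of the hardware stays fixed, so the cost of one task falls in proportion to the speedup. It plugs in the figures reported above (nearly $20 per o3 task, a $20 monthly ChatGPT Plus subscription, and a claimed 30x to 40x inference speedup); the function name `cost_per_task` is illustrative only.

```python
# Hypothetical cost model (an assumption, not from the source): with a fixed
# hourly hardware cost, finishing a task N times faster makes it N times cheaper.

def cost_per_task(baseline_cost: float, speedup: float) -> float:
    """Per-task cost after a throughput speedup, assuming fixed hourly hardware cost."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_cost / speedup


O3_COST_PER_TASK = 20.0      # reported: nearly $20 per o3 task
CHATGPT_PLUS_MONTHLY = 20.0  # reported: $20 per month

# Claimed GB200 NVL72 inference speedup over the H100: 30x to 40x
for speedup in (30, 40):
    new_cost = cost_per_task(O3_COST_PER_TASK, speedup)
    tasks_per_month = CHATGPT_PLUS_MONTHLY / new_cost
    print(f"{speedup}x faster: ${new_cost:.2f} per task, "
          f"~{tasks_per_month:.0f} tasks for ${CHATGPT_PLUS_MONTHLY:.0f}")
```

Under this assumption a 30x to 40x speedup would bring a $20 task down to roughly $0.50 to $0.67. Real prices also depend on hardware cost, energy, utilization, and margins, none of which this sketch models.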
"The direct and immediate solution for test-time compute, both in performance and cost affordability, is to increase our computing capability," Huang told TechCrunch. He noted that in the long term, AI reasoning models could be used to create better data for the pre-training and post-training of AI models.

We've certainly seen the price of AI models plummet in the last year, in part due to computing breakthroughs from hardware companies like Nvidia. Huang says that's a trend he expects to continue with AI reasoning models, even though the first versions we've seen from OpenAI have been rather expensive.

More broadly, Huang claimed his AI chips today are 1,000x better than what the company made 10 years ago. That's a much faster pace than the two-year doubling commonly cited for Moore's Law, and Huang says he sees no sign of it stopping soon.
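Whether 1,000x in ten years is "much faster" than Moore's Law depends on which doubling period is used. The sketch below compares Huang's claimed figure with Moore's original one-year doubling (1965) and the two-year doubling he adopted in 1975. It treats the 1,000x figure as a single compound growth factor, which is a simplifying assumption, since the source does not say how "better" was measured; the helper names are illustrative.

```python
def growth_over(years: float, doubling_period_years: float) -> float:
    """Total improvement factor after `years` if performance doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def implied_annual_factor(total_factor: float, years: float) -> float:
    """Constant year-over-year multiplier that compounds to `total_factor` over `years`."""
    return total_factor ** (1 / years)


YEARS = 10
CLAIMED = 1_000  # Huang's claim: 1,000x better than 10 years ago

print(f"Claimed: {CLAIMED}x, about {implied_annual_factor(CLAIMED, YEARS):.2f}x per year")
print(f"Doubling every year: {growth_over(YEARS, 1):.0f}x")
print(f"Doubling every two years: {growth_over(YEARS, 2):.0f}x")
```

On these numbers, 1,000x over a decade works out to roughly a doubling every year: about 30 times the two-year-doubling pace (32x), but close to the one-year doubling Moore first proposed (1,024x).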

[2]

Jensen Huang claims Nvidia's AI chips are outpacing Moore's Law

Challenging conventional wisdom, Nvidia CEO Jensen Huang said that his company's AI chips are outpacing the historical performance gains set by Moore's Law. This claim, made during his keynote address at CES in Las Vegas and repeated in an interview, signals a potential paradigm shift in the world of computing and artificial intelligence.

For decades, Moore's Law, coined by Intel co-founder Gordon Moore in 1965, has been the driving force behind computing progress. It predicted that the number of transistors on computer chips would roughly double every year, leading to exponential growth in performance and plummeting costs. In recent years, however, the trend has shown signs of slowing.

Huang painted a different picture of Nvidia's AI chips. "Our systems are progressing way faster than Moore's Law," he told TechCrunch, pointing to the company's latest data center superchip, which is claimed to be more than 30 times faster for AI inference workloads than its predecessor.

Huang attributed this accelerated progress to Nvidia's comprehensive approach to chip development. "We can build the architecture, the chip, the system, the libraries, and the algorithms all at the same time," he explained. "If you do that, then you can move faster than Moore's Law, because you can innovate across the entire stack."

This strategy has apparently yielded impressive results. Huang claimed that Nvidia's AI chips today are 1,000 times more advanced than what the company produced a decade ago, far outstripping the pace set by Moore's Law.

Rejecting the notion that AI progress is stalling, Huang outlined three active AI scaling laws: pre-training, post-training, and test-time compute. He pointed to the importance of test-time compute, which occurs during the inference phase and allows AI models more time to "think" after each question.
During his CES keynote, Huang showcased Nvidia's latest data center superchip, the GB200 NVL72, touting it as 30 to 40 times faster at AI inference workloads than its predecessor, the H100. This leap in performance, Huang argued, will make expensive AI reasoning models like OpenAI's o3 more affordable over time.

"The direct and immediate solution for test-time compute, both in performance and cost affordability, is to increase our computing capability," Huang said. He added that in the long term, AI reasoning models could be used to create better data for the pre-training and post-training of AI models.

Nvidia's claims come at a crucial time for the AI industry, as companies such as Google, OpenAI, and Anthropic rely on its chips and on continued gains in their performance. Moreover, as the tech industry's focus shifts from training to inference, questions have arisen about whether Nvidia's expensive products will maintain their dominance. Huang's claims suggest that Team Green is not only keeping pace but setting new standards in inference performance and cost-effectiveness.

While the first versions of AI reasoning models like OpenAI's o3 have been expensive to run, Huang expects the trend of plummeting AI model costs to continue, driven by computing breakthroughs from hardware companies like Nvidia.


Nvidia's CEO Jensen Huang asserts that the company's AI chips are advancing faster than Moore's Law, potentially revolutionizing AI capabilities and costs.

Nvidia's AI Chips Surpass Moore's Law

In a bold claim that could reshape the landscape of artificial intelligence and computing, Nvidia CEO Jensen Huang has stated that his company's AI chips are advancing at a pace that surpasses Moore's Law. This assertion, made during a keynote address at CES in Las Vegas and reiterated in an interview with TechCrunch, signals a potential paradigm shift in the progression of AI technology [1][2].

Understanding Moore's Law and Nvidia's Claim

Moore's Law, a principle that has guided computing progress for decades, predicted that the number of transistors on computer chips would roughly double every year (a pace Gordon Moore later revised to every two years), leading to exponential growth in performance. In recent years, however, it has shown signs of slowing [1].

Huang contends that Nvidia's AI chips are breaking this trend:

"Our systems are progressing way faster than Moore's Law," Huang stated, pointing to Nvidia's latest datacenter superchip, which is purportedly more than 30 times faster for AI inference workloads than its predecessor [1].

The Secret Behind Nvidia's Rapid Progress

Huang attributes this accelerated progress to Nvidia's comprehensive approach to chip development:

"We can build the architecture, the chip, the system, the libraries, and the algorithms all at the same time," he explained. "If you do that, then you can move faster than Moore's Law, because you can innovate across the entire stack." [1]

This strategy has apparently yielded impressive results, with Huang claiming that Nvidia's AI chips today are 1,000 times more advanced than what the company produced a decade ago [2].

Implications for AI Progress

Rejecting notions that AI progress is stalling, Huang outlined three active AI scaling laws: pre-training, post-training, and test-time compute. He emphasized the importance of test-time compute, which allows AI models more time to "think" after each question [1][2].

Nvidia's Latest Innovation: The GB200 NVL72

During his CES keynote, Huang showcased Nvidia's latest datacenter superchip, the GB200 NVL72. This chip is claimed to be 30 to 40 times faster at running AI inference workloads than Nvidia's previous best-selling chip, the H100 [1][2].

The Future of AI Costs and Performance

Huang argues that this performance leap will make expensive AI reasoning models, like OpenAI's o3, more affordable over time. "The direct and immediate solution for test-time compute, both in performance and cost affordability, is to increase our computing capability," Huang stated [1].

While the first versions of AI reasoning models have been expensive to run, Huang expects the trend of plummeting AI model costs to continue, driven by computing breakthroughs from hardware companies like Nvidia [1][2].

Industry Impact and Future Prospects

Nvidia's claims come at a crucial time for the AI industry, with major AI companies such as Google, OpenAI, and Anthropic relying on its chips. As the focus in the tech industry shifts from training to inference, questions have arisen about whether Nvidia's expensive products will maintain their dominance [2].

Huang's assertions suggest that Nvidia is not only keeping pace but setting new standards in inference performance and cost-effectiveness, potentially cementing its position as a leader in the AI hardware space for years to come.

Summarized by Navi
