NVIDIA Contributes Blackwell Platform Design to Open Compute Project, Advancing AI Infrastructure

4 Sources

[1]

How NVIDIA's Blackwell Platform is Transforming AI Infrastructure

NVIDIA has made a significant contribution to the Open Compute Project (OCP) by sharing essential components of its Blackwell accelerated computing platform design. This initiative is expected to influence data center technologies, promoting open, efficient, and scalable solutions. By aligning with OCP standards, NVIDIA aims to transform AI infrastructure and enhance its Spectrum-X Ethernet networking platform. By opening up its Blackwell accelerated computing platform design, NVIDIA is not just sharing technology; it's creating an environment where AI infrastructure can thrive in an open, efficient, and scalable way. For those who have experienced the frustration of technological bottlenecks, this move offers a promising glimpse into a future where AI advancements are not only possible but inevitable. But what does this mean for the everyday tech enthusiast or the industry professional navigating the complexities of AI development? At its core, NVIDIA's initiative is about breaking down silos and building bridges across the tech landscape. By aligning with OCP standards and expanding its Spectrum-X Ethernet networking platform, NVIDIA is setting the stage for a new era of AI performance and efficiency. This isn't just about faster processors or more powerful GPUs; it's about laying the groundwork for the next wave of AI innovations, from large language models to complex data processing tasks. NVIDIA's involvement with the OCP includes the release of critical design elements from the GB200 NVL72 system. These contributions focus on rack architecture and liquid-cooling specifications, which are essential for efficient data center operations. Previously, NVIDIA shared the HGX H100 baseboard design, reinforcing its commitment to open hardware ecosystems. These efforts are anticipated to propel advancements in AI infrastructure, providing a robust foundation for scalable and efficient data center solutions. 
The Spectrum-X Ethernet networking platform is central to NVIDIA's strategy for boosting AI factory performance. By adhering to OCP standards, NVIDIA introduces the ConnectX-8 SuperNICs, which enhance networking speeds and optimize AI workloads. This alignment is vital for developing AI factories capable of meeting the growing demands of AI applications. The ConnectX-8 SuperNICs mark a significant advancement in networking capabilities, facilitating faster and more efficient data processing. NVIDIA's accelerated computing platform is engineered to support the next wave of AI advancements. It features a liquid-cooled system with 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell GPUs, delivering substantial performance improvements, particularly for large language model inference. The platform emphasizes efficiency and scalability, making it crucial for developing advanced AI infrastructure. By using liquid cooling technology, NVIDIA ensures optimal performance and energy efficiency, addressing the increasing demands of AI workloads. NVIDIA's collaboration with over 40 global electronics manufacturers highlights its dedication to simplifying AI factory development. This partnership aims to foster innovation and accelerate the adoption of open computing standards. Additionally, Meta's contribution of the Catalina AI rack architecture to the OCP, based on NVIDIA's platform, underscores the industry's recognition of NVIDIA's technological advancements. These collaborations are instrumental in shaping the future of AI infrastructure, promoting a more open and cooperative approach to technology development. NVIDIA plans to release the ConnectX-8 SuperNICs for OCP 3.0 next year, as part of its ongoing collaboration with industry leaders to advance open computing standards. By continuing to innovate and contribute to the open hardware ecosystem, NVIDIA is set to play a crucial role in the evolution of AI infrastructure. 
These efforts are expected to drive further advancements in data center technologies, paving the way for more efficient and scalable AI solutions. NVIDIA's strategic move to open hardware through its Blackwell platform contribution to the OCP represents a significant step forward in the evolution of AI infrastructure. By sharing key design elements and collaborating with industry leaders, NVIDIA is poised to influence the future of data center technologies, promoting open, efficient, and scalable solutions. As the company continues to innovate and contribute to the open hardware ecosystem, it is expected to play a crucial role in shaping the future of AI infrastructure.

[2]

Nvidia aims to boost Blackwell GPUs by donating platform design to the Open Compute Project - SiliconANGLE

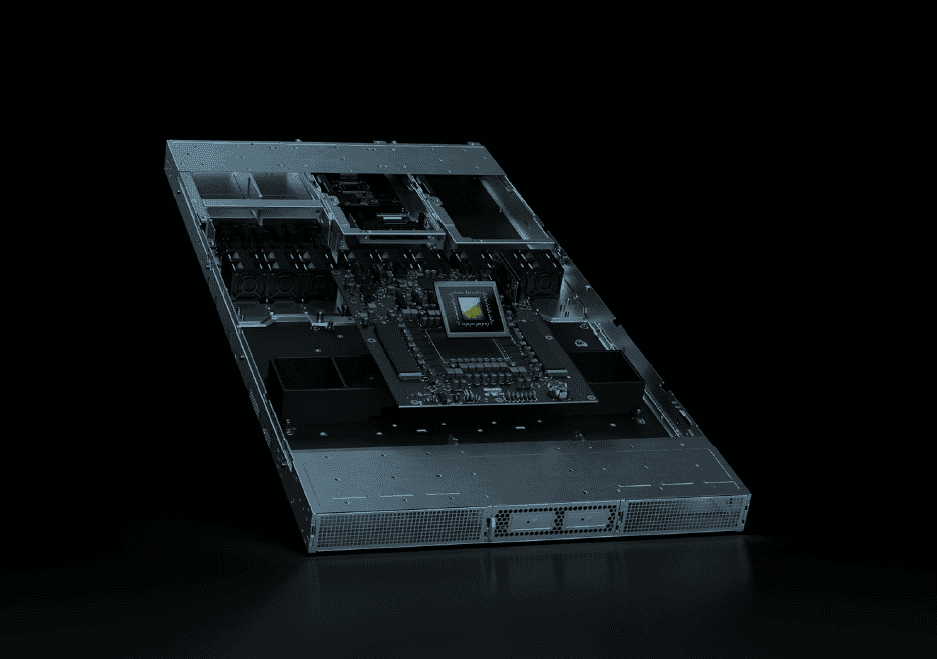

Nvidia Corp. today said it has contributed parts of its Blackwell accelerated computing platform design to the Open Compute Project and broadened support for OCP standards in its Spectrum-X networking fabric. Nvidia hopes the move will help solidify its new line of Blackwell graphics processing units, which are now in production, as a standard for artificial intelligence and high-performance computing. In a separate announcement at the OCP Global Summit, Arm Holdings plc announced a collaboration with Samsung Electronics Co. Ltd.'s Foundry, ADTechnology Co. and South Korean chip startup Rebellions Inc. to develop an AI CPU chiplet platform targeted at cloud, HPC and AI/ML training and inferencing. The elements of the GB200 NVL72 system electro-mechanical design (pictured) that Nvidia will share with OCP include the rack architecture, compute and switch tray mechanicals, liquid-cooling and thermal environment specifications and NVLink cable cartridge volumetrics. NVLink is a high-speed interconnect technology Nvidia developed to enable faster communication between GPUs. The GB200 NVL72 is a liquid-cooled appliance that ships with 36 GB200 Superchips containing a total of 72 Blackwell GPUs. The NVLink domain connects them into a single massive GPU that can provide 130 terabytes-per-second low-latency communications. The GB200 Grace Blackwell Superchip connects two Blackwell Tensor Core GPUs with an Nvidia Grace CPU. The company said the rack-scale machine can conduct large language model inferencing 30 times faster than the predecessor H100 Tensor Core GPU and is 25 times more energy-efficient. 
Nvidia has contributed to OCP for more than a decade, including its 2022 submission of the HGX H100 baseboard design, which is now a de facto standard for AI servers, and its 2023 donation of the ConnectX-7 adapter network interface card design, which is now the foundation design of the OCP Network Interface Card 3.0. Spectrum-X is an Ethernet networking platform built for AI workloads, particularly in data center environments. It combines Nvidia Spectrum-4 Ethernet switches and its BlueField-3 data processing units for low-latency, high-throughput and efficient networking architecture. Nvidia said it remains committed to offering customers an InfiniBand option. The platform will now support OCP's Switch Abstraction Interface and Software for Open Networking in the Cloud standards. The Switch Abstraction Interface standardizes how network operating systems interact with network switch hardware. SONiC is a hardware-independent network software layer that is aimed at cloud infrastructure operators, data centers and network administrators. Nvidia said customers can use Spectrum-X's adaptive routing and telemetry-based congestion control to accelerate Ethernet performance for scale-out AI infrastructure. ConnectX-8 SuperNIC network interface cards for OCP 3.0 will be available next year, enabling organizations to build more flexible networks. "In the last five years, we've seen a better than 20,000-fold increase in the complexity of AI models," said Shar Narasimhan, director of product marketing for Nvidia data center GPUs. "We're also using richer and larger data sets." Nvidia has responded with a system design that shards, or fragments, models across clusters of GPUs linked with a high-speed interconnect so that all processors function as a single GPU. In the GB200 NVL72, each GPU has direct access to every other GPU over a 1.8 terabytes-per-second interconnect. "This enables all of these GPUs to work as a single unified GPU," Narasimhan said. 
Previously, the maximum number of GPUs connected in a single NVLink domain was eight on an HGX H200 baseboard, with a communication speed of 900 gigabytes per second. The GB200 NVL72 increased capacity to 72 Blackwell GPUs communicating at 1.8 terabytes per second, or 36 times faster than the previous high-end Ethernet standard. "One of the key elements was using NVSwitch to keep all of the servers and the compute GPUs close together so we could mount them into a single rack," Narasimhan said. "That allowed us to use copper cabling for NVLink to lower costs and use far less power than fiber optics." Nvidia added 100 pounds of steel reinforcement to the rack to accommodate the dense infrastructure and developed quick-release plumbing and cabling. The NVLink spine was reinforced to hold up to 5,000 copper cables and deliver 120 kW of power, more than double the load of current rack designs. "We're contributing the entire rack with all of the innovation that we performed to reinforce the rack itself, upgrade the NVLinks, liquid cooling and plumbing quick disconnect innovations as well as the manifolds that sit on top of the compute trays and switch trays to deliver direct liquid cooling to each individual tray," Narasimhan said. The Arm-led project will combine Rebellions' Rebel AI accelerator with ADTechnology's Neoverse CSS V3-powered compute chiplet implemented with Samsung Foundry two-nanometer Gate-All-Around advanced process technology. The companies said the chiplet will deliver two to three times the performance and power efficiency of competing architectures when running generative AI workloads. Rebellions earlier this year raised $124 million to fund its engineering efforts as it takes on Nvidia for AI processing.
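
The NVLink bandwidth figures quoted in this source can be cross-checked with simple arithmetic. The sketch below is illustrative only: the constant and function names are invented for this example, and the 400 Gb/s Ethernet baseline is an assumption, since the article does not name the Ethernet standard it compares against.

```python
"""Back-of-the-envelope check of the NVLink figures quoted in the article."""

GPUS_PER_NVLINK_DOMAIN = 72        # GB200 NVL72, per the article
NVLINK_GB_PER_S_PER_GPU = 1800     # 1.8 terabytes per second, per the article
ASSUMED_ETHERNET_GBIT_PER_S = 400  # assumption: the baseline is not named in the article


def aggregate_nvlink_tb_per_s(gpus: int, gb_per_s_per_gpu: int) -> float:
    """Total NVLink bandwidth across the domain, in terabytes per second."""
    return gpus * gb_per_s_per_gpu / 1000


def speedup_over_ethernet(gb_per_s_per_gpu: int, ethernet_gbit_per_s: int) -> float:
    """Per-GPU NVLink bandwidth (converted to gigabits) relative to one Ethernet link."""
    return gb_per_s_per_gpu * 8 / ethernet_gbit_per_s


if __name__ == "__main__":
    total = aggregate_nvlink_tb_per_s(GPUS_PER_NVLINK_DOMAIN, NVLINK_GB_PER_S_PER_GPU)
    ratio = speedup_over_ethernet(NVLINK_GB_PER_S_PER_GPU, ASSUMED_ETHERNET_GBIT_PER_S)
    print(f"Aggregate NVLink bandwidth: {total} TB/s")
    print(f"Per-GPU NVLink vs assumed Ethernet link: {ratio}x")
```

Under these assumptions the aggregate comes to 129.6 TB/s, consistent with the roughly 130 terabytes-per-second figure, and the "36 times faster" claim holds only if the comparison point is a 400 Gb/s Ethernet link.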

[3]

Nvidia contributes Blackwell platform design to the Open Compute Project -- members can build their own custom designs for Blackwell GPUs

Nvidia has contributed its GB200 NVL72 rack and compute/switch tray designs to the Open Compute Project (OCP), enabling OCP members to build their own designs based on Nvidia Blackwell GPUs. The company is sharing key design elements of its high-performance server platform to accelerate the development of open data center platforms that can support Nvidia's power-hungry next-generation GPUs with Nvidia networking. The GB200 NVL72 system with up to 72 GB100 or GB200 GPUs is at the heart of this contribution. Nvidia shares critical electro-mechanical designs, including details about the rack architecture, cooling system, and compute tray components. The GB200 NVL72 system features a modular design based on Nvidia's MGX architecture that connects 36 Grace CPUs and 72 Blackwell GPUs in a rack-scale configuration. This setup provides a 72-GPU NVLink domain, allowing the system to act as a massive single GPU. At the OCP event, Nvidia introduced a new joint reference design of the GB200 NVL72. It was developed with Vertiv, a leading power and cooling solutions provider known for its expertise in high-density compute data centers. This new reference design reduces deployment time for cloud service providers (CSPs) and data centers adopting the Nvidia Blackwell platform. Using this reference architecture, data centers no longer need to create custom power, cooling, or spacing designs specific to the GB200 NVL72. Instead, they can rely on Vertiv's advanced solutions for space-saving power management and energy-efficient cooling. This approach allows data centers to deploy 7MW GB200 NVL72 clusters faster globally, cutting implementation time by as much as 50%. "Nvidia has been a significant contributor to open computing standards for years, including their high-performance computing platform that has been the foundation of our Grand Teton server for the past two years," said Yee Jiun Song, VP of Engineering at Meta. 
"As we progress to meet the increasing computational demands of large-scale artificial intelligence, Nvidia's latest contributions in rack design and modular architecture will help speed up the development and implementation of AI infrastructure across the industry." In addition to hardware contributions, Nvidia is expanding support for OCP standards with its Spectrum-X Ethernet networking platform. By aligning with OCP's community-developed specifications, Nvidia speeds up the connectivity of AI data centers while enabling organizations to maintain software consistency to preserve previous investments. Nvidia's networking advancements include the ConnectX-8 SuperNIC, which will be available for OCP 3.0 next year. These SuperNICs support data speeds up to 800Gb/s, and their programmable packet processing is optimized for large-scale AI workloads, which is expected to help organizations build more flexible, AI-optimized networks. More than 40 electronics manufacturers are working with Nvidia to build its Blackwell platform. Meta, the founder of OCP, is among the notable partners. Meta plans to contribute its Catalina AI rack architecture, based on the GB200 NVL72 system, to the OCP. By collaborating closely with the OCP community, Nvidia is working to ensure its designs and specifications are accessible to a wide range of data center developers. As a result, Nvidia will be able to sell its Blackwell GPUs and ConnectX-8 SuperNIC to companies that rely on OCP standards. "Building on a decade of collaboration with OCP, Nvidia is working alongside industry leaders to shape specifications and designs that can be widely adopted across the entire data center," said Jensen Huang, founder and CEO of Nvidia. "By advancing open standards, we're helping organizations worldwide take advantage of the full potential of accelerated computing and create the AI factories of the future."

[4]

NVIDIA Contributes Blackwell Platform Design to Open Hardware Ecosystem, Accelerating AI Infrastructure Innovation

SAN JOSE, Calif., Oct. 15, 2024 (GLOBE NEWSWIRE) -- OCP Global Summit -- To drive the development of open, efficient and scalable data center technologies, NVIDIA today announced that it has contributed foundational elements of its NVIDIA Blackwell accelerated computing platform design to the Open Compute Project (OCP) and broadened NVIDIA Spectrum-X™ support for OCP standards. At this year's OCP Global Summit, NVIDIA will be sharing key portions of the GB200 NVL72 system electro-mechanical design with the OCP community -- including the rack architecture, compute and switch tray mechanicals, liquid-cooling and thermal environment specifications, and NVLink™ cable cartridge volumetrics -- to support higher compute density and networking bandwidth. NVIDIA has already made several official contributions to OCP across multiple hardware generations, including its HGX™ H100 baseboard design specification, to help provide the ecosystem with a wider choice of offerings from the world's computer makers and expand the adoption of AI. In addition, expanded NVIDIA Spectrum-X Ethernet networking platform alignment with OCP community-developed specifications enables companies to unlock the performance potential of AI factories deploying OCP-recognized equipment while preserving their investments and maintaining software consistency. "Building on a decade of collaboration with OCP, NVIDIA is working alongside industry leaders to shape specifications and designs that can be widely adopted across the entire data center," said Jensen Huang, founder and CEO of NVIDIA. "By advancing open standards, we're helping organizations worldwide take advantage of the full potential of accelerated computing and create the AI factories of the future."

Accelerated Computing Platform for the Next Industrial Revolution

NVIDIA's accelerated computing platform was designed to power a new era of AI. GB200 NVL72 is based on the MGX™ modular architecture, which enables computer makers to quickly and cost-effectively build a vast array of data center infrastructure designs. The liquid-cooled system connects 36 Grace™ CPUs and 72 Blackwell GPUs in a rack-scale design. With a 72-GPU NVLink domain, it acts as a single, massive GPU and delivers 30x faster real-time trillion-parameter large language model inference than the H100 Tensor Core GPU. The Spectrum-X Ethernet networking platform, which now includes the next-generation ConnectX-8 SuperNIC™, supports OCP's Switch Abstraction Interface (SAI) and Software for Open Networking in the Cloud (SONiC) standards. This allows customers to use Spectrum-X's adaptive routing and telemetry-based congestion control to accelerate Ethernet performance for scale-out AI infrastructure. ConnectX-8 SuperNICs feature accelerated networking at speeds of up to 800Gb/s and programmable packet processing engines optimized for massive-scale AI workloads. ConnectX-8 SuperNICs for OCP 3.0 will be available next year, equipping organizations to build highly flexible networks.

Critical Infrastructure for Data Centers

As the world transitions from general-purpose to accelerated and AI computing, data center infrastructure is becoming increasingly complex. To simplify the development process, NVIDIA is working closely with 40+ global electronics makers that provide key components to create AI factories. Additionally, a broad array of partners are innovating and building on top of the Blackwell platform, including Meta, which plans to contribute its Catalina AI rack architecture based on GB200 NVL72 to OCP. This provides computer makers with flexible options to build high compute density systems and meet the growing performance and energy efficiency needs of data centers. 
"NVIDIA has been a significant contributor to open computing standards for years, including their high-performance computing platform that has been the foundation of our Grand Teton server for the past two years," said Yee Jiun Song, vice president of engineering at Meta. "As we progress to meet the increasing computational demands of large-scale artificial intelligence, NVIDIA's latest contributions in rack design and modular architecture will help speed up the development and implementation of AI infrastructure across the industry."

Certain statements in this press release including, but not limited to, statements as to: the benefits, impact, and performance of NVIDIA's products, services, and technologies, including Blackwell accelerated computing platform, Spectrum-X Ethernet networking platform, GB200 NVL72, NVLink, HGX H100, MGX modular architecture, Grace CPUs, H100 Tensor Core GPU, and ConnectX-8 SuperNIC; NVIDIA contributing foundational elements of its Blackwell accelerated computing platform design to the Open Compute Project (OCP) and broadening Spectrum-X support for OCP standards; the benefits and impact of NVIDIA's collaboration with third parties; third parties using or adopting our products or technologies; NVIDIA working alongside industry leaders to shape specifications and designs that can be widely adopted across the entire data center; by advancing open standards, helping organizations worldwide take advantage of the full potential of accelerated computing and create the AI factories of the future; as the world transitions from general-purpose to accelerated and AI computing, data center infrastructure becoming increasingly complex; and the timing and themes of the 2024 OCP Global Summit are forward-looking statements that are subject to risks and uncertainties that could cause results to be materially different than expectations. Important factors that could cause actual results to differ materially include: global economic conditions; our reliance on third parties to manufacture, assemble, package and test our products; the impact of technological development and competition; development of new products and technologies or enhancements to our existing product and technologies; market acceptance of our products or our partners' products; design, manufacturing or software defects; changes in consumer preferences or demands; changes in industry standards and interfaces; unexpected loss of performance of our products or technologies when integrated into systems; as well as other factors detailed from time to time in the most recent reports NVIDIA files with the Securities and Exchange Commission, or SEC, including, but not limited to, its annual report on Form 10-K and quarterly reports on Form 10-Q. Copies of reports filed with the SEC are posted on the company's website and are available from NVIDIA without charge. These forward-looking statements are not guarantees of future performance and speak only as of the date hereof, and, except as required by law, NVIDIA disclaims any obligation to update these forward-looking statements to reflect future events or circumstances. Many of the products and features described herein remain in various stages and will be offered on a when-and-if-available basis. The statements above are not intended to be, and should not be interpreted as a commitment, promise, or legal obligation, and the development, release, and timing of any features or functionalities described for our products is subject to change and remains at the sole discretion of NVIDIA. NVIDIA will have no liability for failure to deliver or delay in the delivery of any of the products, features or functions set forth herein.

© 2024 NVIDIA Corporation. All rights reserved. NVIDIA, the NVIDIA logo, ConnectX, Grace, HGX, MGX, Spectrum-X, NVLink and SuperNIC are trademarks and/or registered trademarks of NVIDIA Corporation in the U.S. and other countries. 
Other company and product names may be trademarks of the respective companies with which they are associated. Features, pricing, availability and specifications are subject to change without notice. A photo accompanying this announcement is available at https://www.globenewswire.com/NewsRoom/AttachmentNg/a75e1ec2-a3aa-4833-a1fc-65420becb4cf

NVIDIA has shared key components of its Blackwell accelerated computing platform design with the Open Compute Project (OCP), aiming to promote open, efficient, and scalable data center solutions for AI infrastructure.

NVIDIA's Contribution to Open Compute Project

NVIDIA has made a significant move in the AI infrastructure landscape by contributing key elements of its Blackwell accelerated computing platform design to the Open Compute Project (OCP). This initiative aims to drive the development of open, efficient, and scalable data center technologies [1][2][3].

Key Components Shared

The contribution includes critical design elements from the GB200 NVL72 system, such as:

- Rack architecture

- Compute and switch tray mechanicals

- Liquid-cooling and thermal environment specifications

- NVLink cable cartridge volumetrics [1][3]

These components are essential for efficient data center operations, particularly in supporting high-density compute environments required for advanced AI workloads.

Blackwell Platform Specifications

The GB200 NVL72 system, at the heart of this contribution, is a liquid-cooled appliance featuring:

- 36 NVIDIA Grace CPUs

- 72 Blackwell GPUs

- NVLink domain connecting GPUs into a single massive GPU

- 130 terabytes-per-second low-latency communications [2][3]
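
The CPU and GPU counts above follow from the superchip composition described in source [2], where each GB200 Grace Blackwell Superchip pairs one Grace CPU with two Blackwell GPUs. A minimal sketch of that arithmetic, with class and function names that are purely illustrative:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """One GB200 Superchip as described in source [2]; the class itself is hypothetical."""
    grace_cpus: int = 1
    blackwell_gpus: int = 2


def rack_totals(superchips: int, chip: Superchip = Superchip()) -> tuple[int, int]:
    """Return (total Grace CPUs, total Blackwell GPUs) for a rack of superchips."""
    return superchips * chip.grace_cpus, superchips * chip.blackwell_gpus


if __name__ == "__main__":
    cpus, gpus = rack_totals(36)  # 36 superchips per GB200 NVL72, per source [2]
    print(f"{cpus} Grace CPUs, {gpus} Blackwell GPUs")
```

With 36 superchips per rack, this yields the 36 Grace CPUs and 72 Blackwell GPUs listed above.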

This system is designed to deliver substantial performance improvements, particularly for large language model inference, boasting 30 times faster performance than its predecessor, the H100 Tensor Core GPU [2].

Spectrum-X Ethernet Networking Platform

NVIDIA has also expanded support for OCP standards in its Spectrum-X Ethernet networking platform. This includes:

- Alignment with OCP's Switch Abstraction Interface (SAI) and Software for Open Networking in the Cloud (SONiC) standards

- Introduction of ConnectX-8 SuperNICs, supporting data speeds up to 800Gb/s

- Optimization for large-scale AI workloads [1][2][3]

Industry Collaboration and Impact

NVIDIA's initiative has garnered support from various industry players:

- Collaboration with over 40 global electronics manufacturers

- Partnership with Vertiv to develop a joint reference design for the GB200 NVL72

- Meta's contribution of its Catalina AI rack architecture, based on NVIDIA's platform, to the OCP [1][3][4]

These collaborations aim to accelerate the adoption of open computing standards and simplify AI factory development.

Future Implications

NVIDIA's contribution is expected to have far-reaching effects on the AI infrastructure landscape:

- Enabling OCP members to build custom designs based on Blackwell GPUs

- Potentially reducing deployment time for cloud service providers and data centers by up to 50%

- Accelerating the development and implementation of AI infrastructure across the industry [3][4]

As the world transitions from general-purpose to accelerated and AI computing, NVIDIA's open hardware initiative is poised to play a crucial role in shaping the future of data center technologies and AI infrastructure.

Summarized by Navi
