Nvidia Launches DGX Cloud Lepton: A Marketplace for AI Computing Power

7 Sources

[1]

Nvidia software aims to create marketplace for AI computing power

SAN FRANCISCO/TAIPEI, May 19 (Reuters) - Nvidia (NVDA.O) announced a new software platform on Monday that will create a marketplace for cloud-based artificial intelligence chips. Nvidia's graphics processors, or GPUs, dominate the market for training AI models and a raft of new cloud players called "neoclouds" such as CoreWeave and Nebius Group have emerged to specialize in renting out Nvidia's chips to software developers. The Santa Clara, California-based company announced the new tool called Lepton that lets cloud computing companies sell GPU capacity in one spot. In addition to CoreWeave (CRWV.O) and Nebius, other firms joining the Lepton platform are Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nscale, SoftBank Corp and Yotta Data Services. Nvidia cloud vice president Alexis Bjorlin said that despite demand surging for chips at startups and large companies, the process of finding available chips has been "very manual". "It's almost like everyone's calling everyone for what compute capacity is available," Bjorlin told Reuters in an interview. "We're just trying to make it seamless, because it enables the ecosystem to grow and develop, and it enables all of the clouds - the global clouds and the new cloud providers - access to Nvidia's entire developer ecosystem." Absent so far from Nvidia's Lepton partner list are major cloud providers such as Microsoft (MSFT.O), Amazon Web Services (AMZN.O) or Alphabet's (GOOGL.O) Google. Bjorlin said the system is designed for them to be able to sell their capacity on the marketplace if they choose. Lepton will eventually allow developers to search for Nvidia chips located in specific countries to meet data storage requirements, Bjorlin said. It will also allow companies that already own some Nvidia chips to more seamlessly search for more to rent.
Nvidia did not disclose what the business model will be for the new software platform or say whether it would charge commissions or fees to either developers or clouds. However, Bjorlin said developers will "still retain their own relationship to the underlying compute providers, so they've contracted directly with them." Reporting by Stephen Nellis in San Francisco and Max A. Cherney in Taipei; Editing by Sam Holmes

[2]

NVIDIA Announces DGX Cloud Lepton to Connect Developers to NVIDIA's Global Compute Ecosystem

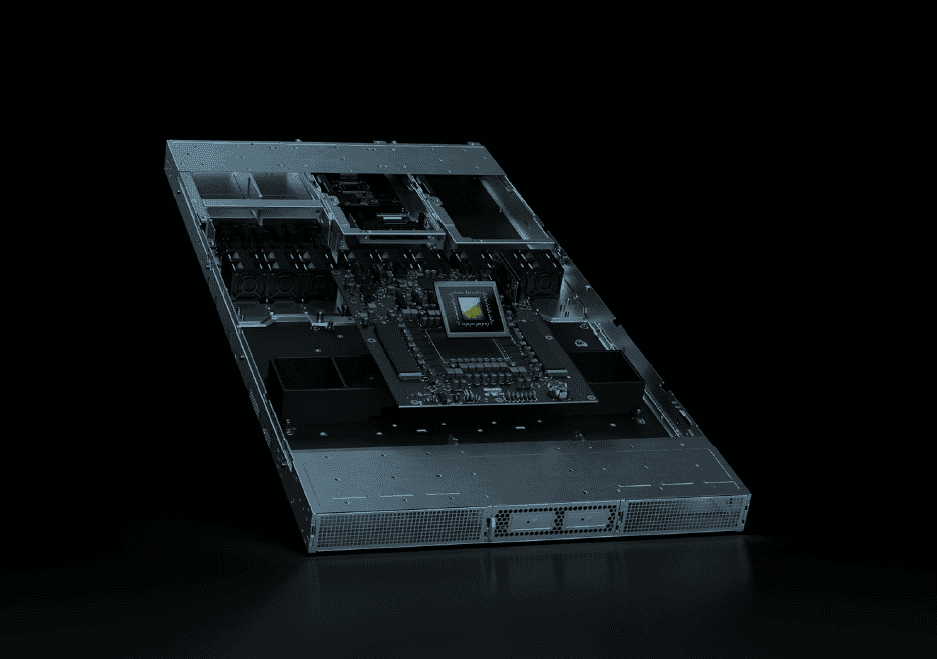

COMPUTEX -- NVIDIA today announced NVIDIA DGX Cloud Lepton™ -- an AI platform with a compute marketplace that connects the world's developers building agentic and physical AI applications with tens of thousands of GPUs, available from a global network of cloud providers. To meet the demand for AI, NVIDIA Cloud Partners (NCPs) including CoreWeave, Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nebius, Nscale, SoftBank Corp. and Yotta Data Services will offer NVIDIA Blackwell and other NVIDIA architecture GPUs on the DGX Cloud Lepton marketplace. Developers can tap into GPU compute capacity in specific regions for both on-demand and long-term computing, supporting strategic and sovereign AI operational requirements. Leading cloud service providers and GPU marketplaces are expected to also participate in the DGX Cloud Lepton marketplace. "NVIDIA DGX Cloud Lepton connects our network of global GPU cloud providers with AI developers," said Jensen Huang, founder and CEO of NVIDIA. "Together with our NCPs, we're building a planetary-scale AI factory." DGX Cloud Lepton helps address the critical challenge of securing reliable, high-performance GPU resources by unifying access to cloud AI services and GPU capacity across the NVIDIA compute ecosystem. The platform integrates with the NVIDIA software stack, including NVIDIA NIM™ and NeMo™ microservices, NVIDIA Blueprints and NVIDIA Cloud Functions, to accelerate and simplify the development and deployment of AI applications. For cloud providers, DGX Cloud Lepton provides management software that delivers real-time GPU health diagnostics and automates root-cause analysis, eliminating manual operations and reducing downtime. Key benefits of the platform include:

A New Bar for AI Cloud Performance

NVIDIA today also announced NVIDIA Exemplar Clouds to help NCPs enhance security, usability, performance and resiliency, using NVIDIA's expertise, reference hardware and software and operational tools.
NVIDIA Exemplar Clouds tap into NVIDIA DGX™ Cloud Benchmarking, a comprehensive suite of tools and recipes for optimizing workload performance on AI platforms and quantifying the relationship between cost and performance. Yotta Data Services is the first NCP in the Asia-Pacific region to join the NVIDIA Exemplar Cloud initiative.

[3]

Nvidia software aims to create marketplace for AI computing power

Nvidia announced a new software platform on Monday that will create a marketplace for cloud-based artificial intelligence chips. Nvidia's graphics processors, or GPUs, dominate the market for training AI models and a raft of new cloud players called "neoclouds" such as CoreWeave and Nebius Group have emerged to specialize in renting out Nvidia's chips to software developers. The Santa Clara, California-based company announced the new tool called Lepton that lets cloud computing companies sell GPU capacity in one spot. In addition to CoreWeave and Nebius, other firms joining the Lepton platform are Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nscale, SoftBank Corp and Yotta Data Services. Nvidia cloud vice president Alexis Bjorlin said that despite demand surging for chips at startups and large companies, the process of finding available chips has been "very manual". "It's almost like everyone's calling everyone for what compute capacity is available," Bjorlin told Reuters in an interview. "We're just trying to make it seamless, because it enables the ecosystem to grow and develop, and it enables all of the clouds - the global clouds and the new cloud providers - access to Nvidia's entire developer ecosystem." Absent so far from Nvidia's Lepton partner list are major cloud providers such as Microsoft, Amazon Web Services or Alphabet's Google. Bjorlin said the system is designed for them to be able to sell their capacity on the marketplace if they choose.
Lepton will eventually allow developers to search for Nvidia chips located in specific countries to meet data storage requirements, Bjorlin said. It will also allow companies that already own some Nvidia chips to more seamlessly search for more to rent. Nvidia did not disclose what the business model will be for the new software platform or say whether it would charge commissions or fees to either developers or clouds. However, Bjorlin said developers will "still retain their own relationship to the underlying compute providers, so they've contracted directly with them."

[4]

NVIDIA Announces DGX Cloud Lepton to Connect Developers to NVIDIA's Global Compute Ecosystem - NVIDIA (NASDAQ:NVDA)

- CoreWeave, Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nebius, Nscale, SoftBank Corp. and Yotta Data Services to Bring Tens of Thousands of GPUs to DGX Cloud Lepton Marketplace
- NVIDIA Exemplar Clouds Raise the Performance Bar for NVIDIA Cloud Partners

TAIPEI, Taiwan, May 19, 2025 (GLOBE NEWSWIRE) -- COMPUTEX -- NVIDIA today announced NVIDIA DGX Cloud Lepton™ -- an AI platform with a compute marketplace that connects the world's developers building agentic and physical AI applications with tens of thousands of GPUs, available from a global network of cloud providers. To meet the demand for AI, NVIDIA Cloud Partners (NCPs) including CoreWeave, Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nebius, Nscale, SoftBank Corp. and Yotta Data Services will offer NVIDIA Blackwell and other NVIDIA architecture GPUs on the DGX Cloud Lepton marketplace. Developers can tap into GPU compute capacity in specific regions for both on-demand and long-term computing, supporting strategic and sovereign AI operational requirements. Leading cloud service providers and GPU marketplaces are expected to also participate in the DGX Cloud Lepton marketplace. "NVIDIA DGX Cloud Lepton connects our network of global GPU cloud providers with AI developers," said Jensen Huang, founder and CEO of NVIDIA. "Together with our NCPs, we're building a planetary-scale AI factory." DGX Cloud Lepton helps address the critical challenge of securing reliable, high-performance GPU resources by unifying access to cloud AI services and GPU capacity across the NVIDIA compute ecosystem. The platform integrates with the NVIDIA software stack, including NVIDIA NIM™ and NeMo™ microservices, NVIDIA Blueprints and NVIDIA Cloud Functions, to accelerate and simplify the development and deployment of AI applications. For cloud providers, DGX Cloud Lepton provides management software that delivers real-time GPU health diagnostics and automates root-cause analysis, eliminating manual operations and reducing downtime.
Key benefits of the platform include:

- Improved productivity and flexibility: Offers a unified experience across development, training and inference, helping boost productivity. Developers can purchase GPU capacity directly from participating cloud providers through the marketplace or bring their own compute clusters, giving them greater flexibility and control.
- Frictionless deployment: Enables deployment of AI applications across multi-cloud and hybrid environments with minimal operational burden, using integrated services for inference, testing and training workloads.
- Agility and sovereignty: Gives developers quick access to GPU resources in specific regions, enabling compliance with data sovereignty regulations and meeting low-latency requirements for sensitive workloads.
- Predictable performance: Provides participating cloud providers enterprise-grade performance, reliability and security, ensuring a consistent user experience.

A New Bar for AI Cloud Performance

NVIDIA today also announced NVIDIA Exemplar Clouds to help NCPs enhance security, usability, performance and resiliency, using NVIDIA's expertise, reference hardware and software and operational tools. NVIDIA Exemplar Clouds tap into NVIDIA DGX™ Cloud Benchmarking, a comprehensive suite of tools and recipes for optimizing workload performance on AI platforms and quantifying the relationship between cost and performance. Yotta Data Services is the first NCP in the Asia-Pacific region to join the NVIDIA Exemplar Cloud initiative.

Availability

Developers can sign up for early access to NVIDIA DGX Cloud Lepton. Watch the COMPUTEX keynote from Huang and learn more at NVIDIA GTC Taipei.

About NVIDIA

NVIDIA (NASDAQ: NVDA) is the world leader in accelerated computing.
For further information, contact: Natalie Hereth NVIDIA Corporation +1-360-581-1088 [email protected] Certain statements in this press release including, but not limited to, statements as to: the benefits, impact, performance and availability of NVIDIA's products, services; NVIDIA's collaborations with third parties and the benefits and impact thereof; third parties using or adopting our products and technologies, the benefits and impact thereof; together with cloud partners, NVIDIA building a virtual global AI factory and additional regional cloud providers being added to the marketplace in the coming months are forward-looking statements within the meaning of Section 27A of the Securities Act of 1933, as amended, and Section 21E of the Securities Exchange Act of 1934, as amended, which are subject to the "safe harbor" created by those sections and that are subject to risks and uncertainties that could cause results to be materially different than expectations. Important factors that could cause actual results to differ materially include: global economic conditions; our reliance on third parties to manufacture, assemble, package and test our products; the impact of technological development and competition; development of new products and technologies or enhancements to our existing product and technologies; market acceptance of our products or our partners' products; design, manufacturing or software defects; changes in consumer preferences or demands; changes in industry standards and interfaces; unexpected loss of performance of our products or technologies when integrated into systems; as well as other factors detailed from time to time in the most recent reports NVIDIA files with the Securities and Exchange Commission, or SEC, including, but not limited to, its annual report on Form 10-K and quarterly reports on Form 10-Q. Copies of reports filed with the SEC are posted on the company's website and are available from NVIDIA without charge. 
These forward-looking statements are not guarantees of future performance and speak only as of the date hereof, and, except as required by law, NVIDIA disclaims any obligation to update these forward-looking statements to reflect future events or circumstances. Many of the products and features described herein remain in various stages and will be offered on a when-and-if-available basis. The statements above are not intended to be, and should not be interpreted as a commitment, promise, or legal obligation, and the development, release, and timing of any features or functionalities described for our products is subject to change and remains at the sole discretion of NVIDIA. NVIDIA will have no liability for failure to deliver or delay in the delivery of any of the products, features or functions set forth herein. © 2025 NVIDIA Corporation. All rights reserved. NVIDIA, the NVIDIA logo, DGX, DGX Cloud Lepton, NeMo and NVIDIA NIM are trademarks and/or registered trademarks of NVIDIA Corporation in the U.S. and other countries. Other company and product names may be trademarks of the respective companies with which they are associated. Features, pricing, availability and specifications are subject to change without notice.

[5]

Nvidia Launches GPU Marketplace to Broaden Access to Its Chips | PYMNTS.com

The move signals a strategic shift for Nvidia, which is now building direct relationships with developers to strengthen its role in the fast-growing AI infrastructure ecosystem. Chipmaker Nvidia announced Sunday (May 18) that it is launching an artificial intelligence marketplace for developers to tap an expanded list of graphics processing unit (GPU) cloud providers in addition to hyperscalers. Called DGX Cloud Lepton, the service acts as a unified interface linking developers to a decentralized network of cloud providers that offer Nvidia's GPUs for AI workloads. Typically, developers must rely on cloud hyperscalers like Amazon Web Services, Microsoft Azure or Google Cloud to access GPUs. However, with GPUs in high demand, Nvidia seeks to open the availability of GPUs from an expanded roster of cloud providers beyond hyperscalers. When one cloud provider has some idle GPUs in between jobs, these chips will be available in the marketplace for another developer to tap. The marketplace will include GPU cloud providers CoreWeave, Crusoe, Lambda, SoftBank and others, according to a Sunday press release. The move comes as Nvidia looks to address growing frustration among startups, enterprises and researchers over limited GPU availability. With AI model training requiring vast compute resources -- especially for large language models and computer vision systems -- developers often face long wait times or capacity shortages. DGX Cloud Lepton fits into the future, functioning as a discovery tool and marketplace, enabling users to compare and select from various cloud GPU vendors based on availability, cost and other preferences. Developers retain full autonomy over their provider choices, and the company does not restrict or intermediate those relationships, The Wall Street Journal reported Monday (May 19). This direct approach to developers also signals a strategic shift for Nvidia. 
Traditionally, the company worked behind the scenes with cloud providers who would, in turn, offer access to Nvidia hardware. Now, Nvidia is cultivating its own relationship with developers and enterprise customers, a move that could strengthen its influence in the growing AI services ecosystem.

[6]

NVIDIA Announces DGX Cloud Lepton to Connect Developers to NVIDIA's Global Compute Ecosystem

TAIPEI, Taiwan, May 19, 2025 (GLOBE NEWSWIRE) -- COMPUTEX -- NVIDIA today announced NVIDIA DGX Cloud Lepton™ -- an AI platform with a compute marketplace that connects the world's developers building agentic and physical AI applications with tens of thousands of GPUs, available from a global network of cloud providers. To meet the demand for AI, NVIDIA Cloud Partners (NCPs) including CoreWeave, Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nebius, Nscale, SoftBank Corp. and Yotta Data Services will offer NVIDIA Blackwell and other NVIDIA architecture GPUs on the DGX Cloud Lepton marketplace. Developers can tap into GPU compute capacity in specific regions for both on-demand and long-term computing, supporting strategic and sovereign AI operational requirements. Leading cloud service providers and GPU marketplaces are expected to also participate in the DGX Cloud Lepton marketplace. "NVIDIA DGX Cloud Lepton connects our network of global GPU cloud providers with AI developers," said Jensen Huang, founder and CEO of NVIDIA. "Together with our NCPs, we're building a planetary-scale AI factory." DGX Cloud Lepton helps address the critical challenge of securing reliable, high-performance GPU resources by unifying access to cloud AI services and GPU capacity across the NVIDIA compute ecosystem. The platform integrates with the NVIDIA software stack, including NVIDIA NIM™ and NeMo™ microservices, NVIDIA Blueprints and NVIDIA Cloud Functions, to accelerate and simplify the development and deployment of AI applications. For cloud providers, DGX Cloud Lepton provides management software that delivers real-time GPU health diagnostics and automates root-cause analysis, eliminating manual operations and reducing downtime. Key benefits of the platform include:

A New Bar for AI Cloud Performance

NVIDIA today also announced NVIDIA Exemplar Clouds to help NCPs enhance security, usability, performance and resiliency, using NVIDIA's expertise, reference hardware and software and operational tools.
NVIDIA Exemplar Clouds tap into NVIDIA DGX™ Cloud Benchmarking, a comprehensive suite of tools and recipes for optimizing workload performance on AI platforms and quantifying the relationship between cost and performance.

Certain statements in this press release including, but not limited to, statements as to: the benefits, impact, performance and availability of NVIDIA's products, services; NVIDIA's collaborations with third parties and the benefits and impact thereof; third parties using or adopting our products and technologies, the benefits and impact thereof; together with cloud partners, NVIDIA building a virtual global AI factory and additional regional cloud providers being added to the marketplace in the coming months are forward-looking statements within the meaning of Section 27A of the Securities Act of 1933, as amended, and Section 21E of the Securities Exchange Act of 1934, as amended, which are subject to the "safe harbor" created by those sections and that are subject to risks and uncertainties that could cause results to be materially different than expectations. Important factors that could cause actual results to differ materially include: global economic conditions; our reliance on third parties to manufacture, assemble, package and test our products; the impact of technological development and competition; development of new products and technologies or enhancements to our existing product and technologies; market acceptance of our products or our partners' products; design, manufacturing or software defects; changes in consumer preferences or demands; changes in industry standards and interfaces; unexpected loss of performance of our products or technologies when integrated into systems; as well as other factors detailed from time to time in the most recent reports NVIDIA files with the Securities and Exchange Commission, or SEC, including, but not limited to, its annual report on Form 10-K and quarterly reports on Form 10-Q.
Copies of reports filed with the SEC are posted on the company's website and are available from NVIDIA without charge. These forward-looking statements are not guarantees of future performance and speak only as of the date hereof, and, except as required by law, NVIDIA disclaims any obligation to update these forward-looking statements to reflect future events or circumstances. Many of the products and features described herein remain in various stages and will be offered on a when-and-if-available basis. The statements above are not intended to be, and should not be interpreted as a commitment, promise, or legal obligation, and the development, release, and timing of any features or functionalities described for our products is subject to change and remains at the sole discretion of NVIDIA. NVIDIA will have no liability for failure to deliver or delay in the delivery of any of the products, features or functions set forth herein.

[7]

Nvidia software aims to create marketplace for AI computing power

SAN FRANCISCO/TAIPEI (Reuters) -Nvidia announced a new software platform on Monday that will create a marketplace for cloud-based artificial intelligence chips. Nvidia's graphics processors, or GPUs, dominate the market for training AI models and a raft of new cloud players called "neoclouds" such as CoreWeave and Nebius Group have emerged to specialize in renting out Nvidia's chips to software developers. The Santa Clara, California-based company announced the new tool called Lepton that lets cloud computing companies sell GPU capacity in one spot. In addition to CoreWeave and Nebius, other firms joining the Lepton platform are Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nscale, SoftBank Corp and Yotta Data Services. Nvidia cloud vice president Alexis Bjorlin said that despite demand surging for chips at startups and large companies, the process of finding available chips has been "very manual". "It's almost like everyone's calling everyone for what compute capacity is available," Bjorlin told Reuters in an interview. "We're just trying to make it seamless, because it enables the ecosystem to grow and develop, and it enables all of the clouds - the global clouds and the new cloud providers - access to Nvidia's entire developer ecosystem." Absent so far from Nvidia's Lepton partner list are major cloud providers such as Microsoft, Amazon Web Services or Alphabet's Google. Bjorlin said the system is designed for them to be able to sell their capacity on the marketplace if they choose. Lepton will eventually allow developers to search for Nvidia chips located in specific countries to meet data storage requirements, Bjorlin said. It will also allow companies that already own some Nvidia chips to more seamlessly search for more to rent. "It's a good move for them," said Mario Morales, group vice president at research firm IDC. "Nvidia is close to about 5 million developers. 
So they want to figure out a way where they can give them (developers) more access to the technology." Nvidia did not disclose what the business model will be for the new software platform or say whether it would charge commissions or fees to either developers or clouds. However, Bjorlin said developers will "still retain their own relationship to the underlying compute providers, so they've contracted directly with them." (Reporting by Stephen Nellis in San Francisco and Max A. Cherney in Taipei, additional reporting by Wen-Yee Lee in Taipei; Editing by Sam Holmes and Kate Mayberry)

Nvidia introduces DGX Cloud Lepton, a new software platform creating a marketplace for cloud-based AI chips, connecting developers with a global network of GPU providers to address the surging demand for AI computing power.

Nvidia Introduces DGX Cloud Lepton

Nvidia has announced DGX Cloud Lepton, a software platform with a compute marketplace that connects AI developers with a global network of GPU cloud providers. It is meant to address the challenge of securing reliable, high-performance GPU resources for AI applications [1][2].

Meeting the Surging Demand for AI Computing Power

As demand for AI chips surges among startups and large companies, finding available chips has remained a largely manual process. Nvidia's cloud vice president, Alexis Bjorlin, described the current situation: "It's almost like everyone's calling everyone for what compute capacity is available" [3]. DGX Cloud Lepton aims to streamline this process so that developers can find the computing power they need.

Key Features and Benefits

DGX Cloud Lepton offers several advantages to both developers and cloud providers:

- Unified access to cloud AI services and GPU capacity across the NVIDIA compute ecosystem

- Integration with NVIDIA's software stack, including NIM and NeMo microservices, Blueprints, and Cloud Functions

- Real-time GPU health diagnostics and automated root-cause analysis for cloud providers

- Support for both on-demand and long-term computing needs

- Region-specific GPU access to meet data sovereignty requirements

[2][4]

Expanding the GPU Provider Network

While major cloud providers like Microsoft, Amazon Web Services, and Google are notably absent from the initial partner list, the system is designed to accommodate their participation in the future. The current roster of NVIDIA Cloud Partners (NCPs) includes CoreWeave, Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nebius, Nscale, SoftBank Corp, and Yotta Data Services [1][3].

NVIDIA Exemplar Clouds

Alongside DGX Cloud Lepton, Nvidia announced NVIDIA Exemplar Clouds, an initiative to help NCPs enhance security, usability, performance, and resiliency. This program leverages NVIDIA's expertise, reference hardware and software, and operational tools, including DGX Cloud Benchmarking for optimizing workload performance [2][4].

Strategic Shift for Nvidia

The launch of DGX Cloud Lepton represents a strategic move for Nvidia, as it begins to build direct relationships with developers. This approach could strengthen Nvidia's role in the rapidly growing AI infrastructure ecosystem, moving beyond its traditional behind-the-scenes partnerships with cloud providers [5].

Future Implications

Jensen Huang, founder and CEO of NVIDIA, envisions the platform as a step towards building a "planetary-scale AI factory" [2]. As the AI industry evolves, DGX Cloud Lepton could change how developers find and use GPU resources for AI development and deployment.

Summarized by Navi
