Nvidia Vera Rubin architecture slashes AI costs by 10x with advanced networking at its core

32 Sources

32 Sources

[1]

Nvidia's Vera Rubin Architecture Thrives on Networking

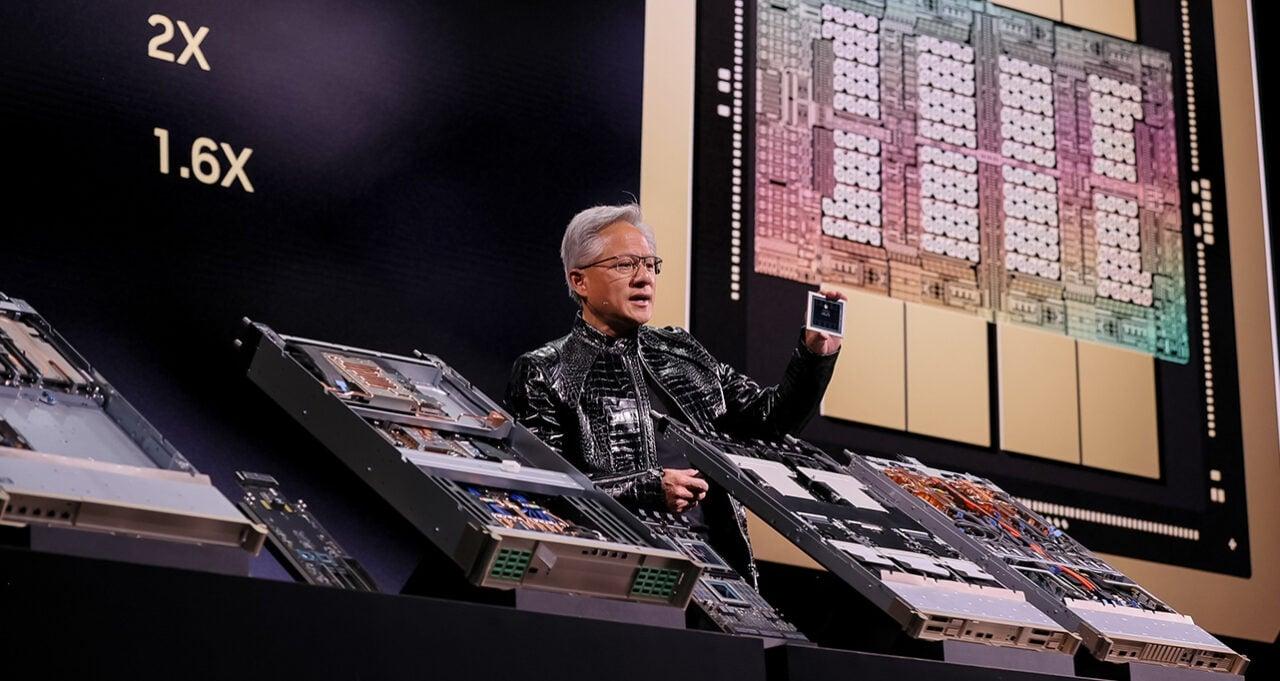

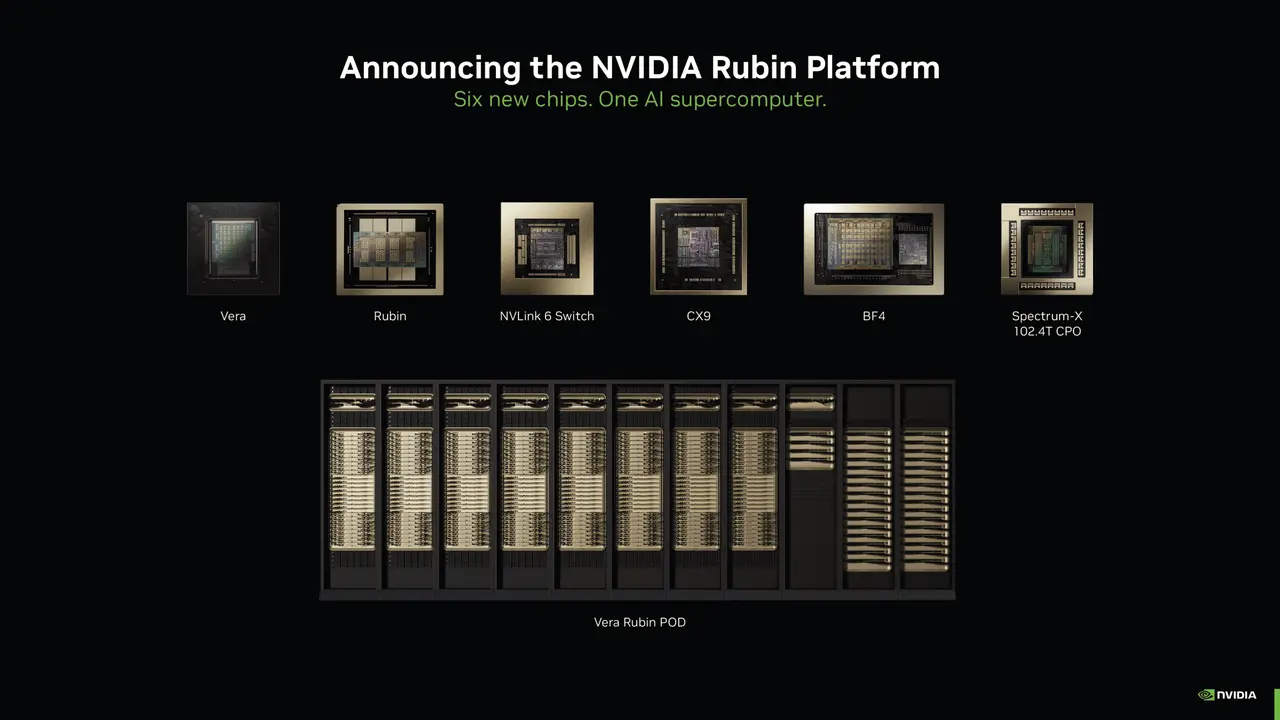

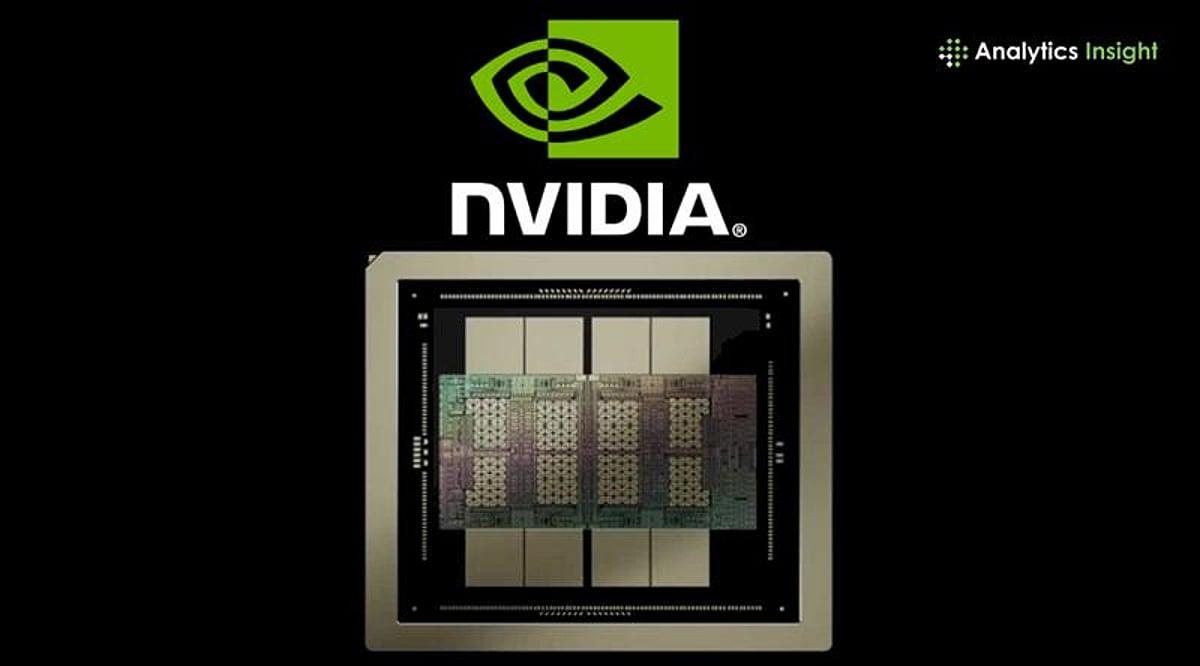

Earlier this week, Nvidia surprise-announced their new Vera Rubin architecture (no relation to the recently unveiled telescope) at the Consumer Electronics Show in Las Vegas. The new platform, set to reach customers later this year, is advertised to offer a ten-fold reduction in inference costs and a four-fold reduction in how many GPUs it would take to train certain models, as compared to Nvidia's Blackwell architecture. The usual suspect for improved performance is the GPU. Indeed, the new Rubin GPU boasts 50 quadrillion floating-point operations per second (petaFLOPS) of 4-bit computation, as compared to 10 petaflops on Blackwell, at least for transformer-based inference workloads like large language models. However, focusing on just the GPU misses the bigger picture. There are a total of six new chips in the Vera-Rubin-based computers: the Vera CPU, the Rubin GPU, and four distinct networking chips. To achieve performance advantages, the components have to work in concert, says Gilad Shainer, senior vice president of networking at Nvidia. "The same unit connected in a different way will deliver a completely different level of performance," Shainer says. "That's why we call it extreme co-design." AI workloads, both training and inference, run on large numbers of GPUs simultaneously. "Two years back, inferencing was mainly run on a single GPU, a single box, a single server," Shainer says. "Right now, inferencing is becoming distributed, and it's not just in a rack. It's going to go across racks." To accommodate these hugely distributed tasks, as many GPUs as possible need to effectively work as one. This is the aim of the so-called scale-up network: the connection of GPUs within a single rack. Nvidia handles this connection with their NVLink networking chip. The new line includes the NVLink6 switch, with double the bandwidth of the previous version (3,600 gigabytes per second for GPU-to-GPU connections, as compared to 1,800 GB/s for NVLink5 switch). In addition to the bandwidth doubling, the scale-up chips also include double the number of SerDes -- serializer/deserializers (which allow data to be sent across fewer wires) and an expanded number of calculations that can be done within the network. "The scale-up network is not really the network itself," Shainer says. "It's computing infrastructure, and some of the computing operations are done on the network...on the switch." The rationale for offloading some operations from the GPUs to the network is two-fold. First, it allows some tasks to only be done once, rather than having every GPU having to perform them. A common example of this is the all-reduce operation in AI training. During training, each GPU computes a mathematical operation called a gradient on its own batch of data. In order to train the model correctly , all the GPUs need to know the average gradient computed across all batches. Rather than each GPU sending its gradient to every other GPU, and every one of them computing the average, it saves computational time and power for that operation to only happen once, within the network. A second rationale is to hide the time it takes to shuttle data in-between GPUs by doing computations on them en-route. Shainer explains this via an analogy of a pizza parlor trying to speed up the time it takes to deliver an order. "What can you do if you had more ovens or more workers? It doesn't help you; you can make more pizzas, but the time for a single pizza is going to stay the same. Alternatively, if you would take the oven and put it in a car, so I'm going to bake the pizza while traveling to you, this is where I save time. This is what we do." In-network computing is not new to this iteration of Nvidia's architecture. In fact, it has been in common use since around 2016. But, this iteration adds a broader swath of computations that can be done within the network to accommodate different workloads and different numerical formats, Shainer says. The rest of the networking chips included in the Rubin architecture comprise the so-called scale-out network. This is the part that connects different racks to each other within the data center. Those chips are the ConnectX-9, a networking interface card; the BlueField-4 a so-called data processing unit, which is paired with two Vera CPUs and a ConnectX-9 card for offloading networking, storage, and security tasks; and finally the Spectrum-6 Ethernet switch, which uses co-packaged optics to send data between racks. The Ethernet switch also doubles the bandwidth of the previous generations, while minimizing jitter -- the variation in arrival times of information packets. "Scale-out infrastructure needs to make sure that those GPUs can communicate well in order to run a distributed computing workload and that means I need a network that has no jitter in it," he says. The presence of jitter implies that if different racks are doing different parts of the calculation, the answer from each will arrive at different times. One rack will always be slower than the rest, and the rest of the racks, full of costly equipment, sit idle while waiting for that last packet. "Jitter means losing money," Shainer says. None of Nvidia's host of new chips are specifically dedicated to connect between data centers, termed '"scale-across." But Shainer argues this is the next frontier. "It doesn't stop here, because we are seeing the demands to increase the number of GPUs in a data center," he says. "100,000 GPUs is not enough anymore for some workloads, and now we need to connect multiple data centers together."

[2]

Nvidia launches powerful new Rubin chip architecture | TechCrunch

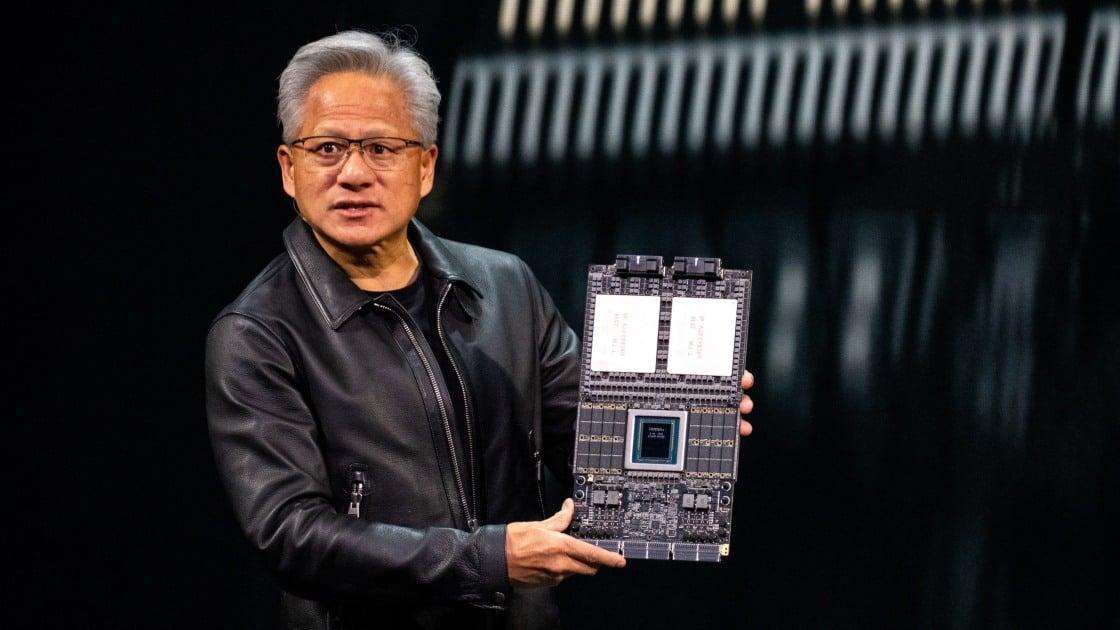

Today at the Consumer Electronics show, Nvidia CEO Jensen Huang officially launched the company's new Rubin computing architecture, which he described as the state of the art in AI hardware. The new architecture is currently in production and is expected to ramp up further in the second half of the year. "Vera Rubin is designed to address this fundamental challenge that we have: The amount of computation necessary for AI is skyrocketing." Huang told the audience. "Today, I can tell you that Vera Rubin is in full production." The Rubin architecture, which was first announced in 2024, is the latest result of Nvidia's relentless hardware development cycle, which has transformed Nvidia into the most valuable corporation in the world. The Rubin architecture will replace the Blackwell architecture, which in turn, replaced the Hopper and Lovelace architectures. Rubin chips are already slated for use by nearly every major cloud provider, including high-profile Nvidia partnerships with Anthropic, OpenAI, and Amazon Web Services. Rubin systems will also be used in HPE's Blue Lion supercomputer and the upcoming Doudna supercomputer at Lawrence Berkeley National Lab. Named for the astronomer Vera Florence Cooper Rubin, the Rubin architecture consists of six separate chips designed to be used in concert. The Rubin GPU stands at the center, but the architecture also addresses growing bottlenecks in storage and interconnection with new improvements in the Bluefield and NVLink systems respectively. The architecture also includes a new Vera CPU, designed for agentic reasoning. Explaining the benefits of the new storage, Nvidia's senior director of AI infrastructure solutions Dion Harris pointed to the growing cache-related memory demands of modern AI systems. "As you start to enable new types of workflows, like agentic AI or long-term tasks, that puts a lot of stress and requirements on your KV cache," Harris told reporters on a call, referring to a memory system used by AI models to condense inputs. "So we've introduced a new tier of storage that connects externally to the compute device, which allows you to scale your storage pool much more efficiently." As expected, the new architecture also represents a significant advance in speed and power efficiency. According to Nvidia's tests, the Rubin architecture will operate three and a half times faster than the previous Blackwell architecture on model-training tasks and five times faster on inference tasks, reaching as high as 50 petaflops. The new platform will also support eight times more inference compute per watt. Rubin's new capabilities come amid intense competition to build AI infrastructure, which has seen both AI labs and cloud providers scramble for Nvidia chips as well as the facilities necessary to power them. On an earnings call in October 2025, Huang estimated that between $3 trillion and $4 trillion will be spent on AI infrastructure over the next five years.

[3]

Jensen Huang Says Nvidia's New Vera Rubin Chips Are in 'Full Production'

Nvidia CEO Jensen Huang says that the company's next-generation AI superchip platform, Vera Rubin, is on schedule to begin arriving to customers later this year. "Today, I can tell you that Vera Rubin is in full production," Huang said during a press event on Monday at the annual CES technology trade show in Las Vegas. Rubin will cut the cost of running AI models to about one-tenth of Nvidia's current leading chip system, Blackwell, the company told analysts and journalists during a call on Sunday. Nvidia also said Rubin can train certain large models using roughly one-fourth as many chips as Blackwell requires. Taken together, those gains could make advanced AI systems significantly cheaper to operate and make it harder for Nvidia's customers to justify moving away from its hardware. Nvidia said on the call that two of its existing partners, Microsoft and CoreWeave, will be among the first companies to begin offering services powered by Rubin chips later this year. Two major AI data centers that Microsoft is currently building in Georgia and Wisconsin will eventually include thousands of Rubin chips, Nvidia added. Some of Nvidia's partners have already started running their next-generation AI models on early Rubin systems, the company said. The semiconductor giant also said it's working with Red Hat, which makes open source enterprise software for banks, automakers, airlines, and government agencies, to offer more products that will run on the new Rubin chip system. Nvidia's latest chip platform is named after Vera Rubin, an American astronomer who reshaped how scientists understand the properties of galaxies. The system includes six different chips, including the Rubin GPU and an Vera CPU, both of which are built using Taiwan Semiconductor Manufacturing Company's 3 nanometer fabrication process and the most advanced bandwidth memory technology currently available. Nvidia's sixth-generation interconnect and switching technologies link the various chips together. Each part of this chip system is "completely revolutionary and the best of its kind," Huang proclaimed during the company's CES press conference. Nvidia has been developing the Rubin system for years, and Huang first announced the chips were coming during a keynote speech in 2024. Last year, the company said that systems built on Rubin would begin arriving in the second half of 2026. It's unclear exactly what Nvidia means by saying that Vera Rubin is in "full production." Typically, production for chips this advanced -- which Nvidia is building with its longtime partner TSMC -- starts at low volume while the chips go through testing and validation and ramps up at a later stage.

[4]

Nvidia launches Vera Rubin AI computing platform at CES 2026

Nvidia claims the Rubin GPU is capable of delivering five times as much AI training compute as Blackwell. The Vera Rubin architecture as whole can train a large "mixture of experts" (MOE) AI model in the same amount of time as Blackwell while using a quarter of the GPUs and at one-seventh the token cost. The Rubin launch was originally expected for late this year. Its early arrival today comes just a couple of months after Nvidia reported record high data center revenue, up 66 percent over the prior year. That growth was driven by demand for Blackwell and Blackwell Ultra GPUs, which have set a high bar for Rubin's success and served as a bellwether for the "AI bubble". Products and services running on Rubin will be available from Nvidia's partners starting in the second half of 2026.

[5]

Why Nvidia's new Rubin platform could change the future of AI computing forever

The first platforms will roll out to partners later in the year. The last several years have been stupendous for Nvidia. When generative AI became all the rage, demand for the tech giant's hardware skyrocketed as companies and developers scrambled for its graphics cards to train their large language models (LLMs). During CES 2026, Nvidia held a press conference to unveil its latest innovation in the AI space: the Rubin platform. Also: CES 2026 live updates: Biggest TV, smart glasses, phone news, and more we've seen so far Nvidia announced what the technology can do, and it's all pretty dense, so to keep things concise, I'm only focusing on the highlights. Rubin is an AI supercomputing platform designed to make "building, deploying, and securing the world's largest and most advanced AI systems at the lowest cost" possible. According to Nvidia, the platform can deliver up to a 10x reduction in inference token costs and requires four times fewer graphics cards to train mixture-of-experts (MoE) models compared to the older Blackwell platform. The easiest way to think about Nvidia Rubin is to imagine Blackwell, but on a much grander scale. The goal with Rubin is to accelerate mainstream adoption of advanced AI models, particularly in the consumer space. One of the biggest hurdles holding back widespread adoption of LLMs is cost. As models grow larger and more complex, the hardware and infrastructure required to train and support the models become astronomically expensive. By sharply reducing those token costs via Rubin, Nvidia hopes to make large-scale AI deployment more practical. Also: Nvidia's physical AI models clear the way for next-gen robots - here's what's new Nvidia said that it used an "extreme codesign" approach when developing the Rubin platform, creating a single AI supercomputer made up of six integrated chips. At the center is an Nvidia Vera CPU, an energy-efficient processor for large-scale AI factories, built with 88 custom Olympus cores, full Armv9.2 compatibility, and fast NVLink-C2C connectivity to deliver high performance. Working alongside the CPU is the Nvidia Rubin GPU, serving as the platform's primary workhorse. Sporting a third-generation Transform Engine, it is capable of delivering up to 50 petaflops of NVFP4 computational power. Connecting everything together is the Nvidia NVLink 6 Switch, enabling ultra-fast GPU-to-GPU communication. Nvidia's ConnectX-9 SuperNIC handles high-speed networking, while the Bluefield-4 DPU offloads some of the workload from the CPU and GPU so they focus more on AI models. Rounding everything out is the company's Spectrum-6 Ethernet switch to provide next-gen networking for AI data centers. Also: The most exciting AI wearable at CES 2026 might not be smart glasses after all The Rubin will be available in multiple configurations, such as the Nvidia Vera Rubin NVL72. This combines 36 Nvidia Vera CPUs, 72 Nvidia Rubin GPUs, an Nvidia NVLink 6 switch, multiple Nvidia ConnectX-9 SuperNICs, and Nvidia BlueField-4 DPUs. Judging from all the news, I don't think these supercomputing platforms will be something that the average person can buy from Best Buy. Nvidia said that the first of these Rubin platforms will roll out to partners sometime in the second half of 2026. Among the first will be Amazon Web Services, Google Cloud, and Microsoft. If Nvidia's gamble pays off, these computers could usher in a new era of AI computing where scale is much more manageable.

[6]

Nvidia's focus on rack-scale AI systems is a portent for the year to come -- Rubin points the way forward for company, as data center business booms

Those who tuned into Nvidia's CES keynote on January 5 may have found themselves waiting for a familiar moment that never arrived. There was no GeForce reveal and no tease of the next RTX generation. For the first time in roughly five years, Nvidia stood on the CES stage without a new GPU announcement to anchor the show. That absence was no accident. Rather than refresh its graphics lineup, Nvidia used CES 2026 to talk about the Vera Rubin platform and launch its flagship NVL72 AI supercomputer, both slated for production in the second half of 2026 -- a reframing of what Nvidia now considers its core product. The company is no longer content to sell accelerators one card at a time; it is selling entire AI systems instead. Vera Rubin is not being positioned as a conventional GPU generation, even though it includes a new GPU architecture. Nvidia describes it as a rack-scale computing platform built from multiple classes of silicon that are designed, validated, and deployed together. At its center are Rubin GPUs and Vera CPUs, joined by NVLink 6 interconnects, BlueField 4 DPUs, and Spectrum 6 Ethernet switches. Each rack integrates 72 Rubin GPUs and 36 Vera CPUs into a single logical system. Nvidia says each Rubin GPU can deliver up to 50 PFLOPS of NVFP4 compute for AI inference using low-precision formats, roughly five times the throughput of its Blackwell predecessor in similar inference workloads. Memory capacity and bandwidth scale accordingly, with HBM4 pushing hundreds of gigabytes per GPU and aggregate rack bandwidth measured in the hundreds of terabytes per second. These monolithic Vera Rubin systems are designed to reduce the cost of inference by an order of magnitude compared with Blackwell-based deployments. That claim rests on several pillars: higher utilization through tighter coupling, reduced communication overhead via NVLink 6, and architectural changes that target the realities of large language models rather than traditional HPC workloads. One of those changes is how Nvidia handles model context. BlueField 4 DPUs introduce a shared memory tier for long-context inference, storing key-value data outside the GPU frame buffer and making it accessible across the rack. As models push toward million-token context windows, memory access and synchronization increasingly dominate runtime. Nvidia is seems to be taking the view that treating context as a first-class system resource, rather than a per-GPU issue, will unlock more consistent scaling. This emphasis on pre-integrated systems reflects how Nvidia's largest customers now buy hardware. Hyperscalers and AI labs deploy accelerators in standardized blocks, often measured in racks or data halls rather than individual cards. By delivering those blocks as finished products, Nvidia shortens deployment timelines and reduces the tuning work customers must do themselves. CES became the venue to outline that vision, even if it meant leaving traditional GPU announcements off the agenda. Suddenly, the lack of a new GeForce announcement becomes a whole lot easier to explain. Nvidia's current consumer line-up of 50-series GPUs is still pretty fresh, and it continues to command prices in excess of $3,500 per unit. Introducing an interim refresh would carry higher costs at a time when memory pricing is at all-time highs, and supply remains tight. The company has also leaned more heavily on software updates, particularly DLSS and other AI-assisted rendering techniques, to extend the useful life of existing GPUs. From a purely commercial perspective, consumer GPUs now represent a smaller (and, unfortunately, shrinking) share of Nvidia's revenue and focus than they did even two years ago, let alone five. Data center products tied to AI training and inference account for the majority of growth, and those customers need system-level gains, not incremental improvements in graphics performance. Lisa Su, during her AMD keynote, said it best: "There's never been a technology like AI." CES, once a showcase for new PC hardware, has become a stage for AI announcements. This does not mean Nvidia -- or AMD for that matter -- is abandoning gaming or professional graphics. Rather, it suggests a lengthening cadence between major GPU architectures. When the next GeForce generation arrives, it is likely to incorporate lessons from Rubin, particularly around memory hierarchy and interconnect efficiency, rather than simply increasing shader counts. Nvidia's system-centric approach inevitably invites comparison with rivals pursuing similar strategies. AMD is pairing its Instinct accelerators with EPYC CPUs in tightly coupled server designs, while Intel is attempting to unify CPUs, GPUs, and accelerators under a common programming model. Apple has taken vertical integration even further in consumer devices, designing CPUs, GPUs, and neural engines as a single system on a chip. What distinguishes Nvidia is the depth of its software stack. CUDA, TensorRT, and the company's AI frameworks remain deeply entrenched in research and production environments. By extending that stack everywhere it can, Nvidia increases the switching cost for customers who might otherwise consider alternative silicon. There are risks to this approach, and large customers are increasingly exploring in-house accelerators to reduce dependence on a single vendor, and complex rack-scale systems raise the stakes for manufacturing or design issues. Because of this, Nvidia's ability to deliver Rubin on schedule will matter just as much as the performance metrics presented at CES. Still, the decision to use CES 2026 to spotlight Vera Rubin rather than a new GPU points to where Nvidia sees its future. Let's face it: We, and Nvidia, all know that the next phase of computing will be defined less by individual chips and more by how effectively those chips are integrated into scalable systems. Nvidia is therefore aligning itself with where the demand and investment are, even if that means placing less emphasis on the hardware that defined the company for decades.

[7]

Nvidia CEO Says New Rubin Chips Are on Track, Helping Speed AI

The Rubin processor is 3.5 times better at training and five times better at running AI software than its predecessor, Blackwell, and customers including Microsoft will be among the first to deploy the new hardware in the second half of the year. Nvidia Corp. Chief Executive Officer Jensen Huang said that the company's highly anticipated Rubin data center processors are in production and customers will soon be able to try out the technology. All six of the chips for a new generation of computing equipment -- named after astronomer Vera Rubin -- are back from manufacturing partners and on track for deployment by customers in the second half of the year, Huang said at the CES trade show in Las Vegas Monday. "Demand is really high," he said. The growing complexity and uptake of artificial intelligence software is placing a strain on existing computer resources, creating the need for much more, Huang said. Nvidia, based in Santa Clara, California, is seeking to maintain its edge as the leading maker of artificial intelligence accelerators, the chips used by data center operators to develop and run AI models. Some on Wall Street have expressed concern that competition is mounting for Nvidia -- and that AI spending can't continue at its current pace. Data center operators also are developing their own AI accelerators. But Nvidia has maintained bullish long-term forecasts that point to a total market in the trillions of dollars. Rubin is Nvidia's latest accelerator and is 3.5 times better at training and five times better at running AI software than its predecessor, Blackwell, the company said. A new central processing unit has 88 cores -- the key data-crunching elements -- and provides twice the performance of the component that it's replacing. The company is giving details of its new products earlier in the year than it typically does -- part of a push to keep the industry hooked on its hardware, which has underpinned an explosion in AI use. Nvidia usually dives into product details at its spring GTC event in San Jose, California. Even while talking up new offerings, Nvidia said previous generations of products are still performing well. The company also has seen strong demand from customers in China for the H200 chip that the Trump administration has said it will consider letting the chipmaker ship to that country. License applications have been submitted, and the US government is deciding what it wants to do with them, Chief Financial Officer Colette Kress told analysts. Regardless of the level of license approval, Kress said, Nvidia has enough supply to serve customers in the Asian nation without affecting the company's ability to ship to customers elsewhere in the world. For Huang, CES is yet another stop on his marathon run of appearances at events, where he's announced products, tie-ups and investments all aimed at adding momentum to the deployment of AI systems. His counterpart at Nvidia's closest rival, Advanced Micro Devices Inc.'s Lisa Su, was slated to give a keynote presentation at the show later Monday. Get the Tech Newsletter bundle. Get the Tech Newsletter bundle. Get the Tech Newsletter bundle. Bloomberg's subscriber-only tech newsletters, and full access to all the articles they feature. Bloomberg's subscriber-only tech newsletters, and full access to all the articles they feature. Bloomberg's subscriber-only tech newsletters, and full access to all the articles they feature. Bloomberg may send me offers and promotions. Plus Signed UpPlus Sign UpPlus Sign Up By submitting my information, I agree to the Privacy Policy and Terms of Service. The new hardware, which also includes networking and connectivity components, will be part of its DGX SuperPod supercomputer while also being available as individual products for customers to use in a more modular way. The step-up in performance is needed because AI has shifted to more specialized networks of models that not only sift through massive amounts of inputs but need to solve particular problems through multistage processes. The company emphasized that Rubin-based systems will be cheaper to run than Blackwell versions because they'll return the same results using smaller numbers of components. Microsoft Corp. and other large providers of remote computing will be among the first to deploy the new hardware in the second half of the year, Nvidia said. For now, the majority of spending on Nvidia-based computers is coming from the capital expenditure budgets of a handful of customers, including Microsoft, Alphabet Inc.'s Google Cloud and Amazon.com Inc.'s AWS. Nvidia is pushing software and hardware aimed at broadening the adoption of AI across the economy, including robotics, health care and heavy industry. As part of that effort, Nvidia announced a group of tools designed to accelerate development of autonomous vehicles and robots.

[8]

Nvidia unpacks Vera Rubin rack system at CES

CES used to be all about consumer electronics, TVs, smartphones, tablets, PCs, and - over the last few years - automobiles. Now, it's just another opportunity for Nvidia to peddle its AI hardware and software -- in particular its next-gen Vera Rubin architecture. The AI arms dealer boasts that, compared to Blackwell, the chips will deliver up to 5x higher floating point performance for inference, 3.5x for training, along with 2.8x more memory bandwidth and an NvLink interconnect that's now twice as fast. But don't get too excited just yet. It's not like the chips are launching earlier than previously expected. They're still expected to arrive in the second half of the year, just like Blackwell and Blackwell Ultra did. Nvidia normally holds off until GTC in March to reveal its next-gen chips. Perhaps AMD's aggressive rack scale roadmap has Nvidia's CEO Jensen Huang nervous. Announced at Advancing AI late last spring and expected later this year, AMD's double-wide Helios racks promise to deliver performance on par with Vera Rubin NVL72 while offering customers 50 percent more HBM4. Nvidia has also been teasing the Vera Rubin platform for nearly a year now, to the point where there's not much we didn't already know about the platform. But even though you won't be able to get your hands on Rubin for a few more months, it's never too early for a closer look at what the multi-million dollar machines will buy you. The flagship system for Nvidia's Vera Rubin CPU and GPU architectures is once again its NVL72 rack systems. At first blush, the machine doesn't look all that different from its Blackwell and Blackwell Ultra-based siblings. But under the hood, Nvidia has been hard at work refining the architecture for better serviceability and telemetry. Switch trays can now be serviced without taking down the machine first. Nvidia also has new reliability, availability, and serviceability features which enable customers to check in on the health of the GPUs without dropping them from the cluster first. These health checks can now run between training checkpoints or jobs, Ian Buck, Nvidia's VP and General Manager of Hyperscale and HPC, tells El Reg. At the heart of the rack is the Vera Rubin superchip, which, if history tells us anything, should bear the VR200 code name. Much like Blackwell, the Vera Rubin superchip features two dual-die Rubin GPUs, each capable of churning out 50 petaFLOPS of inference performance or 35 petaFLOPS for training. Both of those numbers refer to peak performance achievable when using NVLFP4 data type. According to Buck, for this generation, Nvidia is using a new adaptive compression technique that's better suited to generative AI and mixture of experts (MoE) model inference to achieve the 50 petaFLOP claim rather than structured sparsity. As you may recall, while structured sparsity did have benefits for certain workloads, it didn't offer many if any advantages for LLM inference. We've asked Nvidia about higher precision data types, like FP8 and BF16 which remain relevant for vision language model inference, image generation, fine tuning, and training workloads; we'll let you know if we hear back. The GPUs are fed by 288 GB of HBM4 memory -- 576 GB per superchip -- which, despite delivering the same capacity as the Blackwell Ultra-based GB300, is now 2.8x faster at 22 TB/s per socket (44 TB/s per superchip). If that number seems a little high, that's because Nvidia initially targeted 13 TB/s of HBM4 bandwidth when it first teased Rubin last year. Buck tells us that the jump to 22 TB/s was attained entirely through silicon and doesn't rely on techniques like memory compression. The two Rubin GPUs are paired to Nvidia's new Vera CPU via a 1.8 TB/s NvLink-C2C interconnect. The CPU contains 88 of Nvidia's custom Arm-based Olympus cores and is paired with 1.5 TB of LPDDR5x memory -- 3x that of the GB200. We guess we know why memory is in such short supply these days. Actually it's more complicated than that, but this certainly isn't helping the situation. However, one of the most important features Vera brings to the table is support for confidential computing across the system's NvLink domain, something that previously was only available on x86-based HGX systems. Nvidia's Vera Rubin NVL72 racks feature 72 Rubin GPUs, 20.7 TB of HBM4, 36 Vera CPUs, 54 TB of LPDDR5x which are spread across 18 compute blades interconnected by nine NvSwitch 6 blades which deliver 3.6 TB/s of bandwidth to each GPU -- twice that of last gen. Nvidia isn't ready to say how much power that additional compute and bandwidth will require. However, Buck tells us that while it will be higher, we shouldn't expect power to double. If you're scratching your head wondering "didn't Nvidia say this thing was supposed to have 144 GPUs?" you wouldn't be the only one. At GTC 2025, Huang announced that they were changing the way they counted GPUs from the package to the dies on board. In that sense, the Blackwell-based NVL72s also had 144 GPUs, but Nvidia was going to wait for Vera Rubin to make the switch to the new convention. It seems Nvidia has since changed its mind and is sticking with the established naming convention. Having said that, we may yet see Nvidia racks with at least 144 GPUs on board before long. The Rubin CPUs we've talked about up to this point actually are one of two accelerators announced so far. Rubin CPX is the other. Unveiled in September, the chip is a more niche product, designed specifically to accelerate the compute-intense prefill phase of LLM inference. Since prefill isn't bandwidth-bound, CPX doesn't need HBM and can instead make do with slower DRAM. Each CPX accelerator will be capable of churning out 30 petaFLOPS of NVFP4 compute and will sport 128 GB of GDDR7 memory. In a graphic shared this summer, Nvidia showed an NVL144 CPX blade with four 288 GB Rubin SXM modules and eight Rubin CPX prefill accelerators for a total of 12 GPUs per node. The complete rack system would only need 12 compute blades for the thing to have 144 GPUs, though only 48 of them would be connected via NVLink. As with past Nvidia rack systems, eight NVL72 racks form a SuperPOD with the GPU slinger's Spectrum-X Ethernet and/or Quantum-X InfiniBand the glue used to stitch them together. Multiple SuperPODS can then be combined to form larger compute environments for training or distributed inference. If you aren't ready to make the switch to Nvidia's rack-scale kit, don't worry. Eight-way (NVL8) HGX systems based around the Rubin platform are still available, but we're told liquid cooling is no longer a suggestion, but a requirement. These smaller systems, 64 to be exact, can also be combined to form a SuperPOD with 512 GPUs -- just shy of the of the more powerful NVL72 SuperPOD at 576. For this generation, Nvidia also has two new NICs, which it teased on a few occasions over the last year. At GTC DC, Nvidia showed off the ConnectX-9, a 1.6 Tbps "superNIC" designed for high-speed distributed computing, which we sometimes call the backend network. For storage, management, and security, Nvidia is pushing its BlueField-4 data processing units (DPUs), which feature an integrated 800 Gbps ConnectX-9 NIC and a 64-core Grace CPU on board. This, we should note, isn't the same Grace CPU found in the GB200, but a newer version based on Arm's Neoverse V3 core architecture. The beefier CPU is designed to offload software defined networking, storage, security, and can also run hypervisors for virtualized environments. Cramming 64 Grace cores onto a NIC might seem like overkill, but Nvidia has a specific reason for wanting that much compute hanging off the machine like a computer in front of a computer. Alongside all its shiny new hardware, Nvidia showed off what it's describing as a "new class of memory between the GPU and storage," designed to offload key value (KV) caches. The basic idea isn't new. KV caches store the model's state. You can think of this like its short-term memory. Calculating the key value vectors is one of the more compute-intensive aspects of the inference. Because inference workloads often involve passing over the same info multiple times, it makes sense to cache the computed vectors in memory. By doing this, only changes need to be computed and data in the cache can be reused. This sounds simple, but, in practice, KV caches can be quite large, easily consuming tens of gigabytes in order to keep track of 100,000 or so tokens. That might sound like a lot, but a single user running a code assistant or agent can blow through that rather quickly. As we understand it, Nvidia's Inference Context Storage platform will work with storage platforms from multiple partner vendors, and will take advantage of the BlueField-4 DPU, NIXL GPU direct storage libraries, and optimize KV cache offloading for maximum performance and efficiency. Combined with technologies like Rubin CPX, this kind of high-performance KV offloading should allow the GPUs to spend more time generating tokens and less time waiting on data to be shuffled about and recomputed. Nvidia's decision to "launch" Rubin -- again it isn't actually shipping in volume yet -- betrays an increasingly competitive compute landscape. As we mentioned earlier, AMD's Helios rack systems promise to deliver floating point performance roughly equivalent to Nvidia's Vera Rubin NVL72 at 2.9 exaFLOPS versus 2.5-3.6 exaFLOPS of FP4, respectively. For applications that can't take advantage of Nvidia's adaptive compression tech, Helios is, at least on paper, faster. However, with Nvidia planning to ship faster memory on Rubin than initially planned, AMD no longer has a bandwidth advantage. It does still have a capacity lead with 432 GB of HBM4 per GPU socket compared to 288 GB on Rubin. In theory, this should allow the AMD-based system to serve 50 percent larger MoE models on a single double-wide rack. In practice, the real-world performance is going to depend heavily on how well tunneling Ultra Accelerator Link (UALink) over Broadcom's Tomahawk 6 Ethernet switches actually works. AMD's MI450-series GPUs appear very well positioned to compete against Rubin, but as we've seen repeated with Amazon and Google, the ability to scale that compute often makes a bigger difference than the chip's individual performance. AMD is also having to play catch up on the software ecosystem front. The company's HIP and ROCm libraries have certainly come a long way since the MI300X made its debut at the end of 2023, but the company still has a ways to go. Nvidia certainly isn't making the situation any easier for AMD. At CES, the GPU giant unveiled a slew of new software frameworks aimed at enterprises, robotics devs, and the automotive industry. This includes the development of new foundation models for domain specific applications like retrieval augmented generation, safety, speech, and autonomous driving. The latter, called Alpamayo, is a relatively small "reasoning vision language action" model designed to help level-4 autonomous vehicles better handle unique and fast evolving road conditions. Level-4 capable vehicles are capable of driving fully autonomously, unsupervised driving in specific environments, like high-ways or urban environments. Nvidia's autonomous driving stack is due to hit US roads late this year with the level-2++ capable Mercedes Benz CLA. This class of autonomous vehicle is capable of driving itself in similar conditions as level-4, but requires the supervision of a human operator. With Nvidia kicking off the New Year with Rubin -- a chip we hadn't expected to get a good look at for another three months -- we're left to wonder what we'll see at GTC, which is slated to run from March 16-19 in San Jose, California. In addition to the regular mix of software libraries and foundation models, we expect to get a lot more details on the Kyber racks that'll underpin the company's Vera Rubin Ultra platform starting in 2027. As you might have noticed, Nvidia, AMD, AWS, and others have gotten in the habit of pre-announcing products well in advance of them shipping or becoming generally available. As the saying goes: enterprises don't buy products, they buy roadmaps. In this case, however, it's really about ensuring they have somewhere to put them. Nvidia's Kyber racks are expected to pull 600 kilowatts of power which means datacenter operators need to start preparing now, if they want to deploy them on day one. We don't yet have a full picture of what Vera Rubin Ultra will offer, but we know it'll feature four reticle-sized Rubin Ultra GPUs, 1TB of HBM4e, and will deliver 100 petaFLOPS of FP4 performance. As things currently stand, Nvidia plans to cram 144 of these GPU packages (576 GPU dies) into a single NvLink domain which is expected to deliver 15 exaFLOPS of FP4 inference performance or 10 exaFLOPS for training. ®

[9]

Nvidia launches Vera Rubin NVL72 AI supercomputer at CES -- promises up to 5x greater inference performance and 10x lower cost per token than Blackwell, coming 2H 2026

AI is everywhere at CES 2026, and Nvidia GPUs are at the center of the expanding AI universe. Today, during his CES keynote, CEO Jensen Huang shared his plans for how the company will remain at the forefront of the AI revolution as the technology reaches far beyond chatbots into robotics, autonomous vehicles, and the broader physical world. First up, Huang officially launched Vera Rubin, Nvidia's next-gen AI data center rack-scale architecture. Rubin is the result of what the company calls "extreme co-design" across six types of chips: the Vera CPU, the Rubin GPU, the NVLink 6 switch, the ConnectX-9 SuperNIC, the BlueField-4 data processing unit, and the Spectrum-6 Ethernet switch. Those building blocks all come together to create the Vera Rubin NVL72 rack. Demand for AI compute is insatiable, and each Rubin GPU promises much more of it for this generation: 50 PFLOPS of inference performance with the NVFP4 data type, 5x that of Blackwell GB200, and 35 PFLOPS of NVFP4 training performance, 3.5x that of Blackwell. To feed those compute resources, each Rubin GPU package has eight stacks of HBM4 memory delivering 288GB of capacity and 22 TB/s of bandwidth. Per-GPU compute is just one building block in the AI data center. As leading large language models have shifted from dense architectures that activate every parameter to produce a given output token to mixture-of-experts (MoE) architectures that only activate a portion of the available parameters per token, it has become possible to scale up those models relatively efficiently. However, communication among those experts within models requires vast amounts of inter-node bandwidth. Vera Rubin introduces NVLink 6 for scale-up networking, which boosts per-GPU fabric bandwidth to 3.6 TB/s (bi-directional). Each NVLink 6 switch boasts 28 TB/s of bandwidth, and each Vera Rubin NVL72 rack has nine of these switches for 260 TB/s of total scale-up bandwidth. The Nvidia Vera CPU implements 88 custom Olympus Arm cores with what Nvidia calls "spatial multi-threading," for up to 176 threads in flight. The NVLink C2C interconnect used to coherently connect the Vera CPU to the Rubin GPUs has doubled in bandwidth, to 1.8 TB/s. Each Vera CPU can address up to 1.5 TB of SOCAMM LPDDR5X memory with up to 1.2 TB/s of memory bandwidth. To scale out Vera Rubin NVL72 racks into DGX SuperPods of eight racks each, Nvidia is introducing a pair of Spectrum-X Ethernet switches with co-packaged optics, all built up from its Spectrum-6 chip. Each Spectrum-6 chip offers 102.4 Tb/s of bandwidth, and Nvidia is offering it in two switches. The SN688 boasts 409.6 Tb/s of bandwidth for 512 ports of 800G Ethernet or 2048 ports of 200G. The SN6810 offers 102.4 Tb/s of bandwidth that can be channeled into 128 ports of 800G or 512 ports of 200G Ethernet. Both of these switches are liquid-cooled, and Nvidia claims they're more power-efficient, more reliable, and offer better uptime, presumably against hardware that lacks silicon photonics. As context windows grow to millions of tokens, Nvidia says that operations on the key-value cache that holds the history of interactions with an AI model become the bottleneck for inference performance. To break through that bottleneck, Nvidia is using its next-gen BlueField 4 DPUs to create what it calls a new tier of memory: the Inference Context Memory Storage Platform. The company says this tier of storage is meant to enable efficient sharing and reuse of key-value cache data across AI infrastructure, resulting in better responsiveness and throughput and predictable, power-efficient scaling of agentic AI architectures. For the first time, Vera Rubin also expands Nvidia's trusted execution environment to the entire rack by securing the chip, fabric, and network level, which Nvidia says is key to ensuring secrecy and security for AI frontier labs' precious state-of-the-art models. All told, each Vera Rubin NVL72 rack offers 3.6 exaFLOPS of NVFP4 inference performance, 2.5 exaFLOPS of NVFP4 training performance, 54 TB of LPDDR5X memory connected to the Vera CPUs, and 20.7 TB of HBM4 offering 1.6 PB/s of bandwidth. To keep those racks productive, Nvidia highlighted several reliability, availability, and serviceability (RAS) improvements at the rack level, such as a cable-free modular tray design that enables much quicker swapping of components versus prior NVL72 racks, improved NVLink resiliency that allows for zero-downtime maintenance, and a second-generation RAS engine that allows for zero-downtime health checks. All of this raw compute and bandwidth is impressive on its face, but the total cost of ownership picture is likely most important to Nvidia's partners as they ponder massive investments in future capacity. With Vera Rubin, Nvidia says it takes only 1/4 the number of GPUs to train MoE models versus Blackwell, and that Rubin can cut the cost per token for MoE inference down by as much as 10x across a broad range of models. If we invert those figures, it suggests that Rubin can also increase training throughput and deliver vastly more tokens in the same rack space. Nvidia says it's gotten all six of the chips it needs to build Vera Rubin NVL72 systems back from the fabs and that it's pleased with the performance of the workloads it's running on them. The company expects that it will ramp into volume production of Vera Rubin NVL72 systems in the second half of 2026, which remains consistent with its past projections regarding Rubin availability.

[10]

Nvidia unveils Vera Rubin early, signaling a faster AI hardware cycle

Serving tech enthusiasts for over 25 years. TechSpot means tech analysis and advice you can trust. Looking ahead: Nvidia kicked off the year with an unusual move: unveiling its next-generation AI computing architecture months ahead of schedule. At CES 2026 in Las Vegas, CEO Jensen Huang used his keynote to introduce the company's Vera Rubin server systems - a clear signal that Nvidia intends to press its advantage as demand for ever-larger AI models accelerates. The Rubin launch, now slated for mid-2026 availability, marks a shift in Nvidia's traditional rollout cadence. The company typically reserves major chip announcements for its spring developer conference, but Huang said the pace of AI development is forcing the entire semiconductor industry to move faster. "The amount of computing necessary for AI is skyrocketing," Huang told the audience. "The race is on for AI. Everyone is trying to get to the next frontier." Nvidia claims the Rubin GPU delivers roughly five times the training compute of Blackwell. Vera Rubin represents Nvidia's largest architectural leap since the Blackwell generation. Rather than a single chip, the platform is built around a tightly integrated system of six components: the Vera CPU, Rubin GPU, a sixth-generation NVLink switch, ConnectX-9 networking, the BlueField-4 data processing unit, and the Spectrum-X 102.4-terabit-per-second co-packaged optical interconnect. Nvidia executives describe the result as "six chips that make one AI supercomputer." Each part is designed to reduce bottlenecks across both AI training and inference. Nvidia claims the Rubin GPU delivers roughly five times the training compute of Blackwell. When applied to large mixture-of-experts models - now a standard approach for frontier-scale systems - the company says Rubin can match Blackwell's training time using one-quarter the number of GPUs and at roughly one-seventh the cost per processed token. Huang framed the architecture as a response to deeper shifts in how AI workloads are evolving, particularly around inference. In his view, inference is no longer a simple pattern-matching task, but a "thinking process," as models increasingly need to reason over long sequences, multiple data types, and real-world context. That idea feeds into Nvidia's broader vision of simulation-driven AI, where virtual environments train systems to operate in the physical world. The Vera Rubin platform is designed to support the massive compute and memory demands of these workloads, especially for robotics, autonomous vehicles, and digital twins. Huang said Nvidia's goal is to deliver "the entire stack," from silicon to networking to software, so developers can focus on building applications rather than stitching infrastructure together. The announcement also underscores how far Nvidia has expanded beyond GPUs alone. With Rubin, the company has fused compute, networking, memory, and security into a single rack-scale platform, aiming to eliminate the bottlenecks that increasingly define AI performance. Huang argued that this level of integration effectively positions Nvidia as both the world's largest networking hardware company and the top chipmaker for AI computing. For inference tasks, Rubin promises a 10-fold cost reduction compared with Blackwell, according to Nvidia. The platform supports third-generation confidential computing and will be the first rack-scale trusted computing system upon full deployment. The early unveiling follows Nvidia's record data center revenue, which rose 66% year-over-year in the last quarter, driven largely by demand for Blackwell and Blackwell Ultra GPUs. That success has set high expectations for Rubin. Analysts view the ahead-of-schedule announcement as a signal that development and manufacturing remain on track, and that Nvidia intends to move quickly as the next wave of AI infrastructure spending ramps up.

[11]

NVIDIA DGX SuperPOD Sets the Stage for Rubin-Based Systems

NVIDIA DGX Rubin systems unify the latest NVIDIA breakthroughs in compute, networking and software to deliver up to 10x reduction in inference token cost compared with the NVIDIA Blackwell platform -- accelerating any AI workload, from inference and training to long-context reasoning. NVIDIA DGX SuperPOD is paving the way for large-scale system deployments built on the NVIDIA Rubin platform -- the next leap forward in AI computing. At the CES trade show in Las Vegas, NVIDIA today introduced the Rubin platform, comprising six new chips designed to deliver one incredible AI supercomputer, and engineered to accelerate agentic AI, mixture‑of‑experts (MoE) models and long‑context reasoning. The Rubin platform unites six chips -- the NVIDIA Vera CPU, Rubin GPU, NVLink 6 Switch, ConnectX-9 SuperNIC, BlueField-4 DPU and Spectrum-6 Ethernet Switch -- through an advanced codesign approach that accelerates training and reduces the cost of inference token generation. DGX SuperPOD remains the foundational design for deploying Rubin‑based systems across enterprise and research environments. The NVIDIA DGX platform addresses the entire technology stack -- from NVIDIA computing to networking to software -- as a single, cohesive system, removing the burden of infrastructure integration and allowing teams to focus on AI innovation and business results. "Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof," said Jensen Huang, founder and CEO of NVIDIA. New Platform for the AI Industrial Revolution The Rubin platform used in the new DGX systems introduces five major technology advancements designed to drive a step‑function increase in intelligence and efficiency: * Sixth‑Generation NVIDIA NVLink -- 3.6TB/s per GPU and 260TB/s per Vera Rubin NVL72 rack for massive MoE and long‑context workloads. * NVIDIA Vera CPU -- 88 NVIDIA custom Olympus cores, full Armv9.2 compatibility and ultrafast NVLink-C2C connectivity for industry-leading efficient AI factory compute. * NVIDIA Rubin GPU -- 50 petaflops of NVFP4 compute for AI inference featuring a third-generation Transformer Engine with hardware‑accelerated compression. * Third‑Generation NVIDIA Confidential Computing -- Vera Rubin NVL72 is the first rack-scale platform delivering NVIDIA Confidential Computing, which maintains data security across CPU, GPU and NVLink domains. * Second‑Generation RAS Engine -- Spanning GPU, CPU and NVLink, the NVIDIA Rubin platform delivers real-time health monitoring, fault tolerance and proactive maintenance, with modular cable-free trays enabling 3x faster servicing. Together, these innovations deliver up to 10x reduction in inference token cost of the previous generation -- a critical milestone as AI models grow in size, context and reasoning depth. DGX SuperPOD: The Blueprint for NVIDIA Rubin Scale‑Out Rubin-based DGX SuperPOD deployments will integrate: * NVIDIA DGX Vera Rubin NVL72 or DGX Rubin NVL8 systems * NVIDIA BlueField‑4 DPUs for secure, software‑defined infrastructure * NVIDIA Inference Context Memory Storage Platform for next-generation inference * NVIDIA ConnectX‑9 SuperNICs * NVIDIA Quantum‑X800 InfiniBand and NVIDIA Spectrum‑X Ethernet * NVIDIA Mission Control for automated AI infrastructure orchestration and operations NVIDIA DGX SuperPOD with DGX Vera Rubin NVL72 unifies eight DGX Vera Rubin NVL72 systems, featuring 576 Rubin GPUs, to deliver 28.8 exaflops of FP4 performance and 600TB of fast memory. Each DGX Vera Rubin NVL72 system -- combining 36 Vera CPUs, 72 Rubin GPUs and 18 BlueField‑4 DPUs -- enables a unified memory and compute space across the rack. With 260TB/s of aggregate NVLink throughput, it eliminates the need for model partitioning and allows the entire rack to operate as a single, coherent AI engine. NVIDIA DGX SuperPOD with DGX Rubin NVL8 systems delivers 64 DGX Rubin NVL8 systems featuring 512 Rubin GPUs. NVIDIA DGX Rubin NVL8 systems bring Rubin performance into a liquid-cooled form factor with x86 CPUs to give organizations an efficient on-ramp to the Rubin era for any AI project in the develop‑to‑deploy pipeline. Powered by eight NVIDIA Rubin GPUs and sixth-generation NVLink, each DGX Rubin NVL8 delivers 5.5x NVFP4 FLOPS compared with NVIDIA Blackwell systems. Next‑Generation Networking for AI Factories The Rubin platform redefines the data center as a high-performance AI factory with revolutionary networking, featuring NVIDIA Spectrum-6 Ethernet switches, NVIDIA Quantum-X800 InfiniBand switches, BlueField-4 DPUs and ConnectX-9 SuperNICs, designed to sustain the world's most massive AI workloads. By integrating these innovations into the NVIDIA DGX SuperPOD, the Rubin platform eliminates the traditional bottlenecks of scale, congestion and reliability. Optimized Connectivity for Massive-Scale Clusters The next-generation 800Gb/s end-to-end networking suite provides two purpose-built paths for AI infrastructure, ensuring peak efficiency whether using InfiniBand or Ethernet: * NVIDIA Quantum-X800 InfiniBand: Delivers the industry's lowest latency and highest performance for dedicated AI clusters. It utilizes Scalable Hierarchical Aggregation and Reduction Protocol (SHARP v4) and adaptive routing to offload collective operations to the network. * NVIDIA Spectrum-X Ethernet: Built on the Spectrum-6 Ethernet switch and ConnectX-9 SuperNIC, this platform brings predictable, high-performance scale-out and scale-across connectivity to AI factories using standard Ethernet protocols, optimized specifically for the "east-west" traffic patterns of AI workloads. Engineering the Gigawatt AI Factory These innovations represent an extreme codesign with the Rubin platform. By mastering congestion control and performance isolation, NVIDIA is paving the way for the next wave of gigawatt AI factories. This holistic approach ensures that as AI models grow in complexity, the networking fabric of the AI factory remains a catalyst for speed rather than a constraint. NVIDIA Software Advances AI Factory Operations and Deployments NVIDIA Mission Control -- AI data center operation and orchestration software for NVIDIA Blackwell-based DGX systems -- will be available for Rubin-based NVIDIA DGX systems to enable enterprises to automate the management and operations of their infrastructure. NVIDIA Mission Control accelerates every aspect of infrastructure operations, from configuring deployments to integrating with facilities to managing clusters and workloads. With intelligent, integrated software, enterprises gain improved control over cooling and power events for NVIDIA Rubin, as well as infrastructure resiliency. NVIDIA Mission Control enables faster response with rapid leak detection, unlocks access to NVIDIA's latest efficiency innovations and maximizes AI factory productivity with autonomous recovery. NVIDIA DGX systems also support the NVIDIA AI Enterprise software platform, including NVIDIA NIM microservices, such as for the NVIDIA Nemotron-3 family of open models, data and libraries. DGX SuperPOD: The Road Ahead for Industrial AI DGX SuperPOD has long served as the blueprint for large‑scale AI infrastructure. The arrival of the Rubin platform will become the launchpad for a new generation of AI factories -- systems designed to reason across thousands of steps and deliver intelligence at dramatically lower cost, helping organizations build the next wave of frontier models, multimodal systems and agentic AI applications. NVIDIA DGX SuperPOD with DGX Vera Rubin NVL72 or DGX Rubin NVL8 systems will be available in the second half of this year. See notice regarding software product information.

[12]

Nvidia New Rubin Platform Shows Memory Is No Longer 'Afterthought' in AI

A boom in AI demand and the accompanying shortage in memory supply is all anyone in the industry is talking about. At CES 2026 in Las Vegas, Nevada, it was also at the heart of Nvidia's latest major product releases. On Monday, the company officially launched the Rubin platform, made up of six chips that combine into one AI supercomputer, which company officials claim is more efficient than the Blackwell models and boasts increases in compute and memory bandwidth. “Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof,†Nvidia CEO Jensen Huang said in a press release. Rubin-based products will be available from Nvidia partners in the second half of 2026, company executives said, naming AWS, Anthropic, Google, Meta, Microsoft, OpenAI, Oracle, and xAI among the companies expected to adopt Rubin. “The efficiency gains in the NVIDIA Rubin platform represent the kind of infrastructure progress that enables longer memory, better reasoning, and more reliable outputs," Anthropic CEO Dario Amodei said in the press release. GPUs have become an expensive and scarce commodity as the rapidly scaling data center projects drain the global memory chip supply. According to a recent report from Tom's Hardware, gigantic data center projects required roughly 40% of the global DRAM chip output. The shortage has gotten to such a point that it is causing price hikes in consumer electronics and is rumored to impact GPU prices as well. According to a report from South Korean news agency Newsis, chipmaker AMD is expected to raise the prices of some of its GPU offerings later this month, and Nvidia will allegedly follow suit in February. Nvidia's focus has been on evading this chip bottleneck. Just last month, the tech giant made its largest purchase ever with Groq, a chipmaker that specializes in inference. Now, with a product that promises high levels of inference and the ability to train complex models with fewer chips at a lower cost, the company might be hoping to ease some of those shortage-driven worries in the industry. Company executives shared that Rubin delivers up to ten times reduction in inference token costs and four times reduction in the number of GPUs used to train models that rely on an AI architecture called mixture of experts (MoE), like DeepSeek. To add on that, the company is also unveiling a new class of AI-native storage infrastructure designed specifically for inference, called Inference Context Memory Storage Platform. Agentic AI, the tech world's hot new thing for the last year or so, has put an increased importance on AI memory. Rather than simply responding to single questions, AI systems are now expected to remember much more information about earlier interactions to autonomously carry out some tasks, which means there is more data to be managed during the inference stage. The new platform aims to solve that by adding a new tier of memory for inference, to store some context data and extend the GPU's memory capacity. "The bottleneck is shifting from compute to context management," Nvidia's senior director of HPC and AI hyperscale infrastructure solutions Dion Harris said. "To scale, storage can no longer be an afterthought." "As inference scales to giga-scale, context becomes a first-class data type, and the new Nvidia inference context memory storage platform is ideally positioned to support it," Harris claimed. Time will tell if efficiency can successfully address some of the bottlenecks brought about by the intense chip demand. But even if the memory problem is resolved, the AI industry will continue to face other bottlenecks in its unprecedented growth, most notably via the immense strain that data centers put on the U.S. power grid.

[13]

NVIDIA unveils Rubin six-chip system for next-gen AI at CES 2026

NVIDIA used the CES 2026 stage today to formally launch its new Rubin computing architecture, positioning it as the company's most advanced AI hardware platform to date. CEO Jensen Huang said Rubin has already entered full production and will scale further in the second half of the year, signaling NVIDIA's confidence in demand. Huang framed Rubin as a direct response to the explosive growth in AI workloads, particularly large-scale training and long-horizon reasoning tasks. He told the audience that AI computation must continue to rise at an unprecedented pace.

[14]

Nvidia stacks GPUs, CPUs, and DPUs in one rack to outcompute Huawei

Aggregate NVLink throughput reaches 260TB/s per DGX rack for efficiency At CES 2026, Nvidia unveiled its next-generation DGX SuperPOD powered by the Rubin platform, a system designed to deliver extreme AI compute in dense, integrated racks. According to the company, the SuperPOD integrates multiple Vera Rubin NVL72 or NVL8 systems into a single coherent AI engine, supporting large scale workloads with minimal infrastructure complexity. With liquid cooled modules, high speed interconnects, and unified memory, the system targets institutions seeking maximum AI throughput and reduced latency. Each DGX Vera Rubin NVL72 system includes 36 Vera CPUs, 72 Rubin GPUs, and 18 BlueField 4 DPUs, delivering a combined FP4 performance of 50 petaflops per system. Aggregate NVLink throughput reaches 260TB/s per rack, allowing the full memory and compute space to operate as a single coherent AI engine. The Rubin GPU incorporates a third generation Transformer Engine and hardware accelerated compression, allowing inference and training workloads to process efficiently at scale. Connectivity is reinforced by Spectrum-6 Ethernet switches, Quantum-X800 InfiniBand, and ConnectX-9 SuperNICs, which support deterministic high speed AI data transfer. Nvidia's SuperPOD design emphasizes end to end networking performance, ensuring minimal congestion in large AI clusters. Quantum-X800 InfiniBand delivers low latency and high throughput, while Spectrum-X Ethernet handles east west AI traffic efficiently. Each DGX rack incorporates 600TB of fast memory, NVMe storage, and integrated AI context memory to support both training and inference pipelines. The Rubin platform also integrates advanced software orchestration through Nvidia Mission Control, streamlining cluster operations, automated recovery, and infrastructure management for large AI factories. A DGX SuperPOD with 576 Rubin GPUs can achieve 28.8 Exaflops FP4, while individual NVL8 systems deliver 5.5x higher FP4 FLOPS than previous Blackwell architectures. By comparison, Huawei's Atlas 950 SuperPod claims 16 Exaflops FP4 per SuperPod, meaning Nvidia reaches higher efficiency per GPU and requires fewer units to achieve extreme compute levels. Rubin based DGX clusters also use fewer nodes and cabinets than Huawei's SuperCluster, which scales into thousands of NPUs and multiple petabytes of memory. This performance density allows Nvidia to compete directly with Huawei's projected compute output while limiting space, power, and interconnect overhead. The Rubin platform unifies AI compute, networking, and software into a single stack. Nvidia AI Enterprise software, NIM microservices, and mission critical orchestration create a cohesive environment for long context reasoning, agentic AI, and multimodal model deployment. While Huawei scales primarily through hardware count, Nvidia emphasizes rack level efficiency and tightly integrated software controls, which may reduce operational costs for industrial scale AI workloads.

[15]

Nvidia's Vera Rubin is months away -- Blackwell is getting faster right now

The big news this week from Nvidia, splashed in headlines across all forms of media, was the company's announcement about its Vera Rubin GPU. This week, Nvidia CEO Jensen Huang used his CES keynote to highlight performance metrics for the new chip. According to Huang, the Rubin GPU is capable of 50 PFLOPs of NVFP4 inference and 35 PFLOPs of NVFP4 training performance, representing 5x and 3.5x the performance of Blackwell. But it won't be available until the second half of 2026. So what should enterprises be doing now? Blackwell keeps on getting better The current, shipping Nvidia GPU architecture is Blackwell, which was announced in 2024 as the successor to Hopper. Alongside that release, Nvidia emphasized that that its product engineering path also included squeezing as much performance as possible out of the prior Grace Hopper architecture. It's a direction that will hold true for Blackwell as well, with Vera Rubin coming later this year. "We continue to optimize our inference and training stacks for the Blackwell architecture," Dave Salvator, director of accelerated computing products at Nvidia, told VentureBeat. In the same week that Vera Rubin was being touted by Nvidia's CEO as its most powerful GPU ever, the company published new research showing improved Blackwell performance. How Blackwell performance has improved inference by 2.8x Nvidia has been able to increase Blackwell GPU performance by up to 2.8x per GPU in a period of just three short months. The performance gains come from a series of innovations that have been added to the Nvidia TensorRT-LLM inference engine. These optimizations apply to existing hardware, allowing current Blackwell deployments to achieve higher throughput without hardware changes. The performance gains are measured on DeepSeek-R1, a 671-billion parameter mixture-of-experts (MoE) model that activates 37 billion parameters per token. Among the technical innovations that provide the performance boost: * Programmatic dependent launch (PDL): Expanded implementation reduces kernel launch latencies, increasing throughput. * All-to-all communication: New implementation of communication primitives eliminates an intermediate buffer, reducing memory overhead. * Multi-token prediction (MTP): Generates multiple tokens per forward pass rather than one at a time, increasing throughput across various sequence lengths. * NVFP4 format: A 4-bit floating point format with hardware acceleration in Blackwell that reduces memory bandwidth requirements while preserving model accuracy. The optimizations reduce cost per million tokens and allow existing infrastructure to serve higher request volumes at lower latency. Cloud providers and enterprises can scale their AI services without immediate hardware upgrades. Blackwell has also made training performance gains Blackwell is also widely used as a foundational hardware component for training the largest of large language models. In that respect, Nvidia has also reported significant gains for Blackwell when used for AI training. Since its initial launch, the GB200 NVL72 system delivered up to 1.4x higher training performance on the same hardware -- a 40% boost achieved in just five months without any hardware upgrades. The training boost came from a series of updates including: * Optimized training recipes. Nvidia engineers developed sophisticated training recipes that effectively leverage NVFP4 precision. Initial Blackwell submissions used FP8 precision, but the transition to NVFP4-optimized recipes unlocked substantial additional performance from the existing silicon. * Algorithmic refinements. Continuous software stack enhancements and algorithmic improvements enabled the platform to extract more performance from the same hardware, demonstrating ongoing innovation beyond initial deployment. Double-down on Blackwell or wait for Vera Rubin? Salvator noted that the high-end Blackwell Ultra is a market-leading platform purpose-built to run state-of-the-art AI models and applications. He added that the Nvidia Rubin platform will extend the company's market leadership and enable the next generation of MoEs to power a new class of applications to take AI innovation even further. Salvator explained that the Vera Rubin is built to address the growing demand in compute created by the continuing growth in model size and reasoning token generation from leading models such as MoE. "Blackwell and Rubin can serve the same models, but the difference is the performance, efficiency and token cost," he said. According to Nvidia's early testing results, compared to Blackwell, Rubin can train large MoE models in a quarter the number of GPUs, inference token generation with 10X more throughput per watt, and inference at 1/10th the cost per token. "Better token throughput performance and efficiency, means newer models can be built with more reasoning capability and faster agent-to-agent interaction, creating better intelligence at lower cost," Salvator said. What it all means for enterprise AI builders For enterprises deploying AI infrastructure today, current investments in Blackwell remain sound despite Vera Rubin's arrival later this year. Organizations with existing Blackwell deployments can immediately capture the 2.8x inference improvement and 1.4x training boost by updating to the latest TensorRT-LLM versions -- delivering real cost savings without capital expenditure. For those planning new deployments in the first half of 2026, proceeding with Blackwell makes sense. Waiting six months means delaying AI initiatives and potentially falling behind competitors already deploying today. However, enterprises planning large-scale infrastructure buildouts for late 2026 and beyond should factor Vera Rubin into their roadmaps. The 10x improvement in throughput per watt and 1/10th cost per token represent transformational economics for AI operations at scale. The smart approach is phased deployment: Leverage Blackwell for immediate needs while architecting systems that can incorporate Vera Rubin when available. Nvidia's continuous optimization model means this isn't a binary choice; enterprises can maximize value from current deployments without sacrificing long-term competitiveness.

[16]

Nvidia's new Vera Rubin chips: 4 things to know

Nvidia's new superchip is here. Credit: Patrick T. Fallon / AFP via Getty Images Nvidia CEO Jensen Huang announced at CES 2026 in Las Vegas this week that its new superchip platform, dubbed Vera Rubin, was on schedule and set to be released later this year. The news was one of the key takeaways from the highly anticipated keynote from Huang. Nvidia is the dominant player powering the AI industry, so a new line of chips is obviously a big deal. Here are four things to know as we await Vera Rubin's drop later this year. Nvidia introduced six chips on the so-called Rubin platform, one of which is the so-called Vera Rubin superchip that combines one Vera CPU and two Rubin GPUs in a processor. "Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof," Huang said in a statement. "With our annual cadence of delivering a new generation of AI supercomputers -- and extreme codesign across six new chips -- Rubin takes a giant leap toward the next frontier of AI." Massive AI companies will look to package different parts of this new line of chips together to make massive supercomputers that power their products. "These huge systems are what hyperscalers like Microsoft, Google, Amazon, and social media giant Meta are spending billions of dollars to get their hands on," wrote Yahoo. Nvidia assured the public the chips were set to be released this year, but when, exactly, remains unclear. "Typically, production for chips this advanced -- which Nvidia is building with its longtime partner TSMC -- starts at low volume while the chips go through testing and validation and ramps up at a later stage," wrote Wired. There had been rumors of delays, so the announcement at CES seems aimed at quelling those fears. Nvidia has promised the Vera Rubin superchips are powerful and more efficient, which should, in turn, make AI products relying on them more efficient. That's why major companies will likely be lining up to purchase the new line of products. Huang said the Rubin chips could generate tokens -- the units used to measure output -- ten times more efficiently. We're still waiting to get all the details -- and to see when the chips actually hit the market -- but the announcement certainly was a major bit of AI news out of CES.

[17]

Nvidia debuts Rubin chip with 336B transistors and 50 petaflops of AI performance - SiliconANGLE

Nvidia debuts Rubin chip with 336B transistors and 50 petaflops of AI performance Nvidia Corp. today announced a new flagship graphics processing unit, Rubin, that provides five times the inference performance of Blackwell. The GPU made its debut at CES alongside five other data center chips. Customers can deploy them together in a rack called the Vera Rubin NVL72 that Nvidia says ships with 220 trillion transistors, more bandwidth than the entire internet and real-time component health checks. Rubin includes 336 billion transistors that provide 50 petaflops of performance when processing NVFP4 data. Blackwell, Nvidia's previous-generation GPU architecture, provided up to 10 petaflops. Rubin's training speed, meanwhile, is 250% faster at 35 petaflops. Some of the chip's computing power is provided by a module called the Transformer Engine that also shipped with Blackwell. According to Nvidia, Rubin's Transformer Engine is based on a newer design with a performance-boosting feature called hardware-accelerated adaptive compression. Compressing a file reduces the number of bits it contains. That decreases the amount of data AI models have to crunch and thereby speeds up processing. "Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof," said Nvidia Chief Executive Officer Jensen Huang. "With our annual cadence of delivering a new generation of AI supercomputers -- and extreme codesign across six new chips -- Rubin takes a giant leap toward the next frontier of AI." Nvidia plans to ship its new silicon as part of an appliance called the Vera Rubin NVL72 NVL72. It will combine 72 Rubin chips with 36 of the company's new Vera central processing units, which also made their debut at CES. Vera includes 88 cores based on a custom design called Olympus. They're compatible with Armv9.2, a widely-used version of Arm Holdings plc's CPU instruction set architecture. The Vera Rubin NVL72 keeps its chips in modules called trays. According to Nvidia, the trays have a cable-free design that cuts assembly and servicing times by a factor of up to 18 compared to Blackwell-based appliances. The RAS Engine, a subsystem that the company's GPU racks use to automate certain maintenance tasks, has been upgraded as well. It provides fault tolerance features and performs real-time health checks to verify that the hardware is working as expected. Nvidia says that the Vera Rubin NVL72 provides 260 terabits per second per bandwidth, which is more than the entire internet. The appliance processes AI models' traffic with the help of three different chips called the NVLink 6 Switch, Spectrum-6 and ConnectX-9. All three were announced at CES today. NVLink 6 Switch enables multiple GPUs inside a Vera Rubin NVL72 rack to exchange data with one another at once. That data exchange is needed to coordinate the GPUs' work while they're running distributed AI models. The Spectrum-6, in turn, is an Ethernet switch that facilitates connections between GPUs installed in different racks. Nvidia's third new networking chip, the ConnectX-9, is what's known as a SuperNIC. It's a hardware interface that a server can use to access the network of the host data center. ConnectX-9 performs networking tasks that were historically carried out by a server's CPU, which leaves more processing capacity for AI workloads. Rounding out the list of chips that Nvidia debuted today is the BlueField-4. It's a DPU, or data processing unit. A DPU offloads work from a server's main processor much like a SuperNIC, but it does so across a broader range of tasks. The BlueField-4 can perform not only networking-related computations but also certain cybersecurity and storage management operations. The BlueField-4 powers a new storage system that Nvidia calls the Inference Context Memory Storage Platform. According to the company, it will help optimize large language models' key-value cache. An LLM's attention mechanism, the component it uses to determine which data points to use and how, often repeat the same calculations. A key-value cache allows an LLM to perform a frequently recurring calculation only once, save the results and then reuse those results. That's more hardware-efficient than calculating the same output from scratch every time it's needed. The Vera Rubin NVL72 will ship alongside a smaller appliance called the DGX Rubin NVL8 that includes 8 Rubin GPUs instead of 72. The two systems form the basis of the DGX SuperPOD, a new reference architecture for building AI clusters. It combines Nvidia's latest chips with a software platform called Mission Control that companies can use to manage their AI infrastructure.

[18]

NVIDIA officially unveils Rubin: its next-gen AI platform with huge upgrades, next-gen HBM4