Nvidia's Blackwell GPUs Dominate Latest MLPerf AI Training Benchmarks

6 Sources

[1]

Is Nvidia's Blackwell the Unstoppable Force in AI Training, or Can AMD Close the Gap?

Nvidia's NVL72, a package that connects 36 Grace CPUs and 72 Blackwell GPUs, was used to achieve top results on LLM pre-training.

For those who enjoy rooting for the underdog, the latest MLPerf benchmark results will disappoint: Nvidia's GPUs have dominated the competition yet again. This includes chart-topping performance on the latest and most demanding benchmark, pre-training the Llama 3.1 405B large language model. That said, the computers built around the newest AMD GPU, MI325X, matched the performance of Nvidia's H200, Blackwell's predecessor, on the most popular LLM fine-tuning benchmark. This suggests that AMD is one generation behind Nvidia.

MLPerf Training is one of the machine learning competitions run by the MLCommons consortium. "AI performance sometimes can be sort of the wild west. MLPerf seeks to bring order to that chaos," says Dave Salvator, director of accelerated computing products at Nvidia. "This is not an easy task."

The competition consists of six benchmarks, each probing a different industry-relevant machine learning task. The benchmarks are: content recommendation, large language model pre-training, large language model fine-tuning, object detection for machine vision applications, image generation, and graph node classification for applications such as fraud detection and drug discovery.

The large language model pre-training task is the most resource intensive, and this round it was updated to be even more so. The term 'pre-training' is somewhat misleading -- it might give the impression that it's followed by a phase called 'training.' It's not. Pre-training is where most of the number crunching happens, and what follows is usually fine-tuning, which refines the model for specific tasks.

In previous iterations, the pre-training was done on the GPT-3 model. This iteration, it was replaced by Meta's Llama 3.1 405B, which is more than twice the size of GPT-3 and uses a four times larger context window.
The context window is how much input text the model can process at once. This larger benchmark represents the industry trend for ever larger models, as well as including some architectural updates.

For all six benchmarks, the fastest training time was on Nvidia's Blackwell GPUs. Nvidia itself submitted to every benchmark (other companies also submitted using various computers built around Nvidia GPUs). Nvidia's Salvator emphasized that this is the first deployment of Blackwell GPUs at scale, and that this performance is only likely to improve. "We're still fairly early in the Blackwell development lifecycle," he says.

This is the first time AMD has submitted to the training benchmark, although in previous years other companies have submitted using computers that included AMD GPUs. In the most popular benchmark, LLM fine-tuning, AMD demonstrated that its latest Instinct MI325X GPU performed on par with Nvidia's H200s. Additionally, the Instinct MI325X showed a 30 percent improvement over its predecessor, the Instinct MI300X. (The main difference between the two is that MI325X comes with 30 percent more high-bandwidth memory than MI300X.)

For its part, Google submitted to a single benchmark, the image generation task, with its Trillium TPU.

Of all submissions to the LLM fine-tuning benchmarks, the system with the largest number of GPUs was submitted by Nvidia, a computer connecting 512 B200s. At this scale, networking between GPUs starts to play a significant role. Ideally, adding more than one GPU would divide the time to train by the number of GPUs. In reality, it is always less efficient than that, as some of the time is lost to communication. Minimizing that loss is key to efficiently training the largest models. This becomes even more significant on the pre-training benchmark, where the smallest submission used 512 GPUs, and the largest used 8192.
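
The gap between ideal and actual scaling described above can be expressed as a single ratio. The following is a minimal Python sketch, not part of any MLPerf tooling: the function name `scaling_efficiency` and the timings passed to it are hypothetical, chosen only to illustrate the calculation.

```python
def scaling_efficiency(base_gpus: int, base_minutes: float,
                       scaled_gpus: int, scaled_minutes: float) -> float:
    """Return the fraction of ideal (linear) speedup actually achieved."""
    # Ideal case: N times more GPUs divides the training time by N.
    ideal_speedup = scaled_gpus / base_gpus
    # Measured case: how much faster the larger system really was.
    actual_speedup = base_minutes / scaled_minutes
    return actual_speedup / ideal_speedup


# Hypothetical timings (not MLPerf results): 4x the GPUs, 3.2x faster.
efficiency = scaling_efficiency(base_gpus=512, base_minutes=160.0,
                                scaled_gpus=2048, scaled_minutes=50.0)
print(f"scaling efficiency: {efficiency:.0%}")  # prints: scaling efficiency: 80%
```

A value of 1.0 would mean perfectly linear scaling; the shortfall is the time lost to communication between GPUs.
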
For this new benchmark, the performance scaling with more GPUs was notably close to linear, achieving 90 percent of the ideal performance. Nvidia's Salvator attributes this to the NVL72, an efficient package that connects 36 Grace CPUs and 72 Blackwell GPUs with NVLink, to form a system that "acts as a single, massive GPU," the data sheet claims. Multiple NVL72s were then connected with InfiniBand network technology.

Notably, the largest submission for this round of MLPerf -- at 8192 GPUs -- is not the largest ever, despite the increased demands of the pre-training benchmark. Previous rounds saw submissions with over 10,000 GPUs. Kenneth Leach, principal AI and machine learning engineer at Hewlett Packard Enterprise, attributes the reduction to improvements in GPUs, as well as networking between them. "Previously, we needed 16 server nodes [to pre-train LLMs], but today we're able to do it with 4. I think that's one reason we're not seeing so many huge systems, because we're getting a lot of efficient scaling."

One way to avoid the losses associated with networking is to put many AI accelerators on the same huge wafer, as done by Cerebras, who recently claimed to beat Nvidia's Blackwell GPUs by more than a factor of two on inference tasks. However, that result was measured by Artificial Analysis, which queries different providers without controlling how the workload is executed. So it's not an apples-to-apples comparison in the way the MLPerf benchmark ensures.

The MLPerf benchmark also includes a power test, measuring how much power is consumed to achieve each training task. This round, only a single submitter -- Lenovo -- included a power measurement in their submission, making it impossible to make comparisons across performers. The energy it took to fine-tune an LLM on two Blackwell GPUs was 6.11 gigajoules, or 1698 kilowatt hours, or roughly the energy it would take to heat a small home for a winter.
With growing concerns about AI's energy use, the power efficiency of training is crucial, and this author is perhaps not alone in hoping more companies submit these results in future rounds.
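
The energy figure quoted above can be checked with a one-line unit conversion. This is a small Python sketch under the standard definition that one kilowatt hour equals 3.6 megajoules; the helper name `gigajoules_to_kwh` is hypothetical.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 1,000 W x 3,600 s


def gigajoules_to_kwh(gigajoules: float) -> float:
    """Convert an energy amount from gigajoules to kilowatt hours."""
    return gigajoules * 1e9 / JOULES_PER_KWH


# 6.11 GJ is the fine-tuning energy reported in the article.
kwh = gigajoules_to_kwh(6.11)
print(f"{kwh:.0f} kWh")  # prints: 1697 kWh
```

The result lands within a kilowatt hour of the 1698 quoted in the article; the small gap is presumably because the gigajoule figure was itself rounded.
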

[2]

Nvidia chips make gains in training largest AI systems, new data shows

SAN FRANCISCO, June 4 (Reuters) - Nvidia's (NVDA.O) newest chips have made gains in training large artificial intelligence systems, new data released on Wednesday showed, with the number of chips required to train large language models dropping dramatically. MLCommons, a nonprofit group that publishes benchmark performance results for AI systems, released new data about chips from Nvidia and Advanced Micro Devices (AMD.O), among others, for training, in which AI systems are fed large amounts of data to learn from. While much of the stock market's attention has shifted to a larger market for AI inference, in which AI systems handle questions from users, the number of chips needed to train the systems is still a key competitive concern. China's DeepSeek claims to create a competitive chatbot using far fewer chips than U.S. rivals. The results were the first that MLCommons has released about how chips fared at training AI systems such as Llama 3.1 405B, an open-source AI model released by Meta Platforms (META.O) that has a large enough number of what are known as "parameters" to give an indication of how the chips would perform at some of the most complex training tasks in the world, which can involve trillions of parameters. Nvidia and its partners were the only entrants that submitted data about training that large model, and the data showed that Nvidia's new Blackwell chips are, on a per-chip basis, more than twice as fast as the previous generation of Hopper chips. In the fastest results for Nvidia's new chips, 2,496 Blackwell chips completed the training test in 27 minutes. It took more than three times that many of Nvidia's previous generation of chips to get a faster time, according to the data.
In a press conference, Chetan Kapoor, chief product officer for CoreWeave, which collaborated with Nvidia to produce some of the results, said there has been a trend in the AI industry toward stringing together smaller groups of chips into subsystems for separate AI training tasks, rather than creating homogenous groups of 100,000 chips or more. "Using a methodology like that, they're able to continue to accelerate or reduce the time to train some of these crazy, multi-trillion parameter model sizes," Kapoor said. Reporting by Stephen Nellis in San Francisco; Editing by Leslie Adler
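
The chip counts reported above can be turned into two simple figures: the total chip-hours behind the fastest Blackwell run, and the minimum number of previous-generation chips implied by "more than three times that many." This is a rough Python sketch using only the numbers in the article; the helper name `chip_hours` is hypothetical, and the Hopper run time is not given in the source, so only a lower bound on its chip count is computed.

```python
def chip_hours(num_chips: int, minutes: float) -> float:
    """Total accelerator time consumed by a run, in chip-hours."""
    return num_chips * minutes / 60


BLACKWELL_CHIPS = 2496    # from the article
BLACKWELL_MINUTES = 27    # from the article

blackwell_chip_hours = chip_hours(BLACKWELL_CHIPS, BLACKWELL_MINUTES)

# "More than three times that many" previous-generation chips were needed
# for a faster time, so the Hopper run used more chips than this bound.
hopper_chip_floor = 3 * BLACKWELL_CHIPS

print(f"Blackwell run: {blackwell_chip_hours:.1f} chip-hours")
print(f"Hopper run: more than {hopper_chip_floor} chips")
```
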

[3]

NVIDIA Blackwell Delivers Breakthrough Performance in Latest MLPerf Training Results

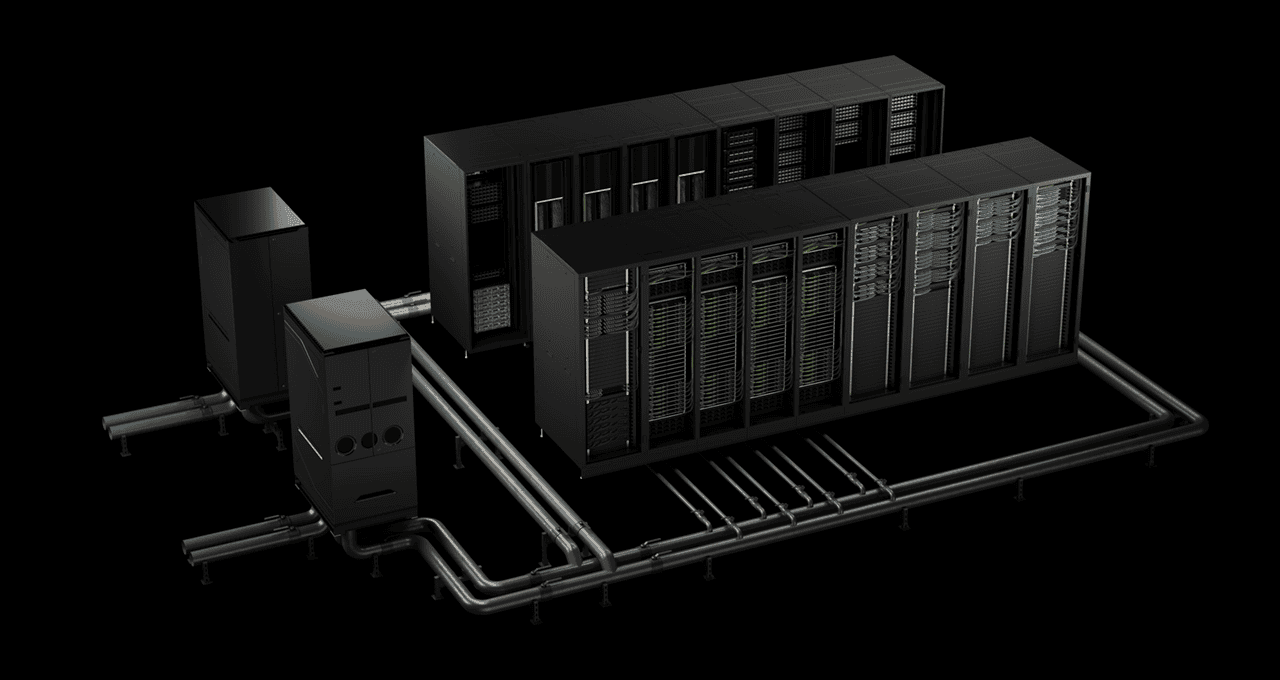

NVIDIA is working with companies worldwide to build out AI factories -- speeding the training and deployment of next-generation AI applications that use the latest advancements in training and inference. The NVIDIA Blackwell architecture is built to meet the heightened performance requirements of these new applications. In the latest round of MLPerf Training -- the 12th since the benchmark's introduction in 2018 -- the NVIDIA AI platform delivered the highest performance at scale on every benchmark and powered every result submitted on the benchmark's toughest large language model (LLM)-focused test: Llama 3.1 405B pretraining. The NVIDIA platform was the only one that submitted results on every MLPerf Training v5.0 benchmark -- underscoring its exceptional performance and versatility across a wide array of AI workloads, spanning LLMs, recommendation systems, multimodal LLMs, object detection and graph neural networks. The at-scale submissions used two AI supercomputers powered by the NVIDIA Blackwell platform: Tyche, built using NVIDIA GB200 NVL72 rack-scale systems, and Nyx, based on NVIDIA DGX B200 systems. In addition, NVIDIA collaborated with CoreWeave and IBM to submit GB200 NVL72 results using a total of 2,496 Blackwell GPUs and 1,248 NVIDIA Grace CPUs. On the new Llama 3.1 405B pretraining benchmark, Blackwell delivered 2.2x greater performance compared with previous-generation architecture at the same scale. On the Llama 2 70B LoRA fine-tuning benchmark, NVIDIA DGX B200 systems, powered by eight Blackwell GPUs, delivered 2.5x more performance compared with a submission using the same number of GPUs in the prior round. These performance leaps highlight advancements in the Blackwell architecture, including high-density liquid-cooled racks, 13.4TB of coherent memory per rack, fifth-generation NVIDIA NVLink and NVIDIA NVLink Switch interconnect technologies for scale-up and NVIDIA Quantum-2 InfiniBand networking for scale-out. 
Plus, innovations in the NVIDIA NeMo Framework software stack raise the bar for next-generation multimodal LLM training, critical for bringing agentic AI applications to market. These agentic AI-powered applications will one day run in AI factories -- the engines of the agentic AI economy. These new applications will produce tokens and valuable intelligence that can be applied to almost every industry and academic domain. The NVIDIA data center platform includes GPUs, CPUs, high-speed fabrics and networking, as well as a vast array of software like NVIDIA CUDA-X libraries, the NeMo Framework, NVIDIA TensorRT-LLM and NVIDIA Dynamo. This highly tuned ensemble of hardware and software technologies empowers organizations to train and deploy models more quickly, dramatically accelerating time to value. The NVIDIA partner ecosystem participated extensively in this MLPerf round. Beyond the submission with CoreWeave and IBM, other compelling submissions were from ASUS, Cisco, Dell Technologies, Giga Computing, Google Cloud, Hewlett Packard Enterprise, Lambda, Lenovo, Nebius, Oracle Cloud Infrastructure, Quanta Cloud Technology and Supermicro.
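
The hardware counts quoted in these results are consistent with one another: the GB200 NVL72 is described as pairing 36 Grace CPUs with 72 Blackwell GPUs, and the CoreWeave and IBM submission used 1,248 Grace CPUs with 2,496 Blackwell GPUs. A short Python check of the GPU-to-CPU ratio, using only those reported figures (the variable names are illustrative):

```python
from fractions import Fraction

# GPUs per CPU within a single NVL72, as described in the coverage.
nvl72_ratio = Fraction(72, 36)

# GPUs per CPU across the CoreWeave/IBM submission.
submission_ratio = Fraction(2496, 1248)

print(nvl72_ratio, submission_ratio)  # prints: 2 2
```

Both ratios come out to two Blackwell GPUs per Grace CPU.
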

[4]

Nvidia says its Blackwell chips lead benchmarks in training AI LLMs

Nvidia is rolling out its AI chips to data centers and what it calls AI factories throughout the world, and the company announced today its Blackwell chips are leading the AI benchmarks.

Nvidia and its partners are speeding the training and deployment of next-generation AI applications that use the latest advancements in training and inference. The Nvidia Blackwell architecture is built to meet the heightened performance requirements of these new applications.

In the latest round of MLPerf Training -- the 12th since the benchmark's introduction in 2018 -- the Nvidia AI platform delivered the highest performance at scale on every benchmark and powered every result submitted on the benchmark's toughest large language model (LLM)-focused test: Llama 3.1 405B pretraining. The Nvidia platform was the only one that submitted results on every MLPerf Training v5.0 benchmark -- underscoring its exceptional performance and versatility across a wide array of AI workloads, spanning LLMs, recommendation systems, multimodal LLMs, object detection and graph neural networks.

The at-scale submissions used two AI supercomputers powered by the Nvidia Blackwell platform: Tyche, built using Nvidia GB200 NVL72 rack-scale systems, and Nyx, based on Nvidia DGX B200 systems. In addition, Nvidia collaborated with CoreWeave and IBM to submit GB200 NVL72 results using a total of 2,496 Blackwell GPUs and 1,248 Nvidia Grace CPUs.

On the new Llama 3.1 405B pretraining benchmark, Blackwell delivered 2.2 times greater performance compared with previous-generation architecture at the same scale. On the Llama 2 70B LoRA fine-tuning benchmark, Nvidia DGX B200 systems, powered by eight Blackwell GPUs, delivered 2.5 times more performance compared with a submission using the same number of GPUs in the prior round.
These performance leaps highlight advancements in the Blackwell architecture, including high-density liquid-cooled racks, 13.4TB of coherent memory per rack, fifth-generation Nvidia NVLink and Nvidia NVLink Switch interconnect technologies for scale-up and Nvidia Quantum-2 InfiniBand networking for scale-out. Plus, innovations in the Nvidia NeMo Framework software stack raise the bar for next-generation multimodal LLM training, critical for bringing agentic AI applications to market.

These agentic AI-powered applications will one day run in AI factories -- the engines of the agentic AI economy. These new applications will produce tokens and valuable intelligence that can be applied to almost every industry and academic domain.

The Nvidia data center platform includes GPUs, CPUs, high-speed fabrics and networking, as well as a vast array of software like Nvidia CUDA-X libraries, the NeMo Framework, Nvidia TensorRT-LLM and Nvidia Dynamo. This highly tuned ensemble of hardware and software technologies empowers organizations to train and deploy models more quickly, dramatically accelerating time to value.

The Nvidia partner ecosystem participated extensively in this MLPerf round. Beyond the submission with CoreWeave and IBM, other compelling submissions were from ASUS, Cisco, Giga Computing, Lambda, Lenovo, Quanta Cloud Technology and Supermicro.

First MLPerf Training submissions using GB200

MLPerf Training was developed by the MLCommons Association, which has more than 125 members and affiliates. Its time-to-train metric ensures the training process produces a model that meets the required accuracy. And its standardized benchmark run rules ensure apples-to-apples performance comparisons. The results are peer-reviewed before publication.

The basics on training benchmarks

Dave Salvator is someone I knew when he was part of the tech press. Now he is director of accelerated computing products in the Accelerated Computing Group at Nvidia.
In a press briefing, Salvator noted that Nvidia CEO Jensen Huang talks about this notion of the types of scaling laws for AI. They include pre-training, where you're basically teaching the AI model knowledge. That's starting from zero. It's a heavy computational lift that is the backbone of AI, Salvator said.

From there, Nvidia moves into post-training scaling. This is where models kind of go to school, and this is a place where you can do things like fine-tuning, for instance, where you bring in a different data set to teach a pre-trained model that's been trained up to a point, to give it additional domain knowledge of your particular data set.

And then lastly, there is test-time scaling or reasoning, sometimes called long thinking. The other term this goes by is agentic AI. It's AI that can actually think, reason and solve problems. Where you would otherwise ask a question and get a relatively simple answer, test-time scaling and reasoning can work on much more complicated tasks and deliver rich analysis.

And then there is also generative AI, which can generate content on an as-needed basis. That can include text summarization and translation, but also visual content and even audio content. There are a lot of types of scaling that go on in the AI world. For the benchmarks, Nvidia focused on pre-training and post-training results.

"That's where AI begins what we call the investment phase of AI. And then when you get into inferencing and deploying those models and then generating basically those tokens, that's where you begin to get your return on your investment in AI," he said.

The MLPerf benchmark is in its 12th round and it dates back to 2018. The consortium backing it has over 125 members and it's been used for both inference and training tests. The industry sees the benchmarks as robust.

"As I'm sure a lot of you are aware, sometimes performance claims in the world of AI can be a bit of the Wild West.
MLPerf seeks to bring some order to that chaos," Salvator said. "Everyone has to do the same amount of work. Everyone is held to the same standard in terms of convergence. And once results are submitted, those results are then reviewed and vetted by all the other submitters, and people can ask questions and even challenge results."

The most intuitive metric around training is how long it takes to train an AI model to what's called convergence. That means hitting a specified level of accuracy. It's an apples-to-apples comparison, Salvator said, and it takes into account constantly changing workloads.

This year, there's a new Llama 3.1 405B workload, which replaces the GPT-3 175B workload that was in the benchmark previously. In the benchmarks, Salvator noted Nvidia had a number of records. The Nvidia GB200 NVL72 AI factories are fresh from the fabrication factories. From one generation of chips (Hopper) to the next (Blackwell), Nvidia saw a 2.5 times improvement for image generation results.

"We're still fairly early in the Blackwell product life cycle, so we fully expect to be getting more performance over time from the Blackwell architecture, as we continue to refine our software optimizations and as new, frankly heavier workloads come into the market," Salvator said.

He noted Nvidia was the only company to have submitted entries for all benchmarks.

"The great performance we're achieving comes through a combination of things. It's our fifth-gen NVLink and NVSwitch delivering up to 2.66 times more performance, along with other just general architectural goodness in Blackwell, along with just our ongoing software optimizations that make that performance possible," Salvator said.

He added, "Because of Nvidia's heritage, we have been known for the longest time as those GPU guys.
We certainly make great GPUs, but we have gone from being just a chip company to not only being a system company with things like our DGX servers, to now building entire racks and data centers with things like our rack designs, which are now reference designs to help our partners get to market faster, to building entire data centers, which ultimately then build out entire infrastructure, which we then are now referring to as AI factories. It's really been this really interesting journey."

[5]

Nvidia chips make gains in training largest AI systems, new data shows

Nvidia's newest chips have made gains in training large artificial intelligence systems, new data released on Wednesday showed, with the number of chips required to train large language models dropping dramatically. MLCommons, a nonprofit group that publishes benchmark performance results for AI systems, released new data about chips from Nvidia and Advanced Micro Devices, among others, for training, in which AI systems are fed large amounts of data to learn from. While much of the stock market's attention has shifted to a larger market for AI inference, in which AI systems handle questions from users, the number of chips needed to train the systems is still a key competitive concern. China's DeepSeek claims to create a competitive chatbot using far fewer chips than U.S. rivals. The results were the first that MLCommons has released about how chips fared at training AI systems such as Llama 3.1 405B, an open-source AI model released by Meta Platforms that has a large enough number of what are known as "parameters" to give an indication of how the chips would perform at some of the most complex training tasks in the world, which can involve trillions of parameters. Nvidia and its partners were the only entrants that submitted data about training that large model, and the data showed that Nvidia's new Blackwell chips are, on a per-chip basis, more than twice as fast as the previous generation of Hopper chips.
In the fastest results for Nvidia's new chips, 2,496 Blackwell chips completed the training test in 27 minutes. It took more than three times that many of Nvidia's previous generation of chips to get a faster time, according to the data. In a press conference, Chetan Kapoor, chief product officer for CoreWeave, which collaborated with Nvidia to produce some of the results, said there has been a trend in the AI industry toward stringing together smaller groups of chips into subsystems for separate AI training tasks, rather than creating homogenous groups of 100,000 chips or more. "Using a methodology like that, they're able to continue to accelerate or reduce the time to train some of these crazy, multi-trillion parameter model sizes," Kapoor said.

[6]

Nvidia chips make gains in training largest AI systems, new data shows

SAN FRANCISCO (Reuters) -Nvidia's newest chips have made gains in training large artificial intelligence systems, new data released on Wednesday showed, with the number of chips required to train large language models dropping dramatically. MLCommons, a nonprofit group that publishes benchmark performance results for AI systems, released new data about chips from Nvidia and Advanced Micro Devices, among others, for training, in which AI systems are fed large amounts of data to learn from. While much of the stock market's attention has shifted to a larger market for AI inference, in which AI systems handle questions from users, the number of chips needed to train the systems is still a key competitive concern. China's DeepSeek claims to create a competitive chatbot using far fewer chips than U.S. rivals. The results were the first that MLCommons has released about how chips fared at training AI systems such as Llama 3.1 405B, an open-source AI model released by Meta Platforms that has a large enough number of what are known as "parameters" to give an indication of how the chips would perform at some of the most complex training tasks in the world, which can involve trillions of parameters. Nvidia and its partners were the only entrants that submitted data about training that large model, and the data showed that Nvidia's new Blackwell chips are, on a per-chip basis, more than twice as fast as the previous generation of Hopper chips. In the fastest results for Nvidia's new chips, 2,496 Blackwell chips completed the training test in 27 minutes. It took more than three times that many of Nvidia's previous generation of chips to get a faster time, according to the data. 
In a press conference, Chetan Kapoor, chief product officer for CoreWeave, which collaborated with Nvidia to produce some of the results, said there has been a trend in the AI industry toward stringing together smaller groups of chips into subsystems for separate AI training tasks, rather than creating homogenous groups of 100,000 chips or more. "Using a methodology like that, they're able to continue to accelerate or reduce the time to train some of these crazy, multi-trillion parameter model sizes," Kapoor said. (Reporting by Stephen Nellis in San Francisco; Editing by Leslie Adler)

Nvidia's new Blackwell GPUs show significant performance gains in AI model training, particularly for large language models, according to the latest MLPerf benchmarks. The results highlight Nvidia's continued dominance in AI hardware.

Nvidia's Blackwell GPUs Lead in MLPerf Training Benchmarks

Nvidia has once again demonstrated its dominance in AI hardware with its latest Blackwell GPUs, showcasing significant performance gains in the most recent MLPerf training benchmarks. The results, released by MLCommons, a nonprofit consortium of over 125 members, highlight Nvidia's continued leadership in AI model training, particularly for large language models (LLMs) [1].

Benchmark Performance and Improvements

The MLPerf Training v5.0 benchmarks included six tests covering various AI tasks, with the most resource-intensive being the LLM pre-training task. This round featured Meta's Llama 3.1 405B model, which is more than twice the size of the previously used GPT-3 and has a four times larger context window [1].

Key performance highlights include:

- Nvidia's Blackwell GPUs achieved the fastest training times across all six benchmarks [3].
- On the new Llama 3.1 405B pre-training benchmark, Blackwell delivered 2.2x greater performance compared to the previous generation architecture at the same scale [4].
- For the Llama 2 70B LoRA fine-tuning benchmark, Nvidia DGX B200 systems with eight Blackwell GPUs showed 2.5x more performance than the previous round's submission with the same number of GPUs [3].

Scaling and Efficiency

The benchmarks also demonstrated impressive scaling capabilities:

- In the fastest results, 2,496 Blackwell chips completed the training test in 27 minutes [2].
- It required more than three times as many of Nvidia's previous generation chips to achieve a faster time [5].
- The performance scaling with more GPUs was notably close to linear, achieving 90% of the ideal performance [1].

Technological Advancements

Nvidia's performance improvements are attributed to several factors:

- The NVL72 package, which efficiently connects 36 Grace CPUs and 72 Blackwell GPUs [1].
- Advancements in the Blackwell architecture, including high-density liquid-cooled racks and 13.4TB of coherent memory per rack [3].
- Fifth-generation Nvidia NVLink and NVLink Switch interconnect technologies for scale-up [3].
- Nvidia Quantum-2 InfiniBand networking for scale-out capabilities [3].

Industry Implications and Future Outlook

The benchmark results underscore Nvidia's vision for "AI factories": large-scale computing infrastructures designed to train and deploy next-generation AI applications [3]. This concept aligns with the industry trend of creating smaller, more efficient GPU clusters for specific AI training tasks, as noted by Chetan Kapoor, chief product officer at CoreWeave [2].

While Nvidia maintains its lead, competitors are not far behind. AMD's latest Instinct MI325X GPU demonstrated performance on par with Nvidia's H200s in the LLM fine-tuning benchmark, suggesting AMD is about one generation behind Nvidia [1].

As the AI hardware landscape continues to evolve, these benchmarks provide crucial insights into the capabilities of different chip architectures and their potential impact on the development of increasingly sophisticated AI models and applications.

Summarized by Navi
