NVIDIA Unveils Alpamayo-R1: First Open-Source Reasoning AI Model for Autonomous Driving Research

6 Sources

[1]

Nvidia announces new open AI models and tools for autonomous driving research

Nvidia announced new infrastructure and AI models on Monday as it works to build the backbone technology for physical AI, including robots and autonomous vehicles that can perceive and interact with the real world.

The semiconductor giant announced Alpamayo-R1, an open reasoning vision language model for autonomous driving research, at the NeurIPS AI conference in San Diego, California. The company claims this is the first vision language action model focused on autonomous driving. Vision language models can process both text and images together, allowing vehicles to "see" their surroundings and make decisions based on what they perceive.

This new model is based on Nvidia's Cosmos Reason model, a reasoning model that thinks through decisions before it responds. Nvidia initially released the Cosmos model family in January 2025; additional models were released in August.

Technology like Alpamayo-R1 is critical for companies looking to reach level 4 autonomous driving, which means full autonomy in a defined area and under specific circumstances, Nvidia said in a blog post. Nvidia hopes that this type of reasoning model will give autonomous vehicles the "common sense" to better approach nuanced driving decisions like humans do. The new model is available on GitHub and Hugging Face.

Alongside the new vision model, Nvidia also uploaded new step-by-step guides, inference resources and post-training workflows to GitHub -- collectively called the Cosmos Cookbook -- to help developers better use and train Cosmos models for their specific use cases. The guide covers data curation, synthetic data generation, and model evaluation.

These announcements come as the company pushes full-speed into physical AI as a new avenue for its advanced AI GPUs. Nvidia's co-founder and CEO Jensen Huang has repeatedly said that the next wave of AI is physical AI.
Bill Dally, Nvidia's chief scientist, echoed that sentiment in a conversation with TechCrunch over the summer, emphasizing physical AI in robotics. "I think eventually robots are going to be a huge player in the world and we want to basically be making the brains of all the robots," Dally said at the time. "To do that, we need to start developing the key technologies."

[2]

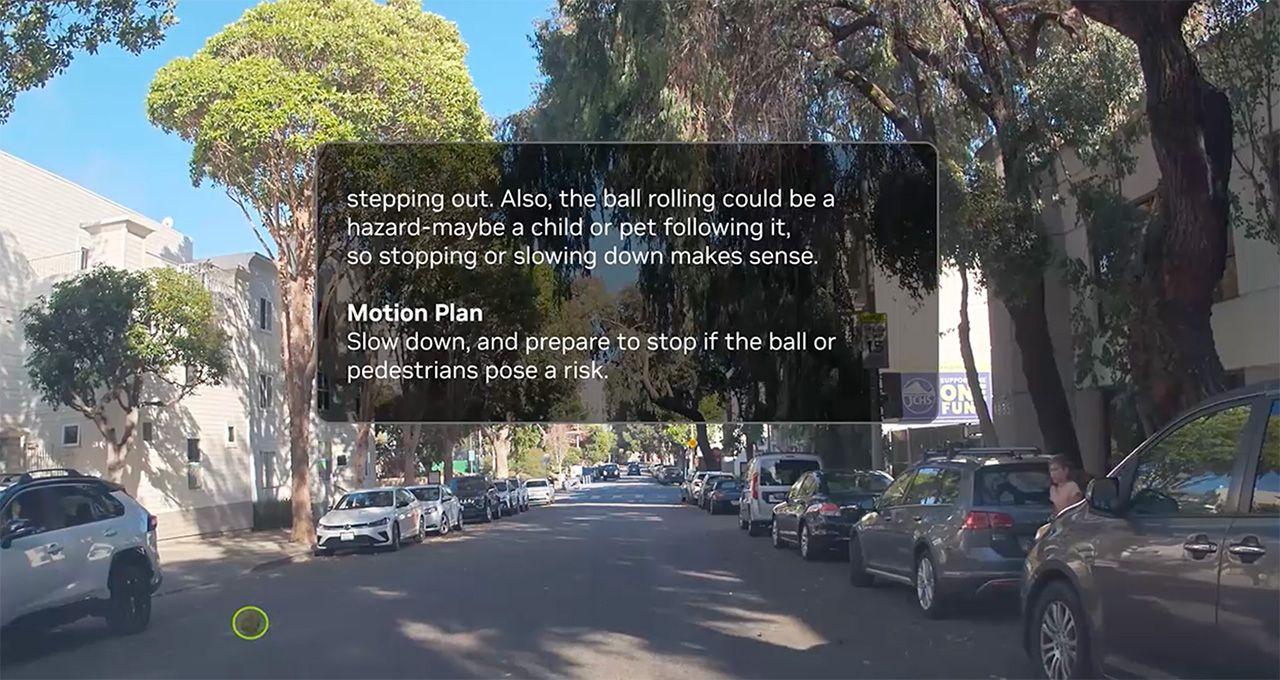

At NeurIPS, NVIDIA Advances Open Model Development for Digital and Physical AI

Researchers worldwide rely on open-source technologies as the foundation of their work. To equip the community with the latest advancements in digital and physical AI, NVIDIA is further expanding its collection of open AI models, datasets and tools -- with potential applications in virtually every research field.

At NeurIPS, one of the world's top AI conferences, NVIDIA is unveiling open physical AI models and tools to support research, including Alpamayo-R1 (AR1), the world's first industry-scale open reasoning vision language action (VLA) model for autonomous driving. In digital AI, NVIDIA is releasing new models and datasets for speech and AI safety.

NVIDIA researchers are presenting over 70 papers, talks and workshops at the conference, sharing innovative projects that span AI reasoning, medical research, autonomous vehicle (AV) development and more.

These initiatives deepen NVIDIA's commitment to open source -- an effort recognized by a new Openness Index from Artificial Analysis, an independent organization that benchmarks AI. The Artificial Analysis Openness Index rates the NVIDIA Nemotron family of open technologies for frontier AI development among the most open in the AI ecosystem, based on the permissibility of the model licenses, data transparency and availability of technical details.

While previous iterations of self-driving models struggled with nuanced situations -- a pedestrian-heavy intersection, an upcoming lane closure or a double-parked vehicle in a bike lane -- reasoning gives autonomous vehicles the common sense to drive more like humans do. AR1 accomplishes this by breaking down a scenario and reasoning through each step. It considers all possible trajectories, then uses contextual data to choose the best route.
For example, by tapping into the chain-of-thought reasoning enabled by AR1, an AV driving in a pedestrian-heavy area next to a bike lane could take in data from its path, incorporate reasoning traces -- explanations on why it took certain actions -- and use that information to plan its future trajectory, such as moving away from the bike lane or stopping for potential jaywalkers.

AR1's open foundation, based on NVIDIA Cosmos Reason, lets researchers customize the model for their own non-commercial use cases, whether for benchmarking or building experimental AV applications. For post-training AR1, reinforcement learning has proven especially effective -- researchers observed a significant improvement in reasoning capabilities with AR1 compared with the pretrained model.

NVIDIA DRIVE Alpamayo-R1 will be available on GitHub and Hugging Face, and a subset of the data used to train and evaluate the model is available in the NVIDIA Physical AI Open Datasets. NVIDIA has also released the open-source AlpaSim framework to evaluate AR1.

Developers can learn how to use and post-train Cosmos-based models using step-by-step recipes, quick-start inference examples and advanced post-training workflows now available in the Cosmos Cookbook. It's a comprehensive guide for physical AI developers that covers every step in AI development, including data curation, synthetic data generation and model evaluation.

There are virtually limitless possibilities for Cosmos-based applications. The latest examples from NVIDIA include LidarGen, a world model for generating lidar data; Omniverse NuRec Fixer, for correcting artifacts in neural reconstructions; Cosmos Policy, for turning video models into robot policies; and ProtoMotions3, a framework for training physically simulated digital humans and robots. Policy models can be trained in NVIDIA Isaac Lab and Isaac Sim, and data generated from the policy models can then be used to post-train NVIDIA GR00T N models for robotics.

NVIDIA ecosystem partners are developing their latest technologies with Cosmos world foundation models (WFMs). AV developer Voxel51 is contributing model recipes to the Cosmos Cookbook.
Physical AI developers 1X, Figure AI, Foretellix, Gatik, Oxa, PlusAI and X-Humanoid are using WFMs for their latest physical AI applications. And researchers at ETH Zurich are presenting a NeurIPS paper that highlights using Cosmos models for realistic and cohesive 3D scene creation.

NVIDIA Nemotron Additions Bolster the Digital AI Developer Toolkit

NVIDIA is also releasing new multi-speaker speech AI models, a new model with reasoning capabilities and datasets for AI safety, as well as open tools to generate high-quality synthetic datasets for reinforcement learning and domain-specific model customization. These tools include NeMo Gym, for reinforcement learning environments, and the NeMo Data Designer Library, now open-sourced under Apache 2.0.

NVIDIA ecosystem partners using NVIDIA Nemotron and NeMo tools to build secure, specialized agentic AI include CrowdStrike, Palantir and ServiceNow.

NeurIPS attendees can explore these innovations at the Nemotron Summit, taking place today from 4-8 p.m. PT, with an opening address by Bryan Catanzaro, vice president of applied deep learning research at NVIDIA.

Of the dozens of NVIDIA-authored research papers at NeurIPS, here are a few highlights advancing language models:

[3]

NVIDIA debuts first open reasoning AI for self-driving vehicles

NVIDIA is expanding the frontiers of AI research with a bold move. The company on Monday announced the launch of open physical and digital AI models that could reshape autonomous vehicles, robotics, and speech processing. The company unveiled these breakthroughs at NeurIPS, one of the world's top AI conferences, signaling a new era for open-source AI development. Among the highlights is Alpamayo-R1 (AR1), the world's first open reasoning vision-language-action (VLA) model for autonomous driving. Designed to combine chain-of-thought reasoning with path planning, AR1 helps vehicles navigate complex scenarios with human-like judgment.

[4]

NVIDIA Open Sources Reasoning Model for Autonomous Driving at NeurIPS 2025 | AIM

At NeurIPS 2025, NVIDIA announced a new set of open models, datasets and tools spanning autonomous driving, speech AI and safety research, strengthening its position in open digital and physical AI development. The company also received recognition from Artificial Analysis' new Openness Index, which placed NVIDIA's Nemotron family among the most transparent model ecosystems.

NVIDIA released DRIVE Alpamayo-R1, described by the company as "the world's first open reasoning VLA model for autonomous driving." Bryan Catanzaro, NVIDIA's vice president of applied deep learning research, said the model integrates chain-of-thought reasoning with path planning to support research on complex road scenarios and level-4 autonomy.

According to NVIDIA, AR1 breaks down scenes step by step, considers possible trajectories and uses contextual data to determine routes. A subset of its training data is available through NVIDIA's Physical AI Open Datasets, and the model will be accessible on GitHub and Hugging Face. Built on NVIDIA Cosmos Reason, AR1 can be customised for non-commercial research. NVIDIA said reinforcement learning was effective in post-training the model, improving its reasoning performance compared with the pretrained version. The company also released AlpaSim, an open framework for evaluating AR1.

Moreover, NVIDIA expanded the Cosmos ecosystem with new tools and workflows in the Cosmos Cookbook, offering step-by-step guidance for model post-training, synthetic data generation and evaluation. New Cosmos-based systems include LidarGen, a world model for generating lidar data; Omniverse NuRec Fixer, for correcting artifacts in neural reconstructions; Cosmos Policy for turning video models into robot policies; and ProtoMotions3, a framework for training physically simulated digital humans and robots.
Industry partners, including Voxel51, 1X, Figure AI, Foretellix, Gatik, Oxa, PlusAI and X-Humanoid, are using Cosmos world foundation models. ETH Zurich researchers are presenting NeurIPS work showing how Cosmos models can generate cohesive 3D scenes. In digital AI, NVIDIA introduced new models and datasets under the Nemotron and NeMo umbrellas. These include MultiTalker Parakeet, a speech recognition model for multi-speaker environments; Sortformer, a diarization model; and Nemotron Content Safety Reasoning, which the company said applies domain-specific safety rules using reasoning. NVIDIA also opened the Nemotron Content Safety Audio Dataset, used for detecting unsafe audio content. Tools for synthetic data and reinforcement learning were also released, including NeMo Gym for RL environments and the NeMo Data Designer Library, now open-sourced under Apache 2.0. CrowdStrike, Palantir and ServiceNow are among partners using Nemotron and NeMo tools for specialised agentic AI. NVIDIA researchers are presenting more than 70 papers and sessions at NeurIPS.

[5]

Nvidia just unveiled its first AI model for autonomous driving research

Nvidia announced new AI models and infrastructure on Monday at the NeurIPS AI conference in San Diego, California, to advance physical AI for robots and autonomous vehicles that perceive and interact with the real world. The initiative focuses on developing backbone technology for autonomous driving research through open-source tools.

The semiconductor company introduced Alpamayo-R1, described as an open reasoning vision-language model tailored for autonomous driving research. Nvidia positions this as the first vision-language action model specifically focused on autonomous driving applications. Vision-language models integrate processing of both text and images, enabling vehicles to "see" their surroundings and generate decisions based on perceptual inputs from the environment.

Alpamayo-R1 builds directly on Nvidia's Cosmos-Reason model, which functions as a reasoning model that deliberates through decisions prior to generating responses. This foundational approach allows for more structured decision-making in complex scenarios. Nvidia first released the broader Cosmos model family in January 2025, establishing a series of AI tools designed for advanced reasoning tasks. In August 2025, the company expanded this lineup with additional models to enhance capabilities in physical AI domains.

According to an Nvidia blog post, technology such as Alpamayo-R1 plays a critical role for companies pursuing level 4 autonomous driving. This level defines full autonomy within a designated operational area and under particular conditions, where vehicles operate without human intervention within those parameters. The reasoning capabilities embedded in the model aim to equip autonomous vehicles with the "common sense" required to handle nuanced driving decisions in ways comparable to human drivers.
Developers can access Alpamayo-R1 immediately on platforms including GitHub and Hugging Face, facilitating widespread adoption and experimentation in autonomous driving projects. In parallel, Nvidia released the Cosmos Cookbook on GitHub, comprising step-by-step guides, inference resources, and post-training workflows. These materials assist developers in training and deploying Cosmos models for targeted use cases, with coverage extending to data curation processes, synthetic data generation techniques, and comprehensive model evaluation methods.

[6]

Nvidia releases open-source software for self-driving car development

Nvidia on Monday released new open-source software aimed at speeding up the development of self-driving cars using some of the newest "reasoning" techniques in artificial intelligence.

Nvidia has risen to become the world's most valuable company as its chips have become central to the development of AI. But the company also maintains a broad software research arm that releases open-source AI code that others, such as Palantir Technologies, can adopt.

On Monday, Nvidia released Alpamayo-R1 for self-driving vehicles. The software is what is known as a "vision-language-action" AI model, which means that the self-driving vehicle translates what its sensor banks see on the road into a description using natural language.

The breakthrough with Alpamayo, which was named for a mountain peak in Peru that is particularly tricky to scale, is that it thinks aloud to itself as it plans its path through the world. For example, if the car sees a bike path, it will note that it sees the path and is adjusting course. Most previous self-driving car software was limited in how it explained why the car chose a particular path, making it hard for engineers to understand what needed to be fixed to make the cars safer.

"One of the entire motivations behind making this open is so that developers and researchers can... understand how these models work so we can, as an industry, come up with standard ways of evaluating how they work," Katie Young, senior marketing manager for the automotive enterprise at Nvidia, told Reuters.


NVIDIA announced Alpamayo-R1, the world's first open reasoning vision-language-action model for autonomous driving research at NeurIPS 2025. The model combines chain-of-thought reasoning with path planning to help vehicles navigate complex scenarios with human-like judgment, marking a significant step toward Level 4 autonomous driving capabilities.

NVIDIA's Breakthrough in Autonomous Driving AI

NVIDIA announced a significant advancement in autonomous driving technology at the NeurIPS AI conference in San Diego, California, unveiling Alpamayo-R1 (AR1), described as the world's first open reasoning vision-language-action (VLA) model specifically designed for autonomous driving research [1]. This groundbreaking model represents a major step forward in the company's push toward physical AI applications, combining advanced reasoning capabilities with practical autonomous vehicle development [2].


The semiconductor giant positions AR1 as a critical technology for companies pursuing Level 4 autonomous driving, which defines full autonomy within designated operational areas under specific conditions [1]. Unlike previous iterations of self-driving models that struggled with nuanced situations, AR1 incorporates chain-of-thought reasoning that enables vehicles to approach complex driving scenarios with human-like judgment [2].

Technical Architecture and Capabilities

Alpamayo-R1 builds directly on NVIDIA's Cosmos Reason model, a reasoning framework that deliberates through decisions before generating responses [5]. As a vision-language model, AR1 can process both text and images together, allowing vehicles to "see" their surroundings and make informed decisions based on perceptual inputs from the environment [1].

The model's reasoning process involves breaking down complex scenarios step by step, considering possible trajectories, and using contextual data to determine the best route [4]. For example, when navigating a pedestrian-heavy area adjacent to a bike lane, AR1 can incorporate reasoning traces (explanations for specific actions) to plan future trajectories, such as moving away from the bike lane or stopping for potential jaywalkers [2].
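
To make the "consider candidate trajectories, weigh context, pick one, explain why" loop described above concrete, here is a toy Python sketch. It is not AR1's method: AR1 is a learned vision-language-action model built on Cosmos Reason, whereas this sketch uses fixed, hand-written rules. The `Trajectory` class, the `score` and `choose` functions, the context keys and every threshold and penalty are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass

# Toy illustration only: hand-written rules, NOT how AR1 works internally.


@dataclass
class Trajectory:
    """Hypothetical, highly simplified candidate trajectory."""
    name: str
    progress_m: float            # distance gained along the route, in metres
    gap_to_bike_lane_m: float    # closest approach to the bike lane
    gap_to_pedestrians_m: float  # closest approach to any pedestrian


def score(traj: Trajectory, context: dict) -> tuple[float, list[str]]:
    """Return a cost (lower is better) and short reasoning notes."""
    cost = 0.0 - traj.progress_m  # more progress along the route lowers the cost
    notes = []
    # Hypothetical context rules; thresholds and penalties are arbitrary.
    if context.get("cyclist_in_bike_lane") and traj.gap_to_bike_lane_m < 1.5:
        cost += 50.0
        notes.append(f"{traj.name}: too close to an occupied bike lane")
    if context.get("pedestrians_near_curb") and traj.gap_to_pedestrians_m < 2.0:
        cost += 100.0
        notes.append(f"{traj.name}: passes too close to pedestrians")
    return cost, notes


def choose(candidates: list[Trajectory], context: dict) -> tuple[Trajectory, list[str]]:
    """Score every candidate, keep the cheapest, and record a readable trace."""
    trace: list[str] = []
    best, best_cost = None, float("inf")
    for traj in candidates:
        cost, notes = score(traj, context)
        trace.extend(notes)
        trace.append(f"{traj.name}: cost {cost:.1f}")
        if cost < best_cost:
            best, best_cost = traj, cost
    trace.append(f"selected {best.name}")
    return best, trace


candidates = [
    Trajectory("keep_lane", progress_m=20.0, gap_to_bike_lane_m=0.8, gap_to_pedestrians_m=3.0),
    Trajectory("shift_left", progress_m=18.0, gap_to_bike_lane_m=2.0, gap_to_pedestrians_m=3.5),
    Trajectory("stop", progress_m=0.0, gap_to_bike_lane_m=2.0, gap_to_pedestrians_m=5.0),
]
context = {"cyclist_in_bike_lane": True, "pedestrians_near_curb": False}

best, trace = choose(candidates, context)
for line in trace:
    print(line)
```

Running it prints one line per scored candidate and ends with `selected shift_left`: the lane-keeping option is penalized for crowding the occupied bike lane, echoing the bike-lane example above. A learned model like AR1 would instead produce its trajectory and reasoning trace from sensor data rather than from fixed rules like these.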


Open-Source Accessibility and Development Tools

NVIDIA has made Alpamayo-R1 available to researchers and developers through GitHub and Hugging Face, facilitating adoption and experimentation in autonomous driving projects [1]. The model's open foundation allows researchers to customize it for non-commercial use cases, whether for benchmarking or building experimental autonomous vehicle applications [2].

Accompanying the model release, NVIDIA introduced the Cosmos Cookbook, a comprehensive collection of step-by-step guides, inference resources, and post-training workflows uploaded to GitHub [1]. This resource covers essential aspects of AI development, including data curation, synthetic data generation, and model evaluation, providing developers with the tools necessary to train and deploy Cosmos models for targeted applications [5].


Industry Recognition and Strategic Vision

NVIDIA's commitment to open-source development has gained recognition from Artificial Analysis' new Openness Index, which rates the company's Nemotron family among the most transparent model ecosystems in AI [4]. The index evaluates models based on license permissibility, data transparency, and availability of technical details [2].

CEO Jensen Huang has consistently emphasized that the next wave of AI development lies in physical AI applications, with Chief Scientist Bill Dally reinforcing this vision by stating the company's goal to "basically be making the brains of all the robots" [1]. This strategic direction positions NVIDIA at the forefront of developing foundational technologies for autonomous vehicles and robotics applications [3].

Summarized by Navi
