Nvidia Unveils Blackwell Ultra B300: A Leap Forward in AI Computing

9 Sources

[1]

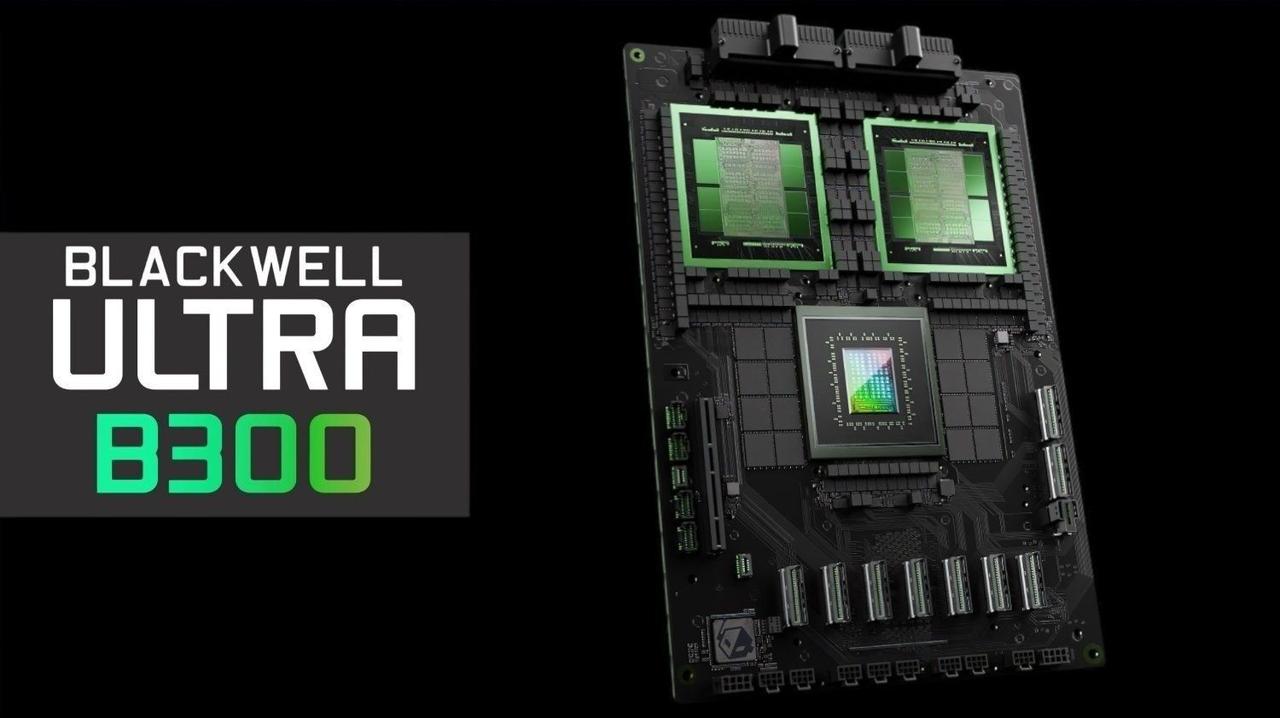

Nvidia announces Blackwell Ultra B300 -- 1.5X faster than B200 with 288GB HBM3e and 15 PFLOPS dense FP4

The Nvidia Blackwell Ultra B300 data center GPU was announced today during CEO Jensen Huang's keynote at GTC 2025 in San Jose, CA. Offering 50% more memory and FP4 compute than the existing B200 solution, it raises the stakes in the race to faster and more capable AI models yet again. Nvidia says it's "built for the age of reasoning," referencing more sophisticated LLMs like DeepSeek R1 that do more than just regurgitate previously digested information.

Naturally, Blackwell Ultra B300 isn't just about a single GPU. Along with the base B300 building block, there will be new B300 NVL16 server rack solutions, a GB300 DGX Station, and GB300 NVL72 full rack solutions. Put eight NVL72 racks together, and you get the full Blackwell Ultra DGX SuperPOD: 288 Grace CPUs, 576 Blackwell Ultra GPUs, 300TB of fast memory, and 11.5 ExaFLOPS of FP4. These can be linked together in supercomputer solutions that Nvidia classifies as "AI factories."

While Nvidia says that Blackwell Ultra will have 1.5X more dense FP4 compute, what isn't clear is whether other compute formats have scaled similarly. We would expect that to be the case, but it's possible Nvidia has done more than simply enabling more SMs, boosting clocks, and increasing the capacity of the HBM3e stacks. Clocks may be slightly slower in FP8 or FP16 modes, for example. But here are the core specs that we have, with some inference of other data (indicated by question marks).

We asked for some clarification on the performance and details for Blackwell Ultra B300 and were told: "Blackwell Ultra GPUs (in GB300 and B300) are different chips than Blackwell GPUs (GB200 and B200). Blackwell Ultra GPUs are designed to meet the demand for test-time scaling inference with a 1.5X increase in the FP4 compute." Does that mean B300 is a physically larger chip to fit more tensor cores into the package? That seems to be the case, but we're awaiting further details.
What's clear is that the new B300 GPUs will offer significantly more computational throughput than the B200. Having 50% more on-package memory will enable even larger AI models with more parameters, and the accompanying compute will certainly help. Nvidia gave some examples of the potential performance, though these were compared to Hopper, so that muddies the waters. We'd like to see comparisons between B200 and B300 in similar configurations -- with the same number of GPUs, specifically. But that's not what we have.

By leveraging FP4 instructions, using B300 alongside its new Dynamo software library to help with serving reasoning models like DeepSeek, Nvidia says an NVL72 rack can deliver 30X more inference performance than a similar Hopper configuration. That figure naturally derives from improvements to multiple areas of the product stack, so the faster NVLink, increased memory, added compute, and FP4 all factor into the equation. In a related example, Blackwell Ultra can deliver up to 1,000 tokens/second with the DeepSeek R1-671B model. Hopper, meanwhile, only offers up to 100 tokens/second. So, there's a 10X increase in throughput, cutting the time to service a larger query from 1.5 minutes down to 10 seconds.

The B300 products should begin shipping sometime in the second half of the year. Presumably, there won't be any packaging snafus this time, and things won't be delayed, though Nvidia does note that it made $11 billion in revenue from Blackwell B200/B100 last fiscal year. It's a safe bet to say it expects to dramatically increase that figure for the coming year.
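As a rough sanity check on the throughput and latency figures quoted above, the following Python sketch compares the two ratios. It assumes, purely for illustration, that the whole response time is spent generating tokens at the quoted peak rate. That assumption and the variable names are ours, not Nvidia's, and the response length is back-solved rather than taken from any source.

```python
# Figures quoted in the article; everything derived from them is illustrative.
HOPPER_TOKENS_PER_S = 100
BLACKWELL_ULTRA_TOKENS_PER_S = 1_000
HOPPER_LATENCY_S = 90            # "1.5 minutes"
BLACKWELL_ULTRA_LATENCY_S = 10   # "10 seconds"


def implied_tokens(tokens_per_s: float, latency_s: float) -> float:
    """Tokens produced if the full latency is spent decoding at a constant rate."""
    return tokens_per_s * latency_s


throughput_ratio = BLACKWELL_ULTRA_TOKENS_PER_S / HOPPER_TOKENS_PER_S
latency_ratio = HOPPER_LATENCY_S / BLACKWELL_ULTRA_LATENCY_S
hopper_tokens = implied_tokens(HOPPER_TOKENS_PER_S, HOPPER_LATENCY_S)
ultra_tokens = implied_tokens(BLACKWELL_ULTRA_TOKENS_PER_S, BLACKWELL_ULTRA_LATENCY_S)

print(f"Throughput ratio: {throughput_ratio:.0f}x")
print(f"Latency ratio:    {latency_ratio:.0f}x")
print(f"Implied response length on Hopper:          {hopper_tokens:,.0f} tokens")
print(f"Implied response length on Blackwell Ultra: {ultra_tokens:,.0f} tokens")
```

Under that assumption the two claims line up to within rounding: a 10X throughput gain against a 9X latency gain, with both cases implying a response of roughly 9,000 to 10,000 tokens.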

[2]

Nvidia unveils 288 GB Blackwell Ultra GPUs

GTC Nvidia's Blackwell GPU architecture is barely out of the cradle - and the graphics chip giant is already looking to extend its lead over rival AMD with an Ultra-themed refresh of the technology. Announced on stage at Nvidia's GPU Technology Conference (GTC) in San Jose, California, on Tuesday by CEO and leather jacket aficionado Jensen Huang, the Blackwell Ultra family of accelerators boasts up to 15 petaFLOPS of dense 4-bit floating-point performance and up to 288 GB of HBM3e memory per chip. And if you're primarily interested in deploying GPUs for AI inference, that's a bigger deal than you might think. While training is generally limited by how much compute you can throw at the problem, inference is primarily a memory-bound workload. The more memory you have, the bigger the model you can serve. According to Ian Buck, Nvidia veep of hyperscale and HPC, the Blackwell Ultra will enable reasoning models including DeepSeek-R1 to be served at 10x the throughput of the Hopper generation, meaning questions that previously may have taken more than a minute to be answered can now be done in as little as ten seconds. With 288 GB of capacity across eight stacks of HBM3e memory onboard, a single Blackwell Ultra GPU can now run substantially larger models. At FP4, Meta's Llama 405B could fit on a single GPU with plenty of vRAM left over for key-value caches. To achieve this higher capacity, Nvidia's Blackwell Ultra swapped last-gen's eight-high HBM3e stacks for fatter 12-high modules, boosting capacity by 50 percent. However, we're told that memory bandwidth remains the same at a still class-leading 8 TB/s. If any of this sounds familiar, this isn't the first time we've seen Nvidia employ this strategy. In fact, Nv is following a similar playbook to its H200, which was essentially just an H100 with faster, higher-capacity HBM3e onboard. 
However, this time around, with these latest Blackwells, Nvidia isn't just strapping on more memory, it's also juiced the peak floating-point performance by 50 percent - at least for FP4 anyway. Nvidia tells us that FP8 and FP16/BF16 performance is unchanged from last gen.

More memory, more compute, more GPUs

While many have fixated on Nvidia's $30,000 or $40,000 chips, it's worth remembering that Hopper, Blackwell, and now its Ultra refresh aren't one chip so much as a family of products ranging the gamut from PCIe add-in cards and servers to rack-scale systems and even entire supercomputing clusters. In the datacenter, Nvidia will offer Blackwell Ultra in both its more traditional HGX servers and its rack-scale NVL72 offerings.

Nvidia's HGX form factor has, at least for the past few generations, featured up to eight air-cooled GPUs stitched together by a high-speed NVLink switch fabric. However, this time it has opted instead to cram twice as many GPUs into a box in a config it's calling the B300 NVL16. According to Nvidia, the Blackwell-based B300 NVL16 will deliver 7x the compute and 4x the memory capacity of its most powerful Hopper systems, which, by our calculation, works out to 112 petaFLOPS of dense FP4 compute and 4.6 terabytes of HBM3e memory capacity. However, this also suggests that each B300's individual floating-point performance will top out at 7 petaFLOPS of dense FP4 - the same as the Blackwell B100-series chips it announced last year.

For even larger workloads, Nvidia will also offer the accelerators in its Superchip form-factor. Like last year's GB200, the GB300 Superchip will pair two Blackwell Ultra GPUs with a combined 576 GB of HBM3e memory with a 72-core Grace Arm-compatible CPU. Up to 36 of these Superchips can be stitched together using Nvidia's NVLink switches to form an NVL72 rack-scale system.
But rather than the 13.5 terabytes of HBM3e of last year's model, the Grace-Blackwell GB300-based systems will offer up to 20 terabytes of vRAM. What's more, Buck says the system has been redesigned for this generation with an eye toward improved energy efficiency and serviceability. And if that's still not big enough, eight of these racks can be combined to form a GB300 SuperPOD system containing 576 Blackwell Ultra GPUs and 288 Grace CPUs.

Where does this leave Blackwell?

Given its larger memory capacity, it'd be easy to look at Nvidia's line-up and question whether Blackwell Ultra will end up cannibalizing shipments of the non-Ultra variant. However, the two platforms are clearly aimed at different markets, with Nvidia presumably charging a premium for its Ultra SKUs. In a press briefing ahead of Huang's keynote address today, Nvidia's Buck described three distinct AI scaling laws, including pre-training scaling, post-training scaling, and test-time scaling, each of which requires compute resources to be applied in different ways.

At least on paper, Blackwell Ultra's higher memory capacity should make it well suited to the third one of these regimes, as it allows customers to either serve up larger models - AKA inference - faster or at higher volumes. Meanwhile, for those building large clusters for compute-bound training workloads, we expect the standard Blackwell parts to continue to see strong demand. After all, there's little sense in paying extra for memory you don't necessarily need. With that said, there's no reason why you wouldn't use a GB300 for training. Nvidia tells us the higher HBM capacity and faster 800G networking offered by its ConnectX-8 NICs will contribute to higher training performance.

Competition

With Nvidia's Blackwell Ultra processors expected to start trickling out sometime in the second half of 2025, this puts it in contention with AMD's upcoming Instinct MI355X accelerators, which are in an awkward spot.
We would say the same about Intel's Gaudi3 but that was already true when it was announced. Since launching its MI300-series GPUs in late 2023, AMD's main point of differentiation was that its accelerators had more memory (192 GB and later 256 GB) than Nvidia's (141 GB and later 192 GB), making them attractive to customers, such as Microsoft or Meta, deploying large multi-hundred- or even trillion-parameter-scale models. MI355X will also see AMD juice memory capacities to 288 GB of HBM3e and bandwidth to 8 TB/s. What's more, AMD claims the chips will close the gap considerably, promising floating-point performance roughly on par with Nvidia's B200. However, at a system level, Nvidia's new HGX B300 NVL16 systems will offer twice the memory, and significantly higher FP4 floating-point performance, roughly 50 percent more. If that weren't enough, AMD's answer to Nvidia's NVL72 is still another generation away with its forthcoming MI400 platform. This may explain why, during its last earnings call, AMD CEO Lisa Su revealed that her company planned to move up the release of its MI355X from late in the second half to the middle of the year. Team Red also has the potential to undercut its rival on pricing and availability, a strategy it's used to great effect in its ongoing effort to steal share from Intel. ®
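The article's claim that Meta's Llama 405B fits on a single 288 GB GPU at FP4 can be checked with a back-of-the-envelope calculation. The sketch below is a minimal estimate of weight storage only. It assumes exactly 4, 8, or 16 bits per parameter, decimal gigabytes, and no overhead for quantization scale factors, activations, or the key-value cache. The function name is our own.

```python
HBM_PER_GPU_GB = 288          # per-GPU capacity quoted in the article
LLAMA_PARAMS_BILLION = 405    # parameter count of Llama 405B


def weight_footprint_gb(params_billion: float, bits_per_param: int) -> float:
    """Weight-only footprint in GB (1 GB = 1e9 bytes); ignores all runtime overhead."""
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


for label, bits in (("FP16", 16), ("FP8", 8), ("FP4", 4)):
    footprint = weight_footprint_gb(LLAMA_PARAMS_BILLION, bits)
    headroom = HBM_PER_GPU_GB - footprint
    verdict = "fits" if headroom >= 0 else "does not fit"
    print(f"{label}: {footprint:6.1f} GB of weights, {verdict} "
          f"(headroom {headroom:+.1f} GB)")
```

On these assumptions the FP4 weights take about 202.5 GB, leaving roughly 85 GB for caches and everything else, while the FP8 and FP16 versions would need more than one GPU.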

[3]

Nvidia unveils new Blackwell Ultra B300 AI GPU and next-gen Vera Rubin roadmap

Forward-looking: Nvidia CEO Jensen Huang unveiled a robust lineup of AI-accelerating GPUs at the company's 2025 GPU Technology Conference, including the Blackwell Ultra B300, Vera Rubin, and Rubin Ultra. These GPUs are designed to enhance AI performance, particularly in inference and training tasks. The Blackwell Ultra B300, set for release in the second half of 2025, increases memory capacity from 192GB to 288GB of HBM3e and offers a 50% boost in dense FP4 tensor compute compared to the Blackwell GB200. These enhancements support larger AI models and improve inference performance for models such as DeepSeek R1. In a full NVL72 rack configuration, the Blackwell Ultra will deliver 1.1 exaflops of dense FP4 inference compute, marking a significant leap over the current Blackwell B200 setup.

The Blackwell Ultra B300 isn't just a standalone GPU. Alongside the core B300 unit, Nvidia is introducing new B300 NVL16 server rack solutions, the GB300 DGX Station, and the GB300 NVL72 full rack system. Combining eight NVL72 racks forms the complete Blackwell Ultra DGX SuperPOD, featuring 288 Grace CPUs, 576 Blackwell Ultra GPUs, 300TB of fast memory, and an impressive 11.5 ExaFLOPS of FP4 compute power. These systems can be interconnected to create large-scale supercomputers, which Nvidia is calling "AI factories."

Initially teased at Computex 2024, next-gen Vera Rubin GPUs are expected to launch in the second half of 2026, delivering substantial performance improvements, particularly in AI training and inference. Vera Rubin features tens of terabytes of memory and is paired with a custom Nvidia-designed CPU, Vera, which includes 88 custom Arm cores with 176 threads. The GPU integrates two dies in a single package, achieving 50 petaflops of FP4 inference performance per chip. In a full NVL144 rack setup, Vera Rubin can deliver 3.6 exaflops of FP4 inference compute.
Building on Vera Rubin's architecture, Rubin Ultra is slated for release in the second half of 2027. It will utilize the NVL576 rack configuration, with each GPU featuring four reticle-sized dies, delivering 100 petaflops of FP4 compute per chip. Rubin Ultra promises 15 exaflops of FP4 inference compute and 5 exaflops of FP8 training performance, significantly surpassing Vera Rubin's capabilities. Each Rubin Ultra GPU will include 1TB of HBM4e memory, contributing to 365TB of fast memory across the entire rack.

Nvidia also introduced a next-generation GPU architecture called "Feynman," expected to debut in 2028 alongside the Vera CPU. While details remain scarce, Feynman is anticipated to further advance Nvidia's AI computing capabilities.

During his keynote, Huang outlined Nvidia's ambitious vision for AI, describing data centers as "AI factories" that produce tokens processed by AI models. He also highlighted the potential for "physical AI" to power humanoid robots, leveraging Nvidia's software platforms to train AI models in virtual environments for real-world applications. Nvidia's roadmap positions these GPUs as pivotal in the future of computing, emphasizing the need for increased computational power to keep pace with AI advancements. This strategy comes as Nvidia aims to reassure investors following recent market fluctuations, building on the success of its Blackwell chips.

[4]

1,000 trillion operations a second: NVIDIA's AI universe just got insane

Central to these revelations is the introduction of the Blackwell Ultra processor, a next-generation AI chipset designed to enhance AI reasoning capabilities, marking a significant shift from generative AI to more complex AI tasks. The Blackwell Ultra processor represents a monumental leap in AI computing, engineered to handle the increasing demands of advanced AI applications. Its architecture is optimized for intricate AI reasoning, enabling more sophisticated and efficient processing of complex datasets. This advancement is expected to facilitate the development of more autonomous and intelligent systems across various industries.

According to NVIDIA, Blackwell Ultra, built on the groundbreaking Blackwell architecture introduced a year ago, includes the NVIDIA GB300 NVL72 rack-scale solution and the NVIDIA HGX B300 NVL16 system. The GB300 NVL72 delivers 1.5x more AI performance than the NVIDIA GB200 NVL72 and increases Blackwell's revenue opportunity by 50x for AI factories, compared with those built with NVIDIA Hopper.

RTX Pro series

Complementing the Blackwell Ultra, NVIDIA introduced the RTX PRO 6000 Blackwell series GPUs, tailored for professionals such as designers, developers, and data scientists.

[5]

Nvidia announces DGX SuperPOD with Blackwell Ultra GPUs

Nvidia announced what it called the world's most advanced enterprise AI infrastructure -- Nvidia DGX SuperPOD built with Nvidia Blackwell Ultra GPUs -- which provides enterprises across industries with AI factory supercomputing for state-of-the-art agentic AI reasoning. Enterprises can use new Nvidia DGX GB300 and Nvidia DGX B300 systems, integrated with Nvidia networking, to deliver out-of-the-box DGX SuperPOD AI supercomputers that offer FP4 precision and faster AI reasoning to supercharge token generation for AI applications. AI factories provide purpose-built infrastructure for agentic, generative and physical AI workloads, which can require significant computing resources for AI pretraining, post-training and test-time scaling for applications running in production. "AI is advancing at light speed, and companies are racing to build AI factories that can scale to meet the processing demands of reasoning AI and inference time scaling," said Jensen Huang, founder and CEO of Nvidia, in a statement. "The Nvidia Blackwell Ultra DGX SuperPOD provides out-of-the-box AI supercomputing for the age of agentic and physical AI." DGX GB300 systems feature Nvidia Grace Blackwell Ultra Superchips -- which include 36 Nvidia Grace CPUs and 72 Nvidia Blackwell Ultra GPUs -- and a rack-scale, liquid-cooled architecture designed for real-time agent responses on advanced reasoning models. Air-cooled Nvidia DGX B300 systems harness the Nvidia B300 NVL16 architecture to help data centers everywhere meet the computational demands of generative and agentic AI applications. To meet growing demand for advanced accelerated infrastructure, Nvidia also unveiled Nvidia Instant AI Factory, a managed service featuring the Blackwell Ultra-powered NVIDIA DGX SuperPOD. Equinix will be first to offer the new DGX GB300 and DGX B300 systems in its preconfigured liquid- or air-cooled AI-ready data centers located in 45 markets around the world. 
NVIDIA DGX SuperPOD With DGX GB300 Powers Age of AI Reasoning

DGX SuperPOD with DGX GB300 systems can scale up to tens of thousands of Nvidia Grace Blackwell Ultra Superchips -- connected via NVLink, Nvidia Quantum-X800 InfiniBand and Nvidia Spectrum-X™ Ethernet networking -- to supercharge training and inference for the most compute-intensive workloads. DGX GB300 systems deliver up to 70 times more AI performance than AI factories built with Nvidia Hopper systems and 38TB of fast memory to offer unmatched performance at scale for multistep reasoning on agentic AI and reasoning applications.

The 72 Grace Blackwell Ultra GPUs in each DGX GB300 system are connected by fifth-generation NVLink technology to become one massive, shared memory space through the NVLink Switch system. Each DGX GB300 system features 72 Nvidia ConnectX-8 SuperNICs, delivering accelerated networking speeds of up to 800Gb/s -- double the performance of the previous generation. Eighteen Nvidia BlueField-3 DPUs pair with Nvidia Quantum-X800 InfiniBand or Nvidia Spectrum-X Ethernet to accelerate performance, efficiency and security in massive-scale AI data centers.

DGX B300 Systems Accelerate AI for Every Data Center

The Nvidia DGX B300 system is an AI infrastructure platform designed to bring energy-efficient generative AI and AI reasoning to every data center. Accelerated by Nvidia Blackwell Ultra GPUs, DGX B300 systems deliver 11 times faster AI performance for inference and a 4x speedup for training compared with the Hopper generation. Each system provides 2.3TB of HBM3e memory and includes advanced networking with eight NVIDIA ConnectX-8 SuperNICs and two BlueField-3 DPUs.

Nvidia Software Accelerates AI Development and Deployment

To enable enterprises to automate the management and operations of their infrastructure, Nvidia also announced Nvidia Mission Control -- AI data center operation and orchestration software for Blackwell-based DGX systems.
Nvidia DGX systems support the Nvidia AI Enterprise software platform for building and deploying enterprise-grade AI agents. This includes Nvidia NIM microservices, such as the new Nvidia Llama Nemotron open reasoning model family announced today, and Nvidia AI Blueprints, frameworks, libraries and tools used to orchestrate and optimize performance of AI agents.

Nvidia Instant AI Factory offers enterprises an Equinix managed service featuring the Blackwell Ultra-powered Nvidia DGX SuperPOD with Nvidia Mission Control software. With dedicated Equinix facilities around the globe, the service will provide businesses with fully provisioned, intelligence-generating AI factories optimized for state-of-the-art model training and real-time reasoning workloads -- eliminating months of pre-deployment infrastructure planning.

Availability

Nvidia DGX SuperPOD with DGX GB300 or DGX B300 systems is expected to be available from partners later this year. NVIDIA Instant AI Factory is planned to be available starting later this year.

[6]

NVIDIA Blackwell Ultra AI Factory Platform Paves Way for Age of AI Reasoning

GTC -- NVIDIA today announced the next evolution of the NVIDIA Blackwell AI factory platform, NVIDIA Blackwell Ultra -- paving the way for the age of AI reasoning. NVIDIA Blackwell Ultra boosts training and test-time scaling inference -- the art of applying more compute during inference to improve accuracy -- to enable organizations everywhere to accelerate applications such as AI reasoning, agentic AI and physical AI.

Built on the groundbreaking Blackwell architecture introduced a year ago, Blackwell Ultra includes the NVIDIA GB300 NVL72 rack-scale solution and the NVIDIA HGX™ B300 NVL16 system. The GB300 NVL72 delivers 1.5x more AI performance than the NVIDIA GB200 NVL72, as well as increases Blackwell's revenue opportunity by 50x for AI factories, compared with those built with NVIDIA Hopper™.

"AI has made a giant leap -- reasoning and agentic AI demand orders of magnitude more computing performance," said Jensen Huang, founder and CEO of NVIDIA. "We designed Blackwell Ultra for this moment -- it's a single versatile platform that can easily and efficiently do pretraining, post-training and reasoning AI inference."

NVIDIA Blackwell Ultra Enables AI Reasoning

The NVIDIA GB300 NVL72 connects 72 Blackwell Ultra GPUs and 36 Arm Neoverse-based NVIDIA Grace™ CPUs in a rack-scale design, acting as a single massive GPU built for test-time scaling. With the NVIDIA GB300 NVL72, AI models can access the platform's increased compute capacity to explore different solutions to problems and break down complex requests into multiple steps, resulting in higher-quality responses.

GB300 NVL72 is also expected to be available on NVIDIA DGX™ Cloud, an end-to-end, fully managed AI platform on leading clouds that optimizes performance with software, services and AI expertise for evolving workloads. NVIDIA DGX SuperPOD™ with DGX GB300 systems uses the GB300 NVL72 rack design to provide customers with a turnkey AI factory.
The NVIDIA HGX B300 NVL16 features 11x faster inference on large language models, 7x more compute and 4x larger memory compared with the Hopper generation to deliver breakthrough performance for the most complex workloads, such as AI reasoning. In addition, the Blackwell Ultra platform is ideal for applications including:

NVIDIA Scale-Out Infrastructure for Optimal Performance

Advanced scale-out networking is a critical component of AI infrastructure that can deliver top performance while reducing latency and jitter. Blackwell Ultra systems seamlessly integrate with the NVIDIA Spectrum-X™ Ethernet and NVIDIA Quantum-X800 InfiniBand platforms, with 800 Gb/s of data throughput available for each GPU in the system, through an NVIDIA ConnectX®-8 SuperNIC. This delivers best-in-class remote direct memory access capabilities to enable AI factories and cloud data centers to handle AI reasoning models without bottlenecks. NVIDIA BlueField®-3 DPUs, also featured in Blackwell Ultra systems, enable multi-tenant networking, GPU compute elasticity, accelerated data access and real-time cybersecurity threat detection.

Global Technology Leaders Embrace Blackwell Ultra

Blackwell Ultra-based products are expected to be available from partners starting from the second half of 2025. Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro are expected to deliver a wide range of servers based on Blackwell Ultra products, in addition to Aivres, ASRock Rack, ASUS, Eviden, Foxconn, GIGABYTE, Inventec, Pegatron, Quanta Cloud Technology (QCT), Wistron and Wiwynn. Cloud service providers Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure and GPU cloud providers CoreWeave, Crusoe, Lambda, Nebius, Nscale, Yotta and YTL will be among the first to offer Blackwell Ultra-powered instances.

NVIDIA Software Innovations Reduce AI Bottlenecks

The entire NVIDIA Blackwell product portfolio is supported by the full-stack NVIDIA AI platform.
The NVIDIA Dynamo open-source inference framework -- also announced today -- scales up reasoning AI services, delivering leaps in throughput while reducing response times and model serving costs by providing the most efficient solution for scaling test-time compute. NVIDIA Dynamo is new AI inference-serving software designed to maximize token revenue generation for AI factories deploying reasoning AI models. It orchestrates and accelerates inference communication across thousands of GPUs, and uses disaggregated serving to separate the processing and generation phases of large language models on different GPUs. This allows each phase to be optimized independently for its specific needs and ensures maximum GPU resource utilization. Blackwell systems are ideal for running new NVIDIA Llama Nemotron Reason models and the NVIDIA AI-Q Blueprint, supported in the NVIDIA AI Enterprise software platform for production-grade AI. NVIDIA AI Enterprise includes NVIDIA NIM™ microservices, as well as AI frameworks, libraries and tools that enterprises can deploy on NVIDIA-accelerated clouds, data centers and workstations. The Blackwell platform builds on NVIDIA's ecosystem of powerful development tools, NVIDIA CUDA-X™ libraries, over 6 million developers and 4,000+ applications scaling performance across thousands of GPUs.

[7]

NVIDIA GB300 'Blackwell Ultra' AI GPU: 288GB HBM3E, 1.4kW power, 50% faster than GB200

TL;DR: NVIDIA has launched the GB300 "Blackwell Ultra" NVL72 AI server, featuring a GB300 AI GPU with 50% more performance than the GB200 and 288GB of HBM3E memory. It offers 1.5x more performance than the GB200 NVL72 and enhances AI factory revenue opportunities by 50x compared to Hopper-based servers. NVIDIA has officially unveiled its beefed-up GB300 "Blackwell Ultra" NVL72 AI server, with its new GB300 AI GPU featuring 50% more performance over GB200, and a larger pool of 288GB of HBM3E memory. NVIDIA's new GB300 "Blackwell Ultra" AI GPUs will continue the AI domination led by GB200, with memory capacity increased through using new 12-Hi HBM3E memory stacks, and even more compute power for AI workloads. NVIDIA is also coupling GB300 with the latest Spectrum Ultra X800 Ethernet switches (512-Radix). The new NVIDIA GB300 NVL72 rack-scale solution has 1.5x more performance than an NVIDIA GB200 NVL72 AI server, as well as increasing Blackwell revenue opportunities by 50x for AI factories compared to AI servers built on the Hopper GPU architecture. Inside, NVIDIA's new GB300 NVL72 AI server connects 72 x GB300 Blackwell Ultra AI GPUs and 36 Arm Neoverse-based NVIDIA Grace CPUs in a rack-scale design, acting as a single gigantic GPU built for test-time scaling. NVIDIA's new GB300 NVL72 has AI models accessing the platform's massive performance gains to explore different solutions to problems and break down complex requests into multiple steps, resulting in higher-quality responses. Jensen Huang, founder and CEO of NVIDIA said: "AI has made a giant leap - reasoning and agentic AI demand orders of magnitude more computing performance. We designed Blackwell Ultra for this moment - it's a single versatile platform that can easily and efficiently do pretraining, post-training and reasoning AI inference". 
NVIDIA explains its new GB300 "Blackwell Ultra" AI platform: "NVIDIA Blackwell Ultra boosts training and test-time scaling inference - the art of applying more compute during inference to improve accuracy - to enable organizations everywhere to accelerate applications such as AI reasoning, agentic AI and physical AI". "Built on the groundbreaking Blackwell architecture introduced a year ago, Blackwell Ultra includes the NVIDIA GB300 NVL72 rack-scale solution and the NVIDIA HGX™ B300 NVL16 system. The GB300 NVL72 delivers 1.5x more AI performance than the NVIDIA GB200 NVL72, as well as increases Blackwell's revenue opportunity by 50x for AI factories, compared with those built with NVIDIA Hopper".

[8]

AI at warp speed: Nvidia's new GB300 superchip arrives this year

Nvidia has announced its next generation of AI superchips, the Blackwell Ultra GB300, which will ship in the second half of this year, the Vera Rubin for the second half of next year, and the Rubin Ultra, set to arrive in the second half of 2027. The company reports it is currently making $2,300 in profit every second, driven largely by its AI-centric data center business. The Blackwell Ultra GB300, while part of Nvidia's annual cadence of AI chip releases, does not utilize a new architecture. In a prebriefing with journalists, Nvidia revealed that a single Ultra chip provides the same 20 petaflops of AI performance as the original Blackwell, now enhanced with 288GB of HBM3e memory, up from 192GB. The Blackwell Ultra DGX GB300 "Superpod" cluster will maintain its configuration of 288 CPUs and 576 GPUs, delivering 11.5 exaflops of FP4 computing but with an increased memory capacity of 300TB compared to 240TB in the previous version. In comparisons with the H100 chip, which significantly contributed to Nvidia's AI success in 2022, the Blackwell Ultra offers 1.5 times the FP4 inference performance and can accelerate AI reasoning tasks. Specifically, the NVL72 cluster can execute an interactive version of DeepSeek-R1 671B, receiving answers in ten seconds rather than the H100's 1.5 minutes, thanks to its capability to process 1,000 tokens per second -- ten times more than the previous generation of Nvidia chips. Nvidia has introduced a desktop computer called the DGX Station, which will feature a single GB300 Blackwell Ultra chip, along with 784GB of unified system memory and built-in 800Gbps Nvidia networking, while still providing the promised 20 petaflops of AI performance. Companies like Asus, Dell, and HP will market versions of this desktop alongside Boxx, Lambda, and Supermicro. 
Furthermore, Nvidia is launching the GB300 NVL72 rack, which offers 1.1 exaflops of FP4, 20TB of HBM memory, 40TB of "fast memory," 130TB/sec of NVLink bandwidth, and 14.4 TB/sec networking capabilities. The forthcoming Vera Rubin architecture will significantly boost performance, featuring 50 petaflops of FP4, a substantial increase from Blackwell's 20 petaflops. Its successor, Rubin Ultra, will integrate two Rubin GPUs for a total of 100 petaflops of FP4 and nearly quadruple the memory at 1TB. A complete NVL576 rack of Rubin Ultra claims up to 15 exaflops of FP4 inference and 5 exaflops of FP8 training, representing a 14-fold performance increase over the Blackwell Ultra rack scheduled for this year. Nvidia has reported $11 billion in revenue from Blackwell, with the top four customers acquiring 1.8 million Blackwell chips in 2025 alone. CEO Jensen Huang highlighted the increasing demand for computational power, stating that the industry now requires "100 times more than we thought we needed this time last year" to meet current needs. Huang also announced the next architecture to come after Vera Rubin in 2028 will be named Feynman, after the renowned physicist Richard Feynman, noting that some family members of pioneering astronomer Vera Rubin were present at the announcement event.
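The rack-level FP4 figures in this roadmap can be cross-checked against each other. The Python sketch below uses the rack totals quoted in sources [3] and [8] and the 15 petaflops per-GPU dense FP4 figure from sources [1] and [2]. Treating all three rack totals as directly comparable is our assumption, since the sources do not say whether every number uses the same dense or sparse basis.

```python
PFLOPS_PER_EFLOPS = 1_000

# Rack-level FP4 totals in exaflops, as quoted in the sources above.
rack_fp4_eflops = {
    "GB300 NVL72 (2025)": 1.1,
    "Vera Rubin NVL144 (2026)": 3.6,
    "Rubin Ultra NVL576 (2027)": 15.0,
}

baseline = rack_fp4_eflops["GB300 NVL72 (2025)"]
ratios = {name: eflops / baseline for name, eflops in rack_fp4_eflops.items()}

# Rebuild the GB300 NVL72 total from the per-GPU dense FP4 figure.
GPUS_PER_NVL72_RACK = 72
DENSE_FP4_PFLOPS_PER_GPU = 15
dense_rack_eflops = GPUS_PER_NVL72_RACK * DENSE_FP4_PFLOPS_PER_GPU / PFLOPS_PER_EFLOPS

for name, ratio in ratios.items():
    print(f"{name}: {rack_fp4_eflops[name]:5.1f} EF, {ratio:4.1f}x the GB300 NVL72")
print(f"72 GPUs x 15 PF = {dense_rack_eflops:.2f} EF per GB300 NVL72 rack")
```

The 15 exaflops Rubin Ultra rack works out to about 13.6 times the 1.1 exaflops GB300 NVL72, which rounds to the 14-fold increase quoted above, and 72 GPUs at 15 petaflops each give 1.08 exaflops, in line with the 1.1 exaflops rack figure.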

[9]

NVIDIA Blackwell Ultra "B300" Unleashing In 2H 2025 - 1.1 Exaflops of FP4 Compute, 288 GB HBM3e Memory, 50% Faster Than GB200

NVIDIA Blackwell Ultra goes official, offering a huge scale-up in memory capacity with upgraded AI compute capabilities for data centers. The launch of Blackwell in its first iteration was hit with a few hiccups, but the company has worked to ensure that the supply of its latest AI powerhouse is in an even better state, providing the latest hardware and solutions to major AI and Data Center vendors. The initial B100 and B200 GPU families offer an insane amount of AI compute capabilities and the company is going to set new industry standards with the next-gen offering, Blackwell Ultra. These B300 chips will expand upon Blackwell, offering not just increased memory densities with up to 12-Hi HBM3E stacks but also offering even more compute capabilities for faster AI. These chips will be coupled with the latest Spectrum Ultra X800 Ethernet switches (512-Radix).

Press Release: Built on the groundbreaking Blackwell architecture introduced a year ago, Blackwell Ultra includes the NVIDIA GB300 NVL72 rack-scale solution and the NVIDIA HGX B300 NVL16 system. The GB300 NVL72 delivers 1.5x more AI performance than the NVIDIA GB200 NVL72, as well as increases Blackwell's revenue opportunity by 50x for AI factories, compared with those built with NVIDIA Hopper.

NVIDIA Blackwell Ultra Enables AI Reasoning

The NVIDIA GB300 NVL72 connects 72 Blackwell Ultra GPUs and 36 Arm Neoverse-based NVIDIA Grace CPUs in a rack-scale design, acting as a single massive GPU built for test-time scaling. With the NVIDIA GB300 NVL72, AI models can access the platform's increased compute capacity to explore different solutions to problems and break down complex requests into multiple steps, resulting in higher-quality responses. GB300 NVL72 is also expected to be available on NVIDIA DGX Cloud, an end-to-end, fully managed AI platform on leading clouds that optimizes performance with software, services and AI expertise for evolving workloads.
NVIDIA DGX SuperPOD with DGX GB300 systems uses the GB300 NVL72 rack design to provide customers with a turnkey AI factory. The NVIDIA HGX B300 NVL16 features 11x faster inference on large language models, 7x more compute and 4x larger memory compared with the Hopper generation to deliver breakthrough performance for the most complex workloads, such as AI reasoning.


Nvidia announces the Blackwell Ultra B300 GPU, offering 1.5x faster performance than its predecessor with 288GB HBM3e memory and 15 PFLOPS of dense FP4 compute, designed to meet the demands of advanced AI reasoning and inference.

Nvidia Introduces Blackwell Ultra B300: A New Era in AI Computing

Nvidia has unveiled its latest advancement in AI computing technology, the Blackwell Ultra B300 GPU, during CEO Jensen Huang's keynote at GTC 2025 in San Jose, California. This new offering represents a significant leap forward in AI processing capabilities, particularly for reasoning and inference tasks [1].

Key Features and Performance Improvements

The Blackwell Ultra B300 boasts impressive specifications that set it apart from its predecessors:

- 1.5x the dense FP4 compute of the B200

- 288GB of HBM3e memory, 50% more than the B200

- 15 PFLOPS of dense FP4 compute power

- Designed for advanced AI reasoning tasks

These improvements enable the B300 to handle more sophisticated AI models, such as DeepSeek R1, which go beyond simple information regurgitation to perform complex reasoning tasks [1][2].

Scalable Solutions for Enterprise AI

Nvidia is not just offering a single GPU but a range of solutions to meet various enterprise needs:

- B300 NVL16 server rack solutions

- GB300 DGX Station

- GB300 NVL72 full rack solutions

The pinnacle of these offerings is the Blackwell Ultra DGX SuperPOD, which combines [1][3]:

- 288 Grace CPUs

- 576 Blackwell Ultra GPUs

- 300TB of HBM3e memory

- 11.5 ExaFLOPS of FP4 compute power
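The SuperPOD totals can be cross-checked from the per-rack and per-GPU figures quoted in this article. The sketch below is only a back-of-the-envelope calculation: it assumes eight GB300 NVL72 racks per SuperPOD (as reported in [1]), 72 GPUs and 36 Grace CPUs per rack, 288GB of HBM3e and 15 PFLOPS of dense FP4 per GPU, and decimal units (1TB = 1,000GB). The function and constant names are illustrative, not taken from any Nvidia tool.

```python
# Per-unit figures as quoted in the article; names here are illustrative.
RACKS_PER_SUPERPOD = 8          # eight NVL72 racks per SuperPOD, per [1]
GPUS_PER_RACK = 72              # Blackwell Ultra GPUs per GB300 NVL72
CPUS_PER_RACK = 36              # Grace CPUs per GB300 NVL72
HBM_PER_GPU_GB = 288            # HBM3e capacity per B300
DENSE_FP4_PER_GPU_PFLOPS = 15   # dense FP4 compute per B300


def superpod_totals(racks=RACKS_PER_SUPERPOD):
    """Scale the per-rack and per-GPU figures up to a full SuperPOD."""
    gpus = racks * GPUS_PER_RACK
    cpus = racks * CPUS_PER_RACK
    return {
        "gpus": gpus,
        "cpus": cpus,
        # Decimal units: 1,000 GB per TB and 1,000 PFLOPS per ExaFLOPS.
        "hbm_tb": gpus * HBM_PER_GPU_GB / 1000,
        "dense_fp4_eflops": gpus * DENSE_FP4_PER_GPU_PFLOPS / 1000,
    }


totals = superpod_totals()
print(totals)
```

Under these assumptions the GPU and CPU counts match the quoted 576 and 288. The GPU-attached HBM3e comes to roughly 166TB and the dense FP4 figure to about 8.6 ExaFLOPS, both below the quoted 300TB and 11.5 ExaFLOPS, which suggests the headline numbers count more than GPU HBM3e and dense FP4 alone.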

Performance in Real-World Applications

Nvidia has provided some performance comparisons, albeit primarily against the older Hopper architecture [1][2]:

- An NVL72 rack can deliver 30x more inference performance than a similar Hopper configuration

- Blackwell Ultra can process up to 1,000 tokens/second with the DeepSeek R1-671B model, compared to Hopper's 100 tokens/second

- This translates to reducing query response times from 1.5 minutes to just 10 seconds
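The link between the throughput and response-time figures can be sketched with simple arithmetic. The example below assumes that response time is dominated by token generation at a constant rate and ignores prompt processing and queuing; the helper name and the implied response length are illustrative, not figures Nvidia has published.

```python
def response_time_s(output_tokens, tokens_per_second):
    """Time to generate a response at a constant token rate."""
    return output_tokens / tokens_per_second


HOPPER_TPS = 100             # tokens/second quoted for Hopper
BLACKWELL_ULTRA_TPS = 1000   # tokens/second quoted for Blackwell Ultra
HOPPER_RESPONSE_S = 90       # 1.5 minutes, as quoted

# Response length implied by the Hopper figures (an inference, not a quoted number).
implied_tokens = HOPPER_RESPONSE_S * HOPPER_TPS

# The same response generated at the Blackwell Ultra rate.
blackwell_time_s = response_time_s(implied_tokens, BLACKWELL_ULTRA_TPS)

print(f"Implied response length: {implied_tokens} tokens")
print(f"Blackwell Ultra response time: {blackwell_time_s:.1f} s")
```

Under this simplification, a 1.5-minute response on Hopper corresponds to about 9,000 generated tokens, which Blackwell Ultra would produce in about 9 seconds, consistent with the quoted figure of roughly 10 seconds.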

Market Positioning and Competition

The introduction of Blackwell Ultra comes at a time when Nvidia is looking to extend its lead over competitors like AMD and Intel in the AI accelerator market. The increased memory capacity of 288GB per chip allows for running substantially larger models, which is crucial for memory-bound inference workloads [2][4].
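To illustrate why per-chip memory matters, the sketch below estimates how much memory a model's weights occupy at different precisions and how many GPUs are needed just to hold them. It is a rough estimate under stated assumptions: it counts weights only (no KV cache, activations, or runtime overhead), uses decimal gigabytes, takes the 671 billion parameter count from the DeepSeek R1-671B name, and uses 192GB for the previous generation, which is what a 50% increase to 288GB implies. The function names are hypothetical.

```python
import math


def weight_footprint_gb(params_billion, bits_per_param):
    """Weights-only footprint in decimal GB (billions of params x bytes per param)."""
    return params_billion * bits_per_param / 8


def min_gpus_for_weights(params_billion, bits_per_param, hbm_gb):
    """Smallest GPU count whose combined HBM holds the weights alone."""
    return math.ceil(weight_footprint_gb(params_billion, bits_per_param) / hbm_gb)


PARAMS_BILLION = 671   # from the DeepSeek R1-671B model name
B300_HBM_GB = 288      # quoted capacity
PREV_GEN_HBM_GB = 192  # assumption: 288GB is a 50% increase over this

for label, bits in (("FP4", 4), ("FP8", 8), ("FP16", 16)):
    footprint = weight_footprint_gb(PARAMS_BILLION, bits)
    b300 = min_gpus_for_weights(PARAMS_BILLION, bits, B300_HBM_GB)
    prev = min_gpus_for_weights(PARAMS_BILLION, bits, PREV_GEN_HBM_GB)
    print(f"{label}: {footprint:.1f} GB of weights, "
          f"{b300} GPUs at 288GB vs {prev} GPUs at 192GB")
```

Real deployments need extra headroom for the KV cache and activations, so these counts are lower bounds rather than sizing guidance.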

Future Roadmap and AI Vision

Nvidia's announcement also included glimpses into future developments [3]:

- Vera Rubin GPUs, expected in 2026, promising further performance improvements

- Rubin Ultra, slated for 2027, with even more ambitious specifications

- "Feynman" architecture, anticipated for 2028

Jensen Huang outlined Nvidia's vision of data centers as "AI factories," emphasizing the growing importance of computational power in AI advancements [3][5].

Availability and Industry Impact

The Blackwell Ultra B300 products are expected to start shipping in the second half of 2025. This timeline puts Nvidia in direct competition with AMD's upcoming Instinct MI355X accelerators [2][4].

As AI continues to evolve rapidly, Nvidia's latest offerings are positioned to meet the increasing demands for more powerful and efficient AI computing solutions across various industries, from research and development to enterprise applications.

References

Summarized by Navi

[1]

[2]

[4]

[5]
