Nvidia Unveils Blackwell Ultra GPUs and AI Desktops, Focusing on Reasoning Models and Revenue Generation

5 Sources

[1]

Nvidia plans to make DeepSeek's AI 30 times faster - CEO Huang explains how

In January, the emergence of DeepSeek's R1 artificial intelligence program prompted a stock market selloff. Seven weeks later, chip giant Nvidia, the dominant force in AI processing, seeks to place itself squarely in the middle of the dramatic economics of cheaper AI that DeepSeek represents. On Tuesday, at the SAP Center in San Jose, Calif., Nvidia co-founder and CEO Jensen Huang discussed how the company's Blackwell chips can dramatically accelerate DeepSeek R1.
Nvidia claims that its GPU chips can process 30 times the throughput that DeepSeek R1 would normally have in a data center, measured by the number of tokens per second, using new open-source software called Nvidia Dynamo. "Dynamo can capture that benefit and deliver 30 times more performance in the same number of GPUs in the same architecture for reasoning models like DeepSeek," said Ian Buck, Nvidia's head of hyperscale and high-performance computing, in a media briefing before Huang's keynote at the company's GTC conference.
The Dynamo software, available today on GitHub, distributes inference work across as many as 1,000 Nvidia GPU chips. More work can be accomplished per second of machine time by breaking up the work to run in parallel. The result: For an inference task priced at $1 per million tokens, more of the tokens can be run each second, boosting revenue per second for services providing the GPUs. Buck said service providers can then decide to run more customer queries on DeepSeek or devote more processing to a single user to charge more for a "premium" service.
Premium services
"AI factories can offer a higher premium service at premium dollar per million tokens," said Buck, "and also increase the total token volume of their whole factory." The term "AI factory" is Nvidia's coinage for large-scale services that run a heavy volume of AI work using the company's chips, software, and rack-based equipment.
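The revenue argument above is simple arithmetic, and a short sketch makes it concrete. The $1-per-million-token price and the 30x factor come from the article; the baseline throughput is a hypothetical placeholder chosen only for illustration, not an Nvidia figure.

```python
# Back-of-the-envelope model of the throughput-to-revenue argument.
# Only the $1 price and the 30x factor come from the article; the baseline
# throughput is a hypothetical placeholder.

def revenue_per_second(tokens_per_second: float, price_per_million_tokens: float) -> float:
    """Revenue earned per second of machine time at a given token throughput."""
    return tokens_per_second / 1_000_000 * price_per_million_tokens

PRICE_PER_MILLION = 1.00           # dollars, the example price quoted in the article
BASELINE_TOKENS_PER_SEC = 50_000   # hypothetical cluster throughput without Dynamo
SPEEDUP = 30                       # Nvidia's claimed throughput gain with Dynamo

baseline = revenue_per_second(BASELINE_TOKENS_PER_SEC, PRICE_PER_MILLION)
accelerated = revenue_per_second(BASELINE_TOKENS_PER_SEC * SPEEDUP, PRICE_PER_MILLION)

print(f"baseline:    ${baseline:.2f} per second")
print(f"accelerated: ${accelerated:.2f} per second")
```

At a fixed price, revenue per second scales linearly with tokens per second, so any throughput gain carries straight through to revenue whatever baseline is assumed. A "premium" tier would change the price term instead of, or in addition to, the throughput term.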
The prospect of using more chips to increase throughput (and therefore business) for AI inference is Nvidia's answer to investor concerns that less computing would be used overall because DeepSeek can cut the amount of processing needed for each query. Used with Blackwell, the current model of Nvidia's flagship AI GPU, the Dynamo software can make such AI data centers produce 50 times as much revenue as with the older model, Hopper, said Buck.
Nvidia has posted its own tweaked version of DeepSeek R1 on HuggingFace. The Nvidia version reduces the number of bits used by R1 to manipulate variables to what's known as "FP4," or floating-point four bits, which is a fraction of the computing needed for the standard floating-point 32 or B-float 16. "It increases the performance from Hopper to Blackwell substantially," said Buck. "We did that without any meaningful changes or reductions or loss of the accuracy model. It's still the great model that produces the smart reasoning tokens."
In addition to Dynamo, Huang unveiled the newest version of Blackwell, "Ultra," following on the first model that was unveiled at last year's show. The new version enhances various aspects of the existing Blackwell 200, such as increasing DRAM memory from 192GB of HBM3e high-bandwidth memory to as much as 288GB.
When combined with Nvidia's Grace CPU chip, a total of 72 Blackwell Ultras can be assembled in the company's NVL72 rack-based computer. The system will increase the inference performance running at FP4 by 50% over the existing NVL72 based on the Grace-Blackwell 200 chips.
Other announcements made at GTC
The tiny personal computer for AI developers, unveiled at CES in January as Project Digits, has received its formal branding as DGX Spark. The computer uses a version of the Grace-Blackwell combo called GB10.
Nvidia is taking reservations for the Spark starting today.
A new version of the DGX "Station" desktop computer, first introduced in 2017, was unveiled. The new model uses the Grace-Blackwell Ultra and will come with 784 gigabytes of DRAM. That's a big change from the original DGX Station, which relied on Intel CPUs as the main host processor. The computer will be manufactured by Asus, BOXX, Dell, HP, Lambda, and Supermicro, and will be available "later this year."
Huang talked about an adaptation of Meta's open-source Llama large language models, called Llama Nemotron, with capabilities for "reasoning"; that is, for producing a string of output itemizing the steps to a conclusion. Nvidia claims the Nemotron models "optimize inference speed by 5x compared with other leading open reasoning models." Developers can access the models on HuggingFace.
Improved network switches
As widely expected, Nvidia has offered for the first time a version of its "Spectrum-X" network switch that puts the fiber-optic transceiver inside the same package as the switch chip rather than using standard external transceivers. Nvidia says the switches, which come with port speeds of 200 or 800Gb/sec, improve on its existing switches with "3.5 times more power efficiency, 63 times greater signal integrity, 10 times better network resiliency at scale, and 1.3 times faster deployment." The switches were developed with Taiwan Semiconductor Manufacturing, laser makers Coherent and Lumentum, fiber maker Corning, and contract assembler Foxconn.
Nvidia is building a quantum computing research facility in Boston that will integrate leading quantum hardware with AI supercomputers in partnerships with Quantinuum, Quantum Machines, and QuEra. The facility will give Nvidia's partners access to the Grace-Blackwell NVL72 racks.
Oracle is making Nvidia's "NIM" microservices software "natively available" in the management console of Oracle's OCI computing service for its cloud customers.
Huang announced new partners integrating the company's Omniverse software for virtual product design collaboration, including Accenture, Ansys, Cadence Design Systems, Databricks, Dematic, Hexagon, Omron, SAP, Schneider Electric with ETAP, and Siemens.
Nvidia unveiled Mega, a software design "blueprint" that plugs into Nvidia's Cosmos software for robot simulation, training, and testing. Among early clients, Schaeffler and Accenture are using Mega to test fleets of robotic hands for materials handling tasks.
General Motors is now working with Nvidia on "next-generation vehicles, factories, and robots" using Omniverse and Cosmos.
Updated graphics cards
Nvidia updated its RTX graphics card line. The RTX Pro 6000 Blackwell Workstation Edition provides 96GB of DRAM and can speed up engineering tasks such as simulations in Ansys software by 20%. A second version, Pro 6000 Server, is meant to run in data center racks. A third version updates RTX in laptops.
Continuing the focus on "foundation models" for robotics, which Huang first discussed at CES when unveiling Cosmos, he revealed on Tuesday a foundation model for humanoid robots called Nvidia Isaac GROOT N1. The GROOT models are pre-trained by Nvidia to achieve "System 1" and "System 2" thinking, a reference to the book Thinking, Fast and Slow by cognitive scientist Daniel Kahneman. The software can be downloaded from HuggingFace and GitHub.
Medical devices giant GE is among the first parties to use the Isaac for Healthcare version of Nvidia Isaac. The software provides a simulated medical environment that can be used to train medical robots. Applications could include operating X-ray and ultrasound tests in parts of the world that lack qualified technicians for these tasks.
Nvidia updated its Nvidia Earth technology for weather forecasting with a new version, Omniverse Blueprint for Earth-2. It includes "reference workflows" to help companies prototype weather prediction services, GPU acceleration libraries, "a physics-AI framework, development tools, and microservices."
Storage equipment vendors can embed AI agents into their equipment through a new partnership called the Nvidia AI Data Platform. The partnership means equipment vendors may opt to include Blackwell GPUs in their equipment. Storage vendors Nvidia is working with include DDN, Dell, Hewlett Packard Enterprise, Hitachi Vantara, IBM, NetApp, Nutanix, Pure Storage, VAST Data, and WEKA. The first offerings from the vendors are expected to be available this month.
Nvidia said this is the largest GTC event to date, with 25,000 attendees expected in person and 300,000 online.
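To put the FP4 version of DeepSeek R1 and the Blackwell Ultra memory increase described earlier in this article in perspective, here is a rough sketch of how weight storage scales with numeric precision. The parameter count is an assumption (R1's publicly reported size of roughly 671 billion parameters), since the article does not state it; the 192GB and 288GB capacities are the article's figures. The estimate covers weights only and ignores activations, caches, and any quantization scale factors.

```python
# Rough weight-memory footprint at different numeric precisions.
# PARAMS is an assumption (DeepSeek R1's publicly reported size of roughly
# 671 billion parameters); the article itself does not state it.

BITS_PER_VALUE = {"FP32": 32, "BF16": 16, "FP4": 4}
PARAMS = 671e9                                                # assumed parameter count
HBM_PER_GPU_GB = {"Blackwell": 192, "Blackwell Ultra": 288}   # from the article

def weight_gigabytes(params: float, bits: int) -> float:
    """Gigabytes (10^9 bytes) needed to hold the weights alone."""
    return params * bits / 8 / 1e9

for name, bits in BITS_PER_VALUE.items():
    print(f"{name}: {weight_gigabytes(PARAMS, bits):,.1f} GB of weights")

fp4_gb = weight_gigabytes(PARAMS, BITS_PER_VALUE["FP4"])
for gpu, capacity in HBM_PER_GPU_GB.items():
    print(f"{gpu}: FP4 weights occupy {fp4_gb / capacity:.2f}x one GPU's HBM3e")
```

Whatever the true parameter count, the ratios hold: FP4 weights take one-eighth the space of FP32 and one-quarter the space of BF16, and the move from 192GB to 288GB is a 1.5x increase in per-GPU memory.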

[2]

The week in chip news: Nvidia's GTC 2025 blitz, new NVMe HDDs and watercooled SSD, Intel's restructuring begins

Nvidia's GTC 2025, an annual event where the company lays out its roadmap of new products and vision for the coming year, created a flood of news as the company extended its roadmap out to a four-year horizon with plenty of mind-bendingly powerful new AI GPUs and systems. Nvidia CEO Jensen Huang also clarified the company's recent maneuvers behind the scenes, including that it is now building production silicon in the US in TSMC's Arizona fab, and he also threw cold water on rumors of Nvidia's participation in a supposed industry consortium that would take over rival company Intel's chipmaking fabs. That news comes as Intel begins shuffling its foundry management structure under new CEO Lip-Bu Tan.
Nvidia's GTC has developed its own gravity, and many other companies are also now using the event to make their own announcements. This year, we found quite a few interesting developments on the storage front, including a demo for PCIe-connected NVMe hard drives, the first production liquid-cooled SSDs, and a new Kioxia SSD that's bursting at the seams with 122 TB of capacity. Let's dive in.
Nvidia is dominating not only the AI market, but also the entire fabless chipmaker realm. Early in the week, we learned that Nvidia's explosive growth equates to making nearly as much revenue as its next nine fabless competitors combined last year. Yes, that includes big names like AMD, Qualcomm, Broadcom, and MediaTek, among others.
That was an impressive start to the news cycle for GTC 2025 week, but the real fireworks began the following day when Nvidia unveiled its new roadmap. Nvidia announced its new Rubin GPUs for 2026, Rubin Ultra in 2027, and teased an all-new Feynman architecture that will arrive in 2028, extending its public roadmap out to a four-year window, which Jensen claimed is "something no other technology CEO has ever done before." That included the headline announcement of the company's next-gen Blackwell Ultra B300 GPUs.
Looking out to Nvidia's most exotic high-powered AI systems, the company had its future Rubin Ultra systems on display, and we had the chance to get up close and personal with the new rack design. These powerful GPUs will come with four reticle-sized GPU dies (the largest chips that can be made with existing chipmaking tools) crammed into a single package fused with 1TB of HBM4E memory. Those massive chips will be combined with Nvidia's 88-core CPUs and crammed into Kyber racks, consuming up to 600,000 Watts (600kW) of power apiece. That will push Nvidia SuperPODS up to multi-megawatts of power consumption. That pushes performance density to once-unthinkable levels, but power constraints are already a pressing issue for new and existing data centers alike: Most data center projects are now limited by how much power they can access from the grid. As Kyber shows, this will become a more critical issue soon -- these systems are expected to land in the second half of 2027. Naturally, tying together massive clusters of GPUs brings complex challenges, but Nvidia plans for future clusters to communicate at the speed of light. To that end, the company unveiled its Spectrum-X Photonics and Quantum-X Photonics networking switch platforms. These photonics platforms deliver an incredible 1.6 Tb/s of bandwidth per port, or up to 400 Tb/s in aggregate, thus allowing up to millions of GPUs to operate in a single cluster. As usual, though, some of the biggest storylines from Nvidia didn't stem from its product announcements but rather from its plans for the future. One pertains to TSMC, as Nvidia announced that it is now running production silicon through TSMC fabs in Arizona (it's also producing some Blackwell systems in the US, too), but Nvidia also shared details, or rather lack thereof, about Intel. Jensen threw cold water on rumors that it was asked to be in an industry consortium that supposedly included AMD, Broadcom, and Qualcomm. 
This would help TSMC take over the Intel Foundry chipmaking operations. Jensen denied that he had been asked to join a consortium, saying, "Nobody has invited us to a consortium. [...] There might be a party. I was not invited."
That's good news for Intel, which made news of its own as Intel's new CEO, Lip-Bu Tan, has now taken the helm with a $1 million yearly salary plus a whopping potential total of $68 million in bonuses. Later in the week, Intel told us that Ann Kelleher, the EVP responsible for developing Intel's chip fabrication technologies, would retire by the end of the year. Intel had previously laid out a public succession plan for Kelleher, but the company decided to reorganize the Intel Foundry operations as part of the changeover. It isn't clear how much of the new organizational structure was planned by Lip-Bu Tan, but he clearly executed the new plan mere days after he assumed his new role as CEO. Perhaps we'll see more similarly decisive actions at Intel soon as Tan enacts a turnaround plan.
Meanwhile, Intel ex-CEO Pat Gelsinger stopped by GTC to repeat his claim that Jensen "got lucky with AI." Gelsinger also lamented Intel's past indecisive execution of his Larrabee GPU project, which was famously killed after he left the company.
HBM memories attract all the attention for AI GPUs and accelerators, but the massive data training sets have to be stored somewhere, and storage solutions tailored specifically for AI use cases are now proliferating. We saw several new storage devices at GTC 2025. I'm always a sucker for high-capacity SSDs, and Kioxia's new PCIe 5.0 LC9 certainly fits that bill. Kioxia announced the new 122.88TB SSD at the show, and it packs a big performance that matches the big capacity with up to 15 GB/s of bandwidth on tap.
Seagate also had a proof-of-concept demo that included NVMe HDDs. When it comes to NVMe, we naturally think of SSDs, but HDDs with the interface have been under development for quite some time.
Extra performance isn't the goal, though. Seagate says utilizing the NVMe interface will enable scaling pools of HDDs up to the exabyte level by utilizing the fast and flexible NVMe-over-Fabrics (NVMe-oF) protocol. When deployed into software-controlled tiered storage systems in tandem with NVMe SSDs, Seagate thinks it has the winning formula for top-tier AI server storage performance.
Finally, the 'world's first' liquid-cooled enterprise SSD debuted at the event. We've seen more than a few different takes on watercooled SSDs for the consumer market. Still, none of them are designed for the harsh environments or the stringent reliability metrics needed for servers. Solidigm's D7-PS1010 E1.S is watercooled but also hot-swappable, meaning it can be changed without shutting down the server, thus also meeting critical serviceability criteria for AI data centers that can't miss a beat.
Jensen Huang also was up to his usual antics as he served food from the Denny's food truck to attendees, and there were far too many other product announcements to cover here. Head to our GTC 2025 page for more details on the other new hardware and specs.

[3]

Nvidia cranks up agentic AI with revamped Blackwell Ultra GPUs and next-gen AI desktops

Artificial intelligence agents dominated the conversation at Nvidia Corp.'s GTC 2025 event, and much of the talk was about how they'll benefit from the accelerated performance of the company's latest graphics processing units. The company lifted the veil on Nvidia Blackwell Ultra, the latest evolution of its newest GPU platform, saying it's designed to bear the brunt of next-generation AI reasoning workloads, and launched it alongside its first-ever Blackwell-powered desktop computers for AI developers. Blackwell Ultra provides a significant upgrade to the original Blackwell architecture that was announced one year earlier during the previous edition of GTC, improving performance and scale for both AI training and inference workloads and paving the way for so-called AI agents to rule the world. AI agents are the next big thing in AI systems, promising to perform multiple kinds of tasks autonomously for users without any more effort than a simple prompt telling them what they're supposed to do. If they live up to their billing, they could well prove to be transformational in numerous different industries. But for that to happen, they need a reliable, blazing-fast infrastructure to support them. That's where Nvidia's Blackwell Ultra comes in. It bundles the Nvidia GB300 NVL72 rack-scale solution that packs a whopping 72 of the latest Blackwell GPUs with 36 Arm Neoverse-based Nvidia Grace central processing units, running them together as if they were a single, super-high-performance chip. It's combined with the Nvidia HGX B300 NVL16 baseboard system in order to provide 1.5 times better AI performance than last year's GB200 NVL72 rack-scale setup. What's more, it boosts the "revenue opportunity" for so-called AI factories by up to 50 times when compared with those powered by older Hopper architecture-based GPUs.
Nvidia Chief Executive Jensen Huang justified the need for ever more compute power, saying that AI technology has made a gigantic leap in the last couple of years. "Reasoning and agentic AI demand orders of magnitude more computing performance," he said. "We designed Blackwell Ultra for this moment. It's a single versatile platform that can easily and efficiently do pretraining, post-training and reasoning inference." Nvidia said the GB300 NVL72 system will be made available through the Nvidia DGX Cloud platform, which is a managed AI infrastructure service that runs on leading cloud infrastructures such as Amazon Web Services and Google Cloud. In addition, companies will be able to install it on-premises when they purchase an Nvidia DGX SuperPOD hardware system to build their own turnkey AI factory. Other components of the Blackwell Ultra architecture include Nvidia's Spectrum-X Ethernet and Quantum-X800 InfiniBand networking systems, which provide up to 800 gigabits per second of data throughput for each of the 72 GPUs in the system. Also onboard is a cluster of Nvidia's BlueField-3 data processing units, which are used to process nonessential, related computing tasks to free up the GPUs to focus solely on training and inference. Besides making its own on-premises and cloud-based systems, Nvidia is also offering the Blackwell Ultra architecture to key partners in the AI server space. It promised that the likes of Cisco Systems Inc., Dell Technologies Inc., Hewlett Packard Enterprise Co., Lenovo Group Ltd. and Supermicro Computer Inc. are all set to start shipping a number of server systems based on the new architecture. Others, including AsusTek Computer Inc., Gigabyte Technology Co., Ltd., Pegatron Corp. and Inventec Corp., will also sell Blackwell Ultra-based servers later this year.
Finally, the new platform will also be offered on Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure, as well as GPU cloud pure plays such as CoreWeave and Crusoe. Nvidia said the Blackwell Ultra architecture is set to deliver "breakthrough performance" for the most complex AI workloads envisaged, enabling AI agents that will be able to reason, plan and take actions to achieve those plans. In addition, Nvidia has grand ideas around so-called "physical AI," powering a new generation of more intelligent and capable autonomous robots, cars and drones at much bigger scales than previously thought possible.
Blackwell GPUs land on desktops
The age of agentic AI isn't just about AI factories living in enormous data centers, though. For agentic AI to happen, there's a need for much more flexibility around where those AI agents can live and run experiments, which explains why Nvidia is also launching its first "personal AI supercomputers" powered by the Blackwell GPUs. These new AI supercomputers come in two forms - DGX Spark and DGX Station - and they look much like any regular laptop or desktop computer, except they pack a hugely powerful Blackwell GPU to do all sorts of impressive agentic AI number crunching in any location. The new machines, which were developed as part of Nvidia's Project DIGITS, are meant for AI developers, researchers and data scientists who need to prototype, fine-tune and experiment with large language models running locally, instead of relying on cloud-based infrastructure. Nvidia said the DGX Spark and DGX Station systems will be built in various form factors by the likes of Asus, Dell, HP and Lenovo. They'll feature a single Nvidia GB10 Grace Blackwell Superchip, which is a new version of the Blackwell GPU that has been optimized for integration with desktops.
Those chips are combined with 5th-generation tensor cores and FP4 support, making them able to process up to 1,000 trillion operations per second of AI compute. In other words, they'll be able to run the most powerful new AI reasoning models locally, without breaking a sweat, Nvidia said. As a result, AI developers, researchers and even students will have local access to the same kind of AI horsepower normally only found in an enterprise data center -- a stunning 1 petaflop of performance. Nvidia said the GB10 Superchip relies on the company's NVLink-C2C interconnect technology to connect it with the onboard CPUs, enabling data to flow between them up to five times faster than 5th-generation PCIe. The chips also feature the Nvidia ConnectX-8 SuperNIC, which supports networking speeds of up to 800 Gb/s, enabling multiple AI supercomputers to be linked together for even larger local workloads. Customers interested in buying the DGX Spark desktops can register their interest with Nvidia starting today, while the more powerful DGX Stations will become available from partners including Asus, Dell, HP and Supermicro later in the year. Huang said AI has transformed every layer of the computing stack in the cloud and now it's doing the same to the desktop PC. "With these new DGX personal AI computers, AI can now span from cloud services to desktop and edge applications," he promised.

[4]

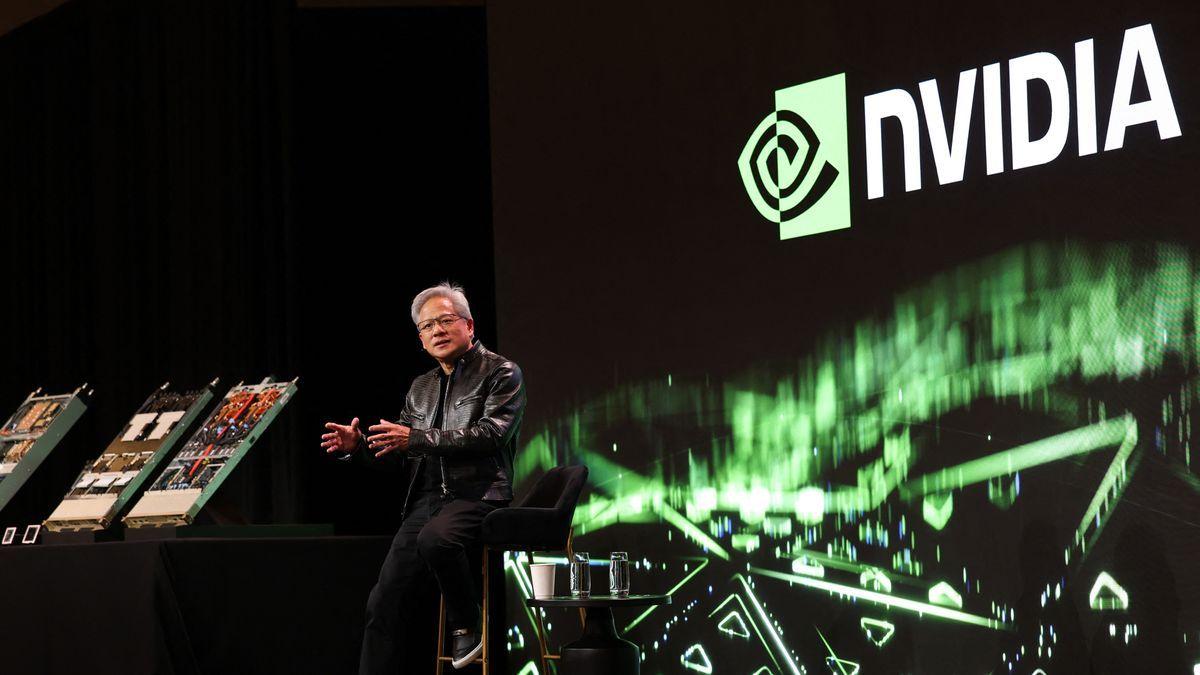

It's Jensen Huang's AI world. We just live in it. - SiliconANGLE

Nvidia CEO Jensen Huang kept his company on top of the AI world this week. At the company's annual GTC developer conference this week in San Jose, Huang (pictured here on the exhibit hall floor with throngs of admirers seeking selfies with the tech rock star) introduced a series of new graphics processing units, new AI supercomputers big and small, more software to make it all work, and a vision of enabling AI factories to produce massive amounts of new intelligence -- and revenue. And that includes Nvidia, as investors well know. This week it splashed some of that cash it's making on a $30 billion consortium to build more AI infrastructure, and reportedly on acquiring the synthetic data startup Gretel. Gotta keep those flywheels going! Speaking of GPUs, AI hosting platform CoreWeave filed to go public, aiming to raise $2.7 billion. Another sign of the continued demand for GPUs: Even though Microsoft passed on the opportunity to lock in $12 billion worth of CoreWeave's GPU capacity, OpenAI stepped in to snap it up. Shadow IT? Try Shadow AI. Stealth use of AI models could present a big problem for companies needing to protect their data and their reputations. SoftBank is buying AI chipmaker Ampere Computing for $6.5 billion -- not a big surprise given all that demand for AI chips, not to mention SoftBank's 90% ownership of Arm, on which Ampere chips are based. Google finally managed to bag cybersecurity phenom Wiz after the deal faltered last year, but it cost a pretty penny: $32 billion, the company's biggest acquisition ever. It's another sign, if we needed any at this point, that it's serious about bolstering Google Cloud. We'll learn more about that next month when we cover its Google Cloud Next conference in Las Vegas. The European Union's campaign against U.S. Big Tech isn't slowing down, as it separately found that both Apple and Google breached its antitrust rules. 
Here's more detail on that and the other big news this week on SiliconANGLE and beyond: Nvidia, of course! The graphics chip company -- sorry, AI factory -- held its GTC event this week, and no surprise, it was mostly about AI, with a day of quantum computing thrown in. Here's all our coverage from GTC, the major AI conference of the year, with my own observations below:
Scaling up: Nvidia redefines the computing stack with new releases for the AI factory
Nvidia cranks up agentic AI with revamped Blackwell Ultra GPUs and next-gen AI desktops
Nvidia's new reasoning models and building blocks pave the way for advanced AI agents
Nvidia announces new AI models for smarter, more adaptable robots
Nvidia expands Omniverse to simulate gigawatt AI data centers and drive robotic factories
Nvidia debuts new silicon photonics switches for AI data centers
The key takeaways from Nvidia CEO Jensen Huang's GTC keynote
Nvidia GTC heats up: Catch the AI breakthroughs on theCUBE
Michael Dell calls AI a revolution, as Dell deepens Nvidia alliance
Dell aims new servers and software at Nvidia-powered AI applications
HPE's Antonio Neri on AI, data centers and the future of infrastructure
HPE and Nvidia tighten partnership with broad infrastructure enhancements
Accenture debuts AI agent builder within its AI Refinery platform
Deloitte hops aboard the agentic AI hype train with Zora AI
Some of my observations from keynotes, panels, interviews and wandering the show floor:
* Huang is pushing hard on his AI Factory idea, and it's not entirely a marketing term. Its AI factories are indeed producing the raw material of the AI era -- tokens used to train and run the AI models to give us those mostly amazing answers to our queries -- and they require the same energy and physical infrastructure as steel or autos. It's just that the output isn't hardware but software -- which continues to eat the world.
* The key to that for Nvidia is, of course, ever more powerful chips, and least surprising of all, Nvidia introduced Blackwell Ultra, the successor graphics processing unit coming later in the year even as the current Blackwell chips are still in short supply. But somewhat more unusual, Huang also tipped a chip not due until late 2026, called Rubin, to provide all its partners time to make sure they're prepared for the tick-tock transitions to new chips. "We're not building chips anymore," Huang said in a press Q&A. "Now we build AI infrastructure that is deployed hundreds of billions of dollars at a time. So my planning needs to be upstream many years and downstream many years."
* How long can this AI building frenzy last? There aren't many signs of a slowdown, really, but these are big bets Nvidia and its customers are making. Like most semiconductor cycles, and like other tech infrastructure buildouts such as the internet boom, eventually things slow down. When that happens, the scale of this buildout means it could be a hard fall. Just not yet.
* Nvidia also introduced Dynamo, which Huang described as the "operating system of the AI factory." The open-source "inference serving library" is intended to speed up inference, the process of running models, so they can prepare more accurate responses to queries in a process called "reasoning" -- more on that in a second.
* A year after the last GTC when many AI projects were still prototypes or experiments, enterprises have turned a page with AI. "We've moved to a stage where we're moving into full production," Rahul Kulkarni, director of product management for compute and AI/ML infrastructure at Amazon Web Services, told SiliconANGLE, with solid results from clients such as Adobe, ServiceNow and Perplexity.
* At the same time, there's still no easy button for AI. "There is no simple out-of-the-box solution for companies to get started," Kulkarni noted.
Many are working on that, including Nvidia, AWS and a gazillion system integrators, a sign that even AI-forward enterprises are still in the early stages of reinventing their businesses with it.
* The next big thing in AI -- beyond agents, which themselves are still nascent -- is "reasoning," Nvidia confirms, and I put it in quotes because it still seems to anthropomorphize a process that still really doesn't work the way humans learn and think. Reasoning models take more time -- and of course more tokens and therefore more compute -- to get to better answers. This is a big improvement over quick-and-dirty chatbots, of course. Just don't fool yourself into thinking that they're "thinking" or truly human-level intelligent.
* One reason that remains true is that animals such as people learn perhaps much more by living in the physical world than by language. As Jeff Hawkins and others contend, if we're ever going to get to really capable AIs without burning up the world to provide energy for compute-intensive large language models, there are many more kinds of learning we need to employ than ever more clever next-word prediction. And many of them involve interacting with physical objects in the real world -- requiring what some call a "world model." "We have models of the physical world that we acquire in the first few weeks of life," Meta Platforms Chief AI Scientist Yann LeCun said in an onstage interview. That's going to require architectures that are completely different from LLMs, he argues. That's one reason "physical AI," a term Nvidia and others use as a wrapper for robots and autonomous vehicles, was a big focus at GTC. "The time has come for robots," said Huang, who showed off a cute little robot onstage.
* Developers developers developers! That's whom Nvidia is aiming for with its cute little DGX Spark "AI supercomputer" that starts at $2999 and can be added to a laptop for easy model development and other AI work such as creating proofs of concept.
Oddly enough, it's easier and often cheaper than renting the same compute in the cloud. "We're essentially offloading work from DGX Cloud and other GPU cloud resources," Bob Pette, VP and GM of enterprise platforms at Nvidia, told me.
* Quantum computing is coming -- maybe a bit sooner than Jensen implied a couple months ago. He admitted that his offhand comment that practical quantum computing could be as much as 30 years away -- tanking quantum stocks for a while -- was perhaps too negative. In a series of panels Thursday at GTC, the CEOs of more than a dozen quantum companies explained their various approaches, from using atoms and superconducting materials as qubits to finding new ways to correct quantum's rampant errors.
* Still, the reality is that it's going to be a good number of years before quantum is solving a lot of practical problems at large scale. LeCun noted archly in a separate onstage interview that the main use case for quantum computers for now seems to be simulating quantum computers. And even a chastened Huang seemed mildly skeptical at times about how soon it will be useful -- in fact, he didn't exactly "walk back" his comments so much as explain that new technologies -- such as, say, accelerated computing -- just take longer to have a big impact than people realize.
* One sign of the nascent nature of quantum is simply that there are so many disparate approaches. The quantum CEOs made good cases for each of them, but it's far from clear which if any will get to practical usefulness, or when. "Some of us may come together," IonQ Executive Chairman Pete Chapman said in an admission that consolidation of quantum companies with similar approaches is likely.
* Quantum computing won't replace classical computing. Instead, it will work in tandem, even perhaps employing a "quantum processing unit" in conventional computers like GPUs are used today.
However, quantum computing isn't just for running existing workloads faster but doing things such as drug discovery at massive scale that simply can't be done now. "Quantum will be a big compute resource like classical computers are a big data resource," said Ben Bloom, founder and CEO of Atom Computing. "We need to figure out how to use that." * One way is to enable a better approach to doing AI. "We have not been able to compute like nature computes," noted Krysta Svore, a technical fellow at Microsoft. "Quantum takes us a step closer." * One last morsel: Denny's has a new limited-time menu item: Nvidia Breakfast Bytes. Basically pigs in a blanket with pancakes and syrup, this was a combo order Huang loved when he was a dishwasher at Denny's -- where he started Nvidia with his co-founders. On another note, check out this deep dive from Paul Gillin on what could be a ticking time bomb in the AI era: Shadow AI: Companies struggle to control unsanctioned use of new tools Nvidia, xAI join $30B AI investment consortium backed by Microsoft Nvidia reportedly acquires Gretel for $320M+ to strengthen AI training tools Money-hungry AI search startup Perplexity in talks to raise up to $1B in fresh funding AI-driven commercial contractor platform BuildOps raises $127M at $1B valuation Dataminr raises $85M for its real-time analytics platform XAI acquires AI video generation startup Hotshot Carbon Arc reels in $56M for its AI data platform AI code assist startup Graphite raises $52M to try and keep ahead of the competition Halliday raises $20M to build AI-driven blockchain agents to do away with smart contracts Rerun gets $17M to build the essential data infrastructure for AI-powered robots, drones and cars Google Cloud is helping gaming startups use AI change the industry Google Gemini introduces collaborative canvas and podcast-like audio overviews Baidu debuts its first AI reasoning model to compete with DeepSeek Mistral AI's newest model packs more power in a much smaller 
package Oracle lets customers create and modify AI agents across its Fusion application suite Cisco debuts new AI-powered customer service features for Webex Zoom introduces new AI capabilities and agents with major release Adobe unleashes an army of AI agents on sales and marketing teams LogicMonitor improves visibility into AI workloads Qualtrics says its new AI agents can satisfy most customer complaints without human guidance Report co-authored by Fei-Fei Li stresses need for AI regulations to consider future risks Court rules copyrighting AI-generated art is a no-go - even if you invented the software There's even more AI and big data news on SiliconANGLE SoftBank agrees to buy Arm chipmaker Ampere Computing for $6.5B AI cloud operator CoreWeave files for $2.7B IPO Meanwhile, Microsoft chose not to exercise $12 billion Coreweave option (per Semafor), but luckily for the GPU farm, OpenAI did Dozens of tech firms urge EU to become more technologically independent Virtual desktop startup Nerdio raises $500M at $1B+ valuation Cloud infrastructure startup Evroc raises €50.6M to build new data centers Google reportedly partnering with MediaTek for next-generation TPU production Micron's stock falls despite solid jump in revenue from AI memory chips Confluent expands AI and analytics capabilities in Apache Flink and Tableflow We have plenty more news on cloud, infrastructure and apps In its largest-ever acquisition, Google buys cybersecurity startup Wiz for $32B VulnCheck raises $12M to boost global expansion of exploit intelligence platform Orion Security raises $6M to plug sensitive data leaks with AI smarts Cloudflare introduces Threat Events Feed to enhance cyberthreat visibility JFrog's Conan introduces Conan Audit to strengthen C/C++ dependency security Prompt Security launches authorization features to strengthen AI data access controls Enterprise AI adoption jumps 30-fold as organizations face growing cybersecurity risks AI-driven threats fuel rise in 
phishing and zero-day attacks Zimperium report warns that mobile rooting and jailbreaking still pose serious security risks Flashpoint report highlights rising cyberthreats, with infostealers and ransomware leading the way More cybersecurity news here EU finds Apple, Google breached DMA antitrust rules Alphabet spins off its Taara laser-powered networking venture FTC commissioners claim they were 'illegally fired' by Trump Kraken enters agreement to acquire futures trading platform NinjaTrader for $1.5B Skidattl wants to make augmented reality overlays ubiquitous with QR codes

[5]

In DeepSeek Era, Nvidia Fixes On Blackwell Ultra's AI Money-Making Potential

Nvidia says that its next-generation Blackwell Ultra GPU for AI data centers, revealed at its GTC 2025 event, is designed for reasoning models like DeepSeek R1 and claims that the chip can significantly increase the revenue AI providers generate.

Nvidia has revealed the first details of the successor to its fast-selling Blackwell GPU architecture, saying the follow-up is built for AI reasoning models like DeepSeek R1 while claiming that the GPU can significantly increase the revenue AI providers generate.

Called Blackwell Ultra, the GPU increases the maximum HBM3e high-bandwidth memory by 50 percent to 288 GB and boosts 4-bit floating point (FP4) inference performance by just as much, Nvidia announced Tuesday at its GTC 2025 event alongside new DGX SuperPods, a new DGX Station and new Blackwell-based RTX Pro GPUs.

The company said Blackwell Ultra-based products from technology partners are set to debut in the second half of 2025. These partners include OEMs such as Dell Technologies, Cisco, Hewlett Packard Enterprise, Lenovo and Supermicro as well as cloud service providers like Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure.

In a briefing with journalists the day before, Nvidia executive Ian Buck provided these details and added that Blackwell Ultra is "built for the age of reasoning," referencing the advent of reasoning models such as the Chinese-developed DeepSeek R1.

While the arrival of DeepSeek R1 and its cost-effective nature earlier this year put into question the need for large data centers filled with Nvidia's power-hungry and powerful GPUs, the AI computing giant has pushed back on any negative implications by arguing that such breakthroughs point to the need for faster AI chips in greater numbers.
"While DeepSeek can be served with upwards of 1 million tokens per dollar, typically they'll generate up to 10,000 or more tokens to come up with that answer. This new world of reasoning requires new software, new hardware to help accelerate and advance AI," said Buck, whose title is vice president of hyperscale and high-performance computing.

With data centers running DeepSeek and other kinds of AI models representing what Buck called a $1 trillion opportunity, Nvidia is focusing on how its GPUs, systems and software can help AI application providers make more money, with Buck saying that Blackwell Ultra alone can enable a 50-fold increase in "data center revenue opportunity."

The 50-fold increase is based on the performance improvement Buck said Nvidia can provide for the 671-billion-parameter DeepSeek-R1 reasoning model with the new GB300 NVL72 rack-scale platform -- which updates the recently launched GB200 NVL72 with the new Blackwell Ultra-based GB300 superchip -- over an HGX H100-based data center at the same power level.

Whereas the HGX H100 can deliver 100 tokens per second in 90 seconds with the DeepSeek-R1 model, the GB300 NVL72 can increase the tokens per second by 10 times to 1,000 while shortening the delivery time to just 10 seconds, according to Buck.

"The combination of total token volume [and] dollar per token expands from Hopper to Blackwell by 50X by providing a higher-value service, which offers a premium experience and a different price point in the market," he said.

"As we reduce the cost of serving these models, they can serve more with the same infrastructure and increase total volume at the same time," Buck added.

The company did not discuss how much more potential revenue Blackwell Ultra can generate than Blackwell, which became Nvidia's "fastest product ramp" yet by generating $11 billion from initial shipments between November and January.
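The token figures Buck cites can be sanity-checked with simple arithmetic. The sketch below is only a back-of-the-envelope illustration: it assumes an answer of exactly 10,000 tokens (the article says "up to 10,000 or more") and a constant per-user generation rate, and the function names are made up for this example.

```python
# Back-of-the-envelope check of the token figures quoted by Buck.
# TOKENS_PER_ANSWER is an assumption: the article says "up to 10,000 or more".
TOKENS_PER_DOLLAR = 1_000_000              # "upwards of 1 million tokens per dollar"
TOKENS_PER_ANSWER = 10_000                 # assumed length of one reasoning answer
HOPPER_TOKENS_PER_SECOND = 100             # HGX H100 figure from the article
BLACKWELL_ULTRA_TOKENS_PER_SECOND = 1_000  # GB300 NVL72 figure from the article


def cost_per_answer(tokens: int, tokens_per_dollar: int) -> float:
    """Dollar cost of generating `tokens` at a given tokens-per-dollar rate."""
    return tokens / tokens_per_dollar


def seconds_per_answer(tokens: int, tokens_per_second: int) -> float:
    """Seconds needed to generate `tokens` at a fixed rate."""
    return tokens / tokens_per_second


cost = cost_per_answer(TOKENS_PER_ANSWER, TOKENS_PER_DOLLAR)
hopper_seconds = seconds_per_answer(TOKENS_PER_ANSWER, HOPPER_TOKENS_PER_SECOND)
ultra_seconds = seconds_per_answer(TOKENS_PER_ANSWER, BLACKWELL_ULTRA_TOKENS_PER_SECOND)

print(f"cost per answer: ${cost:.2f}")
print(f"HGX H100: {hopper_seconds:.0f} s per answer")
print(f"GB300 NVL72: {ultra_seconds:.0f} s per answer")
print(f"speedup: {hopper_seconds / ultra_seconds:.0f}x")
```

Under these assumptions the Hopper case works out to 100 seconds rather than the quoted 90, which would correspond to an answer of roughly 9,000 tokens. The sketch does not try to reproduce the 50-fold revenue figure, which also depends on pricing assumptions the article does not spell out.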
Nvidia unveiled Blackwell Ultra as the AI computing giant seeks to not only maintain its dominance but also ensure there continues to be high demand for its products that allowed it to more than double revenue to $130.5 billion last year.

With the new, Blackwell Ultra-based GB300 NVL72 platform, the company improved its energy efficiency and serviceability, according to Buck. Consisting of 72 Blackwell Ultra GPUs and 36 Grace CPUs, the GB300 NVL72 platform can achieve 1.1 exaflops of FP4 dense computation, and it comes with 20 TB of high-bandwidth memory as well as 40 TB of fast memory. The platform's NVLink bandwidth can top out at 130 TBps while networking speeds reach 14.4 TBps.

Nvidia said GB300 NVL72 will be made available on its DGX Cloud AI supercomputing platform, which is accessible from cloud service providers like AWS, Microsoft Azure and Google Cloud.

With Blackwell Ultra, Nvidia will provide two flavors of new DGX SuperPod configurations. The liquid-cooled DGX SuperPod with DGX GB300 systems consists of eight GB300 NVL72 platforms, amounting to 288 Grace CPUs, 576 Blackwell Ultra GPUs and 300 TB of fast memory that can produce 11.4 exaflops of FP4 computation.

The DGX SuperPod with DGX B300 systems, on the other hand, is pitched by Nvidia as a "scalable, air-cooled architecture" that will be offered as a new design for the company's modular MGX server racks and enterprise data centers. These B300-based DGX SuperPod clusters are made up of Nvidia's HGX B300 NVL16 platform, which the company said provides 11 times faster inference on large language models, seven times more compute and four times larger memory compared to a Hopper-based platform. Nvidia did not disclose power requirements in the Monday briefing.

Nvidia also revealed a new Blackwell Ultra-powered DGX Station desktop PC as well as new Blackwell-based RTX Pro GPUs for laptops, desktops and servers.
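The GPU and CPU counts for the GB300-based SuperPod follow directly from the per-rack figures, and the rack's high-bandwidth memory total is close to 72 times the 288 GB per-GPU figure. A minimal sketch of that arithmetic, assuming decimal units (1 TB = 1,000 GB) and using illustrative names that are not Nvidia's:

```python
# Rack and SuperPod totals derived from the per-unit figures in the article.
GPUS_PER_RACK = 72        # Blackwell Ultra GPUs per GB300 NVL72
CPUS_PER_RACK = 36        # Grace CPUs per GB300 NVL72
HBM_PER_GPU_GB = 288      # HBM3e per Blackwell Ultra GPU
RACKS_PER_SUPERPOD = 8    # GB300 NVL72 platforms per DGX SuperPod


def superpod_totals(racks: int) -> dict[str, int]:
    """GPU and CPU counts for a SuperPod built from `racks` NVL72 platforms."""
    return {"gpus": racks * GPUS_PER_RACK, "cpus": racks * CPUS_PER_RACK}


totals = superpod_totals(RACKS_PER_SUPERPOD)

# Assumption: decimal terabytes (1 TB = 1,000 GB).
rack_hbm_tb = GPUS_PER_RACK * HBM_PER_GPU_GB / 1000

print(totals)                                 # GPU and CPU counts per SuperPod
print(f"{rack_hbm_tb:.1f} TB HBM per rack")   # compare with the quoted 20 TB
```

The counts match the 576 GPUs and 288 CPUs quoted for the SuperPod, and the per-rack memory comes to about 20.7 TB, in line with the rounded 20 TB figure.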
Nvidia also provided new details for the Project Digits mini PC that was revealed at CES 2025 in January.

The company called the DGX Station the "ultimate desktop computer for the AI era" because it features a GB300 Grace Blackwell Ultra Desktop Superchip and 784 GB of unified system memory to enable 20 petaflops of AI performance. The DGX Station will also feature the Nvidia ConnectX-8 SuperNIC, which enables networking speeds of up to 800 Gbps for connecting multiple DGX Stations. Nvidia said the DGX Station will be made available from several OEMs, including Dell, HP Inc. and Supermicro, later this year.

Nvidia revealed that Project Digits is now known as DGX Spark, which will feature the GB10 Grace Blackwell Superchip to deliver up to 1,000 trillion operations per second of AI computation for the fine-tuning and inferencing of reasoning models. Asus, Dell, HP and Lenovo plan to release their own versions of the DGX Spark. While Nvidia didn't indicate a release window, it said its website is accepting reservations now.

Nvidia said the RTX Pro Blackwell GPUs will feature "groundbreaking AI and graphics performance," which will "redefine visualization, simulation and scientific computing for millions of professions."

Compared with the Ada Lovelace-based RTX Pro GPUs, the new Blackwell models come with an improved streaming multiprocessor that delivers 50 percent faster throughput and new neural shaders that "integrate AI inside of programmable shaders" for AI-augmented graphics. They also sport fourth-generation RT Cores that can deliver up to double the ray tracing performance, fifth-generation Tensor Cores that enable up to 4,000 trillion AI operations per second and add support for FP4 precision, as well as larger, faster GDDR7 memory.
The RTX Pro GPUs for laptops, spanning from the high-end 5000 series to the low-end 500 series, will support up to 24 GB of GDDR7 memory with error correction, while the desktop models, spanning from the 5000 series to the 4000 series, will max out at 96 GB. The RTX Pro 6000 series for data centers will also top out at 96 GB.

Nvidia said the RTX Pro 6000 data center GPU will be made available soon in server configurations from OEMs such as Cisco Systems, Dell, Hewlett Packard Enterprise and Lenovo. The GPU will become available in instances from Amazon Web Services, Google Cloud, Microsoft Azure and CoreWeave later this year.

The RTX Pro desktop GPUs, on the other hand, are expected to debut in April with availability from distributors PNY and TD Synnex. They will then arrive in PCs the following month from OEMs and system builders like Boxx, Dell, HP, Lambda and Lenovo. The RTX Pro laptop GPUs will land later this year from Dell, HP, Lenovo and Razer.


Nvidia introduces Blackwell Ultra GPUs and AI desktops at GTC 2025, emphasizing their potential for AI reasoning models and increased revenue generation for AI providers.

Nvidia Unveils Blackwell Ultra GPUs for Advanced AI Processing

Nvidia, the leading AI chip manufacturer, has unveiled its next-generation Blackwell Ultra GPUs at its annual GTC 2025 event, positioning the company at the forefront of AI technology advancements [1]. The new GPUs are designed to handle complex AI workloads, particularly focusing on reasoning models and agentic AI, which require significantly more computing power [2].

Blackwell Ultra: Powering AI Factories

The Blackwell Ultra architecture represents a substantial upgrade from its predecessor, offering up to 1.5 times better AI performance compared to last year's GB200 NVL72 rack-scale setup [2]. Nvidia CEO Jensen Huang emphasized the need for increased computing power to meet the demands of reasoning and agentic AI, stating that Blackwell Ultra is "a single versatile platform that can easily and efficiently do pretraining, post-training and reasoning inference" [2].

Revenue Generation and AI Infrastructure

Nvidia is positioning Blackwell Ultra as a revenue-generating powerhouse for AI providers. The company claims that the new architecture can boost the "revenue opportunity" for AI factories by up to 50 times compared to those powered by older Hopper architecture-based GPUs [2]. This focus on revenue generation is part of Nvidia's strategy to maintain its dominance in the AI chip market [5].

Expanding AI Accessibility

In addition to data center solutions, Nvidia is introducing AI capabilities to desktop computers:

- DGX Spark and DGX Station: These "personal AI supercomputers" are designed for AI developers, researchers, and data scientists who need to prototype and experiment with large language models locally [2].
- Project DIGITS: The mini PC revealed under this name at CES 2025 is now known as DGX Spark, and manufacturers including Asus, Dell, HP and Lenovo plan to release their own versions [2][5].

Industry Partnerships and Availability

Nvidia is collaborating with major tech companies to integrate Blackwell Ultra into various platforms:

- Server manufacturers: Cisco, Dell, HPE, Lenovo, and Supermicro will ship server systems based on the new architecture [2].
- Cloud providers: AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure will offer Blackwell Ultra-based solutions [2].
- GPU cloud specialists: Companies like CoreWeave and Crusoe will also provide access to the new technology [2].


Market Impact and Future Outlook

The introduction of Blackwell Ultra comes at a time when the AI industry is experiencing rapid growth and evolution. Nvidia's focus on reasoning models like DeepSeek R1 demonstrates the company's adaptability to emerging AI trends [5]. However, some industry observers note that while the AI building frenzy continues, the scale of this infrastructure buildout could lead to a significant downturn when the market eventually slows [4].

Conclusion

Nvidia's Blackwell Ultra GPUs and associated AI technologies represent a significant leap forward in AI computing capabilities. By focusing on reasoning models, revenue generation, and accessibility across various platforms, Nvidia is solidifying its position as a leader in the AI chip market. As these technologies become available in the latter half of 2025, their impact on AI development and deployment will likely be substantial [5].

References

Summarized by

Navi

[2]

[3]

[4]
