NVIDIA's Networking Innovations Pave the Way for Giga-Scale AI Super-Factories

5 Sources

5 Sources

[1]

Gearing Up for the Gigawatt Data Center Age

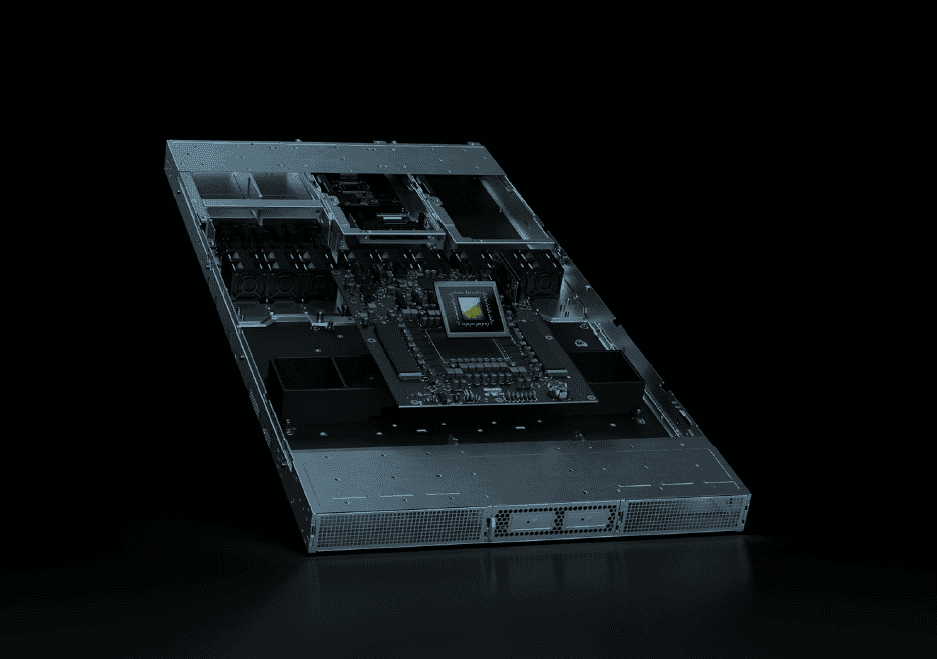

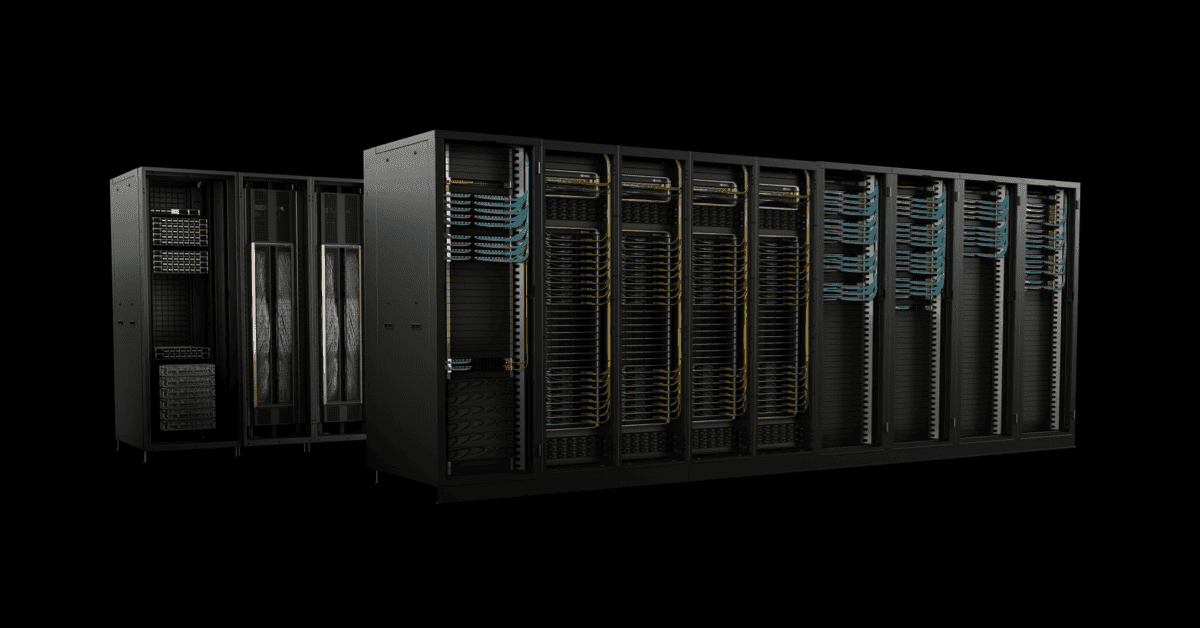

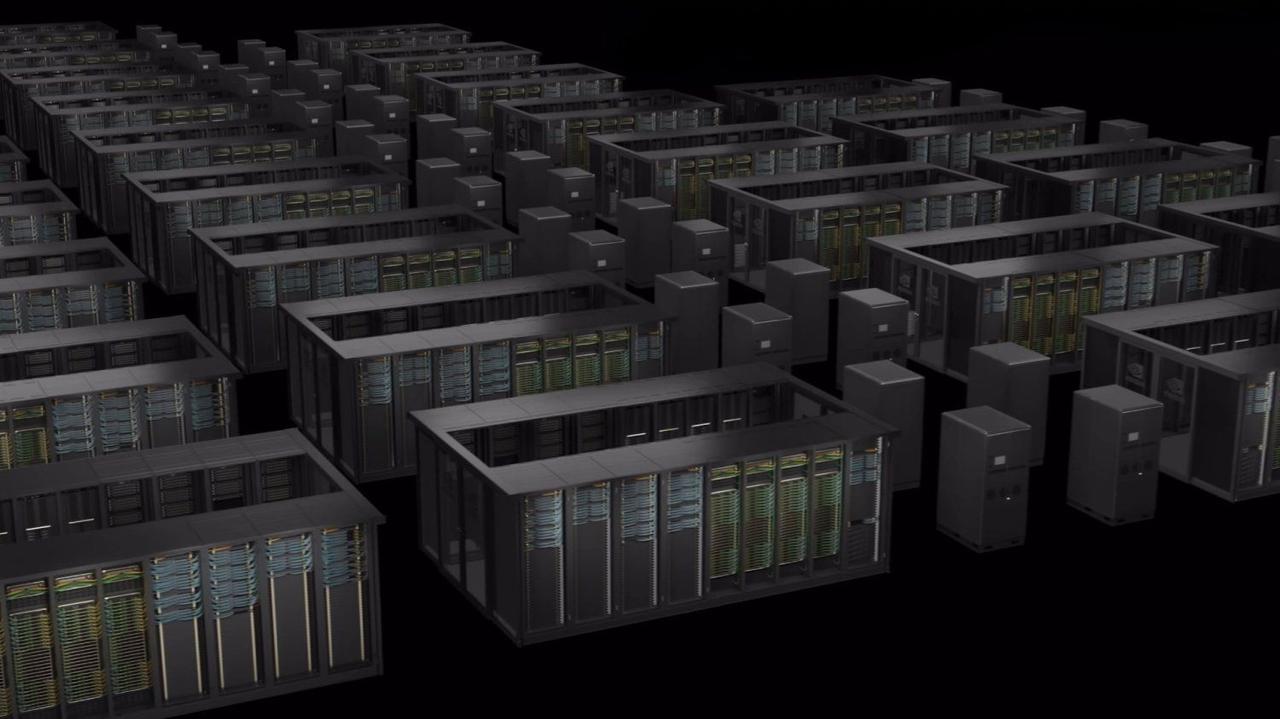

Inside the AI factories powering the trillion‑parameter era -- and why the network matters more than ever. Across the globe, AI factories are rising -- massive new data centers built not to serve up web pages or email, but to train and deploy intelligence itself. Internet giants have invested billions in cloud-scale AI infrastructure for their customers. Companies are racing to build AI foundries that will spawn the next generation of products and services. Governments are investing too, eager to harness AI for personalized medicine and language services tailored to national populations. Welcome to the age of AI factories -- where the rules are being rewritten and the wiring doesn't look anything like the old internet. These aren't typical hyperscale data centers. They're something else entirely. Think of them as high-performance engines stitched together from tens to hundreds of thousands of GPUs -- not just built, but orchestrated, operated and activated as a single unit. And that orchestration? It's the whole game. This giant data center has become the new unit of computing, and the way these GPUs are connected defines what this unit of computing can do. One network architecture won't cut it. What's needed is a layered design with bleeding-edge technologies -- like co-packaged optics that once seemed like science fiction. The complexity isn't a bug; it's the defining feature. AI infrastructure is diverging fast from everything that came before it, and if there isn't rethinking on how the pipes connect, scale breaks down. Get the network layers wrong, and the whole machine grinds to a halt. Get it right, and gain extraordinary performance. With that shift comes weight -- literally. A decade ago, chips were built to be sleek and lightweight. Now, the cutting edge looks like the multi‑hundred‑pound copper spine of a server rack. Liquid-cooled manifolds. Custom busbars. Copper spines. AI now demands massive, industrial-scale hardware. And the deeper the models go, the more these machines scale up, and out. The NVIDIA NVLink spine, for example, is built from over 5,000 coaxial cables -- tightly wound and precisely routed. It moves more data per second than the entire internet. That's 130 TB/s of GPU-to-GPU bandwidth, fully meshed. This isn't just fast. It's foundational. The AI super-highway now lives inside the rack. The Data Center Is the Computer Training the modern large language models (LLMs) behind AI isn't about burning cycles on a single machine. It's about orchestrating the work of tens or even hundreds of thousands of GPUs that are the heavy lifters of AI computation. These systems rely on distributed computing, splitting massive calculations across nodes (individual servers), where each node handles a slice of the workload. In training, those slices -- typically massive matrices of numbers -- need to be regularly merged and updated. That merging occurs through collective operations, such as "all-reduce" (which combines data from all nodes and redistributes the result) and "all-to-all" (where each node exchanges data with every other node). These processes are susceptible to the speed and responsiveness of the network -- what engineers call latency (delay) and bandwidth (data capacity) -- causing stalls in training. For inference -- the process of running trained models to generate answers or predictions -- the challenges flip. Retrieval-augmented generation systems, which combine LLMs with search, demand real-time lookups and responses. And in cloud environments, multi-tenant inference means keeping workloads from different customers running smoothly, without interference. That requires lightning-fast, high-throughput networking that can handle massive demand with strict isolation between users. Traditional Ethernet was designed for single-server workloads -- not for the demands of distributed AI. Tolerating jitter and inconsistent delivery were once acceptable. Now, it's a bottleneck. Traditional Ethernet switch architectures were never designed for consistent, predictable performance -- and that legacy still shapes their latest generations. Distributed computing requires a scale-out infrastructure built for zero-jitter operation -- one that can handle bursts of extreme throughput, deliver low latency, maintain predictable and consistent RDMA performance, and isolate network noise. This is why InfiniBand networking is the gold standard for high-performance computing supercomputers and AI factories. With NVIDIA Quantum InfiniBand, collective operations run inside the network itself using Scalable Hierarchical Aggregation and Reduction Protocol technology, doubling data bandwidth for reductions. It uses adaptive routing and telemetry-based congestion control to spread flows across paths, guarantee deterministic bandwidth and isolate noise. These optimizations let InfiniBand scale AI communication with precision. It's why NVIDIA Quantum infrastructure connects the majority of the systems on the TOP500 list of the world's most powerful supercomputers, demonstrating 35% growth in just two years. For clusters spanning dozens of racks, NVIDIA Quantum‑X800 Infiniband switches push InfiniBand to new heights. Each switch provides 144 ports of 800 Gbps connectivity, featuring hardware-based SHARPv4, adaptive routing and telemetry-based congestion control. The platform integrates co‑packaged silicon photonics to minimize the distance between electronics and optics, reducing power consumption and latency. Paired with NVIDIA ConnectX-8 SuperNICs delivering 800 Gb/s per GPU, this fabric links trillion-parameter models and drives in-network compute. But hyperscalers and enterprises have invested billions in their Ethernet software infrastructure. They need a quick path forward that uses the existing ecosystem for AI workloads. Enter NVIDIA Spectrum‑X: a new kind of Ethernet purpose-built for distributed AI. Spectrum‑X Ethernet: Bringing AI to the Enterprise Spectrum‑X reimagines Ethernet for AI. Launched in 2023 Spectrum‑X delivers lossless networking, adaptive routing and performance isolation. The SN5610 switch, based on the Spectrum‑4 ASIC, supports port speeds up to 800 Gb/s and uses NVIDIA's congestion control to maintain 95% data throughput at scale. Spectrum‑X is fully standards‑based Ethernet. In addition to supporting Cumulus Linux, it supports the open‑source SONiC network operating system -- giving customers flexibility. A key ingredient is NVIDIA SuperNICs -- based on NVIDIA BlueField-3 or ConnectX-8 -- which provide up to 800 Gb/s RoCE connectivity and offload packet reordering and congestion management. Spectrum-X brings InfiniBand's best innovations -- like telemetry-driven congestion control, adaptive load balancing and direct data placement -- to Ethernet, enabling enterprises to scale to hundreds of thousands of GPUs. Large-scale systems with Spectrum‑X, including the world's most colossal AI supercomputer, have achieved 95% data throughput with zero application latency degradation. Standard Ethernet fabrics would deliver only ~60% throughput due to flow collisions. A Portfolio for Scale‑Up and Scale‑Out No single network can serve every layer of an AI factory. NVIDIA's approach is to match the right fabric to the right tier, then tie everything together with software and silicon. NVLink: Scale Up Inside the Rack Inside a server rack, GPUs need to talk to each other as if they were different cores on the same chip. NVIDIA NVLink and NVLink Switch extend GPU memory and bandwidth across nodes. In an NVIDIA GB300 NVL72 system, 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell Ultra GPUs are connected in a single NVLink domain, with an aggregate bandwidth of 130 TB/s. NVLink Switch technology further extends this fabric: a single GB300 NVL72 system can offer 130 TB/s of GPU bandwidth, enabling clusters to support 9x the GPU count of a single 8‑GPU server. With NVLink, the entire rack becomes one large GPU. Photonics: The Next Leap To reach million‑GPU AI factories, the network must break the power and density limits of pluggable optics. NVIDIA Quantum-X and Spectrum-X Photonics switches integrate silicon photonics directly into the switch package, delivering 128 to 512 ports of 800 Gb/s with total bandwidths ranging from 100 Tb/s to 400 Tb/s. These switches offer 3.5x more power efficiency and 10x better resiliency compared with traditional optics, paving the way for gigawatt‑scale AI factories. Toward Million‑GPU AI Factories AI factories are scaling fast. Governments in Europe are building seven national AI factories, while cloud providers and enterprises across Japan, India and Norway are rolling out NVIDIA‑powered AI infrastructure. The next horizon is gigawatt‑class facilities with a million GPUs. To get there, the network must evolve from an afterthought to a pillar of AI infrastructure. The lesson from the gigawatt data center age is simple: the data center is now the computer. NVLink stitches together GPUs inside the rack. NVIDIA Quantum InfiniBand scales them across it. Spectrum-X brings that performance to broader markets. Silicon photonics makes it sustainable. Everything is open where it matters, optimized where it counts.

[2]

NVIDIA Spectrum-XGS connects data centers in different cities to create AI Super-Factories

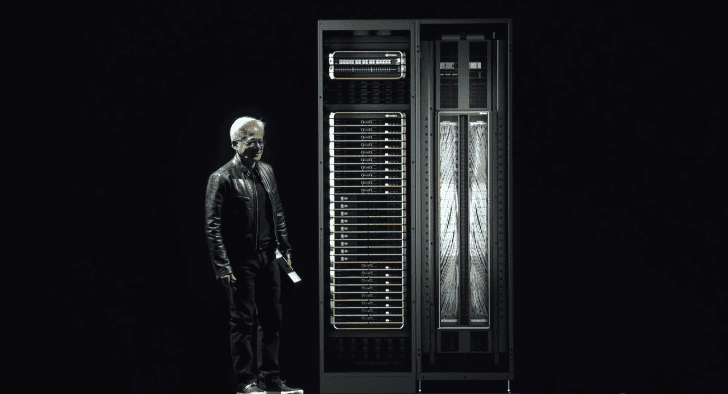

TL;DR: NVIDIA Spectrum-XGS Ethernet enables seamless connection of distributed data centers into unified, giga-scale AI super-factories, overcoming traditional Ethernet limitations with enhanced bandwidth, latency management, and congestion control. This hyperscale networking technology accelerates multi-GPU communication, driving breakthroughs in generative AI and large-scale AI infrastructure. NVIDIA Spectrum-XGS Ethernet is a new technology built for the AI era, and a time where the limits of data centers are demanding scale that often exceeds the capabilities of a single building. With Spectrum-XGS Ethernet, NVIDIA's latest networking technology will help combine the power of multiple data centers into "unified, giga-scale AI super-factories." NVIDIA Spectrum-XGS Ethernet will create "unified, giga-scale AI super-factories," image credit: NVIDIA. The technology was also created due to the limitations of off-the-shelf Ethernet networking, which is prone to latency, jitter, and "unpredictable performance." How Spectrum-XGS Ethernet differs is that it includes NVIDIA Spectrum-X switches and NVIDIA ConnectX-8 SuperNICs to deliver greater bandwidth density with telemetry, latency management, and something called "auto-adjusted distance congestion control." Spectrum-XGS Ethernet offers nearly double the performance to accelerate multi-GPU and multi-node communication across geographically distributed AI clusters. Designed for hyperscale, the first company that will deploy the technology in its data centers is CoreWeave. "The AI industrial revolution is here, and giant-scale AI factories are the essential infrastructure," said Jensen Huang, founder and CEO of NVIDIA. "With NVIDIA Spectrum-XGS Ethernet, we add scale-across to scale-up and scale-out capabilities to link data centers across cities, nations, and continents into vast, giga-scale AI super-factories." "CoreWeave's mission is to deliver the most powerful AI infrastructure to innovators everywhere," said Peter Salanki, cofounder and chief technology officer of CoreWeave. "With NVIDIA Spectrum-XGS, we can connect our data centers into a single, unified supercomputer, giving our customers access to giga-scale AI that will accelerate breakthroughs across every industry." Connecting data centers across different cities and countries to create "giga-scale AI super-factories" is impressive and could serve as the next step in exponentially expanding the capabilities of generative AI. NVIDIA is showcasing this technology at Hot Chips 2025, which is currently taking place at Stanford University.

[3]

NVIDIA's Spectrum-XGS Tech Fuses Multiple Datacenters Into a Single 'Giga-Cluster,' Unlocking Unprecedented Computing Power

After interconnecting powerful AI GPUs, NVIDIA has now presented a solution that connects multiple datacenters to bring massive compute power onboard. Scaling up AI computing power through architectural advancements isn't the right move because there will be a point when limitations will come into play. That's why NVIDIA has introduced a new interconnect platform that allows firms to combine 'distributed data centers into unified, giga-scale AI super-factories'. According to NVIDIA, the Spectrum-XGS eliminates the limitations present in scaling up a single datacenter, especially when it comes to geographical concerns, and the cost to cool such large systems in a single facility. Instead, interconnect multiple AI clusters to achieve significantly higher AI performance. With NVIDIA Spectrum-XGS Ethernet, we add scale-across to scale-up and scale-out capabilities to link data centers across cities, nations and continents into vast, giga-scale AI super-factories. - NVIDIA's CEO Jensen Huang Spectrum-XGS is claimed to be a derivative of the company's original Spectrum technology and a foundational pillar for AI computation. According to the company's blog post, Spectrum-XGS doubles performance relative to NCCL, a similar implementation for interconnecting multiple GPU nodes. More importantly, the technology comes with features like auto-adjusted distance congestion control and latency management, to ensure the longer distances between interconnected data centers don't result in performance loss. Interestingly, Coreweave will become the first company to integrate its hyperscalers with Spectrum-XGS Ethernet, and this move is claimed to "accelerate breakthroughs across every industry, " meaning that we'll see compute levels that were deemed impossible before. NVIDIA isn't stopping with the AI hype yet, and after the debut of technologies like silicon photonics network switches, we will definitely see interconnect mechanisms going to new levels.

[4]

NVIDIA Introduces Spectrum-XGS Ethernet to Connect Distributed Data Centers into Giga-Scale AI Super-Factories

NVIDIA announced NVIDIA Spectrum-XGS Ethernet, a scale-across technology for combining distributed data centers into unified, giga-scale AI super-factories. As AI demand surges, individual data centers are reaching the limits of power and capacity within a single facility. To expand, data centers must scale beyond any one building, which is limited by off-the-shelf Ethernet networking infrastructure with high latency and jitter and unpredictable performance. Spectrum-X Ethernet is a breakthrough addition to the NVIDIA Spectrum-X?? Ethernet platform that removes these boundaries by introducing scale-across infrastructure. It serves as a third pillar of AI computing beyond scale-up and scale-out, designed for extending the extreme performance and scale of Spectrum-X Ethernet to interconnect multiple, distributed data centers to form massive AI super-factories capable of giga-scale intelligence. Spectrum-XGS Ethernet is fully integrated into the Spectrum-X platform, featuring algorithms that dynamically adapt the network to the distance between data center facilities. The Spectrum-X Ethernet networking platform provides 1.6x greater bandwidth density than off-the-shelf networking for multi-tenant, hyperscale AI factories -- including the AI supercomputer. It comprises NVIDIA Spectrum-X switches and NVIDIA ConnectX®?-8 SuperNICs, delivering seamless scalability, ultralow latency and breakthrough performance for enterprises building the future of AI. Today's announcement follows a drumbeat of networking innovation announcements from NVIDIA, including NVIDIA Spectrum-X and NVIDIA Quantum-X silicon photonics networking switches, which enable AI factories to connect millions of GPUs across sites while reducing energy consumption and operational costs. Availability: NVIDIA Spectrum-XGS Ethernet is available now as part of the NVIDIA Spectrum-X Ethernet platform.

[5]

Nvidia is betting on dedicated networks for the era of 'AI factories'.

NVIDIA announces the rise of "AI factories," new data centers designed for training and inferencing very large-scale artificial intelligence models. These infrastructures are based on tens of thousands of GPUs connected by specialized network architectures. The company is highlighting its NVLink interconnect, capable of 130 TB/s of GPU-to-GPU bandwidth, and its InfiniBand Quantum technology, the benchmark in high-performance computing, which reduces latency and doubles effective bandwidth with the SHARP protocol. For companies committed to the Ethernet ecosystem, NVIDIA offers Spectrum-X, launched in 2023, which adapts Ethernet to the needs of AI with advanced congestion management and sustained throughput of 95% compared to 60% for traditional Ethernet. The strategy combines NVLink for internal rack communication, InfiniBand for hyperscale clusters, and Spectrum-X for existing Ethernet environments to support the rise of models with several thousand billion parameters.

Share

Share

Copy Link

NVIDIA introduces new networking technologies, including Spectrum-XGS Ethernet and NVLink, to connect distributed data centers into unified AI super-factories, addressing the growing demands of large-scale AI computation.

The Rise of AI Factories

In the rapidly evolving landscape of artificial intelligence, NVIDIA is spearheading a new era of computing infrastructure known as "AI factories." These are not your typical data centers, but massive, purpose-built facilities designed to train and deploy large-scale AI models

1

. As companies and governments worldwide invest billions in cloud-scale AI infrastructure, these AI factories are becoming the new frontier of computational power.

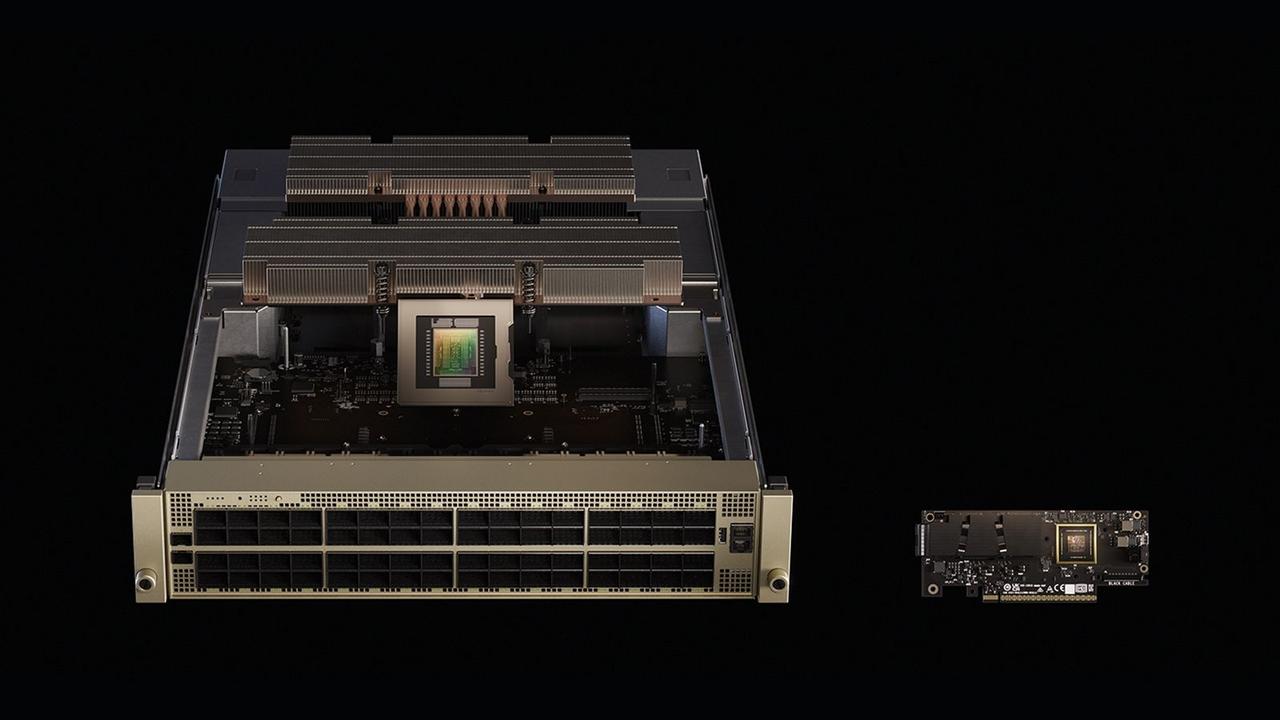

Source: NVIDIA

NVIDIA's Networking Innovations

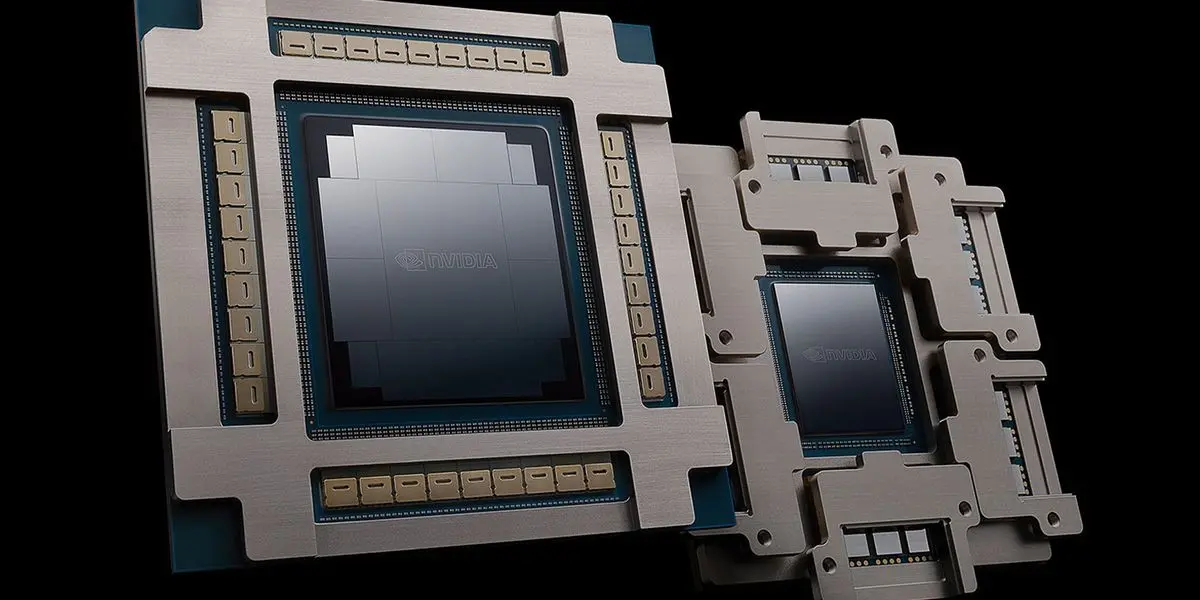

At the heart of these AI super-factories lies NVIDIA's cutting-edge networking technology. The company has introduced several key innovations to address the unique challenges posed by distributed AI computing:

-

NVIDIA Spectrum-XGS Ethernet: This new technology enables the seamless connection of distributed data centers into unified, giga-scale AI super-factories

2

. It overcomes traditional Ethernet limitations by offering enhanced bandwidth, latency management, and congestion control. -

NVLink: NVIDIA's NVLink spine, built from over 5,000 coaxial cables, provides an astounding 130 TB/s of GPU-to-GPU bandwidth

1

. This fully meshed network moves more data per second than the entire internet, creating a super-highway inside the server rack. -

NVIDIA Quantum InfiniBand: This technology is the gold standard for high-performance computing supercomputers and AI factories

1

. It uses adaptive routing and telemetry-based congestion control to optimize data flow and minimize network noise.

Overcoming Traditional Networking Limitations

Traditional Ethernet networks were not designed for the demands of distributed AI workloads. They often suffer from jitter, inconsistent delivery, and unpredictable performance

1

. NVIDIA's new networking solutions address these issues:- Spectrum-X Ethernet: This platform provides 1.6x greater bandwidth density than off-the-shelf networking for multi-tenant, hyperscale AI factories

4

. - Auto-adjusted distance congestion control: This feature ensures that longer distances between interconnected data centers don't result in performance loss

3

. - SHARP technology: Scalable Hierarchical Aggregation and Reduction Protocol doubles data bandwidth for collective operations

1

.

Scaling Across: The Third Pillar of AI Computing

Source: TweakTown

NVIDIA's CEO, Jensen Huang, emphasizes that Spectrum-XGS Ethernet adds a "scale-across" capability to the existing scale-up and scale-out approaches

2

. This allows data centers across cities, nations, and continents to be linked into vast, giga-scale AI super-factories.Related Stories

Industry Impact and Adoption

The impact of these networking innovations is already being felt in the industry:

- CoreWeave, a cloud services provider, will be the first company to deploy Spectrum-XGS Ethernet in its data centers

2

. - NVIDIA Quantum infrastructure connects the majority of the systems on the TOP500 list of the world's most powerful supercomputers, demonstrating 35% growth in just two years

1

.

Source: Wccftech

Future Implications

As AI models continue to grow in size and complexity, with some reaching trillion-parameter scales, the demand for more powerful and efficient networking solutions will only increase. NVIDIA's innovations in this space are laying the groundwork for the next generation of AI infrastructure, potentially unlocking unprecedented computing power and accelerating breakthroughs across various industries

3

.The era of AI factories and giga-scale computing is just beginning, and NVIDIA's networking technologies are poised to play a crucial role in shaping this new computational landscape.

References

Summarized by

Navi

[2]

[4]

[5]

Related Stories

Nvidia Unveils Groundbreaking Silicon Photonics Technology for Next-Gen AI Data Centers

25 Aug 2025•Technology

NVIDIA's Spectrum-X Ethernet Revolutionizes AI Data Centers for Meta and Oracle

14 Oct 2025•Technology

Nvidia Unveils Revolutionary Silicon Photonics Switches for AI Data Centers

19 Mar 2025•Technology

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology