Nvidia Unveils Rubin CPX: A New GPU Optimized for Long-Context AI Inference

11 Sources

11 Sources

[1]

Nvidia unveils new GPU designed for long-context inference | TechCrunch

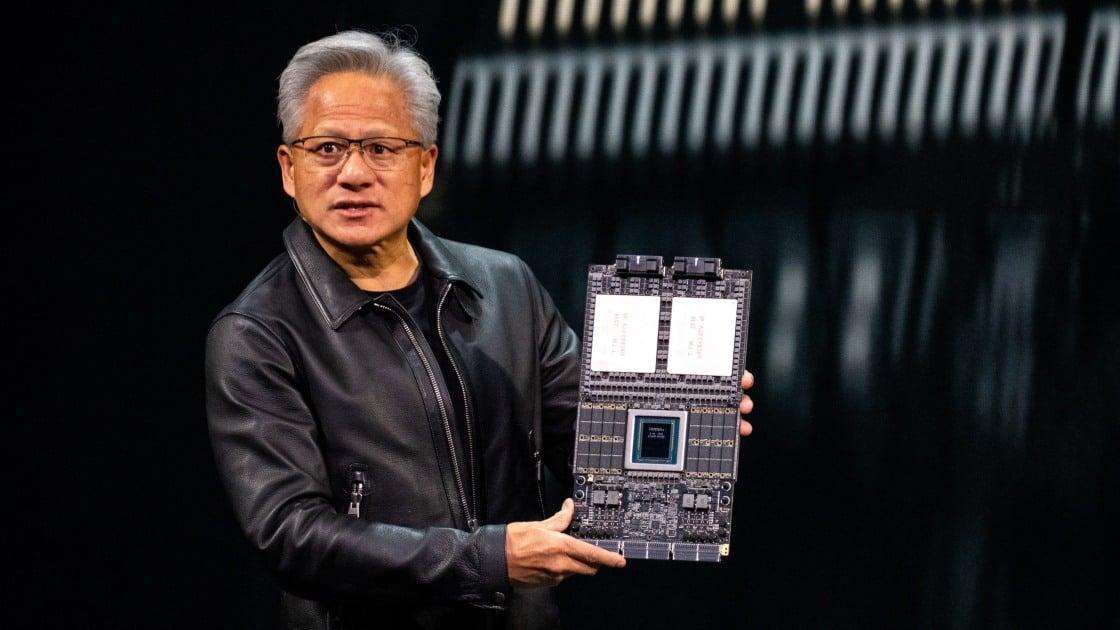

At the AI Infrastructure Summit on Tuesday, Nvidia announced a new GPU called the Rubin CPX, designed for context windows larger than 1 million tokens. Part of the chip giant's forthcoming Rubin series, the CPX is optimized for processing large sequences of context and is meant to be used as part of a broader "disaggregated inference" infrastructure approach. For users, the result will be better performance on long-context tasks like video generation or software development. Nvidia's relentless development cycle has resulted in enormous profits for the company, which brought in $41.1 billion in data center sales in its most recent quarter. The Rubin CPX is slated to be available at the end of 2026.

[2]

Nvidia Rubin CPX forms one half of new, "disaggregated" AI inference architecture -- approach splits work between compute- and bandwidth-optimized chips for best performance

Nvidia's "disaggregated" inference strategy will combine HBM-equipped Rubin GPUs with new Rubin CPX chips. Nvidia has announced its new Rubin CPX GPU today, a "purpose-built GPU designed to meet the demands of long-context AI workloads." The Rubin CPX GPU, not to be confused with a plain Rubin GPU, is an AI accelerator/GPU focused on maximizing the inference performance of the upcoming Vera Rubin NVL144 CPX rack. As AI workloads evolve, the computing architectures designed to power them are evolving in tandem. Nvidia's new strategy for boosting inference, termed disaggregated inference, relies on multiple distinct types of GPUs working in tandem to reach peak performance. Compute-focused GPUs will handle what it calls the "context phase," while different chips focused on memory bandwidth will handle the throughput-intensive "generation phase." The company explains that cutting-edge AI workloads involving multi-step reasoning and persistent memory, like AI video generation or agentic AI, benefit from the availability of huge amounts of context information. Inference for these large AI models has become the new frontier for AI hardware development, as opposed to training those models. To this end, Rubin CPX GPU is designed to be a workhorse for the compute-intensive context phase of disaggregated inference (more on that below), while the standard Rubin GPU can handle the more memory-bandwidth-limited generation phase. Rubin CPX is good for 30 petaFLOPs of raw compute performance on the company's new NVFP4 data type, and it has 128 GB of GDDR7 memory. For reference, the standard Rubin GPU will be able to reach 50 PFLOPs of FP4 compute and is paired with 288 GB of HBM4 memory. Early renders of the Rubin CPX GPU, such as the one above, appear to feature a single-die GPU design. The Rubin GPU will be a dual-die chiplet design, and as pointed out by ComputerBase, one-half of a standard Rubin would output 25 PFLOPs FP4; this leads some to speculate that Rubin CPX is a single, hyper-optimized slice of a full-fat Rubin GPU. The choice to include GDDR7 on the rather than HBM4 is also one of optimization. As mentioned, disaggregated inference workflows will split the inference process between the Rubin and Rubin CPX GPUs. Once the compute-optimized Rubin CPX has built the context for a task, for which the performance parameters of GDDR7 are sufficient, it will then pass the ball to a Rubin GPU for the generation phase, which benefits from the use of high-bandwidth memory. Rubin CPX will be available inside Nvidia's Vera Rubin NVL144 CPX rack, coming with Vera Rubin in 2026. The rack, which will contain 144 Rubin GPUs, 144 Rubin CPX GPUs, 36 Vera CPUs, 100 TB of high-speed memory, and 1.7 PB/s of memory bandwidth, is slated to produce 8 exaFLOPs NVFP4. This is 7.5x higher performance than the current-gen GB300 NVL72, and beats out the 3.6 exaFLOPs of the base Vera Rubin NVL144 without CPX. Nvidia claims that $100 million spent on AI systems with Rubin CPX could translate to $5 billion in revenue. For more about what all we know about the upcoming Vera Rubin AI platform, see our premium coverage of Nvidia's roadmap. We'll expect to see Rubin, Rubin CPX, and Vera Rubin altogether in person at Nvidia's presentation at GTC 2026 this March.

[3]

Nvidia's context-optimized Rubin CPX GPUs were inevitable

Why strap pricey, power-hungry HBM to a job that doesn't benefit from the bandwidth? Analysis Nvidia on Tuesday unveiled the Rubin CPX, a GPU designed specifically to accelerate extremely long-context AI workflows like those seen in code assistants such as Microsoft's GitHub Copilot, while simultaneously cutting back on pricey and power-hungry high-bandwidth memory (HBM). The first indication that Nvidia might be moving in this direction came when CEO Jensen Huang unveiled Dynamo during his GTC keynote in spring. The framework brought mainstream attention to the idea of disaggregated inference. As you may already be aware, inference on large language models (LLMs) can be broken into two categories: a computationally intensive compute phase and a second memory bandwidth-bound decode phase. Traditionally, both the prefill and decode have taken place on the same GPU. Disaggregated serving allows different numbers of GPUs to be assigned to each phase of the pipeline, avoiding compute or bandwidth bottlenecks as context sizes grow - and they're certainly growing quickly. Over the past few years, model context windows have leapt from a mere 4,096 tokens (think word fragments, numbers, and punctuation) on Llama 2 to as many as 10 million with Meta's Llama 4 Scout, released earlier this year. These large context windows are a bit like the model's short-term memory and dictate how many tokens it can keep track of when processing and generating a response. For the average chatbot, this is relatively small. The ChatGPT Plus plan supports a context length of about 32,000 tokens. It takes a fairly long conversation to exhaust it. For agentic workloads like code generation, however, making sense of a codebase may require juggling hundreds of thousands, if not millions, of tokens worth of code. In scenarios like this, far more raw compute capacity is required than memory bandwidth. Dedicating loads of HBM-packed GPUs to the prefill stage is expensive, power-hungry, and inefficient. Instead, Nvidia plans to reserve its HBM-equipped GPUs for decode and is introducing a new GPU, the Rubin CPX, which uses slower, cheaper, but more power-frugal GDDR7 memory instead. The result is a configuration that enables you to do the same amount of work using far less expensive hardware. According to Nvidia, each Rubin CPX accelerator will be capable of delivering 30 petaFLOPS of NVFP4 compute (it's unclear whether that figure assumes sparsity), and sport 128 GB of GDDR7 memory with both hardware encode and decode functionality intact. GDDR7 is a fraction of the speed of HBM. For example, the GDDR7-based RTX Pro 6000 has 96 GB of memory, but it maxes out at between 1.6-1.7 TBps. Compare that to a B300 SXM module, which has 180 GB of HBM3E and can deliver 4 TBps of bandwidth to each of its two Blackwell GPU dies. While Nvidia is touting NVFP4 performance, key-value (KV) caches have traditionally stored context at BF16 to preserve model accuracy, so actual prefill performance will likely depend on at what precision the key and value caches are stored. We're told the chip will also deliver a 3x acceleration to attention, a key mechanism in LLM inference, compared to its GB300 superchips. For comparison, the version of Rubin revealed at GTC will feature a pair of reticle-sized GPU dies on a single SXM module that'll deliver a combined 50 petaFLOPS of NVFP4, 288 GB of HBM4 with 13 TBps of memory bandwidth. Nvidia hasn't said how these chips will be integrated into its rack-scale NVL systems, but we do know that the GPU giant will offer a CPX version of that rack with two Vera CPUs, and 16 GPUs, eight Rubin (HBM) and eight Rubin CPX (GDDR7) per compute tray. In total, Nvidia's NVL144 CPX racks will feature 36 CPU sockets and 288 GPUs per rack. It's not immediately obvious how Nvidia will integrate these CPX GPUs into its system, though the NVL144 naming suggests Nvidia won't use its 1.8 TBps NVLink-C2C interconnect, and will instead utilize PCIe 6.0. Model context has become something of a new battleground for infrastructure and software vendors over the past year as models have become more advanced. In addition to disaggregated serving with frameworks like Nvidia Dynamo or llm-d, the idea of prompt caching and KV cache offload has also been gaining speed. The idea behind these is rather than recomputing KV caches every time, computation outputs from the prefill phase are cached to system memory. This way only tokens that haven't already been processed yet have to be computed. The developers of LMCache, which provides KV cache offload and caching functionality for popular model runners like vLLM, claim the approach can cut the time to first token by as much as 10x. This approach can be tiered with CXL memory expansion modules, in-memory databases such as Redis, or even storage arrays, so that when a user walks away from a chat or AI coding session, the KV cache can be saved for future use. Even when GPU and system memory have been exhausted, the KV caches only have to be retrieved from slower CXL memory or storage, but don't have to be recomputed. Enfabrica CEO Rochan Sankar, whose company has developed a sort of memory area network aimed at addressing the model context problem, likens this hierarchy to short, medium, and long-term memory. As we mentioned earlier, larger contexts put a bigger computational burden on accelerators, but they also require more memory. DeepSeek's latest model will use roughly 104 GB or 208 GB of memory for every 128,000-token sequence, depending on whether you store them at FP8 or BF16. That means to support just ten simultaneous requests with a 128K context length, you'd need 1-2 TB of memory. This is one of the challenges Enfabrica hopes will drive adoption of its Emfasys CXL memory appliances, each of which can expose up to 18 TB of memory as an RDMA target for GPU systems to access. The Pliops XDP LightningAI platform is similar in concept using RDMA to sidestep compatibility issues with CXL and SSDs rather than DRAM. Using NAND flash is an interesting choice. On the one hand, it's substantially less expensive. On the other, KV caching is an inherently write-intensive operation, which could be problematic unless you happen to have hoarded tons of Optane SSDs. ®

[4]

Nvidia unveils AI chips for video, software generation

Sept 9 (Reuters) - Nvidia (NVDA.O), opens new tab said on Tuesday it would launch a new artificial intelligence chip by the end of next year, designed to handle complex functions such as creating videos and software. The chips, dubbed "Rubin CPX", will be built on Nvidia's next-generation Rubin architecture -- the successor to its latest "Blackwell" technology that marked the company's foray into providing larger processing systems. As AI systems grow more sophisticated, tackling data-heavy tasks such as "vibe coding" or AI-assisted code generation and video generation, the industry's processing needs are intensifying. AI models can take up to 1 million tokens to process an hour of video content -- a challenging feat for traditional GPUs, the company said. Tokens refer to the units of data processed by an AI model. To remedy this, Nvidia will integrate various steps of the drawn-out processing sequence such as video decoding, encoding, and inference -- when AI models produce an output -- together into its new chip. Investing $100 million in these new systems could help generate $5 billion in token revenue, the company said, as Wall Street increasingly focuses on the return from pouring hundreds of billions of dollars into AI hardware. The race to develop the most sophisticated AI systems has made Nvidia the world's most valuable company, commanding a dominant share of the AI chip market with its pricey, top-of-the-line processors. Reporting by Arsheeya Bajwa in Bengaluru; Editing by Shilpi Majumdar Our Standards: The Thomson Reuters Trust Principles., opens new tab

[5]

Nvidia unveils Rubin CPX GPU with 128GB memory for enterprise AI workloads

Shipments planned for late 2026 with Rubin Ultra and Feynman already on roadmap Nvidia has announced a brand new GPU built on the Rubin architecture and designed for long-context AI workloads. Rubin CPX, as it's known, includes 128GB of GDDR7 memory, making it the company's first GPU at that capacity. There were rumors of a 128GB RTX gaming card, but this is 100% not that. This GPU is a compute engine aimed at inference in areas such as software development, research, and high-definition video generation. It will not be running Metal Gear Solid Delta: Snake Eater any time soon. The GPU delivers up to 30 petaFLOPs of NVFP4 compute and integrates hardware attention acceleration that Nvidia says is three times faster than the GB300 NVL72. It also incorporates four NVENC and four NVDEC units to accelerate video workflows. As part of Nvidia's broader push toward disaggregated inference, Rubin CPX is designed to handle the compute-heavy context phase, while other Rubin GPUs and Vera CPUs address generation tasks. By concentrating Rubin CPX on context processing tasks, Nvidia aims to improve throughput while lowering high-value inference deployment costs. Nvidia's Dynamo software will manage things behind the scenes, handing low-latency cache transfers and routing across components. The company's largest deployment model is the Vera Rubin NVL144 CPX rack. Each unit integrates 144 Rubin CPX GPUs, 144 Rubin GPUs, and 36 Vera CPUs. Together they deliver 8 exaFLOPs of NVFP4 compute, 100TB of high-speed memory, and 1.7PB/s of memory bandwidth. Quantum-X800 InfiniBand or Spectrum-X Ethernet with ConnectX-9 SuperNICs provide the connectivity. Shipments of Rubin CPX and the NVL144 CPX racks are currently penciled in for late 2026, following the recent tape-out at TSMC. Nvidia's roadmap includes Rubin Ultra, now expected in 2027, and Feynman, slated for 2028. Those designs will extend the Rubin architecture with higher density modules, HBM4E memory, and faster networking.

[6]

Nvidia previews Rubin CPX graphics card for disaggregated inference - SiliconANGLE

Nvidia previews Rubin CPX graphics card for disaggregated inference Nvidia Corp. today previewed an upcoming chip, the Rubin CPX, that will power artificial intelligence appliances with 8 exaflops of performance. AI inference involves two main steps. First, an AI model analyzes the information on which it will draw to answer the user's prompt. Once the analysis is complete, the algorithm generates its prompt response one token at a time. Today, the two tasks are usually done using the same hardware. Nvidia plans to take a different approach with its future AI systems. Instead of performing both steps of the inference workflow using the same graphics card, it plans to assign each step to a different chip. The company calls this approach disaggregated inference. Nvidia's upcoming Rubin CPX chip is optimized for the initial, so-called context phase of the two-step inference workflow. The company will use it to power a rack-scale system called the Vera Rubin NVL144 CPX (pictured.) Each appliance will combine 144 Rubin CPX chips with 144 Rubin GPUs, upcoming processors optimized for both phases of the inference workflow. The accelerators will be supported by 36 central processing units. Nvidia says the upcoming system will provide 8 exaflops of computing capacity. One exaflop corresponds to a quintillion, or a million trillion, computing operations per second. That's more than seven times the performance of the top-end GB300 NVL72 appliances currently sold by Nvidia. Under the hood, the Rubin CPX is based on a monolithic die design with 128 gigabytes of integrated GDDR7 memory. Nvidia also included components optimized to run the attention mechanism of large language models. An LLM's attention mechanism enables it to identify and prioritize the most important parts of the text snippet it's processing. According to Nvidia, the Rubin CPX can perform the task three times faster than its current-generation silicon. "We've tripled down on the attention processing," said Ian Buck, Nvidia's vice president of hyperscale and high-performance computing. The executive detailed that video processing workloads will receive a speed boost as well. The Rubin CPX includes hardware-level support for video encoding and decoding. That's the process of compressing a clip before it's transmitted over the network to save bandwidth and then restoring the original file. According to Nvidia, the Rubin CPX will enable AI models to process prompts with one million tokens' worth of data. That corresponds to tens of thousands of lines of code or one hour of video. In many cases, increasing the amount of data an AI model can consider while generating a prompt response boosts its output quality.

[7]

NVIDIA Launches Rubin CPX GPU for Million-Token AI Workloads

The semiconductor giant also introduced its AI Factory reference designs along with MLPerf Inference v5.1 results showing record performance for its Blackwell Ultra GPUs. NVIDIA has introduced Rubin CPX, a new class of GPU designed to process massive AI workloads such as million-token coding and long-form video applications. The launch took place at the AI Infra Summit in Santa Clara, where the company also shared new benchmark results from its Blackwell Ultra architecture. The system is scheduled for availability at the end of 2026. According to NVIDIA, every $100 million invested in Rubin CPX infrastructure could generate up to $5 billion in token revenue. "Just as RTX revolutionised graphics and physical AI, Rubin CPX is the first CUDA GPU purpose-built for massive-context AI, where models reason across millions of tokens of knowledge at once," NVIDIA chief Jensen Huang said. What Rubin CPX Offers Rubin CPX accelerates attention mec

[8]

NVIDIA Rubin CPX GPU to feature 128GB GDDR7 memory, launches end of 2026

TL;DR: NVIDIA's Rubin CPX GPU, launching in late 2026, delivers 30 PetaFLOPS of NVFP4 compute with 128GB GDDR7 memory, optimized for massive-context AI models and long-format video processing. Integrated in the Vera Rubin NVL144 CPX platform, it offers 8 exaflops AI performance and advanced memory bandwidth for next-gen AI inference. NVIDIA has announced its upcoming Rubin CPX GPU, a new specialized accelerator from the next-gen Rubin family of AI chips, made specifically for massive-context AI models, sporting a huge 128GB of GDDR7 memory. The new Rubin CPX GPU features 30 PetaFLOPS of NVFP4 compute performance on a single monolithic die, which marks a shift away from dual-GPU packages that NVIDIA has used on its current Blackwell and Blackwell Ultra AI GPUs, as well as the design path that the rest of the Rubin family will follow. Rubin CPX works hand in hand with NVIDIA Vera CPUs and Rubin GPUs inside of the new NVIDIA Vera Rubin NVL144 CPX platform, with the integrated NVIDIA MGX system featuring 8 exaflops of AI compute to provide 7.5x more AI performance than the new NVIDIA GB300 NVL72 AI system, as well as 100TB of fast memory and 1.7PB/sec of memory bandwidth in a single rack. Jensen Huang, founder and CEO of NVIDIA, said: "The Vera Rubin platform will mark another leap in the frontier of AI computing - introducing both the next-generation Rubin GPU and a new category of processors called CPX. Just as RTX revolutionized graphics and physical AI, Rubin CPX is the first CUDA GPU purpose-built for massive-context AI, where models reason across millions of tokens of knowledge at once". NVIDIA's new Rubin CPX enables the highest performance and token revenue for long-context processing -- far beyond what today's systems were designed to handle. This transforms AI coding assistants from simple code-generation tools into sophisticated systems that are capable of comprehending and optimizing large-scale software projects. The company explains that in order to process video, that AI models can "take up to 1 million tokens for an hour of content, pushing the limits of traditional GPU compute. Rubin CPX integrates video decoder and encoders, as well as long-context inference processing, in a single chip for unprecedented capabilities in long-format applications such as video search and high-quality generative video". "Built on the NVIDIA Rubin architecture, the Rubin CPX GPU uses a cost‑efficient, monolithic die design packed with powerful NVFP4 computing resources and is optimized to deliver extremely high performance and energy efficiency for AI inference tasks". NVIDIA's new Rubin CPX is expected to be available at the end of 2026.

[9]

Nvidia Launches New Chip To Boost AI Coding And Video Tools - NVIDIA (NASDAQ:NVDA)

On Tuesday, Nvidia NVDA unveiled the Rubin CPX (Core Partitioned X-celerator) GPU (Graphics Processing Unit), a new processor class built for massive-context artificial intelligence workloads such as million-token coding and generative video at the AI Infra Summit. The chip integrates long-context inference, video processing, and NVFP4 computing power to deliver faster, more efficient performance for next-generation AI applications. The Rubin CPX works with Nvidia Vera CPUs and Rubin GPUs inside the Vera Rubin NVL144 CPX platform, which delivers 7.5x more AI performance than prior systems, 100TB of memory, and 1.7 petabytes per second of bandwidth in a single rack. Also Read: Nvidia Stock Slips As Broadcom's OpenAI Chip Deal Signals Rising AI Rivalry Rubin CPX seeks to transform coding assistants, accelerate generative video, and unlock new opportunities for AI developers and creators by enabling large-scale context reasoning. Nvidia's stock gained 25% year-to-date, topping the NASDAQ Composite Index's 13% returns. Analysts set a consensus price forecast of $209.57 for Nvidia based on 37 ratings. The three most recent ratings came from Citigroup on August 28, 2025, JP Morgan on September 4, 2025, and Citigroup again on September 8, 2025. Their average forecast of $208.33 implies a 24.32% upside for Nvidia shares. However, Citi analyst Atif Malik cut Nvidia's price forecast to $210 from $220, warning that rising AI chip competition will weigh on future sales. He cited Broadcom's AVGO $10 billion custom chip order and growing traction with Alphabet GOOGL Google's Tensor Processing Units (TPUs) as key risks, predicting Nvidia's 2026 GPU sales could fall about 4% below prior estimates. Malik flagged Nvidia's reliance on its top two clients for 39% of quarterly revenue as another concern, while noting Broadcom's XPU momentum with partners like Meta Platforms META, OpenAI, and Oracle ORCL could further erode Nvidia's dominance. Price Action: NVDA stock is trading lower by 0.15% to $168.05 at last check on Tuesday. Read Next: Qualcomm And Valeo Broaden Collaboration To Speed Hands Off Driving Features Photo: Shutterstock NVDANVIDIA Corp$168.540.14%Stock Score Locked: Want to See it? Benzinga Rankings give you vital metrics on any stock - anytime. Reveal Full ScoreEdge RankingsMomentum87.99Growth97.86Quality92.71Value4.41Price TrendShortMediumLongOverviewAVGOBroadcom Inc$337.82-2.27%GOOGLAlphabet Inc$237.041.28%METAMeta Platforms Inc$760.791.13%ORCLOracle Corp$238.11-0.16%Market News and Data brought to you by Benzinga APIs

[10]

NVIDIA Rubin CPX GPU Is Designed For Super AI Tasks Including Million-Token Coding & GenAI, Up To 128 GB GDDR7 Memory, 30 PFLOPs of FP4

NVIDIA is unveiling new details of its next-gen Rubin AI platform, which will feature Vera CPUs alongside a new Rubin CPX chip with up to 128 GB GDDR7 memory. NVIDIA Rubin AI Platform Doubles Down On AI With Groundbreaking Speed & Efficiency, Rubin CPX GPUs Offer Up To 128 GB GDDR7 Memory NVIDIA has already distilled a lot of information about its next-gen Rubin AI platforms and even teased its next-next-gen Feynman platform. Today, NVIDIA is providing additional information on its Rubin GPUs and the respective platform, which will feature a range of new technologies such as Vera CPUs and the ConnectX-9 SuperNICs. NVIDIA today announced NVIDIA Rubin CPX, a new class of GPU purpose-built for massive-context processing. This enables AI systems to handle million-token software coding and generative video with groundbreaking speed and efficiency. Rubin CPX works hand in hand with NVIDIA Vera CPUs and Rubin GPUs inside the new NVIDIA Vera Rubin NVL144 CPX platform. This integrated NVIDIA MGX system packs 8 exaflops of AI compute to provide 7.5x more AI performance than NVIDIA GB300 NVL72 systems, as well as 100TB of fast memory and 1.7 petabytes per second of memory bandwidth in a single rack. A dedicated Rubin CPX compute tray will also be offered for customers looking to reuse existing Vera Rubin 144 systems. NVIDIA Rubin CPX enables the highest performance and token revenue for long-context processing -- far beyond what today's systems were designed to handle. This transforms AI coding assistants from simple code-generation tools into sophisticated systems that can comprehend and optimize large-scale software projects. To process video, AI models can take up to 1 million tokens for an hour of content, pushing the limits of traditional GPU compute. Rubin CPX integrates video decoder and encoders, as well as long-context inference processing, in a single chip for unprecedented capabilities in long-format applications such as video search and high-quality generative video. Built on the NVIDIA Rubin architecture, the Rubin CPX GPU uses a cost‑efficient, monolithic die design packed with powerful NVFP4 computing resources and is optimized to deliver extremely high performance and energy efficiency for AI inference tasks. via NVIDIA The brand new addition to the Rubin family is also a new class of GPUs that are purpose-built for AI tasks such as million-token software coding and GenAI. These new GPUs are said to deliver "Groundbreaking" speed and efficiency. The NVIDIA Rubin CPX chips will be accommodated alongside NVIDIA's next-gen Vera CPUs, the successor to the Grace CPU, inside the Vera Rubin NVL 144 CPX platform. This is an MGX system which offers up to 8 Exaflops of AI compute, a 7.5x uplift over the Grace Blackwell GB300 NVL72 platform. The system will also offer 100 TB of fast memory and a memory bandwidth of 1.7 Petabytes. The system offers 3x higher Attention performance than GB300 NVL72. Some features of the NVIDIA Vera Rubin CPX platform versus the Grace Blackwell platform: * 7.5x higher AI compute (8 Exaflops NVFP4) * 3.0x higher bandwidth (1.7 PB/s bandwidth) * 4.0x higher memory (150 TB in GDDR7) Talking about each chip, the NVIDIA Rubin CPX GPU will offer 30 PFLOPs of NVFP4 AI compute power & pack up to 128 GB of GDDR7 memory. Now, GDDR7 memory on a data center platform is an interesting choice. NVIDIA says that they have chosen GDDR7 instead of HBM for Rubin CPX due to its cost-efficient nature. These also come with 4x the NVENC and NVDNC capabilities. These expanded video capabilities will help a lot in GenAI tasks. NVIDIA expects the availability of the first Rubin CPX systems by the end of 2026, while Vera Rubin itself is expected to enter production soon, with a launch planned by GTC 2026.

[11]

NVIDIA Unveils Its Newest 'Rubin CPX' AI GPUs, Featuring 128 GB GDDR7 Memory & Targeted Towards High-Value Inference Workloads

NVIDIA has surprisingly unveiled a rather 'new class' of AI GPUs, featuring the Rubin CPX AI chip that offers immense inferencing power when combined with a rack-scale cluster. NVIDIA's Rubin CPX GPU Will Be Available In a Rack-Scale Configuration, Scaling To new Performance Levels Team Green has realized that AI inferencing is probably the next place to focus on when it comes to computing capabilities, and the firm has now announced a new class of AI chips under the 'CFX' lineup, with initial debut coming with the Rubin series. Announced at the AI Infra Summit, Team Green unveiled the Rubin CPX GPU, which is targeted towards long-context AI, and more importantly, will co-exist alongside Rubin GPUs and Vera CPUs. NVIDIA claims that the chip will bring in a 'revolution' when it comes to performing AI inference efficiently. In terms of specifications, the Rubin CPX features 30 petaFLOPs of NVFP4 compute, 128 GB of GDDR7 memory, and will feature in the 'exclusive' NVIDIA Vera Rubin NVL144 CPX rack, which will integrate 144 Rubin CPX GPUs, 144 Rubin GPUs, and 36 Vera CPUs to deliver eight exaFLOPs of NVFP4 compute. This figure alone is 7.5x times higher than Blackwell Ultra, and with technologies such as Spectrum-X Ethernet, NVIDIA plans to deliver a whopping million-token context AI inference workloads, scaling to new levels of performance. The platform is claimed to deliver " 30x to 50x return on investment", and the Vera Rubin NVL144 CPX rack will break the computing barriers present in "building the next generation of generative AI applications". Rubin CPX will also be available in other configurations as well, but they are yet to be announced, however, the chip is seen as a relatively low-cost solution, considering the integration of GDDR7 memory, rather than HBM. Team Green is covering all corners of the AI industry, leaving competitors little room to outpace them. NVIDIA has now swiftly transitioned towards focusing on inferencing, and with next-gen Rubin AI lineup dropping next year, we can see a huge leap in computing capabilities.

Share

Share

Copy Link

Nvidia announces the Rubin CPX, a GPU designed for long-context AI workloads, featuring 128GB of GDDR7 memory and 30 petaFLOPs of NVFP4 compute power. This new chip is part of Nvidia's 'disaggregated inference' strategy, aimed at improving AI performance for tasks like video generation and software development.

Nvidia Introduces Rubin CPX: A New Era in AI Inference

Nvidia, the leading GPU manufacturer, has unveiled its latest innovation in AI hardware: the Rubin CPX GPU. Announced at the AI Infrastructure Summit, this new chip is specifically designed to handle long-context AI workloads, marking a significant advancement in the field of artificial intelligence processing

1

.

Source: Benzinga

Technical Specifications and Performance

The Rubin CPX boasts impressive specifications:

- 30 petaFLOPs of NVFP4 compute power

- 128GB of GDDR7 memory

- Hardware-accelerated attention mechanism, 3x faster than the GB300 NVL72

- Four NVENC and four NVDEC units for video acceleration

2

5

Source: AIM

Disaggregated Inference: A New Approach

Nvidia's Rubin CPX is part of a broader strategy called 'disaggregated inference'. This approach splits AI workloads into two phases:

- Context phase: Handled by compute-optimized GPUs like the Rubin CPX

- Generation phase: Managed by memory bandwidth-optimized GPUs like the standard Rubin

2

This strategy aims to improve efficiency and performance for AI tasks requiring extensive context processing, such as video generation and software development

3

.Related Stories

Applications and Industry Impact

The Rubin CPX is designed to excel in scenarios where AI models need to process massive amounts of context:

- Video generation: Processing up to 1 million tokens for an hour of video content

- Code generation: Handling large codebases for AI-assisted programming

- Research and high-definition video workflows

4

Nvidia claims that a $100 million investment in systems using Rubin CPX could potentially generate $5 billion in token revenue, highlighting the significant economic impact of this technology

4

.Deployment and Future Roadmap

The Rubin CPX will be available as part of Nvidia's Vera Rubin NVL144 CPX rack, which includes:

- 144 Rubin CPX GPUs

- 144 standard Rubin GPUs

- 36 Vera CPUs

- 100TB of high-speed memory

- 1.7PB/s of memory bandwidth

5

The entire system is capable of delivering 8 exaFLOPs of NVFP4 compute power. Shipments are expected to begin in late 2026

1

5

.

Source: The Register

Looking ahead, Nvidia's roadmap includes:

- Rubin Ultra: Expected in 2027

- Feynman: Slated for 2028

These future iterations promise even higher density modules, HBM4E memory, and faster networking capabilities

5

.References

Summarized by

Navi

[3]

Related Stories

Nvidia Unveils Vera Rubin Superchip: Six-Trillion Transistor AI Platform Set for 2026 Production

29 Oct 2025•Technology

NVIDIA Unveils Next-Gen AI Powerhouses: Rubin and Rubin Ultra GPUs with Vera CPUs

19 Mar 2025•Technology

NVIDIA Unveils Roadmap for Next-Gen AI GPUs: Blackwell Ultra and Vera Rubin

28 Feb 2025•Technology

Recent Highlights

1

SpaceX acquires xAI as Elon Musk bets big on 1 million satellite constellation for orbital AI

Technology

2

French Police Raid X Office as Grok Investigation Expands to Include Holocaust Denial Claims

Policy and Regulation

3

UNICEF Demands Global Crackdown on AI-Generated Child Abuse as 1.2 Million Kids Victimized

Policy and Regulation