OpenAI's Bold Move: Integrating ChatGPT into College Campuses

3 Sources

[1]

OpenAI Wants to get College Kids Hooked on AI

AI chatbots like OpenAI's ChatGPT have been shown repeatedly to provide false information, hallucinate completely made-up sources and facts, and lead people astray with their confidently wrong answers to questions. For that reason, AI tools are viewed with skepticism by many educators. So, of course, OpenAI and its competitors are targeting colleges and pushing their services on students, concerns be damned. According to the New York Times, OpenAI is in the midst of a major push to make ChatGPT a fixture on college campuses, replacing many aspects of the college experience with AI alternatives. According to the report, the company wants college students to have a "personalized AI account" as soon as they step on campus, same as how they receive a school email address. It envisions ChatGPT serving as everything from a personal tutor to a teacher's aide to a career assistant that helps students find work after graduation. Some schools are already buying in, despite the educational world initially greeting AI with distrust and outright bans. Per the Times, schools like the University of Maryland, Duke University, and California State University have all signed up for OpenAI's premium service, ChatGPT Edu, and have started to integrate the chatbot into different parts of the educational experience. It's not alone in setting its sights on higher education, either. Elon Musk's xAI offered free access to its chatbot Grok to students during exam season, and Google is currently offering its Gemini AI suite to students for free through the end of the 2025-26 academic year. But that is outside of the actual infrastructure of higher education, which is where OpenAI is attempting to operate. Universities opting to embrace AI, after initially taking hardline positions against it over fears of cheating, is unfortunate. There is already a fair amount of evidence piling up that AI is not all that beneficial if your goal is to learn and retain accurate information. 
A study published earlier this year found that reliance on AI can erode critical thinking skills. Others have similarly found that people will "offload" the more difficult cognitive work and rely on AI as a shortcut. If the idea of university is to help students learn how to think, AI undermines it. And that's before you get into the misinformation of it all. In an attempt to see how AI could serve in a focused education setting, researchers tried training different models on a patent law casebook to see how they performed when asked questions about the material. They all produced false information, hallucinated cases that did not exist, and made errors. The researchers reported that OpenAI's GPT model offered answers that were "unacceptable" and "harmful for learning" about a quarter of the time. That's not ideal. Considering that OpenAI and other companies want to get their chatbots ingrained not just in the classroom, but in every aspect of student life, there are other harms to consider, too. Reliance on AI chatbots can have a negative impact on social skills. And the simple fact that universities are investing in AI means they aren't investing in areas that would create more human interactions. A student going to see a tutor, for example, creates a social interaction that requires using emotional intelligence and establishing trust and connection, ultimately adding to a sense of community and belonging. A chatbot just spits out an answer, which may or may not be correct.

[2]

Welcome to campus, here's your ChatGPT

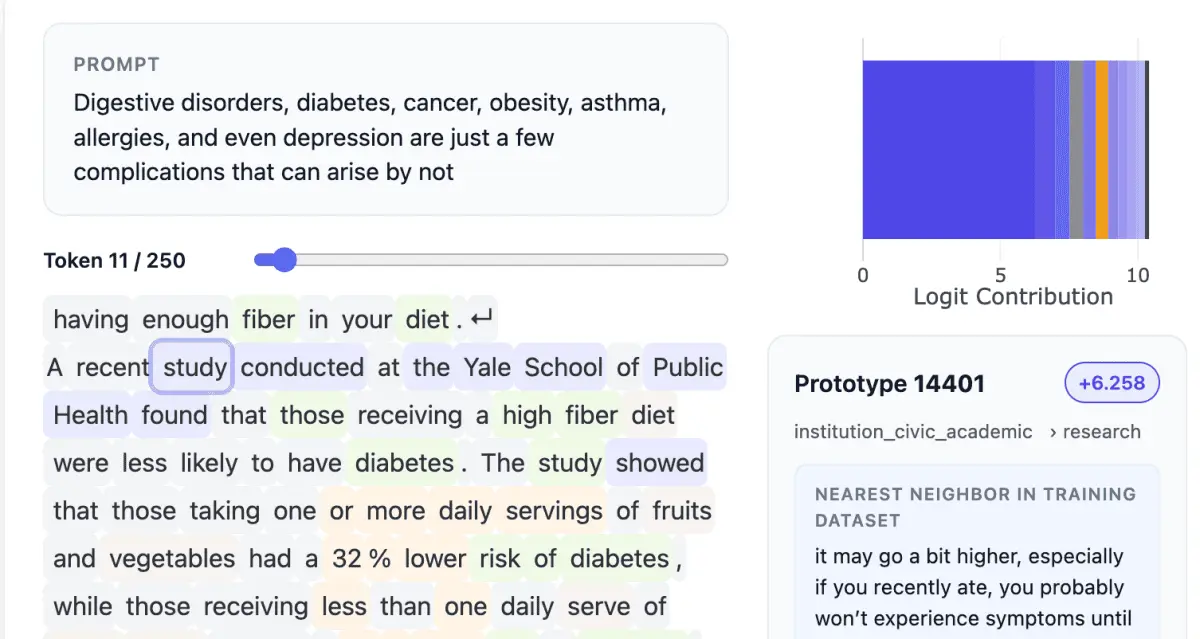

OpenAI, the maker of ChatGPT, has a plan to overhaul college education -- by embedding its artificial intelligence tools in every facet of campus life. If the company's strategy succeeds, universities would give students AI assistants to help guide and tutor them from orientation day through graduation. Professors would provide customized AI study bots for each class. Career services would offer recruiter chatbots for students to practice job interviews. And undergrads could turn on a chatbot's voice mode to be quizzed aloud before a test. OpenAI dubs its sales pitch "AI-native universities." "Our vision is that, over time, AI would become part of the core infrastructure of higher education," Leah Belsky, OpenAI's vice president of education, said in an interview. In the same way that colleges give students school email accounts, she said, soon "every student who comes to campus would have access to their personalized AI account." To spread chatbots on campuses, OpenAI is selling premium AI services to universities for faculty and student use. It is also running marketing campaigns aimed at getting students who have never used chatbots to try ChatGPT. Some universities, including the University of Maryland and California State University, are already working to make AI tools part of students' everyday experiences. In early June, Duke University began offering unlimited ChatGPT access to students, faculty and staff. The school also introduced a university platform, called DukeGPT, with AI tools developed by Duke. 
OpenAI's campaign is part of an escalating AI arms race among tech giants to win over universities and students with their chatbots. The company is following in the footsteps of rivals like Google and Microsoft that have for years pushed to get their computers and software into schools, and court students as future customers. The competition is so heated that Sam Altman, OpenAI's CEO, and Elon Musk, who founded the rival xAI, posted dueling announcements on social media this spring offering free premium AI services for college students during exam period. Then Google upped the ante, announcing free student access to its premium chatbot service "through finals 2026." OpenAI ignited the recent AI education trend. In late 2022, the company's rollout of ChatGPT, which can produce human-sounding essays and term papers, helped set off a wave of chatbot-fueled cheating. Generative AI tools like ChatGPT, which are trained on large databases of texts, also make stuff up, which can mislead students. Less than three years later, millions of college students regularly use AI chatbots as research, writing, computer programming and idea-generating aides. Now OpenAI is capitalizing on ChatGPT's popularity to promote the company's AI services to universities as the new infrastructure for college education. OpenAI's service for universities, ChatGPT Edu, offers more features, including certain privacy protections, than the company's free chatbot. ChatGPT Edu also enables faculty and staff to create custom chatbots for university use. (OpenAI offers consumers premium versions of its chatbot for a monthly fee.) OpenAI's push to AI-ify college education amounts to a national experiment on millions of students. The use of these chatbots in schools is so new that their potential long-term educational benefits, and possible side effects, are not yet established. 
A few early studies have found that outsourcing tasks like research and writing to chatbots can diminish skills like critical thinking. And some critics argue that colleges going all-in on chatbots are glossing over issues like societal risks, AI labor exploitation and environmental costs. OpenAI's campus marketing effort comes as unemployment has increased among recent college graduates -- particularly in fields like software engineering, where AI is now automating some tasks previously done by humans. In hopes of boosting students' career prospects, some universities are racing to provide AI tools and training. California State University announced this year that it was making ChatGPT available to more than 460,000 students across its 23 campuses to help prepare them for "California's future AI-driven economy." Cal State said the effort would help make the school "the nation's first and largest AI-empowered university system." Some universities say they are embracing the new AI tools in part because they want their schools to help guide, and develop guardrails for, the technologies. "You're worried about the ecological concerns. You're worried about misinformation and bias," Edmund Clark, the chief information officer of California State University, said at a recent education conference in San Diego. "Well, join in. Help us shape the future." Last spring, OpenAI introduced ChatGPT Edu, its first product for universities, which offers access to the company's latest AI. Paying clients like universities also get more privacy: OpenAI says it does not use the information that students, faculty and administrators enter into ChatGPT Edu to train its AI. (The New York Times has sued OpenAI and its partner, Microsoft, over copyright infringement. Both companies have denied wrongdoing.) Last fall, OpenAI hired Belsky to oversee its education efforts. An ed tech startup veteran, she previously worked at Coursera, which offers college and professional training courses. 
She is pursuing a two-pronged strategy: marketing OpenAI's premium services to universities for a fee while advertising free ChatGPT directly to students. OpenAI also convened a panel of college students recently to help get their peers to start using the tech. Among those students are power users like Delphine Tai-Beauchamp, a computer science major at the University of California, Irvine. She has used the chatbot to explain complicated course concepts, as well as help explain coding errors and make charts diagraming the connections between ideas. "I wouldn't recommend students use AI to avoid the hard parts of learning," Tai-Beauchamp said. She did recommend students try AI as a study aid. "Ask it to explain something five different ways." Belsky said these kinds of suggestions helped the company create its first billboard campaign aimed at college students. "Can you quiz me on the muscles of the leg?" asked one ChatGPT billboard, posted this spring in Chicago. "Give me a guide for mastering this Calc 101 syllabus," another said. Belsky said OpenAI had also begun funding research into the educational effects of its chatbots. "The challenge is, how do you actually identify what are the use cases for AI in the university that are most impactful?" Belsky said during a December AI event at Cornell Tech in New York City. "And then how do you replicate those best practices across the ecosystem?" Some faculty members have already built custom chatbots for their students by uploading course materials like their lecture notes, slides, videos and quizzes into ChatGPT. Jared DeForest, the chair of environmental and plant biology at Ohio University, created his own tutoring bot, called SoilSage, which can answer students' questions based on his published research papers and science knowledge. Limiting the chatbot to trusted information sources has improved its accuracy, he said. 
"The curated chatbot allows me to control the information in there to get the product that I want at the college level," DeForest said. But even when trained on specific course materials, AI can make mistakes. In a new study -- "Can AI Hold Office Hours?" -- law school professors uploaded a patent law casebook into AI models from OpenAI, Google and Anthropic. Then they asked dozens of patent law questions based on the casebook and found that all three AI chatbots made "significant" legal errors that could be "harmful for learning." "This is a good way to lead students astray," said Jonathan S. Masur, a professor at the University of Chicago Law School and a co-author of the study. "So I think that everyone needs to take a little bit of a deep breath and slow down." OpenAI said the 250,000-word casebook used for the study was more than twice the length of text that its GPT-4o model can process at once. Anthropic said the study had limited usefulness because it did not compare the AI with human performance. Google said its model accuracy had improved since the study was conducted. Belsky said a new "memory" feature, which retains and can refer to previous interactions with a user, would help ChatGPT tailor its responses to students over time and make the AI "more valuable as you grow and learn." Privacy experts warn that this kind of tracking feature raises concerns about long-term tech company surveillance. In the same way that many students today convert their school-issued Gmail accounts into personal accounts when they graduate, Belsky envisions graduating students bringing their AI chatbots into their workplaces and using them for life. "It would be their gateway to learning -- and career life thereafter," Belsky said.

[3]

Inside OpenAI's Strategy to Make ChatGPT a College Staple

Universities Bet Big on AI: OpenAI's Ambitious Plan to Embed ChatGPT in Higher Education with ChatGPT Edu

OpenAI aims to revolutionize college education by working with universities to create a new AI-based ecosystem, with assistance for students and faculty from orientation through graduation. Campuses like the University of Maryland, California State University, and Duke offer university-managed ChatGPT Edu access with strong privacy controls. This marks a strategic shift toward positioning AI as core educational infrastructure.

OpenAI is pushing to make ChatGPT a central part of college education, from personalized AI accounts for students to AI-assisted tutoring and career guidance. This move is met with both enthusiasm and concern in the academic world.

OpenAI's Vision for AI-Native Universities

OpenAI, the company behind ChatGPT, is making a bold move to revolutionize higher education by integrating its AI tools into every aspect of campus life. The company's ambitious plan, dubbed "AI-native universities," aims to provide students with personalized AI assistants from orientation day through graduation [1][2].

Source: Analytics Insight

Leah Belsky, OpenAI's vice president of education, envisions AI becoming "part of the core infrastructure of higher education," with every student receiving access to a personalized AI account upon arrival on campus [2]. This initiative represents a significant shift in OpenAI's strategy, moving from a consumer-focused approach to targeting institutional adoption.

Universities Embracing AI

Several prominent universities have already begun implementing OpenAI's vision:

- University of Maryland and California State University are working to integrate AI tools into students' daily experiences [2].

- Duke University now offers unlimited ChatGPT access to students, faculty, and staff, alongside a custom platform called DukeGPT [2].

- California State University announced plans to make ChatGPT available to over 460,000 students across its 23 campuses, aiming to become "the nation's first and largest AI-empowered university system" [2].

ChatGPT Edu: OpenAI's Offering for Higher Education

OpenAI is promoting ChatGPT Edu, a premium service tailored for universities. This version offers enhanced features and privacy protections compared to the free chatbot [2]. Key aspects include:

- Custom chatbot creation for university-specific use

- Certain privacy safeguards for user data

- Expanded functionality for faculty and staff

The AI Arms Race in Education

OpenAI's push into higher education is part of a larger trend, with tech giants competing to dominate the educational AI market:

- Google is offering its Gemini AI suite to students for free through the 2025-26 academic year [1].

- Elon Musk's xAI provided free access to its chatbot Grok during exam season [1].

- Microsoft has long been pushing its computers and software into schools [2].

Concerns and Criticisms

Despite the enthusiasm from some institutions, the integration of AI into education has raised significant concerns:

- Misinformation and Accuracy: AI chatbots have been shown to provide false information and hallucinate non-existent sources [1].

- Impact on Learning: Studies suggest that reliance on AI can erode critical thinking skills and lead to the "offloading" of cognitive work [1].

- Social Skills: There are concerns about the negative impact on students' social skills and the loss of human interactions in education [1].

- Long-term Effects: The long-term educational benefits and potential side effects of widespread AI use in education are not yet established [2].

Source: ET

The Broader Implications

The integration of AI into higher education raises important questions about the future of learning and workforce preparation:

- Universities are racing to provide AI tools and training in response to increasing unemployment among recent graduates, particularly in fields where AI is automating tasks [2].

- Some critics argue that the rapid adoption of AI in education glosses over societal risks, labor exploitation, and environmental costs associated with these technologies [2].

As OpenAI and other tech companies continue their push into higher education, the debate over the role of AI in learning and its impact on students' skills and future prospects is likely to intensify.

Summarized by Navi
