OpenAI's Sora: Revolutionizing AI Video Generation Amid Copyright Concerns

30 Sources

[1]

The three big unanswered questions about Sora

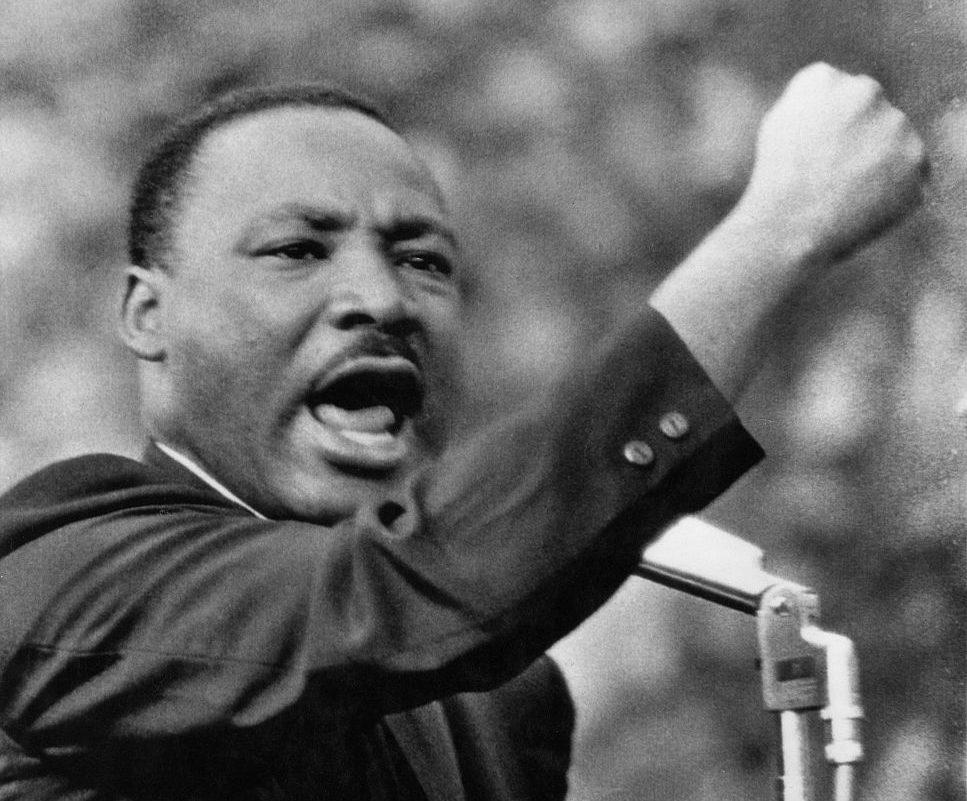

To some people who believed earnestly in OpenAI's promise to build AI that benefits all of humanity, the app is a punchline. A former OpenAI researcher who left to build an AI-for-science startup referred to Sora as an "infinite AI tiktok slop machine." That hasn't stopped it from soaring to the top spot on Apple's US App Store. After I downloaded the app, I quickly learned what types of videos are, at least currently, performing well: bodycam-style footage of police pulling over pets or various trademarked characters, including SpongeBob and Scooby Doo; deepfake memes of Martin Luther King Jr. talking about Xbox; and endless variations of Jesus Christ navigating our modern world. Just as quickly, I had a bunch of questions about what's coming next for Sora. Here's what I've learned so far. OpenAI is betting that a sizable number of people will want to spend time on an app in which you can suspend your concerns about whether what you're looking at is fake and indulge in a stream of raw AI. One reviewer put it this way: "It's comforting because you know that everything you're scrolling through isn't real, where other platforms you sometimes have to guess if it's real or fake. Here, there is no guessing, it's all AI, all the time." This may sound like hell to some. But judging by Sora's popularity, lots of people want it. So what's drawing these people in? There are two explanations. One is that Sora is a flash-in-the-pan gimmick, with people lining up to gawk at what cutting-edge AI can create now (in my experience, this is interesting for about five minutes). The second, which OpenAI is betting on, is that we're witnessing a genuine shift in what type of content can draw eyeballs, and that users will stay with Sora because it allows a level of fantastical creativity not possible in any other app. 
There are a few decisions down the pike that may shape how many people stick around: how OpenAI decides to implement ads, what limits it sets for copyrighted content (see below), and what algorithms it cooks up to decide who sees what. OpenAI is not profitable, but that's not particularly strange given how Silicon Valley operates. What is peculiar, though, is that the company is investing in a platform for generating video, which is the most energy-intensive (and therefore expensive) form of AI we have. The energy it takes dwarfs the amount required to create images or answer text questions via ChatGPT. This isn't news to OpenAI, which has joined a half-trillion-dollar project to build data centers and new power plants. But Sora -- which currently allows you to generate AI videos, for free, without limits -- raises the stakes: How much will it cost the company?

[2]

OpenAI Says It's Giving Sora Users More Control Over How Their Images Are Used

It's been less than a week since OpenAI dropped a sister app to ChatGPT, and it's been a wild, AI deepfake fever dream. Sora is the company's brand-new, invite-only social media app, named after its AI video generator. And some changes are coming that will affect how you can use it. Sora's most popular feature is called cameo, and it lets you upload a video of your likeness that you and your friends can then place into any AI-generated scene. It's how I made a video of CEO Sam Altman claiming Gemini is better than ChatGPT, and how CNET smart glasses expert Scott Stein made a scarily realistic version of himself reviewing the new Meta AI smart glasses. The advanced model's capabilities have many people worried about the possibility of misinformation and copyright infringement. When you sign up for Sora, you can decide to opt out of letting other people use your likeness. And bigger copyright holders, like movie and game studios, at first needed to opt out if they didn't want OpenAI's video model to be able to create clips using their copyrighted characters and imagery. That simply isn't how copyright law works, Robert Rosenberg, an intellectual property lawyer at Moses and Singer LLP, told me in an interview. "The law has been around a long time that copyright attaches to works from the moment that they're created," Rosenberg said. "For [OpenAI] to say you need to opt out, I think, was a complete non-starter for the copyright community." OpenAI reversed its stance late last week after Sora's launch. And the company isn't stopping there. OpenAI's head of Sora, Bill Peebles, said on Sunday that there are going to be some new cameo restrictions. You always had the option to block other people from using your likeness, but now you can also add restricted keywords or scenarios. For example, you can tell Sora not to allow people to use your face and voice in AI political commentary, Peebles said. 
The company is also working on making the white watermark more visible when you download videos. Sora's safety guardrails are still leaning toward overmoderating, as is common with new AI media tools, but the company is refining them to "reduce false negatives and catch loopholes." These changes seem good in theory, but it will all depend on how they're implemented. Sora is a bit unique: It's a social media platform and an AI video generator. Established law, like Section 230 of the Communications Decency Act, says social media companies are platforms and therefore aren't responsible for what content their users create and share on them. But we've seen entertainment giants like Disney, Universal and Warner Bros. take AI firms to court because their models let people reproduce copyrighted characters without permission. These new changes to Sora could help OpenAI get ahead of similar lawsuits. "The question is going to be how much responsibility the platforms are taking for these [name, image and likeness] uses," said Rosenberg. "It's certainly better than what they came out of the box doing, but the question is whether they're going to implement it in a way that satisfies individuals and the creative community." OpenAI is no stranger to copyright concerns. The New York Times and others have sued, alleging the AI company illegally uses their copyrighted content to feed its AI chatbot. These concerns grew when OpenAI dropped an image generator model and people quickly began to make Studio Ghibli-esque AI images. Copyright continues to be one of the most important and controversial legal issues for AI and content creators. (Disclosure: Ziff Davis, CNET's parent company, in April filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

[3]

OpenAI wasn't expecting Sora's copyright drama

When OpenAI released its new AI-generated video app Sora last week, it launched with an opt-out policy for copyright holders -- media companies would need to expressly indicate they didn't want AI-generated versions of their characters running rampant on the app. But after days of Nazi SpongeBob, criminal Pikachu, and Sora-philosophizing Rick and Morty, OpenAI CEO Sam Altman announced the company would reverse course and "let rightsholders decide how to proceed." In response to a question about why OpenAI changed its policy, Altman said that it came from speaking with stakeholders and suggested he hadn't expected the outcry. "I think the theory of what it was going to feel like to people, and then actually seeing the thing, people had different responses," Altman said. "It felt more different to images than people expected." The TikTok-like Sora app offers an endless scroll and the ability to create 10-second videos -- with audio included -- of virtually anything, including your own AI-generated self (dubbed a "cameo") and anyone who's given consent for you to use their likeness. While it tries to restrict depictions of people who aren't on the app, its text prompts have proven more than capable of generating copyrighted characters. Altman said that many rightsholders are excited, but they want "a lot more controls," adding that Sora "got very popular very quickly ... We thought we could slow down the ramp; that didn't happen." "We obviously really care about rightsholders and about people," he said. "We want to build these additional controls, but I think you will see a lot of major pieces of content be available with restrictions about what they can do and not do." Altman said he was surprised that, among the system's early adopters, people would have "in-between" feelings about allowing others to make AI-generated videos using their likenesses on Sora. 
He said he expected people would either want to make their cameo public or not, but not that there would be so much nuance, which is why the company recently introduced more restrictions. A lot of people have changed their minds about whether or not they'd like their cameos to be public, Altman said, but "they don't want their cameo to say offensive things or things that they find deeply problematic." Bill Peebles, OpenAI's head of Sora, posted on X Sunday that the team had "heard from lots of folks who want to make their cameos available to everyone but retain control over how they're used," adding that now users can specify how their cameo is used via text instructions to Sora, such as "don't put me in videos that involve political commentary" or "don't let me say this word." Peebles also said that the team is working on ways to make the Sora watermark on downloaded videos "clearer and more visible." Many people have raised concerns about the misinformation crisis that could naturally come from hyperrealistic AI-generated videos -- especially when the watermark denoting them as AI-generated isn't very large and can be removed easily, according to video tutorials proliferating online. "I also know people are already finding ways to remove it," Altman said of the watermark during the Q&A Monday. During Altman's keynote speech at DevDay, he said the company was immediately releasing a preview of Sora 2 in OpenAI's API, allowing developers to access the same model that powers the Sora app and create ultra-realistic AI-generated videos for their own purposes, ostensibly without any sort of watermark. During the Q&A with reporters, when asked how the company would implement safeguards for Sora 2 in the API, Altman did not specifically answer the question. Altman said he was surprised by the amount of demand for generating videos solely for group chats -- i.e., for sharing with just one other person or a handful of people, but not more widely than that. 
Although that's been popular, he said, "it's not a great fit for how the current app works." He positioned the launch's speed bumps as learning opportunities. "Not for much longer will we have the only good video model out there, and there's going to be a ton of videos with none of our safeguards, and that's fine, that's the way the world works," Altman said, adding, "We can use this window to get society to really understand, 'Hey, the playing field changed, we can generate almost indistinguishable video in some cases now, and you've got to be ready for that.'" Altman said he feels that people don't pay attention to OpenAI's technology when people at the company talk about it, only when they release it. "We've got to have ... this sort of technological and societal co-evolution," Altman said. "I believe that works, and I actually don't know anything else that works. There are clearly going to be challenges for society contending with this quality, and what will get much better, with the video generation. But the only way that we know of to help mitigate it is to get the world to experience it and figure out how that's going to go." It's a controversial take, especially for an AI CEO. For as long as AI has been around, it's been used in ways that disproportionately affect minorities and vulnerable populations -- ranging from wrongful arrests to AI-generated revenge porn. OpenAI has some guardrails for Sora in place, but if history -- and the last week -- is any guide, people will find ways to get around them. Watermark removers are already proliferating online, with some people using "magic eraser"-type tools and others coding their own ways to remove the watermark convincingly. 
For now, text prompts don't allow for generating a specific face without permission, but people have allegedly already gotten around that rule to generate close-enough approximations of someone to instill fear or make threats, and to make suggestive videos, including videos of women holding dildo-like objects. When asked if OpenAI's plans translate to a "move fast and break things" approach, Altman said, "Not at all," adding that user criticism of Sora right now leans toward saying that the company is "way too restrictive" and "censorship." He said the company was starting the rollout conservatively and "will find ways to allow more over time." Altman said turning a profit on Sora was "not in my top 10 concerns but ... obviously someday we have to be very profitable, and we're confident and patient that we will get there." He said right now the company is in a phase of "investing aggressively." Whatever the initial difficulties, Greg Brockman, president of OpenAI, said he was struck by the adoption curve for Sora -- and that it was even more intense than that of ChatGPT. It has stayed consistently at the top of the list of free apps in Apple's App Store. "I think this points a little bit to the future -- this thing we keep coming back to: We're going to need more compute," he said. "To some extent, that's the number-one lesson of the [Sora] launch so far." It was essentially a pitch for Stargate, OpenAI's joint venture with SoftBank and Oracle to bolster AI infrastructure in the US, starting with a $100 billion investment and adding up to $500 billion over four years. President Donald Trump has championed the venture, and OpenAI has announced a handful of new data center sites in Texas, Ohio, and New Mexico. The energy-hungry projects have been controversial, and they're often able to run with a staff of a couple hundred people after initial construction, despite promises of large-scale job creation. But OpenAI is full speed ahead. 
On Monday, it struck a deal with chipmaker AMD that could allow OpenAI to take a 10 percent stake in the company. Hours later, in the Q&A with reporters, Altman was asked how interested the company is in building its own chip. "We are interested in the full stack of AI infrastructure," he replied. At another point, he told reporters they should "expect to hear a lot more" from OpenAI on the infrastructure stack. During the session, OpenAI executives repeatedly emphasized the lack of compute and how much it can block OpenAI and its competitors from offering services at scale. "Asking, 'How much compute do you want?' is a little bit like asking, 'How much of the workforce do you want?'" Brockman said. "The answer is you can always get more out of more." And right now, more capacity for deepfaking your friends is the latest selling point.

[4]

OpenAI IP promises ring hollow to Sora losers

Altman promises copyright holders a cut of video revenue, if he ever figures out how to make some.

OpenAI's new Sora 2 video generator has become the most popular free app in Apple's App Store since launching last week. It has also drawn ire from Hollywood studios and anyone whose characters and storylines appear in the user-generated content without their explicit permission. Now CEO Sam Altman says rightsholders will be getting greater control over how their properties are used - and may even be paid. Altman said in a recent blog post that OpenAI was making two big changes to Sora 2, which was released on Tuesday, September 30. He noted that this backtrack occurred after "taking feedback from users, rightsholders, and other interested groups." "First, we will give rightsholders more granular control over generation of characters, similar to the opt-in model for likeness but with additional controls," Altman said. The likenesses Altman mentioned seem to refer to Sora's new "cameo" feature, which allows people to grant permission for others to use their face and voice in Sora videos. According to OpenAI's help page, users who want to be featured in cameos have to record video and audio of themselves for Sora to ingest. Users who choose to hand their likeness over to OpenAI can restrict the use of their appearance to only their own videos, or to those made by approved people, mutual followers, or anyone on the app. "We are hearing from a lot of rightsholders who are very excited for this new kind of 'interactive fan fiction' and think this new kind of engagement will accrue a lot of value to them, but want the ability to specify how their characters can be used," Altman wrote. "We want to apply the same standard towards everyone, and let rightsholders decide how to proceed." 
Given that this is generative AI, and no one really knows how the black box makes its decisions, Altman admitted that "there may be some edge cases of generations that get through that shouldn't." The OpenAI CEO didn't specify exactly how these new features would work with copyrighted material - for example, if copyright holders would be able to grant limited use rights, how permission would be requested and granted, nor how rights would be enforced in the case of complicated copyright chains of ownership. Altman also didn't mention reports that all copyright holders would be required to opt out of having Sora use their data. Then there's the question of whether Sora would use copyrighted material for training, even if rightsholders banned their material from being used in the final output. The devil is in the details, and a lot of details have simply not been disclosed. Given these omissions, Altman's blog post feels a lot like an attempt to squeeze the words "opt-in" and "rightsholders" into the same sentence without actually connecting the two. We reached out to OpenAI to get an explanation of whether IP holders would still have to formally opt out of having their properties used by Sora, but didn't get an answer by press time. A lot of Hollywood power players are unhappy about the fact that their properties can now be - and reportedly have been - spun into AI videos doing things they'd never allow if they were in control. According to reports from the days following Sora 2's release, Disney has opted out of having its intellectual property appear in Sora 2. Talent agency WME, which represents a number of film, TV, music, and sports A-listers, has opted all of its clients out of Sora 2 as well. In an effort to stem the tide, Altman also floated the idea of revenue sharing for IP holders who let their content be used by Sora - although, again, with very few details. 
"We are going to have to somehow make money for video generation," Altman said, saying that users were creating far more videos than the AI giant expected. The company has yet to turn a profit, but once it does, IP rightsholders are going to get a slice, he said. "The exact model will take some trial and error to figure out, but we plan to start very soon," Altman added. "Our hope is that the new kind of engagement is even more valuable than the revenue share, but of course we want both to be valuable." Overall, the approach seems akin to YouTube's in its early years - let people post a flood of content, copyright be damned, then sort out the mechanisms for payment and control once the horse is out of the barn. But given how little profit the AI industry is actually making as a whole, that revenue sharing promise rings particularly hollow. At least when Google bought YouTube, it had its search advertising business humming along, and one could theoretically see how YouTube would slot into its ad platform and make money someday. OpenAI has no such cash cow yet.

[5]

What the Arrival of A.I. Video Generators Like Sora Means for Us

Brian X. Chen is The Times's lead consumer technology writer and the author of Tech Fix, a column about the tech we use. This month, OpenAI, the maker of the popular ChatGPT chatbot, graced the internet with a technology that most of us probably weren't ready for. The company released an app called Sora, which lets users instantly generate realistic-looking videos with artificial intelligence by typing a simple description, such as "police bodycam footage of a dog being arrested for stealing rib-eye at Costco." Sora, a free app on iPhones, has been as entertaining as it has been disturbing. Since its release, lots of early adopters have posted videos for fun, like phony cellphone footage of a raccoon on an airplane or fights between Hollywood celebrities in the style of Japanese anime. (I, for one, enjoyed fabricating videos of a cat floating to heaven and a dog climbing rocks at a bouldering gym.) Yet others have used the tool for more nefarious purposes, like spreading disinformation, including fake security footage of crimes that never happened. The arrival of Sora, along with similar A.I.-powered video generators released by Meta and Google this year, has major implications. The tech could represent the end of visual fact -- the idea that video could serve as an objective record of reality -- as we know it. Society as a whole will have to treat videos with as much skepticism as people already do words. In the past, consumers had more confidence that pictures were real ("Pics or it didn't happen!"), and when images became easy to fake, video, which required much more skill to manipulate, became a standard tool for proving legitimacy. Now that's out the door. 
"Our brains are powerfully wired to believe what we see, but we can and must learn to pause and think now about whether a video, and really any media, is something that happened in the real world," said Ren Ng, a computer science professor at the University of California, Berkeley, who teaches courses on computational photography. Sora, which became the most downloaded free app in Apple's App Store this week, has caused upheaval in Hollywood, with studios expressing concern that videos generated with Sora have already infringed on copyrights of various films, shows and characters. Sam Altman, the chief executive of OpenAI, said in a statement that the company was collecting feedback and would soon give copyright holders control over generation of characters and a path to making money from the service. (The New York Times has sued OpenAI and its partner, Microsoft, claiming copyright infringement of news content related to A.I. systems. The two companies have denied those claims.) So how does Sora work, and what does this all mean for you, the consumer? Here's what to know.

How do people use Sora?

While anyone can download the Sora app for free, the service is currently invitation-only, meaning people can use the video generator only by receiving an invite code from another Sora user. Plenty of people have been sharing codes on sites and apps like Reddit and Discord. Once users register, the app looks similar to short-form video apps like TikTok and Instagram's Reels. Users can create a video by typing a prompt, such as "a fight between Biggie and Tupac in the style of the anime 'Demon Slayer.'" (Before Mr. Altman announced that OpenAI would give copyright holders more control over how their intellectual property was used on the service, OpenAI initially required them to opt out of having their likeness and brands used on the service, so deceased people became easy targets for experimentation.) Users can also upload a real photo and generate a video with it. 
After the video generates in about a minute, they can post it to a feed inside the app, or they can download the video and share it with friends or post it on other apps such as TikTok and Instagram. When Sora arrived this month, it stood out because videos generated with the service looked much more real than those made with similar services including Veo 3 from Google, a tool built into the Gemini chatbot, and Vibes, which is part of the Meta AI app.

What does this mean for me?

The upshot is that any video you see on an app that involves scrolling through short videos, such as TikTok, Instagram's Reels, YouTube Shorts and Snapchat, now has a high likelihood of being fake. Sora signifies an inflection point in the era of A.I. fakery. Consumers can expect copycats to emerge in the coming months, including from bad actors offering A.I. video generators that can be used with no restrictions. "Nobody will be willing to accept videos as proof of anything anymore," said Lucas Hansen, a founder of CivAI, a nonprofit that educates people about A.I.'s abilities.

What problems should I look out for?

OpenAI has restrictions in place to prevent people from abusing Sora by generating videos with sexual imagery, malicious health advice and terrorist propaganda. However, after an hour of testing the service, I generated some videos that could be concerning:

* Fake dashcam footage that can be used for insurance fraud: I asked Sora to generate dashcam video of a Toyota Prius being hit by a large truck. After the video was generated, I was even able to change the license plate number.
* Videos with questionable health claims: Sora made a video of a woman citing nonexistent studies about deep-fried chicken being good for your health. That was not malicious, but bogus nonetheless.
* Videos defaming others: Sora generated a fake broadcast news story making disparaging comments about a person I know. 
Since Sora's release, I have also seen plenty of problematic A.I.-generated videos while scrolling through TikTok. There was one featuring phony dashcam footage of a Tesla falling off a car carrier onto a freeway, another with a fake broadcast news story about a fictional serial killer and a fabricated cellphone video of a man being escorted out of a buffet for eating too much. An OpenAI spokesman said the company had released Sora as its own app to give people a dedicated space to enjoy A.I.-generated videos and recognize that the clips were made with A.I. The company also integrated technology to make videos simple to trace back to Sora, including watermarks and data stored inside the video files that act as signatures, he said. "Our usage policies prohibit misleading others through impersonation, scams or fraud, and we take action when we detect misuse," the company said.

How do I know what's fake?

Even though videos generated with Sora include a watermark of the app's branding, some users have already realized they can crop out the watermark. Clips made with Sora also tend to be short -- up to 10 seconds long. Any video resembling the quality of a Hollywood production could be fake, because A.I. models have largely been trained with footage from TV shows and movies posted on the web, Mr. Hansen said. In my tests, videos generated with Sora occasionally produced obvious mistakes, including misspellings of restaurant names and speech that was out of sync with people's mouths. But any advice on how to spot an A.I.-generated video is destined to be short-lived because the technology is rapidly improving, said Hany Farid, a professor of computer science at the University of California, Berkeley, and a founder of GetReal Security, a company that verifies the authenticity of digital content. "Social media is a complete dumpster," said Dr. Farid, adding that one of the surest ways to avoid fake videos is to stop using apps like TikTok, Instagram and Snapchat.

[6]

Sora gives deepfakes 'a publicist and a distribution deal.' It could change the internet

Videos made with OpenAI's Sora app are flooding TikTok, Instagram Reels and other platforms, making people increasingly familiar -- and fed up -- with nearly unavoidable synthetic footage being pumped out by what amounts to an artificial intelligence slop machine. Digital safety experts say something else that is happening may be less obvious but more consequential to the future of the internet: OpenAI has essentially rebranded deepfakes as a light-hearted plaything and recommendation engines are loving it. As the videos race across millions of people's feeds, perceptions of the truth are quickly being reshaped, and soon, perhaps, so will the basic norms of being online. "It's as if deepfakes got a publicist and a distribution deal," said Daisy Soderberg-Rivkin, a former trust and safety manager at TikTok. "It's an amplification of something that has been scary for a while, but now it has a whole new platform." Aaron Rodericks, Bluesky's head of trust and safety, said the public is not ready for a collapse of the boundary between reality and fakery this drastic. "In a polarized world, it becomes effortless to create fake evidence targeting identity groups or individuals, or to scam people at scale. What used to be an inflammatory rumour -- like a fabricated story about an immigrant or a politician -- can now be rendered as believable video proof," Rodericks said. "Most people won't have the media literacy or the tools to tell the difference." NPR spoke with three former OpenAI employees, who all said they were not surprised the company would launch a social media app showcasing its latest video technology as investor pressure builds for the company to dazzle the world as it did three years ago after the release of ChatGPT. 
As with the chatbot, changes are already coming fast. OpenAI included numerous guardrails with Sora, including moderation, restrictions on scammy, violent and pornographic material, watermarks and controls over how one's likeness is used. Some of the safety rails are being gamed by users intent on finding workarounds, and in response, OpenAI is scrambling to plug the holes. One former OpenAI employee who was not authorized to speak publicly said that worrying about safety protections eroding over time is a legitimate fear. "Releasing Sora tells the world where the party is going. AI videos may be the last conquered social media frontier, and OpenAI wants to own it, but as Silicon Valley competes over it, companies will bend the rules to stay competitive, and that could be bad for society," the person said. Former TikTok manager Soderberg-Rivkin said it is just a matter of time before a developer releases a Sora-esque app with no safety rules, similar to how Elon Musk built Grok specifically as an "anti-woke" and more unrestrained answer to leading chatbots. "When there's an unregulated version with no safety rails, it will be used to generate synthetic child sexual abuse material that bypasses current detections," said Rodericks, whose employer, Bluesky, has leaned into customizable content moderation to set it apart from platforms like X, where there are fewer rules. "You'll see state-sponsored actors fabricating realistic news segments and propaganda to legitimize false narratives." A spokesperson for OpenAI declined to comment. Sora is currently the most-downloaded iPhone app, but people can only use the app with an invite code from current users. Those who have been regularly using it have already noticed how it has become more restrictive. Making videos of celebrities has become more difficult. 
Trying to replicate some of the more outlandish videos that have been created, like a fake Jeffrey Epstein on a boat heading toward an island or a replica of Sean "Diddy" Combs addressing his prison sentence, has gotten trickier. Yet other controversial prompts, like having someone be arrested or putting someone in a Nazi uniform, are still generating videos. OpenAI CEO Sam Altman wrote days after the Sora app was unveiled that rights holders are going to have more control over how their likeness is used, shifting the default approach from "opt-out" to "opt-in." Altman also wrote that Sora will eventually share revenue the app makes with rights holders. "Please expect a very high rate of change from us; it reminds me of the early days of ChatGPT," he wrote. The constant stream of AI slop filling up everyone's feeds has raised the question of whether users will tire of AI videos as a genre of content. Most major video platforms now have relatively loose policies around sharing AI videos, but will Sora trigger a backlash? Could that force social media companies to institute a crackdown or ban on AI-generated content? Not likely, said Soderberg-Rivkin, who pointed out that even if that were to happen, enforcement would run up against just how sophisticated leading AI generators have become. "If you say no AI use on a social media platform, the fact of the matter is it's getting harder and harder to detect when text, videos and images are AI, which is scary," she said. "A no-AI policy will not stop AI from sneaking in." Another former OpenAI employee who also was not authorized to speak publicly argued that releasing a deepfake AI social media platform was the right business decision, even if it contributes to the collapse of everyone's shared sense of reality. 
"We're already at the point where we can't tell what's real and what's not online, and OpenAI and other tech companies will have to solve around that," said the former OpenAI engineer, using tech lingo for finding a solution to a problem. "But that's not an argument for not trying to dominate this market. You can't stop progress. If OpenAI didn't release Sora, someone else would have." In fact, Meta is also trying to, with its recent introduction of Vibes, a platform where people can make and share short AI-generated deepfakes. In July, Google introduced Veo 3, an AI video tool. But it wasn't until OpenAI's release of the Sora app that personalized AI slop really took off. Trust and safety professionals, like Soderberg-Rivkin, say Sora will likely mark a turning point in the history of the internet, the moment when deepfakes went from a mostly one-off phenomenon to the status quo, which may make people disengage with social media more, or at least shatter faith in the integrity of what people are watching online. Disinformation experts have long warned about a concept known as "the liar's dividend," when the proliferation of deepfakes allows individuals, especially people in power, to dismiss real content as fabrications. But now, experts say, that reality is more acute than ever before. "I'm less worried about a very specific nightmare scenario where deepfakes swing an election, and I'm really more worried about a baseline erosion of trust," Soderberg-Rivkin said. "In a world where everything can be fake, and the fake stuff looks and feels real, people will stop believing everything."

[7]

OpenAI's new Sora app and other AI video tools give scams a new edge, experts warn

Why it matters: AI-generated content is quickly blurring the lines between what's real and what's not -- and scammers thrive on blurred realities.

Driving the news: OpenAI rolled out its new Sora iOS app last week, powered by the company's updated, second-generation video-creation model.
* The app is unique in that it allows users to upload photos of themselves and others to create AI-generated videos using their likenesses -- but app users need the consent of anyone who will be shown in a video.
* OpenAI CEO Sam Altman said in an update Friday that the app will also give people "more granular control over generation of characters," including specifying in what scenarios their character can be used.
* People have been quick to show off fun ways they can use the tool, with some posting videos of themselves in TV ads or being arrested.

The flip side: The number of reported impersonation scams has skyrocketed in the U.S. in recent years -- and that was before AI tools came into the picture.
* In 2024, Americans lost $2.95 billion to imposter scams where fraudsters pretended to be a known person or organization, according to the Federal Trade Commission.

Between the lines: AI voice scams -- which have a lower barrier to entry given how advanced the technology already is -- have already taken off.
* Earlier this year, scammers impersonated the voices of Secretary of State Marco Rubio, White House chief of staff Susie Wiles, and other senior officials in calls to government workers.
* Last week, a mother in Buffalo, New York, said she received a scam call in which someone pretended to be holding her son hostage and used a likeness of his voice to prove he was there.

What they're saying: "This problem is ubiquitous," Matthew Moynahan, CEO of GetReal Security, which helps customers identify deepfakes and forgeries, told Axios. "It's like air, it's going to live everywhere."

Threat level: It's easy to download and share content created using Sora outside of OpenAI's platform, and it's possible to remove the watermark indicating it's AI-generated.
* Scammers can use that capability to dupe unsuspecting people into sending money, clicking on malicious links, or making poor investment decisions, Rachel Tobac, CEO of SocialProof Security, told Axios.
* "We have to inform everyday people that we now live in a world where AI video and audio is believable," she said.

Zoom in: Tobac laid out a few scenarios where she could see Sora being abused:
* A parent could receive a video as part of an extortion scam impersonating their child.
* A threat actor hoping to keep people from voting could create a video of a long line outside a polling center or fake interviews with poll workers saying the polls are closing early.
* A nation-state could even create a fake but believable video of an attack on a major city to sow unrest and panic in the U.S.

The intrigue: Fraudsters were already impersonating company executives, and new AI video tools are only going to amplify those schemes, Rafe Pilling, director of threat intelligence at Sophos, told Axios.
* "Things have improved leaps and bounds," Pilling said. "Ultimately, [these services] will get abused, no doubt."

The other side: Meanwhile, creating realistic deepfakes with Meta AI's new tools has proven difficult for one simple reason: they don't clone people's voices.
* In Meta AI's new "Vibes" section, every video was just set to vague music and showed people and animals vibing to the tunes.
* Each one looked like the AI slop videos that have flooded users' Facebook and Instagram feeds for months.

Yes, but: Ben Nimmo, principal investigator on OpenAI's intelligence and investigations team, told reporters Monday that people are using ChatGPT three times more often to identify potential scams than adversaries are using it in their scam operations.

What to watch: The world is still only at the beginning of AI development, and experts have warned that video tools will only get better at duping everyone.
* "This is the greatest unmanaged enterprise risk I have ever seen," Moynahan said. "This is an existential problem."

[8]

Sora update: OpenAI will require copyright holders to opt out

OpenAI launched Sora, its AI video app for iOS users, last week, and it quickly shot to the top position on the Apple App Store charts -- despite the fact that the app is invite-only. Powered by the Sora 2 video model, the Sora app lets users create and share AI videos in a TikTok-like feed. If you've gained access to Sora, you've probably noticed a common thread on the platform: There's a lot of copyrighted content being used for these AI-generated videos. Mashable's tech editor said over the weekend that the Sora app feed was dominated by popular characters from SpongeBob SquarePants, Rick & Morty, and various Nintendo franchises. CNBC also reported on one popular Sora video featuring OpenAI CEO Sam Altman standing alongside Pokémon characters. "I hope Nintendo doesn't sue us," the AI-generated Altman says in the video. If you assumed OpenAI cleared the use of intellectual property like Pokémon with copyright holders like The Pokémon Company or Nintendo, you'd be wrong. An OpenAI representative told Mashable that the company is taking an opt-out approach to copyright, pointing to the company's Copyright Dispute form. This means intellectual property is fair game unless the copyright holder contacts OpenAI and opts out of the platform. In fact, an OpenAI spokesperson told Mashable that copyright holders cannot request a blanket opt-out for their IP. Instead, copyright holders must request that specific characters be blocked or flag specific videos. OpenAI appears to realize that it needs to work more with copyright holders, however. Over the weekend, users began sharing screenshots of "Content Violation" warnings. In the freewheeling days after the app's launch, users could make videos featuring all sorts of copyrighted material, but that seems to be changing fast.
Interestingly, the Wall Street Journal reported that before Sora's launch, OpenAI reached out to talent agencies and film studios to notify them about Sora and that their copyrighted works could appear in the app. In an Oct. 3 post on his personal blog, OpenAI CEO Altman told users to "expect a very high rate of change," and admitted that OpenAI would need to monetize Sora to cope with user demand. "We are hearing from a lot of rightsholders who are very excited for this new kind of 'interactive fan fiction' and think this new kind of engagement will accrue a lot of value to them, but want the ability to specify how their characters can be used (including not at all)," Altman wrote. In addition, in a post on X published on Sunday, OpenAI's Head of Sora Bill Peebles said that the company is going to put restrictions on the app's cameos feature. The cameos feature in Sora allows users to upload real video of themselves in order to enable Sora users to generate AI content with their likeness and image. According to Peebles, Sora users will be able to choose exactly how their likeness is used. Peebles used the example of users putting restrictions such as "don't put me in videos that involve political commentary" or "don't let me say this word."

[9]

OpenAI Backtracks on Copyright Policy for Sora 2 After Wild Videos Appear

Social media has been awash with wild, AI-generated videos the past few days thanks to OpenAI's newly released Sora 2, which has stormed to the top of the App Store despite being invite-only. As well as generating AI videos that come with sound, Sora 2 is essentially its own social media platform. Users have been creating videos of OpenAI CEO Sam Altman stealing content from Hayao Miyazaki of Studio Ghibli fame. But since it launched, users have also been making videos of recognizable figures like SpongeBob SquarePants, Pokémon characters, and members of The Simpsons engaged in illegal activities, or at least doing something their IP owners would not approve of. There have also been outrageous, realistic videos of celebrities like Michael Jackson and Stephen Hawking. Altman addressed this issue in a blog post over the weekend, saying that Sora 2 will give rightsholders "more granular control over generation of characters." "We are hearing from a lot of rightsholders who are very excited for this new kind of 'interactive fan fiction' and think this new kind of engagement will accrue a lot of value to them," Altman writes, "but want the ability to specify how their characters can be used (including not at all)." When Sora was first announced, The Wall Street Journal reported that OpenAI had informed Hollywood studios that, unless they explicitly opted out, their copyrighted content might appear in Sora output. But now OpenAI is switching that to opt-in while Altman tries to appease rightsholders by vaguely offering a revenue-sharing scheme. "We are going to have to somehow make money for video generation. People are generating much more than we expected per user, and a lot of videos are being generated for very small audiences," Altman says. "We are going to try sharing some of this revenue with rightsholders who want their characters generated by users. The exact model will take some trial and error to figure out, but we plan to start very soon."
Despite what Altman says about rightsholders being "very excited" about their beloved characters appearing in Sora's AI videos, there will be discontent in some boardrooms. Warner Bros. Discovery, Disney, and NBC Universal have all sued AI image generator Midjourney in recent months for exactly this type of output. Warner Bros. Discovery accuses Midjourney of allowing its subscribers to "pick iconic copyrighted characters" which are then used for "infringing images and videos, and unauthorized derivatives, with every imaginable scene featuring those characters." That sounds very similar to what Sora users have been up to this past weekend.

[10]

OpenAI promises more 'granular control' to copyright owners after Sora 2 generates videos of popular characters

Company behind the AI video app says it will work with rights holders to 'block characters from Sora at their request' OpenAI is promising to give copyright holders "more granular control" over character generation after its new app Sora 2 produced a flood of videos that depicted copyrighted characters. Sora 2, a video generator powered by artificial intelligence, was launched last week on an invite-only basis. The app allows users to generate short videos based on a text prompt. The Guardian's review of the feed of AI-generated videos last week showed copyrighted characters from shows such as SpongeBob SquarePants, South Park, Pokémon and Rick and Morty. The Wall Street Journal reported last week that before OpenAI released Sora 2, the company told talent agencies and studios that if they didn't want their copyrighted material replicated by the video generator, they would have to opt out. OpenAI told the Guardian content owners can flag copyright infringement using a "copyright disputes form", but that individual artists or studios cannot have a blanket opt-out. Varun Shetty, OpenAI's head of media partnerships, said: "We'll work with rights holders to block characters from Sora at their request and respond to takedown requests." On Saturday, OpenAI CEO Sam Altman said in a blog post that the company had been "taking feedback" from users, rights holders and other groups, and would make changes as a result. He said rights holders would be given more "granular control" over the generation of characters, similar to how people can opt in to share their own likeness in the app, but with "additional controls". "We are hearing from a lot of rightsholders who are very excited for this new kind of 'interactive fan fiction' and think this new kind of engagement will accrue a lot of value to them, but want the ability to specify how their characters can be used (including not at all)."
Altman said OpenAI would "let rightsholders decide how to proceed" and that there would be some "edge cases of generations" that get through the platform's guardrails that should not. He said the company would also "have to somehow make money" from video generation, and already the platform was seeing people generating much more than expected per user. He said this could mean payment to rights holders who grant permission for their characters to be generated. "The exact model will take some trial and error to figure out, but we plan to start very soon," Altman said. "Our hope is that the new kind of engagement is even more valuable than the revenue share, but of course we want both to be valuable." He said there would be a high rate of change, similar to the early ChatGPT days, and there would be "some good decisions and some missteps".

[11]

Sora 2 is getting tighter copyright controls and monetization features, says OpenAI CEO Sam Altman

Earlier this week OpenAI launched Sora 2, a substantial upgrade on its previous AI video generation tool, together with a new app and a TikTok-style personalized feed of video clips - and there are some changes on the way, according to OpenAI CEO Sam Altman. First, Altman says copyright holders are going to get "more granular control" over how their characters can be used in the Sora app - in other words, it may not be so easy to generate clips featuring Marvel and Disney characters in the future. "Rightsholders... are very excited for this new kind of 'interactive fan fiction' and think this new kind of engagement will accrue a lot of value to them, but want the ability to specify how their characters can be used (including not at all)," explains Altman. From OpenAI's perspective, it wants as many users and as many video clip generations as it can possibly get - and it's hoping to make the technology compelling enough that copyright holders want to get involved, while also giving them the choice to opt out. The second change that Altman talks about in his new blog post is around monetization rewards - though it seems this will be for companies whose work is included in the Sora clips, rather than the users who are creating them. According to Altman, Sora users are generating a lot more video than OpenAI expected, and so there might be some kind of pay-to-generate system introduced in the near future (there have been previous hints at getting users to pay during periods of high demand). "We are going to try sharing some of this revenue with rightsholders who want their characters generated by users," says Altman. "The exact model will take some trial and error to figure out, but we plan to start very soon." Right now, the Sora app is only available for iOS, and only in the US and Canada - and you need to have an invite to try it out. However, you can expect it to become more widely available soon, and most probably with tighter restrictions on what you can make.

[12]

Sora 2 Has a Huge Financial Problem

"People are generating much more than we expected per user, and a lot of videos are being generated for very small audiences." It didn't take long for OpenAI's text-to-video-and-audio AI generator app, Sora 2, to melt down into a messy pile of potentially copyright-infringing AI slop. Within just days, the TikTok-style app's mind-numbing feed of AI-generated content was filled with videos of Nickelodeon's SpongeBob SquarePants cooking up blue crystals in a meth lab, entire episodes of South Park, and depictions of physicist Stephen Hawking being brutalized in horrible ways. The app's prominent use of recognizable intellectual property and the likenesses of real people, combined with its sheer amount of hype, has allowed it to shoot up to the top of Apple's App Store, with Meta's competing Vibes app, which was released less than a week before Sora 2, quickly turning into a long-forgotten footnote in AI slop history. All that success creates a problem for OpenAI: how to stop Sora 2 from burning through cash as users generate countless resource-intensive AI videos. In an update posted to his personal blog, OpenAI CEO Sam Altman admitted that the company had a lot of work to do, especially when it comes to turning its AI slop generator into a moneymaker. "We are going to have to somehow make money for video generation," he said. "People are generating much more than we expected per user, and a lot of videos are being generated for very small audiences." It's a pressing issue for the firm because of the sheer amount of computing power needed to generate AI videos. Last month, researchers from the open-source AI platform Hugging Face found that the energy demands of text-to-video generators quadruple when the length of videos doubles, suggesting the power required increases quadratically, not linearly. Multiply those demands by the number of videos being generated on Sora 2, and OpenAI is likely looking at massive compute demands. 
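The Hugging Face finding can be read as a simple power law: if doubling clip length quadruples energy use, energy grows with the square of length. Below is a minimal sketch, assuming energy is proportional to clip length raised to a fixed exponent (2 for the quadratic case described above, 1 for a linear baseline). The function name `relative_energy` and its `exponent` parameter are hypothetical illustrations, not code or figures from the study or from OpenAI.

```python
def relative_energy(length_ratio: float, exponent: float = 2.0) -> float:
    """Energy of a clip relative to a baseline clip (hypothetical helper).

    Assumes energy is proportional to length**exponent:
    exponent=2.0 models the quadratic scaling reported by the researchers,
    exponent=1.0 models a linear baseline for comparison.
    """
    if length_ratio <= 0:
        raise ValueError("length_ratio must be positive")
    return length_ratio ** exponent


if __name__ == "__main__":
    # Doubling clip length: quadratic scaling gives 4x the energy, linear only 2x.
    print(relative_energy(2.0))                # 4.0
    print(relative_energy(2.0, exponent=1.0))  # 2.0
```

Under the same assumption, a clip three times as long as a baseline would need about nine times the energy, which illustrates how quickly costs can climb as users generate longer or more numerous videos.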
In other words, OpenAI's foray into slop-based social media could quickly turn into a very costly endeavor. And turning Sora 2 into a source of revenue won't be easy. It's not clear that users will actually pay to use the service -- and adding to OpenAI's woes, rightsholders could soon be asking questions about its eyebrow-raising ask-for-forgiveness-later approach to intellectual property and content moderation. The company has since slammed on the brakes, implementing guardrails on Sora 2 that were mysteriously absent on day one, as copyright lawyer Aaron Moss pointed out in a recent article. Users are running into the company's content blocks at full force, resulting in widespread frustration. As Altman's blog post indicates, the company doesn't have a fleshed-out plan on how to generate revenue. Some of the proceeds, according to the CEO, will be shared "with rightsholders who want their characters generated by users." But where those proceeds will come from -- presumably, either from advertising or users -- remains unclear. "The idea -- perhaps similar to YouTube's ad-monetization program for videos that include copyrighted material -- would give studios a financial incentive to opt in," Moss wrote in his piece. "When thousands of GPU-intensive ten-second 'South Park' clips also risk copyright lawsuits, the math gets ugly fast," he added. But if "studios become partners rather than adversaries, OpenAI can potentially offset costs while buying legal cover." Wooing rightsholders to OpenAI's side, especially now that the company has allowed for potentially copyright-infringing material to proliferate widely, is far easier said than done. Indeed, major rightsholders have already filed lawsuits against generative AI companies. Case in point, just last month, Warner Bros. Discovery sued AI text-to-image generator company Midjourney for infringement, joining Disney and NBC Universal. 
"There's a world of difference between studios collecting YouTube ad revenue on video clips they produce and control, and handing over their characters for anyone to freely manipulate in exchange for a few bucks," Moss wrote. Other netizens pointed out that copyright holders may balk at OpenAI's courting attempts. "Why on earth would any brand pay for their IP to be surfaced by a user prompt upon their requests?" one argued. "That's not even close to how any of this works." "Why would I, as a multibillion-dollar rightsholder, not just take you to the cleaners?" another user added.

[13]

The Sora videos you create could change dramatically with new update

OpenAI will allow rightsholders to decide whether they want their IP included in the AI video generator. The new social app from OpenAI for generating short-form AI videos has taken the world by storm. Just over a week after Sora's release, a new update already seems to be on the way. What's happened? Sam Altman, the CEO of OpenAI, the company behind ChatGPT, has announced new updates for the newly launched Sora on his blog. First, the update will give rightsholders, such as Disney and other companies with famous IP, more control to choose how their characters or brand can (or can't) be used in AI-generated content. Second, there will be revenue for rightsholders who allow their characters to be generated by users. Altman says Sora has to have a revenue stream, and while the exact model for how this will work is yet to be determined, this could be a good platform for rightsholders. Altman also cautions that the platform will experience a "very high rate of change" as the company tries things, makes mistakes, gathers input, and course-corrects. Why is it important? The first change, granular controls for rightsholders, is going to dramatically change what the generative AI is able to create. If a brand like Disney or Studio Ghibli decides it doesn't want its IP included in the model, users won't be able to generate videos using its characters. OpenAI has already run into trouble with Studio Ghibli and others, with well-known characters from South Park to Pokemon being generated through Sora. These changes indicate Sora is in a race to become a top social and AI-generation app, and it has already shot to the top of app charts. Why should I care? If you're already creating videos in Sora, or are keen to check it out, what you're able to generate is about to shift. We'll have to wait and see whether rightsholders are open to allowing Sora to use their IP in its AI-generated videos, or whether we'll see big names refuse to opt in.
If rightsholders are reluctant to allow their IP to be used in the videos Sora creates, the appeal of the platform could diminish. However, if OpenAI is able to convince brands to get on board with an enticing revenue model, we could see Altman's vision of "this new kind of 'interactive fan fiction'" explode in popularity. What's next? Altman confirms these updates are on the horizon and coming very soon, as the team continues to iterate on various methods and transfer lessons across all their products. The revenue-sharing model remains unspecified and will change based on feedback and experimentation.

[14]

OpenAI starts major mess in Japan as Sora cribs Nintendo, anime

Sora, OpenAI's generative video app, has only been out for a week, yet it has already opened a proverbial can of worms for the artificial intelligence organization. As users gravitate toward styles and figures from pop culture, Sora has generated a ton of media related to major anime and video game franchises. OpenAI seemingly did not implement many measures to keep rights holders' copyrighted content from being used as grist for generative AI. The mess has prompted OpenAI CEO Sam Altman to issue a statement on the guardrails Sora users can expect in the near future. Like ChatGPT, Sora takes text prompts from users to generate content -- in this case video footage. Its powers are expansive: Users can do anything from creating convincing deepfake versions of real people to copying specific visual aesthetics. One clip that's gone viral since Sora went live depicts none other than OpenAI's CEO Sam Altman grilling and slicing open a realistic Pikachu, the beloved Pokémon mascot. Another popular video prompted Sora to create a fake Cyberpunk 2077 mission. Curiously, the app spit back something that seemed to be based on an actual in-game level, with details like the voice acting and vehicle designs bearing an uncanny resemblance to its source material. Sora's capabilities are sparking much discourse on the potential dangers of misinformation or security issues inherent to convincing footage involving real-world figures. The question of how Sora is able to depict its subjects with such fidelity, and what data OpenAI used to achieve that effect, is also lingering in the air. According to the Wall Street Journal, OpenAI is asking copyright holders to opt out. Some companies evidently took OpenAI up on the offer, or at least the app has stringent blocks against generating anything that could be mistaken for a Marvel or Disney property. Japanese entertainment companies, on the other hand, seem to be treated as fair game by Sora's inner logic.
The app is capable of recreating the look and feel of anime like Attack on Titan, Dragon Ball Z, and One Piece with startling fidelity, a reality that's sparked criticism from the public. Japanese politician Akihisa Shiozaki in particular has led the charge by holding an "urgent meeting" with Japanese government officials to discuss what should be done about intellectual property rights in the country. Amid concern that technology like Sora is "devouring Japanese culture," attendees reportedly urged the government to take swift action. In a blog post, Altman acknowledged the situation vaguely by noting that OpenAI was "struck by how deep the connection between users and Japanese content is!" Altman also claimed that OpenAI did discuss the ramifications of its technology prior to its release, but having Sora in the hands of the public has given the organization more tangible feedback. Despite this move-fast-and-break-things approach, Altman is promising that rightsholders will soon be able to exert more "granular control" over relevant properties. While he didn't share specifics, the measures will extend beyond opting in or out of the app. Here's Altman: We are hearing from a lot of rightsholders who are very excited for this new kind of "interactive fan fiction" and think this new kind of engagement will accrue a lot of value to them, but want the ability to specify how their characters can be used (including not at all). We assume different people will try very different approaches and will figure out what works for them. But we want to apply the same standard towards everyone, and let rightsholders decide how to proceed (our aim of course is to make it so compelling that many people want to). There may be some edge cases of generations that get through that shouldn't, and getting our stack to work well will take some iteration.
One popular source of inspiration for users is Nintendo, the Japanese company behind many iconic video game characters. And if Nintendo is known for anything among hardcore enthusiasts, it's for its protective approach toward intellectual property. Coincidentally, AI-generated footage of Sam Altman saying "I hope Nintendo doesn't sue us" has also garnered much attention online. Nintendo recently denied claims that it was working with the Japanese government to prevent generative AI from using its IP. It did, however, reiterate its general stance on unauthorized usage of its creations. "Whether generative AI is involved or not, we will continue to take necessary actions against infringement of our intellectual property rights," Nintendo said in a social media post.

[15]

OpenAI offers more copyright control for Sora 2 videos

When OpenAI released its new video generation model Sora 2 last week, users delighted in creating hyper-realistic clips inspired by real cartoons and video games, from South Park to Pokemon. But the US tech giant is giving the companies that hold the copyright for such characters more power to put a stop to these artificial intelligence copies, boss Sam Altman said. OpenAI, which also runs ChatGPT, is facing many lawsuits over copyright infringement, including one major case with the New York Times. The issue made headlines in March when a new ChatGPT image generator unleashed a flood of AI pictures in the style of Japanese animation house Studio Ghibli. Less than a week after Sora 2 was released on October 1 -- with a TikTok-style app allowing users to insert themselves into AI-created scenes -- Altman said OpenAI would tighten its policy on copyrighted characters. "We will give rightsholders more granular control over generation of characters," he wrote in a blog post on Friday. It would be "similar to the opt-in model for likeness but with additional controls," he said. The Wall Street Journal reported in September that OpenAI would require copyright holders, such as movie studios, to opt out of having their work appear in AI videos generated by Sora 2. After the launch of the invitation-only Sora 2 app, the tool usually refused requests for videos featuring Disney or Marvel characters, some users said. However, clips showing characters from other US franchises, as well as Japanese characters from popular game and anime series, were widely shared. These included sophisticated AI clips showing Pikachu from Pokemon in various movie parodies, as well as scenarios featuring Nintendo's Super Mario and Sega's Sonic the Hedgehog. "We'd like to acknowledge the remarkable creative output of Japan -- we are struck by how deep the connection between users and Japanese content is!" Altman said.
Nintendo said in a post on X on Sunday that it had "not had any contact with the Japanese government about generative AI." "Whether generative AI is involved or not, we will continue to take necessary actions against infringement of our intellectual property rights," the game giant said. Japanese lawmaker Akihisa Shiozaki also weighed in on X, warning of "serious legal and political issues." "I would like to address this issue as soon as possible in order to protect and nurture the world-leading Japanese creators," he said.

[16]

This Trend Is Making It Even Harder to Tell When a Video Is AI-Generated

Now, people are starting to add Sora watermarks to real videos, sowing even more confusion. It's scary how realistic AI-generated videos are getting. What's even scarier, however, is how accessible the tools to create these videos are. Using something like OpenAI's Sora app, people can create hyper-realistic short-form videos of just about anything they want -- including real people, like celebrities, friends, or even themselves. OpenAI knows the risks involved with an app that makes generating realistic videos this easy. As such, the company places a watermark on any Sora generation you create via the app. That way, when you're scrolling through your social media feeds, if you see a little Sora logo with a cute cloud with eyes bouncing around, you know it's AI-generated. My immediate worry when OpenAI announced this app was that people would find a way to remove the watermark, sowing confusion across the internet. I wasn't wrong: There are already plenty of options out there for interested parties who want to make their AI slop even more realistic. But what I didn't expect was the opposite: people who want to add the Sora watermark to real videos, to make them look as if they were created with AI. I was recently scrolling -- or, perhaps, doomscrolling -- on X when I started seeing some of these videos, like this one featuring Apple executive Craig Federighi: The post says "sora is getting so good," and includes the Sora watermark, so I assumed someone made a cameo of Federighi in the app and posted it on X. To my surprise, however, the video is simply pulled from one of Apple's pre-recorded WWDC events -- one where Federighi parkours around Apple HQ. Later, I saw this clip, which also uses a Sora watermark. At first glance, you might be fooled into thinking it's an OpenAI product.
But look closer, and you can tell the clip uses real people: The shots are too perfect, without the fuzziness or glitching that you tend to see from AI video generation. This clip is simply spoofing the way Sora tends to generate multi-shot clips of people talking. (Astute viewers may also notice the watermark is a little larger and more static than the real Sora watermark.) As it turns out, the account that posted that second clip also made a tool for adding a Sora watermark to any video. They don't explain the thinking or purpose behind the tool, but it's definitely real. And even if this tool didn't exist, I'm sure it wouldn't be too hard to edit a Sora watermark into a video, especially if you weren't concerned about replicating the movement of Sora's official watermark. To be clear, people were already posting like this before the watermark tool existed. The joke is to say you made something with Sora, but post a popular or infamous clip instead -- say, Drake's Sprite ad from 15 years ago, Taylor Swift dancing at The Eras Tour, or an entire Sonic the Hedgehog movie. It's a funny meme, especially when it's obvious that the video wasn't made by Sora. But this is an important reminder to be constantly vigilant when scrolling through videos on your feeds. You have to be on the lookout both for clips that aren't real and for clips that are actually real but are being presented as AI-generated. There are a lot of implications here. Sure, it's funny to slap a Sora watermark on a viral video, but what happens when someone adds the watermark to a real video of illegal activity? "Oh, that video isn't real. Any videos you see of it without the watermark were tampered with."
At the moment, it doesn't seem like anyone has figured out how to perfectly replicate the Sora watermark, so there will be signs if someone actually tries to pass a real video off as AI. But this is still all a bit concerning, and I don't know what the solution could be. Maybe we're heading towards a future in which internet videos are simply treated as untrustworthy across the board. If you can't determine what's real or fake, why bother trying?

[17]

Excitement -- and concerns -- over OpenAI's Sora 2 and other AI video tools

Alain Sherter is a senior managing editor with CBS News. He covers business, economics, money and workplace issues for CBS MoneyWatch. The next frontier of online video further blurs the line between human- and AI-generated content. In late September, Meta CEO Mark Zuckerberg announced "Vibes," a feature that allows users to create and watch AI-generated videos. ChatGPT maker OpenAI quickly followed with the launch of Sora 2, which people can use to create videos with cameos of themselves, friends and others who grant permission. Despite only being available by invitation, the new tool promptly jumped to the top of Apple's app store. The apps are part of a burgeoning family of AI tools that make it far easier for non-experts to create sophisticated videos, including hyperrealistic or fantastical content. "You're only limited by your imagination," Hany Farid, a professor of electrical engineering and computer sciences at UC Berkeley, told CBS News. In unveiling Sora 2, for example, OpenAI showed how simple prompts such as "a man rides a horse which is on another horse" or "figure skater performs a triple axle with a cat on her head" are used to create quirky, and convincing, videos. Beyond offering a creative outlet, the tools also represent a new era for social media, with Sora 2 and Meta's "Vibes" offering a TikTok-like experience. The main difference: The videos users scroll are all AI-generated. Adam Nemeroff, an assistant provost and technology expert at Quinnipiac University, thinks Meta is planning for AI content generated through Vibes to eventually co-exist in users' feeds with human-made videos. "I would imagine that would be the case, because Meta is in the business of attention." Nemeroff also expects big tech players to eventually try to monetize AI-generated content through advertisements and brand placements.
Farid noted that, despite the enormous growth of generative AI tools like ChatGPT, Anthropic's Claude and Google's Gemini, tech companies are still refining how to churn out profits from the rapid adoption of artificial intelligence. OpenAI has said it plans to give Sora 2 users the option to "pay some amount to generate an extra video if there's too much demand relative to available compute." The emergence of AI-created videos is heightening concerns about a potential flood of low-quality "AI slop," including "deepfake" content that could be mistaken as real. Meta, for instance, allows users to cross-post "Vibes" videos on other platforms, such as Facebook Stories. "They're the kinds of things that you can kind of distract from other more reputable or better information from a quality standpoint," Nemeroff said. "But they're often popping up next to the same things in the same places." A page on OpenAI's website details some of the measures the company has taken with Sora 2 to limit the production of potentially harmful content and to help users distinguish AI content. "Every video generated with Sora includes both visible and invisible provenance signals," according to the company. OpenAI and Meta did not respond to requests for comment on what steps they are taking to ensure the apps are used safely. Experts say advancements in AI-generated videos portend major changes for the entertainment industry and other online content players. "Anybody with a keyboard and internet connection will be able to create a video of anybody saying or doing anything they want," Farid said. That shift will be messy, with movie and TV industry professionals already insisting on industry guardrails to ensure AI doesn't encroach on their livelihood. One immediate concern for the industry is that Sora 2, which lets content creators use clips of copyrighted characters, appears to put the burden of enforcing those rights on copyright holders.
"Since Sora 2's release, videos that infringe our members' films, shows and characters have proliferated on OpenAI's service and across social media," Charles Rivkin, chairman and CEO of the Motion Picture Association, said in a statement on Tuesday. "While OpenAI clarified it will 'soon' offer rightsholders more control over character generation, they must acknowledge it remains their responsibility - not rightsholders' - to prevent infringement on the Sora 2 service. OpenAI needs to take immediate and decisive action to address this issue." In another recent controversy over the use of AI, Dutch producer and comedian Eline Van der Velden sparked backlash in Hollywood after she unveiled an AI-generated actress. The Screen Actors Guild responded by saying that "creativity is, and should remain, human-centered." "I think there's a disruption coming, and there will be some destruction and some creation," Farid said. "And I think it's coming for more than just the movie and music industry -- it's coming for a lot of industries."

[18]

OpenAI offers more copyright control for Sora 2 videos

Tokyo (AFP) - When OpenAI released its new video generation model Sora 2 last week, users delighted in creating hyper-realistic clips inspired by real cartoons and video games, from South Park to Pokemon. But the US tech giant is giving more power to the companies that hold the copyright for such characters to put a stop to these artificial intelligence copies, boss Sam Altman said. OpenAI, which also runs ChatGPT, is facing many lawsuits over copyright infringements, including one major case with the New York Times. The issue made headlines in March when a new ChatGPT image generator unleashed a flood of AI pictures in the style of Japanese animation house Studio Ghibli. Less than a week after Sora 2 was released on October 1 -- with a TikTok-style app allowing users to insert themselves into AI-created scenes -- Altman said OpenAI would tighten its policy on copyrighted characters. "We will give rightsholders more granular control over generation of characters," he wrote in a blog post on Friday. It would be "similar to the opt-in model for likeness but with additional controls", he said. The Wall Street Journal reported in September that OpenAI would require copyright holders, such as movie studios, to opt out of having their work appear in AI videos generated by Sora 2. After the launch of the invitation-only Sora 2 app, the tool usually refused requests for videos featuring Disney or Marvel characters, some users said. However, clips showing characters from other US franchises, as well as Japanese characters from popular game and anime series, were widely shared. These included sophisticated AI clips showing Pikachu from Pokemon in various movie parodies, as well as scenarios featuring Nintendo's Super Mario and Sega's Sonic the Hedgehog. "We'd like to acknowledge the remarkable creative output of Japan -- we are struck by how deep the connection between users and Japanese content is!" Altman said. 
Nintendo said in a post on X on Sunday that it had "not had any contact with the Japanese government about generative AI". "Whether generative AI is involved or not, we will continue to take necessary actions against infringement of our intellectual property rights," the game giant said. Japanese lawmaker Akihisa Shiozaki also weighed in on X, warning of "serious legal and political issues". "I would like to address this issue as soon as possible in order to protect and nurture the world-leading Japanese creators," he said.

[19]

Pikachu instrumental in OpenAI copyright U-turn on Sora app?