Oracle's AI Infrastructure Expansion: AMD and Nvidia Compete for Dominance

18 Sources

[1]

AMD and Oracle partner to deploy 50,000 MI450 Instinct GPUs in new AI superclusters -- deployment of expansion set for 2026, powered by AMD's Helios rack

That's just the start, too, with the deal set to expand in 2027 "and beyond."

AMD and Oracle have inked a deal for a deployment of 50,000 next-generation MI450 Instinct professional graphics cards and Zen 6 Epyc CPUs as part of an "AI supercluster" Oracle is developing for its cloud infrastructure. It's not clear if this will work in conjunction with, or as part of, the previously announced deals between OpenAI and AMD, or Oracle and OpenAI, but it's yet another example of major AI hardware and infrastructure companies announcing enormous financial investments with one another. Nvidia has been the darling of the AI scene for the past few years, and particularly so as part of the hundreds of billions of dollars of investment announced just this year to build out new AI inferencing and training data centers around the world. But Nvidia isn't the only company with powerful AI hardware, and AMD's next-generation Instinct GPUs are, at least on paper, a fair competitor for what Nvidia has now and what it has coming next in Vera Rubin. That promise, paired with an insatiable demand for AI compute power, has led to AMD making some serious deals with some very serious AI companies, and that now includes Oracle. The new supercluster Oracle is developing will use AMD's Helios rack system, which combines AMD's next-generation Instinct MI450 graphics cards with Venice (Zen 6) Epyc CPUs, as well as AMD's next-generation advanced networking hardware, codenamed Vulcano. Designed for use in both training and inference workloads, the combination is positioned much like Nvidia's GB200 NVL72 systems, which combine Nvidia Blackwell graphics chips with Grace CPUs. It's an all-in-one package that allows companies like Oracle to quickly scale up compute performance for AI workloads.
"Through our decade-long collaboration with AMD -- from EPYC to AMD Instinct accelerators -- we're continuing to deliver the best price-performance, open, secure, and scalable cloud foundation in partnership with AMD to meet customer needs for this next era of AI," Oracle said in a statement. Although AMD's Zen 6 Epyc CPUs and MI450 graphics chips aren't ready yet - the Oracle deployment won't happen until the latter half of 2026 - AMD has already talked a big game about them. It claims they'll be hotly competitive with Nvidia, and even described it as being like the 2021 moment in consumer CPUs, where it finally eclipsed the then-king of the industry, Intel. It plans to do the same to Nvidia next year. It'll have its work cut out for it, as Nvidia's Vera Rubin GPU design should be much faster and more efficient than Blackwell if Nvidia's performance scaling of the past few generations is anything to go by. What seemingly won't be difficult for any of these companies, though, is making money from each other and making announcements about how much money they're making from each other. Just about all the top AI software and hardware firms have done deals with one another worth tens of billions of dollars in recent months. It's enough to question where all the money is going to come from, and if someone doesn't pay, is it all going to burst the bubble that grows ever larger with each announcement?

[2]

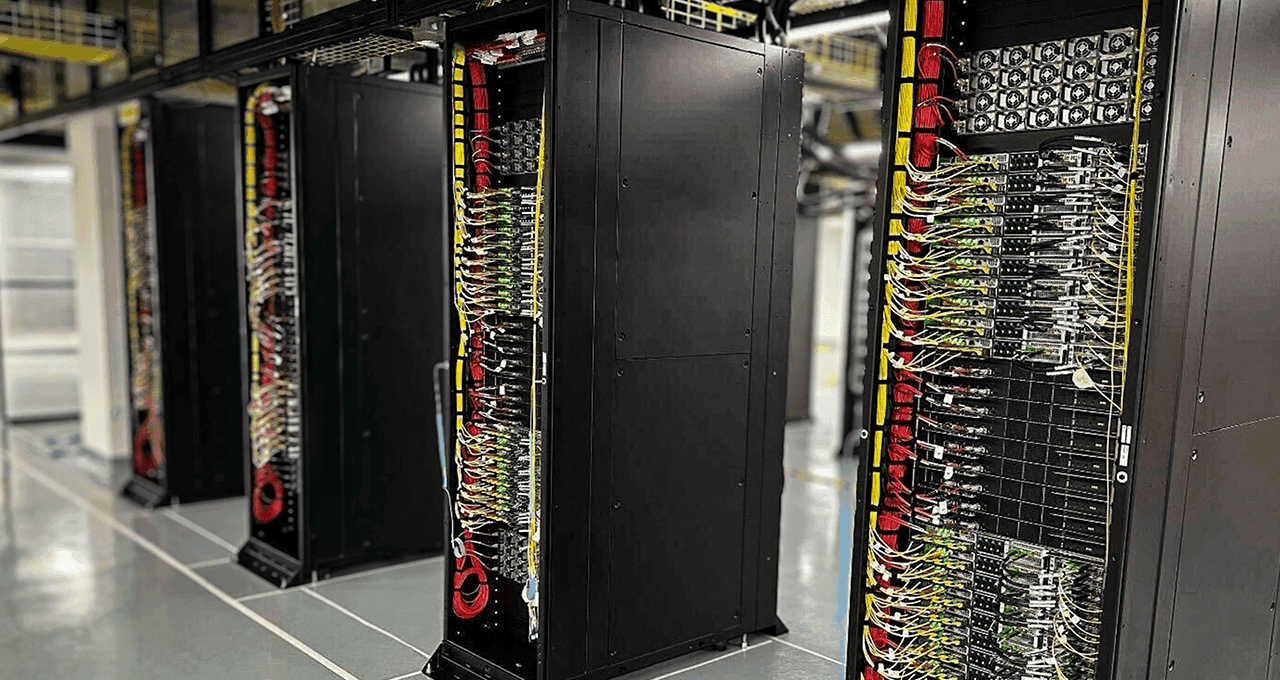

Oracle aims to bring another 18 zettaFLOPS online in 2H 2026

New clusters to feature 800,000 Nvidia Blackwell and 50,000 AMD Instinct MI450X GPUs

Oracle on Tuesday revealed it would field more than 18 zettaFLOPS worth of AI infrastructure from Nvidia and AMD by the second half of next year. This includes a cluster of 800,000 Nvidia GPUs capable of delivering up to 16 zettaFLOPS of peak AI performance -- that's sparse FP4 in case you're wondering. The cluster, part of Oracle Cloud Infrastructure's Zettascale10 offering, is a big win for Nvidia, which is furnishing not only the GPUs and rack systems, but also the networking. Stitching the GPUs together will be Nvidia's Spectrum-X Ethernet switching platform, making this the latest large-scale cluster built around the platform. If that weren't enough, Oracle also plans to offer a slew of Nvidia AI services through its cloud platform. While Nvidia counts its billions, AMD expects to see 50,000 of its MI450X-series accelerators deployed at Oracle data centers in the second half of next year, with additional deployments expected the following year. First teased at AMD's Advancing AI event in June, the MI450X will be offered in a rack-scale architecture AMD calls Helios, similar to Nvidia's NVL72. Each rack is equipped with 72 MI450X GPUs stitched together using Ultra Accelerator Link (UALink), an open alternative to Nvidia's high-speed NVLink interconnect. At OCP, we caught our first glimpse of what production Helios racks based on the new Open Rack Wide (ORW) form factor will end up looking like. In case you're wondering, the double-wide system is technically one rack as defined by the OCP spec. AMD predicts that a single Helios rack will deliver 2.9 exaFLOPS of FP4 and up to 1.4 exaFLOPS of FP8 performance, along with 31 TB of HBM4 memory good for 1.4 petabytes a second of bandwidth. It's not clear at this point whether that's dense or sparse FLOPS, but if we had to guess, it's probably the latter.
This puts it in the same performance class as Nvidia's upcoming Vera Rubin NVL144 systems, albeit with a boatload more HBM. Spread across roughly 694 racks' worth of GPUs, OCI's initial deployment of 50,000 MI450Xs comes to just over two zettaFLOPS of ultra-low precision compute. While it's fun to throw around the word zettaFLOPS, few customers will actually be able to harness all the compute Oracle is laying down. Not only would they have to lock in an entire cluster, but FP4 is also generally regarded as a storage format for AI inference, with model builders, like OpenAI, only now warming to the format. For the kinds of training jobs customers are likely to rent out a cluster of 50,000 or more GPUs for, higher precision data types like BF16 and FP8 have historically been preferred. That's not to say it's impossible to train a model natively at FP4. Nvidia recently published a paper exploring the merits of pretraining using 4-bit microscaling datatypes like NVFP4, and early findings suggest that the datatype can achieve quality levels comparable to FP8. One company likely to end up getting access to a substantial quantity of Oracle's GPU hoard is OpenAI. Both Nvidia and AMD recently signed investment deals with the AI flag bearer, predicated on large-scale deployments of their accelerators by OpenAI's partners - and Oracle happens to be its biggest. And while AMD's datacenter GPU market share is still dwarfed by Nvidia's, that's likely to change. Under a recently announced agreement, OpenAI will have the opportunity to acquire 160 million shares of the chipmaker at a penny a pop if the House of Zen can facilitate the deployment of six gigawatts of Instinct accelerators. The 50,000 MI450X cluster announced by Oracle this week appears to be the first piece of an initial gigawatt-scale deployment. By our estimate, this suggests that Oracle could end up deploying another 180,000 or so MI450s as part of the deal.
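
The two-zettaFLOPS estimate can be reproduced from the figures quoted in this article. The Python sketch below is only a back-of-the-envelope check: it assumes the 50,000 GPUs divide evenly into 72-GPU Helios racks, each delivering AMD's projected 2.9 exaFLOPS of FP4, and the function and variable names are illustrative rather than taken from any vendor tool.

```python
# Back-of-the-envelope check of the cluster totals quoted in the article.
# All inputs are vendor peak figures; names here are illustrative only.

GPUS_DEPLOYED = 50_000           # MI450X GPUs in Oracle's initial deployment
GPUS_PER_RACK = 72               # GPUs per Helios rack
RACK_FP4_EXAFLOPS = 2.9          # AMD's projected FP4 peak per rack
NVIDIA_CLUSTER_ZETTAFLOPS = 16   # Zettascale10 peak (sparse FP4)


def cluster_zettaflops(gpus: int, gpus_per_rack: int, rack_exaflops: float) -> float:
    """Scale a per-rack peak figure up to a whole deployment (1 ZF = 1000 EF)."""
    racks = gpus / gpus_per_rack  # fractional racks are fine for an estimate
    return racks * rack_exaflops / 1000


amd_zf = cluster_zettaflops(GPUS_DEPLOYED, GPUS_PER_RACK, RACK_FP4_EXAFLOPS)
total_zf = amd_zf + NVIDIA_CLUSTER_ZETTAFLOPS

print(f"AMD cluster:   {amd_zf:.2f} zettaFLOPS")
print(f"Nvidia + AMD:  {total_zf:.2f} zettaFLOPS")
```

Under these assumptions the AMD portion comes to about 2.01 zettaFLOPS, which together with the 16 zettaFLOPS Nvidia cluster accounts for the "more than 18 zettaFLOPS" headline figure. Both inputs are peak numbers, so the result is a ceiling rather than a sustained rate.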

[3]

Oracle and AMD expand AI partnership to keep up with demand

WASHINGTON (AP) -- Oracle and Advanced Micro Devices are expanding their partnership with the deployment of 50,000 AMD graphics processing units beginning in the third quarter of 2026, with further expansion to follow. The so-called AI "supercluster" is a massive, interconnected group of high-performance computers designed to work together as a single system. AMD shares jumped 3% before the bell Tuesday, while Oracle's slipped 1.8%. The companies said that next-generation AI models are poised to outgrow the limits of current AI infrastructure. Neither company disclosed dollar figures for its investment in the expanded partnership.

[4]

Oracle Cloud to deploy 50,000 AMD AI chips, signaling new Nvidia competition

Oracle Cloud Infrastructure on Tuesday announced that it will deploy 50,000 Advanced Micro Devices graphics processors starting in the second half of 2026. The move is the latest sign that cloud companies are increasingly offering AMD's GPUs as an alternative to Nvidia's market-leading GPUs for artificial intelligence. "We feel like customers are going to take up AMD very, very well -- especially in the inferencing space," Karan Batta, senior vice president of Oracle Cloud Infrastructure, told CNBC's Seema Mody. Oracle will use AMD's Instinct MI450 chips, which were announced earlier this year. They are AMD's first AI chips that can be assembled into a larger rack-sized system that enables 72 of the chips to work as one, which is needed to create and deploy the most advanced AI algorithms. OpenAI CEO Sam Altman appeared with AMD CEO Lisa Su at a company event in June to announce the product. "I think AMD has done a really fantastic job, just like Nvidia, and I think both of them have their place," Batta said. He added that AMD's software stack is "critical" and that "customers are going to take up AMD very, very well, in the inferencing space."

[5]

NVIDIA and Oracle to Accelerate Enterprise AI and Data Processing

New OCI Zettascale10 computing cluster, accelerated by NVIDIA GPUs, and AI database integrations to deliver intelligence at every layer of the business. AI is transforming the way enterprises build, deploy and scale intelligent applications. As demand surges for enterprise-grade AI applications that offer speed, scalability and security, industries are swiftly moving toward platforms that can streamline data processing and deliver intelligence at every layer of the business. At Oracle AI World, Oracle today announced a new OCI Zettascale10 computing cluster accelerated by NVIDIA GPUs, designed for high-performance AI inference and training workloads. The cluster will deliver up to 16 zettaflops of peak AI compute performance and harness NVIDIA Spectrum-X Ethernet -- the first Ethernet platform purpose-built for AI -- enabling hyperscalers to interconnect millions of GPUs with unprecedented efficiency and scale. Other announcements include added support for NVIDIA NIM microservices in Oracle Database 26ai, NVIDIA accelerated computing integration in the new Oracle AI Data Platform, native availability of the NVIDIA AI Enterprise software platform in the OCI Console and more. "I believe the AI market has been defined by critical partnerships such as the one between Oracle and NVIDIA," said Mahesh Thiagarajan, executive vice president of Oracle Cloud Infrastructure. "These partnerships provide force multipliers that help ensure customer success in this rapidly evolving space. OCI Zettascale10 delivers multi‑gigawatt capacity for the most challenging AI workloads with NVIDIA's next-generation GPU platform. In addition, the native availability of NVIDIA AI Enterprise on OCI gives our joint customers a leading AI toolset close at hand to OCI's 200+ cloud services, supporting a long tail of customer innovation." 
"Through this latest collaboration, Oracle and NVIDIA are marking new frontiers in cutting-edge accelerated computing -- streamlining database AI pipelines, speeding data processing, powering enterprise use cases and making inference easier to deploy and scale on OCI," said Ian Buck, vice president of hyperscale and high-performance computing at NVIDIA. Oracle Database 26ai, Oracle's flagship database, is adding key functionality to accelerate high-volume AI vector workloads. Oracle Database 26ai application programming interfaces now support integration with NVIDIA NeMo Retriever, allowing developers to easily run vector embedding models or implement retrieval-augmented generation (RAG) pipelines using NVIDIA NIM microservices. NVIDIA offers a full suite of NIM microservices for every stage of a RAG pipeline: NeMo Retriever extraction models for ingesting multimodal data at scale, NeMo Retriever embedding models for converting data chunks into vector embeddings, NeMo Retriever reranking models for boosting overall accuracy of final responses, and large language models (LLMs) to generate the final contextually accurate responses. Oracle Private AI Services Container is a new service that makes it easy to deploy AI services wherever needed, including cloud and on-premises environments. Oracle's first implementation, which supports execution on CPU resources, has now been designed to support the future use of NVIDIA GPUs for vector embedding and index generation using the NVIDIA cuVS open-source library. Embedding generation and vector search index creation are two important tasks required by vector databases. As data volumes increase and AI applications mature, vector index creation build times increasingly become a bottleneck. GPUs are incredibly efficient at building approximate nearest neighbor search algorithms. 
Users who want to accelerate their index builds will soon be able to offload this computationally intensive task to NVIDIA GPUs using planned Oracle Private AI Services Container capabilities. Once the index has been built, it can be formatted for search on Oracle AI Database 26ai. NVIDIA accelerated computing is also integrated into the new Oracle AI Data Platform, which provides a comprehensive ecosystem that unites enterprise data with AI models, developer tools, and tight controls over privacy and governance. The Oracle AI Data Platform includes a built-in NVIDIA GPU option to power high-performance workloads. It also features a new NVIDIA RAPIDS Accelerator for Apache Spark plug-in to unlock faster analytics, extract, transform, load, and machine learning pipelines through GPU acceleration. The RAPIDS Accelerator for Apache Spark plug-in uses GPUs to accelerate processing by combining the power of the NVIDIA cuDF library and the scale of the Spark distributed computing framework. All of this is designed to enable GPU-acceleration for Apache Spark applications with no code changes. Oracle Media and Entertainment is using the NVIDIA NeMo Curator library with a Nemotron vision language model (VLM) to power video understanding. This pipeline accelerates Oracle's video-centric AI workflows by automating the pre-processing steps: video decoding, clip segmentation, transcoding and more. It enables high-quality, scalable filtering, deduplication, annotation, classification and quality control for both video and associated text. This capability enables Oracle to generate dense video captions and curate images needed to train downstream models, improving their efficiency and reliability. NVIDIA NeMo Retriever Parse, a transformer-based vision-encoder-decoder model designed for high-precision document understanding, enhances Oracle Fusion Document Intelligence by making it easier to extract meaningful information from complex documents. 
The model goes beyond simple text scanning -- it can handle versatility, diversity and variability in enterprise documents, extracting critical metadata while preserving document structure. These capabilities can be used to build agentic or multimodal RAG applications. Bringing all these capabilities together, Oracle AI Hub now offers enterprises a single access point for building, deploying and managing custom AI solutions. Users can deploy NVIDIA NIM microservices through Oracle AI Hub, delivering a simple, no-code experience for deploying models, including NVIDIA Nemotron LLMs, VLMs and more. The initial release features a curated set of hosted NIM microservices and early access to next-generation, streamlined inference capabilities. With the integration of NIM microservices, designed to run a broad range of LLMs from a single container, customers can quickly deploy models for various business applications. Enterprises can now also harness NVIDIA AI Enterprise, natively integrated within OCI, for simplified access to NVIDIA's cloud-native suite of software tools, libraries and frameworks. This integration streamlines the development, deployment and management of AI solutions, providing robust enterprise support across Oracle's platform. NVIDIA AI Enterprise is now natively available within the OCI Console experience, allowing users to directly enable it when provisioning supported GPU instances. This capability is available across OCI's distributed cloud, including public regions, sovereign clouds and dedicated regions, to help customers meet security and compliance requirements. This new offering allows customers to access a full suite of AI tools without having to separately procure orders through the Oracle Cloud Marketplace, providing a streamlined process to build AI applications at scale with flexible pricing, enterprise support, expert guidance and priority security updates. 
NVIDIA was also recognized at Oracle AI World as a 2025 Oracle Partner award winner, underscoring the company's work with Oracle to transform the AI landscape for enterprises and drive innovation across the OCI ecosystem.

[6]

Oracle's mega cloud dream hits 16 zettaFLOPS

OpenAI's Stargate cluster in Texas runs on Oracle's new infrastructure

Oracle has announced what it calls the largest AI supercomputer in the cloud, the OCI Zettascale10. The company claims the system can deliver 16 zettaFLOPS of peak performance across 800,000 Nvidia GPUs. Divided evenly, that works out to about 20 petaflops per GPU, roughly matching the Grace Blackwell GB300 Ultra chip used in high-end desktop AI systems. Oracle says the platform is the foundation for OpenAI's Stargate cluster in Abilene, Texas, built to handle some of the most demanding AI workloads now emerging in research and commercial use. "The highly scalable custom RoCE design maximizes fabric-wide performance at gigawatt scale while keeping most of the power focused on compute...," said Peter Hoeschele, vice president, Infrastructure and Industrial Compute, OpenAI. At the core of the Zettascale10 system is Oracle Acceleron RoCE networking, designed to increase scalability and reliability for data-heavy AI operations. This architecture uses network interface cards as mini switches, linking GPUs across several isolated network planes. The design aims to reduce latency between GPUs and allow jobs to continue running if one network path fails. "Featuring Nvidia full-stack AI infrastructure, OCI Zettascale10 provides the compute fabric needed to advance state-of-the-art AI research and help organizations everywhere move from experimentation to industrialized AI," said Ian Buck, vice president of Hyperscale, Nvidia. Oracle claims this network structure can lower costs by simplifying tiers within the network while maintaining consistent performance across nodes. It also introduces Linear Pluggable Optics and Linear Receiver Optics to reduce energy and cooling use without cutting bandwidth. Although Oracle's figures are impressive, the company has not provided independent verification of its 16 zettaFLOPS claim.
Cloud performance metrics can vary depending on how throughput is calculated, and Oracle's comparison may rely on theoretical peaks rather than sustained rates. Given that the system's advertised total equals the sum of 800,000 top-end GPUs, real-world efficiency could depend heavily on network design and software optimization. Analysts may wait to see whether the configuration delivers performance comparable to leading AI clusters already run by other major cloud providers. The Zettascale10 positions Oracle alongside other major players racing to provide the infrastructure behind the best GPUs and AI tools. The company says customers could train and deploy large models across Oracle's distributed cloud environment, supported by data sovereignty measures. Oracle also says Zettascale10 offers operational flexibility through independent plane-level maintenance, allowing updates with less downtime. "With OCI Zettascale10, we're fusing OCI's Oracle Acceleron RoCE network architecture with next-generation Nvidia AI infrastructure to deliver multi-gigawatt AI capacity at unmatched scale," said Mahesh Thiagarajan, executive vice president, Oracle Cloud Infrastructure. "Customers can build, train, and deploy their largest AI models into production using less power and will have the freedom to operate across Oracle's distributed cloud with strong data and AI sovereignty..." Still, observers note that other providers are building their own large-scale GPU clusters and advanced cloud storage systems, which could narrow Oracle's advantage. This system will roll out next year, and only then will it be clear whether the architecture can meet demand for scalable, efficient, and reliable AI computation. Via HPCWire
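
The per-GPU figure cited in this article follows directly from the two headline numbers. As a minimal sketch, assuming the 16 zettaFLOPS peak is spread evenly across all 800,000 GPUs (the constant and function names are illustrative):

```python
# Divide the advertised cluster peak evenly across the GPU count.
# This reflects a theoretical peak, not a measured sustained rate.

ZETTA = 10**21
PETA = 10**15

CLUSTER_PEAK_ZETTAFLOPS = 16   # Oracle's advertised Zettascale10 peak
GPU_COUNT = 800_000            # Nvidia GPUs in the cluster


def per_gpu_petaflops(cluster_zettaflops: float, gpus: int) -> float:
    """Return the implied peak per GPU in petaFLOPS."""
    total_flops = cluster_zettaflops * ZETTA
    return total_flops / gpus / PETA


per_gpu = per_gpu_petaflops(CLUSTER_PEAK_ZETTAFLOPS, GPU_COUNT)
print(f"Implied peak per GPU: {per_gpu:.1f} petaFLOPS")
```

Because the total is a straight sum of per-GPU peaks, the calculation says nothing about how much of that throughput survives network and software overheads, which is the caveat the article raises.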

[7]

Oracle's AMD deal marks a new phase in the AI chip race

AMD isn't coming for Nvidia's lunch (not yet, at least). But Oracle's 50,000-GPU deal with AMD, announced Tuesday, does mean the software giant won't have to beg Nvidia for seconds. Oracle's cloud arm has been dining almost exclusively at Nvidia's table, where access is measured in wafer batches and waiting lists. Oracle is buying leverage -- a negotiation tactic that just happens to cost billions. Every AI firm right now is feeling the hunger. Compute power is the new currency, and Nvidia has been the market's main course. Its chips, software stack, and interconnects have become both the backbone and bottleneck of the industry, leaving even trillion-dollar companies to compete for scraps. The Oracle-AMD announcement is less about what Oracle is buying than it is about what it's broadcasting: that it won't be left starving while the rest of the valley feasts. The timing is the real tell. Oracle's announcement comes amid a scramble among megaclouds to secure "second sources" -- rival suppliers who can hedge both shortages and optics. Those 50,000 AMD Instinct MI450s aren't even shipping until 2026 (which, in AI time, can feel like next century). Between now and then, Nvidia will release new architectures. So Oracle's big order is more hedge than harvest, a note to shareholders that it's thinking ahead. The scale alone underscores the hierarchy. Nvidia's data-center GPU fleet numbers in the millions; Oracle's planned 50,000 AMD units would barely register on Nvidia's balance sheet. Yet in the market's imagination, even a hint of supply competition counts as disruption. Wall Street cheered the announcement because it suggested that someone -- anyone! -- might ease the bottleneck that has been inflating hardware costs and stock prices alike. AMD, for its part, has been waiting years for this kind of invitation. It has the silicon pedigree but not the software ecosystem; Nvidia's CUDA remains the default language of machine learning. 
So the MI450 will have to prove it can match Nvidia's performance without collapsing under compatibility issues -- a challenge no amount of marketing bravado can smooth over. AMD's momentum has gotten a fresh boost from its newly disclosed multigigawatt supply deal with OpenAI. Under the agreement, AMD will deliver up to 6 gigawatts of GPU capacity over several generations, beginning with the MI450 series in 2026, and OpenAI receives warrants to acquire up to 160 million shares (roughly 10% of AMD), contingent on delivery milestones. This makes the Oracle-AMD deal less about immediate compute and more about credibility: AMD is no longer a speculative alternative, it's a sanctioned partner of one of the AI world's heaviest users. In a capital-intensive industry, the appearance of competition can be almost as valuable as the competition itself. But Nvidia's win is structural: a world so dependent on its ecosystem that even defiance reinforces its dominance. The Oracle-AMD deal hints at what the next phase of AI infrastructure will look like -- not rebellion, but redundancy. The industry isn't trying to dethrone Nvidia; it's trying to ensure that the next supply shock or tariff or tweet doesn't derail its ambitions. It's the beginning of a more balanced meal -- one where resilience, not reverence, decides who gets to eat. Oracle didn't just buy GPUs this week. It bought time, leverage, and the illusion of choice -- the new currency of the AI economy. Oracle's table might look fuller after this week, but the recipes haven't changed. The AI feast remains Nvidia's to cater, and everyone else is still borrowing utensils. The meal goes on, and the kitchen still belongs to the same chef -- but AMD is on the line, plating dishes of its own.

[8]

Nvidia might dominate the industry, but Oracle is still betting on AMD chips for its superclusters

Oracle has announced an expansion of its partnership with AMD at its annual AI World conference, revealing it will become the first hyperscaler to offer a publicly available supercluster powered by 50,000 AMD Instinct MI450 Series GPUs. The two companies already have a long-standing partnership, and this news builds on prior deployments using AMD's MI300X and MI355X GPUs. The supercluster will feature a full suite of AMD components across its Helios rack architecture, including the MI450 GPUs, next-gen AMD EPYC 'Venice' CPUs and Pensando 'Vulcano' networking. The messaging at AI World 2025 has been clear - the company's commitment is to bring AI to you and your data, and not the other way around. This has given the company an opportunity to open up to third parties, partnering with rival hyperscalers for cloud hosting and maintaining multiple chip vendor options, all of which is designed to give the customer choice. The latest Helios racks offer a dense, liquid-cooled design supporting up to 72 GPUs per rack - the usual low-latency promises are also made. Oracle will also provision up to three 800 Gbps 'Vulcano' AI-NICs per GPU, and each GPU will provide up to 432GB of HBM4 and 20 TB/s of memory bandwidth. Oracle boasted that the latest configuration will allow customers to train and run inference on models that are 50% larger than previous generations. At the same time, OCI has also announced the general availability of OCI Compute with AMD Instinct MI355X GPUs, with up to 131,072 of them available in the zettascale Supercluster. OCI EVP Mahesh Thiagarajan said that the continued partnership with AMD responds to customer demands for "robust, scalable and high-performance infrastructure" with "the best price-performance, open, secure, and scalable cloud foundation." AMD shares rose by around 8.7% in the day following the announcement.
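
The per-GPU figures in this article can be rolled up to a full rack as a sanity check against the rack-level numbers reported elsewhere in this roundup (31 TB of HBM4 and 1.4 petabytes a second). The sketch below assumes a fully populated 72-GPU rack, the maximum "up to" values for every GPU, and decimal units (1 TB = 1000 GB); the names are illustrative.

```python
# Roll per-GPU "up to" figures up to a fully populated Helios rack.
# Assumes decimal units and the maximum quoted value for every GPU.

GPUS_PER_RACK = 72
HBM4_GB_PER_GPU = 432      # HBM4 capacity per GPU
HBM_TBPS_PER_GPU = 20      # memory bandwidth per GPU, TB/s
NICS_PER_GPU = 3           # 'Vulcano' AI-NICs per GPU
NIC_GBPS = 800             # line rate per NIC, gigabits per second


def rack_totals(gpus: int, hbm_gb: int, hbm_tbps: int, nics: int, nic_gbps: int) -> dict:
    """Return aggregate rack memory figures and per-GPU network bandwidth."""
    return {
        "hbm_tb": gpus * hbm_gb / 1000,            # total HBM4 per rack, TB
        "hbm_pbps": gpus * hbm_tbps / 1000,        # total memory bandwidth, PB/s
        "nic_gbps_per_gpu": nics * nic_gbps,       # network bandwidth per GPU, Gbps
        "nic_gbytes_per_gpu": nics * nic_gbps / 8  # same figure in gigabytes/s
    }


totals = rack_totals(GPUS_PER_RACK, HBM4_GB_PER_GPU, HBM_TBPS_PER_GPU,
                     NICS_PER_GPU, NIC_GBPS)
for name, value in totals.items():
    print(f"{name}: {value}")
```

The rack totals come out to roughly 31.1 TB of HBM4 and 1.44 PB/s of memory bandwidth, in line with the rounded rack-level figures, while three 800 Gbps NICs give each GPU up to 2,400 Gbps of network bandwidth.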

[9]

Oracle AI World 25 - Oracle bets big on AMD to diversify AI infrastructure portfolio as chip wars intensify

Oracle has announced a major expansion of its partnership with AMD, positioning itself to be the first hyperscaler to offer a publicly available AI supercluster powered by 50,000 AMD Instinct MI450 Series GPUs starting in Q3 2026, with further expansion planned for 2027 and beyond. The move, unveiled at Oracle AI World in Las Vegas, represents a significant bet on AMD's ability to challenge NVIDIA's dominance in the AI infrastructure market. It comes as Oracle continues to build out massive AI capacity to support the unnamed large customers -- including what CEO Safra Catz has described as a $30 billion+ annual contract -- that have driven Oracle Cloud Infrastructure's explosive growth. Forrest Norrod, executive vice president and general manager of AMD's Data Center Solutions Business Group said: AMD and Oracle continue to set the pace for AI innovation in the cloud. With our AMD Instinct GPUs, EPYC CPUs, and advanced AMD Pensando networking, Oracle customers gain powerful new capabilities for training, fine-tuning, and deploying the next generation of AI. The Oracle deal comes on the heels of AMD's recent announcement of a 6-gigawatt infrastructure partnership with OpenAI, positioning the chip maker as an alternative to NVIDIA for hyperscale AI deployments. OpenAI has deals with both vendors -- $100 billion with NVIDIA for 10 gigawatts, and now the AMD arrangement -- suggesting that major AI players are looking to avoid single-vendor dependency. For Oracle, the AMD relationship is sensible - it provides choice. Mahesh Thiagarajan, executive vice president of Oracle Cloud Infrastructure, said: Our customers are building some of the world's most ambitious AI applications, and that requires robust, scalable, and high-performance infrastructure. By bringing together the latest AMD processor innovations with OCI's secure, flexible platform and advanced networking powered by Oracle Acceleron, customers can push the boundaries with confidence. 
Oracle said that the AMD Instinct MI450 Series GPUs will offer up to 432 GB of HBM4 memory and 20 TB/s of memory bandwidth -- allowing customers to train and run models that are 50% larger than previous generations. A big upgrade. Alongside this announcement, Oracle also launched OCI Zettascale10, described as the largest AI supercomputer in the cloud, capable of connecting up to 800,000 NVIDIA GPUs across multiple data centers to deliver up to 16 zettaFLOPS of peak performance. The system underpins the flagship supercluster Oracle is building with OpenAI at the Stargate site in Abilene, Texas. The dual announcements -- massive NVIDIA infrastructure alongside the AMD partnership -- highlight Oracle's strategy of providing customers with options while building the capacity to support multiple chip architectures at scale. Alongside the AI infrastructure news, Oracle formally launched OCI Dedicated Region25, potentially reducing the entry point for on-premises clouds from what had been a 10-12 rack requirement down to just three racks. The announcement confirms details shared in a pre-briefing last week. Scott Twaddle, senior vice president of Product and Industries at OCI, positioned the move as addressing sovereign AI requirements (a growing concern amongst enterprise technology buyers): Organizations want the freedom to run AI and cloud services where they deliver the most value, a need that's only growing as sovereign AI considerations drive stricter requirements around data location and control. With OCI Dedicated Region25, we're bringing the full power of Oracle Cloud to virtually any data center. The three-rack configuration includes all 200+ OCI services with the same SLAs and pricing as public cloud regions. 
During last week's pre-briefing, Pradeep Vincent, Senior Vice President and Chief Technical Architect at OCI, emphasized that the configuration includes "all OCI services, no exceptions" and stated that "any new OCI service that will be launched in a public region or any other region will automatically go to dedicated region as well." Oracle said that organizations can start with three racks and expand to hyperscale by adding network expansion racks without downtime or re-architecting. The timing of OCI Dedicated Region25 is particularly relevant given the increased focus on data sovereignty across global markets. With geopolitical tensions and regulatory pressures becoming increasingly uncertain, the market is forcing CIOs to rethink where their data physically resides and who has jurisdiction over it. The challenge in recent years has been that cloud economics favor scale and centralization, whilst data sovereignty requirements make that challenging (in fact, they often demand the opposite). Organizations that wanted hyperscale cloud but needed to keep data within their own four walls faced a tough choice in the case of Oracle: commit to a 12-rack deployment (typically requiring significant data center space, power, and upfront investment) or settle for limited cloud services. The three-rack configuration announced today means, in theory, that organizations no longer have to choose between sovereignty and cloud scale -- they can have both, at what looks set to be a more accessible price point. This announcement clearly aims to address the operational challenges facing enterprise buyers at the moment. In Europe, GDPR priorities have intensified, with regulators taking an increasingly skeptical view of transatlantic data flows. For European organizations handling sensitive data, keeping it in-region is becoming increasingly important to risk management.
In Asia-Pacific, China's Data Security Law and Personal Information Protection Law impose requirements on data localization and cross-border transfers. India has implemented data localization requirements for payment data and is considering even more stringent requirements. Indonesia, Vietnam, and other Southeast Asian nations are all moving toward stronger data residency requirements. Even in the US, certain sectors -- particularly financial services, healthcare, and defense -- face regulatory requirements that make sovereign cloud deployments attractive. Recent scrutiny around data access by foreign governments has heightened sensitivity around where data resides and who can access it. The practical result is that enterprises are being forced to at least consider fragmenting their cloud strategies. A global bank might need to keep European customer data in Europe, Asian data in Asia, and US data in the US -- not because of technical requirements, but because of legal and regulatory ones. Previously, running three separate 12-rack Dedicated Regions would have been cost-prohibitive for all but the largest organizations. At three racks per region, the economics start to make a bit more sense. One existing customer highlighted the practical benefits. Kazushi Koga, corporate executive officer and head of platform at Fujitsu, said: With OCI Dedicated Region25 delivering the full range of OCI services via a small physical footprint inside our own data centers, we will be able to deploy apps and services quickly and easily to our customers while benefiting from the flexibility to expand our deployment without downtime or re-architecting. Oracle also announced Multicloud Universal Credits today, addressing what has been an ongoing pain point: buying Oracle services across multiple clouds with separate procurement processes. 
The new licensing option enables customers to procure Oracle AI Database and OCI services across Oracle Database@AWS, Oracle Database@Azure, Oracle Database@Google Cloud, and OCI itself using a single consumption model. Karan Batta, senior vice president at OCI, said: With multiple regions now live across AWS, Google Cloud, and Microsoft Azure and the coming launch of Oracle Multicloud Universal Credits, we're giving customers more choices and flexibility than ever by simplifying contracts and introducing the industry's first flexible, cross-cloud consumption model. Dave McCarthy, research vice president at IDC, said that this will remove procurement roadblocks: Oracle has already done the technical work to create multicloud offerings in AWS, Google Cloud, and Microsoft Azure, and now they've taken it a step further and simplified procurement, contracting, and governance to provide even more flexibility to customers. For CFOs and procurement officers juggling multiple cloud vendors, this could significantly reduce administrative overhead and potentially unlock volume discounts across their entire multicloud estate. However, the effectiveness will depend on how well the program integrates with existing enterprise agreements and whether organizations can actually shift spending dynamically without triggering contract renegotiations. Oracle also continues expanding database service availability across major cloud providers, moving toward what Dave Rosenberg, Senior Vice President of OCI Product Marketing, described in last week's pre-briefing as "symmetry across all the clouds." All three hyperscaler partnerships now include partner reseller programs for the first time, enabling system integrators like Accenture, Deloitte, and Infosys to offer Oracle database services through marketplace private offers. 
During last week's pre-briefing, Pradeep Vincent also provided insight into why Oracle is building gigawatt-scale AI data centers rather than distributing inference capacity closer to end users. Addressing a common industry question, he said: One question I get asked quite often is: you're building these giant gigawatt sites? Is that actually going to be just for training, or is it useful for inference? Or should the inference capacity be spread across the world, closer to population centers, in smaller footprints? Vincent explained that the rise of agentic AI -- where multiple inference calls chain together to complete a single user request -- creates new infrastructure requirements: What we find is that there is a remarkable convergence between what the training needs and the inference needs in the context of agentic AI and complicated workflow inference. In the inference world, a single inference may be small, but most of our customers have lots and lots of inferences that they need to do in order to satisfy an end user request. That's the crux of agentic AI: a whole bunch of inference requests, database lookups, data management service lookups, and API calls. The latency across these inference calls becomes critical to the end customer experience. Operating gigawatt-scale data centers introduces unique challenges, particularly around power grid management. Vincent explained: When we have tens of megawatts or 100 megawatt data center, when the load oscillates, the grid can take it. But when we talk about gigawatts of data centers, we have to do a lot more engineering investment. The grid doesn't like it if you oscillate the load on a gigawatt level. Oracle's approach involves using energy storage systems, capacitors, and other techniques to "modulate the oscillations as seen by the grid." 
On the cooling front, Vincent confirmed that "for almost all of our large AI super clusters, we've been investing in liquid cooling systems that can reject heat at much higher density." What strikes me as interesting about Oracle's infrastructure announcements this morning is how operational they are. There's no 'big ticket' AI reveal. Instead, Oracle is systematically addressing the unglamorous infrastructure challenges that enterprises actually face when deploying AI at scale: multi-vendor chip supply chains, data sovereignty constraints that fragment cloud strategies, procurement friction across multiple cloud providers, and the physics of power management at gigawatt scale. Putting all these pieces together creates quite a compelling picture for enterprise buyers - and I will be asking customers on the ground this week about how far these announcements address their ongoing challenges. My colleague Jon Reed will be picking up the application and software announcements this week and I'm sure there will be more to come from the multiple keynotes in Vegas today - keep your eyes peeled for those write ups on diginomica.

[10]

Oracle details upcoming AI clusters powered by Nvidia, AMD chips - SiliconANGLE

Oracle Corp. today announced plans to build new artificial intelligence clusters powered by Nvidia Corp. and Advanced Micro Devices Inc. chips. The first cluster, the OCI Zettascale10, will be hosted in the company's OCI public cloud. It will enable customers to configure AI environments with up to 800,000 Nvidia graphics processing units. Separately, Oracle is building a 50,000-GPU cluster based on the Instinct MI450, AMD's upcoming series of flagship AI accelerators. Other key players in the AI market are also using GPUs from multiple suppliers to avoid overdependence on a single chipmaker. Oracle's major rivals in the cloud market all offer a mix of Nvidia and AMD GPUs. OpenAI, which has commissioned $300 billion worth of AI infrastructure from the database maker, plans to deploy custom AI chips alongside the off-the-shelf silicon it currently uses. The architecture that underpins the OCI Zettascale10 powers a data center Oracle is currently building for OpenAI in Abilene, Texas. The offering will support multi-gigawatt clusters with up to 800,000 Nvidia GPUs. Oracle estimates that OCI Zettascale10 will offer peak AI performance of 16 zettaflops, or 16 trillion billion computations per second. The company plans to link together the GPUs in OCI Zettascale10 clusters using Nvidia's Spectrum-X series of Ethernet network equipment. The product family is centered on two devices. The first is the BlueField-3 SuperNIC, a chip that connects GPU servers to a data center's network and offloads certain computing tasks from their main processors. The chip is joined by a line of Ethernet switches called the Spectrum SN5000. Oracle's implementation of the network devices features a technology it calls Acceleron RoCE. Typically, moving data between GPUs requires sending it through the central processing units of the servers that host the GPUs. Acceleron RoCE skips that step to improve performance.
Oracle is currently taking orders for OCI Zettascale10. The offering will become available in the second half of 2026. "Customers can build, train, and deploy their largest AI models into production using less power per unit of performance and achieving high reliability," said Mahesh Thiagarajan, the executive vice president of Oracle Cloud Infrastructure. "In addition, customers will have the freedom to operate across Oracle's distributed cloud with strong data and AI sovereignty controls." Oracle plans to bring the OCI Zettascale10 online alongside an AI cluster equipped with 50,000 of AMD's MI450 graphics cards. The GPUs will run in racks based on a new design, Helios, that the chipmaker detailed today. A Helios rack can host 72 MI450 chips, each of which includes up to 432 gigabytes of HBM4 memory. HBM4 is a high-speed RAM variety that is not yet in mass production. AMD estimates that the technology will enable Helios to offer double the memory capacity and bandwidth of a system equipped with Nvidia's upcoming Vera Rubin chips. Helios racks also contain other components. They will incorporate AMD's upcoming Venice server CPU series and Vulcano, a future addition to its Pensando line of data processing units. The company says that each Helios rack will be capable of providing up to 1.4 exaflops of performance when processing FP8 data. Helios will use liquid cooling to remove the heat generated by its components. According to AMD, the system is based on a double-width design meant to make it easier for technicians to fix malfunctions. The company will enable hardware partners to extend the core Helios feature set to adapt it for their requirements. Oracle plans to install the first MI450-equipped Helios racks in its OCI data centers during the third quarter of 2026. The company will start bringing additional systems online in 2027.
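The rack-level figures quoted above can be cross-checked with a little arithmetic. The sketch below is only an illustration: it takes the article's "up to" numbers at face value and assumes every Helios rack is fully populated with 72 GPUs, which neither Oracle nor AMD has stated for this deployment. The function names are made up for the example.

```python
import math

# Figures quoted in the article; all are vendor "up to" peaks, not measurements.
GPUS_PER_RACK = 72        # MI450 chips per Helios rack
HBM4_GB_PER_GPU = 432     # maximum HBM4 capacity per MI450
RACK_FP8_EXAFLOPS = 1.4   # peak FP8 throughput per Helios rack
CLUSTER_GPUS = 50_000     # size of Oracle's initial MI450 deployment


def rack_memory_tb() -> float:
    """Total HBM4 per rack in decimal terabytes (1 TB = 1,000 GB)."""
    return GPUS_PER_RACK * HBM4_GB_PER_GPU / 1000


def per_gpu_fp8_petaflops() -> float:
    """Peak FP8 throughput per GPU implied by the rack figure (1 EF = 1,000 PF)."""
    return RACK_FP8_EXAFLOPS * 1000 / GPUS_PER_RACK


def racks_needed() -> int:
    """Racks required for the cluster, assuming fully populated racks (an assumption)."""
    return math.ceil(CLUSTER_GPUS / GPUS_PER_RACK)


if __name__ == "__main__":
    print(f"HBM4 per rack: {rack_memory_tb():.1f} TB")
    print(f"Implied FP8 per GPU: {per_gpu_fp8_petaflops():.1f} PFLOPS")
    print(f"Racks for {CLUSTER_GPUS:,} GPUs: {racks_needed()}")
```

Under those assumptions, a rack carries roughly 31 TB of HBM4, each GPU contributes a little over 19 petaflops of peak FP8, and the 50,000-GPU order would fill just under 700 racks.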

[11]

Oracle unveils OCI Zettascale10 AI supercomputer with 800k Nvidia GPUs

Oracle has announced its OCI Zettascale10, a cloud-based AI supercomputer it claims offers 16 zettaFLOPS of peak performance. The system, utilizing 800,000 Nvidia GPUs, is designed to support large-scale AI workloads developed by partners including OpenAI. The company asserts that the system can achieve a peak performance of 16 zettaFLOPS distributed across its 800,000 Nvidia GPUs. This level of output, when calculated on a per-GPU basis, equates to approximately 20 petaflops for each unit. This individual performance metric is comparable to the output of the Grace-Blackwell GB300 Ultra chip, a component used in high-end desktop systems specifically designed for artificial intelligence tasks. The total figure positions the Zettascale10 as a significant entry in large-scale computational infrastructure. Oracle has identified the platform as the foundational infrastructure for OpenAI's Stargate cluster, which is located in Abilene, Texas. This facility is being constructed to manage some of the most demanding AI workloads currently emerging from both research initiatives and commercial applications. Peter Hoeschele, vice-president of Infrastructure and Industrial Compute at OpenAI, stated, "The highly scalable custom RoCE design maximizes fabric-wide performance at gigawatt scale while keeping most of the power focused on compute." Central to the Zettascale10 system is the Oracle Acceleron RoCE networking architecture, which has been engineered to enhance scalability and reliability for data-heavy AI operations. This design employs network interface cards that function as miniature switches, creating direct links between GPUs across several isolated network planes. This configuration is intended to reduce latency in communication between GPUs. It also provides redundancy, allowing computational jobs to continue processing without interruption even if one of the network paths experiences a failure. 
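The per-GPU figure above follows directly from dividing the headline number by the GPU count. A minimal sketch of that division, assuming the 16 zettaFLOPS claim is a simple sum of per-GPU theoretical peaks (the function name is illustrative only):

```python
ZETTA = 10**21  # FLOPS in one zettaFLOPS
PETA = 10**15   # FLOPS in one petaFLOPS


def per_gpu_petaflops(total_zettaflops: int, gpu_count: int) -> float:
    """Average peak throughput per GPU, in petaFLOPS, for a cluster-wide peak."""
    return total_zettaflops * ZETTA / gpu_count / PETA


if __name__ == "__main__":
    # Oracle's quoted peak and maximum GPU count for OCI Zettascale10.
    print(per_gpu_petaflops(16, 800_000))
```

The result is about 20 petaflops per GPU, matching the figure in the article. It says nothing about the numeric precision behind the claim or about sustained throughput, which the article notes has not been independently verified.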
Nvidia's role in the system was highlighted by Ian Buck, vice-president of Hyperscale at the company. "Featuring Nvidia full-stack AI infrastructure, OCI Zettascale10 provides the compute fabric needed to advance state-of-the-art AI research and help organizations everywhere move from experimentation to industrialised AI," Buck said. Oracle also claims its network structure can lower costs by simplifying the tiers within the network fabric while delivering consistent performance across all nodes. The system introduces Linear-Pluggable and Receiver Optics technologies, aimed at reducing both energy consumption and cooling requirements without sacrificing bandwidth. The 16 zettaFLOPS performance claim from Oracle has not been independently verified. Performance metrics for cloud systems can differ based on the methodology used for calculation, and the company's figure might be based on theoretical peak performance rather than sustained operational rates. Since the system's advertised total output equals the sum of its 800,000 GPUs operating at their maximum potential, its real-world efficiency will depend significantly on factors like network design and software optimization. Analysts are expected to wait to see if the configuration delivers performance comparable to established AI clusters from other major cloud providers. The Zettascale10 system is designed to allow customers to train and deploy large AI models across Oracle's distributed cloud environment, which includes data sovereignty measures. Mahesh Thiagarajan, executive vice-president at Oracle Cloud Infrastructure, commented, "With OCI Zettascale10, we're fusing OCI's Oracle Acceleron RoCE network architecture with next-generation Nvidia AI infrastructure to deliver multi-gigawatt AI capacity at unmatched scale." He added that customers can build and train models using less power and operate with "strong data and AI sovereignty." 
The system also offers operational flexibility through independent plane-level maintenance, which permits updates with reduced downtime. Observers have noted that other major cloud providers are concurrently building their own large-scale GPU clusters and developing advanced cloud storage systems, which could narrow any competitive advantage held by Oracle. The Zettascale10 system is scheduled for a rollout next year. Its capacity to meet growing demand for scalable, efficient, and reliable AI computation will be evaluated following its deployment.

[12]

Oracle and AMD expand AI partnership to keep up with demand

WASHINGTON (AP) -- Oracle and Advanced Micro Devices are expanding their partnership with the deployment of 50,000 AMD graphic processing units beginning in the third quarter of 2026 with further expansion to follow. The so-called AI "supercluster" is a massive, interconnected group of high-performance computers designed to work together as a single system. AMD shares jumped 3% before the bell Tuesday, while Oracle's slipped 1.8%. The companies said that next-generation AI models are poised to outgrow the limits of current AI infrastructure. No dollar figures were given for each company's investment in the expanded partnership.

[13]

Oracle and AMD expand AI partnership to keep up with demand

WASHINGTON (AP) -- Oracle and Advanced Micro Devices are expanding their partnership with the deployment of 50,000 AMD graphic processing units beginning in the third quarter of 2026 with further expansion to follow. The so-called AI "supercluster" is a massive, interconnected group of high-performance computers designed to work together as a single system. AMD shares jumped 3% before the bell Tuesday, while Oracle's slipped 1.8%. The companies said that next-generation AI models are poised to outgrow the limits of current AI infrastructure. No dollar figures were given for each company's investment in the expanded partnership.

[14]

AMD Just Scored Another Big AI Chip Deal -- What You Need to Know

Oracle said its initial deployment of those chips will begin in the third quarter of 2026, with more expected after that. Advanced Micro Devices just racked up another major AI deal, with signs of more to come. Cloud computing giant Oracle (ORCL) said Tuesday that it agreed to buy 50,000 GPUs from AMD (AMD) to build out its capacity to help customers scale their use of AI. Oracle said its initial deployment of those chips would start in the third quarter of next year, with that number likely to expand in 2027 and beyond. The company said that as demand for AI grows, "customers need flexible, open compute solutions engineered for extreme scale and efficiency." Shares of AMD were up about 3% in recent trading, not far from their record highs set earlier this month. They've added 85% of their value this year, with more than half of those gains coming in the wake of the massive partnership with ChatGPT maker OpenAI unveiled on Oct. 6. Oracle shares were 2% lower Tuesday, but have climbed roughly 80% in 2025 so far on its rosy outlook for AI-driven growth.

[15]

Oracle and AMD expand AI partnership to keep up with demand

Oracle and AMD are expanding their partnership to deploy 50,000 AMD GPUs starting Q3 2026, forming an AI "supercluster" for next-generation AI models. The venture aims to overcome current AI infrastructure limits. Oracle and Advanced Micro Devices are expanding their partnership with the deployment of 50,000 AMD graphic processing units beginning in the third quarter of 2026 with further expansion to follow. The so-called AI "supercluster" is a massive, interconnected group of high-performance computers designed to work together as a single system. AMD shares jumped 3% before the bell Tuesday, while Oracle's slipped 1.8%. The companies said that next-generation AI models are poised to outgrow the limits of current AI infrastructure. No dollar figures were given for each company's investment in the expanded partnership.

[16]

Oracle Cloud To Deploy 50,000 AMD Chips, Challenging Nvidia's Dominance - NVIDIA (NASDAQ:NVDA), Advanced Micro Devices (NASDAQ:AMD)

Oracle's (NYSE:ORCL) Cloud Infrastructure announced on Tuesday that it will deploy 50,000 Advanced Micro Devices (NASDAQ:AMD) graphics processors starting in the second half of 2026, marking a significant shift away from the Nvidia (NASDAQ:NVDA) GPUs that dominate the market. AMD shares climbed 1.9% in pre-market trading, while Oracle's stock ticked slightly lower. Nvidia's shares were down 1.5%.

Competition For Nvidia

Oracle Cloud Infrastructure will deploy 50,000 of AMD's Instinct MI450 chips, introduced earlier this year. These are AMD's first AI processors designed to be combined into a rack-sized system, enabling 72 chips to operate as a single unit -- a key capability for building and running advanced AI models. The companies did not disclose the financial terms of the deal. "We feel like customers are going to take up AMD very, very well -- especially in the inferencing space," said Karan Batta, senior vice president of Oracle Cloud Infrastructure. AMD's software stack was also highlighted as "critical" by Batta. The decision to shift to AMD's GPUs is indicative of a broader trend in the cloud industry, where companies are increasingly considering AMD as a viable alternative to Nvidia's GPUs for AI.

Jensen Huang On AMD-OpenAI Deal

The move comes just a week after AMD and OpenAI announced a deal. OpenAI will purchase up to six gigawatts of AMD's next-generation Instinct MI450X GPUs over the next five years -- a contract analysts estimate could generate over $100 billion in revenue. This deal was described as a "bet-the-farm" moment for AMD CEO Lisa Su. Nvidia's CEO, Jensen Huang, expressed his surprise at AMD's decision to grant OpenAI warrants for up to 160 million AMD shares, worth nearly 10% of the company, in exchange for a massive GPU purchase commitment. This was described as "imaginative" but "surprising" by Huang.
The current AI boom has been described as a potential "high-tech house of cards" and an "excuse to eliminate millions of American jobs" by Gordon Johnson, CEO and Founder of GLJ Research, LLC. Benzinga's Edge Rankings place AMD in the 84th percentile for quality and the 94th percentile for growth, reflecting its strong performance in both areas. Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.

[17]

AMD stock rises after Oracle partnership for AI chips By Investing.com

Investing.com -- Advanced Micro Devices (NASDAQ:AMD) stock rose 2.6% Tuesday morning after the chipmaker announced a partnership with Oracle (NYSE:ORCL) to provide cloud services using AMD's upcoming MI450 artificial intelligence processors. The companies revealed plans to initially deploy 50,000 MI450 processors in the third quarter of 2026, with further expansion slated for 2027 and beyond. This agreement gives AMD another significant customer for its upcoming AI chips while allowing Oracle to diversify its processor offerings amid surging demand for AI computing infrastructure. "Demand for large-scale AI capacity is accelerating as next-generation AI models outgrow the limits of current AI clusters," the companies stated in their announcement. The Oracle partnership comes just a week after AMD secured a multi-year deal to supply AI chips to OpenAI. That arrangement notably includes an option for the ChatGPT creator to acquire up to approximately 10% stake in AMD. The developments highlight AMD's growing footprint in the competitive AI chip market as companies scramble to secure computing capacity for developing and deploying artificial intelligence technologies. Both partnerships represent significant wins for AMD as it works to challenge Nvidia's dominance in the AI processor space.

[18]

Oracle to offer cloud services using AMD's upcoming AI chips

(Reuters) -Oracle will offer cloud services using Advanced Micro Devices' upcoming MI450 artificial intelligence chips, the companies said on Tuesday, as they rush to tap the booming demand for infrastructure to support tools such as ChatGPT. The companies will first deploy 50,000 MI450 processors in the third quarter of 2026 and further expand in 2027 and beyond. The deal gives AMD another major client for its upcoming chips, while allowing Oracle to expand its processor offerings, at a time when businesses are rushing to secure compute capacity for developing AI. "Demand for large-scale AI capacity is accelerating as next-generation AI models outgrow the limits of current AI clusters," the companies said. Shares of AMD rose more than 3% in premarket trading, bucking a broader market selloff as renewed concerns over a U.S.-China trade conflict dampened investor sentiment. Oracle shares were down about 1%. AMD last week unveiled a deal to supply AI chips to OpenAI in a multi-year deal, giving the ChatGPT creator an option to buy up to roughly 10% stake in the chipmaker. AMD had worked with OpenAI to improve the design of its MI450 chips for AI work and the startup is building a one-gigawatt facility based on the processor next year. OpenAI is also reported to have signed one of the biggest cloud deals ever with Oracle, under which the ChatGPT maker is expected to buy $300 billion in computing power for about five years. The "AI superclusters" with AMD will be powered by the chipmaker's "Helios" rack design. AMD's larger competitor and the world's most valuable firm - Nvidia - now sells racks, or fully integrated systems which include GPUs and CPUs, with AMD rushing to follow suit. (Reporting by Arsheeya Bajwa in Bengaluru and Max A. Cherney in San Francisco; Editing by Leroy Leo)


Oracle plans to significantly boost its AI computing power by deploying 50,000 AMD MI450 Instinct GPUs and 800,000 Nvidia GPUs, totaling over 18 zettaFLOPS of AI performance by late 2026. This move highlights the intensifying competition between AMD and Nvidia in the AI chip market.

Oracle's Ambitious AI Infrastructure Expansion

Oracle has unveiled plans for a massive expansion of its AI computing infrastructure, set to deploy by the second half of 2026. This ambitious project involves partnerships with both AMD and Nvidia, signaling a new chapter in the competition for AI chip dominance [1][2].


AMD's Breakthrough in Oracle's AI Strategy

At the heart of Oracle's expansion is a deal with AMD to deploy 50,000 next-generation MI450 Instinct GPUs. This partnership marks a significant milestone for AMD as it seeks to challenge Nvidia's stronghold in the AI chip market [1][3].


The AMD-powered cluster will utilize the Helios rack system, combining MI450 graphics cards with Zen 6 Epyc CPUs and advanced networking hardware codenamed Vulcano. AMD claims this setup will be highly competitive with Nvidia's offerings, potentially replicating their 2021 success in the consumer CPU market against Intel [1].

Nvidia's Continued Dominance

Despite AMD's advancements, Nvidia maintains a significant presence in Oracle's AI strategy. Oracle plans to field a cluster of 800,000 Nvidia GPUs, capable of delivering up to 16 zettaFLOPS of peak AI performance [2]. This cluster will be part of Oracle Cloud Infrastructure's Zettascale10 offering and will utilize Nvidia's Spectrum-X Ethernet switching platform [5].


Implications for AI Computing Power

The combined deployment of AMD and Nvidia GPUs is expected to bring Oracle's total AI computing power to over 18 zettaFLOPS by late 2026 [2]. This massive increase in computing capability is driven by the growing demand for AI infrastructure to support advanced models and applications across various industries.

Market Dynamics and Future Outlook

Oracle's dual partnership strategy reflects the intensifying competition in the AI chip market. While Nvidia has been the dominant player, AMD's recent advancements and partnerships, including a deal with OpenAI, suggest a shifting landscape [1][4].

The expansion also highlights the enormous financial investments being made in AI infrastructure. With multiple tech giants announcing deals worth tens of billions of dollars, questions arise about the sustainability of this rapid growth and its potential to create an AI investment bubble [1].

Impact on Enterprise AI and Data Processing

Beyond raw computing power, Oracle's expansion aims to enhance enterprise AI capabilities. The integration of NVIDIA AI Enterprise software and NVIDIA NIM microservices into Oracle's database and AI platforms promises to streamline data processing and deliver intelligence across various business layers [5].

As the AI infrastructure race continues, the collaboration between cloud providers and chip manufacturers will play a crucial role in shaping the future of enterprise AI and data processing capabilities.

Summarized by Navi