Samsung and SK hynix Unveil Next-Gen HBM4 Memory, Intensifying AI Chip Competition

2 Sources

[1]

Samsung Goes Head-to-Head with SK Hynix and Micron as It Unveils HBM4 Memory to the Public for the First Time

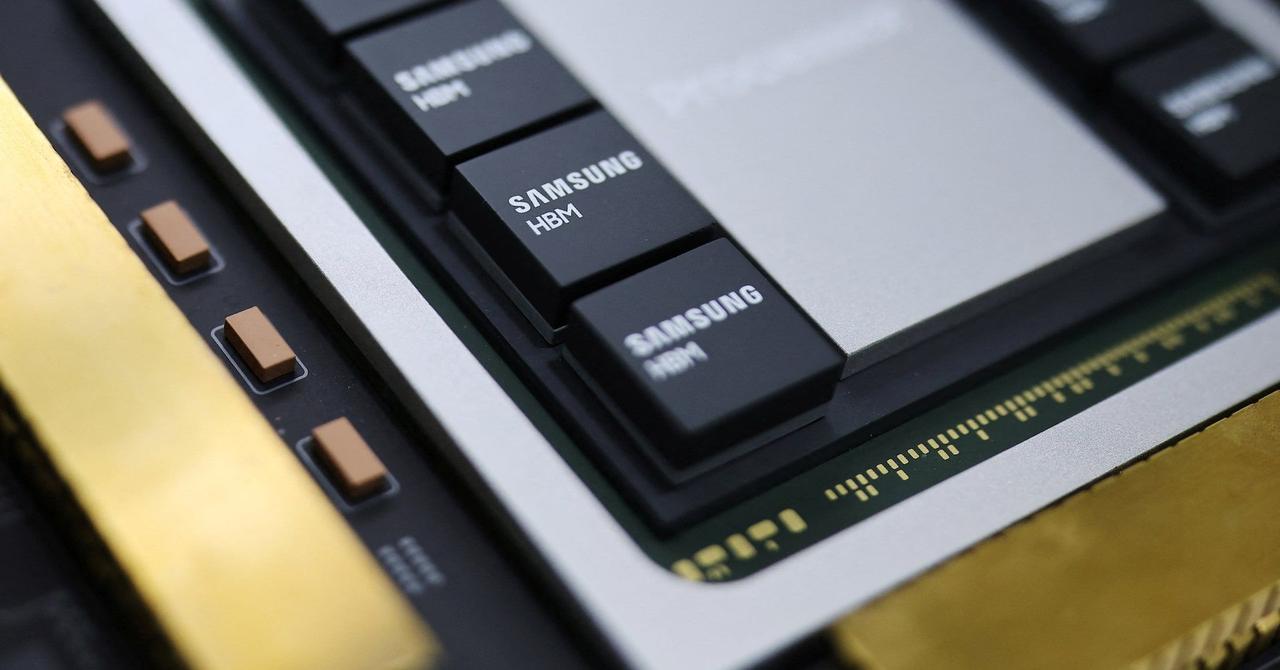

Samsung has showcased its HBM4 memory modules to the public for the first time, indicating that the Korean giant is prepared for the upcoming HBM competition. HBM4 is one of the most 'prestigious' computing essentials in today's market, mainly because the memory will be responsible for scaling up AI performance. HBM manufacturers such as Samsung, SK hynix, and Micron are giving it their all to showcase competitive HBM4 solutions and secure their adoption. Among them, Samsung has made a massive comeback in the segment following years of sluggish performance.

At the Semiconductor Exhibition (SEDEX) 2025, Samsung showcased its HBM4 process to the public. Samsung is reportedly avoiding the mistakes that led to its loss of dominance in the DRAM segment, and with HBM4 it is proceeding with mass production alongside its competitors to ensure it does not fall behind. According to a report from DigiTimes, Samsung's HBM4 logic die yield has reached 90%, indicating that the firm is on track for mass production and that no delays are anticipated for now.

Samsung is also reported to be implementing several strategies to ensure early HBM4 adoption, including maintaining a competitive price, offering higher production capacity, and delivering faster pin speeds to clients like NVIDIA. Those pin speeds are rated at around 11 Gbps, higher than what SK hynix and Micron are said to offer. For now, Samsung hasn't received NVIDIA's approval for HBM4 supply, but considering the progress made with the technology, the Korean giant is certainly optimistic. Along with Samsung, SK hynix also showcased its HBM4 modules at the event, which are developed in collaboration with TSMC.

The future of the DRAM market looks set to become a lot more competitive, considering Samsung's rapid advancements, while demand is at a level that was not anticipated before.

[2]

Samsung, SK hynix showcase latest HBM4 chips at 2025 SEDEX - The Korea Times

Samsung Electronics and SK hynix on Wednesday showcased their latest high bandwidth memory (HBM) chips at a semiconductor exhibition in Seoul amid an expected showdown between the South Korean companies for the sixth-generation artificial intelligence (AI) chip market. At the 2025 Semiconductor Exhibition (SEDEX), which kicked off earlier in the day for a three-day run in southern Seoul, both companies displayed their HBM4 chips at their booths, underscoring their confidence in leading the rapidly growing sector.

HBM, an advanced, high-performance memory chip, is a crucial component of Nvidia's graphics processing units (GPUs) that drive generative AI systems. While the current market is dominated by fifth-generation HBM3E chips, industry watchers expect HBM4 to become a major factor next year, as Nvidia plans to use it in its next-generation AI accelerator, Rubin.

SK hynix, currently the leading supplier of HBM3E as part of the tripartite supply chain with Nvidia and TSMC, has completed development of HBM4 and is preparing for mass production. The company is reportedly in talks with Nvidia for large-scale supply.

For Samsung Electronics, which has long dominated the memory market but recently lost ground in HBM, the new HBM4 lineup is seen as a potential game changer to regain its competitive edge. At a shareholders' meeting in March, Jun Young-hyun, head of the semiconductor division at Samsung Electronics, vowed to stay on schedule with the development and mass production of HBM4 products to avoid repeating the company's previous setback in the HBM3E market.

According to a recent report by research firm Counterpoint Research, SK hynix led the HBM market in the second quarter in terms of shipments with a share of 62 percent, followed by Micron Technology Inc. with 21 percent and Samsung Electronics with 17 percent.

Samsung and SK hynix showcase their latest HBM4 memory chips at the 2025 Semiconductor Exhibition (SEDEX) in Seoul, signaling an impending competition in the AI chip market. The event highlights the growing importance of high-bandwidth memory in AI acceleration.

South Korean Tech Giants Unveil Next-Generation Memory for AI

Samsung Electronics and SK hynix, two of South Korea's leading semiconductor manufacturers, have showcased their latest high bandwidth memory (HBM) chips at the 2025 Semiconductor Exhibition (SEDEX) in Seoul [2]. The event marks a significant milestone in the race for dominance in the artificial intelligence (AI) chip market, with Samsung displaying its HBM4 modules to the public for the first time and SK hynix showing its own HBM4 modules alongside [1].

The Importance of HBM in AI Acceleration

High bandwidth memory is a crucial component in graphics processing units (GPUs) that power generative AI systems. As the demand for more sophisticated AI applications grows, the need for faster and more efficient memory solutions becomes paramount. The current market is dominated by fifth-generation HBM3E chips, but industry experts anticipate HBM4 to become a major factor in the coming year [2].

Samsung's Comeback Strategy

Samsung, once the dominant player in the memory market, has lost ground in recent years, particularly in the HBM segment. However, the company is mounting a comeback with its HBM4 technology. Samsung showcased HBM4 at SEDEX, and according to a DigiTimes report, the yield of its HBM4 logic die has reached 90%, suggesting it is on track for mass production [1].

To ensure early adoption of its HBM4 modules, Samsung is reportedly implementing several strategies:

- Maintaining competitive pricing

- Offering higher production capacities

- Delivering faster pin speeds, reportedly around 11 Gbps
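
To put the reported pin speed in context, the following is a rough sketch of how a per-pin data rate translates into peak bandwidth per memory stack. The 2048-bit interface width is an assumption taken from the published HBM4 standard, not from the sources above, and the function name is purely illustrative. Real-world throughput would be lower than this theoretical peak.

```python
# Theoretical peak bandwidth of one HBM stack from its per-pin data rate.
# Assumption (not stated in the article): HBM4 uses a 2048-bit interface per stack.

def stack_bandwidth_gb_per_s(pin_speed_gbps: float, interface_bits: int = 2048) -> float:
    """Return peak bandwidth of one stack in gigabytes per second."""
    # Each pin carries pin_speed_gbps gigabits per second; divide by 8 for bytes.
    return pin_speed_gbps * interface_bits / 8


if __name__ == "__main__":
    bw = stack_bandwidth_gb_per_s(11.0)  # the reported ~11 Gbps pin speed
    print(f"{bw:.0f} GB/s (~{bw / 1000:.1f} TB/s) per stack")
```

Under these assumptions, a pin speed of around 11 Gbps corresponds to roughly 2.8 TB/s of peak bandwidth per stack.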

SK hynix's Market Leadership

According to Counterpoint Research, SK hynix led the HBM market in the second quarter with a 62% share of shipments, followed by Micron Technology with 21% and Samsung Electronics with 17% [2]. SK hynix has already completed the development of its HBM4 chips and is preparing for mass production. Its strong position is partly due to its involvement in the tripartite supply chain with Nvidia and TSMC for the current HBM3E chips.

The Race for Nvidia's Next-Gen AI Accelerator

Both Samsung and SK hynix are vying for a position in Nvidia's supply chain for its upcoming AI accelerator, codenamed Rubin. This next-generation GPU is expected to utilize HBM4 technology, making it a crucial battleground for memory manufacturers [2]. While SK hynix is reportedly in talks with Nvidia for large-scale supply, Samsung has yet to receive approval but remains optimistic given its recent technological advancements [1].

Implications for the DRAM Market

The intensifying competition in the HBM4 segment is expected to have significant implications for the broader DRAM market. With Samsung's rapid advancements and the unprecedented demand for high-performance memory, the industry is poised for a more competitive landscape in the coming years [1].

Summarized by Navi
