The AI Chip Race: Nvidia's Dominance and the Challenges of Powering Artificial Intelligence

2 Sources

[1]

The geopolitics of chips: Nvidia and the AI boom

Amid the artificial intelligence boom, demand for AI chips has exploded. But this push for chips also creates new challenges for countries and companies. How will countries cope with the huge amounts of energy these chips consume? Will anyone compete with Nvidia to supply the AI chips of the future? And can China develop its own chips to fuel its own AI development? James Kynge visits a data centre to find out how advanced AI chips are causing new problems for the sector. In Phoenix, Arizona, James meets Mark Bauer, co-leader with JLL's Data Center Solutions group, and Frank Eickenhorst, vice president of data centre operations at PhoenixNAP. How will the clash of titans play out between Nvidia and Big Tech? And we hear from Amir Salek, senior managing director at Cerberus Capital and the brains behind Google's TPU chip; Tamay Besiroglu, associate director of Epoch AI; Dylan Patel, lead analyst at consulting firm SemiAnalysis; and the FT's global tech correspondent Tim Bradshaw to find out more about the battle for AI chips. Related FT articles: "Chip challengers try to break Nvidia's grip on AI market"; "Amazon steps up effort to build AI chips that can rival Nvidia"; "TSMC says it alerted US to potential violation of China AI chip controls". Presented by James Kynge. Edwin Lane is the senior producer. The producer is Josh Gabert-Doyon. Executive producer is Manuela Saragosa. Sound design by Joseph Enrick Salcedo, with original music from Metaphor Music. The FT's head of audio is Cheryl Brumley. Special thanks to Tim Bradshaw.

[2]

Transcript: The geopolitics of chips -- Nvidia and the AI boom

James Kynge So far in this series, we've spoken about the big, lofty geopolitics of semiconductors. But for some people, the rise of advanced chips has also translated into a good old-fashioned gold rush. James Kynge This is Mark Bauer and his massive, almost monster-truck-sized Ford pick-up. Mark Bauer Right now, we're on Camelback on 44th Street. There's a cluster of data centres that are near the airport. James Kynge Here in Phoenix, Bauer is a big fish when it comes to the business of data centres. Data centres are the storehouses of our digital age. These are big warehouses where semiconductors are used to store and compute information in the cloud. And Phoenix is the second-largest data centre market in the US. Mark Bauer There used to be people who saw three years ago cloud, and they really didn't know what a cloud was, or like what's going to the cloud? Will that cloud actually go into that big giant building? It's not necessarily in the cloud, OK? James Kynge And it's not just the cloud storage for your iPhone that happens here. Data centres like these are where the most advanced chips in the world are used for training artificial intelligence systems. And the rise of AI in recent years has meant that the data centre market here in Phoenix is booming. Mark Bauer You know, there's the industrial revolution. We are now going through a, you know, another evolution of an industry. James Kynge So how does it feel living in a boomtown? Mark Bauer I haven't known anything different. I moved here in 1987 and just decided that this was a good place. So I moved out here and we've been one of the fastest growing communities, you know, cities in the country ever since I moved here. James Kynge And Bauer says a lot of local people are also keen to cash in on the AI boom. Mark Bauer The amount of farmers that are out there that we get calls from saying, Mark, I think I got a perfect data centre site.
You know, OK, everybody reads about that, I could sell for 30 bucks a foot if I've got -- if it's a data centre site, there's a lot to a data centre site. James Kynge There's lots of farmers out there who think they can cash in on this. Mark Bauer Well, we've made a couple farmers, you know, a good return on their investment. James Kynge The last few years have seen the demand for data centres and the chips they contain going through the roof, because AI just demands so much more computing power than anything we've ever seen before. And as AI gets more important, the demand for these data centres and the advanced AI chips they contain is only going to get bigger. [MUSIC PLAYING] This is Tech Tonic from the Financial Times. I'm your host, James Kynge, a longtime China correspondent for the FT. There's a battle going on for control of the global semiconductor industry. The chips that are in virtually every piece of electronics we use from our phones to our cars to the latest AI software that's changing our lives. In this series, we've gone deep into the miracle of modern chips and the struggle over who controls the industry's future. In this episode, I'll be looking at the race to deliver the chips that are going to power the AI revolution. James Kynge The world needs chips that can run artificial intelligence systems. Right now, these are among the most advanced chips being made. Not to mention one of the most valuable commodities in technology today. But there are three big challenges facing the supply of chips that will be needed for AI. The first thing is power. Here's the thing about data centres used for AI. They're unbelievably power hungry. When Bauer took me on this tour of Phoenix, he kept describing the buildings that we passed by in terms of their hunger for electricity. Mark Bauer Yeah, this building is a 48MW. Critical load building is 700MW; a vacant piece of land that had access to 24MW. James Kynge It's all about the megawatts. 
The megawatts needed to power the AI revolution. Mark Bauer It has 24MW, 350MW to that site, 30MW delivered to this site by the end of this year. James Kynge By the end of this decade, US data centres are forecast to need 35GW of power a year. That's equivalent to almost all the power that a country such as the UK uses today. So why are data centres so power hungry? Phoenix is home to block after block of data centres being developed for big-name clients such as Microsoft, OpenAI, Google and Amazon. We met Frank Eickenhorst, vice-president of PhoenixNAP, a local data centre company. Frank Eickenhorst . . . one at a time. This will turn green. James Kynge As we descended into the bowels of the data centre, we came into a massive room where rows and rows of semiconductors generated a tremendous amount of heat. [WHIRRING OF SERVERS RUNNING] So, we're now in the data centre itself. You can hear the whirr of the servers behind me. It's really loud in here. And there are these black cabinets which housed the servers. And then in the ceiling there's this incredible network of wires. The chips in those servers are completing billions and billions of calculations a second, and require constant cooling down. And the thing is, the more advanced AI chips aren't making things any easier. Frank Eickenhorst The problem with the chips are newer ones consume more energy, right? GPUs, or graphics processing units, consume a lot of power, puts out a lot of heat to the point where over there, we will have an area for water cooling, right? It's going to be impossible to cool with air. James Kynge Those advanced chips, like the graphics processing units or GPU chips that Eickenhorst referred to, are what's powering the development of artificial intelligence. And in Phoenix, as in many other data centre markets, the massive amount of energy needed for chips is becoming the big limiting factor. Data centres are being delayed because of a lack of power.
Because AI requires millions of GPUs to do trillions and trillions of calculations, that saps energy from the grid. At the moment, the US is just not building its power infrastructure quickly enough to satisfy the escalating demand from the data centre gold rush. The other thing is that these GPU chips were never designed for AI. The GPU was pioneered by a company called Nvidia. And those GPUs were initially made for gamers. [ESPORTS TOURNAMENT CLIP PLAYING] James Kynge The GPU, as the name suggests, was first used for graphics in high-end computer games. Nvidia was known as a major sponsor of e-sports tournaments. [ESPORTS TOURNAMENT CLIP PLAYING] James Kynge A few years ago, researchers realised they could put Nvidia's GPUs together to train AI models. And as AI has grown, researchers started to require more and more of these chips. Tamay Besiroglu, associate director of the technology think-tank Epoch AI, says that's because of how AI has developed over the years. Tamay Besiroglu In around the early 2010s, there was this realisation that you can scale up machine learning models, and by doing so they become better at performing various tasks. And this requires using more chips during training to do all the computation that is needed to do this training. So the larger models you have, the more computation you need to do. James Kynge Larger models require more computing power, and that requires more powerful AI chips. Tamay Besiroglu So every year we're scaling up the size of training by four times. And so the result of spending more money on buying more expensive, more sophisticated AI chips. So today, AI labs might have access to 100,000 GPU cluster, whereas in the 2010s people might have used six GPUs or eight GPUs to train a single model. James Kynge All of this takes us to our second factor shaping the future of the AI chip industry: the total dominance of one company in supplying AI chips. 
Tamay Besiroglu Nvidia is by far the market leader in selling these chips for accelerating AI. So OpenAI, notably as well as many other labs, are using Nvidia chips to train these models to serve the models to customers. James Kynge Right now, Nvidia is estimated to have about 90 per cent of the market share for AI semiconductors, and all the leading AI companies like Google, Meta and OpenAI are buying boatloads of these AI chips from Nvidia. All of that has made it a massive company. It briefly became the biggest company in the world earlier this year. At the moment, that means Nvidia can pretty much charge what it likes for its GPUs. Tamay Besiroglu So Nvidia has these pretty astonishing mark-ups that they're charging. If you just look at the pure silicon, it costs maybe $1,000, and that goes into chips that are sold for maybe $30,000. Now there are other costs associated with the R&D they're doing and with design and so on. But the mark-ups they're charging are just very large. James Kynge But in spite of the price tag, the fact you can't get these chips from anyone else means you've gotta be quick out of the blocks. Nvidia's new chip, the Blackwell, is sold out until at least late next year. That market concentration has made Nvidia's customers nervous. The likes of Google, Amazon, Microsoft and OpenAI have all started building their own AI chips in the hopes of cutting costs and circumventing Nvidia. By far, the furthest ahead on this front is Google who have the TPU. Tamay Besiroglu Notably, Google has the TPU, which they're using to train a lot of really powerful models and also using those chips to serve those models to customers. James Kynge So how did they manage to build a chip that could compete with Nvidia? Well, it comes down to this man, Amir Salek, the architect behind Google's TPU chip. Amir Salek I joined Google in 2013 with the mission to build the semiconductor business for all of Google infrastructure and data centres. 
James Kynge Today, Salek is no longer at Google. He invests in chip companies and other deep tech firms. But when he was at Google, he helped to lead the development of the TPU chip. The idea was that Google would make a chip that, unlike Nvidia's GPU, was designed specifically for AI operations. Amir Salek It was early days of machine learning and things were looking kind of exciting and it was realised that this tiny little workload across data centres is growing at a rapid pace and Google has realised that it cannot rely on off-the-shelf semiconductor products because they have a scale that nobody else had at the time. James Kynge Salek and his team spent months quietly designing and tinkering with the first version of the TPU. Amir Salek Fundamentally, when you look at it, the GPU architecture, it's not an architecture that you would come up with if you were starting something from scratch for AI. James Kynge A more efficient chip would help Google save costs on data centres and reduce reliance on Nvidia. It was a resounding success. Interestingly, though, Google has never sold their chips to other companies like Nvidia does. It just sells access to chips on its own servers. You can use its TPU chips to train your own AI models. Amir Salek It is one of Google's advantages to be able to provide services with economics that other people don't have access to. So that's a competitive advantage. James Kynge Google is just one company working on its own AI chips to take on Nvidia. But just how hard is it to build an AI chip to compete with one of the world's most valuable companies? Tim Bradshaw, the FT's global tech correspondent, covers the chip industry. I asked him about Nvidia's biggest challenges. Tim Bradshaw So the biggest competitive threats in the long term, I think, probably comes from Nvidia's biggest customers, the big tech companies, the Microsofts, Googles and Amazons of the world that are all working on their own AI accelerators. 
You then have AMD and perhaps at some point Intel, which are I guess Nvidia's more traditional chipmaking rivals which are doing a, you know, AMD is actually making real strides creating a product which is capable of competing on the raw horsepower with Nvidia's best chips. But you then have a lot of much, much smaller companies that are trying to come after different parts of the AI chip opportunity. And so you have companies -- like Groq, like Cerebras -- that are a lot of them trying to go after the inference opportunity, ie not the training, the building of the AI models, but how it's deployed and rolled out to customers. James Kynge So even getting a small part of Nvidia's pie could be extremely lucrative. How difficult really is it to make a new AI chip? Tim Bradshaw It is a very, very difficult business to break into because you have to be good at not just the chip design, but the state of the art of AI and those algorithms and making sure that you're building the right processor for the right application. And then you've also got to get all the developers on board with the software so that people actually know how to use those chips because it is not enough just to make a chip. The hardware by itself is not enough. James Kynge But even if you manage to develop an AI chip comparable to Nvidia's, there's a further hurdle of actually getting it made. Tim Bradshaw Even Nvidia, the world's most valuable chip company, does not make physically its own chips. Then you've gotta get it built by TSMC or somebody else. If you're a small start-up, you've got to get to the front of their queue, which is led by Nvidia and Apple, and then you've got Microsoft, Amazon, Meta, all of those guys coming in after them. Their capacity is booked up many, many years in advance.
If Nvidia is saying that the latest generation Blackwell chips are sold out through to the end of 2025, that means they're gonna be thinking about their manufacturing capacity through to the end of the decade. So for a small start-up that's trying to sort of get into that game, you've got to raise a lot of money very, very quickly. I think it's still hard to imagine that in five years' time Nvidia won't still have a very, very big portion of the market. James Kynge So the high barrier to entry that exists in the chip industry means that Nvidia may be able to maintain its market lead for at least a while longer. But there's a third factor that will become crucial in the world of AI chips. That is: what role will China play? China sees AI shaping the future, just as the US does. And in order to realise that AI future, it's building chips of its own. In the race to lead in artificial intelligence, China has a particular set of problems. The American government has effectively banned Nvidia and advanced semiconductor equipment makers from exporting to China. But that hasn't stopped the Chinese from investing huge amounts in trying to build their own AI chips. This is Dylan Patel, chief analyst at SemiAnalysis, an AI and semiconductor consulting firm. Dylan Patel What's interesting is that, at least according to the Chinese data, there's about 4,000 to 5,000 new semiconductor companies every year in China, right? This is a humongous number. And of course, most will fail. This is just the way start-ups work. But there is also a lot of innovation, new thinking and ideas coming out of this, right? Progress is still being made and China is still catching up. James Kynge Patel follows companies like the Shanghai-based SMIC. This is China's leading chip company, a huge and highly profitable corporation with deep links to the Chinese state.
He says that SMIC has been using ingenious responses to US export bans, specifically on manufacturing equipment, where the US prohibits the export of certain tools for advanced chipmaking. Dylan Patel So SMIC got around this in a very funny way by just taking these two different fabs that they had, one that's allowed to receive foreign tools and one that's not allowed to, and just building a very large bridge between them. And so for production purposes, in reality, this is effectively one large semiconductor fab. But in the eyes of the law and the regulations, they're treated as separate. James Kynge With these kinds of workarounds, it's worth asking how far behind Chinese chipmakers really are. Consider, for instance, the case of a chip made by the Chinese tech giant Huawei. Dylan Patel Huawei's Ascend 910C, which is coming out sort of the end of this year, early next year is when it starts shipping, is roughly on par with Nvidia's H100 generation. So they're basically one generation behind what Nvidia has. James Kynge Beijing is feeling some impact of these semiconductor bans, but it is nevertheless finding a way to keep climbing the technology ladder. And it turns out that one big advantage might be China's energy and grid infrastructure -- exactly the problem that American companies are running into in Phoenix. Dylan Patel China actually has a huge advantage in industrial capacity, right? Primarily because the US has a lot of restrictions around power-generation, a lot of industrial capacity limitations on building substations and transformers and buildings and so on and so forth, which, you know, everyone knows China is much faster at building, right? And so they can actually build a much larger data centre than we can, which then they can fit with more chips. James Kynge In other words, China's tech industry isn't dropping out of the chip race anytime soon.
[MUSIC PLAYING] Over the course of this series, we've heard there is no technology so geopolitical as semiconductors. They animate the phones we use, the cars we drive, the infrastructures of our networked cities and the weapons systems of our militaries. But also the best chips drive the development of future technologies from artificial intelligence, to quantum computing, to space exploration. So whoever leads in chips defines the future. This has already led countries to go to extraordinary lengths in the battle to control who gets to use and make the most advanced chips. Chips have shaped national economic policy in the US and Europe, and military strategies in the China seas. The promise of a coming AI revolution is only going to make chips more crucial to the future of the world than ever before. Both China and the US want to be the global superpower of the future. We don't know yet which will eventually prevail, but it may well depend on who has the best chips. [MUSIC PLAYING] You've been listening to Tech Tonic from the Financial Times. I'm your host, James Kynge. Check out the free links to FT articles in the show notes. Our senior producer is Edwin Lane, and our producer is Josh Gabert-Doyon, executive producer is Manuela Saragosa, mixing by Breen Turner, Sam Giovinco and Joe Salcedo. Music by Metaphor Music. Our head of FT audio is Cheryl Brumley. Special thanks to Tim Bradshaw.

As AI development surges, the demand for specialized chips is skyrocketing, with Nvidia leading the pack. However, the industry faces significant challenges in power consumption and competition.

The AI Chip Boom and Its Challenges

The artificial intelligence (AI) boom has triggered an unprecedented demand for specialized AI chips, reshaping the semiconductor industry and creating new challenges for countries and companies alike [1]. At the forefront of this revolution is Nvidia, whose dominance in the AI chip market has sparked a race among competitors to develop alternatives.

The Power Conundrum

One of the most pressing issues in the AI chip industry is the enormous power consumption of data centers housing these advanced chips. In Phoenix, Arizona, the second-largest data center market in the US, the boom is palpable [2]. Mark Bauer, a local data center expert, describes the situation as "another evolution of an industry," comparing it to the industrial revolution [2].

However, this growth comes at a cost. Frank Eickenhorst, vice-president of PhoenixNAP, explains that newer chips, especially GPUs (graphics processing units), consume significantly more energy and generate more heat than their predecessors [2]. This has led to the need for advanced cooling solutions, including water cooling systems, as traditional air cooling becomes insufficient.

The Scale of Power Demand

The power requirements for AI chips are staggering. US data centers are projected to need 35GW of power annually by the end of this decade, equivalent to the current power consumption of the entire United Kingdom

2

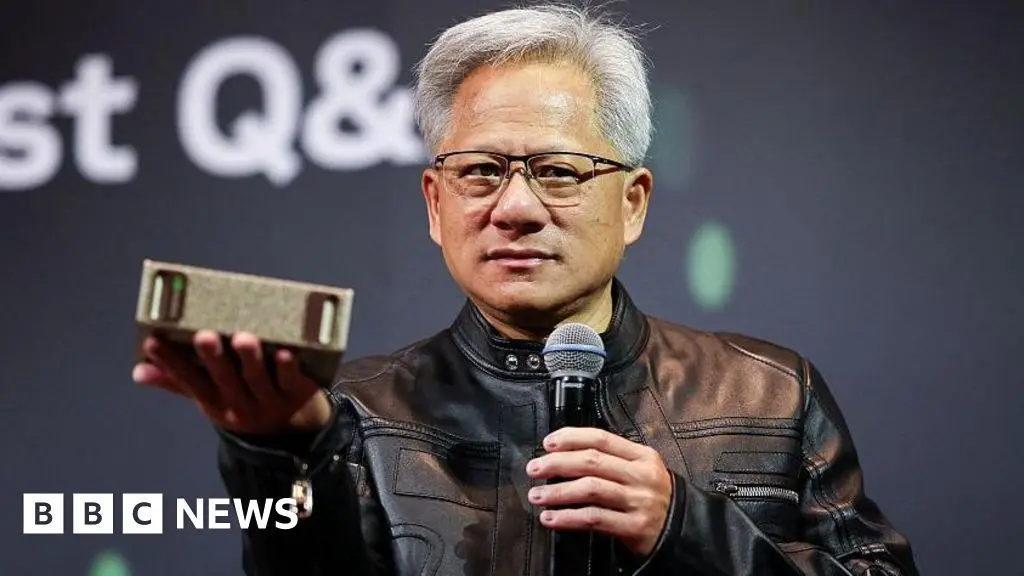

. This massive energy demand poses significant challenges for infrastructure and sustainability.Nvidia's Dominance and the Competition

Nvidia is estimated to hold about 90 per cent of the market for AI semiconductors [2], but competitors are emerging. Companies like Amazon are stepping up efforts to develop their own AI chips to rival Nvidia's offerings [1]. The competition extends to other tech giants and startups, all vying for a share of this lucrative market.

Geopolitical Implications

The AI chip race has geopolitical dimensions as well. Countries are grappling with the energy demands of these chips and the need to secure their supply chains. China, in particular, is pushing to develop its own AI chips to fuel its AI ambitions, potentially circumventing US export controls [1].

The Future of AI Chips

As the AI industry continues to evolve, several questions loom large:

- How will countries manage the enormous energy consumption of AI chips?

- Can any company successfully challenge Nvidia's dominance in the AI chip market?

- Will China succeed in developing its own advanced AI chips?

The answers to these questions will shape the future of AI development and have far-reaching implications for global technology leadership and economic competitiveness.

Summarized by Navi