Wikipedia Halts AI-Generated Article Summaries Amid Editor Backlash

14 Sources

[1]

"Yuck": Wikipedia pauses AI summaries after editor revolt

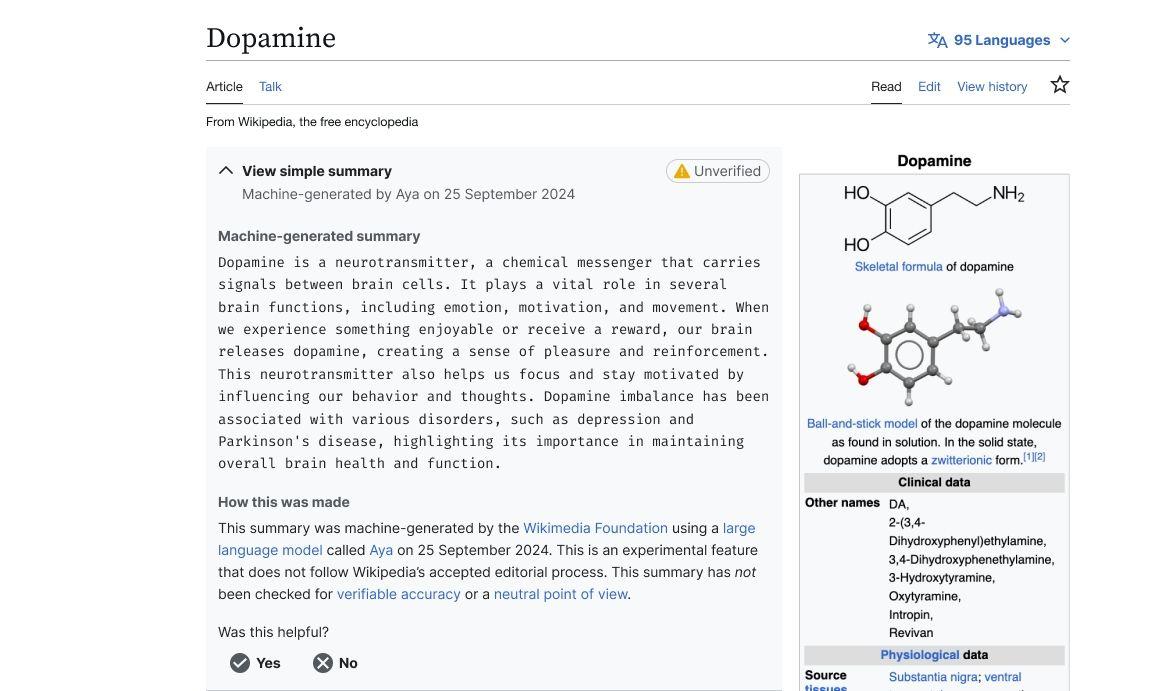

Generative AI is permeating the Internet, with chatbots and AI summaries popping up faster than we can keep track. Even Wikipedia, the vast repository of knowledge famously maintained by an army of volunteer human editors, is looking to add robots to the mix. The site began testing AI summaries in some articles over the past week, but the project has been frozen after editors voiced their opinions. And that opinion is: "yuck." The seeds of this project were planted at Wikimedia's 2024 conference, where foundation representatives and editors discussed how AI could advance Wikipedia's mission. The wiki on the so-called "Simple Article Summaries" notes that the editors who participated in the discussion believed the summaries could improve learning on Wikipedia. According to 404 Media, Wikipedia announced the opt-in AI pilot on June 2, which was set to run for two weeks on the mobile version of the site. The summaries appeared at the top of select articles in a collapsed form. Users had to tap to expand and read the full summary. The AI text also included a highlighted "Unverified" badge. Feedback from the larger community of editors was immediate and harsh. Some of the first comments were simply "yuck," with others calling the addition of AI a "ghastly idea" and "PR hype stunt." Others expounded on the issues with adding AI to Wikipedia, citing a potential loss of trust in the site. Editors work together to ensure articles are accurate, featuring verifiable information and a neutral point of view. However, nothing is certain when you put generative AI in the driver's seat. "I feel like people seriously underestimate the brand risk this sort of thing has," said one editor. "Wikipedia's brand is reliability, traceability of changes, and 'anyone can fix it.' AI is the opposite of these things."

[2]

Wikipedia pauses AI-generated summaries pilot after editors protest | TechCrunch

Wikipedia has reportedly paused an experiment that used AI to summarize articles on its platform after editors pushed back. Wikipedia announced earlier this month it was going to run the experiment for users who have the Wikipedia browser extension installed and chose to opt in, according to 404 Media. AI-generated summaries showed up at the top of every Wikipedia article with a yellow "unverified" label. Users had to click to expand and read them. Editors almost immediately criticized the pilot, raising concerns that it could damage Wikipedia's credibility. Often, the problem with AI-generated summaries is that they contain mistakes, the result of AI "hallucinations." News publications running similar experiments, like Bloomberg, have been forced to issue corrections and, in some cases, scale back their tests. While Wikimedia has paused its experiment, the platform has indicated it's still interested in AI-generated summaries for use cases like expanding accessibility.

[3]

Wikipedia Pauses AI Summaries After Pushback From Editors

Wikipedia has paused an AI summary trial following harsh criticism from the platform's human editors. As 404 Media reports, Wikipedia began testing AI-generated summaries for mobile on June 2. Users who had the Wikipedia browser extension could see a "simple summary" appear on top of every article. These summaries came with a yellow "unverified" label, and users had to tap to expand and read. An open-weight Aya model from Cohere Labs generated the summaries. It was supposed to be a two-week trial for a small set of readers (10%) who opted in. However, the Wikimedia Foundation, the non-profit that runs Wikipedia, paused the trial on the second day after backlash from editors. Much of the feedback was centered around accuracy and credibility. "This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source," said one editor. "Let's not insult our readers' intelligence and join the stampede to roll out flashy AI summaries." "With Simple Article Summaries, you propose giving one singular editor with known reliability, and NPOV issues a platform at the very top of any given article whilst giving zero editorial control to others. It reinforces the idea that Wikipedia cannot be relied on, destroying a decade of policy work," said another editor. "Yuck," "Absolutely not," and "Very bad idea" were among some of the other reactions. AI summaries have been notorious for providing inaccurate details. In January, Apple was forced to pause its AI notification summaries after it was caught spreading fake news. More recently, Bloomberg was forced to correct over three dozen AI summaries on articles published this year. The reason? Inaccuracy, again. Wikipedia has paused the summaries for now, but it won't stop pursuing them.
The platform will continue testing features that make its content accessible to people with different reading levels, but human editors will remain pivotal to deciding what information appears on Wikipedia, a spokesperson tells 404Media.

[4]

Wikipedia Tried to Add AI Summaries to Its Articles But Editors Revolted

Wikipedia has long allowed its (human) users to add and edit entries. Recently, it rolled out AI summaries on articles, but pushback from editors has prompted the platform to halt the feature. As 404 Media reports, Wikipedia began testing AI-generated summaries for mobile on June 2. Users with the Wikipedia browser extension could see a "simple summary" on top of every article. They came with a yellow "unverified" label, which users had to tap to expand and read. An open-weight Aya model from Cohere Labs generated the summaries. It was supposed to be a two-week trial for a small set of readers (10%) who opted in. However, the Wikimedia Foundation, the nonprofit that runs Wikipedia, paused the trial on the second day after backlash from editors. Much of the feedback was centered around accuracy and credibility. "This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source," said one editor. "Let's not insult our readers' intelligence and join the stampede to roll out flashy AI summaries." "With Simple Article Summaries, you propose giving one singular editor with known reliability, and NPOV [neutral point of view] issues a platform at the very top of any given article whilst giving zero editorial control to others. It reinforces the idea that Wikipedia cannot be relied on, destroying a decade of policy work," said another editor. "Yuck," "Absolutely not," and "Very bad idea" were among some of the other reactions. AI summaries are known for providing inaccurate details. In January, Apple was forced to pause its news-focused AI notification summaries after it was caught spreading fake news. More recently, Bloomberg was forced to correct over three dozen AI summaries on articles published this year. The reason? Inaccuracy, again.
Wikipedia has paused the summaries for now, but the platform will continue testing features that make its content accessible to people with different reading levels. Human editors will remain pivotal to deciding what information appears on Wikipedia, a spokesperson tells 404Media.

[5]

Wikipedia cancels plan to test AI summaries after editors skewer the idea

Wikipedia is backing off a plan to test AI article summaries. Earlier this month, the platform announced plans to trial the feature for about 10 percent of mobile web visitors. To say they weren't well-received by editors would be an understatement. The Wikimedia Foundation (WMF) then changed plans and cancelled the test. The AI summaries would have appeared at the top of articles for 10 percent of mobile users. Readers would have had to opt in to see them. The AI-generated summaries only appeared "on a set of articles" for the two-week trial period. Editor comments in the WMF's announcement (via 404 Media) ranged from "Yuck" to "Grinning with horror." One editor wrote, "Just because Google has rolled out its AI summaries doesn't mean we need to one-up them. I sincerely beg you not to test this, on mobile or anywhere else. This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source." "Wikipedia has in some ways become a byword for sober boringness, which is excellent," the editor continued. "Let's not insult our readers' intelligence and join the stampede to roll out flashy AI summaries." Editors' gripes weren't limited to the idea. They also criticized the nonprofit for excluding them from the planning phase. "You also say this has been 'discussed,' which is thoroughly laughable as the 'discussion' you link to has exactly one participant, the original poster, who is another WMF employee," an editor wrote. A Wikimedia Foundation spokesperson shared the following statement with Engadget: "The Wikimedia Foundation has been exploring ways to make Wikipedia and other Wikimedia projects more accessible to readers globally. This two-week, opt-in experiment was focused on making complex Wikipedia articles more accessible to people with different reading levels. For the purposes of this experiment, the summaries were generated by an open-weight Aya model by Cohere. 
It was meant to gauge interest in a feature like this, and to help us think about the right kind of community moderation systems to ensure humans remain central to deciding what information is shown on Wikipedia. For these experiments, our usual process includes discussing with volunteers (who create and curate all the information on Wikipedia) to make decisions on whether and how to proceed with building features. The discussion around this feature is an example of this process, where we built out a prototype of an idea and reached out to the Wikipedia volunteer community for their thoughts. It is common to receive a variety of feedback from volunteers, and we incorporate it in our decisions, and sometimes change course. We welcome such thoughtful feedback -- this is what continues to make Wikipedia a truly collaborative platform of human knowledge. As shared in our latest post on the community discussion page, we do not have any plans to continue the experiment at the moment, as we continue to assess and discuss the feedback we have already received from volunteers." In the "discussion" page, the organization explained that it wanted to cater to its audience's needs. "Many readers need some simplified text in addition to the main content," a WMF employee wrote. "In previous research, we heard that readers wanted to have an option to get a quick overview of a topic prior to jumping into reading the full article." The organization didn't rule out future uses of AI. But they said editors won't be left in the dark next time. "Bringing generative AI into the Wikipedia reading experience is a serious set of decisions, with important implications, and we intend to treat it as such," the spokesperson told 404 Media. "We do not have any plans for bringing a summary feature to the wikis without editor involvement." Update, June 13, 2025, 12:52PM ET: This story has been corrected to note that Wikipedia never actually started its AI summary test.
The plan was announced, but cancelled before it took place. A statement from the Wikimedia Foundation has also been added, and the headline has been updated as well.

[6]

Wikipedia pauses AI summaries after editors skewer the idea

Wikipedia is backing off AI article summaries... for now. Earlier this month, the platform trialed the feature in its mobile app. To say they weren't well-received by editors would be an understatement. The Wikimedia Foundation (WMF) paused the test a day later. The AI summaries appeared at the top of articles for 10 percent of mobile users. Readers had to opt in to see them. The AI-generated summaries only appeared "on a set of articles" for the two-week trial period. Editor comments in the WMF's announcement (via 404 Media) ranged from "Yuck" to "Grinning with horror." One editor wrote, "Just because Google has rolled out its AI summaries doesn't mean we need to one-up them. I sincerely beg you not to test this, on mobile or anywhere else. This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source." "Wikipedia has in some ways become a byword for sober boringness, which is excellent," the editor continued. "Let's not insult our readers' intelligence and join the stampede to roll out flashy AI summaries." Editors' gripes weren't limited to the idea. They also criticized the nonprofit for excluding them from the planning phase. "You also say this has been 'discussed,' which is thoroughly laughable as the 'discussion' you link to has exactly one participant, the original poster, who is another WMF employee," an editor wrote. In a statement to 404 Media, a WMF spokesperson said the backlash influenced its decision. "It is common to receive a variety of feedback from volunteers, and we incorporate it in our decisions, and sometimes change course," the spokesperson stated. "We welcome such thoughtful feedback -- this is what continues to make Wikipedia a truly collaborative platform of human knowledge." In the "discussion" page, the organization explained that it wanted to cater to its audience's needs. "Many readers need some simplified text in addition to the main content," a WMF employee wrote. 
"In previous research, we heard that readers wanted to have an option to get a quick overview of a topic prior to jumping into reading the full article." The WMF employee stated that the average reading level for adult native English speakers is that of a 14- or 15-year-old. "It may be lower for non-native English speakers who regularly read English Wikipedia," they added. The organization didn't rule out future uses of AI. But they said editors won't be left in the dark next time. "Bringing generative AI into the Wikipedia reading experience is a serious set of decisions, with important implications, and we intend to treat it as such," the spokesperson told 404 Media. "We do not have any plans for bringing a summary feature to the wikis without editor involvement."

[7]

Wikipedia Tries to Calm Fury Over New AI-Generated Summaries Proposal

The Wikimedia Foundation, the organization behind Wikipedia, made the unfortunate decision to announce the trial of a new AI-fueled article generator this week. The backlash from the site's editors was so swift and so vengeful that the organization quickly walked back its idea, announcing a temporary "pause" of the new feature. A spokesperson on behalf of the Foundation -- which is largely separate from the decentralized community of editors that populate the site with articles -- explained last week that, in an effort to make wikis "more accessible to readers globally through different projects around content discovery," the organization planned to trial "machine-generated, but editor moderated, simple summaries for readers." Like many other organizations that have been plagued by new automated features, Wikipedia's rank and file were quick to anger over the experimental new tool. The responses, which are posted to the open web, are truly something to behold. "What the hell? No, absolutely not," said one editor. "Not in any form or shape. Not on any device. Not on any version. I don't even know where to begin with everything that is wrong with this mindless PR hype stunt." "This will destroy whatever reputation for accuracy we currently have," another editor said. "People aren't going to read past the AI fluff to see what we really meant." Yet another editor was even more vehement: "Keep AI out of Wikipedia. That is all. WMF staffers looking to pad their resumes with AI-related projects need to be looking for new employers." "A truly ghastly idea," said another. "Since all WMF proposals steamroller on despite what the actual community says, I hope I will at least see the survey and that -- unlike some WMF surveys -- it includes one or more options to answer 'NO'." "Are y'all (by that, I mean WMF) trying to kill Wikipedia? Because this is a good step in that way," another editor said.
"We're trying to keep AI out of Wikipedia, not have the powers that be force it on us and tell us we like it." The forum is littered with countless other negative responses from editors who expressed a categorical rejection of the tool. Not long afterward, the organization paused the feature, 404 Media reported. "The Wikimedia Foundation has been exploring ways to make Wikipedia and other Wikimedia projects more accessible to readers globally," a Wikimedia Foundation spokesperson told 404 Media. "This two-week, opt-in experiment was focused on making complex Wikipedia articles more accessible to people with different reading levels. For the purposes of this experiment, the summaries were generated by an open-weight Aya model by Cohere. It was meant to gauge interest in a feature like this, and to help us think about the right kind of community moderation systems to ensure humans remain central to deciding what information is shown on Wikipedia."

[8]

Wikipedia Pauses AI-Generated Summaries After Editor Backlash

"This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source," one Wikipedia editor said. The Wikimedia Foundation, the nonprofit organization which hosts and develops Wikipedia, has paused an experiment that showed users AI-generated summaries at the top of articles after an overwhelmingly negative reaction from the Wikipedia editors community. "Just because Google has rolled out its AI summaries doesn't mean we need to one-up them, I sincerely beg you not to test this, on mobile or anywhere else," one editor said in response to Wikimedia Foundation's announcement that it will launch a two-week trial of the summaries on the mobile version of Wikipedia. "This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source. Wikipedia has in some ways become a byword for sober boringness, which is excellent. Let's not insult our readers' intelligence and join the stampede to roll out flashy AI summaries. Which is what these are, although here the word 'machine-generated' is used instead." Two other editors simply commented, "Yuck." For years, Wikipedia has been one of the most valuable repositories of information in the world, and a laudable model for community-based, democratic internet platform governance. Its importance has only grown in the last couple of years during the generative AI boom as it's one of the only internet platforms that has not been significantly degraded by the flood of AI-generated slop and misinformation. As opposed to Google, which since embracing generative AI has instructed its users to eat glue, Wikipedia's community has kept its articles relatively high quality. As I recently reported last year, editors are actively working to filter out bad, AI-generated content from Wikipedia. 
A page detailing the AI-generated summaries project, called "Simple Article Summaries," explains that it was proposed after a discussion at Wikimedia's 2024 conference, Wikimania, where "Wikimedians discussed ways that AI/machine-generated remixing of the already created content can be used to make Wikipedia more accessible and easier to learn from." Editors who participated in the discussion thought that these summaries could improve the learning experience on Wikipedia, where some article summaries can be quite dense and filled with technical jargon, but that AI features needed to be clearly labeled as such and that users needed an easy way to flag issues with "machine-generated/remixed content once it was published or generated automatically." In one experiment where summaries were enabled for users who have the Wikipedia browser extension installed, the generated summary showed up at the top of the article, which users had to click to expand and read. That summary was also flagged with a yellow "unverified" label. Wikimedia announced that it was going to run the generated summaries experiment on June 2, and was immediately met with dozens of replies from editors who said "very bad idea," "strongest possible oppose," "Absolutely not," etc. "Yes, human editors can introduce reliability and NPOV [neutral point-of-view] issues. But as a collective mass, it evens out into a beautiful corpus," one editor said. "With Simple Article Summaries, you propose giving one singular editor with known reliability and NPOV issues a platform at the very top of any given article, whilst giving zero editorial control to others. It reinforces the idea that Wikipedia cannot be relied on, destroying a decade of policy work. It reinforces the belief that unsourced, charged content can be added, because this platforms it. I don't think I would feel comfortable contributing to an encyclopedia like this.
No other community has mastered collaboration to such a wondrous extent, and this would throw that away." A day later, Wikimedia announced that it would pause the launch of the experiment, but indicated that it's still interested in AI-generated summaries. "The Wikimedia Foundation has been exploring ways to make Wikipedia and other Wikimedia projects more accessible to readers globally," a Wikimedia Foundation spokesperson told me in an email. "This two-week, opt-in experiment was focused on making complex Wikipedia articles more accessible to people with different reading levels. For the purposes of this experiment, the summaries were generated by an open-weight Aya model by Cohere. It was meant to gauge interest in a feature like this, and to help us think about the right kind of community moderation systems to ensure humans remain central to deciding what information is shown on Wikipedia." "It is common to receive a variety of feedback from volunteers, and we incorporate it in our decisions, and sometimes change course," the Wikimedia Foundation spokesperson added. "We welcome such thoughtful feedback -- this is what continues to make Wikipedia a truly collaborative platform of human knowledge." "Reading through the comments, it's clear we could have done a better job introducing this idea and opening up the conversation here on VPT back in March," a Wikimedia Foundation project manager said. VPT, or "village pump technical," is where The Wikimedia Foundation and the community discuss technical aspects of the platform. "As internet usage changes over time, we are trying to discover new ways to help new generations learn from Wikipedia to sustain our movement into the future. In consequence, we need to figure out how we can experiment in safe ways that are appropriate for readers and the Wikimedia community. 
Looking back, we realize the next step with this message should have been to provide more of that context for you all and to make the space for folks to engage further." The project manager also said that "Bringing generative AI into the Wikipedia reading experience is a serious set of decisions, with important implications, and we intend to treat it as such," and that "We do not have any plans for bringing a summary feature to the wikis without editor involvement. An editor moderation workflow is required under any circumstances, both for this idea, as well as any future idea around AI summarized or adapted content."

[9]

Wikipedia halts AI plans as editors revolt

An experiment adding AI-generated summaries to the top of Wikipedia pages has been paused, following fierce backlash from its community editors. The Wikimedia Foundation, the nonprofit behind Wikipedia, confirmed this to 404 Media, which spotted the discussion on a page detailing the project. Introduced as "Simple Article Summaries" by the Web Team at Wikimedia, the project proposed AI-generated summaries as a way "to make the wikis more accessible to readers globally." The team intended to launch a two-week experiment to 10 percent of readers on mobile pages to gauge interest and engagement. They emphasized that the summaries would involve editor moderation, but editors still responded with outrage. "I sincerely beg you not to test this, on mobile or anywhere else. This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source," one editor responded (emphasis theirs). "Wikipedia has in some ways become a byword for sober boringness, which is excellent. Let's not insult our readers' intelligence and join the stampede to roll out flashy AI summaries." Others simply responded, "yuck" and called it a "truly ghastly idea." Several editors called Wikipedia "the last bastion" of the web that's held out against integrating AI-generated summaries. Behind the scenes, it has proactively offered up datasets to offset AI bots vacuuming up the content of its pages and overloading its servers. Editors have been working hard to clean up the deluge of AI-generated content posted on Wikipedia pages. "Haven't we been getting good press for being a more reliable alternative to AI summaries in search engines? If they're getting the wrong answers, let's not copy their homework," said one editor. Another pointed out that the human-powered Wikipedia has been so good that search engines like Google rely on them.
"I see little good that can come from mixing in hallucinated AI summaries next to our high quality summaries, when we can just have our high quality summaries by themselves." Wikipedia was created as a crowdsourced, neutral point of view, providing a reliable and informative source for anyone with internet access. Editors responding on the discussion page noted that Generative AI has an inherent hallucination problem, which not only could undermine that credibility, but provide inaccurate information on topics that require nuance and context that could be missing from simple summaries. Some of the editors checked how the AI model (Aya by Cohere) summarized certain topics like dopamine and Zionism and found inaccuracies. One editor even accused Wikimedia Foundation staffers of "looking to pad their resumes with AI-related projects." Eventually, the product manager who introduced the summary feature chimed in and said they will "pause the launch of the experiment so that we can focus on this discussion first and determine next steps together."

[10]

Wikipedia editors revolt over "truly ghastly" plan for AI slop -- they're winning (for now)

Editors weren't exactly thrilled about the prospect of AI slop -- low-quality, AI-generated writing -- appearing at the top of entry pages. Though every digital organization seems to be rushing to hop onto the AI bandwagon, Wikipedia is one of the few that's putting on the brakes... at least for now. As reported this week by 404 Media, the site has paused a planned two-week trial of AI-generated article summaries after receiving intense backlash from its editor community. The experiment, which was initially scheduled to begin on June 2 and announced on Wikipedia's Village Pump -- a gathering place for editors -- received hundreds of comments, nearly all of them saying the same thing: Don't do it. Wikipedia's AI-generated summaries, called "simple summaries," were explained as "machine-generated, but editor-moderated, simple summaries for readers." The idea behind it was simple and something we've all seen before: take existing Wikipedia text and simplify it for readers. Though Wikipedia wasn't launching the feature widely, it planned to run a two-week experiment on the mobile website, where 10% of readers would be given the option to see pre-generated summaries on a set of articles. Wikipedia's initial plan was to turn the feature off after two weeks and then use the data it collected to see if users were interested in it. As shared by 404 Media, the simple summaries would show up at the top of an article, and users would need to click to expand and read them. Like many AI features, it wasn't exactly well-received. Within hours of the announcement, Wikipedia's human volunteer editors flooded the thread with comments. One simply wrote, "Yuck," while another said they were "grinning with horror," adding, "Just because Google has rolled out its AI summaries doesn't mean we need to one-up them." And the comparison isn't exactly off. 
Though Google seems to have no plans to hit pause on its AI features, its AI Overviews have gone viral multiple times (for all the wrong reasons). Just a couple of weeks ago, an AI Overview told people it's still 2024 with complete confidence when asked what year it is. AI Overviews have advised people to eat glue and rocks, add glue to pizza, and have even previously given explanations for made-up idioms. The same Wikipedia editor further added that AI summaries "would do immediate and irreversible harm" to Wikipedia readers and the site's overall reputation as "a decently trustworthy and serious source." Wikipedia's plan to roll out AI summaries wasn't the only thing editors had gripes about, though. The announcement, which was made by someone on behalf of Wikipedia's Web Team, mentioned that "simple summaries" is one of the ideas they've been discussing. It included a link to the "discussion," where the team behind Wikipedia explained that the idea was to cater to its audience's needs, and that "many readers need some simplified text in addition to the main content." An editor pointed out how "laughable" it was in the comments, saying "the 'discussion' you link to has exactly one participant, the original poster, who is another WMF employee." In an email sent by a Wikimedia Foundation spokesperson to 404 Media, the spokesperson stated, "Reading through the comments, it's clear we could have done a better job introducing this idea and opening up the conversation here on VPT back in March." They further explained, "We do not have any plans for bringing a summary feature to the wikis without editor involvement," suggesting that though the feature has been paused, it hasn't been scrapped entirely. So while they've been paused for now, the AI-generated summaries will likely return, but with a bit more input from the people who actually write Wikipedia.

[11]

Wikipedia pauses AI summary experiment after editors say it 'would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source'

The Wikipedia community appears less than pleased with AI integration efforts to date. AI summaries are all up in our search engines these days, and I think it's fair to say the response has been varied to date. The Wikipedia editor community, however, appears to have taken a strong stance on a recently proposed experiment by the Wikimedia Foundation to add AI-generated summaries at the top of Wiki articles, causing the test to be paused for now.

"This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source", said Wikipedia editor Cremastura (via 404 Media). "Wikipedia has in some ways become a byword for sober boringness, which is excellent. Let's not insult our readers' intelligence and join the stampede to roll out flashy AI summaries. Which is what these are, although here the word 'machine-generated' is used instead."

The comments came in response to a Wikipedia village pump announcement from the WMF web team, informing editors that a discussion was underway regarding the presentation of "machine-generated, but editor-moderated, simple summaries for readers." Among the proposals was a planned two-week experiment on the mobile website, wherein 10% of users would be given the opportunity to opt in to pre-generated summaries on a set of articles, before the experiment would be turned off and used to collect data on the response.

To be fair, that seems like a pretty tentative step into AI summary testing on the Wikimedia Foundation's part, but the response was mostly negative. Several editors simply commented "yuck" to the proposal, with one calling it a "truly ghastly idea."

However, some seemed to take a more positive view: "I'm glad that WMF is thinking about a solution [to] a key problem on Wikipedia: Most of our technical articles are way too difficult", writes user Femke. "Maybe we can use it as inspiration for writing articles appropriate for our broader audience."

The announcement appears to be a continuation of the WMF's proposal earlier this year to integrate AI into Wikipedia's complex ecosystem. In May the WMF announced it was implementing a strategy to develop, host, and use AI in product infrastructure and research "at the direct service of the editors", which seems to have been received by the community with a similar degree of trepidation.

Speaking to 404 Media, a Wikimedia Foundation manager said: "Reading through the comments, it's clear we could have done a better job introducing this idea", before confirming that the test has been pulled while it evaluates the feedback.

For those familiar with the intricate debates that go on behind the scenes of even the seemingly most straightforward Wikipedia articles, all this internal discussion probably comes as no surprise. Wikipedia has thrived on lively discourse and editor debate regarding even the smallest of details of its pages, and over the years has become one of the internet's primary sources of reference -- with the English version said to receive more than 4,000 page views every second. Yep, I just sourced Wikipedia statistics from a Wikipedia page discussing Wikipedia. How's that for a fractal of dubiosity?

Back when I were a lad, I was discouraged from using Wikipedia as a reference in my studies, as in the dark days of the early 2000s it was regarded as untrustworthy due to its reliance on crowdsourced information. However, although it's probably still best not to cite it as a reliable source in your college essays, over the years that perception has changed -- with strict moderation (and rigorous guidelines) helping to cement its reputation as one of the more reliable information outlets on the internet.

That being said, in a world where the very sources it relies on are increasingly affected by the rise of AI-generated content, I do wonder how long it will be before even this stalwart of internet reference succumbs to some of the downsides of modern AI -- in ways that many of its editors (and those of us that use it on the daily) would rather it didn't.

[12]

Wikipedia Won't Add AI-Generated Slop After Editors Yelled At Them

The Wikimedia Foundation, a nonprofit group that hosts, develops, and controls Wikipedia, has announced that it won't be moving forward with plans to add AI-generated summaries to articles after it received an overwhelmingly negative reaction from its army of dedicated (and unpaid) human editors.

As first reported by 404 Media, Wikimedia quietly announced plans to test out AI-generated summaries on the popular and free online encyclopedia, which has become an important and popular bastion of knowledge and information on the modern internet. In a page posted on June 2 in the backrooms of Wikipedia titled "Simple Article Summaries," a Wikimedia rep explained that after discussions about AI at a recent 2024 Wiki conference, the nonprofit group was going to try a two-week test of machine-generated summaries. These summaries would be located at the top of the page and would be marked as unverified. Wikimedia intended to start offering these summaries to a small subset of mobile users starting on June 2.

The plan to add AI-generated content to the top of pages received an extremely negative reaction from editors in the comments below the announcement. The first replies from two different editors were a simple "Yuck." Another followed up with: "Just because Google has rolled out its AI summaries doesn't mean we need to one-up them. I sincerely beg you not to test this, on mobile or anywhere else. This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source."

"Nope," said another editor. "I don't want an additional floating window of content for editors to argue over. Not helpful or better than a simple article lead."

A day later, after many, many editors continued to respond negatively to the idea, Wikimedia backed down and canceled its plans to add AI-generated summaries. Editors are the lifeblood of the platform, and if too many of them get mad and leave, entire sections of Wikipedia would rot and fail quickly, likely leading to the slow death of the site.

"The Wikimedia Foundation has been exploring ways to make Wikipedia and other Wikimedia projects more accessible to readers globally," a Wikimedia Foundation rep told 404 Media. "This two-week, opt-in experiment was focused on making complex Wikipedia articles more accessible to people with different reading levels. For the purposes of this experiment, the summaries were generated by an open-weight Aya model by Cohere. It was meant to gauge interest in a feature like this, and to help us think about the right kind of community moderation systems to ensure humans remain central to deciding what information is shown on Wikipedia."

"It is common to receive a variety of feedback from volunteers, and we incorporate it in our decisions, and sometimes change course. We welcome such thoughtful feedback -- this is what continues to make Wikipedia a truly collaborative platform of human knowledge."

In other words: We didn't give anyone a heads up about our dumb AI plans and got yelled at by a bunch of people online for 24 hours, and we won't be doing the bad thing anymore.

Wikipedia editors have been fighting the good fight against AI slop flooding what has quickly become one of the last places on the internet to not be covered in ads, filled with junk, or locked behind an excessively expensive paywall. It is a place that contains billions of words written by dedicated humans around the globe. It's a beautiful thing. And if Wikimedia Foundation ever fucks that up with crappy AI-generated garbage, it will be the modern digital equivalent of the Library of Alexandria burning to the ground. So yeah, let's not do that, okay?

[13]

Wikipedia Halts AI Summaries After Editors Warn Of 'Irreversible Harm' -- Say No 'Need To One-Up' With Google - Alphabet (NASDAQ:GOOG)

Wikipedia has reportedly paused its experiment with artificial intelligence-generated summaries after facing overwhelming backlash from its editor community, who argued the feature would harm the platform's credibility.

What Happened: The Wikimedia Foundation had planned to test AI-generated summaries on Wikipedia articles to make content more accessible to users with varying reading levels, reported 404 Media. The feature, which used an AI model by Cohere, was intended to simplify articles and display summaries at the top of pages. However, the trial sparked significant opposition from Wikipedia editors, who criticized the tool for potentially undermining the platform's reputation as a reliable, community-driven resource.

One editor voiced their concern, saying, "Just because Google has rolled out its AI summaries doesn't mean we need to one-up them," adding, "This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source."

In response to the criticism, the Wikimedia Foundation announced the pause of the experiment, though it pointed out that AI features could still be part of future developments, provided that editorial oversight remains central. "We do not have any plans for bringing a summary feature to the wikis without editor involvement. An editor moderation workflow is required under any circumstances, both for this idea, as well as any future idea around AI, summarized or adapted content," a Wikipedia project manager told the publication.

Why It's Important: Earlier this year, Apple Inc. received flak after its AI news summaries feature generated inaccurate results on iPhones. Following the criticism, Apple also paused the feature, saying, "We are working on improvements and will make them available in a future software update."

Last year, Alphabet Inc.'s Google also received backlash after it rolled out "AI Overviews," formerly known as Search Generative Experience (SGE). One notable example involved a user asking how to stop cheese from sliding off pizza. In response, Google's AI recommended mixing glue into the sauce -- a suggestion it pulled from a Reddit comment without recognizing the sarcasm.

Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.

[14]

Wikipedia Pauses AI Summary Experiment After Editor Backlash

Wikipedia paused an experiment that used AI to summarise articles on its website, following pushback from editors. According to a TechCrunch report, the online encyclopaedia ran the experiment for users who had the Wikipedia browser extension installed and chose to opt in. As part of the experiment, AI-generated summaries would appear at the top of every Wikipedia article alongside a yellow "unverified" label. Users had to click to expand and read them. The experiment received pushback from editors, who argued that it could damage Wikipedia's credibility.

The online encyclopaedia released an AI strategy in April this year, one which it claimed puts human editors first. Wikipedia stated that it would invest in AI tools specifically designed to support its human editors. It prioritised four key areas where AI could make the biggest difference.

Interestingly, Wikipedia did not mention AI-generated summaries of articles as a possible use case for the technology. If anything, the encyclopaedia claimed to prioritise using AI to assist human editors rather than to create knowledge. When discussing the rise in AI-generated summaries, the foundation listed a number of downsides, such as: "limited human oversight, vulnerability to biases, hallucinations, potential for misinformation and disinformation spread, limited local context, significant invisible human labor, and weak ability to handle nuanced topics." It had also considered the potential for AI-generated content to flood the website, outstrip the ability of Wikipedia editors to properly vet it and lead to widely spread misinformation.

The most famous example of inaccurate AI-generated summaries was the controversy surrounding Google's AI Overviews. The feature, which is supposed to summarise results from a Google search and link to sources, was mocked for giving strange and nonsensical answers. The problem was that the feature was unable to differentiate between satirical and genuine sources or understand the context in which information may appear. It also often drew information from satirical forum responses or other unreliable sources.


Wikipedia's experiment with AI-generated article summaries faces strong opposition from editors, leading to the suspension of the pilot program due to concerns over accuracy and credibility.

Wikipedia's AI Summary Experiment

Wikipedia, the collaborative online encyclopedia, recently attempted to introduce AI-generated summaries for its articles. The experiment, announced on June 2, 2025, was designed to provide simplified overviews of complex topics for readers with different reading levels [1][2]. However, the trial was short-lived, lasting only two days before being suspended due to significant pushback from the platform's volunteer editors [3].


The AI Summary Pilot

The pilot program aimed to display AI-generated summaries at the top of select Wikipedia articles for 10% of mobile users who opted in via a browser extension [4]. These summaries, generated by an open-weight Aya model from Cohere Labs, were presented with a yellow "unverified" label and required users to tap to expand and read the full text [3][5].


Editor Backlash and Concerns

The response from Wikipedia's editor community was swift and overwhelmingly negative. Editors expressed concerns about the potential damage to Wikipedia's reputation and the accuracy of the content. Some of the feedback included:

- "Yuck" and "Absolutely not" [3]
- "This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source" [4]
- "It reinforces the idea that Wikipedia cannot be relied on, destroying a decade of policy work" [3]

Editors highlighted the risks associated with AI-generated content, citing recent incidents where other platforms had to retract or correct AI-produced summaries due to inaccuracies [3][4].

Wikimedia Foundation's Response

In light of the strong opposition, the Wikimedia Foundation, the non-profit organization that runs Wikipedia, decided to pause the experiment. A spokesperson stated that they welcome the thoughtful feedback and emphasized that human editors will remain central to deciding what information appears on Wikipedia [5].

The Foundation acknowledged the need for better communication and collaboration with the editor community in future AI-related initiatives. They stated, "Bringing generative AI into the Wikipedia reading experience is a serious set of decisions, with important implications, and we intend to treat it as such" [5].

Future of AI in Wikipedia


While the AI summary trial has been halted, the Wikimedia Foundation has not entirely abandoned the idea of using AI to enhance Wikipedia's accessibility. They plan to continue exploring ways to make content more accessible to readers with different reading levels, but with a renewed focus on involving human editors in the process [2][5].

This incident highlights the ongoing challenges of integrating AI technologies into established platforms, especially those that rely heavily on human expertise and collaboration. As AI continues to evolve, finding the right balance between technological innovation and maintaining the trust and quality standards of platforms like Wikipedia will remain a critical challenge.

