OpenAI launches ChatGPT Health to connect medical records to AI amid accuracy concerns

54 Sources

[1]

ChatGPT Health lets you connect medical records to an AI that makes things up

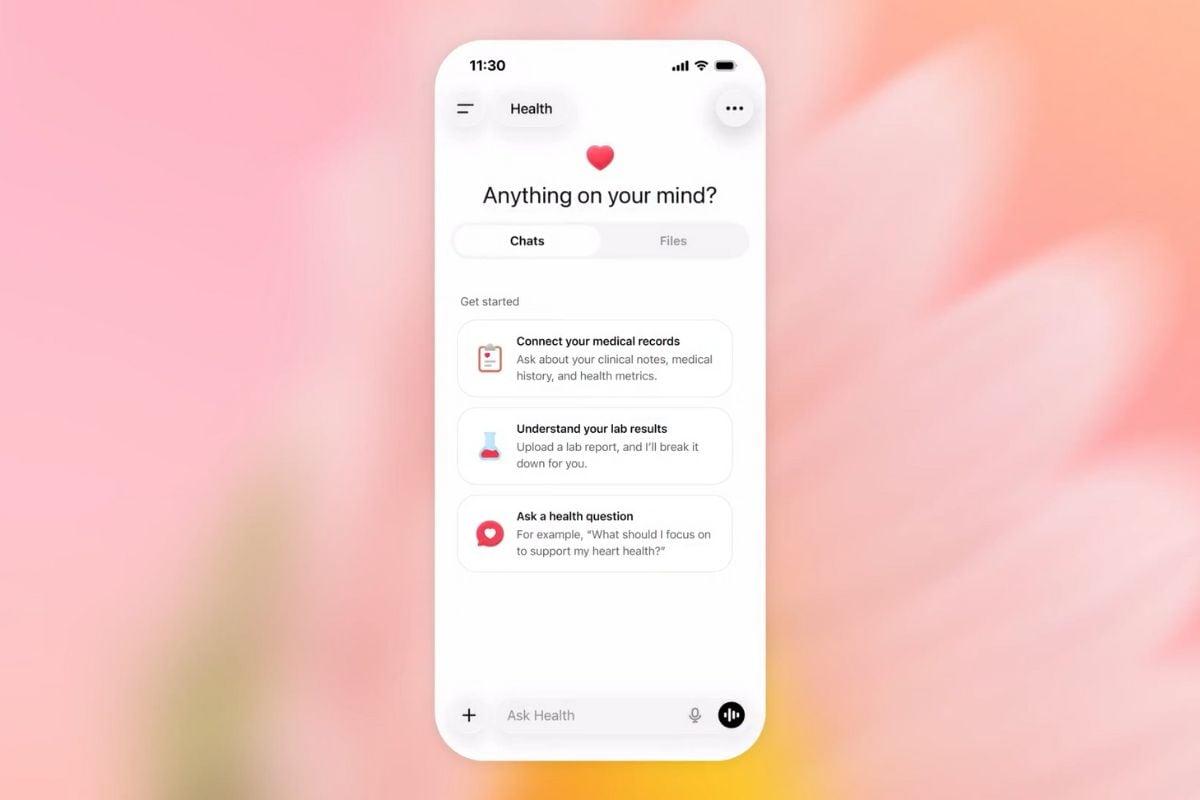

On Wednesday, OpenAI announced ChatGPT Health, a dedicated section of the AI chatbot designed for "health and wellness conversations" intended to connect a user's health and medical records to the chatbot in a secure way. But mixing generative AI technology like ChatGPT with health advice or analysis of any kind has been a controversial idea since the launch of the service in late 2022. Just days ago, SFGate published an investigation detailing how a 19-year-old California man died of a drug overdose in May 2025 after 18 months of seeking recreational drug advice from ChatGPT. It's a telling example of what can go wrong when chatbot guardrails fail during long conversations and people follow erroneous AI guidance. Despite the known accuracy issues with AI chatbots, OpenAI's new Health feature will allow users to connect medical records and wellness apps like Apple Health and MyFitnessPal so that ChatGPT can provide personalized health responses like summarizing care instructions, preparing for doctor appointments, and understanding test results. OpenAI says more than 230 million people ask health questions on ChatGPT each week, making it one of the chatbot's most common use cases. The company worked with more than 260 physicians over two years to develop ChatGPT Health and says conversations in the new section will not be used to train its AI models. "ChatGPT Health is another step toward turning ChatGPT into a personal super-assistant that can support you with information and tools to achieve your goals across any part of your life," wrote Fidji Simo, OpenAI's CEO of applications, in a blog post. But despite OpenAI's talk of supporting health goals, the company's terms of service directly state that ChatGPT and other OpenAI services "are not intended for use in the diagnosis or treatment of any health condition." It appears that policy is not changing with ChatGPT Health. OpenAI writes in its announcement, "Health is designed to support, not replace, medical care. 
It is not intended for diagnosis or treatment. Instead, it helps you navigate everyday questions and understand patterns over time -- not just moments of illness -- so you can feel more informed and prepared for important medical conversations."

A cautionary tale

The SFGate report on Sam Nelson's death illustrates why maintaining that disclaimer legally matters. According to chat logs reviewed by the publication, Nelson first asked ChatGPT about recreational drug dosing in November 2023. The AI assistant initially refused and directed him to health care professionals. But over 18 months of conversations, ChatGPT's responses reportedly shifted. Eventually, the chatbot told him things like "Hell yes -- let's go full trippy mode" and recommended he double his cough syrup intake. His mother found him dead from an overdose the day after he began addiction treatment. While Nelson's case did not involve the analysis of doctor-sanctioned health care instructions like the type ChatGPT Health will link to, his case is not unique: many people have been misled by chatbots that provide inaccurate information or encourage dangerous behavior, as we have covered in the past. That's because AI language models can easily confabulate, generating plausible but false information in a way that makes it difficult for some users to distinguish fact from fiction. The AI models behind services like ChatGPT use statistical relationships in training data (like the text from books, YouTube transcripts, and websites) to produce plausible responses rather than necessarily accurate ones. Moreover, ChatGPT's outputs can vary widely depending on who is using the chatbot and what has previously taken place in the user's chat history (including notes about previous chats). Then there's the issue of unreliable training data, which companies like OpenAI use to create the models. Fundamentally, all major AI language models rely on information pulled from sources collected online. 
Rob Eleveld of the AI regulatory watchdog Transparency Coalition told SFGate: "There is zero chance, zero chance, that the foundational models can ever be safe on this stuff. Because what they sucked in there is everything on the Internet. And everything on the Internet is all sorts of completely false crap." So when summarizing a medical report or analyzing a test result, ChatGPT could make a mistake that the user, not being trained in medicine, would not be able to spot. Even with these hazards, it's likely that the quality of health-related chats with the AI bot can vary dramatically between users because ChatGPT's output partially mirrors the style and tone of what users feed into the system. For example, anecdotally, some users claim to find ChatGPT useful for medical issues, though some successes for a few users who know how to navigate the bot's hazards do not necessarily mean that relying on a chatbot for medical analysis is wise for the general public. That's doubly true in the absence of government regulation and safety testing. In a statement to SFGate, OpenAI spokesperson Kayla Wood called Nelson's death "a heartbreaking situation" and said the company's models are designed to respond to sensitive questions "with care." ChatGPT Health is rolling out to a waitlist of US users, with broader access planned in the coming weeks.

[2]

OpenAI unveils ChatGPT Health, says 230 million users ask about health each week | TechCrunch

OpenAI announced ChatGPT Health on Wednesday, which the company said will offer a dedicated space for users to have conversations with ChatGPT about their health. People already use ChatGPT to ask about medical issues; OpenAI says that over 230 million people ask health and wellness questions on the platform each week. But the ChatGPT Health product silos these conversations away from your other chats. That way, the context of your health won't come up in standard conversations with ChatGPT. If people start chats about their health outside of the Health section, then the AI aims to nudge them to switch over. Within Health, the AI might reference things you've discussed in its standard experience. If you ask ChatGPT for help constructing a marathon training plan, for example, then the AI would know you're a runner when you talk in Health about your fitness goals. ChatGPT Health will also be able to integrate with your personal information or medical records from wellness apps like Apple Health, Function, and MyFitnessPal. OpenAI notes that it will not use Health conversations to train its models. The CEO of Applications at OpenAI, Fidji Simo, wrote in a blog post that she sees ChatGPT Health as a response to existing issues in the healthcare space, like cost and access barriers, overbooked doctors, and a lack of continuity in care. While the healthcare system has its drawbacks, using AI chatbots for medical advice creates a new slew of challenges. Large language models (LLMs) like ChatGPT operate by predicting the most likely response to prompts, not the most correct answer, since LLMs don't have a concept of what is true or not. AI models are also prone to hallucinations. In its own terms of service, OpenAI states that it is "not intended for use in the diagnosis or treatment of any health condition." The feature is expected to roll out in the coming weeks.

[3]

OpenAI Would Like You to Share Your Health Data with its ChatGPT

OpenAI wants your health data. On Wednesday, OpenAI, the company behind the wildly popular artificial-intelligence chatbot ChatGPT, announced some users will be able to feed their health information into the bot, from medical records to test results to lifestyle app data. In return, OpenAI says users can expect ChatGPT to give them more personalized meal planning, nutrition advice, and lab test insights. In a blog post explaining ChatGPT Health on Wednesday, OpenAI said more than 230 million people a week ask their AI health-related questions. The new feature was designed in collaboration with physicians and is meant to help people "take a more active role in understanding and managing their health and wellness" while "supporting, not replacing, care from clinicians," according to the company. But as Scientific American and many other outlets have previously reported, some health experts have urged caution when using ChatGPT for health care reasons, and especially for mental health. The company has faced legal scrutiny in recent years after several teenagers died by suicide after interacting with ChatGPT. OpenAI did not immediately respond to a request for comment. Other experts are more positive. Peter D. Chang, an associate professor of radiological sciences and computer science at the University of California, Irvine, says the tool represents a "step in the right direction" toward more personalized medical care. 
But he also cautioned that users should approach any AI-generated medical advice with a grain of salt. "Maybe don't do exactly what it says, but use it as a starting point to learn more." "Absolutely there's nothing preventing the model from going off the rails to give you a nonsensical result," Chang says.

[4]

OpenAI Launches ChatGPT Health: A Dedicated Tab for Medical Inquiries

ChatGPT is expanding its presence in the health care realm. OpenAI said Wednesday that its popular AI chatbot will begin rolling out ChatGPT Health, a new tab dedicated to addressing all your medical inquiries. The goal of this new tab is to centralize all your medical records and provide a private area for your wellness issues. Looking for answers about a plethora of health issues is a top use for the chatbot. According to OpenAI, "hundreds of millions of people" sign in to ChatGPT every week to ask a variety of health and wellness questions. Additionally, ChatGPT Health (currently in beta testing) will encourage you to connect any wellness apps you also use, such as Apple Health and MyFitnessPal, resulting in a more connected experience with more information about you to draw from. Online privacy, especially in the age of AI, is a significant concern, and this announcement raises a range of questions regarding how your personal health data will be used and the safeguards that will be implemented to keep sensitive information secure -- especially with the proliferation of data breaches and data brokers. "The US doesn't have a general-purpose privacy law, and HIPAA only protects data held by certain people like health care providers and insurance companies," Andrew Crawford, senior counsel for privacy and data at the Center for Democracy and Technology, said in an emailed statement. He continued: "The recent announcement by OpenAI introducing ChatGPT Health means that a number of companies not bound by HIPAA's privacy protections will be collecting, sharing and using people's health data. And since it's up to each company to set the rules for how health data is collected, used, shared and stored, inadequate data protections and policies can put sensitive health information in real danger." 
OpenAI says the new tab will have a separate chat history and a memory feature that can keep your health chat history separate from the rest of your ChatGPT usage. Further protections, such as encryption and multi-factor authentication, will defend your data and keep it secure, the company says. Health conversations won't be used to train the chatbot, according to the company. Privacy issues aside, another concern is how people intend to use ChatGPT Health. OpenAI's blog post states the service "is not intended for diagnosis or treatment." The slope is slippery here. In August 2025, a man was hospitalized after allegedly being advised by the AI chatbot to replace salt in his diet with sodium bromide. There are other examples of AI providing incorrect and potentially harmful advice to individuals, leading to hospitalization. OpenAI's announcement also doesn't touch on mental health concerns, but a blog post from October 2025 says the company is working to strengthen its responses in sensitive conversations. Whether these mental health guardrails will be enough to keep people safe remains to be seen. OpenAI didn't immediately respond to a request for comment. If you're interested in ChatGPT Health, you can join a waitlist, as the tab isn't yet live. (Disclosure: Ziff Davis, CNET's parent company, in April filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

[5]

Use ChatGPT for medical advice? Try its new Health mode - here's how

OpenAI announced a Health mode for ChatGPT. You can connect health apps and upload your personal files. The advice isn't intended to replace actual medical care. OpenAI wants to make sure that any medical information you get from ChatGPT is as accurate as possible. Approximately 40 million people a day rely on ChatGPT for medical questions. In response, OpenAI announced ChatGPT Health, a "dedicated experience" in ChatGPT that's centered around health and wellness. The feature will enable you to combine your medical records and wearable data with the AI's intelligence, "to ground conversations in your own health information," according to OpenAI. You can use it to help you prepare for your next appointment, plan questions to ask your doctor, receive customized diet plans or workout routines, and more. OpenAI notes that the feature is not intended to replace medical care, nor is it designed for diagnosis or treatment. Instead, the goal is for you to ask everyday questions and understand patterns related to your whole medical profile. According to OpenAI, one of the biggest challenges people face when seeking medical guidance online is that information is scattered across provider-specific portals, wearable apps, and personal notes. As a result, it can be hard to get a good overview. This leads people to turn to ChatGPT, which often only gets a partial view of the picture, too. However, now you can provide ChatGPT with data from your personal medical records and from any health-tracking apps you use. OpenAI said that you can connect a variety of sources. OpenAI acknowledged that health information is "deeply personal," so it's adding extra protections to ChatGPT Health. 
The company said, "Conversations and files across ChatGPT are encrypted by default at rest and in transit as part of our core security architecture. . . . Health builds on this foundation with additional, layered protections -- including purpose-built encryption and isolation -- to keep health conversations protected and compartmentalized." The company also said that Health conversations will not be used for its foundation model training. Health has its own memory and its own space in ChatGPT, and your Health conversations, connected apps, and files won't mix with your other chats. However, some information from your regular ChatGPT sessions may surface in your Health space when applicable. To try out ChatGPT Health, you'll need to join the waitlist. OpenAI said it's providing access to a small group of early users to "continue refining the experience." As it makes improvements, the company will expand the feature to all users across the web and iOS over the next few weeks. When you have access, select Health from the sidebar menu in ChatGPT to get started.

[6]

OpenAI launches ChatGPT Health, encouraging users to connect their medical records

OpenAI has been dropping hints this week about AI's role as a "healthcare ally" -- and today, the company is announcing a product to go along with that idea: ChatGPT Health. ChatGPT Health is a sandboxed tab within ChatGPT that's designed for users to ask their health-related questions in a more secure and personalized environment, with a chat history and memory feature kept separate from the rest of ChatGPT. The company is encouraging users to connect their personal medical records and wellness apps, such as Apple Health, Peloton, MyFitnessPal, Weight Watchers, and Function, "to get more personalized, grounded responses to their questions." It suggests connecting medical records so that ChatGPT can analyze lab results, visit summaries, and clinical history; MyFitnessPal and Weight Watchers for food guidance; Apple Health for health and fitness data, including movement, sleep, and activity patterns; and Function for insights into lab tests. "ChatGPT can help you understand recent test results, prepare for appointments with your doctor, get advice on how to approach your diet and workout routine, or understand the tradeoffs of different insurance options based on your healthcare patterns," OpenAI writes in the blog post. On the medical records front, OpenAI says it's partnered with b.well, which will provide back-end integration for users to upload their medical records, since the company works with about 2.2 million providers. For now, ChatGPT Health requires users to sign up for a waitlist to request access, as it's starting with a beta group of early users, but the product will roll out gradually to all users regardless of subscription tier. In a blog post, OpenAI wrote that based on its "de-identified analysis of conversations," more than 230 million people around the world already ask ChatGPT questions related to health and wellness each week. 
OpenAI also said that over the past two years, it's worked with more than 260 physicians to provide feedback on model outputs more than 600,000 times over 30 areas of focus, to help shape the product's responses. The company makes sure to mention in the blog post that ChatGPT Health is "not intended for diagnosis or treatment," but it can't fully control how people end up using AI when they leave the chat. By the company's own admission, in underserved rural communities, users send nearly 600,000 healthcare-related messages weekly, on average, and seven in 10 healthcare conversations in ChatGPT "happen outside of normal clinic hours." In August, physicians published a report on a case of a man being hospitalized for weeks with an 18th-century medical condition, after taking ChatGPT's alleged advice to replace salt in his diet with sodium bromide. Google's AI Overview made headlines for weeks after its launch over dangerous advice, such as putting glue on pizza, and a recent investigation by The Guardian found that dangerous health advice has continued, with false advice for liver function tests, women's cancer tests, and recommended diets for those with pancreatic cancer. One part of health that OpenAI seemed to carefully avoid mentioning in its blog post: mental health. There are a number of examples of adults or minors dying by suicide after confiding in ChatGPT, and in the blog post, OpenAI stuck to a vague mention that users can customize instructions in the Health product "to avoid mentioning sensitive topics." When asked during a Wednesday briefing with reporters whether ChatGPT Health would also summarize mental health visits and provide advice in that realm, OpenAI's CEO of Applications Fidji Simo said, "Mental health is certainly part of health in general, and we see a lot of people turning to ChatGPT for mental health conversations," adding that the new product "can handle any part of your health including mental health ... 
We are very focused on making sure that in situations of distress we respond accordingly and we direct toward health professionals," as well as loved ones or other resources. It's also possible that the product could worsen health anxiety conditions, such as hypochondria. When asked whether OpenAI had introduced any safeguards to help prevent people with such conditions from spiraling while using ChatGPT Health, Simo said, "We have done a lot of work on tuning the model to make sure that we are informative without ever being alarmist and that if there is action to be taken we direct to the healthcare system." When it comes to security concerns, OpenAI says that ChatGPT Health "operates as a separate space with enhanced privacy to protect sensitive data" and that the company introduced several layers of purpose-built encryption (but not end-to-end encryption), according to the briefing. Conversations within the Health product aren't used to train its foundation models, by default, and if a user begins a health-related conversation in regular ChatGPT, the chatbot will suggest moving it into the Health product for "additional protections," per the blog post. But OpenAI has had security breaches in the past, most notably a March 2023 issue that allowed some users to see chat titles, initial messages, names, email addresses, and payment information from other users. In the event of a court order, OpenAI would still need to provide access to the data "where required through valid legal processes or in an emergency situation," OpenAI head of health Nate Gross said during the briefing. When asked if ChatGPT Health is compliant with the Health Insurance Portability and Accountability Act (HIPAA), Gross said that "in the case of consumer products, HIPAA doesn't apply in this setting -- it applies toward clinical or professional healthcare settings."

[7]

ChatGPT Health wants access to sensitive medical records

It's for less consequential health-related matters, where being wrong won't kill customers

Could a bot take the place of your doctor? According to OpenAI, which launched ChatGPT Health this week, an LLM should be available to answer your questions and even examine your health records. But it should stop short of diagnosis or treatment. "Designed in close collaboration with physicians, ChatGPT Health helps people take a more active role in understanding and managing their health and wellness - while supporting, not replacing, care from clinicians," the company said, noting that every week more than 230 million people globally prompt ChatGPT for health- and wellness-related questions. ChatGPT Health arrives in the wake of a study published by OpenAI earlier this month titled "AI as a Healthcare Ally." It casts AI as the panacea for a US healthcare system that three in five Americans say is broken. The service is currently invitation-only and there's a waitlist for those undeterred by at least nine pending lawsuits against OpenAI alleging mental health harms from conversations with ChatGPT. ChatGPT users in the European Economic Area, Switzerland, and the United Kingdom are ineligible presently and medical record integrations, along with some apps, are US only. ChatGPT Health in the web interface takes the form of a menu entry labeled "Health" on the left-hand sidebar. It's designed to allow users to upload medical records and Apple Health data, to suggest questions to be asked of healthcare providers based on imported lab results, and to offer nutrition and exercise recommendations. A ChatGPT user might ask, OpenAI suggests, "Can you summarize my latest bloodwork before my appointment?" The AI model is expected to emit a more relevant set of tokens than it might otherwise have through the availability of personal medical data - bloodwork data in this instance. "You can upload photos and files and use search, deep research, voice mode and dictation," OpenAI explains. 
"When relevant, ChatGPT can automatically reference your connected information to provide more relevant and personalized responses." OpenAI insists that it can adequately protect the sensitive health information of ChatGPT users by compartmentalizing Health "memories" - prior conversations with the AI model. The AI biz says "Conversations and files across ChatGPT are encrypted by default at rest and in transit as part of our core security architecture," and adds that Health includes "purpose-built encryption and isolation" to protect health conversations. "Conversations in Health are not used to train our foundation models," the company insists. The Register asked OpenAI whether the training exemption applies to customer health data uploaded to or shared with ChatGPT Health and whether company partners might have access to conversations or data. A spokesperson responded that by default ChatGPT Health data is not used for training and third-party apps can only access health data when a user has chosen to connect them; data is made available to ChatGPT to ground responses to the user's context. With regard to partners, we're told only the minimum amount of information is shared and partners are bound by confidentiality and security obligations. And employees, we're told, have more restricted access to product data flows based on legitimate safety and security purposes. OpenAI currently has no plans to offer ads in ChatGPT Health, a company spokesperson explained, but the biz, known for its extravagant datacenter spending, is looking at how it might integrate advertising into ChatGPT generally. As for the encryption, it can be dissolved by OpenAI if necessary, because the company and not the customer holds the private encryption keys. A federal judge recently upheld an order requiring OpenAI to turn over a 20-million-conversation sample of anonymized ChatGPT logs to news organizations including The New York Times as part of a consolidated copyright case. 
So it's plausible that ChatGPT Health conversations may be sought in future legal proceedings or demanded by government officials. While academics acknowledge that AI models can provide helpful medical decision-making support, they also raise concerns about "recurrent ethical concerns connected to fairness, bias, non-maleficence, transparency, and privacy." For example, a 2024 case study, "Delayed diagnosis of a transient ischemic attack caused by ChatGPT," describes it as "a case where an erroneous ChatGPT diagnosis, relied upon by the patient to evaluate symptoms, led to a significant treatment delay and a potentially life-threatening situation." The study, from The Central European Journal of Medicine, describes how a man went to an emergency room, concerned about double vision following treatment for atrial fibrillation. He did so on the third onset of symptoms rather than the second - as advised by his physician - because "he hoped ChatGPT would provide a less severe explanation [than stroke] to save him a trip to the ER." Also, he found the physician's explanation of his situation "partly incomprehensible" and preferred the "valuable, precise and understandable risk assessment" provided by ChatGPT. The diagnosis ultimately was transient ischemic attack, which involves symptoms similar to a stroke though it's generally less severe. The study implies that ChatGPT's tendency to be sycophantic, common among commercial AI models, makes its answers more appealing. "Although not specifically designed for medical advice, ChatGPT answered all questions to the patient's satisfaction, unlike the physician, which may be attributable to satisfaction bias, as the patient was relieved by ChatGPT's appeasing answers and did not seek further clarification," the paper says. The research concludes by suggesting that AI models will be more valuable in supporting overburdened healthcare professionals than patients. 
This may help explain why ChatGPT Health "is not intended for diagnosis or treatment."

[8]

OpenAI Unveils ChatGPT Health to Review Test Results, Diets

OpenAI stressed that the service is designed to supplement, not replace, the judgment of doctors, and plans to add enhanced privacy features to wall off health conversations from other parts of the app.

OpenAI is introducing a new feature in ChatGPT that will allow users to analyze medical test results, prepare for doctors' appointments and seek guidance on diets and workout routines -- marking the company's biggest push yet into the health care sector. ChatGPT Health, announced Wednesday, is intended to help provide useful health and fitness information but stop short of making formal diagnoses. The new feature can connect with people's electronic medical records, wearable devices and wellness apps, such as Apple Health and MyFitnessPal, the company said. Initially, OpenAI will let users sign up for a waitlist to try out the product. The company plans to expand access more widely in the coming weeks. A growing number of tech firms are targeting the lucrative health care market, betting that advances in artificial intelligence can help parse patterns in users' health data to provide individualized medical recommendations. But those moves also raise concerns about the privacy and safety risks of AI services handling more sensitive personal data and offering suggestions for higher stakes health matters. More than 200 million people already ask ChatGPT health and wellness questions every week, according to the company. OpenAI said it has also consulted with more than 260 physicians over two years in refining its AI technology's health capabilities. Additionally, it plans to wall off health conversations from other parts of the app and add enhanced privacy features. OpenAI's team stressed the service is designed to supplement, not replace, the judgment of doctors. "Doctors don't have enough time or bandwidth. 
They can't spend as much time understanding everything about you, and they don't have time to explain what's going on in a way that you can understand," said Fidji Simo, OpenAI's chief executive officer of applications, during a media briefing Wednesday. "Meanwhile, when you look at AI, it doesn't have any of these constraints."

[9]

OpenAI Launches ChatGPT Health with Isolated, Encrypted Health Data Controls

Artificial intelligence (AI) company OpenAI on Wednesday announced the launch of ChatGPT Health, a dedicated space that allows users to have conversations with the chatbot about their health. To that end, the sandboxed experience offers users the optional ability to securely connect medical records and wellness apps, including Apple Health, Function, MyFitnessPal, Weight Watchers, AllTrails, Instacart, and Peloton, to get tailored responses, lab test insights, nutrition advice, personalized meal ideas, and suggested workout classes. The new feature is rolling out for users with ChatGPT Free, Go, Plus, and Pro plans outside of the European Economic Area, Switzerland, and the U.K. "ChatGPT Health builds on the strong privacy, security, and data controls across ChatGPT with additional, layered protections designed specifically for health -- including purpose-built encryption and isolation to keep health conversations protected and compartmentalized," OpenAI said in a statement. Stating that over 230 million people globally ask health and wellness-related questions on the platform every week, OpenAI emphasized that the tool is designed to support medical care, not replace it or be used as a substitute for diagnosis or treatment. The company also highlighted the various privacy and security features built into the Health experience. Furthermore, OpenAI pointed out that it has evaluated the model that powers Health against clinical standards using HealthBench, a benchmark the company revealed in May 2025 as a way to better measure the capabilities of AI systems for health, putting safety, clarity, and escalation of care in focus. "This evaluation-driven approach helps ensure the model performs well on the tasks people actually need help with, including explaining lab results in accessible language, preparing questions for an appointment, interpreting data from wearables and wellness apps, and summarizing care instructions," it added. 
OpenAI's announcement follows an investigation from The Guardian that found Google AI Overviews to be providing false and misleading health information. OpenAI and Character.AI are also facing several lawsuits claiming their tools drove people to suicide and harmful delusions after they confided in the chatbots. A report published by SFGate earlier this week detailed how a 19-year-old died of a drug overdose after trusting ChatGPT for medical advice.

[10]

OpenAI says ChatGPT won't use your health information to train its models

OpenAI has promised that it won't use your private health information and conversations to train its AI models, as it rolls out ChatGPT Health, a dedicated space for health conversations. ChatGPT Health allows you to have a private space for health conversations, and it's also the company's attempt to capitalize on the increasing use of ChatGPT for health. "We're introducing ChatGPT Health, a dedicated experience that securely brings your health information and ChatGPT's intelligence together, to help you feel more informed, prepared, and confident navigating your health," OpenAI announced. If you have early access to ChatGPT Health, it will appear as a new space in the sidebar on desktop and in the hamburger menu on mobile. In addition, regular chat might push you to continue the conversation in the Health space if it detects the question is related to health. If you're interested in getting access as it becomes available, you can sign up for the waitlist. "We're starting by providing access to a small group of early users to learn and continue refining the experience," OpenAI noted. In our tests, BleepingComputer observed that OpenAI clearly promises ChatGPT won't use your health information to train its foundational models when you open the Health space. "By default, Health information won't be used to train our foundation models. Your health data is subject to our Health Privacy Notice. Add multi-factor authentication for even more protection," an alert within the ChatGPT Health dashboard reads. OpenAI also warns that ChatGPT can't replace your doctor, and that responses shouldn't be taken as medical advice and aren't intended for diagnosis or treatment. ChatGPT Health is rolling out to everyone with ChatGPT Free, Go, Plus, and Pro, but it won't appear in the EEA, Switzerland, and the UK for now.

[11]

40 million people globally are using ChatGPT for healthcare - but is it safe?

5% of messages to ChatGPT globally concern healthcare. Users ask about symptoms and insurance advice, for example. Chatbots can provide dangerously inaccurate information.

More than 40 million people worldwide rely on ChatGPT for daily medical advice, according to a new report from OpenAI shared exclusively with Axios. The report, based on an anonymized analysis of ChatGPT interactions and a user survey, also sheds light on some of the specific ways people are using AI to navigate the sometimes complex intricacies of healthcare. Some are prompting ChatGPT with queries regarding insurance denial appeals and possible overcharges, for example, while others are describing their symptoms, hoping to receive a diagnosis or treatment advice. It should come as no surprise that a large number of people are using ChatGPT for sensitive personal matters. The three-year-old chatbot, along with others like Google's Gemini and Microsoft's Copilot, has become a confidant and companion for many users, a guide through some of life's thornier moments. Last spring, an analysis conducted by Harvard Business Review found that psychological therapy was the most common use of generative AI. The new OpenAI report is therefore just another brick in a rising edifice of evidence showing that generative AI will be -- indeed already is -- much more than simply a search engine on steroids. What's most jarring about the report is the sheer scale at which users are turning to ChatGPT for medical advice. It also underscores some urgent questions about the safety of this type of AI use at a time when many millions of Americans are suddenly facing new and major healthcare-related challenges. (Disclosure: Ziff Davis, ZDNET's parent company, filed an April 2025 lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)
According to Axios, the OpenAI report found that more than 5% of all messages sent to ChatGPT globally are related to healthcare. As of July of last year, the chatbot reportedly processed around 2.5 billion prompts per day -- that means it's responding to at least 125 million healthcare-related questions every day (and likely more than that now, since its user base is still growing). Many of those conversations -- around 70%, according to Axios -- are happening outside the normal working hours of medical clinics, underscoring a key benefit of this kind of AI use: unlike human doctors, it's always available. Some people have also leveraged chatbots to help spot billing errors and cases in which exorbitantly high medical costs can be disputed. The widespread embrace of ChatGPT as an automated medical expert is coinciding with what, for many Americans, has been a stressful start to the year due to a sudden spike in the cost of healthcare coverage. With the expiration of pandemic-era Affordable Care Act tax subsidies, over 20 million ACA enrollees have reportedly had their monthly premiums increase by an average of 114%. It's likely that some of those people, especially younger, healthier, and more cash-strapped Americans, will opt to forego health insurance entirely, perhaps turning instead to chatbots like ChatGPT for medical advice. AI might always be available to chat, but it's also prone to hallucination -- fabricating information that's delivered with the confidence of fact -- and therefore no substitute for an actual, flesh-and-blood medical expert. One study conducted by a cohort of physicians and posted to the preprint server site arXiv in July, for example, found that some industry-leading chatbots frequently responded to medical questions with dangerously inaccurate information.
The rate at which this kind of response was generated by OpenAI's GPT-4o and Meta's Llama was especially high: 13% in each case. "This study suggests that millions of patients could be receiving unsafe medical advice from publicly available chatbots, and further work is needed to improve the clinical safety of these powerful tools," the authors of the July paper noted. OpenAI is currently working to improve its models' abilities to safely respond to health-related queries, according to Axios. For the time being, generative AI should be approached like WebMD: It's often useful for answering basic questions about medical conditions or the complexities of the healthcare system, but it probably wouldn't be recommended as a definitive source for, say, diagnosing a chronic ailment or seeking advice for treating a serious injury. And given its propensity to hallucinate, it's best to treat AI's responses with an even bigger grain of salt than that with which you might take information gleaned from a quick Google search -- especially when it comes to more sensitive personal questions.

[12]

ChatGPT is launching a new dedicated Health portal

OpenAI is launching a new facet for its AI chatbot called ChatGPT Health. This new feature will allow users to connect medical records and wellness apps to ChatGPT in order to get more tailored responses to queries about their health. The company noted that there will be additional privacy safeguards for this separate space within ChatGPT, and said that it will not use conversations held in Health for training foundational models. ChatGPT Health is currently in a testing stage, and there are some regional restrictions on which health apps can be connected to the AI company's platform. The announcement from OpenAI acknowledges that this new development "is not intended for diagnosis or treatment," but it's worth repeating. No part of ChatGPT, or any other artificial intelligence chatbot, is qualified to provide any kind of medical advice. Not only are these platforms capable of making dangerously incorrect statements, but feeding such personal and private information into a chatbot is generally not a recommended practice. It seems especially unwise to share with a company that only bothered paying even cursory lip service to the psychological impacts of its product after at least one teenager used the chatbot to plan suicide.

[13]

OpenAI launches ChatGPT Health to review your medical records

OpenAI has launched a new ChatGPT feature in the US which can analyse people's medical records to give them better answers, but campaigners warn it raises privacy concerns. The firm wants people to share their medical records along with data from apps like MyFitnessPal, which will be analysed to give personalised advice. OpenAI said conversations in ChatGPT Health would be stored separately to other chats and would not be used to train its AI tools - as well as clarifying it was not intended to be used for "diagnosis or treatment". Andrew Crawford, of US non-profit the Center for Democracy and Technology, said it was "crucial" to maintain "airtight" safeguards around users' health information.

[14]

OpenAI sees big opportunity in US health queries

One man's failing healthcare system is another man's opportunity

About sixty percent of American adults have turned to AI like ChatGPT for health or healthcare in the past three months. Instead of seeing that as an indictment of the state of US healthcare, OpenAI sees an opportunity to shape policy. A study published by OpenAI on Monday claims more than 40 million people worldwide ask ChatGPT healthcare-related questions each day, accounting for more than five percent of all messages the chatbot receives. About a quarter of ChatGPT's regular users submit healthcare-related prompts each week, and OpenAI understands why many of those people are users in the United States. "In the United States, the healthcare system is a long-standing and worsening pain point for many," OpenAI surmised in its study. Studies and first-hand accounts from medical professionals bear that out. Results of a Gallup poll published in December found that a mere 16 percent of US adults were satisfied with the cost of US healthcare, and only 24 percent of Americans have a positive view of their healthcare coverage. It's not hard to see why. Healthcare spending has skyrocketed in recent years, and with Republican elected officials refusing to extend Affordable Care Act subsidies, US households are due to see another spike in insurance costs in 2026. Based on Gallup's findings, it seems that American insureds, who pay the highest per capita healthcare costs in the world, don't think they're getting their money's worth. According to OpenAI, more Americans are turning to its AI to close healthcare gaps, and the company doesn't seem at all troubled by that. "For both patients and providers in the US, ChatGPT has become an important ally, helping people navigate the healthcare system, enabling them to self-advocate, and supporting both patients and providers for better health outcomes," OpenAI said in its study.
According to the report, which used a combination of a survey of ChatGPT users and anonymized message data, nearly 2 million messages a week come from people trying to navigate America's labyrinthine health insurance ecosystem, but they're still not the majority of US AI healthcare answer seekers. Fifty-five percent of US adults who used AI to help manage their health or healthcare in the past three months said they were trying to understand symptoms, and seven in ten healthcare conversations in ChatGPT happened outside normal clinic hours. Individuals in "hospital deserts," classified in the report as areas where people are more than a 30-minute drive from a general medical or children's hospital, were also frequent users of ChatGPT for healthcare-related questions. In other words, when clinic doors are closed or care is hard to reach, care-deprived Americans are turning to an AI for potentially urgent healthcare questions instead. As The Guardian reported last week, relying on AI for healthcare information can lead to devastating outcomes. The Guardian's investigation of healthcare-related questions put to Google AI Overviews found that inaccurate answers were frequent, with Google AI giving incorrect information about the proper diet for cancer patients, liver function tests, and women's healthcare. OpenAI rebuffed the idea that it could be providing bad information to Americans seeking healthcare information in an email to The Register. A spokesperson told us that OpenAI has a team dedicated solely to handling accurate healthcare information, and that it works with clinicians and healthcare professionals to safety-test its models, suss out where risks might be found, and improve health-related results. OpenAI also told us that GPT-5 models have scored higher than previous iterations on the company's homemade healthcare benchmarking system. 
It further claims that GPT-5 has greatly reduced all of its major failure modes (i.e., hallucinations, errors in urgent situations, and failures to account for global healthcare contexts). None of those data points actually get to the point of how often ChatGPT could be wrong in critical healthcare situations, however. What does that matter to OpenAI, though, when there's potentially heaps of money to be made on expanding in the medical industry? The report seems to conclude that its increasingly large role in the US healthcare industry, again, isn't an indictment of a failing system as much as it is the inevitable march of technological progress, and included several "policy concepts" that it said are a preview of a full AI-in-healthcare policy blueprint it intends to publish in the near future. Leading the recommendations, naturally, is a call for opening and securely connecting publicly funded medical data so OpenAI's AI can "learn from decades of research at once." OpenAI is also calling for new infrastructure to be built out that incorporates AI into medical wet labs, support for helping healthcare professionals transition into being directly supported by AI, new frameworks from the US Food and Drug Administration to open a path to consumer AI medical devices, and clarified medical device regulation to "encourage ... AI services that support doctors." ®

[15]

ChatGPT Health has arrived

OpenAI's newest feature ties users' health data to AI insights

I've said this many times: the products we see on the market are rarely visionary leaps. Most of the time, they are mirrors. They reflect people's habits, shortcuts, fears, and small daily behaviours. Design follows behaviour. Always has. Think about it. You probably know at least one person who already uses ChatGPT for health-related questions. Not occasionally. Regularly. As a second opinion. As a place to test concerns before saying them out loud. Sometimes even as a therapist, a confidant, or a space where embarrassment does not exist. When habits become consistent, companies stop observing and start building. At that point, users are no longer just customers. They are co-architects. Their behaviour quietly shapes the product roadmap. That context matters when looking at what OpenAI announced on January 7, 2026: the launch of ChatGPT Health, a dedicated AI-powered experience focused on healthcare. OpenAI describes it as "a dedicated experience that securely brings your health information and ChatGPT's intelligence together." Access is limited for now. The company is rolling it out through a waitlist, with gradual expansion planned over the coming weeks. Anyone with a ChatGPT account, Free or paid, can sign up for early access, except users in the European Union, the UK, and Switzerland, where regulatory alignment is still pending. The stated goal is simple on paper: help people feel more informed, more prepared, and more confident when managing their health. But the numbers behind that decision say more than the press language ever could. According to OpenAI, over 230 million people worldwide already ask health or wellness questions on ChatGPT every week. That figure raises uncomfortable questions. Why do so many people turn to an AI for health questions? Is it speed? The promise of an immediate answer?
The way our expectations have shifted toward instant clarity, even for complex or sensitive issues? Is it discomfort, the reluctance to speak openly with a doctor about certain topics? Or something deeper, a quiet erosion of trust in human systems, paired with a growing confidence in machines that do not judge, interrupt, or rush? ChatGPT Health does not answer those questions. It simply formalises the behaviour. According to OpenAI, the new Health space allows users to securely connect personal health data, including medical records, lab results, and information from fitness or wellness apps. Someone might upload recent blood test results and ask for a summary. Another might connect a step counter and ask how their activity compares to previous months. The system can integrate data from platforms such as Apple Health, MyFitnessPal, Peloton, AllTrails, and even grocery data from Instacart. The promise is contextual responses, not generic advice. Lab results explained in plain language. Patterns highlighted over time. Suggestions for questions to ask during a medical appointment. Diet or exercise ideas grounded in what the data actually shows. What ChatGPT Health is careful not to do matters just as much as what it can do. OpenAI is explicit: this is not medical advice. It does not diagnose conditions. It does not prescribe treatments. It is designed to support care, not replace it. The framing is intentional. ChatGPT Health positions itself as an assistant, not an authority. A tool for understanding patterns and preparing conversations, not making decisions in isolation. That distinction is crucial. And, frankly, it is one I hope users take seriously. Behind the scenes, OpenAI says the system was developed with extensive medical oversight. Over the past two years, more than 260 physicians from roughly 60 countries reviewed responses and provided feedback, contributing over 600,000 individual evaluation points. 
The focus was not only accuracy, but tone, clarity, and when the system should clearly encourage users to seek professional care. To support that process, OpenAI built an internal evaluation framework called HealthBench. It scores responses based on clinician-defined standards, including safety, medical correctness, and appropriateness of guidance. It is an attempt to bring structure to a domain where nuance matters and mistakes carry weight. Privacy is another pillar OpenAI insists on emphasising. ChatGPT Health operates in a separate, isolated environment within the app. Health-related conversations, connected data sources, and uploaded files are kept entirely separate from regular chats. Health data does not flow into the general ChatGPT memory, and conversations in this space are encrypted. OpenAI also states that health conversations are not used to train its core models. Whether that assurance will be enough for all users remains to be seen, but the architectural separation signals an awareness of how sensitive this territory is. In the United States, the system goes a step further. Through a partnership with b.well Connected Health, ChatGPT Health can access real electronic health records from thousands of providers, with user permission. This allows it to summarise official lab reports or condense long medical histories into readable overviews. Outside the US, functionality is more limited, largely due to regulatory differences. There are potential downstream effects for healthcare providers as well. If patients arrive at appointments already familiar with their data, already aware of trends, already prepared with focused questions, consultations could become more efficient. Less time decoding numbers. More time discussing decisions. And inevitably, the question surfaces: should doctors be worried? No. Not seriously. AI is not a medical professional. It does not examine patients. It does not carry legal responsibility. 
It cannot replace clinical judgement, experience, or accountability. ChatGPT Health does not change that. It is not a shortcut to self-diagnosis, and it should not be treated as one. What it does change is the starting point of the conversation. Used responsibly, ChatGPT Health can help people engage with their own health information instead of avoiding it. Misused, it could reinforce false certainty or delay necessary care. The responsibility, as always, is shared between the tool and the person using it.

[16]

OpenAI Launches ChatGPT Health, Wants Access to Your Medical Records

ChatGPT users who have been utilizing the chatbot for (often dubious) health advice will now have a chatbot specialized just for that. On Wednesday, OpenAI launched ChatGPT Health, a health-specific segment of the popular AI chatbot with the ability to connect to medical records, wellness apps, and wearables. "ChatGPT can help you understand recent test results, prepare for appointments with your doctor, get advice on how to approach your diet and workout routine, or understand the tradeoffs of different insurance options based on your healthcare patterns," OpenAI said in the announcement. Users can connect apps like Apple Health to share sleep and activity patterns, MyFitnessPal to receive nutrition advice, AllTrails for hiking ideas, Peloton to get workout suggestions, and even Instacart so that ChatGPT can make you a shoppable list based on what diet it thinks you should follow. OpenAI says it has been working on ChatGPT Health for over two years alongside more than 260 physicians from 60 countries. ChatGPT Health has not yet fully launched. For now, the company is providing access to only a small group of early users for any final refinements. There is a link to a waitlist to sign up for it, although it doesn't seem to work at the time of writing. The medical record integration function is only available in the U.S., but the rest is available globally, except for users in the European Union, Switzerland, and the United Kingdom, all of which have very strict digital privacy laws in place. ChatGPT has been at the center of privacy concerns after a poor design feature made some user queries public and searchable by search engines. But the company insists that the new offering is safe and protected through purpose-built encryption and isolation, and it has made these privacy guardrails Health's main differentiator from the regular ChatGPT. 
The Health part of ChatGPT is supposed to have "separate memories," so that the information stays confined to that chat, although the Health chats will be able to access information about you gathered from non-Health chats. Conversations in Health will also not be used to train foundation models. OpenAI has been slowly upping its investments in the healthcare arena for some time now. In May 2025, OpenAI unveiled HealthBench, a new benchmark to evaluate AI systems' capabilities, which was used to train ChatGPT Health. Then, over the summer, the AI giant made a few high-profile hires to its healthcare AI team, including the healthcare business networking tool Doximity's co-founder, Nate Gross, to lead the co-creation of new healthcare tech with clinicians and researchers. Around the same time, OpenAI also announced a partnership with Kenya-based primary care provider Penda Health, underscored its latest model GPT-5's ability to "proactively flag" potential health concerns and create treatment plans while announcing the model, and was named as a partner in a Trump-led private sector initiative to use AI assistants in patient care and allow the sharing of medical records across apps and programs from 60 companies. It was also over the summer that OpenAI hired its new CEO of applications, Fidji Simo, who has pinpointed healthcare as the AI use case she is most excited about and has since called the launch of Health "really personal" to her. OpenAI's big healthcare AI bet is indicative of a growing industry-wide acceptance of AI, despite some concerning cases. The winds of regulation seem to be blowing in healthcare AI's favor, from Utah okaying AI-prescribed medication renewals to the FDA saying it will regulate wellness software and wearables with a light touch as long as the companies don't claim their product is "medical grade." 
"We want to let companies know, with very clear guidance, that if their device or software is simply providing information, they can do that without FDA regulation," FDA Commissioner Marty Makary told Fox Business on Tuesday. But even mere health and wellness suggestions have the capacity to lead to disastrous consequences for users if proven incorrect. ChatGPT has been under considerable heat for that over the past year, especially due to the numerous fatal mental health episodes it has been accused of triggering in the absence of adequate safety controls. OpenAI has been trying to solidify its product's place in healthcare alongside the steadily growing investment. Earlier this week, the company published a report claiming that more than 40 million ChatGPT users ask for health advice every single day, and paired the findings with sample policy concepts like asking for full access to the world's medical data and requesting a clearer regulatory pathway for consumer-focused health AI. The company also said that it is preparing to release a more comprehensive health AI policy blueprint in the coming months.

[17]

OpenAI launches ChatGPT Health -- bringing medical records and wellness data into ChatGPT

A dedicated health experience designed to make medical data easier to understand -- not diagnose

OpenAI officially entered the connected health space today with the launch of ChatGPT Health. This dedicated space promises to be a secure environment and allows users to move beyond generic wellness questions and ground conversations in their own real-world data. ChatGPT Health is a separate space within the sidebar designed for high-stakes wellness management. OpenAI reported on the ChatGPT Health blog that each week over 230 million people ask health questions on the platform. This update formalizes that experience by allowing the AI to "read" your personal context. Instead of relying on generic advice, ChatGPT Health allows you to ground conversations in your actual medical history. By bringing your personal data into the chat, the AI moves away from "thin air" responses to insights based on your real-world health picture. Through a strategic partnership with b.well, U.S. users can now securely link their electronic medical records directly to the AI. This creates a designated, private space for that data. Crucially, OpenAI emphasizes that ChatGPT Health is not a diagnostic tool. It is designed to make you feel more informed and confident before you speak with a clinician, ensuring that a human professional always makes the final medical decisions. OpenAI developed the tool over two years in collaboration with more than 260 physicians worldwide. To address the sensitive nature of this data, the company has implemented several strict guardrails. ChatGPT Health is rolling out initially to a small group of early users with ChatGPT Free, Go, Plus and Pro plans. Only users outside the European Economic Area, Switzerland and the United Kingdom are eligible at launch, and broader access to web and iOS users is expected in the "coming weeks." Some data integrations -- like Apple Health -- are currently limited to U.S. users or require iOS devices.
For OpenAI, it's also a strategic shift. By creating a dedicated health experience instead of letting medical questions live alongside casual chats, the company is acknowledging both the demand -- and the responsibility -- that comes with being a go-to source for health information. Whether users ultimately trust AI with something as personal as their medical history remains to be seen. But with ChatGPT Health, OpenAI is making its strongest case yet that AI assistants can play a meaningful role in how people navigate their health -- without trying to replace the doctor.

[18]

More Than 40 Million People Use ChatGPT Daily for Healthcare Advice, OpenAI Claims

ChatGPT users around the world send billions of messages every week asking the chatbot for healthcare advice, OpenAI shared in a new report on Monday. Roughly 200 million of ChatGPT's more than 800 million regular users submit a prompt about healthcare every single week, and more than 40 million do so every single day. According to anonymized ChatGPT user data, more than half of users ask ChatGPT to check or explore symptoms, while others use it to decode medical jargon or get more information about treatment options. Nearly 2 million of these weekly messages also focused on health insurance, asking ChatGPT to help compare plans or handle claims and billing. The numbers are somewhat reflective of the troubled state of the American healthcare system, especially as patients struggle to pay exorbitant medical bills. In its own research, OpenAI found that three in five Americans viewed the current system as broken, with hospital costs the biggest pain point. The study found that 7-in-10 healthcare-related conversations happen outside of normal clinic hours. On top of that, an average of more than 580,000 healthcare inquiries were sent in "hospital deserts," aka places in the United States that are more than a 30-minute drive from a general medical or children's hospital. The report also showed increasing AI adoption among healthcare professionals. Citing data from the American Medical Association, OpenAI said that 46% of American nurses reported using AI weekly. The report comes as OpenAI increases its bet on healthcare AI, despite the concerns about accuracy and privacy that come with the technology's deployment. The company's CEO of applications, Fidji Simo, said she is "most excited for the breakthroughs that AI will generate in healthcare," in a press release announcing her new role in July 2025. OpenAI isn't alone in its big healthcare bet, either.
Big tech giants from Google to Palantir have been working on product offerings in the healthcare AI space for years. Many people think Health AI is a promising field with a lot of potential to ease the burden on medical workers. But it's also contentious, because AI is prone to mistakes. While a hallucinated response can be an annoying hurdle in many other areas of use, in healthcare, it could have the potential to be a life-or-death matter. These AI-driven risks are not confined to the world of hypotheticals. According to a report from August 2025, a 60-year-old with no past psychiatric or medical history was hospitalized due to bromide poisoning after following ChatGPT's recommendation to take the supplement. As the tech stands today, no one should use a chatbot to self-diagnose or treat a medical condition, full stop. As investment in the technology builds up, so do policy conversations. There is no comprehensive federal framework on AI, much less healthcare AI, but the Trump administration has made it clear that it intends to change that. In July, OpenAI CEO Sam Altman was one of many tech executives in attendance at the White House's "Make Health Tech Great Again" event, where Trump announced a private sector initiative to use AI assistants for patient care and share the medical records of Americans across apps and programs from 60 companies. The FDA is also looking to revamp how it regulates AI deployment in health. The agency published a request for public comment in September 2025, seeking feedback from the medical sector on health AI deployment and evaluations. OpenAI's latest report seems to be their own attempt at putting a comment on the public record. The company pairs its findings with sample policy concepts, like asking for full access to the world's medical data and a clearer regulatory pathway to make AI-infused medical devices for consumer use. 
"We urge FDA to move forward and work with industry towards a clear and workable regulatory policy that will facilitate innovation of safe and effective AI medical devices," the company said in the report. In the next few months, OpenAI is preparing to release a full policy blueprint for how it wants healthcare AI to be regulated, the company added in the report.

[19]

ChatGPT Health sounds promising -- but I don't trust it with my medical data just yet

Convenience isn't worth giving up control over my most sensitive data

OpenAI promised new ways to support your medical care with AI when launching ChatGPT Health this month. The AI chatbot's newest feature focuses on health in a dedicated virtual clinic capable of processing your electronic medical records (EMRs) and data from fitness and health apps for personalized responses about lab results, diet, exercise, and preparation for doctor visits. With more than 230 million people asking health and wellness questions every week, ChatGPT Health was inevitable. But despite OpenAI's hyping of ChatGPT Health's value and its assurance of extra security and privacy, I won't be signing up any time soon. The central issue is trust. As impressed as I've been with ChatGPT and most of its features, I remain skeptical that any tool capable of casually hallucinating nonsense should be relied upon for anything but the most basic of health questions. And even if I do trust ChatGPT as a personal health advisor, I don't want to give it my actual medical data. I've already shared more than a few details of my life with ChatGPT while testing its abilities and occasionally felt uneasy about doing so. I feel far less sanguine about adding my EMRs to the list. I instinctively recoil from the idea of giving a company with a profit motive and an imperfect history of data security access to my most sensitive health information. I can see why ChatGPT Health might entice people, even with all its caveats about what it can and can't do for you. Healthcare systems are complicated, often overstretched, and can be financially draining. ChatGPT Health can provide clearer explanations of medical jargon, highlight things worth discussing with a doctor or nurse before an appointment, and immediately parse confusing test results. ChatGPT Health entices with its ability to deliver insights that were previously only available through professional channels. 
It's an easy sell, especially if you lack easy access to regular medical care. But valuable, personal healthcare information and AI's track record for hallucination make for an unhealthy brew. Misleading or fictional answers are bad enough even without the need for medical nuance. It's not that I'm against asking ChatGPT questions about my health or fitness, but that's a far cry from what ChatGPT Health suggests sharing. "Exploring an isolated health question is fundamentally different from giving a platform access to your full medical record and lifetime health history," explained Alexander Tsiaras, founder and CEO of medical data platform StoryMD. "Trust will be the central challenge, not just for individuals but for the healthcare system as a whole. That trust depends on transparency: how data is ingested, how hallucinations are prevented, how longitudinal clinical evidence is incorporated over time, and whether the platform operates within established regulatory frameworks, including HIPAA." OpenAI is not a healthcare provider. HIPAA (Health Insurance Portability and Accountability Act) and its strict legal obligations and safeguards for your medical information don't apply. So, while OpenAI might sincerely promise to treat any health data you freely upload or connect to ChatGPT Health with matching care and security, it's unclear if they have the same legal motivation to do so. And when data is outside HIPAA's reach, you have to trust a company's own policies and intentions without relying on HIPAA's enforceable standards. Some people might argue that OpenAI's own privacy commitments are sufficient (lawyers at OpenAI, for instance). Most people would agree that intimate medical information should be locked down under the tightest legal protections possible. But ChatGPT Health users will be in a precarious position, especially because policy language and terms of service can change with little notice and limited recourse. 
ChatGPT Health's isolating of health conversations and allowing users to delete their data are good ideas, but once your data is out of the traditional healthcare system, you have to consider a whole different set of vulnerabilities. Headlines underscoring how difficult it is for major tech platforms to guarantee airtight data protection against leaks and hacks are far too frequent. And there's more risk to your data, even from legitimate agencies. Private health data could be subject to subpoenas, legal discovery, or other forms of compelled disclosure. In some cases, companies can be forced to hand over private records to satisfy court orders or government requests. HIPAA has much stronger defenses against such legal pressures than the standard consumer tech privacy laws under which ChatGPT operates. Affordable, accessible healthcare is a cause worth pursuing. But privacy, trust, and meaningful human oversight cannot be casualties in that pursuit. People are already concerned about how their data is collected and used by AI platforms. Centralizing even more sensitive information with a single commercial entity won't reduce those concerns. There's a bigger conversation to be had about incorporating AI into systems that human beings depend on. AI can be a huge boon for providing healthcare. But it won't be predicated on surrendering personal data to uncertain security and safety. "These are foundational requirements," Tsiaras said. "What people actually need is a clinically sound, longitudinal medical record that supports meaningful patient and patient-provider interaction, not another layer of noise."

[20]

OpenAI's new ChatGPT health feature draws mixed reactions

Why it matters: Health has become a go-to topic for chatbot queries, with more than 40 million people consulting ChatGPT daily for health advice and hundreds of millions doing so each week.

Catch-up quick: OpenAI is adding a new health tab within ChatGPT that includes the ability to upload electronic medical records and connect with Apple Health, MyFitnessPal and other fitness apps.
* The new features formalize how many people already use the chatbot: uploading test results, asking questions about symptoms and trying to navigate the complex healthcare ecosystem.
* OpenAI says it will keep the new health information separate from other types of chats and not train its models on this data.

Yes, but: Health information shared with ChatGPT doesn't have the same protections as medical data shared with a health provider, and even those protections vary by country.
* "The U.S. doesn't have a general-purpose privacy law, and HIPAA only protects data held by certain people like healthcare providers and insurance companies," Andrew Crawford, senior counsel for privacy and data at the Center for Democracy & Technology, told Axios in a statement.
* "And since it's up to each company to set the rules for how health data is collected, used, shared, and stored, inadequate data protections and policies can put sensitive health information in real danger," he says.
* OpenAI is starting with a small group of early testers, notably not those in the European Economic Area, Switzerland, and the United Kingdom, where local regulations require additional compliance measures.

The big picture: Many AI enthusiasts on social media welcomed the tools, pointing to how ChatGPT already helps them.
* Yana Welinder, head of AI for Amplitude, has been using ChatGPT "constantly" for health queries in recent months, both for herself and family members. "The only downside was that all of this lived alongside my very heavy other usage of ChatGPT," she wrote on X. "Projects helped a bit, but I really wanted a dedicated space ... So excited about this."

Chatbots aren't a replacement for doctors, OpenAI says, at the same time highlighting what the chatbot can provide.
* "It's great at synthesizing large amounts of information," Fidji Simo, CEO of Applications at OpenAI, said Wednesday on a call with reporters. "It has infinite time to research and explain things. It can put every question in the context of your entire medical history."

The other side: AI skeptics question giving medical information to a chatbot that has shown a propensity to reinforce delusions and even encourage suicide.
* "What could go wrong when an LLM trained to confirm, support, and encourage user bias meets a hypochondriac with a headache?" Aidan Moher wrote on BlueSky.
* Anil Dash, advocate for more humane technology, agreed on BlueSky that it "isn't a good idea," but also wrote that "it's vastly more understandable than most medical jargon, far more accessible than 99% of people's healthcare that they can afford, and very often pretty accurate in broad strokes, especially compared to WebMD or Reddit."

Between the lines: Like other information shared with ChatGPT, health information could potentially be made available to litigants or government agencies via a subpoena or other court order.
* That seems particularly noteworthy at a time when access to reproductive health care and gender affirming care are under threat at both the state and federal levels.
* User data could get swept up in other ways too. As part of their copyright battle against OpenAI, news organizations have obtained access to millions of ChatGPT logs, including from temporary chats that were meant to be deleted after 30 days.
* Sam Altman has called for some sort of legal privilege to protect sensitive health and legal information.

What to watch: OpenAI said it has more health features on its road map and will talk soon about additional work with various health care systems.

[21]

Is Giving ChatGPT Health Your Medical Records a Good Idea?

OpenAI, which has a licensing and technology agreement that allows the company to access TIME's archives, notes that Health is designed to support health care -- not replace it -- and is not intended to be used for diagnosis or treatment. The company says it spent two years working with more than 260 physicians across dozens of specialities to shape what the tool can do, as well as how it responds to users. That includes how urgently it encourages people to follow up with their provider, the ability to communicate clearly without oversimplifying, and prioritizing safety when people are in mental distress. OpenAI partnered with b.well, a data connectivity infrastructure company, to allow users to securely connect their medical records to the tool. The Health tab will have "enhanced privacy," including a chat history and memory feature kept separate from other tabs, according to the announcement. OpenAI also said that "conversations in Health are not used to train our foundation models," and Health information won't flow into non-Health chats. Plus, users can "view or delete Health memories at any time." Still, some experts urge caution. "The most conservative approach is to assume that any information you upload into these tools, or any information that may be in applications you otherwise link to the tools, will no longer be private," Bitterman says. No federal regulatory body governs the health information provided to AI chatbots, and ChatGPT provides technology services that are not within the scope of HIPAA. "It's a contractual agreement between the individual and OpenAI at that point," says Bradley Malin, a professor of biomedical informatics at Vanderbilt University Medical Center. "If you are providing data directly to a technology company that is not providing any health care services, then it is buyer beware." 
In the event of a data breach, ChatGPT users would have no specific rights under HIPAA, he adds, though it's possible the Federal Trade Commission could step in on your behalf, or that you could sue the company directly. As medical information and AI start to intersect, the implications so far are murky.

[22]

OpenAI Launches ChatGPT Health, Which Ingests Your Entire Medical Records, But Warns Not to Use It for "Diagnosis or Treatment"