AI-Altered Image of Alex Pretti Reaches US Senate Floor, Exposing Misinformation Crisis

2 Sources


[1]

This AI-Enhanced Photo of Alex Pretti Was Aired by Major News Outlets

The cable news channel MS NOW, formerly MSNBC, aired an AI-edited photo of Alex Pretti, the protester who was killed by border agents in Minneapolis. MS NOW tells Snopes that it did not make the edit itself; rather, it took the image from the internet without realizing that it had been edited. Many, including podcaster Joe Rogan, criticized MS NOW for making Pretti "appear more handsome". The edit makes Pretti's shoulders appear broader, his skin more tanned, and his nose less pronounced. A spokesperson from MS NOW tells Snopes that the network didn't make the edit itself, the way Rogan and others have suggested. Indeed, other news organizations, including the Daily Mail and International Business Times, ran the same AI-enhanced image.

The original photo was Pretti's official portrait from the United States Department of Veterans Affairs, where he worked as an intensive care unit (ICU) nurse. That image is a photo of a photo, hence the poor quality. Someone, it's not clear who (likely an internet user), ran that lo-fi image through a generative AI model to get a clearer image of Pretti. When a user feeds an image into an AI model like ChatGPT or Google's Nano Banana and asks it to improve the quality, the AI takes the image and text as a prompt and creates a novel image. While it will be close to the original photo, it is no longer a representation of reality. AI models also have biases that tend to make people better-looking, which is why Pretti appears more attractive. MS NOW did not respond to PetaPixel's request for clarification.

The Pretti portrait is not the only AI creation that spread misinformation from Minnesota. Another one that purportedly showed the moment Pretti was shot and killed was also AI-generated. Reuters reports the viral image does not match the multiple videos taken of Pretti's death. Meanwhile, the White House published a manipulated photo of a protester being arrested -- altering the face of Nekima Levy Armstrong so it looked like she was crying with tears streaming down her face when in reality she wore a stoic expression.

[2]

'Misrepresent reality': AI-altered shooting image surfaces in US Senate

Washington (United States) (AFP) - An AI-enhanced image depicting the moments before immigration agents shot an American nurse ricocheted across the internet -- and also made its way onto the hallowed floor of the US Senate.

Social media platforms are awash with graphic footage from the moment US agents shot and killed 37-year-old intensive care nurse Alex Pretti in Minneapolis, which sparked nationwide outrage. One frame from the grainy footage was digitally altered using artificial intelligence, AI experts told AFP.

The manipulated image, which purports to show Pretti surrounded by officers as one points a gun at his head, spread rapidly across Instagram, Facebook, X, and Threads. It contained several digital distortions, including a headless agent.

"I am on the Senate floor to condemn the killing of US citizens at the hands of federal immigration officers," Senator Dick Durbin, a Democrat from Illinois, wrote on X Thursday, sharing a video of his speech in which he displayed the AI-enhanced image. "And to demand the Trump Administration take accountability for its actions."

In comments beneath his post, several X users demanded an apology from the senator for promoting the manipulated image. On Friday, Durbin's office acknowledged the mistake. "Our office used a photo on the Senate floor that had been widely circulated online. Staff didn't realize until after the fact that the image had been slightly edited and regret that this mistake occurred," the senator's spokesperson told AFP.

'Advancing an agenda'

The gaffe underscores how lifelike AI visuals -- even those containing glaring errors -- are seeping into everyday discourse, sowing confusion during breaking news events and influencing political debate at the highest levels. The AI-enhanced image also led some social media users to falsely claim the object in Pretti's right hand was a weapon, but analysis of the verified footage showed he was holding a phone. That analysis contradicted claims by officials in President Donald Trump's administration that Pretti posed a threat to officers.

Disinformation watchdog NewsGuard said the use of AI tools to enhance details of witness footage can lead to fabrications that "misrepresent reality, in service to advancing an agenda." "AI tools are increasingly being used on social media to 'enhance' unclear images during breaking news events," NewsGuard said in a report. "AI 'enhancements' can invent faces, weapons, and other critical details that were never visible in original footage -- or in real life."

The trend underscores a new digital reality in which fake images -- created or distorted using artificial intelligence tools -- often go viral on social media in the immediate aftermath of major news events such as shootings. "Even subtle changes to the appearance of a person can alter the reception of an image to be more or less favorable," Walter Scheirer, from the University of Notre Dame, told AFP, referring to the distorted image presented at the US Senate. "In the recent past, creating lifelike visuals took some effort. However now, with AI, this can be done instantly, making such content available to politicians on command."

On Friday, the Trump administration charged prominent journalist Don Lemon and others with civil rights crimes over coverage of immigration protests in Minneapolis, as the president branded Pretti an "agitator." Pretti's killing marked the second fatal shooting of a Minneapolis protester this month by federal agents. Earlier this month, AI deepfakes flooded online platforms following the killing of another protester -- 37-year-old Renee Nicole Good. AFP found dozens of posts across social media, in which users shared AI-generated images purporting to "unmask" the agent who shot her. Some X users used AI chatbot Grok to digitally undress an old photo of Good.


AI-altered images of Alex Pretti, the protester killed by border agents in Minneapolis, were aired by major news outlets including MS NOW and displayed on the US Senate floor. An AI-enhanced version of his official portrait ran on cable news, while a manipulated frame from footage of the shooting, which contained visible digital distortions, was shown by a senator. The incidents have sparked debate about how generative AI models are sowing confusion during breaking news events and influencing political discourse.

AI-Enhanced Photograph Spreads Across Media and Government

An AI-altered image depicting the final moments before US immigration agents shot 37-year-old intensive care nurse Alex Pretti has exposed a troubling gap in how media organizations and government officials verify visual content during breaking news events. The manipulated image, which purports to show Pretti surrounded by officers with one pointing a gun at his head, was displayed on the US Senate floor by Senator Dick Durbin, a Democrat from Illinois [2]. Separately, cable news channel MS NOW aired an AI-edited version of Pretti's portrait [1]. The fatal shooting of Pretti in Minneapolis sparked nationwide outrage, and the subsequent spread of AI-generated misinformation has raised urgent questions about how generative AI models are being weaponized to misrepresent reality in politically charged situations.

MS NOW tells Snopes that it did not create the edit itself but rather sourced the image from the internet without realizing it had been altered. Other news organizations, including the Daily Mail and International Business Times, also ran the same AI-enhanced photograph [1]. The original photo was Pretti's official portrait from the United States Department of Veterans Affairs, where he worked as an ICU nurse. Someone, likely an internet user, ran that low-quality image through a generative AI model to produce a clearer version. The result altered Pretti's appearance, making his shoulders appear broader, his skin more tanned, and his nose less prominent.

Source: PetaPixel

How Generative AI Models Fabricate Details

When a user feeds an image into an AI model like ChatGPT or a similar tool and asks it to improve the quality, the AI treats the image and text as a prompt and creates an entirely novel image. While the output may closely resemble the original photo, it no longer represents reality. AI models carry biases that tend to make people appear more attractive, which explains why Pretti appeared more handsome in the altered version [1]. This has serious implications for journalism and political debate, as even subtle changes can alter public perception of, and emotional responses to, tragic events.

The AI-altered image that reached the US Senate contained several obvious errors, including a headless agent, yet it still spread rapidly across Instagram, Facebook, X, and Threads [2]. The manipulated image also led some social media users to falsely claim the object in Pretti's right hand was a weapon, when analysis of verified footage showed he was holding a phone. That analysis contradicted claims by officials in the Trump administration that Pretti posed a threat to officers.

Political Fallout and Senate Acknowledgment

Senator Dick Durbin displayed the AI-enhanced image during a speech on the Senate floor and shared a video of the speech on X on Thursday, writing: "I am on the Senate floor to condemn the killing of US citizens at the hands of federal immigration officers. And to demand the Trump Administration take accountability for its actions." [2] After X users demanded an apology for promoting the manipulated image, Durbin's office acknowledged the mistake on Friday. A spokesperson told AFP: "Our office used a photo on the Senate floor that had been widely circulated online. Staff didn't realize until after the fact that the image had been slightly edited and regret that this mistake occurred."

The gaffe underscores how lifelike AI visuals, even those containing glaring errors, are seeping into everyday discourse, sowing confusion during breaking news events and influencing political debate at the highest levels. Walter Scheirer from the University of Notre Dame told AFP that even subtle changes to a person's appearance can alter how an image is received. "In the recent past, creating lifelike visuals took some effort. However now, with AI, this can be done instantly, making such content available to politicians on command." [2]

Broader Pattern of Visual Misinformation

The Pretti portrait is not the only AI creation spreading misinformation from Minneapolis. Another viral image that purportedly showed the moment Pretti was shot and killed was also AI-generated, and Reuters reports it does not match the multiple videos taken of Pretti's death [1]. Meanwhile, the White House published a manipulated photo of a protester being arrested, altering the face of Nekima Levy Armstrong to make it appear she was crying with tears streaming down her face when she actually wore a stoic expression [1].

Source: France 24

Deepfakes and Digital Manipulation Escalate

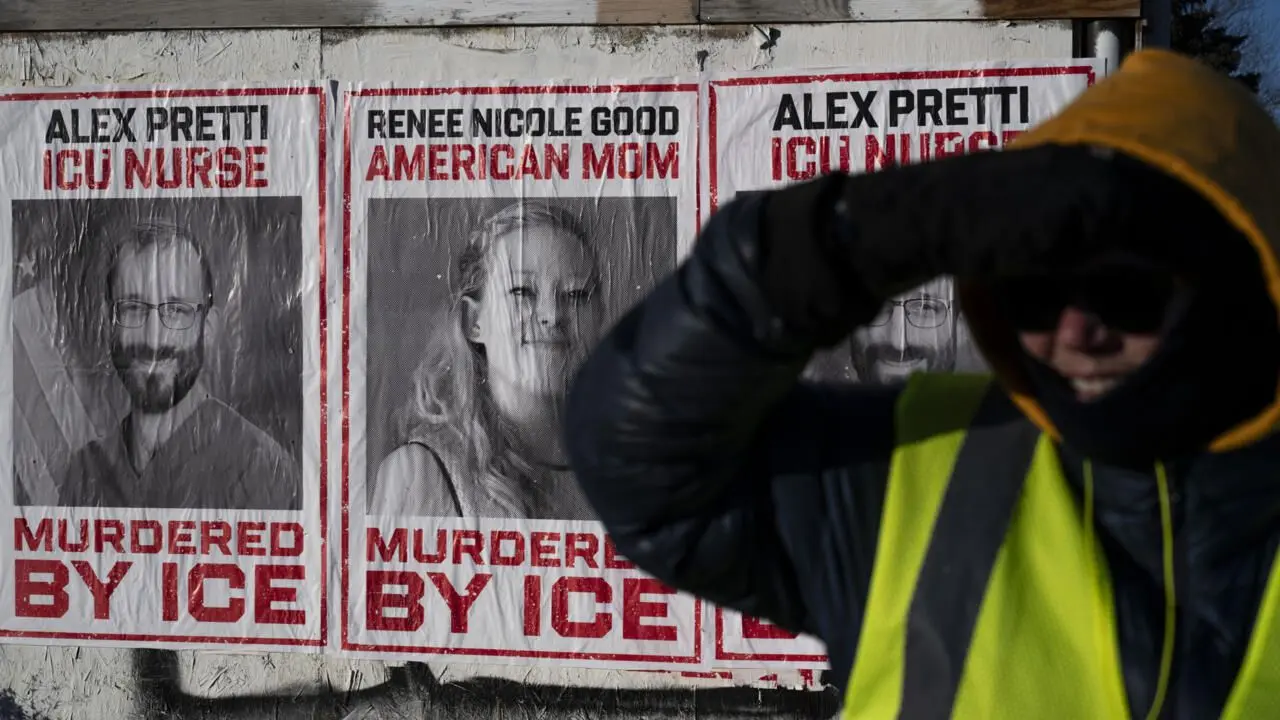

Pretti's killing marked the second fatal shooting of a Minneapolis protester this month by federal agents. Earlier in January, AI deepfakes flooded online platforms following the killing of another protester, 37-year-old Renee Nicole Good. AFP found dozens of posts across social media where users shared AI-generated images purporting to "unmask" the agent who shot her. Some X users even used AI chatbot Grok to digitally undress an old photo of Good [2]. On Friday, the Trump administration charged prominent journalist Don Lemon and others with civil rights crimes over coverage of immigration protests in Minneapolis, as the president branded Pretti an "agitator." [2]

For AI researchers and policymakers, these incidents signal an urgent need for verification protocols that can keep pace with the speed at which generative AI can produce convincing but false imagery. The challenge extends beyond detecting obvious fabrications to identifying subtle enhancements that fundamentally alter the truth while maintaining plausibility. As AI tools become more accessible and powerful, the line between documentation and digital manipulation continues to blur, threatening the integrity of visual evidence in journalism, legal proceedings, and political discourse.


Summarized by

Navi
