AI-Enhanced Brain-Computer Interface Boosts Performance for Paralyzed Users

5 Sources

[1]

Brain-computer interface control with artificial intelligence copilots - Nature Machine Intelligence

Motor brain-computer interfaces (BCIs) decode neural signals to help people with paralysis move and communicate. Even with important advances in the past two decades, BCIs face a key obstacle to clinical viability: BCI performance should strongly outweigh costs and risks. To significantly increase the BCI performance, we use shared autonomy, where artificial intelligence (AI) copilots collaborate with BCI users to achieve task goals. We demonstrate this AI-BCI in a non-invasive BCI system decoding electroencephalography signals. We first contribute a hybrid adaptive decoding approach using a convolutional neural network and ReFIT-like Kalman filter, enabling healthy users and a participant with paralysis to control computer cursors and robotic arms via decoded electroencephalography signals. We then design two AI copilots to aid BCI users in a cursor control task and a robotic arm pick-and-place task. We demonstrate AI-BCIs that enable a participant with paralysis to achieve 3.9-times-higher performance in target hit rate during cursor control and control a robotic arm to sequentially move random blocks to random locations, a task they could not do without an AI copilot. As AI copilots improve, BCIs designed with shared autonomy may achieve higher performance.

[2]

AI-powered brain device allows paralysed man to control robotic arm

A man with partial paralysis was able to operate a robotic arm when he used a non-invasive brain device partially controlled by artificial intelligence (AI), a study reports. The AI-enabled device also allowed the man to perform screen-based tasks four times better than when he used the device on its own. Brain-computer interfaces (BCIs) capture electrical signals from the brain, then analyse them to determine what the person wants to do and translate the signals into commands. Some BCIs are surgically implanted and record signals directly from the brain, which typically makes them more accurate than non-invasive devices that are attached to the scalp. Jonathan Kao, who studies AI and brain-computer interfaces at the University of California, Los Angeles, and his colleagues wanted to improve the performance of non-invasive BCIs. The results of their work are published in Nature Machine Intelligence this week. First, the team tested their BCI by tasking four people -- one with paralysis and three without -- with moving a computer cursor to a particular spot on a screen. All four were able to complete the task the majority of the time. When the authors added an AI co-pilot to the device, the participants completed the task more quickly and had a higher success rate. The device with the co-pilot doesn't need to decode as much brain activity because the AI can infer what the user wants to do, says Kao. "These co-pilots are essentially collaborating with the BCI user and trying to infer the goals that the BCI user is wishing to achieve, and then helps to complete those actions," he adds. The researchers also trained an AI co-pilot to control a robotic arm. The participants were required to use the robotic arm to pick up coloured blocks and move them to marked spots on a table. The person with paralysis could not complete the task using the conventional, non-invasive BCI, but was successful 93% of the time using the BCI with an AI co-pilot. Those without paralysis also completed the task more quickly when using the co-pilot.

[3]

Brain-AI System Translates Thoughts Into Movement - Neuroscience News

Summary: Researchers have created a noninvasive brain-computer interface enhanced with artificial intelligence, enabling users to control a robotic arm or cursor with greater accuracy and speed. The system translates brain signals from EEG recordings into movement commands, while an AI camera interprets user intent in real time. In tests, participants -- including one paralyzed individual -- completed tasks significantly faster with AI assistance, even accomplishing actions otherwise impossible without it. Researchers say this breakthrough could pave the way for safer, more accessible assistive technologies for people with paralysis or motor impairments. UCLA engineers have developed a wearable, noninvasive brain-computer interface system that utilizes artificial intelligence as a co-pilot to help infer user intent and complete tasks by moving a robotic arm or a computer cursor. Published in Nature Machine Intelligence, the study shows that the interface demonstrates a new level of performance in noninvasive brain-computer interface, or BCI, systems. This could lead to a range of technologies to help people with limited physical capabilities, such as those with paralysis or neurological conditions, handle and move objects more easily and precisely. The team developed custom algorithms to decode electroencephalography, or EEG -- a method of recording the brain's electrical activity -- and extract signals that reflect movement intentions. They paired the decoded signals with a camera-based artificial intelligence platform that interprets user direction and intent in real time. The system allows individuals to complete tasks significantly faster than without AI assistance. "By using artificial intelligence to complement brain-computer interface systems, we're aiming for much less risky and invasive avenues," said study leader Jonathan Kao, an associate professor of electrical and computer engineering at the UCLA Samueli School of Engineering. 
"Ultimately, we want to develop AI-BCI systems that offer shared autonomy, allowing people with movement disorders, such as paralysis or ALS, to regain some independence for everyday tasks." State-of-the-art, surgically implanted BCI devices can translate brain signals into commands, but the benefits they currently offer are outweighed by the risks and costs associated with neurosurgery to implant them. More than two decades after they were first demonstrated, such devices are still limited to small pilot clinical trials. Meanwhile, wearable and other external BCIs have demonstrated a lower level of performance in detecting brain signals reliably. To address these limitations, the researchers tested their new noninvasive AI-assisted BCI with four participants -- three without motor impairments and a fourth who was paralyzed from the waist down. Participants wore a head cap to record EEG, and the researchers used custom decoder algorithms to translate these brain signals into movements of a computer cursor and robotic arm. Simultaneously, an AI system with a built-in camera observed the decoded movements and helped participants complete two tasks. In the first task, they were instructed to move a cursor on a computer screen to hit eight targets, holding the cursor in place at each for at least half a second. In the second challenge, participants were asked to activate a robotic arm to move four blocks on a table from their original spots to designated positions. All participants completed both tasks significantly faster with AI assistance. Notably, the paralyzed participant completed the robotic arm task in about six-and-a-half minutes with AI assistance, whereas without it, he was unable to complete the task. The BCI deciphered electrical brain signals that encoded the participants' intended actions. Using a computer vision system, the custom-built AI inferred the users' intent -- not their eye movements -- to guide the cursor and position the blocks. 
"Next steps for AI-BCI systems could include the development of more advanced co-pilots that move robotic arms with more speed and precision, and offer a deft touch that adapts to the object the user wants to grasp," said co-lead author Johannes Lee, a UCLA electrical and computer engineering doctoral candidate advised by Kao. "And adding in larger-scale training data could also help the AI collaborate on more complex tasks, as well as improve EEG decoding itself." The paper's authors are all members of Kao's Neural Engineering and Computation Lab, including Sangjoon Lee, Abhishek Mishra, Xu Yan, Brandon McMahan, Brent Gaisford, Charles Kobashigawa, Mike Qu and Chang Xie. A member of the UCLA Brain Research Institute, Kao also holds faculty appointments in the Computer Science Department and the Interdepartmental Ph.D. Program in Neuroscience. Funding: The research was funded by the National Institutes of Health and the Science Hub for Humanity and Artificial Intelligence, which is a collaboration between UCLA and Amazon. The UCLA Technology Development Group has applied for a patent related to the AI-BCI technology. Brain-computer interface control with artificial intelligence copilots Motor brain-computer interfaces (BCIs) decode neural signals to help people with paralysis move and communicate. Even with important advances in the past two decades, BCIs face a key obstacle to clinical viability: BCI performance should strongly outweigh costs and risks. To significantly increase the BCI performance, we use shared autonomy, where artificial intelligence (AI) copilots collaborate with BCI users to achieve task goals. We demonstrate this AI-BCI in a non-invasive BCI system decoding electroencephalography signals. 
We first contribute a hybrid adaptive decoding approach using a convolutional neural network and ReFIT-like Kalman filter, enabling healthy users and a participant with paralysis to control computer cursors and robotic arms via decoded electroencephalography signals. We then design two AI copilots to aid BCI users in a cursor control task and a robotic arm pick-and-place task. We demonstrate AI-BCIs that enable a participant with paralysis to achieve 3.9-times-higher performance in target hit rate during cursor control and control a robotic arm to sequentially move random blocks to random locations, a task they could not do without an AI copilot. As AI copilots improve, BCIs designed with shared autonomy may achieve higher performance.

[4]

AI co-pilot boosts noninvasive brain-computer interface by interpreting user intent

UCLA engineers have developed a wearable, noninvasive brain-computer interface system that utilizes artificial intelligence as a co-pilot to help infer user intent and complete tasks by moving a robotic arm or a computer cursor. Published in Nature Machine Intelligence, the study shows that the interface demonstrates a new level of performance in noninvasive brain-computer interface, or BCI, systems. This could lead to a range of technologies to help people with limited physical capabilities, such as those with paralysis or neurological conditions, handle and move objects more easily and precisely. The team developed custom algorithms to decode electroencephalography, or EEG -- a method of recording the brain's electrical activity -- and extract signals that reflect movement intentions. They paired the decoded signals with a camera-based artificial intelligence platform that interprets user direction and intent in real time. The system allows individuals to complete tasks significantly faster than without AI assistance. "By using artificial intelligence to complement brain-computer interface systems, we're aiming for much less risky and invasive avenues," said study leader Jonathan Kao, an associate professor of electrical and computer engineering at the UCLA Samueli School of Engineering. "Ultimately, we want to develop AI-BCI systems that offer shared autonomy, allowing people with movement disorders, such as paralysis or ALS, to regain some independence for everyday tasks." State-of-the-art, surgically implanted BCI devices can translate brain signals into commands, but the benefits they currently offer are outweighed by the risks and costs associated with neurosurgery to implant them. More than two decades after they were first demonstrated, such devices are still limited to small pilot clinical trials. Meanwhile, wearable and other external BCIs have demonstrated a lower level of performance in detecting brain signals reliably. 
To address these limitations, the researchers tested their new noninvasive AI-assisted BCI with four participants -- three without motor impairments and a fourth who was paralyzed from the waist down. Participants wore a head cap to record EEG, and the researchers used custom decoder algorithms to translate these brain signals into movements of a computer cursor and robotic arm. Simultaneously, an AI system with a built-in camera observed the decoded movements and helped participants complete two tasks. In the first task, they were instructed to move a cursor on a computer screen to hit eight targets, holding the cursor in place at each for at least half a second. In the second challenge, participants were asked to activate a robotic arm to move four blocks on a table from their original spots to designated positions. All participants completed both tasks significantly faster with AI assistance. Notably, the paralyzed participant completed the robotic arm task in about six-and-a-half minutes with AI assistance, whereas without it, he was unable to complete the task. The BCI deciphered electrical brain signals that encoded the participants' intended actions. Using a computer vision system, the custom-built AI inferred the users' intent -- not their eye movements -- to guide the cursor and position the blocks. "Next steps for AI-BCI systems could include the development of more advanced co-pilots that move robotic arms with more speed and precision, and offer a deft touch that adapts to the object the user wants to grasp," said co-lead author Johannes Lee, a UCLA electrical and computer engineering doctoral candidate advised by Kao. "And adding in larger-scale training data could also help the AI collaborate on more complex tasks, as well as improve EEG decoding itself." The paper's authors are all members of Kao's Neural Engineering and Computation Lab. 
A member of the UCLA Brain Research Institute, Kao also holds faculty appointments in the Computer Science Department and the Interdepartmental Ph.D. Program in Neuroscience.

[5]

AI brain interface lets users move robot arm with pure thought

Using the AI-BCI system, a participant successfully completed the "pick-and-place" task moving four blocks with the assistance of AI and a robotic arm. A new wearable, noninvasive brain-computer interface (BCI) system that uses artificial intelligence has been designed to help people with physical disabilities. The University of California, Los Angeles, developed this new BCI where an AI acts as a "co-pilot." It works alongside users to understand their intentions and help control a robotic arm or computer cursor. The system can potentially create new technologies to improve how people with limited mobility, like those with paralysis or neurological conditions, handle objects.

UCLA researchers develop a non-invasive brain-computer interface system with AI assistance, significantly improving performance for users, including those with paralysis, in controlling robotic arms and computer cursors.

Breakthrough in Brain-Computer Interface Technology

Researchers at the University of California, Los Angeles (UCLA) have developed a groundbreaking non-invasive brain-computer interface (BCI) system that incorporates artificial intelligence (AI) to significantly enhance performance. This innovative technology, detailed in a study published in Nature Machine Intelligence, demonstrates a new level of capability in non-invasive BCI systems, potentially revolutionizing assistive technologies for individuals with paralysis or motor impairments [1][2].

The AI-Enhanced BCI System

The system uses electroencephalography (EEG) to record brain activity and custom algorithms to decode these signals into movement intentions. What sets this BCI apart is its integration with an AI "co-pilot" that uses computer vision to interpret user intent in real time, allowing for more accurate and efficient control of robotic arms or computer cursors [3].

Jonathan Kao, the study leader and associate professor at UCLA, explains, "By using artificial intelligence to complement brain-computer interface systems, we're aiming for much less risky and invasive avenues" [4]. This approach addresses a key challenge in BCI development: achieving high performance without the risks associated with surgically implanted devices.

Impressive Performance in User Tests

The research team conducted tests with four participants, including one individual with paralysis from the waist down. The trials involved two main tasks:

- Moving a computer cursor to hit eight targets on a screen

- Controlling a robotic arm to move blocks to designated positions
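To make the cursor task concrete: the source coverage describes a target as hit once the cursor is held in place at it for at least half a second. The following is a minimal sketch of such a dwell check, not the study's implementation. The function name `target_hit`, the circular target with a `radius`, and the fixed sampling interval `dt` are all assumptions introduced for illustration.

```python
from math import ceil, hypot


def target_hit(samples, target, radius, dt, hold_s=0.5):
    """Return True once the cursor dwells on the target for `hold_s` seconds.

    samples: iterable of (x, y) cursor positions, one every `dt` seconds.
    target:  (x, y) centre of a circular target (shape is an assumption).
    Each consecutive in-target sample counts as `dt` seconds of dwell.
    """
    # Number of consecutive in-target samples that add up to the hold time.
    needed = max(1, ceil(hold_s / dt - 1e-9))
    streak = 0
    for x, y in samples:
        if hypot(x - target[0], y - target[1]) <= radius:
            streak += 1
            if streak >= needed:
                return True
        else:
            # Leaving the target resets the dwell timer.
            streak = 0
    return False
```

With `dt=0.1`, five consecutive samples inside the target satisfy the half-second hold, while a single sample outside the target restarts the count.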

Results showed that all participants completed the tasks significantly faster with AI assistance. Notably, the paralyzed participant was able to complete the robotic arm task in about six and a half minutes with AI assistance, a feat they could not accomplish without it

2

4

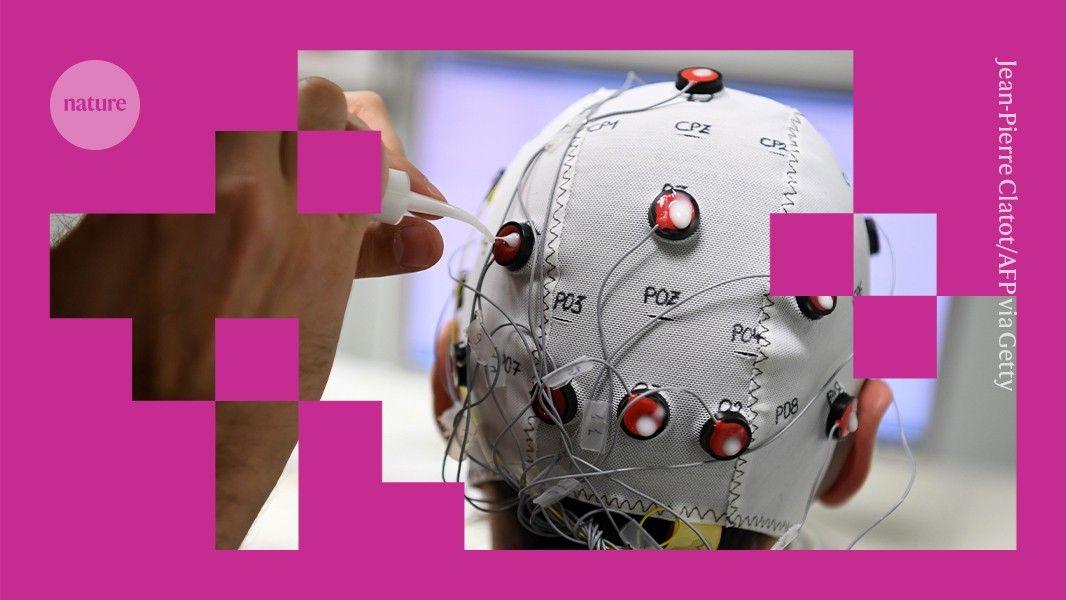

.How the System Works

The BCI system combines several key components [3][4]:

- EEG recording: Participants wear a head cap that records brain electrical activity

- Signal decoding: Custom algorithms translate brain signals into movement commands

- AI interpretation: A camera-based AI system observes the decoded movements and infers user intent

- Task execution: The system guides the cursor or robotic arm based on the interpreted signals
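The last two steps can be illustrated with a small sketch of shared autonomy. The article does not say how the co-pilot combines its inference with the decoded signal, so everything here is an assumption: the function names, the `alpha` blending weight, and the rule of picking the candidate target best aligned with the decoded velocity. Simple linear blending stands in for the study's co-pilots, and a known list of target positions stands in for the camera.

```python
from math import hypot


def unit(vx, vy):
    """Normalise a 2D vector; the zero vector stays zero."""
    n = hypot(vx, vy)
    return (vx / n, vy / n) if n > 0 else (0.0, 0.0)


def infer_goal(cursor, decoded_v, targets):
    """Guess the intended target: the one whose direction from the cursor
    best matches the decoded velocity (highest cosine similarity)."""
    dx, dy = unit(*decoded_v)

    def alignment(t):
        tx, ty = unit(t[0] - cursor[0], t[1] - cursor[1])
        return dx * tx + dy * ty

    return max(targets, key=alignment)


def copilot_step(cursor, decoded_v, targets, alpha=0.5, speed=1.0, dt=0.1):
    """One control step: blend the user's decoded velocity with a
    goal-directed velocity, then move the cursor.

    alpha=0 gives pure BCI control; alpha=1 gives full co-pilot control.
    """
    goal = infer_goal(cursor, decoded_v, targets)
    gx, gy = unit(goal[0] - cursor[0], goal[1] - cursor[1])
    vx = (1 - alpha) * decoded_v[0] + alpha * speed * gx
    vy = (1 - alpha) * decoded_v[1] + alpha * speed * gy
    return (cursor[0] + vx * dt, cursor[1] + vy * dt), goal
```

For example, a noisy decoded velocity of `(1.0, 0.2)` from the origin, with candidate targets to the right, above, and to the left, selects the right-hand target and halves the off-axis drift when `alpha=0.5`.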

Implications for Assistive Technology

This breakthrough has significant implications for individuals with limited physical capabilities. The non-invasive nature of the system, combined with its enhanced performance, could make it a more accessible and practical solution for a wider range of users [2].

Johannes Lee, a co-lead author of the study, suggests that future developments could include "more advanced co-pilots that move robotic arms with more speed and precision, and offer a deft touch that adapts to the object the user wants to grasp" [4].

Challenges and Future Directions

While this AI-enhanced BCI system represents a significant advance, there are still challenges to overcome. The researchers aim to further improve the system's capabilities by [3][4]:

- Developing more sophisticated AI co-pilots

- Incorporating larger-scale training data to handle more complex tasks

- Enhancing EEG decoding accuracy

As AI technology continues to evolve, BCIs designed with shared autonomy may achieve even higher performance, potentially transforming the lives of individuals with motor impairments and bringing us closer to seamless brain-computer interaction [1].

Summarized by Navi
