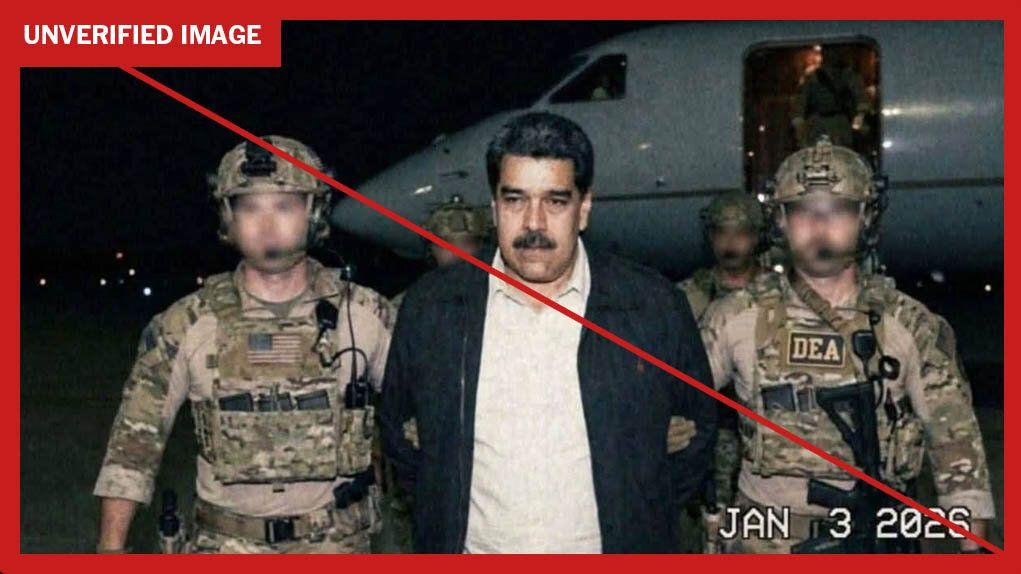

AI-generated images of Nicolás Maduro spread rapidly despite platform safeguards

12 Sources

[1]

AI-generated videos of crowds celebrating Maduro's removal go viral after U.S. operation

Governments move toward fines and laws as AI misinformation accelerates. Following the U.S. military operation in Venezuela that led to the removal of its leader, Nicolás Maduro, artificial intelligence-generated videos purporting to show Venezuelan citizens celebrating in the streets have gone viral across social media. These AI clips, depicting celebrating crowds, have amassed millions of views across platforms like TikTok, Instagram and X. One of the earliest and most widely shared clips on X was posted by an account named "Wall Street Apes," which has over 1 million followers on the platform. The post shows crowds crying and celebrating in the streets, thanking the U.S. and President Donald Trump for removing Maduro. The video has since been flagged by a community note, a crowdsourced fact-checking feature on X that allows users to add context to posts they believe are misleading. The note read: "This video is AI generated and is currently being presented as a factual statement intended to mislead people." The clip has been viewed over 5.6 million times and reshared by at least 38,000 accounts, including by business mogul Elon Musk, before he eventually removed the repost. CNBC was unable to confirm the origin of the video, though fact-checkers at BBC and AFP said the earliest known version of the clip appeared on the TikTok account @curiousmindusa, which regularly posts AI-generated content. Even before such videos appeared, AI-generated images showing Maduro in U.S. custody were circulating prior to the Trump administration releasing an authentic image of the captured leader. The deposed Venezuelan leader was captured on Jan. 3, 2026, after U.S. forces conducted airstrikes and a ground raid, an operation that has dominated global headlines at the start of the new year. 
Along with the AI-generated videos, the AFP's fact-check team also flagged a number of examples of misleading content about Maduro's ousting, including footage of celebrations in America falsely presented as scenes from Venezuela. Misinformation from major news events is not new. Similar false or misleading content circulated during the Israel-Palestine and Russia-Ukraine conflicts. However, the massive reach and realism of AI-generated content related to recent developments in Venezuela are stark examples of how AI is advancing as a tool for misinformation. Platforms such as Sora and Midjourney have made it easier than ever to quickly generate hyper-realistic footage and pass it off as real in the chaos of fast-breaking events. Such deepfakes often seek to amplify certain political narratives or sow confusion among global audiences. Last year, AI-generated videos of women complaining about losing their Supplemental Nutrition Assistance Program, or SNAP, benefits during a government shutdown also went viral. One such AI-generated video fooled Fox News, which presented it as real in an article that was later removed. Social media companies face growing pressure to step up efforts to label this type of AI-generated content. Last year, India's government proposed a law requiring such labeling, while Spain approved fines of up to 35 million euros for unlabeled AI materials. Major platforms, including TikTok and Meta, have rolled out AI detection and labeling tools, though results appear to have been mixed. CNBC identified some videos on TikTok claiming to be celebrations in Venezuela that were labeled as AI-generated. X, for its part, has relied mostly on community notes, a system critics say often reacts too slowly to prevent falsehoods from spreading before being labeled. Adam Mosseri, who oversees Instagram and Threads, acknowledged the challenge in a recent post.
"All the major platforms will do good work identifying AI content, but they will get worse at it over time as AI gets better at imitating reality," he said. "There is already a growing number of people who believe, as I do, that it will be more practical to fingerprint real media than fake media," he added.

[2]

A.I. Images of Maduro Spread Rapidly, Despite Safeguards

Just hours after news spread online that Nicolás Maduro, Venezuela's ousted president, was arrested by U.S. forces, social media was overrun with photographs depicting him in handcuffs, escorted by drug enforcement agents or surrounded by soldiers on a military aircraft. The images were fake -- the likely product of artificial intelligence tools -- in what experts said is one of the first times that A.I. imagery has depicted prominent figures while a historical moment was rapidly unfolding. "This was the first time I'd personally seen so many A.I.-generated images of what was supposed to be a real moment in time," said Roberta Braga, the executive director of the Digital Democracy Institute of the Americas, a think tank. Most major A.I. image generators have rules against deceptive or misleading content, and several explicitly ban fake images of public figures like Mr. Maduro. And while it is difficult to identify exactly which tool was responsible for creating the most popular fakes that circulated after the attack, many mainstream tools were willing to create similar images in tests conducted by The New York Times on Monday. They often did so free of charge and in a matter of seconds. The threat that such fakes could confuse and mislead people as news breaks has loomed large over the tools since they gained widespread popularity. The technology has now become advanced enough, and popular enough, to make that threat a reality. Jeanfreddy Gutiérrez, who runs a fact-checking operation in Caracas but is based in Colombia, noticed the fake images of Mr. Maduro spreading on Saturday, the day his arrest was announced. He said some circulated through Latin American news outlets before being quietly swapped out for an image shared by President Trump. Mr. Gutiérrez published a fact-check this weekend that identified several deepfake images of Mr. Maduro, including one that "showed clear signs" that it was made by Gemini, Google's A.I. app.
"They spread so fast -- I saw them in almost every Facebook and WhatsApp contact I have," he said. NewsGuard, a company that tracks the reliability of online information, tracked five fabricated and out-of-context images and two misrepresented videos linked to Mr. Maduro's capture. It said the content collectively garnered more than 14.1 million views on X in under two days (the content also appeared, with more limited engagement, on Meta platforms like Instagram and Facebook). After Mr. Maduro's arrest, The Times tested a dozen A.I. generators to determine which tools would create fake images of Mr. Maduro. Most of the tools, including Gemini and models from OpenAI and X, quickly created the requested images. The fakes undermined assurances from many technology companies that they have designed safeguards to prevent the tools from being abused. Google has rules prohibiting users from generating misinformation and specifically bars misleading content that is "related to governmental or democratic processes." But a Google spokesman said that the company does not categorically bar images of prominent people and that a fake image depicting Mr. Maduro being arrested that was generated in tests by The Times did not violate its rules. OpenAI's tool, ChatGPT, said it could not create images of Mr. Maduro. But when The Times queried through another website that taps into the same model as ChatGPT, it relented and produced the images. In an emailed statement, an OpenAI spokesperson said the company uses safeguards to protect public figures but focuses on preventing harms like sexual deepfakes or violence. Grok, the model by X.ai, immediately produced lifelike images of Mr. Maduro's arrest. The spokesman for Google said the company has focused on its hidden watermark, called SynthID, that Gemini embeds in every image it creates, enabling people to determine whether it was made by its A.I. tool.
"You can simply upload an image to the Gemini app and instantly confirm whether it was generated using Google A.I.," he said. Grok has come under heavy scrutiny, including last week when watchdogs reported that it was responding to requests to remove clothing from images of children. Grok's account posted that its team identified "lapses in safeguards" and that it was "urgently fixing them." X.ai did not respond to a request for comment. Other tools, like Facebook's Meta A.I. chatbot and Flux.ai, responded by creating images showing a man with a mustache being arrested, but did not accurately depict Mr. Maduro's features. When prompted to create a more realistic depiction of the ousted president, Meta's chatbot replied: "I can't generate that." Meta declined to comment. Along with popular tools created by tech giants are a growing assortment of lesser-known image generators created by other A.I. companies. Many of those tools have few guardrails, allowing meme-makers and activists to create A.I. fakes targeting politicians, celebrities and other notable people. In tests by The Times, tools including Z-Image, Reve and Seedream responded by creating realistic images of Mr. Maduro being arrested outside a military aircraft. Generative artificial intelligence tools are more accessible than ever before, said Mariví Marín, the director of ProboxVE, a nonprofit that analyzes digital ecosystems in Latin America. She wrote in a text that the Maduro deepfakes that emerged over the weekend, most of them before an official photo was released, were a response to "a collective need to fill in 'the perfect image' of a moment that each Venezuelan imagines somewhat differently," as a sort of "reaction to the urgency of filling that visual gap (with real or unreal content) as quickly as possible." Venezuela is among the most restrictive countries in the world for press freedoms, with limited media sources, making trustworthy information hard to come by. 
In the news vacuum, the mistrust bred by deepfakes is exacerbated. Mr. Gutiérrez said many people were skeptical that the image of Mr. Maduro posted by Mr. Trump was authentic. "It's funny, but very common: Doubt the truth and believe the lie," he said. Latin American fact-checkers like Mr. Gutiérrez said they had been primed by earlier synthetic videos and images of Mr. Trump and former President Joseph R. Biden Jr. to be on the lookout for deepfakes of public figures. And since the pandemic, fact-checking groups across the region have collaborated to tackle cross-border rumors and conspiracy theories that surface during international conflicts and elections. "It just took a lot of work, because we always lose the battle to convince people of the truth," Mr. Gutiérrez said.

[3]

Debunking Venezuela celebration videos and AI images of Maduro

AI images claiming to show Nicolás Maduro in custody after his capture by the US have gained tens of millions of views online. Maduro was captured by the US in the early hours of Saturday morning. The first fake AI image, apparently showing him being escorted off a plane, emerged within hours. The image was not shared by any official US channels; it was instead posted on X by an "AI video art enthusiast" account. We've checked it using Google's SynthID AI-watermark detector, which found it was generated or edited with Google AI. More AI-generated pictures began to spread in the ensuing hours, appearing to show more angles of Maduro in custody. Visible watermarks on these images show they originated from an Instagram account called ultravfx, whose profile describes it as being run by a "Professional in artificial intelligence". SynthID says all of these further images were generated or edited with Google AI. Donald Trump posted the first real photo of Maduro, handcuffed aboard the USS Iwo Jima, on Saturday morning. Even after this real photo was published, AI-generated images continued to spread, now updated to include the grey tracksuit worn by Maduro. Reverse image searches show these updated fakes were first posted on TikTok by a graphic design account. Once again, the SynthID AI-watermark detector says these further images were generated or edited with Google AI.

[4]

AI images of Maduro capture reap millions of views on social media

Lack of verified information and rapidly advanced AI tools make it difficult to separate fact from fiction on US attack. Minutes after Donald Trump announced a "large-scale strike" against Venezuela early on Saturday morning, false and misleading AI-generated images began flooding social media. There were fake photos of Nicolás Maduro being escorted off a plane by US law enforcement agents, images of jubilant Venezuelans pouring into the streets of Caracas and videos of missiles raining down on the city - all fake. The fabricated content intermixed with real videos and photos of US aircraft flying over the Venezuelan capital and explosions lighting up the dark sky. A lack of verified information about the raid coupled with AI tools' rapidly advancing capabilities made discerning fact from fiction about the incursion on Caracas difficult. By the time Trump posted a verified photo of Maduro blindfolded, handcuffed and dressed in grey sweatpants aboard the USS Iwo Jima warship, the fake images with the US Drug Enforcement Administration (DEA) agents had already gone viral. Across X, Instagram, Facebook and TikTok, the AI photos have been seen and shared millions of times, according to the factchecking site NewsGuard. Vince Lago, the mayor of Coral Gables, Florida, posted the fake photo of Maduro being escorted by the DEA agents to Instagram, saying that the Venezuelan president "is the leader of a narco-terrorist organization threatening our country". Lago's post received more than 1,500 likes and is still up as of this writing. Tools for detecting manipulated content, such as reverse image search and AI-detection sites, can help assess whether online images are accurate, but they are inconsistent. Sofia Rubinson, a senior editor who studies misinformation and conspiracy theories for NewsGuard, told the Guardian that the fake images of Caracas are similar to actual events, which makes it even more difficult to figure out what is real.
"Many of the AI-generated and out-of-context visuals that are currently flooding social media do not drastically distort the facts on the ground," Rubinson said. "Still, the use of AI-generated fabrications and dramatic, out-of-context footage is being used to fill gaps in real-time reporting and represents another tactic in the misinformation wars - and one that is harder for fact checkers to expose because the visuals often approximate reality." NewsGuard released a report on Monday afternoon that identified five fabricated and out-of-context photos as well as two videos of the military operation in Venezuela. One AI-generated photo shows a soldier posing next to Maduro, who has a black hood over his head. An out-of-context video shows a US special forces helicopter descending on a supposed Venezuelan military site - the actual footage was taken in June at the Fort Bragg army base in North Carolina. NewsGuard said the seven misleading photos and videos it identified have now garnered more than 14m views on X alone. Other footage from past events is also being circulated online and passed off as part of Saturday's strike. Laura Loomer, a far-right influencer and Trump confidant, posted footage of a poster of the Venezuelan president on X saying that "the people of Venezuela are ripping down posters of Maduro". According to Wired, the footage is from 2024. Loomer has since removed the post. Another rightwing influencer and conspiracy theorist, Alex Jones, posted an aerial video on X of thousands of people cheering in Caracas. "Millions of Venezuelans flooded the streets of Caracas and other major cities in celebration of the ouster of Communist dictator Nicholas Maduro," Jones wrote. "Now we need to see the same type of energy here on the HomeFront!" The video, which is still up, has reached more than 2.2m views. Comments on the post from Community Notes, X's crowdsource moderation tool, say that the video is "at least 18 months old". 
A reverse image search of the video shows that the footage is actually from a protest in Caracas after Maduro's disputed presidential win in July 2024. Grok, the platform's AI chatbot, also disputes the timeline of Jones' video, saying: "Current sources show no such celebrations in Caracas today, but pro-Maduro gatherings instead." Meta, X and TikTok did not respond to requests for comment.

[5]

That Video of Happy Crying Venezuelans After Maduro's Kidnapping? It's AI Slop

"The people cry for their freedom, thanks to the United States for freeing us." In the wake of the deadly attack on Venezuela and kidnapping of president Nicolás Maduro by the United States, netizens looking to manufacture support for the strikes have found a friend in generative AI. Since the kidnapping, people in the West have been fiercely debating who should control the narrative about the military action. By many accounts, those most impacted by the attacks -- Venezuelans living and working in Venezuela -- are resolutely opposed to the strikes, with thousands mobilizing in numerous Venezuelan cities in protest. (The death toll from the US strikes currently stands at 80 soldiers and civilians, a figure which will likely go up as the dust settles.) Though the attacks are still too recent to get accurate polling data of the country's sentiments, a November survey found that 86 percent of Venezuelans preferred for Maduro to remain head of state to resolve the country's economic woes. Only 8 percent favored the far-right opposition party, which has support from US president Donald Trump. Even many Venezuelans who oppose Maduro are also opposed to the United States' incursion to oust him. Yet if you ask American Trump supporters, Venezuelans are actually thrilled about the invasion. Their evidence: good ol' AI slop. In a post with over five million views on X-formerly-Twitter, the account Wall Street Apes shared a minute-long video of what are supposed to be Venezuelan citizens crying tears of joy over the attacks. Of course, as anyone versed in the visual language of gen AI will quickly notice, the video is a compilation of low-quality AI clips. "The people cry for their freedom, thanks to the United States for freeing us," the video's AI narrator exclaims. "The hero, thank you Donald Trump." "I'm so jealous," a US-run account with nearly 140,000 followers replied under the clip. "I want the same freedom and the same joy for Iran and the Iranian people."
Shooting back at the mega-viral post, critics warned that the AI slop augurs a frightening new era of misinformation. "The US empire's war propaganda is getting much more sophisticated," wrote geopolitical analyst Ben Norton. "You can bet the US government will use AI to try to justify its many more imperialist wars of aggression." Sure enough, plenty more AI generated misinformation has surfaced following the attacks, spread by conservative politicians like Vince Lago, mayor of Coral Gables, Florida. In some cases, AI generated images of Maduro in various US custody centers began to circulate in the hours immediately after his kidnapping -- and well before authentic images were released by the Trump administration. As Mexican political journalist José Luis Granados Ceja observed, the AI slop follows decades of efforts by the US government and media to manufacture consent among the western masses for intervention in the oil-rich South American state. "In 2002, Venezuelan President Hugo Chávez was briefly ousted in what came to be called the 'world's first media coup' where the lies said on TV paved the road," Ceja wrote in response to the AI propaganda. "It shouldn't be a surprise then that in 2025 new tech and fake AI videos are being used toward similar ends."

[6]

How an information void about Maduro's capture was filled by deepfakes

A real-time flood of AI-generated images and videos of Maduro's capture by US forces on Saturday was re-shared by public figures and garnered millions of views across Europe. Shortly after US President Donald Trump announced a "large-scale strike" on Venezuela on Saturday, European social media was awash with misleading, AI-generated images of Nicolás Maduro's capture and videos of Venezuelans celebrating around the world. Across TikTok, Instagram and X, AI-generated or altered images, old footage repurposed as new footage and out-of-context videos proliferated. Many racked up millions of views across platforms, and were shared by public figures, including Trump himself, X owner Elon Musk, the son of the former Brazilian president, Flávio Bolsonaro, and the official account of Portugal's right-wing Chega party. Some experts suggested this was one of the first incidents in which AI images of a major public political figure were created in real time as breaking news unfolded. But for others, what was unique about this wave of false information was not its scale, but the fact that so many people -- including public figures -- fell for it. On 3 January, US special forces captured the former leader of Venezuela and his wife in a lightning operation. Maduro faces federal drug trafficking charges, to which he has pleaded not guilty. Soon after his capture, multiple images of Maduro appeared across social media channels. Euronews' fact-checking team, The Cube, found examples of the below image shared in Spanish, Italian, French and Polish. One picture of Maduro disembarking an aircraft was shared by the official account of Portugal's far-right Chega party, as well as the party's founder André Ventura and other party members. It was also presented by several online media outlets as a real photo.
As the image spread rapidly online, fact-checkers noted that when the photo was run through Gemini's SynthID verification tool, the tool found digital watermarks indicating that all or part of the image had been generated or edited with Google AI. Analysis from Google Gemini found that "most or all of (the image) was generated or edited with Google AI." According to Detesia, a German startup that specialises in deepfake detection technology, its AI models found "substantial evidence" that the image was AI-generated. Detesia said the original photo sparked several similar ones, with a more modest social media reach, that also contained visible SynthID watermark artifacts. Later versions showed obvious signs of AI-generation, including images of soldiers with three hands and pictures depicting Maduro covered in blood. The image, which is very likely AI-generated, garnered millions of views across social media platforms, including one Spanish X post that had 2.6 million views alone. According to Tal Hagin, an information warfare analyst and media literacy lecturer, rapid advances in AI technology are making the task of identifying deepfakes even harder. "We are no longer at the stage where it's six months away, we are already there: unable to identify what's AI and what's not." In the immediate aftermath of Maduro's capture, individuals had few details and, in particular, no images. "When you have this vacuum of information, it needs to be filled somehow", said Hagin. "Individuals started uploading AI-generated images of Maduro in custody of the US Special Forces in order to fill that gap", he concluded. Another image, with more than 4.6 million views, purports to show Maduro sitting in a military cargo plane in white pyjamas. NewsGuard, a US-based platform that monitors information reliability, reported that the image shows clear signs of AI-generation, including a double row of passenger seat windows.
It also contradicts evidence: Maduro was transported out of Venezuela by helicopter to a US navy ship -- neither of which appears to have double-rowed windows like those in the picture. Shortly after false images of Maduro's arrest circulated, social media platforms were flooded with footage of protesters celebrating his capture. Some of these videos, including one shared by Musk of Venezuelans crying with joy, amassed more than 5.6 million views. Signs of AI generation include unnatural human movements and skin tones, and abnormal licence plates on cars. Dispatches from Venezuela indicate the public mood is complex, with part of the population expressing joy and hope at Maduro's capture, as well as fear and uncertainty about what a transition of power may look like. Others have condemned the US intervention in their country. Multiple protest videos have appeared with misleading captions. One video, which amassed more than 1 million views on X, was shared on 4 January with the caption "this is Caracas today. Huge crowds in support of Maduro." In reality, this video is from a march which Maduro and his youth supporters took part in at the Miraflores Palace in Caracas in November 2025. Another video widely shared on French-language X shows a man on a balcony holding a phone up to a crowd of people with the caption, "I've rarely seen a people as happy as the Venezuelans to finally be rid of Maduro thanks to American intervention." Fireworks can be seen in the background. Hagin says there are several indicators that cast doubt on the video's authenticity, including that the fireworks appear to be coming from within the crowd and are not producing an appropriate level of smoke. The image displayed on the phone also does not match the crowd below.
The danger of this volume of AI-generated footage, according to Hagin, is that it creates a false sense of a track record in people's minds: "if I've seen five different examples of Maduro in custody in different outfits -- it must all be fake." "There are videos that are 100% real, with absolutely no reason to doubt them, and people still say they're AI because they don't want them to be true," Hagin said. "It takes time to verify information, ensure that you're correct in what you're saying, and while you're fiddling around trying to verify this video, people are churning out more and more misinformation onto a platform." In addition to misleading visuals, false claims that US forces struck former Venezuelan President Hugo Chavez's mausoleum have proliferated online in multiple languages -- and were even shared by Colombian President Gustavo Petro. One image purports to show the mausoleum bombed by the US military during its capture operation. However, as noted by Hagin, the photo on the right is an AI-manipulated image based on a real photo of the mausoleum from 2013, with the destruction artificially added. The Hugo Chavez Foundation itself posted a video on Instagram on Monday showing the building intact, along with a phone displaying the date as Sunday.

[7]

How AI-generated images contributed to the disinformation about Maduro's capture

On the night of Friday, January 2, the US carried out a special forces raid and captured the president of Venezuela, Nicolas Maduro, and his wife, Cilia Flores. They were then transported to the US. As soon as the news broke, a number of images said to show the capture of the Venezuelan leader were widely shared on social media. However, these images were fake: they were generated by AI. A series of photos posted on January 3 - some taken face-on, others from above - were described as the first images to show the Venezuelan president after his capture by the US Army. According to one X user, they show Maduro being escorted across the tarmac by two US Drug Enforcement Administration (DEA) agents before being loaded onto a US-bound plane. The face-on image of Maduro was even shared by several news sites to illustrate articles about his capture, and was also retweeted by a Republican lawmaker on X. At first glance, these images seem pretty realistic. They show the Venezuelan leader being escorted by DEA agents from a few different angles. Maduro is wearing the same black suit, and the agents are wearing identical uniforms in all the images. However, these photos are fake and were created by Google's AI image generator, Nano Banana Pro, which is able to create realistic images. These images are stamped with a watermark, which proves that they were AI-generated. This is a precaution put in place by Google so that artificially-generated content can be identified. This watermark is invisible to the naked eye, but it is visible to SynthID, a verification tool integrated into Google Lens. If you upload these images into Google Lens, it then reads, "Created with Google's AI" in the "About this image" section. The photo also features a defect that gives away the fact that it was AI-generated. There is an anomaly in Maduro's jacket: a giant, blurred mark on the right leaves a gaping hole in his jacket.
AI sometimes struggles to reproduce specific details. Finally, @ultravfx, the name that appears on the watermark that is visible on the image, is an artist who creates AI-generated images. Another image of the capture posted online on January 3 has so far garnered more than 4 million views on X. The image appears to show the Venezuelan president in an embarrassing situation: in custody and en route to the United States in his pyjamas. This image also looks realistic: there are no flagrant anomalies, but its poor resolution means that it is hard to discern the details. However, there are a few clues that this image, too, was AI-generated. First of all, the soldier sitting to the right of the Venezuelan president isn't wearing the regulation camouflage print used by the US military. It actually seems to be a reproduction of the "Universal Camouflage Pattern" (UCP), a pattern that hasn't been used by the US Army since 2019. The US Army actually currently uses a pattern called the "Operational Camouflage Pattern". The OCP is made up of green and brown patches, unlike the UCP, which features grey and black patches. Maduro is wearing white pyjamas in the image shared online. US President Donald Trump posted an image on January 3 on the social media network Truth Social that features the Venezuelan president on board the USS Iwo Jima en route to the United States, wearing not white pyjamas but what appears to be a grey tracksuit. It is important to note that we can't guarantee the authenticity of this image shared by Trump, as underlined in an article published in the New York Times. Another image, posted on January 4 on X, where it garnered more than 800,000 views, shows Maduro apparently incarcerated in a US prison. You can see the Venezuelan leader in chains, wearing the classic orange prison jumpsuit. SynthID, Google's AI verification tool, shows that this image was created by Nano Banana, just like the images said to show Maduro on the tarmac. 
Images published by the media of Maduro's arrival in New York, or during his first appearance in court on January 5, show the president wearing black or brown, not an orange prisoner's uniform. This article has been translated from the original in French by Brenna Daldorph.

[8]

Altered and misleading images proliferate on social media amid Maduro's capture

AI-generated images, old videos and altered photos proliferated on social media in the hours following former Venezuelan President Nicolás Maduro's capture. Several of these images quickly went viral, fueling false information online. CBS News analyzed circulating images by comparing dubious images to verified content and using publicly available tools such as reverse image search. In some cases, CBS News ran images through AI detection tools, which can be inconsistent or inaccurate but can still help flag possibly manipulated content. Checking the source of the content, as well as the date, location, and other news sources, are all ways to suss out whether an image is accurate, according to experts. After President Trump announced Maduro's capture in a social media post early Saturday morning, questions brewed about the logistics of the mission, where Maduro would be flown and the future of Venezuela. Meanwhile, images of Maduro that were likely manipulated or generated with AI tools circulated on social media, garnering millions of views and thousands of likes across platforms. One photo purporting to show Maduro after his capture was shared widely, including by the mayor of Coral Gables, Florida, Vince Lago, and in a joint Instagram post by two popular conservative content accounts with over 6 million combined followers. Using Google's SynthID tool, the CBS News Confirmed team found the photo was likely edited or generated using Google AI. CBS News also found a video generated from the photo, showing military personnel escorting Maduro from an aircraft. It was posted around 6:30 a.m. -- 12 hours before CBS News reported that a person in shackles was seen disembarking the plane carrying Maduro and confirmed his eventual arrival Saturday evening at Metropolitan Detention Center, a federal facility in Brooklyn. Another unverified photo that made the rounds on social media depicts Maduro in an aircraft with U.S. soldiers.
While two different AI detection tools gave inconsistent results as to its authenticity, CBS was not able to confirm its legitimacy. On Saturday, Mr. Trump posted an image captioned "Nicolas Maduro on board the USS Iwo Jima" after the South American leader's capture. Later that evening, the White House Rapid Response account shared a video that appeared to show Maduro being escorted down a hallway by federal agents. Old videos and images from past events recirculated, purporting to show reactions to Maduro's capture and strikes in Caracas. One video showing people tearing down a billboard image of Maduro dates as far back as July 2024. Another video purporting to show a strike in Venezuela had circulated on social media as far back as June 2025. Another image showing a man with a sack over his head while sitting in the back of a car circulated widely, sparking online speculation as to whether the photo showed Maduro's capture. Many users flagged that the photo was probably not of Maduro, but as of this afternoon the post had 30,000 likes and over a thousand reposts. A Daily Mail article from 2023 reported that the photo shows Saddam Hussein after his capture, sitting with a Delta Force member, but CBS has not independently confirmed this. CBS News reached out to X and Meta regarding the companies' policies on AI-generated images, but has not received a response. X's rules page says it may label posts containing synthetic and manipulated media and Meta says it prohibits AI that contributes to misinformation or disinformation.

[9]

The ouster of Nicolás Maduro in Venezuela shows the pervasiveness of deepfakes

In moments of political chaos, deepfakes and AI-generated content can thrive. Case in point: the online reaction to the US government's shocking operation in Venezuela over the weekend, which included multiple airstrikes and a clandestine mission that ended with the capture of the country's president, Nicolás Maduro, and his wife. They were soon charged with narcoterrorism, along with other crimes, and they're currently being held at a federal prison in New York. Right now, the facts of the extraordinary operation are still coming to light, and the future of Venezuela is incredibly unclear. President Donald Trump says the U.S. government plans to "run" the country. Secretary of State Marco Rubio has indicated that, no, America isn't going to do that, and that the now-sworn-in former vice president, Delcy Rodriguez, will lead instead. Others are still calling for opposition leaders María Corina Machado and Edmundo González to take charge. It's in moments like this that deepfakes, disinformation campaigns, and even AI-generated memes can pick up traction. When the truth, or the future, isn't yet obvious, generative artificial intelligence allows people to render content that answers the as-yet-unanswered questions, filling in the blanks with what they might want to be true. We've already seen AI videos about what's going on in Venezuela. Some are meme-y depictions of Maduro handcuffed on a military plane, but some could be confused for actual footage. While a large number of Venezuelans did come out to celebrate Maduro's capture, videos displaying AI-generated crowds have also popped up, including one that apparently tricked X CEO Elon Musk.

[10]

Truth or Fake - AI photos fuel fake news about Nicolas Maduro's capture

After President Donald Trump announced Nicolas Maduro's capture in a social media post, AI-generated images claiming to show the incident flooded social media. These fake images were even used by some news sites and reposted by the official White House X account. Vedika Bahl talks us through what she's seen online, and how misleading they may have been in Truth or Fake.

[11]

How AI Hijacked the Venezuela Story

There's a familiar story about AI disinformation that goes something like this: With the arrival of technology that can easily generate plausible images and videos of people and events, the public, unable to reliably tell real from fake media, is suddenly at far greater risk of being misled and disinformed. In the late 2010s, when face-swapping tools started to go mainstream, this was a common prediction about "deepfakes," alongside a proliferation of nonconsensual nude images and highly targeted fraud. "Imagine a fake Senator Elizabeth Warren, virtually indistinguishable from the real thing, getting into a fistfight in a doctored video," wrote the New York Times in 2019. "Or a fake President Trump doing the same. The technology capable of that trickery is edging closer to reality." Creating deepfakes with modern AI tools is now so trivial that the term feels like an anachronistic overdescription. Many of the obvious fears of generate-anything tech have already come true. Regular people -- including children -- are being stripped and re-dressed in AI-generated images and videos, a problem that has trickled from celebrities and public figures to the general public courtesy of LLM-based "nudification" tools as well as, recently, X's in-house chatbot, Grok. Targeted and tailored fraud and identity theft are indeed skyrocketing, with scammers now able to mimic the voices and even faces of trusted parties quickly and at low cost. The story of AI disinformation, though -- an understandable if revealing fixation of the mainstream media beyond the LLM boom -- turned out to be a little bit fuzzier. It's everywhere, of course: We no longer need to "imagine" the NYT's doctored-video scenario in part because posting such videos is now part of the official White House communication strategy. Nearly every major news event now sees a flurry of realistic, generated videos redepicting it in altered terms. 
But the reality of news-adjacent deepfakes is more complicated than the old hypothesis that they'd be deployed by shadowy actors to mislead the vulnerable masses and undermine democracy, and in some ways more depressing. As we begin 2026 with the American kidnapping of Venezuelan president Nicolás Maduro, the future of disinformation looks more like this post that was widely shared and reposted by Elon Musk: Here we have fake clips of people in Venezuela celebrating Maduro's capture, depicting something plausible: individuals relieved or ecstatic about a corrupt and authoritarian leader being removed. (In reality, domestic public celebration has been muted due to uncertainty about the future and fear of the administration still in power.) Narrowly, it's a realization of the deepfake-disinformation thesis, an example of realistic media created with the intent and potential to mislead people who might believe it's real. The way it actually moved through the world tells a weirder story, though. Shadowy actors did create fake videos to advance a particular narrative in a chaotic moment, reaching millions of people. But the spread of these videos didn't hinge, as the default AI-disinformation hypothesis suggested, on misunderstanding and an inability of curious but well-meaning people to discern the truth. Instead, they were passed around by a powerful person with a large following who is perhaps the best equipped in the entire world to know that they weren't real -- a politically connected guy who runs an AI company connected to a social network, with a fact-checking system, where he's promoting posts inviting people to use his image generator to change Maduro's captivity outfits -- for an audience of people who do not care whether they are. 
In other words, they provided texture for a determined narrative, stock-image illustrations for wishful ideological statements, and something to find and share for audiences who were expecting or hoping to see something like this anyway. For a clear example of an earnestly misled poster, we're left with Grok, which took a break from automating CSAM to respond to skeptical users by saying the video "shows real footage of celebrations in Venezuela following Maduro's capture" before, after being told to "take a proper look," suggesting that the video "appears to be manipulated," albeit in a completely incorrect way. (On X, the Musk-sanctioned spread of videos like this is less like a disinformation contagion running rampant and destroying a deliberative system than a news network simply deciding to show a fabricated video and refusing to admit that it's fake.) In contrast to the focused deception of a romance scam, or the targeted harassment or blackmail of an AI nudification, content like this isn't valuable for its ability to persuasively deceive people but rather as ambient ideological slop for backfilling a desired political reality. Rather than triggering or inciting anything, it instead slotted in right next to other more familiar and less technologically novel forms of social-media propaganda -- like simply saying a video portrays something it doesn't and getting a million views for every thousand on the eventual debunkings: I don't mean to brush off the dangers of AI video generation for the public's basic understanding of the world or suggest that scenarios in which people are misled in politically significant ways by fake videos are impossible or even unlikely. 
But today, after the proliferation of omnipurpose deepfakelike technology came faster than almost anyone expected, the early result seems less like a fresh rupture with reality than an escalation of past trends and a fresh demonstration of the power of social networks, where ideologically isolated and incurious groups are now slightly more able to fortify their aesthetic and political experiences with consonant and infinite background noise under the supervision of people for whom documentary truth is at the very most an afterthought. In the short term, the ability to generate documentary media by prompt hasn't primarily resulted in chaos or "trickery" but rather previewed a perverse form of order in which persuasion is unimportant, disinformation is primarily directed at ideological allies, and everyone gets to see -- or read -- exactly what they want.

[12]

AI deepfakes of Nicolás Maduro flood social media -- depict...

Within hours of President Trump's announcement of the dramatic weekend capture of Nicolás Maduro, social media was flooded with AI-generated deepfakes showing the captured Venezuelan dictator in handcuffs and even sitting alongside Sean "Diddy" Combs, who was previously locked up in the same jail where Maduro now is in real life. The onslaught of AI-altered images mixed with real facts and verified photos created a confusing mess in the midst of a stunning breaking news moment, with some public figures amplifying the deepfake content. "This was the first time I'd personally seen so many AI-generated images of what was supposed to be a real moment in time," Roberta Braga, executive director of Digital Democracy Institute of the Americas, a think tank, told the New York Times. One of the fakes shows Combs doing a weird dance in a jail cell as Maduro appears to hold back tears on a nearby jail cot. The disgraced rapper, whose sex-trafficking case mentioned lurid use of baby oil, is then seen spraying the deposed dictator with bottles of fluid as "You've Got a Friend in Me" plays. The rest of the bizarre clip is a montage of the two acting like a couple while Maduro sports a blonde wig. Maduro and his wife, Cilia Flores, have been locked up in the Metropolitan Detention Center in Brooklyn, where Combs spent the duration of his own trial before being convicted last year for transporting people across state lines for sexual encounters. Curtis "50 Cent" Jackson poked fun at the coincidence while taking his latest shot at his longtime nemesis Combs. Jackson made an Instagram post of a cartoon image of Combs doing Maduro's hair while exclaiming, "They got my oil, too!" -- a cheeky reference both to Combs' sleazy habits and US plans to take over Venezuela's oil industry. 
NewsGuard, which tracks the accuracy of online information, found five AI-generated and out-of-context images and two misleading videos falsely linked to Maduro's capture that garnered more than 14.1 million views total on X in less than two days. The content also appeared on Meta platforms like Instagram and Facebook, though it racked up fewer clicks on those sites, NewsGuard said. "While many of these visuals do not drastically distort the facts on the ground, the use of AI and dramatic, out-of-context video represents another tactic in the misinformers' arsenal - and one that is harder for fact checkers to expose because the visuals often approximate reality," NewsGuard stated in a report released Monday. In one AI deepfake, Maduro is seen dressed in white pajamas and sitting on a US military cargo plane surrounded by soldiers in uniform, according to the report. Some other deepfakes showed Maduro in a grey sweat suit - the same outfit he was wearing in a verified photo from the White House - while being escorted out of a plane by US soldiers. By the time Trump posted a verified image of Maduro, handcuffed and dressed in a grey sweat suit aboard the USS Iwo Jima warship, it was too late. Many social media users were skeptical about whether the image posted by Trump was real. "It's funny, but very common: Doubt the truth and believe the lie," Jeanfreddy Gutiérrez, who runs a fact-checking operation in Caracas, told the Times. AI-detection platforms and tools like reverse-image search can help identify false content, but it can be difficult to trace deepfakes back to their original sources. The Times found that many mainstream AI tools, including Google's Gemini, OpenAI models and Grok, were quickly used to create false images of Maduro free of charge. Smaller platforms like Z-Image, Reve and Seedream were used, too. 
"We make it easy to determine if content is made with Google AI by embedding an imperceptible SynthID watermark in all media generated by our AI tools," a Google spokesperson told The Post in a statement. "You can simply upload an image to the Gemini app and instantly confirm whether it was generated using Google AI." A spokesperson for OpenAI said the company allows public figures to submit a form to prohibit AI-generated content using their likeness. The spokesperson added that OpenAI prefers to focus on preventing sexual deepfakes or content that incites violence. Representatives for xAI, which runs Grok, did not immediately respond to The Post's request for comment. Mayor Vince Lago of Coral Gables, Fla., posted a fake image of Maduro seemingly being escorted by Drug Enforcement Administration agents on Instagram. It had more than 1,500 likes and was still online as of Tuesday morning. Lago's office did not immediately answer a request for comment. Along with the Maduro deepfakes, social media was flooded with fake and misleading videos of Venezuelans appearing to rejoice in the streets of Caracas after the US raid. An account called Wall Street Apes, which has 1.2 million followers on X, posted a video purportedly showing Venezuelans crying in the streets of Caracas and thanking Trump for removing Maduro as leader. The video - which reached over 5.7 million views - was AI-generated and apparently originated from TikTok, according to fact-checkers at BBC and Agence France-Presse, as well as an X "Community Note." "The people of Venezuela are ripping down posters of Maduro and taking to the streets to celebrate his arrest by the Trump administration," MAGA influencer Laura Loomer wrote in a post on X, along with a video of a poster of Maduro being taken down. But the footage was originally filmed in 2024, according to Wired. Loomer has since deleted the post. 
Conspiracy theorist Alex Jones claimed "millions" of Venezuelans were flooding the streets of Caracas to celebrate the capture of Maduro, reposting an aerial video showing thousands of people cheering in Venezuela's capital. The video, which remained up as of Tuesday morning, racked up more than 2.2 million views. Attached to the post was a "Community Note" - the crowdsourcing form of fact-checking used on X, Elon Musk's platform - that said the video clips were actually from 2024. Jones also posted one of the AI-generated images flagged by NewsGuard, and replied to a post about the raid on Venezuela with a video appearing to show missiles raining down on a city. A "Community Note" labeled the video "misinformation," claiming it was "an old video from Israel-Iran tensions in 2024." "I did not say it was from Venezuela," Jones wrote in another post. "People jumped to their own conclusions and projected their own error on to me."

Following the U.S. military operation that led to Nicolás Maduro's capture, AI-generated images and videos depicting the Venezuelan leader in custody went viral across social media platforms. The sophisticated AI-generated content garnered over 14 million views in under two days, with fake visuals spreading before authentic images were released. Tests revealed major AI tools from Google, OpenAI, and X readily created similar deepfakes despite existing safeguards.

AI Misinformation Floods Social Media After Maduro Capture

Within hours of the U.S. military operation that resulted in the capture of Venezuelan leader Nicolás Maduro on January 3, 2026, social media platforms became flooded with AI-generated images and videos depicting events that never occurred [1][2]. The fake visuals ranged from photographs showing Maduro in handcuffs being escorted by Drug Enforcement Administration agents to viral videos of crowds celebrating his removal in the streets of Caracas. According to NewsGuard, a company tracking online information reliability, five fabricated and out-of-context images plus two misrepresented videos collectively garnered more than 14.1 million views on X alone in under two days [2][4].

The widespread dissemination of AI-generated images began before President Donald Trump released an authentic photograph of the captured leader [1]. One of the earliest fake images was posted by an "AI video art enthusiast" account and was verified by Google's SynthID watermark detector as being generated with Google AI [3]. Roberta Braga, executive director of the Digital Democracy Institute of the Americas, noted this was "the first time I'd personally seen so many A.I.-generated images of what was supposed to be a real moment in time" [2].

Platform Safeguards Fail to Stop AI Imagery Depicting Public Figures

Despite assurances from technology companies about safeguards designed to prevent abuse, tests conducted by The New York Times revealed that most major AI image generators readily created fake images of Maduro [2]. Tools from Google, OpenAI, and X produced the requested images within seconds, often free of charge. Google's Gemini, OpenAI's ChatGPT (when accessed through third-party websites), and X's Grok all generated lifelike depictions of the Venezuelan leader's arrest.

A Google spokesman stated the company does not categorically bar images of prominent people and that fake images generated in tests did not violate its rules, despite Google having policies prohibiting misinformation related to governmental or democratic processes [2]. OpenAI acknowledged focusing safeguards on preventing harms like sexual deepfakes or violence rather than political misinformation. Meta's AI chatbot and Flux.ai created less realistic depictions, though the effectiveness of these guardrails remains inconsistent [2].

Sophisticated AI-Generated Content Amplifies Political Misinformation

One particularly viral piece of AI-driven misinformation was a video showing supposed Venezuelan citizens crying tears of joy and thanking the United States for removing Maduro [1][5]. Posted by the account "Wall Street Apes," which has over 1 million followers, the video accumulated over 5.6 million views and was reshared by at least 38,000 accounts, including briefly by Elon Musk [1]. Fact-checkers at BBC and AFP traced the earliest version to TikTok account @curiousmindusa, which regularly posts AI-generated content [1].

The video was eventually flagged by community notes on X, a crowdsourced fact-checking feature, but critics argue this system reacts too slowly to prevent falsehoods from spreading [1]. The celebratory scenes contrast sharply with actual sentiment in Venezuela, where a November survey found 86 percent of Venezuelans preferred Maduro remain head of state [5]. Sofia Rubinson from NewsGuard explained that the AI-generated visuals "often approximate reality," which makes them harder for fact-checkers to expose even though they do not drastically distort facts on the ground [4].

Growing Challenges for Detection and Regulation

Jeanfreddy Gutiérrez, who runs a fact-checking operation in Caracas, observed the fake images spreading through Latin American news outlets on Saturday before being quietly replaced with Trump's official image [2]. "They spread so fast -- I saw them in almost every Facebook and WhatsApp contact I have," he said. Politicians including Vince Lago, mayor of Coral Gables, Florida, shared AI-generated images to their Instagram accounts, where they received thousands of likes and remain posted [4].

Social media platforms face mounting pressure to improve AI detection and labeling. Last year, India proposed legislation requiring such labeling, while Spain approved fines up to 35 million euros for unlabeled AI materials [1]. Major platforms including TikTok, Meta, and X have rolled out detection tools, though results remain mixed. Adam Mosseri, who oversees Instagram and Threads, acknowledged the challenge: "All the major platforms will do good work identifying AI content, but they will get worse at it over time as AI gets better at imitating reality" [1].

Google's response centers on SynthID, a hidden watermark embedded in images created by Gemini that allows users to verify whether content was generated by its AI tool [2]. However, this requires users to actively check images, and images from lesser-known generative AI tools with few guardrails carry no such watermark. The incident demonstrates how platforms like Sora and Midjourney have made it easier than ever to generate hyper-realistic footage and pass it off as real during fast-breaking events [1]. Analysts warn these deepfakes represent a new tactic in information warfare, with geopolitical analyst Ben Norton noting that "the US empire's war propaganda is getting much more sophisticated" [5](https://futurism.com/artificial-intelligence/venezuela-maduro-ai-misinformation).

Summarized by Navi
