AMD Unveils Stable Diffusion 3.0 Medium AI Model for Local Image Generation on Ryzen AI Laptops

7 Sources

[1]

AMD unveils industry-first Stable Diffusion 3.0 Medium AI model generator tailored for XDNA 2 NPUs -- designed to run locally on Ryzen AI laptops

AMD, in collaboration with Stability AI, has unveiled the industry's first Stable Diffusion 3.0 Medium AI model tailored for the company's XDNA 2 NPUs, which process data in the BF16 format. The text-to-image model, optimized for BF16 precision, is designed to run locally on laptops built around AMD's Ryzen AI processors and is available now via Amuse 3.1. It is suited to generating customizable stock-quality visuals that can be branded or tailored for design and marketing applications.

The model interprets written prompts and produces 1024×1024 images, then uses a built-in NPU pipeline to upscale them to 2048×2048, resulting in 4MP outputs that AMD claims are suitable for print and professional use. It requires a PC equipped with an AMD Ryzen AI 300-series or Ryzen AI MAX+ processor, an XDNA 2 NPU capable of at least 50 TOPS, and a minimum of 24GB of system RAM, as the model alone uses 9GB during generation.

The key advantage, of course, is that the model runs entirely on-device, enabling fast, offline image generation without Internet access or cloud services. It is aimed at content creators and designers who need customizable images, and it supports advanced prompting features for fine control over image composition. AMD even provides examples; a prompt for a toucan reads: "Close up, award-winning wildlife photography, vibrant and exotic face of a toucan against a black background, focusing on the colorful beak, vibrant color, best shot, 8k, photography, high res."

To use the model, users must install the latest AMD Adrenalin Edition drivers and the Amuse 3.1 Beta software from Tensorstack. Once installed, users should open Amuse, switch to EZ Mode, move the slider to HQ, and enable the 'XDNA 2 Stable Diffusion Offload' option.

Usage of the model is subject to the Stability AI Community License. The model is free for individuals and small businesses with less than $1 million in annual revenue, though the licensing terms may change in the future. Keep in mind that Amuse is still in beta, so its stability and performance may vary.
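The hardware requirements above reduce to three threshold checks, plus the 9GB the model itself occupies while generating. A minimal Python sketch, assuming you already know your laptop's specs: the `Laptop` type, its fields, and the helper functions are hypothetical illustrations, not part of Amuse or any AMD tool, and nothing here detects hardware.

```python
from dataclasses import dataclass
from typing import List


# Hypothetical spec record; field names are illustrative, not from any AMD API.
@dataclass
class Laptop:
    cpu_family: str   # e.g. "Ryzen AI 300" or "Ryzen AI MAX+"
    npu_tops: float   # XDNA 2 NPU throughput, in TOPS
    ram_gb: int       # installed system RAM, in GB


# Thresholds as stated in the article.
SUPPORTED_FAMILIES = {"Ryzen AI 300", "Ryzen AI MAX+"}
MIN_NPU_TOPS = 50
MIN_RAM_GB = 24
MODEL_RAM_GB = 9  # memory the model uses during generation


def missing_requirements(laptop: Laptop) -> List[str]:
    """Return a readable list of unmet requirements (empty if all are met)."""
    problems = []
    if laptop.cpu_family not in SUPPORTED_FAMILIES:
        problems.append(f"unsupported processor family: {laptop.cpu_family}")
    if laptop.npu_tops < MIN_NPU_TOPS:
        problems.append(f"NPU rated at {laptop.npu_tops} TOPS; {MIN_NPU_TOPS}+ required")
    if laptop.ram_gb < MIN_RAM_GB:
        problems.append(f"{laptop.ram_gb}GB RAM installed; {MIN_RAM_GB}GB+ required")
    return problems


def ram_left_during_generation(laptop: Laptop) -> int:
    """RAM (GB) left for the OS and other apps while the model is generating."""
    return laptop.ram_gb - MODEL_RAM_GB


if __name__ == "__main__":
    laptop = Laptop("Ryzen AI 300", npu_tops=50, ram_gb=24)
    print(missing_requirements(laptop))        # []
    print(ram_left_during_generation(laptop))  # 15
```

On a minimum-spec 24GB machine, that leaves roughly 15GB for everything else while an image is being generated.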

[2]

AMD gets GPU-quality AI art running on its NPUs

AMD and Stability AI on Monday said that the two companies have ported Stable Diffusion 3.0 Medium to the Amuse AI art generator, enabling improved image generation, including rendered text, on the NPU alone. With most of the jaw-dropping advancements in AI art coming from the cloud (such as Google Veo 3), a new AI art model running locally on AMD's PC processors might not seem newsworthy. But it's a step forward for AMD, which has lagged behind some of Intel's AI art advancements.

The new model will be part of Amuse 3.1, available for download from Tensorstack. You'll want to download the Stable Diffusion Medium model via the Amuse app, which we tried out in April when Amuse began adding text-to-video. The new model uses what's known as BF16, which allows a wider range of values but less precision. It also looks way, way better than some of the results I was able to generate in April.

Stable Diffusion Medium was already available via Amuse, but it previously required a GPU, limiting its mass appeal. Now you can choose either. As more and more laptops add NPUs, this will open up local image generation to a wider audience.

AMD provided a press preview last week, and I tried it out on an MSI Prestige A16 AI+ powered by an AMD Ryzen AI 9 365 processor with a 50 TOPS NPU. Each image (at 20 steps) took about 90 seconds to generate. That's still far behind the cloud, but image generation on a local PC is unlimited. Amuse still makes mistakes, and if you look closely you can tell the images weren't created by human hands. But it's steadily improving. Where will we be in a year or two?
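The single timing above (about 90 seconds per 20-step image on a Ryzen AI 9 365) is enough for some back-of-the-envelope planning. A minimal Python sketch, assuming every image takes the same time and a batch simply runs images back to back; the function names are hypothetical, and real timings will vary with hardware, step count, and settings.

```python
SECONDS_PER_HOUR = 3600


def seconds_per_step(seconds_per_image: float, steps: int) -> float:
    """Average time spent on each denoising step of one image."""
    return seconds_per_image / steps


def images_per_hour(seconds_per_image: float) -> float:
    """How many images fit in an hour if they run back to back."""
    return SECONDS_PER_HOUR / seconds_per_image


def batch_minutes(seconds_per_image: float, batch_size: int) -> float:
    """Wall-clock minutes for a batch, assuming constant per-image time."""
    return seconds_per_image * batch_size / 60


measured = 90.0  # seconds per 20-step image, from the hands-on test above
print(seconds_per_step(measured, 20))  # 4.5
print(images_per_hour(measured))       # 40.0
print(batch_minutes(measured, 30))     # 45.0
```

Under those assumptions, a 30-image batch ties up the NPU for about three quarters of an hour.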

[3]

AMD Amuse 3.1 Release: BF16 Precision SD 3.0 Medium on Ryzen AI

The new BF16 Stable Diffusion 3.0 Medium model is now available in Amuse 3.1. This release brings bfloat16 precision to the widely used Stable Diffusion 3 framework, letting you run complex image generation tasks directly on an AMD Ryzen AI laptop without sacrificing too much memory or accuracy. By adopting bfloat16 (BF16), the model keeps nearly the same numerical fidelity you'd expect from FP16 but slashes memory demands by more than half compared to traditional medium-sized diffusion networks.

To get started, you'll need an AMD Ryzen AI 300 series or Ryzen AI MAX+ machine. These laptops come with an XDNA 2 neural processing unit (NPU) capable of 50 TOPS or more. With at least 24 GB of system RAM, you can load the BF16 model and run it in HQ mode, using about 9 GB of memory in practice. The steps are simple: update your Adrenalin graphics driver, install the Amuse 3.1 Beta, open the EZ Mode controls, move the quality slider to HQ, and switch on "XDNA 2 Stable Diffusion Offload". After that, you're ready to generate.

The inference process is split into two phases on the NPU. First, the model synthesizes a base image at 1024 × 1024 pixels (about 1 MP); next, it uses a dedicated upscaling kernel to boost that output to 2048 × 2048 (about 4 MP). This two-stage pipeline takes advantage of the XDNA 2 architecture, delivering print-quality assets without an external GPU or cloud service. That means you can brainstorm designs on a plane, in a café with spotty Wi-Fi, or at a client site without worrying about subscriptions or hidden fees.

Even though it runs locally, you still need to think carefully about your prompts. BF16 precision preserves much of the nuance in the model's weights, so prompt structure and wording have an outsize impact on image quality.
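The bfloat16-versus-FP16 trade-off is easiest to see by rounding the same numbers into both formats. This is a minimal Python sketch using only the standard `struct` module: format `'e'` is IEEE half precision (FP16), and bfloat16 is emulated by keeping the top 16 bits of a float32 with round-to-nearest-even. It follows this article's reading of BF16 as bfloat16 (AMD's own materials also use the term "block FP16", which is a different format). It handles only finite inputs within float32 range and says nothing about how AMD's NPU kernels actually round.

```python
import struct
from typing import Optional


def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value and widen it back to a Python float.

    bfloat16 is the upper half of an IEEE-754 float32: 1 sign bit,
    8 exponent bits (same range as float32) and 7 mantissa bits.
    Assumes x is finite and fits in float32 (struct raises OverflowError otherwise).
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even, on the 16 low bits that get dropped.
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits &= 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_float16(x: float) -> Optional[float]:
    """Round x to IEEE-754 half precision (FP16); None if it overflows FP16's range."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return None


for value in (1 / 3, 100_000.0):
    print(value, to_float16(value), to_bfloat16(value))
# 0.3333333333333333 0.333251953125 0.333984375  -> FP16 is closer (more mantissa bits)
# 100000.0 None 99840.0                          -> only bfloat16 can represent it
```

FP16 keeps 1/3 more accurately, while 100,000 is beyond FP16's largest finite value (65,504) but lands close to the target in bfloat16. This is the "wider range, less precision" trade-off in concrete terms.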
A good rule of thumb is to describe the general scene first (say, "a forest at dawn"), then layer on compositional details like "mist rising between pine trees, light rays streaming," before adding color or style notes. Once you have a tidy prompt, run a batch of 25 to 30 seeds; typically, a few will hit the right visual structure. If you need to exclude something, such as an unwanted background element, use negative prompts sparingly, as overuse can degrade overall fidelity.

One of the biggest benefits of this setup is that it makes high-quality image creation accessible without hefty hardware or cloud accounts. A Ryzen AI-powered laptop can generate assets that rival stock-photo subscriptions, and you can tailor each image to your brand on the spot. For instance, a designer working on beverage marketing could rapidly produce dozens of soda label concepts, each with different color schemes and branding elements, then fine-tune the best ones for client review. Because you're running BF16 locally, iteration is fast and doesn't eat into your GPU's VRAM budget or monthly cloud credits.

Power efficiency is another plus. The XDNA 2 NPU was built to handle AI workloads with minimal energy draw, whether your laptop is plugged in or running on battery. That efficiency, combined with the modest memory footprint of BF16, means you can keep creating for hours without throttling or overheating your chassis. In practice, you'll notice consistent generation times and stable throughput, even with longer prompts or higher step counts.

Under the hood, the BF16 SD 3.0 Medium model is a direct evolution of earlier block FP16 efforts, such as the SDXL Turbo model unveiled at Computex 2024. Stability AI and AMD carried over lessons learned from those earlier releases, chiefly how to balance precision and quantization for the best user experience.
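The general-to-specific prompt rule and the seed-batch advice can be sketched as two small helpers for preparing a prompt and a set of seeds. This is hypothetical Python: Amuse is driven through its own interface and nothing here calls it. The comma-joined prompt layout, the 32-bit seed range, and the example style note are assumptions, not anything Amuse documents.

```python
import random
from typing import List, Optional, Sequence


def layered_prompt(scene: str, composition: Sequence[str], style: Sequence[str]) -> str:
    """Join prompt layers general-to-specific: scene, then composition, then color/style."""
    parts = [scene, *composition, *style]
    # Drop empty fragments so stray commas don't creep in.
    return ", ".join(p.strip() for p in parts if p.strip())


def seed_batch(count: int, rng_seed: Optional[int] = None) -> List[int]:
    """Pick `count` distinct seeds (assumed 32-bit) to try with the same prompt."""
    rng = random.Random(rng_seed)
    return rng.sample(range(2**32), count)


prompt = layered_prompt(
    "a forest at dawn",
    ["mist rising between pine trees", "light rays streaming"],
    ["soft golden tones"],  # illustrative style note
)
print(prompt)
# a forest at dawn, mist rising between pine trees, light rays streaming, soft golden tones
print(seed_batch(30, rng_seed=0)[:3])  # first few of 30 distinct seeds
```

Keeping the prompt fixed while only the seed changes makes it easier to tell which results come from wording and which from chance.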
By adopting block-based tensor layouts and memory-efficient kernels, they've managed to reduce peak RAM usage from around 24 GB to under 10 GB, all while preserving the same level of detail and color fidelity.

Here's what you need to remember when using BF16 SD 3.0 Medium:

* Hardware: Ryzen AI 300 series or Ryzen AI MAX+ with an XDNA 2 NPU (50+ TOPS) and at least 24 GB of RAM.
* Software: Latest Adrenalin drivers and Amuse 3.1 Beta, with EZ Mode set to HQ and the offload toggle enabled.
* Pipeline: 1024 × 1024 (about 1 MP) generation → 2048 × 2048 (about 4 MP) upscaling on the NPU.
* Memory: ~9 GB peak for the Medium model.
* Prompting: General-to-specific structure, batches of seeds, cautious use of negative prompts.

For bespoke stock images, running BF16 SD 3.0 Medium on an AMD AI laptop delivers an excellent balance of quality, speed, and portability. The on-device two-stage pipeline and memory-reduction techniques mean you can push creative AI generation anywhere, anytime, without a subscription or cloud connection. Enjoy the freedom to iterate rapidly, keep your assets local, and produce print-ready images without compromise.
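Much of the memory saving from lower-precision formats comes down to how many bits each stored value needs. A minimal Python sketch of weight storage only: the 2-billion parameter count is an illustrative assumption. The block format (8-bit mantissas sharing one 8-bit exponent per 8 values) is a generic example rather than AMD's documented layout. Activations, text encoders, and the upscaler are ignored, so the output is not meant to reproduce the ~9 GB figure.

```python
def block_fp_bits_per_value(mantissa_bits: int, shared_exponent_bits: int, block_size: int) -> float:
    """Average storage per value when a block of values shares one exponent."""
    return mantissa_bits + shared_exponent_bits / block_size


def weight_footprint_gb(num_params: float, bits_per_value: float) -> float:
    """Decimal gigabytes needed to store the weights alone."""
    return num_params * bits_per_value / 8 / 1e9


PARAMS = 2e9  # hypothetical parameter count, for illustration only

formats = {
    "FP32": 32,
    "FP16 / bfloat16": 16,
    "block FP (8-bit mantissa, 8-bit exponent per 8 values)": block_fp_bits_per_value(8, 8, 8),
}
for name, bits in formats.items():
    print(f"{name}: {weight_footprint_gb(PARAMS, bits):.2f} GB")
# FP32: 8.00 GB
# FP16 / bfloat16: 4.00 GB
# block FP (8-bit mantissa, 8-bit exponent per 8 values): 2.25 GB
```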

[4]

AMD opens the door to local Stable Diffusion AI image generation on Ryzen AI NPUs

TL;DR: AMD and Stability AI have unveiled the world's first block FP16 Stable Diffusion 3.0 Medium model, optimized for Ryzen AI 300 Series XDNA 2 NPUs, which delivers enhanced AI image generation quality and 4MP resolution without the need for a dedicated GPU. Amuse 3.1 software enables free, efficient, high-quality image creation on compatible Ryzen AI laptops.

At Computex 2024, AMD and Stability AI announced a partnership that introduced the world's first block FP16 Stable Diffusion model for image generation, offering improved quality and accuracy. This week, AMD is announcing another world's first: the block FP16 SD 3.0 Medium model optimized for AMD Ryzen AI 300 Series XDNA 2 NPUs. Promising a "significant increase in image quality" for AI image generation using Stable Diffusion, AMD says those with compatible Ryzen AI-powered laptops can download Amuse 3.1 by Tensorstack and test it out today.

As long as you've got 24GB of memory, Amuse 3.1's HQ mode will deliver 16-bit quality without the need for a dedicated GPU. The results are impressive, with the ability to create Stable Diffusion 3.0 Medium images at 4MP resolution (2048x2048) with XDNA Super Resolution. Best of all, it's free and doesn't require a subscription. Although it hasn't provided exact numbers, AMD notes that the tool can be used for the "speedy creation" of images, with rapid iteration to get the desired result.

"Having an AI PC that can keep up with ever-changing and ever-more-demanding Generative AI workloads is an excellent partner," AMD writes. "The AMD XDNA 2 NPU excels at AI operations while preserving power efficiency - so you can do what you do best - whether you are plugged in or 30,000 feet in the air."

This is impressive because AMD's Ryzen AI 300 Series processors are designed for Copilot+ PCs and lightweight, efficient AI workloads. With the ability to generate images at BF16 precision using one of the most powerful and versatile models, Ryzen AI laptops and portable devices have unlocked a new level of image generation for creators, designers, and AI enthusiasts.

[5]

AMD's New AI Image Model Can Run Locally on Ryzen AI Laptops

It is a text-to-image model that does not require Internet connectivity.

AMD has released a new Stable Diffusion 3 Medium artificial intelligence (AI) model optimised for XDNA 2 neural processing units (NPUs). The chipmaker claimed that it is the world's first Stable Diffusion 3 Medium model to process data in the BF16 format. The model will be supported by newer Ryzen AI laptops with at least 24GB RAM, after users download Tensorstack's Amuse 3.1 beta software. Stable Diffusion 3 Medium is an on-device image generation model that does not require Internet connectivity.

In a press release, the Santa Clara-based tech giant detailed the new image generation model. The AI model is based on Stable Diffusion 3 Medium and is optimised for the company's XDNA 2 NPUs, found in Ryzen AI laptops released in 2024 and later. The company claims the model can be used to generate stock-quality images from text prompts. The model generates 1024×1024 resolution images, which are then upscaled to 2048×2048 print-ready resolution using the NPU's capabilities.

The new AI model is part of AMD and Tensorstack's new Amuse 3.1 desktop app, which is free to download and install. Since the image generation model runs entirely locally, it works even when the device is not connected to the Internet. Data processing occurs on-device, powered by the XDNA 2 NPUs.

AMD said it has worked on the memory requirements of the AI model, which now requires 24GB RAM instead of the 32GB RAM that was necessary for the Stable Diffusion XL Turbo model. Additionally, the new image model consumes only 9GB of RAM while active. The company achieved this by using the memory-efficient block floating point 16 (block FP16, or BF16) format.

The tech giant highlighted that the Stable Diffusion 3 Medium AI model adheres strictly to prompt structure and order. AMD said users trying out the model should first describe the type of image, then the structural components, and finally details and other context. Negative prompts can be used to remove elements from the image, and the placement of full stops can change how the model interprets context.
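Block floating point, the idea behind the "block FP16" name, stores one shared exponent per group of values and a small signed integer mantissa for each value. This is how such formats aim to combine floating-point range with INT8-style integer math. The Python sketch below is a generic illustration, not AMD's format: the 8-bit mantissa width and the function names are assumptions, and the block is simply whatever list you pass in.

```python
import math
from typing import List, Sequence, Tuple


def quantize_block(values: Sequence[float], mantissa_bits: int = 8) -> Tuple[int, List[int]]:
    """Encode a block as (shared exponent, signed integer mantissas)."""
    max_mag = max(abs(v) for v in values)
    if max_mag == 0:
        return 0, [0] * len(values)
    # frexp gives max_mag = f * 2**exp with 0.5 <= f < 1, so every |v| < 2**exp.
    _, shared_exp = math.frexp(max_mag)
    qmax = 2 ** (mantissa_bits - 1) - 1  # 127 for 8-bit mantissas
    scale = 2.0 ** (shared_exp - (mantissa_bits - 1))
    # Each value becomes a small integer; the clamp guards the rounding edge case.
    mantissas = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return shared_exp, mantissas


def dequantize_block(shared_exp: int, mantissas: Sequence[int], mantissa_bits: int = 8) -> List[float]:
    """Reconstruct approximate values from the shared exponent and mantissas."""
    scale = 2.0 ** (shared_exp - (mantissa_bits - 1))
    return [m * scale for m in mantissas]


block = [0.5, -0.25, 0.1, 0.001]
exp, mants = quantize_block(block)
print(exp, mants)                    # 0 [64, -32, 13, 0]
print(dequantize_block(exp, mants))  # [0.5, -0.25, 0.1015625, 0.0]
```

The last value shows the cost of sharing an exponent. A number far smaller than the block's largest entry rounds to zero because it is stored at that entry's scale.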

[6]

AMD Intros "SD 3 Medium", The World's First BF16 NPU Model Designed For XDNA 2 AI NPUs, Offer Reduced Memory Footprint & Faster Text-To-Image Gen

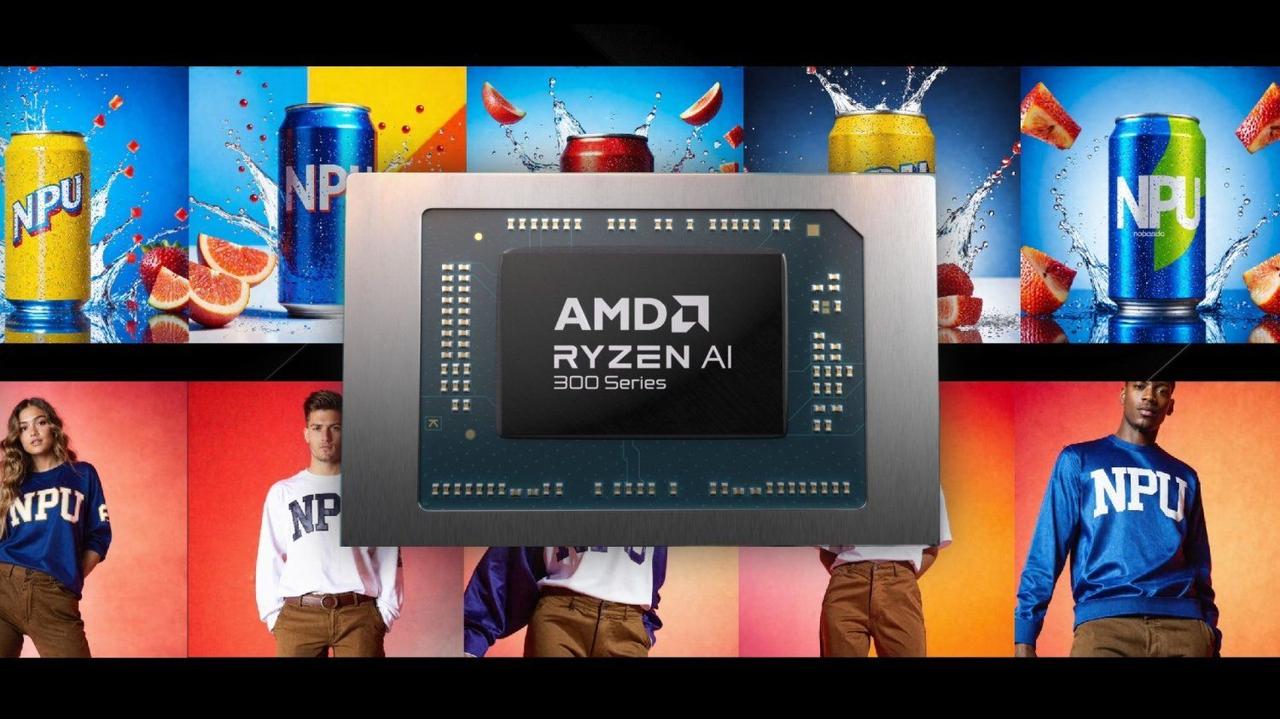

AMD has launched the world's first BF16 NPU model for XDNA 2 NPUs, which it is calling SD 3 Medium, and which makes for faster and uncompromised text-to-image generation.

AMD's SD 3 Medium Is A BF16 Model That Is Optimized For XDNA 2 "AI" NPUs, Now Available In Amuse 3.1 To Try Out

Press Release: At Computex 2024, AMD introduced the world's first block FP16 stable diffusion model: the SDXL Turbo. The breakthrough model combined the accuracy of FP16 with the performance of INT8 and was a collaboration between AMD and Stability AI. Now, AMD is proud to announce another collaboration with Stability AI: the world's first block FP16 SD 3.0 Medium model - which delivers a significant increase in image quality and is optimized for AMD Ryzen AI XDNA 2 NPUs. Best of all: you can try it out today by downloading Amuse 3.1 by Tensorstack and running it in HQ mode with a compatible Ryzen AI-powered laptop.

Try SD 3.0 Medium (with BF16 precision) right now on an AMD Ryzen AI 300 series or Ryzen AI MAX+ laptop with at least 24GB of memory by following three simple steps:

SD3 Medium (NPU) Hardware Requirements: AMD Ryzen AI 300 series or Ryzen AI MAX+ laptop equipped with a 50 TOPS or higher AMD XDNA 2 NPU and at least 24GB of system RAM.

* Download and Install the latest Adrenalin Driver
* Download and Install Amuse 3.1 Beta
* In EZ Mode, move the slider to HQ and toggle "XDNA 2 Stable Diffusion Offload"

The first Block FP16 SD 3 Medium model designed for AMD XDNA 2 NPUs also comes with a reduction in the memory requirements for Stable Diffusion 3 Medium and will run on 24GB laptops while consuming only 9GB of memory. The use of this data type allows high-quality, local AI image generation to be available on laptops with under 32GB of memory in a high-precision format and without excessive quantization.

Furthermore, a 2-stage pipeline powered by the AMD XDNA 2 NPU enhances the output 2 MP (1024x1024) image of the SD 3 Medium model to 4MP resolution (2048x2048), providing print-quality images tailored to your specifications on the go.

Stock-quality images with no subscription fees* or Wi-Fi required

An AMD Ryzen AI-powered PC can create high-quality images that can be used as stock images for your graphics design needs. What's more? You can configure and custom-tailor the stock image to your brand, allowing for rapid design iteration and the speedy creation of marketing assets. For example, given below are some hypothetical examples of an "NPU" brand:

Having an AI PC that can keep up with ever-changing and ever-more-demanding Generative AI workloads is an excellent partner, and the AMD XDNA 2 NPU excels at AI operations while preserving power efficiency - so you can do what you do best, whether you are plugged in or 30,000 feet in the air.
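The resolution figures in the pipeline description can be checked with simple arithmetic. Counting pixels, 1024x1024 is about 1.05 million, so the upscaling stage quadruples the pixel count to roughly 4.2 MP; the "2 MP" label for the base image does not match that arithmetic. A minimal Python sketch follows. The 300 DPI print density is a common rule of thumb and an assumption here, not a figure from AMD.

```python
def megapixels(width: int, height: int) -> float:
    """Pixel count in millions."""
    return width * height / 1_000_000


def print_size_inches(pixels: int, dpi: int = 300) -> float:
    """Printed edge length at a given density (300 DPI assumed by default)."""
    return pixels / dpi


base = megapixels(1024, 1024)      # first stage: SD 3 Medium output
upscaled = megapixels(2048, 2048)  # second stage: NPU upscaler output
print(base, upscaled, upscaled / base)    # 1.048576 4.194304 4.0
print(round(print_size_inches(2048), 2))  # 6.83
```

At that assumed density, a 2048-pixel edge prints at just under 7 inches.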

[7]

AMD Intros "SD 3 Medium", The World's First BF16 NPU Model Designed For XDNA 2 AI NPUs, Offer Reduced Memory Footprint & Faster Text-To-Image Gen

AMD has launched the world's first BF16 NPU model for XDNA 2 NPUs, which it is calling SD 3 Medium, and which makes for faster and uncompromised text-to-image generation.

AMD's SD 3 Medium Is A BF16 Model That Is Optimized For XDNA 2 "AI" NPUs, Now Available In Amuse 3.1 To Try Out

Press Release: At Computex 2024, AMD introduced the world's first block FP16 stable diffusion model: the SDXL Turbo. The breakthrough model combined the accuracy of FP16 with the performance of INT8 and was a collaboration between AMD and Stability AI. Now, AMD is proud to announce another collaboration with Stability AI: the world's first block FP16 SD 3.0 Medium model - which delivers a significant increase in image quality and is optimized for AMD Ryzen AI XDNA 2 NPUs. Best of all: you can try it out today by downloading Amuse 3.1 by Tensorstack and running it in HQ mode with a compatible Ryzen AI-powered laptop.

Try SD 3.0 Medium (with BF16 precision) right now on an AMD Ryzen AI 300 series or Ryzen AI MAX+ laptop with at least 24GB of memory by following three simple steps:

SD3 Medium (NPU) Hardware Requirements: AMD Ryzen AI 300 series or Ryzen AI MAX+ laptop equipped with a 50 TOPS or higher AMD XDNA 2 NPU and at least 24GB of system RAM.

* Download and Install the latest Adrenalin Driver
* Download and Install Amuse 3.1 Beta
* In EZ Mode, move the slider to HQ and toggle "XDNA 2 Stable Diffusion Offload"

The first Block FP16 SD 3 Medium model designed for AMD XDNA 2 NPUs also comes with a reduction in the memory requirements for Stable Diffusion 3 Medium and will run on 24GB laptops while consuming only 9GB of memory. The use of this data type allows high-quality, local AI image generation to be available on laptops with under 32GB of memory in a high-precision format and without excessive quantization.

Furthermore, a 2-stage pipeline powered by the AMD XDNA 2 NPU enhances the output 2 MP (1024x1024) image of the SD 3 Medium model to 4MP resolution (2048x2048), providing print-quality images tailored to your specifications on the go.

Stock-quality images with no subscription fees* or Wi-Fi required

An AMD Ryzen AI-powered PC can create high-quality images that can be used as stock images for your graphics design needs. What's more? You can configure and custom-tailor the stock image to your brand, allowing for rapid design iteration and the speedy creation of marketing assets. For example, given below are some hypothetical examples of an "NPU" brand:

Having an AI PC that can keep up with ever-changing and ever-more-demanding Generative AI workloads is an excellent partner, and the AMD XDNA 2 NPU excels at AI operations while preserving power efficiency - so you can do what you do best, whether you are plugged in or 30,000 feet in the air.

AMD, in collaboration with Stability AI, has released a new Stable Diffusion 3.0 Medium AI model optimized for XDNA 2 NPUs, enabling high-quality image generation on Ryzen AI laptops without requiring internet connectivity or dedicated GPUs.

AMD Introduces Groundbreaking AI Image Generation Model

AMD, in collaboration with Stability AI, has unveiled the industry's first Stable Diffusion 3.0 Medium AI model tailored for XDNA 2 Neural Processing Units (NPUs) [1]. The model is designed to run locally on AMD Ryzen AI laptops, marking a significant advancement in on-device AI image generation.

Key Features and Requirements

The new AI model processes data in the BF16 format and is optimized for local execution on machines equipped with XDNA 2 NPUs. It requires a PC with an AMD Ryzen AI 300-series or Ryzen AI MAX+ processor, an XDNA 2 NPU capable of at least 50 TOPS, and a minimum of 24GB of system RAM [2]. Users can access the model through Tensorstack's Amuse 3.1 Beta software, which is available as a free download [3]. The model is subject to the Stability AI Community License, making it free for individuals and small businesses with less than $1 million in annual revenue.

Image Generation Capabilities

The Stable Diffusion 3.0 Medium model interprets written prompts to produce 1024x1024 images, which are then upscaled to 2048x2048 resolution using a built-in NPU pipeline [4]. This results in 4MP outputs suitable for print and professional use. The model supports advanced prompting features for fine control over image composition, allowing users to create customizable stock-quality visuals for design and marketing applications.

Performance and Efficiency

In a hands-on test on an MSI Prestige A16 AI+ with a Ryzen AI 9 365 processor, each image (20 steps) took about 90 seconds to generate [2]. While this is slower than cloud-based solutions, it offers unlimited local generation without an Internet connection. The XDNA 2 NPU is designed to handle AI workloads efficiently, enabling consistent performance even during extended use on battery power [3].

Technological Advancements

The BF16 Stable Diffusion 3.0 Medium model represents an evolution of previous block FP16 efforts, such as the SDXL Turbo model introduced at Computex 2024. By adopting block-based tensor layouts and memory-efficient kernels, AMD and Stability AI have reduced peak RAM usage from around 24GB to under 10GB while preserving image quality and color fidelity [3].

Implications for Users and Industry

This development opens up new possibilities for content creators, designers, and AI enthusiasts. It enables high-quality image generation without dedicated GPUs or cloud services, making AI-powered creativity more accessible and portable [5]. Users can now generate and iterate on AI-created images quickly, even offline.

As AMD continues to push the boundaries of on-device AI, this release marks a notable step toward making advanced AI technology more widely available to consumers and professionals alike.

References

Summarized by Navi

[1]

[5]
