Bandcamp bans AI music as first major platform to take a stand against synthetic content

7 Sources

[1]

Bandcamp bans purely AI-generated music from its platform

On Tuesday, Bandcamp announced on Reddit that it will no longer permit AI-generated music on its platform. "Music and audio that is generated wholly or in substantial part by AI is not permitted on Bandcamp," the company wrote in a post to the r/bandcamp subreddit. The new policy also prohibits "any use of AI tools to impersonate other artists or styles." The policy draws a line that some in the music community have debated: Where does tool use end and full automation begin? AI models are not artists in themselves, since they lack personhood and creative intent. But people do use AI tools to make music, and the spectrum runs from using AI for minor assistance (cleaning up audio, suggesting chord progressions) to typing a prompt and letting a model generate an entire track. Bandcamp's policy targets the latter end of that spectrum while leaving room for human artists who incorporate AI tools into a larger creative process. The announcement emphasized the platform's desire to protect its community of human artists. "The fact that Bandcamp is home to such a vibrant community of real people making incredible music is something we want to protect and maintain," the company wrote. Bandcamp asked users to flag suspected AI-generated content through its reporting tools, and the company said it reserves "the right to remove any music on suspicion of being AI generated." As generative AI tools make it trivial to produce unlimited quantities of music, art, and text, this author once argued that platforms may need to actively preserve spaces for human expression rather than let them drown in machine-generated output. Bandcamp's decision seems to move in that direction, but it also leaves room for platforms like Suno, which primarily host AI-generated music. 

Two platforms, two approaches, one flood

The policy represents a contrast with Spotify, which explicitly permits AI-generated music, although its users have expressed frustration with an influx of AI-generated tracks created by tools like Suno and Udio. Some of those AI music issues predate the latest tools, however. As far back as 2023, Spotify removed tens of thousands of AI-generated songs from distributor Boomy after discovering evidence of artificial streaming fraud, but the flood just kept coming. Last September, Spotify revealed that it had removed 75 million spam tracks over the previous year. It's a figure that rivals the scale of Spotify's actual catalog of 100 million tracks. Country music has also been particularly affected by AI music synthesis on Spotify, with AI-generated tracks sometimes topping genre charts above fully human tracks in December 2025. In a newsroom post from last year, Spotify wrote that it envisioned "a future where artists and producers are in control of how or if they incorporate AI into their creative processes" and that the company wants to leave "those creative decisions to artists themselves." Spotify focuses its enforcement on impersonation, spam, and deception rather than banning AI-generated content outright. In some ways, the stark contrast between Bandcamp and Spotify reflects their different business models. Bandcamp operates as a direct marketplace where artists sell music and merchandise to fans, taking a cut of each sale. Spotify pays artists per stream, creating incentives for bad actors to flood the platform with cheap AI content and game the algorithm. Bandcamp acknowledged the policy may evolve. "We will be sure to communicate any updates to the policy as the rapidly changing generative AI space develops," the company wrote. The announcement also noted that the company had received feedback about this issue previously, writing, "Given the response around this to our previous posts, we hope this news is welcomed."

Enforcement remains an open question. Detecting AI-generated music is not straightforward, since today's products of AI synthesis realistically imitate voices and even acoustic instruments. Bandcamp did not specify what tools or methods it would use to identify AI content, only that its team would review flagged submissions. In a world where seemingly unlimited quantities of music can now be created at the push of a button, that's no minor task.

[2]

Bandcamp takes a stand against AI music, banning it from the platform | TechCrunch

The music distribution platform Bandcamp announced in a Reddit post on Tuesday that it's banning AI-generated music and audio. "We want musicians to keep making music, and for fans to have confidence that the music they find on Bandcamp was created by humans," the company said. Bandcamp's new guidelines state that music and audio generated "wholly or in substantial part by AI" is not permitted, and that it will not allow the use of AI tools to impersonate other artists or styles. So, if Drake had released "Taylor Made Freestyle" on Bandcamp, he would've had a problem (and maybe it would've been for his own good). As AI music generators like Suno become more sophisticated, it's become harder to avoid synthetic music -- songs created with AI tools have topped charts on Spotify and Billboard. AI music now sounds real enough that it can be difficult to decipher how it was made. In one high-profile example, Telisha Jones, a 31-year-old in Mississippi, used Suno to turn her (supposedly organic) poetry into the viral R&B song "How Was I Supposed To Know." Her AI "persona," Xania Monet, received multiple bids for record deals before signing with Hallwood Media in a deal reportedly worth $3 million. The legality of AI-generated music is up in the air. Suno is currently facing lawsuits from three major labels -- Sony Music Entertainment, Universal Music Group, and Warner Music Group -- alleging that the company trained its AI on copyrighted material from the labels. This hasn't deterred Silicon Valley, though. Suno raised a $250 million Series C round in November, which valued the company at $2.4 billion. While the raise was led by Menlo Ventures, Suno saw participation from Hallwood Media, the company backing Xania Monet. The legal outlook doesn't look good for artists. In a recent lawsuit, a judge ruled that Anthropic could use copyrighted books that it downloaded illegally to train its AI. 
What was illegal, the judge said, was that Anthropic pirated the books that it fed into its AI models. The company got a $1.5 billion slap on the wrist, which isn't very significant for a company that's valued at $183 billion. Unlike Spotify or Apple Music, Bandcamp doesn't pay artists per stream. Instead, Bandcamp allows artists to sell their music digitally alongside physical products like merch and CDs. Bandcamp only makes money from its cut of artists' sales -- but even if it presents itself as an artist-first distributor, a tech company is still a tech company, and the bottom line matters. Looking at Bandcamp's move optimistically, perhaps the company is confirming what artists hope to be true: no one is actually spending money to buy AI-generated music, at least not on Bandcamp.

[3]

Bandcamp becomes the first major music platform to ban AI content

Bandcamp has built its entire brand around serving artists. And, with the artist furor over AI growing every day, it's no surprise that the company has decided to take a stand against it. In a Reddit post, Bandcamp announced that AI-generated content would not be permitted on the platform and would be subject to removal. The guidelines leave little room for interpretation. In the post, the company says that "Music and audio that is generated wholly or in substantial part by AI is not permitted on Bandcamp." It also prohibits using AI tools to impersonate other artists or styles, similar to a rule implemented by Spotify in September. The support team also encourages people to use the site's reporting tool to flag any music that "appears to be made entirely or with heavy reliance on generative AI." In the thread, Bandcamp support also pointed people to the company's Acceptable Use and Moderation policy page, which includes an explicit ban on scraping content or using audio uploaded to Bandcamp for training AI. It says that users agree, "Not to train any machine learning or AI model using content on our site or otherwise ingest any data or content from Bandcamp's platform into a machine learning or AI model." Other platforms, such as Spotify and Deezer, have taken a much more conservative approach to combating AI-generated content, focusing primarily on imitation or relying on labels rather than bans. iHeartRadio pledged never to play AI-generated music or use AI DJs in late November. But Bandcamp is the first major music platform to outright ban AI-generated content.

[4]

Bandcamp prohibits music made 'wholly or in substantial part' by AI

Bandcamp has addressed the AI slop problem vexing musicians and their fans of late. The company is banning any music or audio on its platform that is "wholly or in substantial part" made by generative AI, according to its blog. It also clarified that the use of AI tools to impersonate other artists or styles is "strictly prohibited" by policies already in place. Any music suspected to be AI generated may be removed by the Bandcamp team and the company is giving users reporting tools to flag such content. "We believe that the human connection found through music is a vital part of our society and culture, and that music is much more than a product to be consumed," the company wrote. The announcement makes Bandcamp one of the first music platforms to offer a clear policy on the use of AI tech. AI-generated music (aka "slop") has increasingly been invading music-streaming platforms, with Deezer for one recently saying that 50,000 AI-generated songs are uploaded to the app daily, or around 34 percent of its music. Platforms have been relatively slow to act against this trend. Spotify has taken some baby steps on the matter, having recently promised to develop an industry standard for AI disclosure in music credits and debut an impersonation policy. For its part, Deezer said it remains the only streaming platform to sign a global statement on AI artist training signed by numerous actors and songwriters. Bandcamp has a solid track record for artist support, having recently unveiled Bandcamp Fridays, a day that it gives 100 percent of streaming revenue to artists. That led to over $120 million going directly to musicians, and the company plans to continue that policy in 2026.

[5]

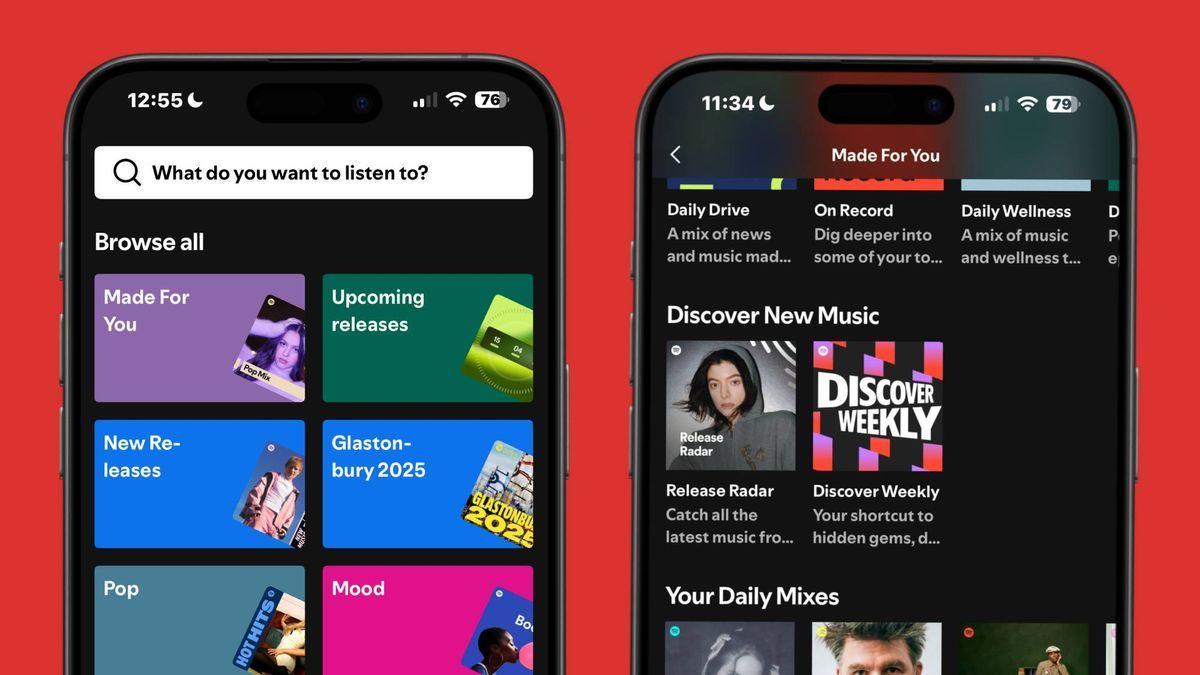

Spotify denies it's pushing unwanted AI slop onto subscribers, claiming it 'does not promote or penalize tracks created using AI tools'

While it claims it's implementing ways to tackle this, platforms such as Bandcamp have completely banned AI audio

Spotify always seems to be running into controversy in some way, shape, or form, whether that's not paying artists what they deserve or taking its time to release a much-needed upgrade (looking at you, Spotify Lossless). As for its latest dispute, music fans are angrily drawing attention to AI-generated music flooding the Spotify app, claiming that the platform is forcing AI music into features such as Discover Weekly and Release Radar. I covered this recently, shedding light on a Reddit post that attracted a hefty response from users who have experienced this AI slop. The crux of the post was to call on Spotify to implement a filter system that flags whether a song has been generated using AI tools, which other music streaming services, such as Deezer, have already jumped on. So what does Spotify have to say about this heat? While the platform has yet to explain why AI music is still an occurrence in its ecosystem, we reached out for a comment, and they got back to us with the following statement: "AI is a fast-moving shift for the entire music industry, and it's not always possible to draw a simple line between 'AI' and 'non-AI' music. Spotify is focused on actions that guard against harmful AI use cases, including removing spam and deceptive content, strengthening enforcement against impersonation and unauthorized voice cloning, and supporting industry-standard AI disclosures in music credits. Spotify does not create or own any music, and does not promote or penalize tracks created using AI tools." Back in September, Spotify issued a post listing its plans to strengthen its AI protections, which included improved enforcement of impersonation violations, a new spam filtering system, and AI disclosures for music with industry-standard credits. It's been four months since, and the AI overflow is still there for some users.
With that in mind, why is it so hard to eradicate AI-generated tracks from music platforms? Well, it's not. While Spotify claims that it's doubling down on its attack against AI music, it doesn't seem to be working. Rival platforms are already tackling this, and Bandcamp has just banned AI music altogether, announcing this move via a Reddit post. Although AI music has been banned, the platform isn't blind to the fact that it can still slip in. That said, Bandcamp is encouraging users to use its reporting tools to flag any AI-generated music or audio they may run into. It's a very direct way of tackling unwanted AI rubbish, and one that could help Spotify gain back user trust.

[6]

Music Fans Explode With Joy as Bandcamp Bans AI Music

Bandcamp, a major music distribution platform favored by indie artists, announced Tuesday that it would be "putting human creativity first" by outright banning AI-generated songs -- and music aficionados are rejoicing. According to the company, music and audio generated "wholly or in substantial part by AI" will no longer be permitted on the platform. Furthermore, using AI tools "to impersonate other artists or styles" is also "strictly prohibited," representing a comprehensive crackdown on the tech. With many a "hell yeah" and a simple "good," fans have reacted to the measure with an outpouring of joy. For years, musicians and listeners alike have been frustrated with the proliferation of AI-generated music on streaming platforms like Spotify, which have largely turned a blind eye to the phenomenon. Most of the AI songs are clearly spam intended to game the algorithm and rack up views and dollars. Some pretend to be actual human bands, like the AI-generated rock group the Velvet Sundown, deceiving listeners. Still bolder spammers have even tried to impersonate famous musicians, such as when prog rock band King Gizzard & The Lizard Wizard pulled their music from Spotify in protest of the platform's stance on AI, only to be replaced by AI clones. Spotify's policies allow AI-generated music, and it's only taken action against AI tunes in cases when scammers have used it to fraudulently farm listens for money. In September, Spotify announced new measures to combat spam and the impersonation of real artists, but frequent incidents like King Gizzard's illustrate that the company is struggling to moderate against the influx of AI chum. Now, Bandcamp's drawing a clear line in the sand is being received as a breath of fresh air. "We believe that the human connection found through music is a vital part of our society and culture, and that music is much more than a product to be consumed," the company said in the announcement. 
"Today we are fortifying our mission by articulating our policy on generative AI, so that musicians can keep making music, and so that fans have confidence that the music they find on Bandcamp was created by humans." The company encouraged listeners to report AI-generated content, noting that it will "reserve the right to remove any music on suspicion of being AI-generated." As welcome as Bandcamp's stance is, it remains to be seen how enforcing this policy will play out in practice. It seems inevitable that there could be AI witch hunts that result in innocent casualties, and Spotify's struggles show that even a multibillion dollar behemoth can be overwhelmed by the tide of AI slop.

[7]

Music marketplace Bandcamp bypasses AI disclosures and obtuse policies to announce a wholesale ban on AI slop

One of the internet's largest music marketplaces has banned content "that is generated wholly or in substantial part by AI". Bandcamp announced the new policy today, which also prohibits the use of AI tools designed to "impersonate other artists or styles". Here are the guidelines, straight from Bandcamp: The announcement comes after a surge in awful AI-generated music acts last year. The most infamous was probably The Velvet Sundown: at its peak the non-existent psych-rock four-piece was attracting 500,000 monthly listeners, but now generally only attracts around 120,000. Mind you, that is still a heck of a lot, especially for music so bland it sounds unmistakably like AI (though the same could have been said for Wolfmother in 2005, had generative AI existed back then). Bandcamp isn't a subscription streaming service like Spotify or Apple Music, but it's worth comparing policies. Spotify is lenient when it comes to AI-generated music, though it has announced it's working on AI disclosures for music -- probably something like Steam's mandatory AI disclosures, though with "industry-standard credits". Apple Music has been less transparent, though like Spotify and Bandcamp, it unambiguously forbids AI-generated impersonations. It's a welcome and bold move on Bandcamp's part, though I'm sure it won't be long before the "wholly or in substantial part" clause is tested. Spotify is often criticized for its ruthlessly low royalty payouts and algorithmic sleights of hand, but it's also terrible because it's flooded with irredeemable AI filler, not to mention the production companies it reportedly uses to fill its playlists with muzak, all the better to dampen its already-miserly royalty payouts to actual artists. (It's a bad company and a bad service and I think you should stop using it). Bandcamp managed to escape a brief stint under Epic Games in 2023, and it's probably for the best, because its policies might not be well-received by Epic boss Tim Sweeney. 
Last week Sweeney dismissed the need for AI disclosures, and in November, defended the use of AI-generated voices in Arc Raiders. As Tyler Wilde put it last week, it's not weird to want AI disclosures on games. As for AI use in general, Mollie Taylor puts it bluntly: It's more important than ever to call out developers for egregious AI usage if we want videogames to remain interesting.

Bandcamp announced it will no longer permit AI-generated music on its platform, becoming the first major music distributor to implement an outright ban. The new policy prohibits music created wholly or substantially by AI and any use of AI tools to impersonate other artists. This contrasts sharply with Spotify, which allows AI-generated tracks despite user frustration over an influx of synthetic content.

Bandcamp Takes Historic Stand Against AI Music

Bandcamp announced on Tuesday through a Reddit post that it will no longer permit AI-generated music on its platform, marking a decisive shift in how music platforms handle synthetic content [1]. The company stated that "music and audio that is generated wholly or in substantial part by AI is not permitted on Bandcamp," becoming the first major music platform to implement such a comprehensive ban [3]. The policy also prohibits any use of AI tools to impersonate other artists or styles, drawing a clear line in an industry grappling with the flood of synthetic content [2].

The announcement emphasized protecting the platform's community of human artists. "The fact that Bandcamp is home to such a vibrant community of real people making incredible music is something we want to protect and maintain," the company wrote [1]. Bandcamp encouraged users to flag suspected AI-generated content through its reporting tools and reserved the right to remove any music on suspicion of being AI-generated [4].

Contrasting Approaches Between Music Platforms

The policy represents a stark contrast with Spotify, which explicitly permits AI-generated music despite growing user frustration. Spotify revealed last September that it had removed 75 million spam tracks over the preceding year, a figure rivaling the scale of its actual catalog of 100 million tracks [1]. When reached for comment, Spotify stated it "does not promote or penalize tracks created using AI tools," focusing enforcement on spam, impersonation, and unauthorized voice cloning rather than outright bans [5].

Deezer reports that approximately 50,000 AI-generated songs are uploaded to its app daily, representing around 34 percent of its music [4]. While iHeartRadio pledged in late November never to play AI-generated music or use AI DJs, Bandcamp is the first major platform to implement an outright ban on AI-generated content [3].

AI's Role in Music Creation and Legal Battles

The distinction between tool assistance and full automation remains contentious. AI models lack personhood and creative intent, but the spectrum of use runs from minor assistance like cleaning up audio to typing a prompt and generating an entire track [1]. Bandcamp's policy targets the latter while leaving room for human artists who incorporate AI tools into a larger creative process.

Generative AI music tools like Suno face significant legal challenges. Suno is currently defending lawsuits from Sony Music Entertainment, Universal Music Group, and Warner Music Group alleging it trained AI models on copyrighted material [2]. Despite these legal uncertainties, Suno raised a $250 million Series C round in November, valuing the company at $2.4 billion [2].

Business Models Drive Different Responses

The contrast between Bandcamp and Spotify reflects their different business models. Bandcamp operates as a direct marketplace where artists sell music and merchandise to fans, taking a cut of each sale [1]. This differs fundamentally from Spotify's per-stream payment model, which creates incentives for bad actors to flood the platform with cheap AI content and game the algorithm. Looking at this optimistically, Bandcamp's move may confirm what artists hope: no one is actually spending money to buy AI-generated music, at least not on Bandcamp [2].

Enforcement Challenges and Future Implications

Enforcement remains an open question. Detecting AI-generated music is not straightforward, since today's AI synthesis realistically imitates voices and acoustic instruments [1]. Bandcamp did not specify what tools or methods it would use to identify AI content, only that its team would review flagged submissions. The company acknowledged the policy may evolve, stating it will communicate updates as the rapidly changing generative AI space develops [1].

Bandcamp's Acceptable Use and Moderation policy also prohibits scraping content or using audio uploaded to the platform for training AI models [3]. This comprehensive approach addresses both the output and input sides of AI music generation, protecting user trust and preserving spaces for human expression. The platform's track record includes Bandcamp Fridays, days on which it waives its revenue share so that proceeds from sales go to artists, which has sent over $120 million directly to musicians [4].

Summarized by

Navi
