Breakthrough in Optical Computing: Researchers Achieve AI Tensor Operations at the Speed of Light

4 Sources

[1]

A single beam of light runs AI with supercomputer power

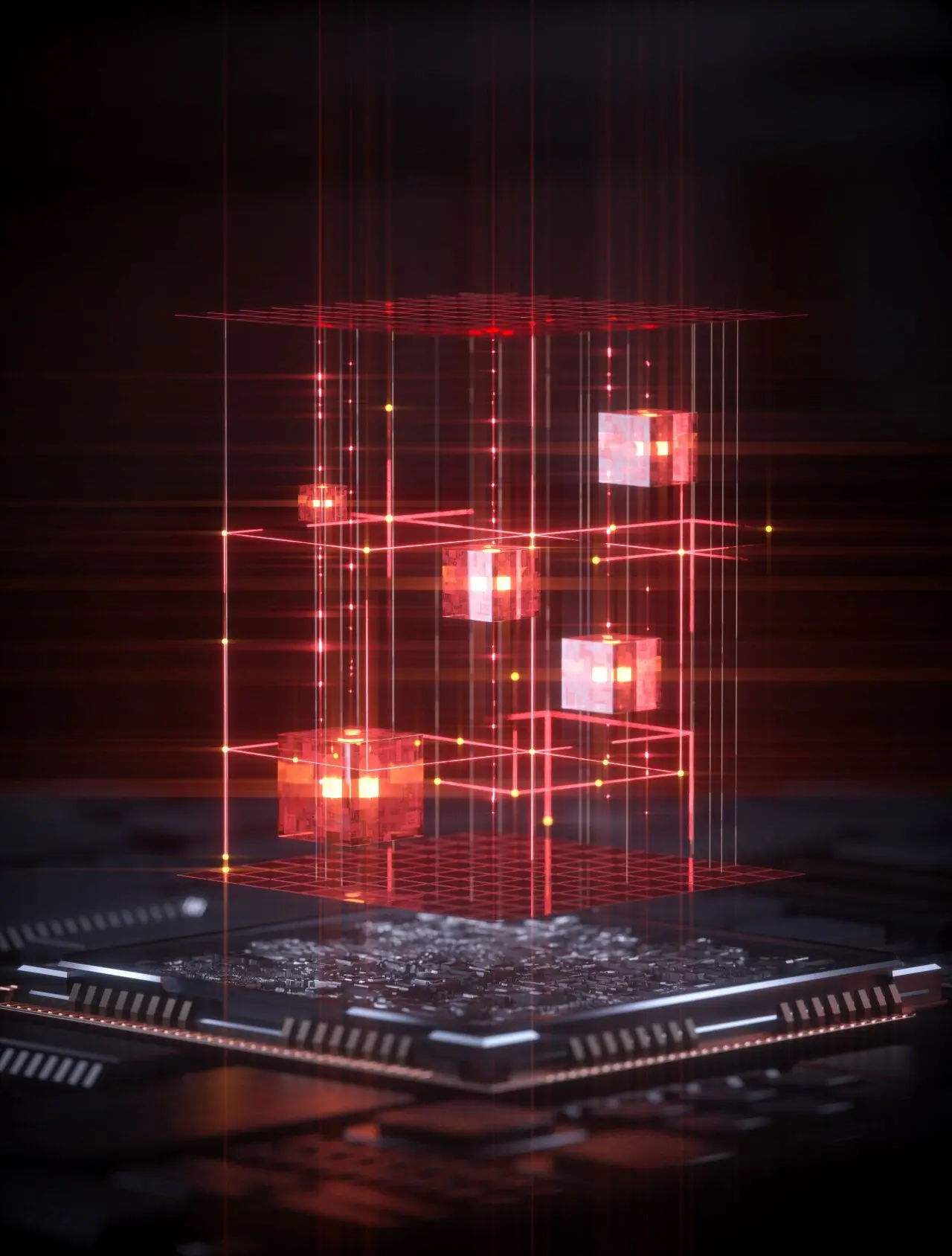

Tensor operations are a form of advanced mathematics that support many modern technologies, especially artificial intelligence. These operations go far beyond the simple calculations most people encounter. A helpful way to picture them is to imagine manipulating a Rubik's cube in several dimensions at once by rotating, slicing, or rearranging its layers. Humans and traditional computers must break these tasks into sequences, but light can perform all of them at the same time. Today, tensor operations are essential for AI systems involved in image processing, language understanding, and countless other tasks. As the amount of data continues to grow, conventional digital hardware such as GPUs faces increasing strain in speed, energy use, and scalability.

Researchers Demonstrate Single-Shot Tensor Computing With Light

To address these challenges, an international team led by Dr. Yufeng Zhang from the Photonics Group at Aalto University's Department of Electronics and Nanoengineering has developed a fundamentally new approach. Their method allows complex tensor calculations to be completed within a single movement of light through an optical system. The process, described as single-shot tensor computing, functions at the speed of light. "Our method performs the same kinds of operations that today's GPUs handle, like convolutions and attention layers, but does them all at the speed of light," says Dr. Zhang. "Instead of relying on electronic circuits, we use the physical properties of light to perform many computations simultaneously."

Encoding Information Into Light for High-Speed Computation

The team accomplished this by embedding digital information into the amplitude and phase of light waves, transforming numerical data into physical variations within the optical field. As these light waves interact, they automatically carry out mathematical procedures such as matrix and tensor multiplication, which form the basis of deep learning. By working with multiple wavelengths of light, the researchers expanded their technique to support even more complex, higher-order tensor operations.

"Imagine you're a customs officer who must inspect every parcel through multiple machines with different functions and then sort them into the right bins," Zhang says. "Normally, you'd process each parcel one by one. Our optical computing method merges all parcels and all machines together -- we create multiple 'optical hooks' that connect each input to its correct output. With just one operation, one pass of light, all inspections and sorting happen instantly and in parallel."

Passive Optical Processing With Wide Compatibility

One of the most striking benefits of this method is how little intervention it requires. The necessary operations occur on their own as the light travels, so the system does not need active control or electronic switching during computation. "This approach can be implemented on almost any optical platform," says Professor Zhipei Sun, leader of Aalto University's Photonics Group. "In the future, we plan to integrate this computational framework directly onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption."

Path Toward Future Light-Based AI Hardware

Zhang notes that the ultimate objective is to adapt the technique to existing hardware and platforms used by major technology companies. He estimates that the method could be incorporated into such systems within 3 to 5 years. "This will create a new generation of optical computing systems, significantly accelerating complex AI tasks across a myriad of fields," he concludes.

[2]

Optical method runs AI tensor operations at the speed of light

Tensor operations drive nearly every AI task today. GPUs handle them well, but the surge in data has exposed limits in speed, power efficiency and scalability. This pressure pushed an international team led by Dr. Yufeng Zhang of Aalto University to look beyond electronic circuits. The group has developed "single-shot tensor computing," a technique that uses the physical properties of light to process data. Light waves carry amplitude and phase. The team encoded digital information into these properties and allowed the waves to interact as they travel. That interaction performs the same mathematical operations as deep learning systems. "Our method performs the same kinds of operations that today's GPUs handle, like convolutions and attention layers, but does them all at the speed of light," says Dr. Zhang. He adds that the system avoids electronic switching because the optical operations unfold naturally during propagation. The researchers pushed this method further by adding multiple wavelengths of light. Each wavelength behaves like its own computational channel, which lets the system process higher-order tensor operations in parallel.

[3]

AI at the speed of light just became a possibility

Researchers at Aalto University have demonstrated single-shot tensor computing at the speed of light, a remarkable step towards next-generation artificial general intelligence hardware powered by optical computation rather than electronics. Tensor operations are the kind of arithmetic that form the backbone of nearly all modern technologies, especially artificial intelligence, yet they extend beyond the simple math we're familiar with. Imagine the mathematics behind rotating, slicing, or rearranging a Rubik's cube along multiple dimensions. While humans and classical computers must perform these operations step by step, light can do them all at once. Today, every task in AI, from image recognition to natural language processing, relies on tensor operations. However, the explosion of data has pushed conventional digital computing platforms, such as GPUs, to their limits in terms of speed, scalability and energy consumption.

How light enables instant tensor math

Motivated by this pressing problem, an international research collaboration led by Dr. Yufeng Zhang from the Photonics Group at Aalto University's Department of Electronics and Nanoengineering has unlocked a new approach that performs complex tensor computations using a single propagation of light. The result is single-shot tensor computing, achieved at the speed of light itself. The research was published in Nature Photonics. "Our method performs the same kinds of operations that today's GPUs handle, like convolutions and attention layers, but does them all at the speed of light," says Dr. Zhang. "Instead of relying on electronic circuits, we use the physical properties of light to perform many computations simultaneously."

To achieve this, the researchers encoded digital data into the amplitude and phase of light waves, effectively turning numbers into physical properties of the optical field. When these light fields interact and combine, they naturally carry out mathematical operations such as matrix and tensor multiplications, which form the core of deep learning algorithms. By introducing multiple wavelengths of light, the team extended this approach to handle even higher-order tensor operations.

Potential impact and future applications

"Imagine you're a customs officer who must inspect every parcel through multiple machines with different functions and then sort them into the right bins," Zhang explains. "Normally, you'd process each parcel one by one. Our optical computing method merges all parcels and all machines together -- we create multiple 'optical hooks' that connect each input to its correct output. With just one operation, one pass of light, all inspections and sorting happen instantly and in parallel."

Another key advantage of this method is its simplicity. The optical operations occur passively as the light propagates, so no active control or electronic switching is needed during computation. "This approach can be implemented on almost any optical platform," says Professor Zhipei Sun, leader of Aalto University's Photonics Group. "In the future, we plan to integrate this computational framework directly onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption."

Ultimately, the goal is to deploy the method on the existing hardware or platforms established by major companies, says Zhang, who conservatively estimates the approach will be integrated into such platforms within three to five years. "This will create a new generation of optical computing systems, significantly accelerating complex AI tasks across a myriad of fields," he concludes.

[4]

Light powered tensor computing could upend how AI hardware works

Aalto University researchers performed AI tensor operations with a single pass of light, encoding data into light waves for passive, simultaneous calculations integrated into photonic chips for faster, energy-efficient AI systems. The study was published in Nature Photonics on November 14th, 2025. Tensor operations, crucial for AI in image processing and language understanding, are advanced mathematical computations. Conventional digital hardware, including GPUs, faces speed, energy use, and scalability challenges with increasing data volumes. An international team, led by Dr. Yufeng Zhang from Aalto University's Photonics Group, developed an approach enabling complex tensor calculations in a single movement of light through an optical system. This process, termed single-shot tensor computing, operates at the speed of light. "Our method performs the same kinds of operations that today's GPUs handle, like convolutions and attention layers, but does them all at the speed of light," stated Dr. Zhang. "Instead of relying on electronic circuits, we use the physical properties of light to perform many computations simultaneously." The team embedded digital information into the amplitude and phase of light waves, converting numerical data into physical variations within the optical field. These light waves interact, automatically performing mathematical procedures like matrix and tensor multiplication, fundamental to deep learning. Utilizing multiple wavelengths of light expanded the technique to support higher-order tensor operations. "Imagine you're a customs officer who must inspect every parcel through multiple machines with different functions and then sort them into the right bins," Zhang explained. "Normally, you'd process each parcel one by one. Our optical computing method merges all parcels and all machines together -- we create multiple 'optical hooks' that connect each input to its correct output. 
With just one operation, one pass of light, all inspections and sorting happen instantly and in parallel." Operations occur as light travels, eliminating the need for active control or electronic switching during computation. "This approach can be implemented on almost any optical platform," said Professor Zhipei Sun, leader of Aalto University's Photonics Group. "In the future, we plan to integrate this computational framework directly onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption." Zhang indicated the goal is to adapt the technique to existing hardware and platforms used by major technology companies, estimating integration within 3 to 5 years. "This will create a new generation of optical computing systems, significantly accelerating complex AI tasks across a myriad of fields," he concluded.

Aalto University researchers have developed single-shot tensor computing using light waves, potentially revolutionizing AI hardware by performing complex calculations at light speed with significantly reduced power consumption.

Revolutionary Optical Computing Method

Researchers at Aalto University have developed a method for performing complex tensor operations with light waves, a significant advance for artificial intelligence hardware. The international team, led by Dr. Yufeng Zhang from the Photonics Group at Aalto University's Department of Electronics and Nanoengineering, has demonstrated what they term "single-shot tensor computing", a technique that completes intricate mathematical calculations within a single movement of light through an optical system [1].

The research, published in Nature Photonics, represents a fundamental shift from traditional electronic computing methods. "Our method performs the same kinds of operations that today's GPUs handle, like convolutions and attention layers, but does them all at the speed of light," explains Dr. Zhang [2]. This approach addresses critical limitations in current AI hardware, particularly the increasing strain on conventional digital systems such as GPUs in speed, energy use, and scalability as data volumes continue to grow.

Technical Innovation Behind Light-Based Computing

The breakthrough centers on encoding digital information directly into the amplitude and phase properties of light waves, effectively transforming numerical data into physical variations within optical fields. As these engineered light waves interact and propagate through the system, they automatically perform mathematical operations such as matrix and tensor multiplications, the fundamental building blocks of deep learning algorithms.
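To make the encoding idea concrete, here is a minimal numerical sketch in Python. It is an illustration only, not the researchers' optical setup: it assumes each number maps to a complex field sample (magnitude as amplitude, sign as a phase of 0 or π), and that each output is the coherent sum of the input fields after scaling by a complex transmission coefficient. The function names `encode` and `optical_matvec` are hypothetical.

```python
import cmath

def encode(value):
    """Map a signed real number to a complex field sample.

    Assumption for illustration: magnitude -> amplitude,
    sign -> phase (0 for non-negative, pi for negative).
    """
    amplitude = abs(value)
    phase = 0.0 if value >= 0 else cmath.pi
    return cmath.rect(amplitude, phase)

def optical_matvec(weights, inputs):
    """Simulate a matrix-vector product as a superposition of fields.

    Each output sums every input field after it has been scaled by a
    complex transmission coefficient (the encoded weight). Taking the
    real part of the summed field recovers the signed result.
    """
    fields = [encode(x) for x in inputs]
    outputs = []
    for row in weights:
        total = sum(encode(w) * f for w, f in zip(row, fields))
        outputs.append(total.real)
    return outputs

W = [[1.0, -2.0],
     [0.5, 3.0]]
x = [4.0, -1.0]
result = optical_matvec(W, x)
print(result)  # approximately [6.0, -1.0], up to floating-point rounding
```

In this toy model, the whole product emerges from one superposition step per output rather than from a sequence of explicit multiply-accumulate instructions, which is the intuition behind the "single pass of light" description.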

To enhance computational capacity, the research team incorporated multiple wavelengths of light, with each wavelength functioning as an independent computational channel. This multi-wavelength approach enables the system to process higher-order tensor operations in parallel, significantly expanding the method's applicability to complex AI tasks [4].

Dr. Zhang illustrates the concept through an analogy: "Imagine you're a customs officer who must inspect every parcel through multiple machines with different functions and then sort them into the right bins. Normally, you'd process each parcel one by one. Our optical computing method merges all parcels and all machines together – we create multiple 'optical hooks' that connect each input to its correct output. With just one operation, one pass of light, all inspections and sorting happen instantly and in parallel" [1].
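The multi-wavelength idea described above, where each wavelength acts as an independent channel, can be pictured as a batch of matrix-vector products indexed by channel, which amounts to a third-order tensor operation. The sketch below is a plain-Python analogy under that assumption; the channel indices and the names `matvec` and `multi_wavelength_contract` are hypothetical and do not come from the paper.

```python
def matvec(matrix, vector):
    """Ordinary matrix-vector product for one channel."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def multi_wavelength_contract(tensor, inputs):
    """Apply tensor[k] to inputs[k] for every channel k.

    Assumption for illustration: channel k stands in for one wavelength,
    and channels do not interact, so every product could in principle be
    formed at the same time. Here they are simply looped over.
    """
    if len(tensor) != len(inputs):
        raise ValueError("need one input vector per wavelength channel")
    return [matvec(tensor[k], inputs[k]) for k in range(len(tensor))]

# Two hypothetical wavelength channels, each carrying a 2x2 matrix.
tensor = [
    [[1, 2], [3, 4]],   # channel 0
    [[0, 1], [1, 0]],   # channel 1
]
inputs = [
    [1, 1],             # vector on channel 0
    [5, 7],             # vector on channel 1
]
outputs = multi_wavelength_contract(tensor, inputs)
print(outputs)  # [[3, 7], [7, 5]]
```

A digital processor iterates over the channel index, whereas the article describes the optical system as handling all channels within the same pass of light.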

Advantages and Implementation Potential

One of the most significant advantages of this optical computing approach is its passive nature. The mathematical operations occur naturally as light propagates through the system, eliminating the need for active control or electronic switching during computation. This characteristic not only simplifies the hardware requirements but also contributes to the method's energy efficiency [2].

Professor Zhipei Sun, leader of Aalto University's Photonics Group, emphasizes the versatility of the approach: "This approach can be implemented on almost any optical platform. In the future, we plan to integrate this computational framework directly onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption."

The research team envisions practical implementation within existing technology infrastructure. Dr. Zhang estimates that the method could be incorporated into platforms used by major technology companies within three to five years, creating "a new generation of optical computing systems, significantly accelerating complex AI tasks across a myriad of fields" [4].

Summarized by Navi
