Attorneys General Warn OpenAI Over ChatGPT Safety Concerns Following User Deaths

10 Sources

[1]

Attorneys general warn OpenAI 'harm to children will not be tolerated' | TechCrunch

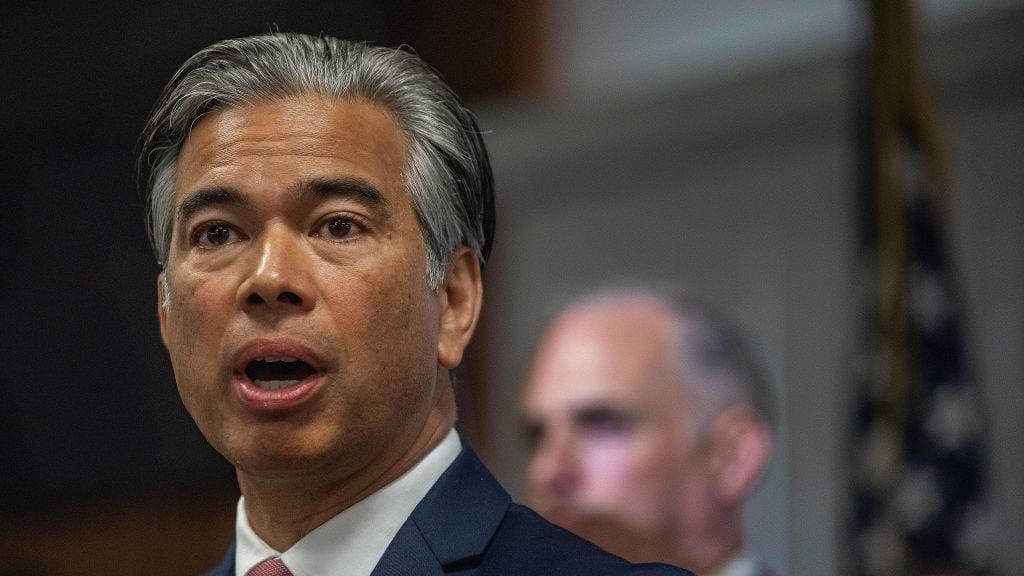

California Attorney General Rob Bonta and Delaware Attorney General Kathy Jennings met with and sent an open letter to OpenAI to express their concerns over the safety of ChatGPT, particularly for children and teens. The warning comes a week after Bonta and 44 other attorneys general sent a letter to 12 of the top AI companies, following reports of sexually inappropriate interactions between AI chatbots and children. "Since the issuance of that letter, we learned of the heartbreaking death by suicide of one young Californian after he had prolonged interactions with an OpenAI chatbot, as well as a similarly disturbing murder-suicide in Connecticut," Bonta and Jennings write. "Whatever safeguards were in place did not work." The two state officials are currently investigating OpenAI's proposed restructuring into a for-profit entity to ensure that the mission of the nonprofit remains intact. That mission "includes ensuring that artificial intelligence is deployed safely" and building artificial general intelligence (AGI) to benefit all humanity, "including children," per the letter. "Before we get to benefiting, we need to ensure that adequate safety measures are in place to not harm," the letter continues. "It is our shared view that OpenAI and the industry at large are not where they need to be in ensuring safety in AI products' development and deployment. As Attorneys General, public safety is one of our core missions. As we continue our dialogue related to OpenAI's recapitalization plan, we must work to accelerate and amplify safety as a governing force in the future of this powerful technology." Bonta and Jennings have asked for more information about OpenAI's current safety precautions and governance, and said they expect the company to take immediate remedial measures where appropriate. TechCrunch has reached out to OpenAI for comment.

[2]

Attorneys General to OpenAI: We're Concerned About Child Safety, For-Profit Pivot

California Attorney General Rob Bonta and Delaware Attorney General Kathy Jennings have sent a letter to OpenAI, expressing serious concerns about the risks the ChatGPT maker's products pose to children and promising to subject the company's for-profit pivot to review. The attorneys general also said they intend to review OpenAI's proposed pivot to becoming a commercial entity "to ensure the nonprofit beneficiaries' interests are adequately protected" and that "the mission of the nonprofit remains paramount." The letter comes just under a week after the parents of 16-year-old Adam Raine filed a lawsuit against the company, claiming it played a role in their son's suicide. "It is our shared view that OpenAI and the industry at large are not where they need to be in ensuring safety in AI products' development and deployment," read the letter to OpenAI's board. "As Attorneys General, public safety is one of our core missions." The letter called the recent deaths allegedly linked to ChatGPT "unacceptable" and said they have "rightly shaken" the American public's confidence in the company. OpenAI backpedaled on plans to transition into a for-profit company in May, but it still plans to transform its capped-profit commercial arm into what's called a "public benefit corporation," a legal entity in the state of Delaware that is obligated to weigh both the business and societal benefit of its decisions. OpenAI, which started out as a non-profit in 2015 and later started a for-profit division in 2019, has faced legal action from co-founder Elon Musk over its move away from its nonprofit roots, with Musk's lawyers accusing CEO Sam Altman of "brazen self-dealing." The company has been public about plans to shore up its child safety measures. 
OpenAI recently announced new parental controls to be rolled out next month, which will allow users to control how ChatGPT talks to their child, and get notifications in instances of "acute distress." Still, OpenAI isn't close to being the only AI firm on the receiving end of serious legal scrutiny over its child safety record. So far this year, Meta has also been hit with letters from Attorneys General and Senators over how its roleplaying features interact with children. Disclosure: Ziff Davis, PCMag's parent company, filed a lawsuit against OpenAI in April 2025, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.

[3]

OpenAI reorg at risk as Attorneys General push AI safety

California, Delaware AGs blast ChatGPT shop over chatbot safeguards

The Attorneys General of California and Delaware on Friday wrote to OpenAI's board of directors, demanding that the AI company take steps to ensure its services are safe for children. California Attorney General Rob Bonta and Delaware Attorney General Kathy Jennings in an open letter cited "the heartbreaking death by suicide of one young Californian after he had prolonged interactions with an OpenAI chatbot" as evidence that "whatever safeguards were in place did not work." ChatGPT is also said to have contributed to a recent murder-suicide in Connecticut, though the victims were adults. Expressing his horror upon hearing that children have been harmed by interacting with chatbots, Bonta said that he and Jennings have been reviewing the proposed restructuring of OpenAI, scrutiny that began last year shortly before OpenAI announced plans to turn the nonprofit entity into a Public Benefit Corporation. "Together, we are particularly concerned with ensuring that the stated safety mission of OpenAI as a nonprofit remains front and center," Bonta said. Translation: "Nice restructuring plan you have there. It'd be a shame if something happens to it because OpenAI prioritized profits over people." OpenAI's planned corporate reconfiguration - which would allow the company to raise more money from investors and would enrich insiders - has been opposed by, among others, a group called Not for Private Gain, led by Page Hedley, a former policy and ethics adviser at OpenAI, and The Midas Project, recently subpoenaed by OpenAI based on its belief that the nonprofit is backed by Elon Musk. Musk helped found OpenAI, then parted ways with the firm, challenged it in court, and launched rival xAI. Under its current structure, OpenAI has a legally enforceable requirement to put the public interest over profits. That would not be the case under the proposed restructuring. 
In a statement provided to The Register, Bret Taylor, Chair of the OpenAI Board, said that the company is fully committed to addressing the concerns raised by the attorneys general. "We are heartbroken by these tragedies and our deepest sympathies are with the families," Taylor said. "Safety is our highest priority and we're working closely with policymakers around the world. Today, ChatGPT includes safeguards such as directing people to crisis helplines, and we are working urgently with leading experts to make these even stronger." Taylor cited commitments made earlier this week to roll out expanded protections for teens, including parental controls and "the ability for parents to be notified when the system detects their teen is in a moment of acute distress." The Bonta-Jennings missive follows a related letter on August 25, 2025, from a bipartisan group of 44 State Attorneys General to the CEOs of 13 technology companies, including Apple, Google, Meta, Microsoft, and OpenAI. That letter warns that corporate leaders will be held accountable for platform safety policies that fail to protect children from inappropriate content - something that Meta reportedly has failed to do. Such threats, however, seem hollow in light of the absence of any consequential government enforcement against tech firms in the past two decades, to say nothing of the Trump administration rescinding President Biden's AI safety order and eliminating the term "Safety" in its rebranding of the US AI Safety Institute - now known as the Center for AI Standards and Innovation.

[4]

OpenAI pressed on safety after deaths of ChatGPT users

The top state lawyers in California and Delaware wrote to OpenAI raising "serious concerns" after the deaths of some chatbot users and said safety must be improved before they sign off on its planned restructuring. In their letter on Friday to OpenAI's chair Bret Taylor, the attorneys-general said that recent reports of young people committing suicide or murder after prolonged interactions with artificial intelligence chatbots including the company's ChatGPT had "shaken the American public's confidence in the company". They added that "whatever safeguards were in place did not work". OpenAI, which is incorporated in Delaware and headquartered in California, was founded in 2015 as a non-profit with a mission to build safe, powerful AI to benefit all humanity. The attorneys-general -- Rob Bonta in California and Kathy Jennings in Delaware -- have a crucial role in regulating the company and holding it to its public benefit mission. Bonta and Jennings will ultimately determine whether OpenAI can convert part of its businesses to allow investors to take traditional equity in it and unlock an eventual public offering. The pair's intervention on Friday, and a meeting earlier this week with OpenAI's legal team, followed "the heartbreaking death by suicide of one young Californian after he had prolonged interactions with an OpenAI chatbot, as well as a similarly disturbing murder-suicide in Connecticut", they wrote. Those incidents have brought "into acute focus . . . the real-world challenges, and importance, of implementing OpenAI's mission", the attorneys-general added. Almost three years after the release of ChatGPT, some dangerous effects of the powerful technology are coming to light. OpenAI is being sued by the family of Adam Raine, who took his own life in April at the age of 16 after prolonged interactions with the chatbot. The company announced this week that it would introduce parental controls for ChatGPT. 
OpenAI has already ditched its original plans to convert the company into a for-profit, following discussions with the attorneys-general and under legal attack from Elon Musk and a number of other groups. Instead, it is seeking to convert only a subsidiary, allowing investors to hold equity while ensuring the non-profit board retains ultimate control. Friday's intervention suggests that even this more modest goal is under threat if OpenAI cannot demonstrate safety improvements. "Safety is a non-negotiable priority, especially when it comes to children," wrote the attorneys-general. Since 2015, the small research lab has grown into a commercial behemoth with 700mn regular users for its flagship ChatGPT. It has sought ever-larger amounts of outside capital, and is raising $40bn as it looks to fend off competition from rivals such as Anthropic, Meta, Google and Musk's xAI. The race between those groups has created a tension between upholding safety and commercialising the powerful technology. Last month, a group of 44 attorneys-general wrote to the leading companies building AI tools to warn that "the potential harms of AI, like the potential benefits, dwarf the impact of social media". "We wish you all success in the race for AI dominance. But we are paying attention. If you knowingly harm kids, you will answer for it," they wrote. OpenAI did not immediately respond to a request for comment.

[5]

California and Delaware AGs warn OpenAI over ChatGPT safety

The attorneys general of California and Delaware on Friday warned OpenAI they have "serious concerns" about the safety of its flagship chatbot, ChatGPT, especially for children and teens. The two state officials, who have unique powers to regulate nonprofits such as OpenAI, sent the letter to the company after a meeting with its legal team earlier this week in Wilmington, Delaware. California AG Rob Bonta and Delaware AG Kathleen Jennings have spent months reviewing OpenAI's plans to restructure its business, with an eye on "ensuring rigorous and robust oversight of OpenAI's safety mission." But they said they were concerned by "deeply troubling reports of dangerous interactions between" chatbots and their users, including the "heartbreaking death by suicide of one young Californian after he had prolonged interactions with an OpenAI chatbot, as well as a similarly disturbing murder-suicide in Connecticut. Whatever safeguards were in place did not work." The parents of the 16-year-old boy, who died in April, sued OpenAI and its CEO, Sam Altman, last month. Founded as a nonprofit with a safety-focused mission to build better-than-human artificial intelligence, OpenAI had recently sought to have its for-profit arm take control of the nonprofit before dropping those plans in May after discussions with the offices of Bonta and Jennings and other nonprofit groups. The two elected officials, both Democrats, have oversight of any such changes because OpenAI is incorporated in Delaware and operates out of California, where it has its headquarters in San Francisco. After dropping its initial plans, OpenAI has been seeking the officials' approval for a "recapitalization," in which the nonprofit's existing for-profit arm will convert into a public benefit corporation that has to consider the interests of both shareholders and the mission. Bonta and Jennings wrote Friday of their "shared view" that OpenAI and the industry need better safety measures. 
"The recent deaths are unacceptable," they wrote. "They have rightly shaken the American public's confidence in OpenAI and this industry. OpenAI - and the AI industry - must proactively and transparently ensure AI's safe deployment. Doing so is mandated by OpenAI's charitable mission, and will be required and enforced by our respective offices." OpenAI didn't immediately respond to a request for comment on Friday.

[6]

Attorneys general warn OpenAI and other tech companies to improve chatbot safety

The attorneys general of California and Delaware on Friday warned OpenAI they have "serious concerns" about the safety of its flagship chatbot, ChatGPT, especially for children and teens. The two state officials, who have unique powers to regulate nonprofits such as OpenAI, sent the letter to the company after a meeting with its legal team earlier this week in Wilmington, Delaware. California AG Rob Bonta and Delaware AG Kathleen Jennings have spent months reviewing OpenAI's plans to restructure its business, with an eye on "ensuring rigorous and robust oversight of OpenAI's safety mission." But they said they were concerned by "deeply troubling reports of dangerous interactions between" chatbots and their users, including the "heartbreaking death by suicide of one young Californian after he had prolonged interactions with an OpenAI chatbot, as well as a similarly disturbing murder-suicide in Connecticut. Whatever safeguards were in place did not work." The parents of the 16-year-old boy, who died in April, sued OpenAI and its CEO, Sam Altman, last month. OpenAI didn't immediately respond to a request for comment on Friday. Founded as a nonprofit with a safety-focused mission to build better-than-human artificial intelligence, OpenAI had recently sought to transfer more control to its for-profit arm from its nonprofit before dropping those plans in May after discussions with the offices of Bonta and Jennings and other nonprofit groups. The two elected officials, both Democrats, have oversight of any such changes because OpenAI is incorporated in Delaware and operates out of California, where it has its headquarters in San Francisco. After dropping its initial plans, OpenAI has been seeking the officials' approval for a "recapitalization," in which the nonprofit's existing for-profit arm will convert into a public benefit corporation that has to consider the interests of both shareholders and the mission. 
Bonta and Jennings wrote Friday of their "shared view" that OpenAI and the industry need better safety measures. "The recent deaths are unacceptable," they wrote. "They have rightly shaken the American public's confidence in OpenAI and this industry. OpenAI - and the AI industry - must proactively and transparently ensure AI's safe deployment. Doing so is mandated by OpenAI's charitable mission, and will be required and enforced by our respective offices." The letter to OpenAI from the California and Delaware officials comes after a bipartisan group of 44 attorneys general warned the company and other tech firms -- including Meta and Google -- last week of "grave concerns" about the safety of children interacting with AI chatbots that can respond with "sexually suggestive conversations and emotionally manipulative behavior." The attorneys general specifically called out Meta for chatbots that reportedly engaged in flirting and "romantic roleplay" with children, saying they were alarmed that these chatbots "are engaging in conduct that appears to be prohibited by our respective criminal laws." They said the companies would be held accountable for harming children, noting that in the past, regulators had not moved swiftly to respond to the harms posed by new technologies. "If you knowingly harm kids, you will answer for it," the Aug. 25 letter ends. The Associated Press and OpenAI have a licensing and technology agreement that allows OpenAI access to part of AP's text archives.

[7]

California and Delaware AGs warn OpenAI over ChatGPT safety

The attorneys general of California and Delaware on Friday warned OpenAI they have "serious concerns" about the safety of its flagship chatbot, ChatGPT, especially for children and teens. The two state officials, who have unique powers to regulate nonprofits such as OpenAI, sent the letter to the company after a meeting with its legal team earlier this week in Wilmington, Delaware. California AG Rob Bonta and Delaware AG Kathleen Jennings have spent months reviewing OpenAI's plans to restructure its business, with an eye on "ensuring rigorous and robust oversight of OpenAI's safety mission." But they said they were concerned by "deeply troubling reports of dangerous interactions between" chatbots and their users, including the "heartbreaking death by suicide of one young Californian after he had prolonged interactions with an OpenAI chatbot, as well as a similarly disturbing murder-suicide in Connecticut. Whatever safeguards were in place did not work." The parents of the 16-year-old boy, who died in April, sued OpenAI and its CEO, Sam Altman, last month. Founded as a nonprofit with a safety-focused mission to build better-than-human artificial intelligence, OpenAI had recently sought to have its for-profit arm take control of the nonprofit before dropping those plans in May after discussions with the offices of Bonta and Jennings and other nonprofit groups. The two elected officials, both Democrats, have oversight of any such changes because OpenAI is incorporated in Delaware and operates out of California, where it has its headquarters in San Francisco. After dropping its initial plans, OpenAI has been seeking the officials' approval for a "recapitalization," in which the nonprofit's existing for-profit arm will convert into a public benefit corporation that has to consider the interests of both shareholders and the mission. Bonta and Jennings wrote Friday of their "shared view" that OpenAI and the industry need better safety measures. 
"The recent deaths are unacceptable," they wrote. "They have rightly shaken the American public's confidence in OpenAI and this industry. OpenAI - and the AI industry - must proactively and transparently ensure AI's safe deployment. Doing so is mandated by OpenAI's charitable mission, and will be required and enforced by our respective offices." The more focused letter from the California and Delaware officials follows a letter sent to OpenAI and other tech firms -- including Meta and Google -- last week from a bipartisan group of 44 attorneys general who also warned of "grave concerns" about the safety of children interacting with AI chatbots that can respond with "sexually suggestive conversations and emotionally manipulative behavior." OpenAI didn't immediately respond to a request for comment on Friday.

[8]

California and Delaware AGs Warn OpenAI Over ChatGPT Safety

The attorneys general of California and Delaware on Friday warned OpenAI they have "serious concerns" about the safety of its flagship chatbot, ChatGPT, especially for children and teens. The two state officials, who have unique powers to regulate nonprofits such as OpenAI, sent the letter to the company after a meeting with its legal team earlier this week in Wilmington, Delaware. California AG Rob Bonta and Delaware AG Kathleen Jennings have spent months reviewing OpenAI's plans to restructure its business, with an eye on "ensuring rigorous and robust oversight of OpenAI's safety mission." But they said they were concerned by "deeply troubling reports of dangerous interactions between" chatbots and their users, including the "heartbreaking death by suicide of one young Californian after he had prolonged interactions with an OpenAI chatbot, as well as a similarly disturbing murder-suicide in Connecticut. Whatever safeguards were in place did not work." The parents of the 16-year-old boy, who died in April, sued OpenAI and its CEO, Sam Altman, last month. Founded as a nonprofit with a safety-focused mission to build better-than-human artificial intelligence, OpenAI had recently sought to have its for-profit arm take control of the nonprofit before dropping those plans in May after discussions with the offices of Bonta and Jennings and other nonprofit groups. The two elected officials, both Democrats, have oversight of any such changes because OpenAI is incorporated in Delaware and operates out of California, where it has its headquarters in San Francisco. After dropping its initial plans, OpenAI has been seeking the officials' approval for a "recapitalization," in which the nonprofit's existing for-profit arm will convert into a public benefit corporation that has to consider the interests of both shareholders and the mission. Bonta and Jennings wrote Friday of their "shared view" that OpenAI and the industry need better safety measures. 
"The recent deaths are unacceptable," they wrote. "They have rightly shaken the American public's confidence in OpenAI and this industry. OpenAI - and the AI industry - must proactively and transparently ensure AI's safe deployment. Doing so is mandated by OpenAI's charitable mission, and will be required and enforced by our respective offices." The more focused letter from the California and Delaware officials follows a letter sent to OpenAI and other tech firms -- including Meta and Google -- last week from a bipartisan group of 44 attorneys general who also warned of "grave concerns" about the safety of children interacting with AI chatbots that can respond with "sexually suggestive conversations and emotionally manipulative behavior." OpenAI didn't immediately respond to a request for comment on Friday.

[9]

States warn OpenAI of 'serious concerns' with chatbot

California and Delaware warned OpenAI on Friday that they have "serious concerns" about the artificial intelligence (AI) company's safety practices in the wake of several recent deaths reportedly connected to ChatGPT. In a letter to the OpenAI board, California Attorney General Rob Bonta and Delaware Attorney General Kathleen Jennings noted they recently met with the firm's legal team and "conveyed in the strongest terms that safety is a non-negotiable priority, especially when it comes to children." The pair's latest missive comes after the family of a 16-year-old boy sued OpenAI last Tuesday, alleging ChatGPT encouraged him to take his own life. The Wall Street Journal also reported last week that the chatbot fueled a 56-year-old Connecticut man's paranoia before he killed his mother and then himself in August. "The recent deaths are unacceptable," Bonta and Jennings wrote. "They have rightly shaken the American public's confidence in OpenAI and this industry." "OpenAI - and the AI industry - must proactively and transparently ensure AI's safe deployment," they continued. "Doing so is mandated by OpenAI's charitable mission, and will be required and enforced by our respective offices." The state attorneys general underscored the need to center safety as they continue discussions with the company about its restructuring plans. "It is our shared view that OpenAI and the industry at large are not where they need to be in ensuring safety in AI products' development and deployment," Bonta and Jennings said. "As we continue our dialogue related to OpenAI's recapitalization plan, we must work to accelerate and amplify safety as a governing force in the future of this powerful technology," they added. OpenAI, which is based in California and incorporated in Delaware, has previously engaged with the pair on its efforts to alter the company's corporate structure. 
It initially announced plans to fully transition the firm into a for-profit company without non-profit oversight last December. However, it later walked back the push, agreeing to keep the non-profit in charge, citing discussions with the attorneys general and other leaders. In the wake of recent reports about ChatGPT-connected deaths, OpenAI announced Tuesday that it was adjusting how its chatbots respond to people in crisis and enacting stronger protections for teens. OpenAI is not the only tech company under fire lately over its AI chatbots. Reuters reported last month that a Meta policy document featured examples suggesting its chatbots could engage in "romantic or sensual" conversations with children. The social media company said it has since removed this language. It also later told TechCrunch it is updating its policies to restrict certain topics for teen users, including discussions of self-harm, suicide, disordered eating or potentially inappropriate romantic conversations.

[10]

US attorneys general warn OpenAI, rivals to improve chatbot safety - The Economic Times

California and Delaware's attorneys general have expressed serious safety concerns to OpenAI regarding ChatGPT, especially its impact on children and teens, following reports of dangerous interactions and tragic deaths. They are reviewing OpenAI's restructuring plans to ensure rigorous oversight of its safety mission. The attorneys general of California and Delaware on Friday warned OpenAI they have "serious concerns" about the safety of its flagship chatbot, ChatGPT, especially for children and teens. The two state officials, who have unique powers to regulate nonprofits such as OpenAI, sent the letter to the company after a meeting with its legal team earlier this week in Wilmington, Delaware. California AG Rob Bonta and Delaware AG Kathleen Jennings have spent months reviewing OpenAI's plans to restructure its business, with an eye on "ensuring rigorous and robust oversight of OpenAI's safety mission." But they said they were concerned by "deeply troubling reports of dangerous interactions between" chatbots and their users, including the "heartbreaking death by suicide of one young Californian after he had prolonged interactions with an OpenAI chatbot, as well as a similarly disturbing murder-suicide in Connecticut. Whatever safeguards were in place did not work." The parents of the 16-year-old California boy, who died in April, sued OpenAI and its CEO, Sam Altman, last month. OpenAI didn't immediately respond to a request for comment on Friday. Founded as a nonprofit with a safety-focused mission to build better-than-human artificial intelligence, OpenAI had recently sought to transfer more control to its for-profit arm from its nonprofit before dropping those plans in May after discussions with the offices of Bonta and Jennings and other nonprofit groups. The two elected officials, both Democrats, have oversight of any such changes because OpenAI is incorporated in Delaware and operates out of California, where it has its headquarters in San Francisco. 
After dropping its initial plans, OpenAI has been seeking the officials' approval for a "recapitalization," in which the nonprofit's existing for-profit arm will convert into a public benefit corporation that has to consider the interests of both shareholders and the mission. Bonta and Jennings wrote Friday of their "shared view" that OpenAI and the industry need better safety measures. "The recent deaths are unacceptable," they wrote. "They have rightly shaken the American public's confidence in OpenAI and this industry. OpenAI - and the AI industry - must proactively and transparently ensure AI's safe deployment. Doing so is mandated by OpenAI's charitable mission, and will be required and enforced by our respective offices." The letter to OpenAI from the California and Delaware officials comes after a bipartisan group of 44 attorneys general warned the company and other tech firms last week of "grave concerns" about the safety of children interacting with AI chatbots that can respond with "sexually suggestive conversations and emotionally manipulative behavior." The attorneys general specifically called out Meta for chatbots that reportedly engaged in flirting and "romantic role-play" with children, saying they were alarmed that these chatbots "are engaging in conduct that appears to be prohibited by our respective criminal laws." Meta, the parent company of Facebook, Instagram and WhatsApp, declined to comment on the letter but recently rolled out new controls that aim to block its chatbots from talking with teens about self-harm, suicide, disordered eating and inappropriate romantic conversations, and instead directs them to expert resources. The attorneys general said the companies would be held accountable for harming children, noting that in the past, regulators had not moved swiftly to respond to the harms posed by new technologies. "If you knowingly harm kids, you will answer for it," the Aug. 25 letter ends.

California and Delaware Attorneys General express serious concerns about OpenAI's ChatGPT safety, especially for children and teens, following reports of user deaths. They warn that the company's restructuring plans may be at risk if safety measures aren't improved.

Attorneys General Raise Alarm Over ChatGPT Safety

California Attorney General Rob Bonta and Delaware Attorney General Kathy Jennings have issued a stern warning to OpenAI, expressing "serious concerns" about the safety of its flagship chatbot, ChatGPT, particularly for children and teens [1][2]. This warning comes in the wake of tragic incidents allegedly linked to interactions with AI chatbots, including the suicide of a young Californian and a murder-suicide in Connecticut [1][3].

Recent Tragedies Spark Urgent Action

The attorneys general cited these incidents as evidence that "whatever safeguards were in place did not work" [1]. The letter to OpenAI's board emphasized that these events have "rightly shaken the American public's confidence in the company" [4]. The parents of 16-year-old Adam Raine, who took his own life in April after prolonged interactions with ChatGPT, have filed a lawsuit against OpenAI [2][4].

Scrutiny of OpenAI's Restructuring Plans

Bonta and Jennings, who have unique powers to regulate nonprofits like OpenAI, are currently reviewing the company's proposed restructuring, under which its existing for-profit arm would convert into a Public Benefit Corporation [3][5]. They stressed that safety must be improved before they sign off on this planned reorganization [4]. The attorneys general are particularly concerned with ensuring that OpenAI's stated safety mission as a nonprofit remains paramount [3].

Demands for Enhanced Safety Measures

The letter calls for OpenAI to take immediate remedial measures where appropriate and to provide more information about its current safety precautions and governance [1]. OpenAI has announced plans to introduce new parental controls for ChatGPT, including the ability for parents to control how the chatbot interacts with their children and to receive notifications in instances of "acute distress" [2][4].

Broader Industry Implications

This warning is part of a broader trend of increased scrutiny of AI companies. Last month, 44 state attorneys general sent a letter to 13 leading technology companies, including OpenAI, Apple, Google, Meta, and Microsoft, warning that corporate leaders will be held accountable for platform safety policies that fail to protect children from inappropriate content [3][5].

OpenAI's Response and Future Challenges

Bret Taylor, Chair of the OpenAI Board, stated that the company is fully committed to addressing the concerns raised by the attorneys general [3]. However, the episode highlights the ongoing challenge of balancing rapid AI development with necessary safety precautions. As OpenAI seeks to raise $40 billion to compete with rivals like Anthropic, Meta, Google, and Elon Musk's xAI, the tension between safety and the commercialization of AI technology remains a critical issue [4].

The attorneys general's intervention suggests that even OpenAI's more modest goal of converting only a subsidiary to allow investor equity might be at risk if the company cannot demonstrate significant safety improvements [4]. As the AI race intensifies, the industry faces increasing pressure to prioritize user safety, especially for vulnerable populations like children and teens.

Summarized by Navi
